Environment-Aware Adaptive Reinforcement Learning-Based Routing for Vehicular Ad Hoc Networks

Abstract

1. Introduction

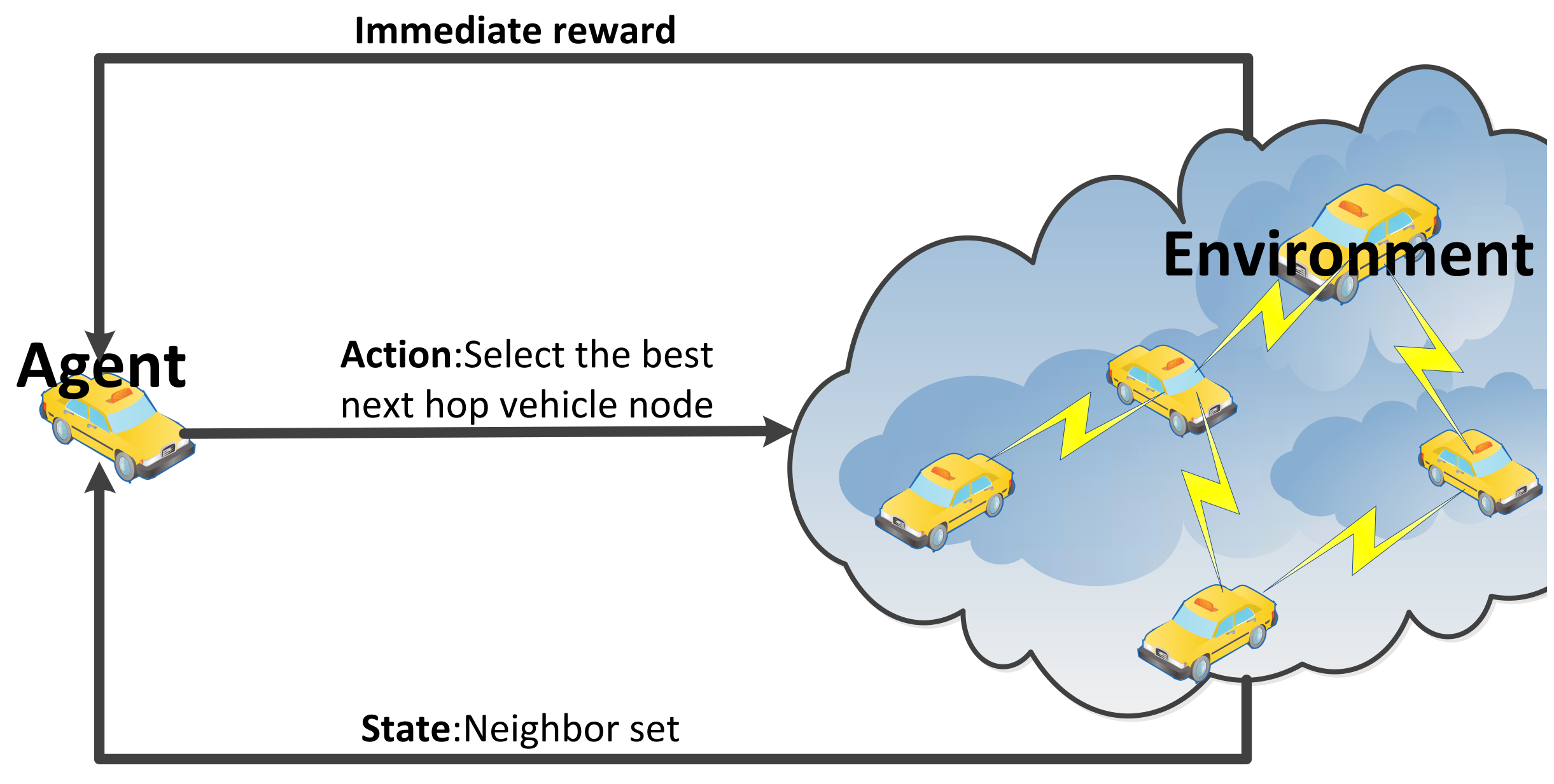

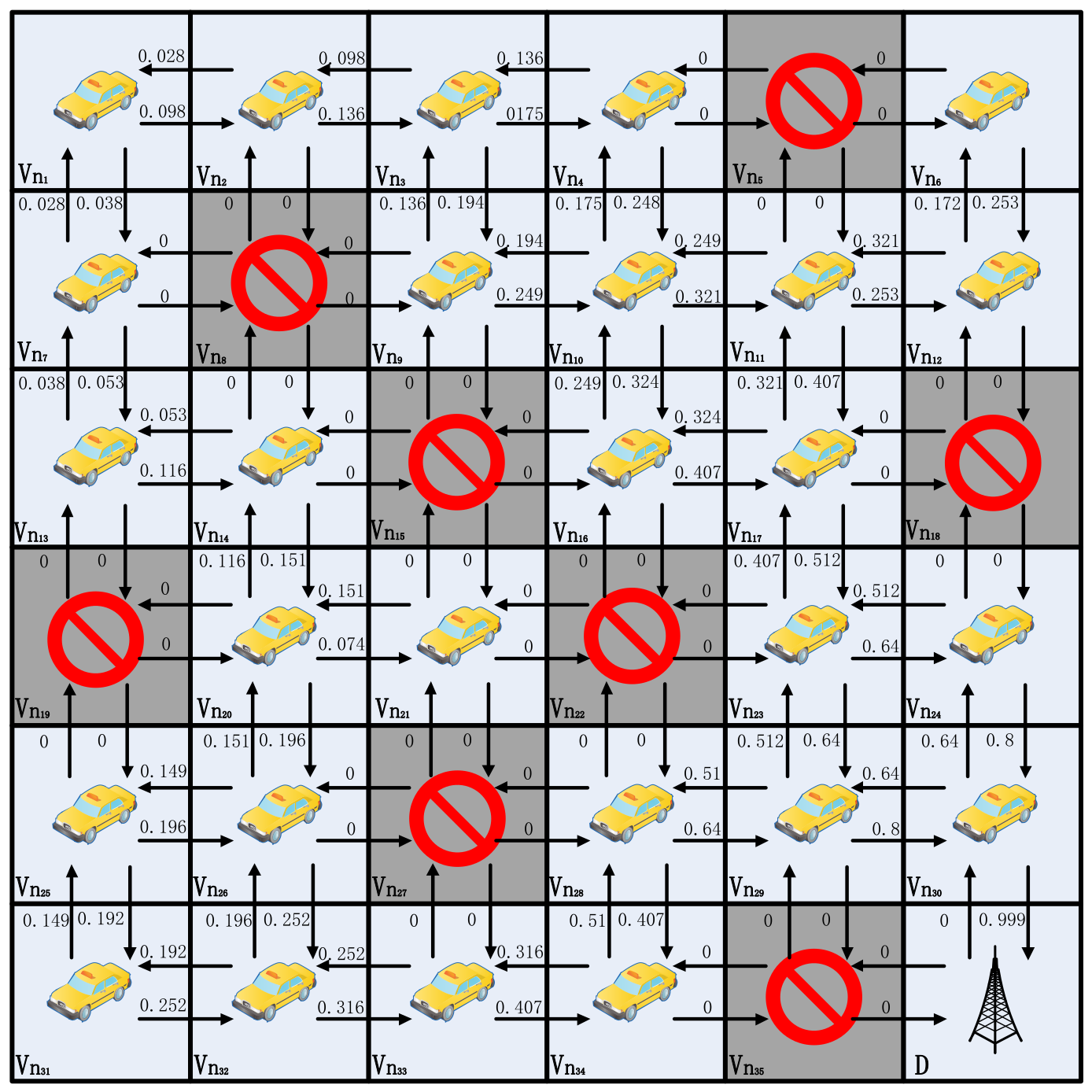

- Q tables instead of routing tables: This study proposes an environment-aware reinforcement learning-based routing (EARR) protocol for VANETs. EARR employs Q-learning for multimetric routing optimization and, rather than relying on fixed routing tables, maintains a Q-value table that is updated continuously and adaptively. As a result, end-to-end links need not be maintained, which avoids the substantial network overhead of route maintenance; given the highly dynamic topology of VANETs, keeping end-to-end routes stable and up to date would otherwise incur prohibitively high maintenance costs. Because each vehicle selects its next-hop relay based on Q-values, only local one-hop links need to be maintained, and an optimal or near-optimal route emerges hop by hop as each node chooses its best next hop.

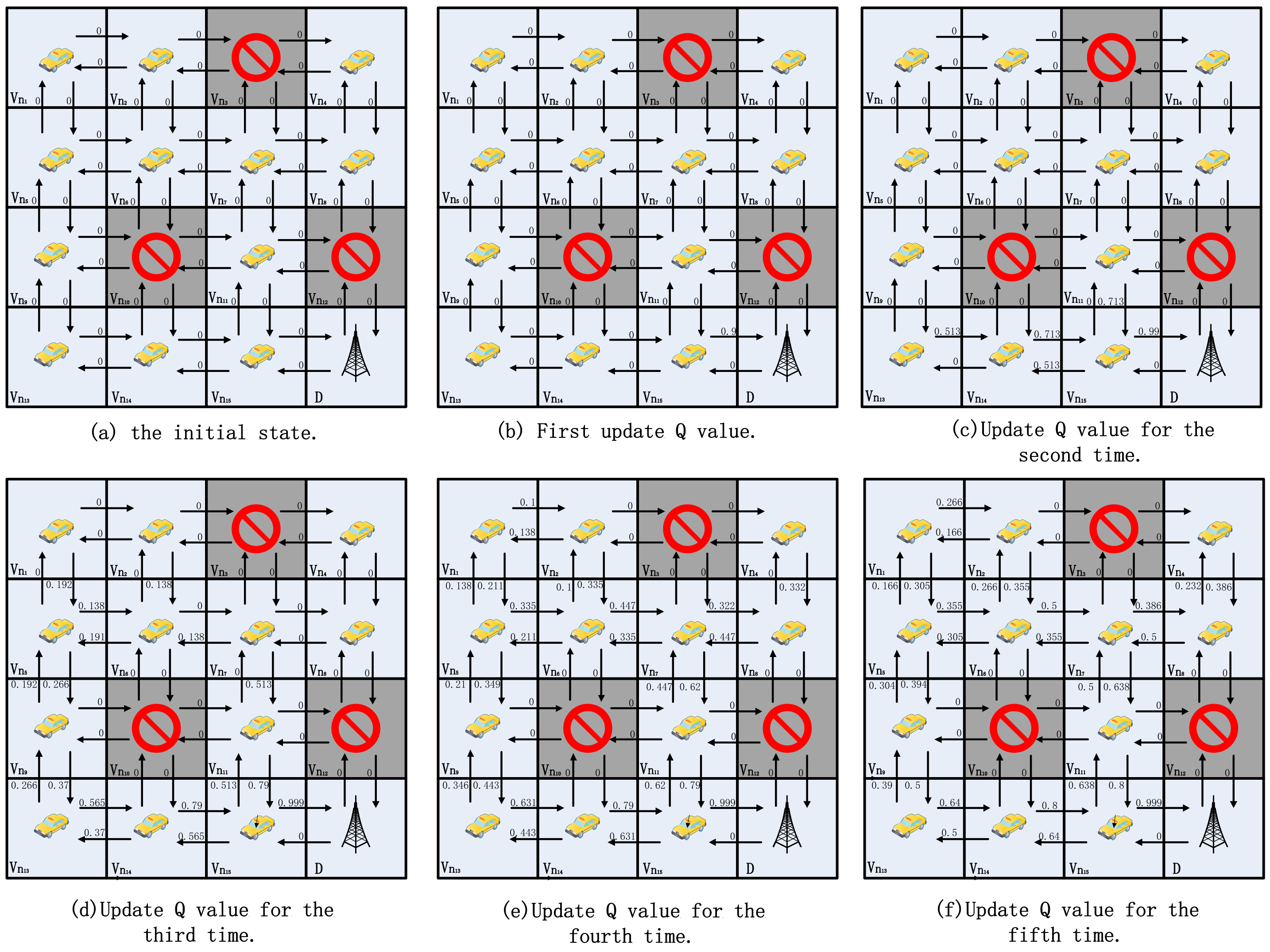

- Adaptive Q-learning: In highly dynamic VANETs, link stability is extremely fragile. Through periodic beacons, EARR quickly learns the state of the network environment and uses neighbor information together with adaptive Q-learning to adjust the learning rate and discount factor autonomously. By tuning these two parameters, the routing adapts to changes in the current environment.

- Broadcast updates: EARR updates Q-table values by using a special broadcast beacon. The feasibility of the scheme is demonstrated with a simple vehicle Q-value update model, which also illustrates the features of the routing scheme, such as addressing the exploration-exploitation dilemma of Q-learning and adjusting the parameters promptly as the environment changes.

- Shadow fading and residual bandwidth: In the working environment specific to VANETs, obstacles such as urban vegetation and buildings significantly affect signal propagation, causing substantial signal loss and shortening link lifetimes. To mitigate packet loss over low-quality links, this study incorporates the received signal-strength indication (RSSI) into the discount factor. In addition, because wireless signals must be transmitted within specific frequency bands, excessive traffic easily congests the network and degrades its performance. We therefore incorporate residual bandwidth estimation into link establishment to assess network conditions and balance the network load.
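The environment-aware ingredients listed above (an RSSI-based link-quality term and a residual-bandwidth term that shape the discount factor, plus a learning rate that reacts to neighbor changes) can be illustrated with a minimal sketch. It is not EARR's actual formulation: the RSSI bounds, the linear normalization, the product used to combine the factors, and the neighbor-churn rule for the learning rate are all hypothetical choices made for illustration; only the 6 Mbps bit rate comes from the simulation parameters.

```python
# Hypothetical bounds for normalizing RSSI (assumptions, not values from the paper)
RSSI_MIN_DBM = -95.0  # assumed sensitivity floor: weaker links get factor 0
RSSI_MAX_DBM = -50.0  # assumed level at which a link is considered excellent
BIT_RATE_BPS = 6e6    # IEEE 802.11p bit rate from the simulation parameters


def rssi_factor(rssi_dbm: float) -> float:
    """Linearly map an RSSI reading onto [0, 1] between the assumed bounds."""
    x = (rssi_dbm - RSSI_MIN_DBM) / (RSSI_MAX_DBM - RSSI_MIN_DBM)
    return min(1.0, max(0.0, x))


def available_bandwidth(busy_s: float, window_s: float, bit_rate: float = BIT_RATE_BPS) -> float:
    """Residual bandwidth = bit rate x fraction of the sensing window the channel was idle."""
    occupancy = min(1.0, busy_s / window_s)  # channel occupancy ratio in [0, 1]
    return bit_rate * (1.0 - occupancy)


def discount_factor(gamma_max: float, rssi_dbm: float, busy_s: float, window_s: float) -> float:
    """Hypothetical combination: shrink a base discount factor on weak or congested links."""
    bw_ratio = available_bandwidth(busy_s, window_s) / BIT_RATE_BPS
    return gamma_max * rssi_factor(rssi_dbm) * bw_ratio


def learning_rate(prev: set, curr: set, alpha_min: float = 0.1, alpha_max: float = 0.9) -> float:
    """Hypothetical rule: learn faster when the neighbor set changes a lot between beacon rounds."""
    union = prev | curr
    if not union:
        return alpha_min
    churn = 1.0 - len(prev & curr) / len(union)  # Jaccard distance between the two neighbor sets
    return alpha_min + (alpha_max - alpha_min) * churn
```

Multiplying the factors means that either a weak signal or a busy channel alone is enough to lower the weight a vehicle places on routes through that neighbor; a weighted sum would be an equally plausible design.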

2. Related Work

2.1. Traditional Mobile Routing

2.2. Traffic-Aware Routing

2.3. Machine Learning-Based Routing

3. Preliminaries

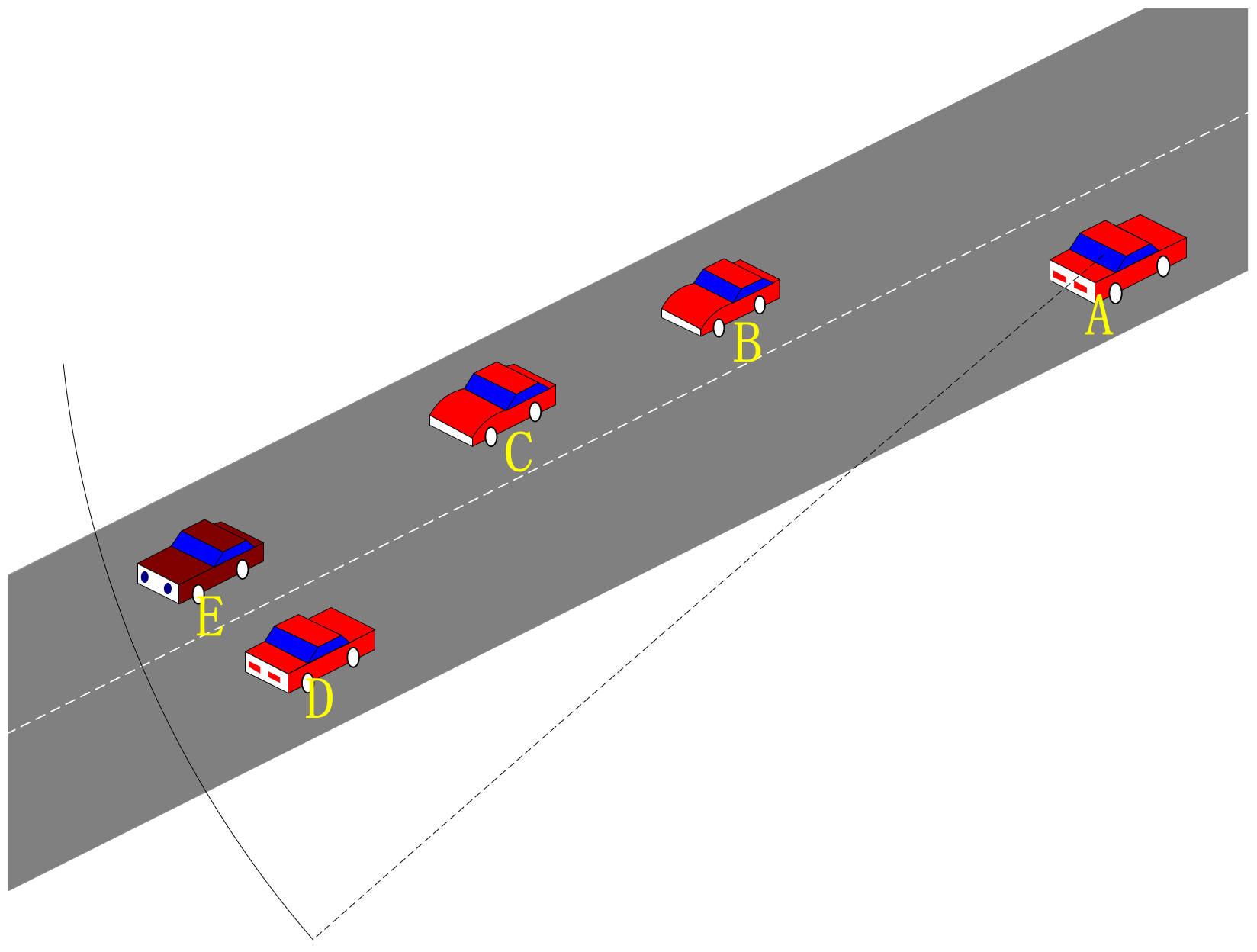

3.1. Problem Statement

- Many ML- or RL-based routing algorithms are inherently complex. Intricate urban road structures and constantly changing vehicle conditions make them even harder to execute, and the resulting high level of uncertainty makes satisfactory performance difficult to achieve.

- Many Q-learning-based VANET routing protocols rely on RSUs to forward data or to monitor road traffic at intersections, a requirement that is difficult to meet in many practical scenarios.

- Many routing protocols cannot detect network congestion. A routing protocol should be able to choose a forwarder according to how busy each candidate next-hop node is, in order to improve network performance.

- The protocol must adjust its parameters in real time according to the vehicular environment, to prevent performance degradation as the environment changes dynamically.

3.2. Motivation Scenario

3.3. Network Model

3.4. Q-Learning Framework

4. Algorithm Design

4.1. Link Stability Factor

4.2. Link Duration Factor

4.3. Received Signal-Strength Factor

4.4. Available Bandwidth

4.5. Routing Neighbor Discovery

| Algorithm 1 Beacon exchange for neighbor discovery |

| Input: Graph , , , and Output: Neighbor tables |

Phase 1: Broadcast Beacon


Phase 2: Location estimation


Phase 3: Neighbor vehicle discovery

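The three phases of Algorithm 1 can be sketched as follows, assuming each beacon carries the sender's address, position, velocity, and send time, and that positions are extrapolated at constant velocity between beacons. The 250 m range is taken from the simulation parameters; the 1 s expiration interval, the `Beacon` class, and the function names are hypothetical.

```python
import math
from dataclasses import dataclass

TX_RANGE_M = 250.0   # signal transmission range from the simulation parameters
EXPIRATION_S = 1.0   # assumed neighbor-information expiration interval (hypothetical value)


@dataclass
class Beacon:
    """Hypothetical beacon payload: address, position (m), velocity (m/s), send time (s)."""
    addr: int
    x: float
    y: float
    vx: float
    vy: float
    t: float


def estimate_position(b: Beacon, now: float) -> tuple:
    """Phase 2: extrapolate the sender's position assuming constant velocity since the beacon."""
    dt = now - b.t
    return (b.x + b.vx * dt, b.y + b.vy * dt)


def update_neighbor_table(table: dict, received: list, me: Beacon, now: float) -> dict:
    """Phases 1-3 for one vehicle: record beacons, then drop stale or out-of-range entries."""
    for b in received:  # Phase 1: beacons broadcast by nearby vehicles
        if b.addr != me.addr and (b.addr not in table or b.t >= table[b.addr].t):
            table[b.addr] = b  # keep only the most recent beacon per sender
    my_pos = estimate_position(me, now)
    for addr in list(table):  # Phase 3: decide which senders remain neighbors
        b = table[addr]
        stale = now - b.t > EXPIRATION_S
        out_of_range = math.dist(my_pos, estimate_position(b, now)) > TX_RANGE_M
        if stale or out_of_range:
            del table[addr]
    return table
```

Estimating positions at the current time, rather than using the raw beacon coordinates, keeps a neighbor from being treated as reachable after it has already driven out of range during the beacon interval.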

4.6. Adaptive Q-Learning Parameters

4.7. Reward Function and Penalty

4.8. Q-Table Update

| Algorithm 2 Updating Q-table values for each vehicle |

| Input: Neighbor tables; Output: Q-tables |


| Calculate reward |
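The update step of Algorithm 2 can be sketched with the generic Q-routing rule Q_v(d, w) ← (1 − α)·Q_v(d, w) + α·[r + γ·max_y Q_w(d, y)], where vehicle v updates its entry for destination d via neighbor w, and w is assumed to advertise max_y Q_w(d, y) in its beacon. The reward and penalty values below are hypothetical placeholders; EARR's own reward function and penalty (Section 4.7) may differ.

```python
from collections import defaultdict

REWARD_DEST = 1.0        # hypothetical reward when the chosen neighbor is the destination
PENALTY_DEAD_END = -1.0  # hypothetical penalty when the neighbor has no onward next hop


def reward(neighbor: int, dest: int, neighbor_has_next_hop: bool) -> float:
    if neighbor == dest:
        return REWARD_DEST
    if not neighbor_has_next_hop:
        return PENALTY_DEAD_END
    return 0.0


def update_q(q: defaultdict, dest: int, neighbor: int, advertised_max_q: float,
             neighbor_has_next_hop: bool, alpha: float, gamma: float) -> float:
    """One Q-routing update at vehicle v for the (destination, neighbor) entry.

    advertised_max_q stands for max_y Q_w(dest, y), assumed to be carried in neighbor w's beacon.
    """
    r = reward(neighbor, dest, neighbor_has_next_hop)
    future = 0.0 if neighbor == dest else advertised_max_q  # reaching the destination ends the episode
    old = q[(dest, neighbor)]
    q[(dest, neighbor)] = (1 - alpha) * old + alpha * (r + gamma * future)
    return q[(dest, neighbor)]


q_table = defaultdict(float)  # missing (destination, neighbor) entries start at Q = 0
```

In an adaptive scheme, `alpha` and `gamma` would be recomputed per update from the current environment instead of being fixed constants.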

4.9. EARR Routing Selection

| Algorithm 3 Routing-path selection model for each vehicle |

| Input: Q-tables; Output: Selected next-hop neighbor vehicle node |

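Algorithm 3 can be sketched as an ε-greedy choice over the Q-values of the current one-hop neighbors, one standard way to balance exploration and exploitation in Q-learning. Whether EARR uses ε-greedy is not stated here, so the rule, the default ε = 0.1, and the direct-delivery shortcut are assumptions.

```python
import random


def select_next_hop(q: dict, dest: int, neighbors: list, epsilon: float = 0.1, rng=random):
    """Epsilon-greedy choice of the next hop toward dest among current one-hop neighbors.

    Returns None when no neighbor is available (the packet would have to be buffered or dropped).
    """
    if not neighbors:
        return None
    if dest in neighbors:
        return dest  # destination is one hop away: deliver directly
    if rng.random() < epsilon:
        return rng.choice(neighbors)  # explore: try a random neighbor
    return max(neighbors, key=lambda w: q.get((dest, w), 0.0))  # exploit: highest Q-value
```

Passing a seeded `random.Random` instance as `rng` makes simulation runs reproducible.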

5. Simulation Results and Analysis

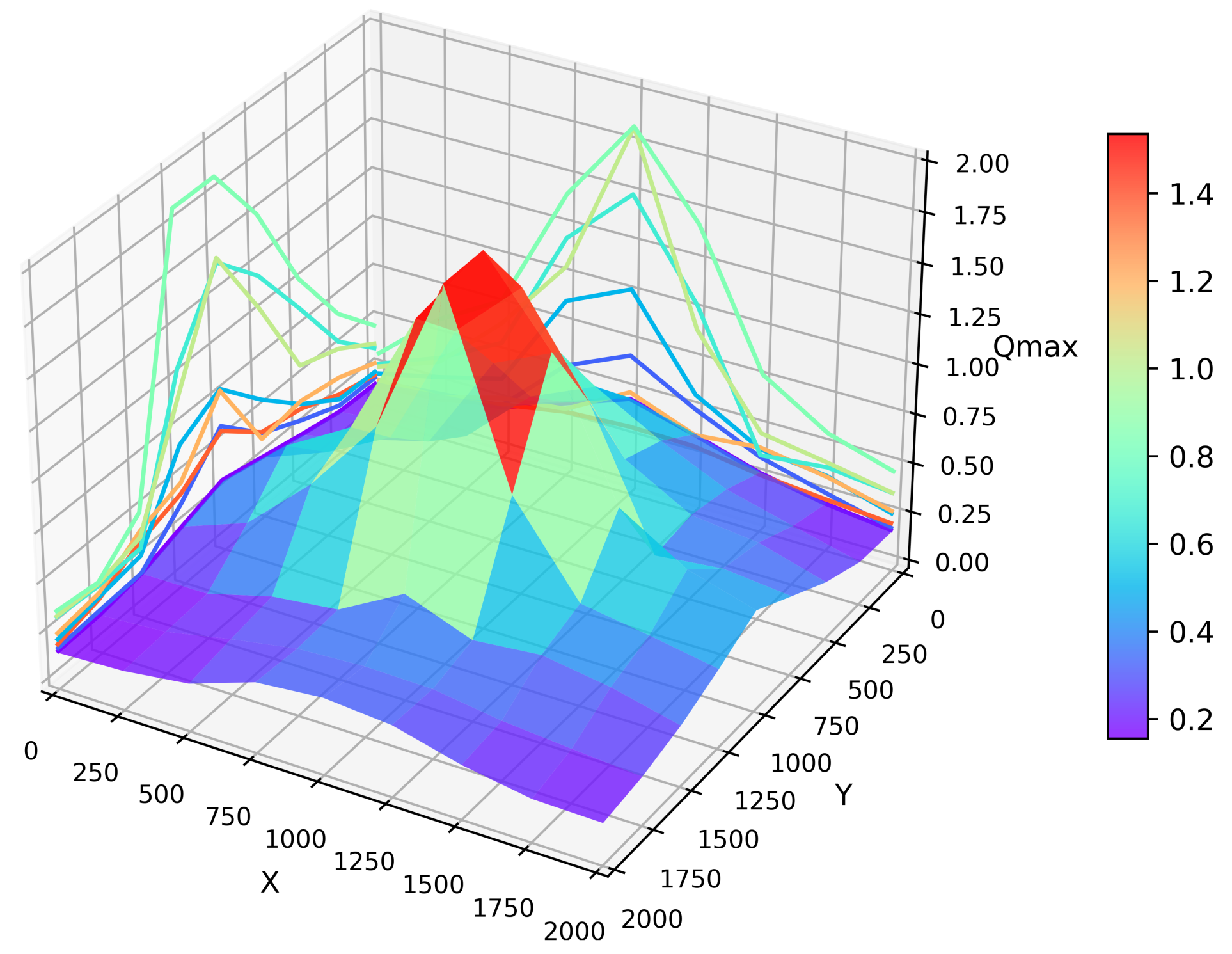

5.1. Simulation Environment and Parameters

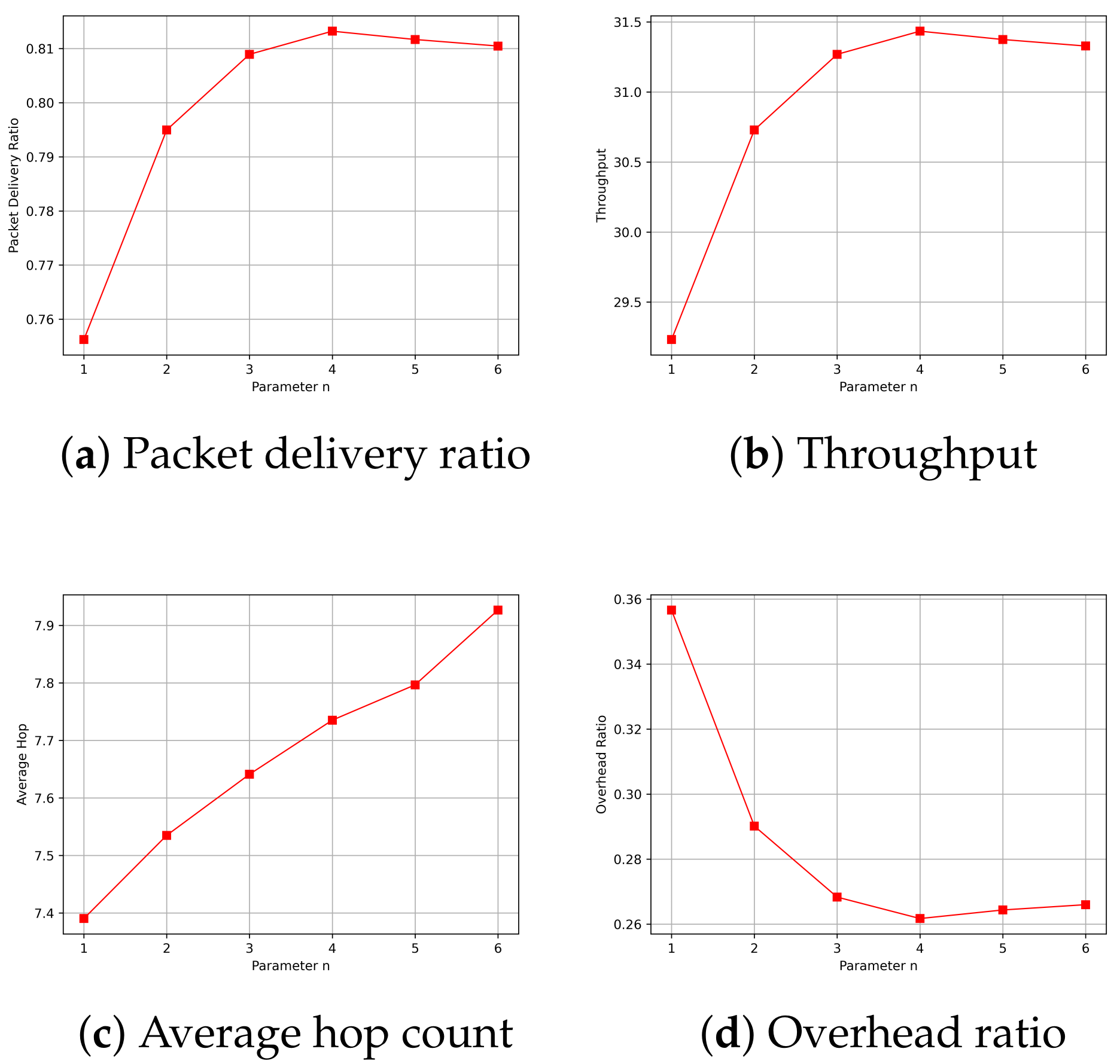

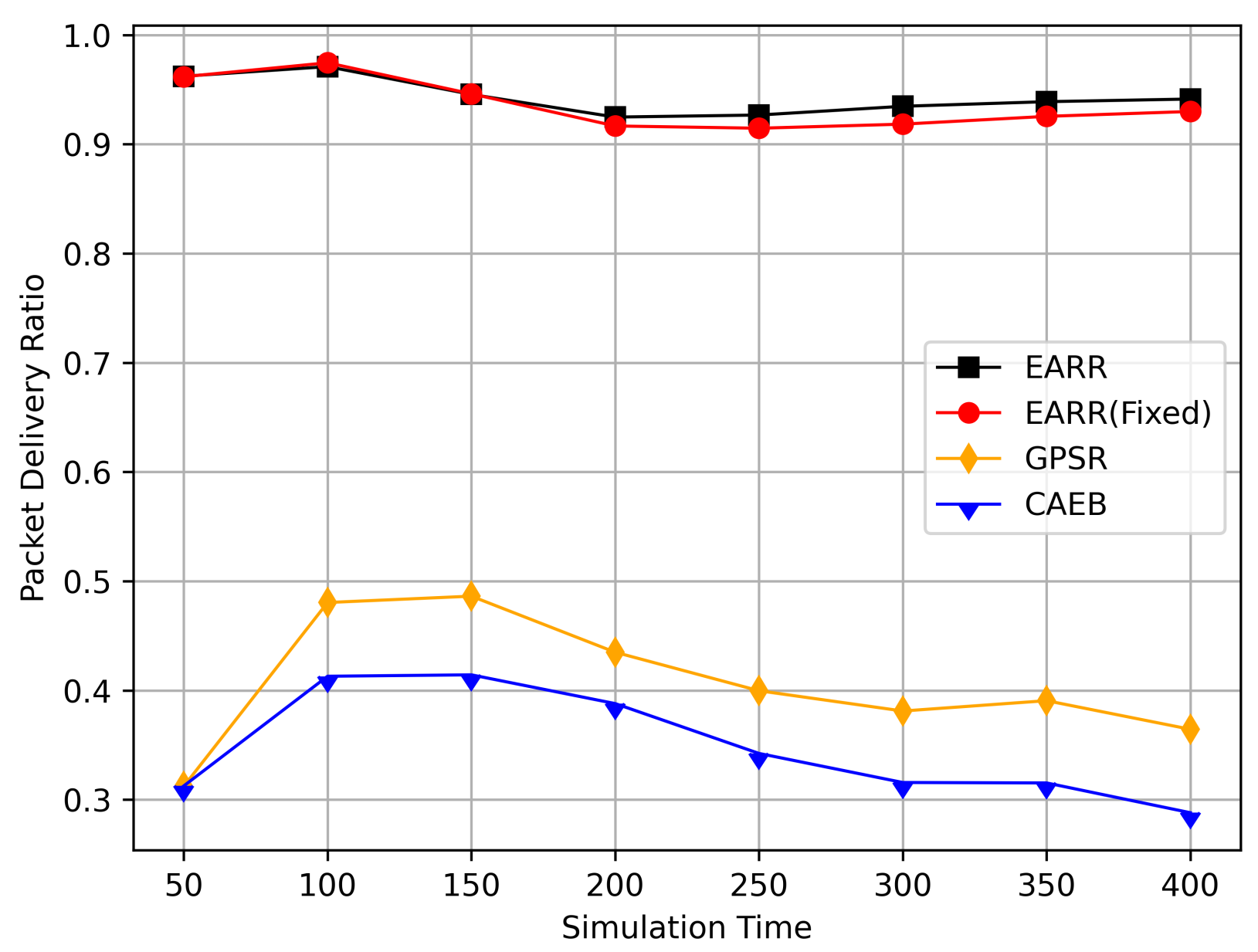

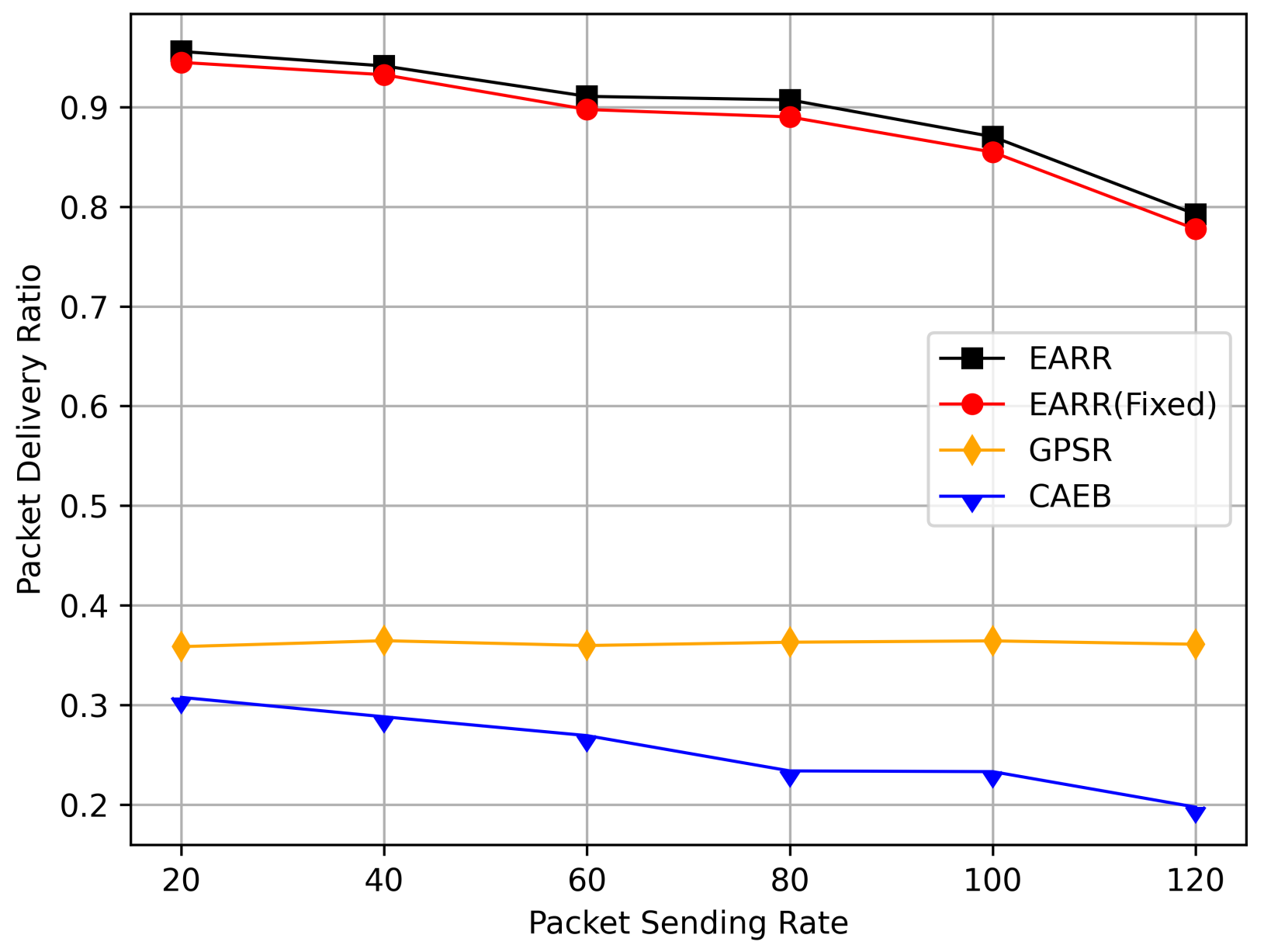

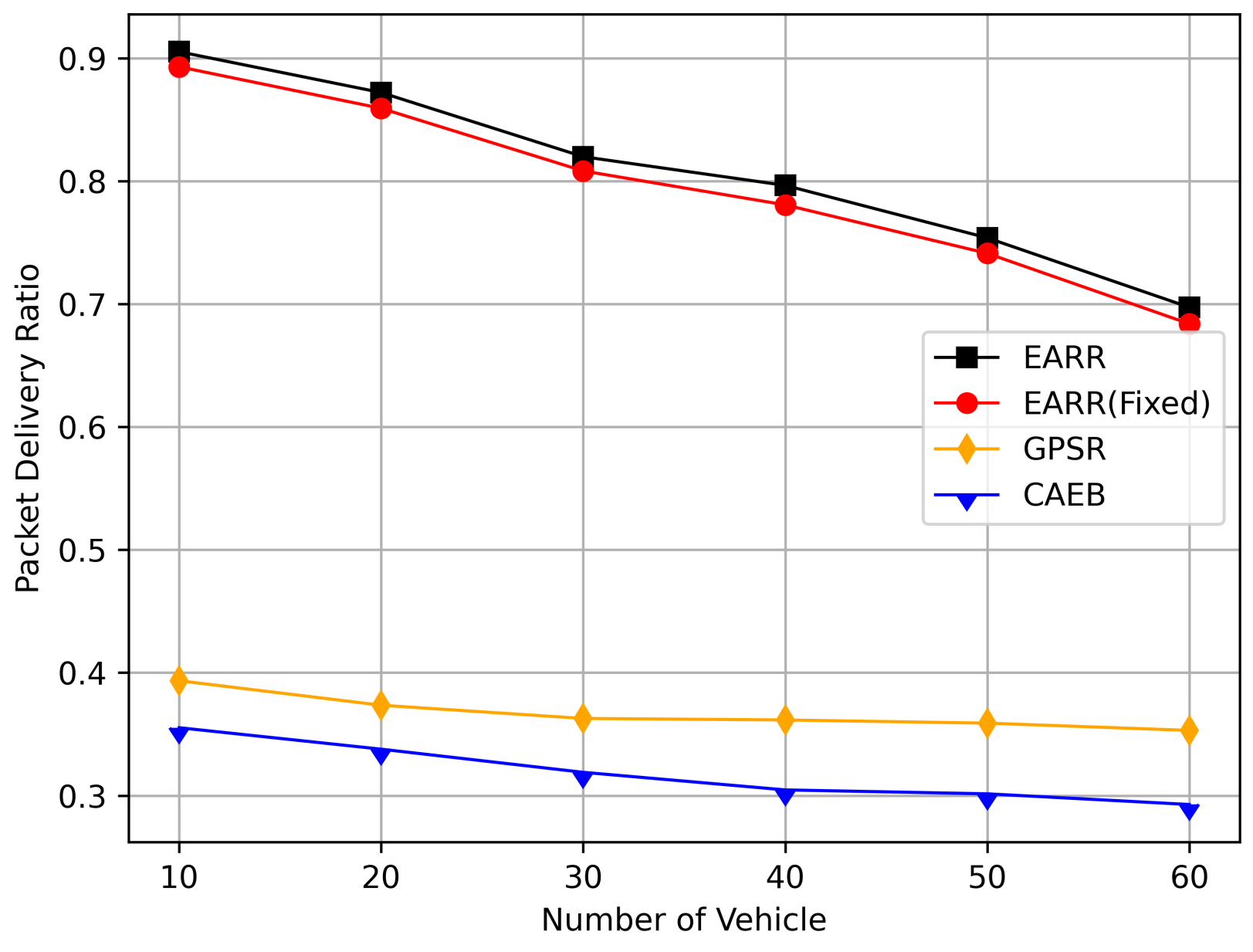

- Packet Delivery Ratio: The ratio of the total number of packets received at the destination node to the total number of packets generated by the source node.

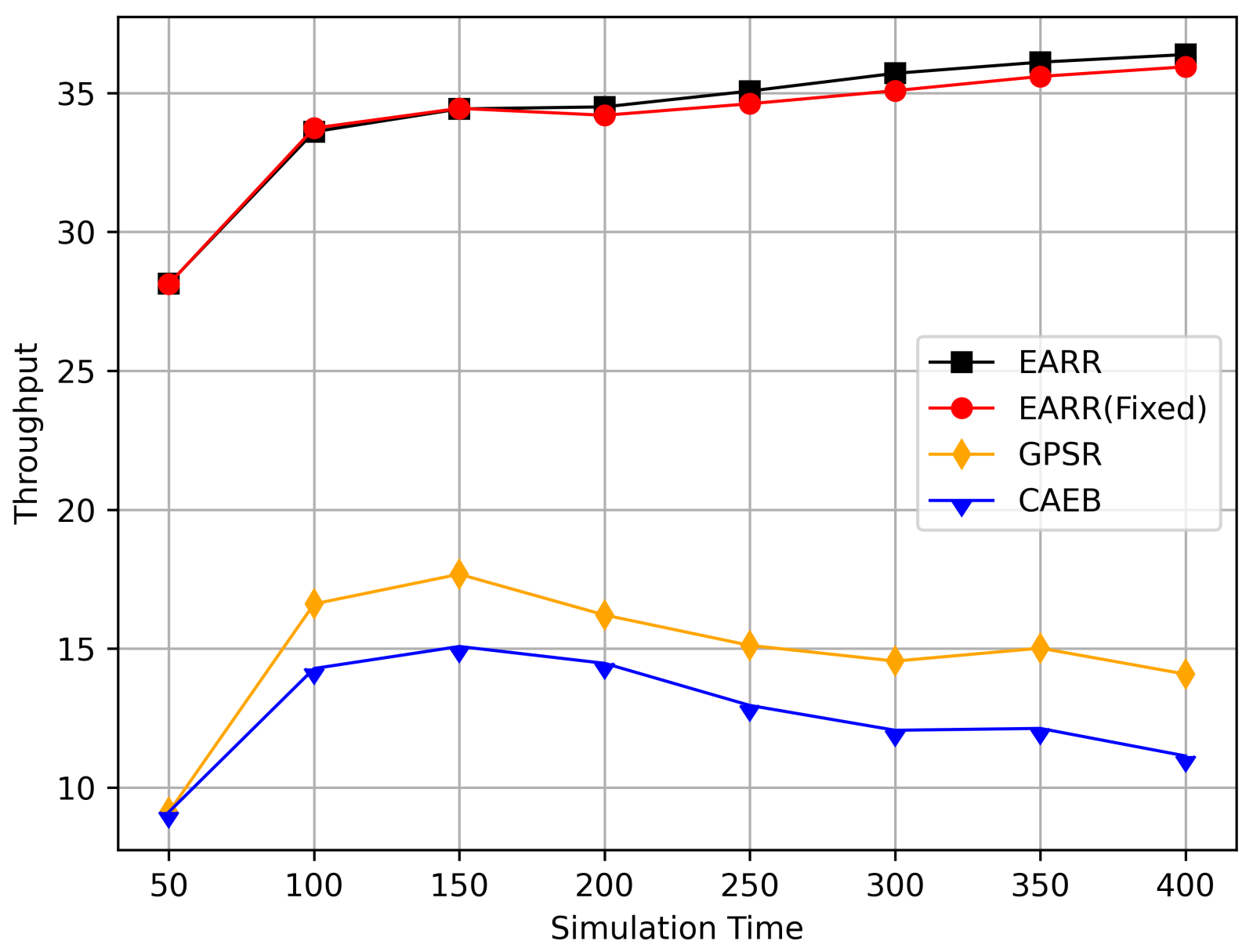

- Throughput: The amount of data, or the number of data packets, successfully delivered per unit time.

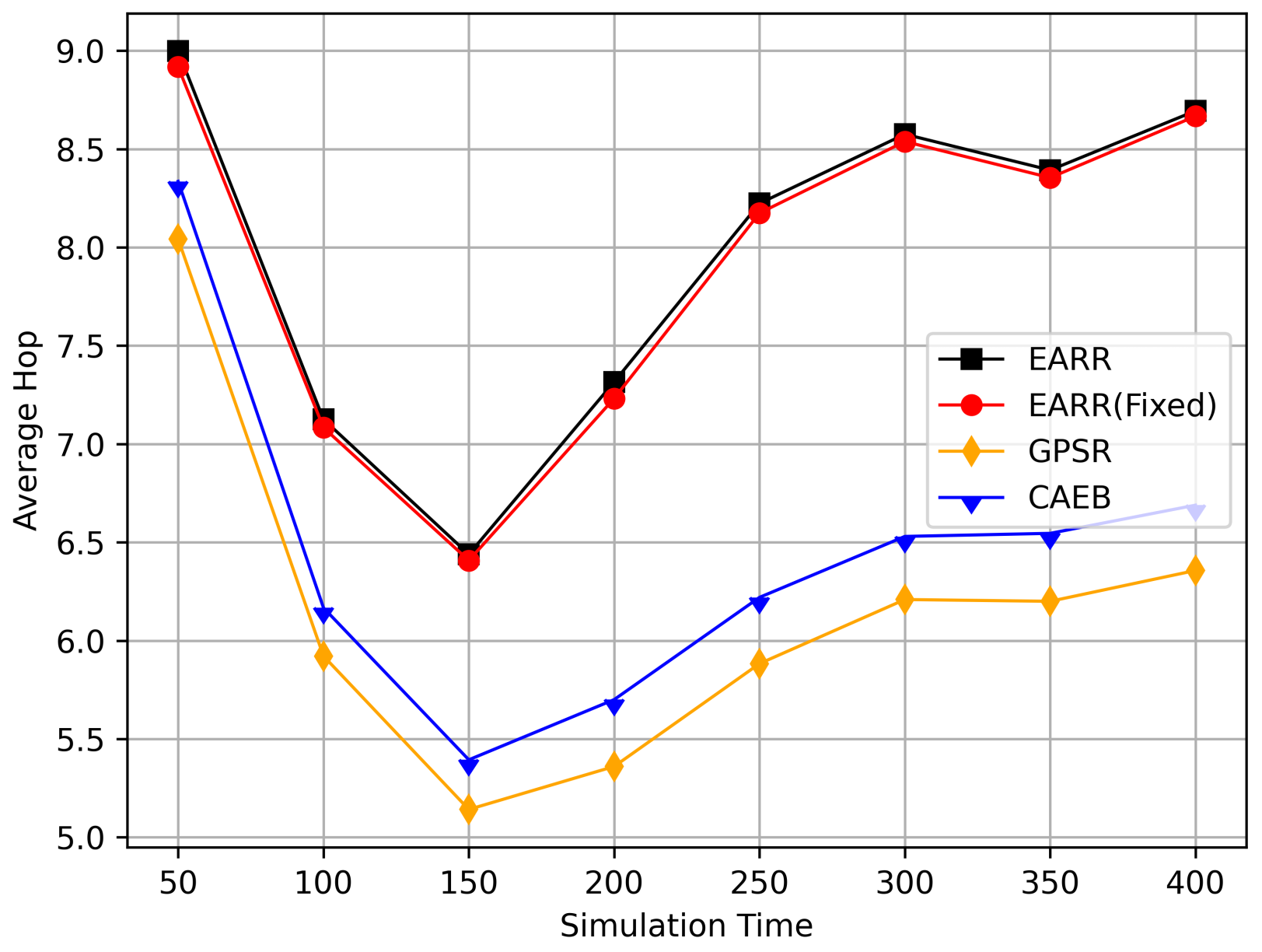

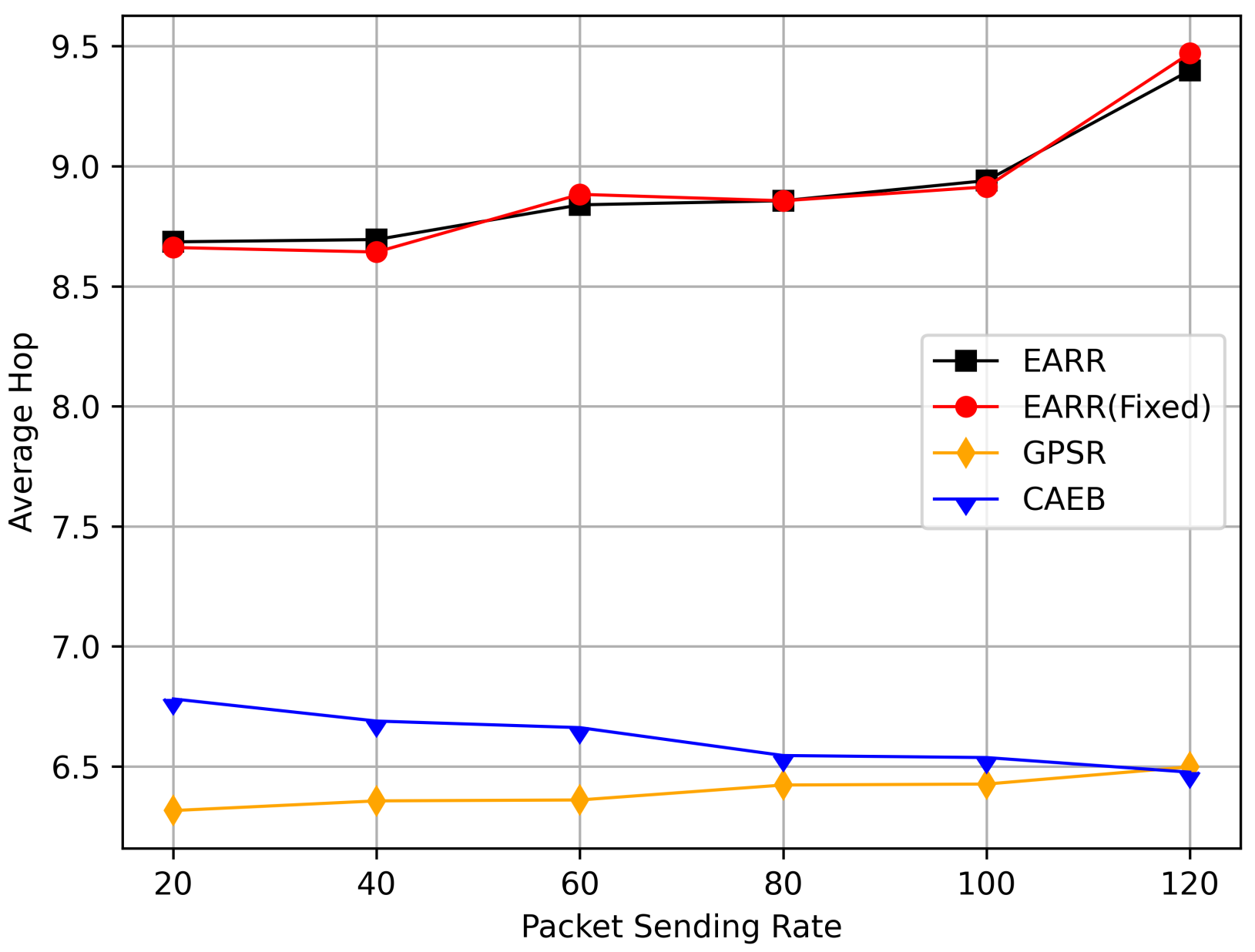

- Average Hop Count: The average number of nodes that a packet passes through on the routing path from the source node to the destination node.

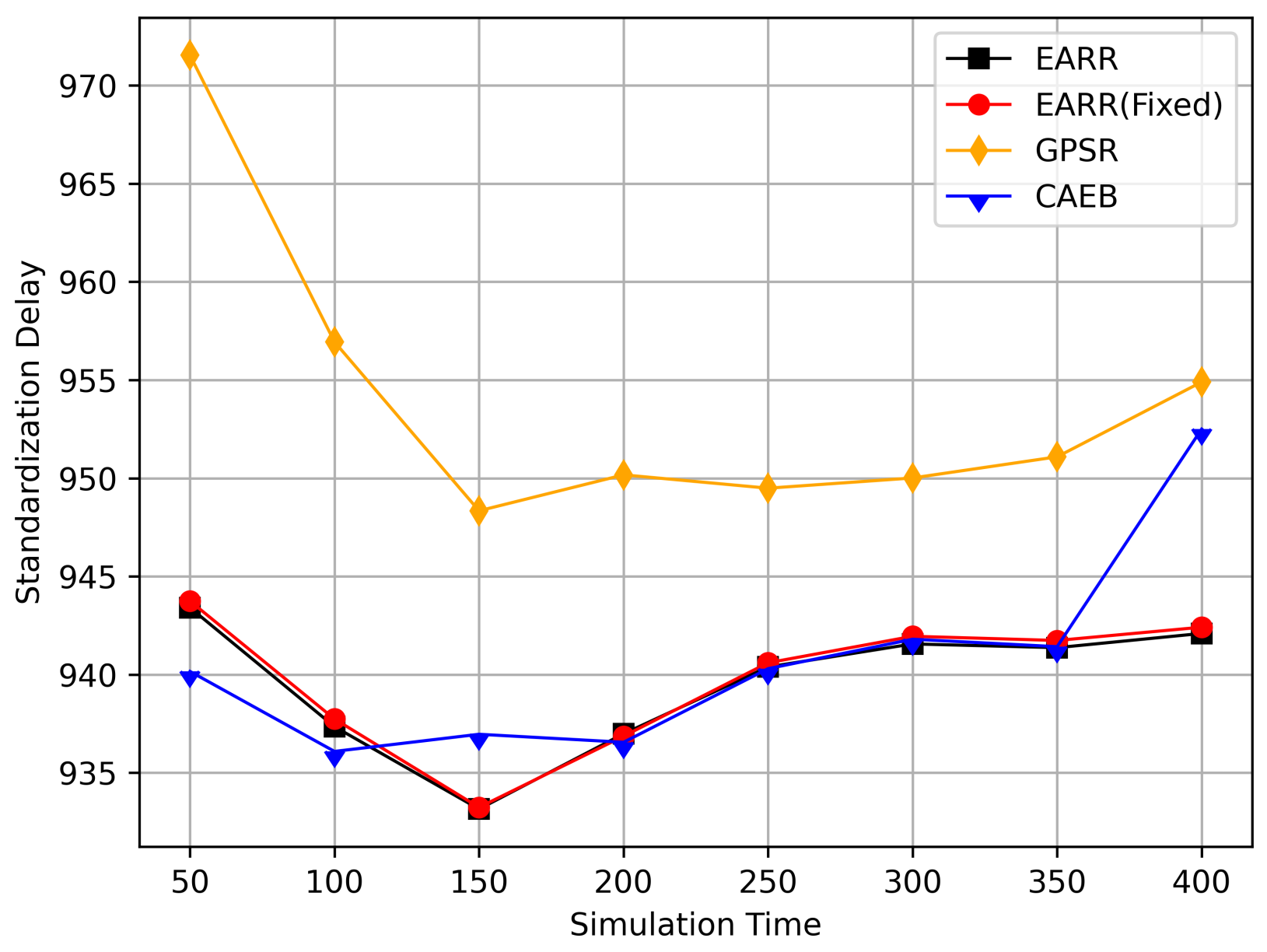

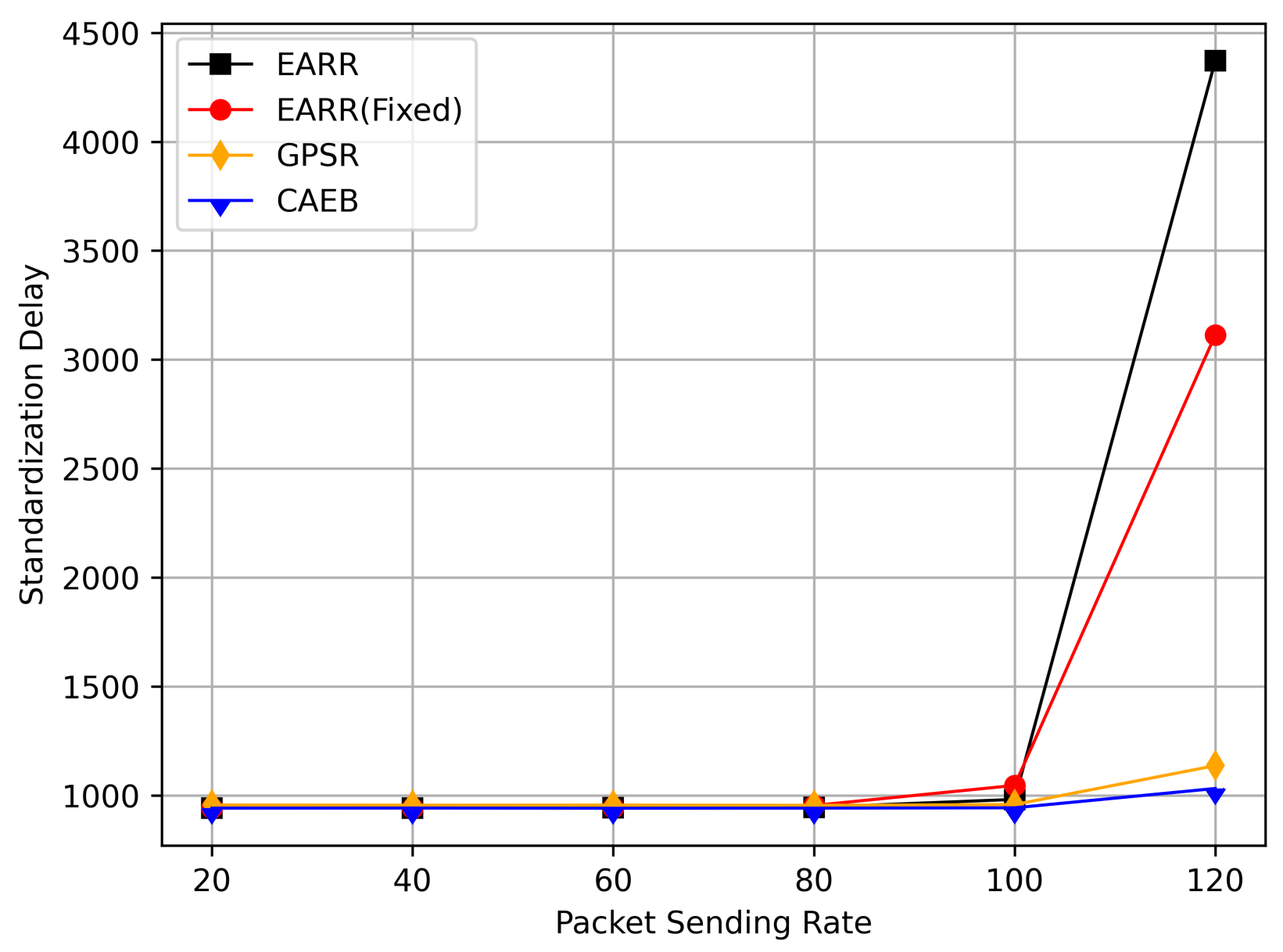

- Standardized Delay: The average end-to-end delay is the time it takes for data to travel from the source node to the destination node along the routing path. To assess the delay experienced by mobile nodes fairly, the delay is standardized according to the length of the routing path.

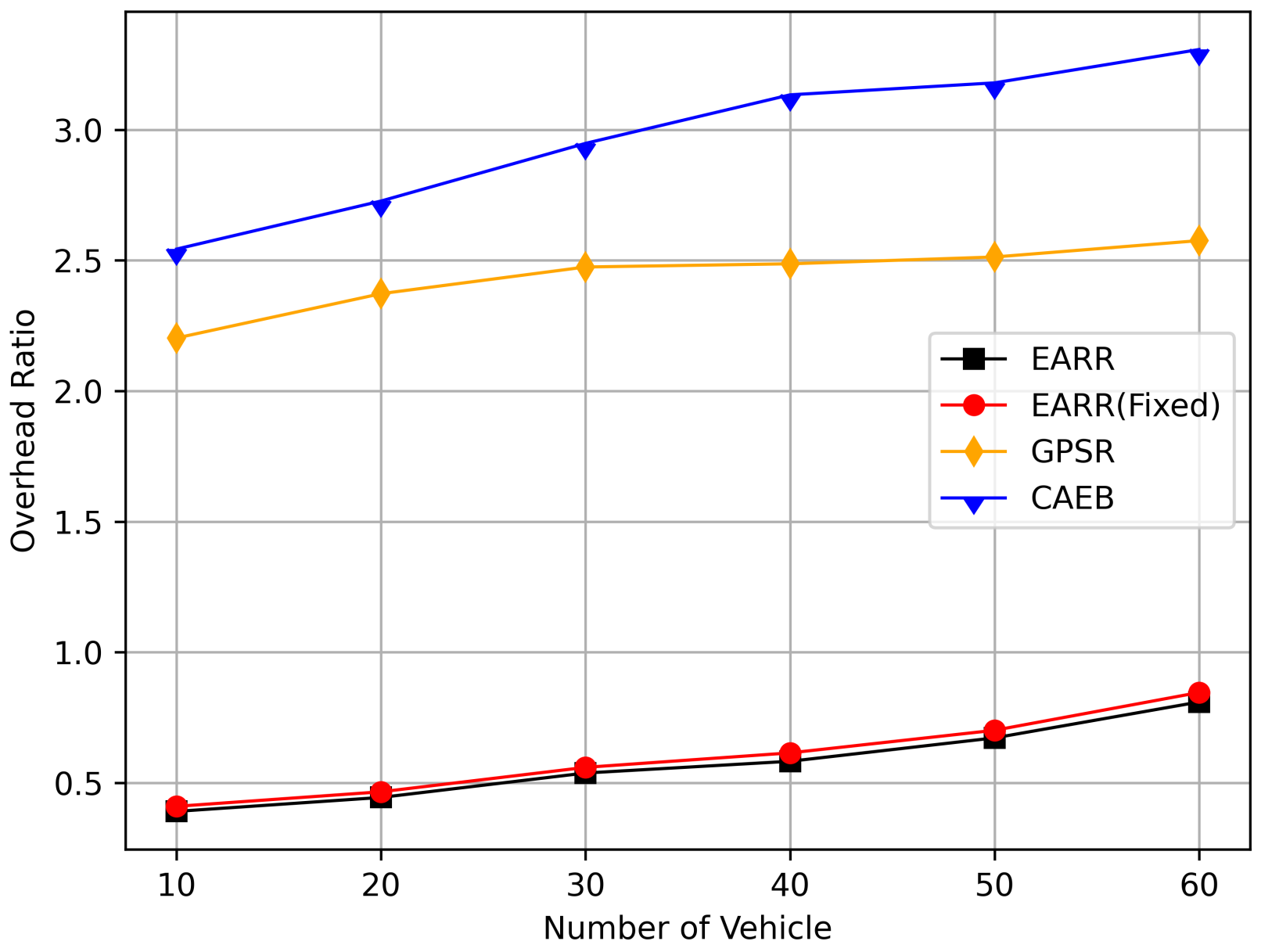

- Overhead Ratio: The total number of generated packets minus the number of packets successfully received by the destination, divided by the number of packets successfully received by the destination.

- CBR Flows: Constant bit rate (CBR) traffic is used. The source node of each CBR flow is selected randomly by the program at the start and remains unchanged throughout the simulation. In the CBR-flow experiments, the number of flows (source nodes) increases from 10 to 60, and each source sends four 512-byte data packets per second.
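The metric definitions above can be computed from a packet trace roughly as follows. The `PacketRecord` trace format is hypothetical, and dividing each packet's delay by its hop count is one possible reading of "standardized according to the length of the routing path"; the overhead ratio follows the definition given above. The helper `offered_load_bps` works out the CBR load: each flow sends 4 × 512 B × 8 = 16,384 bit/s, so 10 to 60 flows offer between 163,840 and 983,040 bit/s in total.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PacketRecord:
    """Hypothetical trace entry for one generated data packet."""
    size_bytes: int
    sent_at: float                # generation time at the source (s)
    received_at: Optional[float]  # arrival time at the destination, None if lost
    hops: int                     # hops traversed by the delivered packet


def evaluate(records: List[PacketRecord], duration_s: float) -> dict:
    delivered = [r for r in records if r.received_at is not None]
    n_gen, n_rcv = len(records), len(delivered)
    if n_rcv == 0:
        return {"pdr": 0.0, "throughput_bps": 0.0, "avg_hops": 0.0,
                "norm_delay_s": 0.0, "overhead_ratio": float("inf")}
    return {
        "pdr": n_rcv / n_gen,
        "throughput_bps": sum(8 * r.size_bytes for r in delivered) / duration_s,
        "avg_hops": sum(r.hops for r in delivered) / n_rcv,
        # Assumption: each packet's delay is divided by its hop count, then averaged
        "norm_delay_s": sum((r.received_at - r.sent_at) / max(r.hops, 1) for r in delivered) / n_rcv,
        # Overhead ratio as defined above: (generated - received) / received
        "overhead_ratio": (n_gen - n_rcv) / n_rcv,
    }


def offered_load_bps(flows: int, pkts_per_s: int = 4, size_bytes: int = 512) -> int:
    """Aggregate CBR load: flows x packets/s x bytes x 8 bits."""
    return flows * pkts_per_s * size_bytes * 8
```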

5.2. Simulation Results and Analysis

5.2.1. Impact of Simulation Time

5.2.2. Impact of Data Interval

5.2.3. CBR Flows

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Notation | Description |
|---|---|
| MANET | Mobile ad hoc network |
| VANET | Vehicular ad hoc network |
| RSSI | Received signal-strength indication |
| OBU | On-board unit |
| | Set of vehicle nodes |
| | Set of links |
| (, ) | Routing path from source S to destination D |
| () | Number of hops of the route |
| v, w | Vehicle nodes in the network |
| | Vehicle with serial number |
| | Neighbor set of vehicle node v |
| , | Positions of vehicle nodes v and w at time : = (, ), = (, ) |
| (·) | Euclidean distance |
| (·) | Link stability factor |
| (·), (·) | Link duration and link duration factor |
| (·) | Received signal-strength factor |
| (·) | Channel occupancy factor |
| (·) | Available bandwidth factor |
| (·) | Relative velocity |
| (·) | Beacon reception time |
| | Neighbor information expiration interval |
| | Beacon interval |
| (v) | Address of vehicle v |
| , | Learning rate and discount factor |

| Parameter | Value |
|---|---|
| Simulation area | 2 km × 2 km |
| Number of intersections | 25 |
| Number of segments | 40 |
| Number of vehicles | 400 |
| Signal transmission range | 250 m |
| Packet size | 512 bytes |
| Packet sending rate | 20 to 120 packets/s |
| MAC protocol | IEEE 802.11p |
| Bit rate | 6 Mbps |
| Path loss exponent | 2 |
| Propagation model | Free-space |
| Beacon interval | 1 s |
| Simulation duration | 400 s |
| | 1 s |
| | 0.95, 0.6 |
| | 0.7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jiang, Y.; Zhu, J.; Yang, K. Environment-Aware Adaptive Reinforcement Learning-Based Routing for Vehicular Ad Hoc Networks. Sensors 2024, 24, 40. https://doi.org/10.3390/s24010040
