Utilizing High Resolution Satellite Imagery for Automated Road Infrastructure Safety Assessments

Abstract

1. Introduction

Related Works

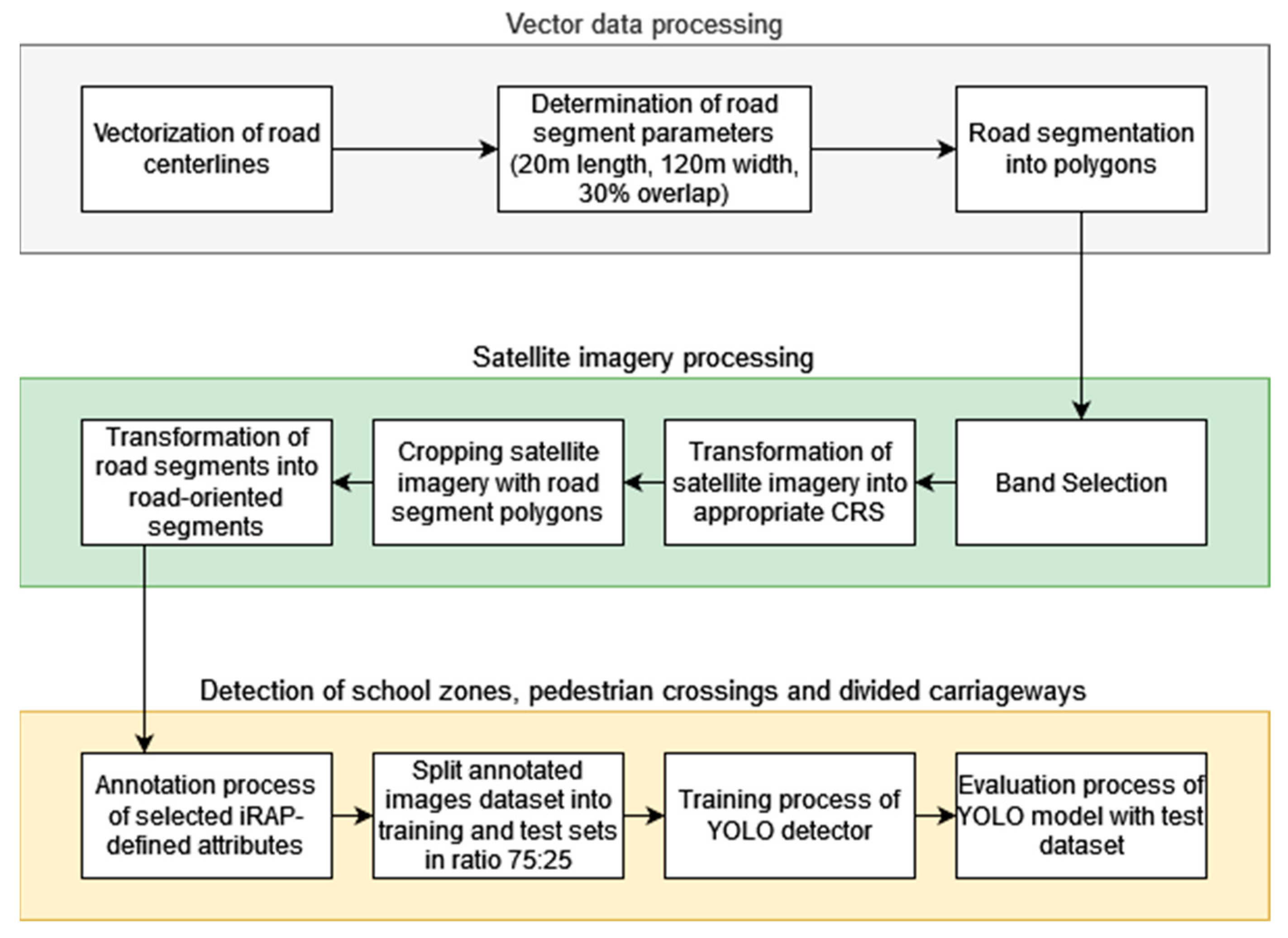

2. Materials and Methods

2.1. Vector Data Processing

2.2. Satellite Imagery Processing

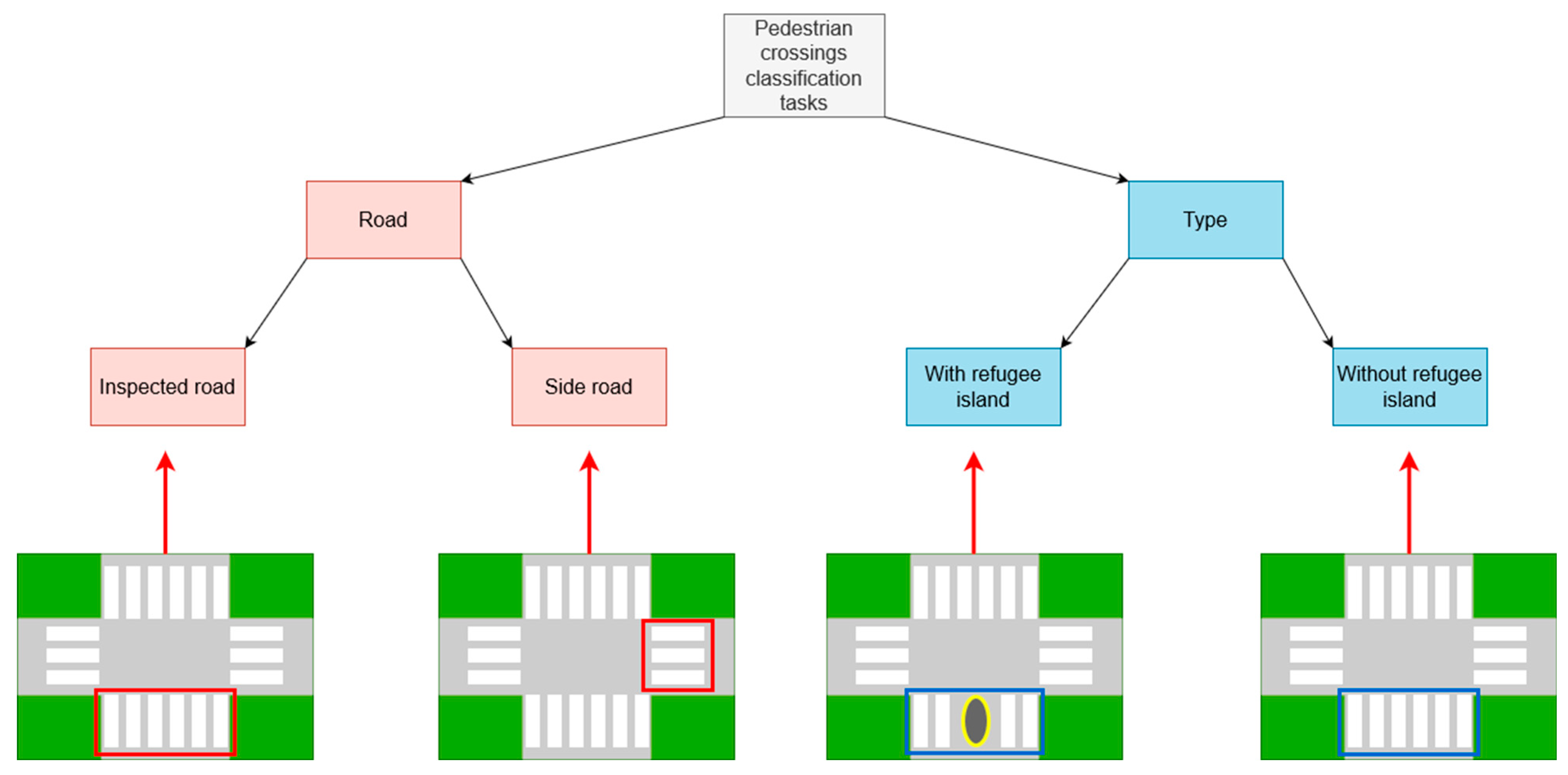

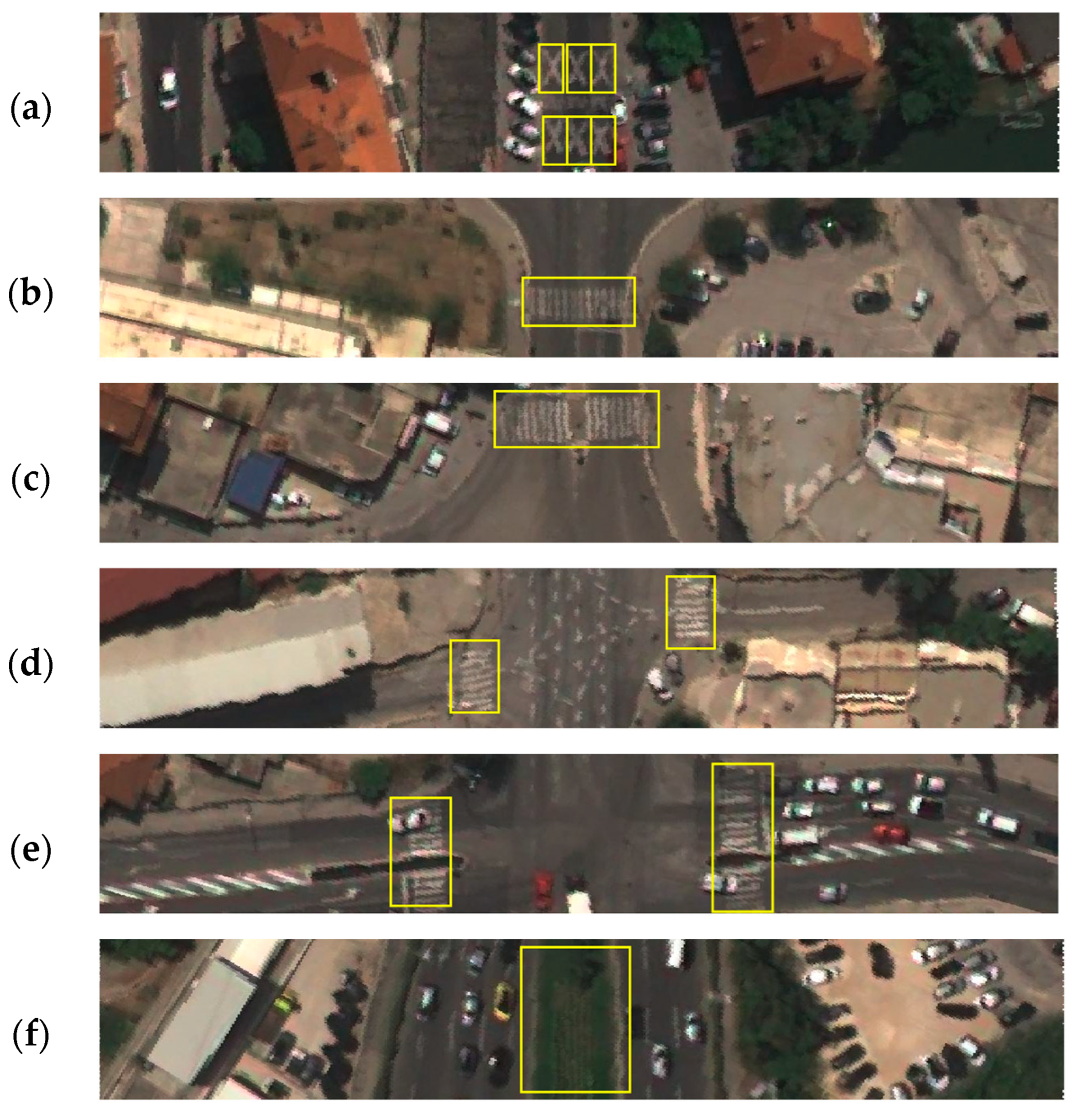

2.3. Detection of School Zones, Pedestrian Crossings, and Divided Carriageways

Experiment Analysis

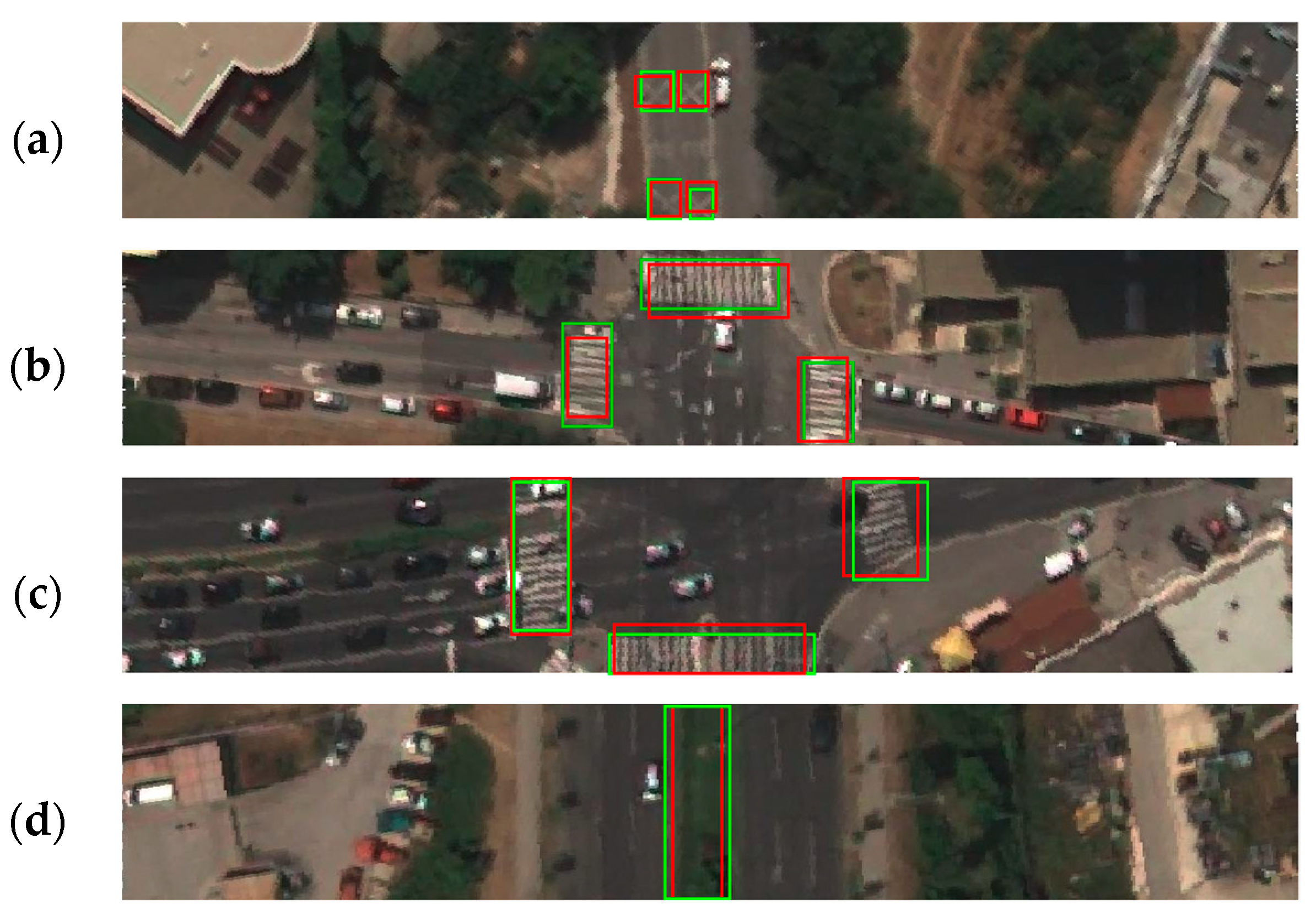

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Ground Truth | ||||||||

|---|---|---|---|---|---|---|---|---|

| SZ | IRPC | IRRI | SRPC | SRRI | DC | BFP | ||

| Predicted | SZ | 55 | 9 | |||||

| IRPC | 102 | 1 | 10 | |||||

| IRRI | 5 | 45 | 3 | |||||

| SRPC | 147 | 3 | 19 | |||||

| SRRI | 1 | 4 | 22 | 2 | ||||

| DC | 433 | 26 | ||||||

| BFN | 4 | 10 | 1 | 14 | 1 | 21 | ||
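The confusion matrix above uses predicted classes as rows and ground-truth classes as columns, with an extra BFP column and BFN row. Reading these as background false positives (a prediction with no matching ground-truth object) and background false negatives (a ground-truth object the detector missed) is an assumption based on the labels. Under that reading, the standard count-based definitions are: precision for a class divides its diagonal cell by its row sum, and recall divides it by its column sum. The minimal Python sketch below shows this. The function name `per_class_precision_recall` and the two-class matrix are hypothetical illustrations, not the paper's data or code.

```python
from typing import List, Tuple


def per_class_precision_recall(matrix: List[List[int]]) -> List[Tuple[float, float]]:
    """Return (precision, recall) for each object class.

    Assumed layout: `matrix` is (n + 1) x (n + 1); rows are predicted classes,
    columns are ground-truth classes; the last column holds background false
    positives (BFP) and the last row holds background false negatives (BFN).
    """
    n = len(matrix) - 1  # number of object classes, excluding background
    results = []
    for c in range(n):
        tp = matrix[c][c]
        predicted_as_c = sum(matrix[c])  # whole row c, including the BFP column
        actually_c = sum(matrix[r][c] for r in range(n + 1))  # column c, including the BFN row
        precision = tp / predicted_as_c if predicted_as_c else 0.0
        recall = tp / actually_c if actually_c else 0.0
        results.append((precision, recall))
    return results


# Hypothetical two-class example (not the paper's data):
#         GT: A  GT: B  BFP
toy = [
    [8, 1, 1],  # predicted A
    [0, 6, 3],  # predicted B
    [4, 1, 0],  # BFN: ground-truth objects with no prediction
]

for name, (p, r) in zip(["A", "B"], per_class_precision_recall(toy)):
    print(f"{name}: precision={p:.3f} recall={r:.3f}")
```

For class A this gives precision 8/10 and recall 8/12; the unused bottom-right cell (background predicted as background) never enters either sum.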

| Class | Accuracy | Recall | Precision | F1 Score | AP |
|---|---|---|---|---|---|
| SZ | 0.988 | 0.849 | 0.938 | 0.891 | 0.968 |
| IRPC | 0.957 | 0.870 | 0.891 | 0.880 | 0.939 |
| IRRI | 0.988 | 0.759 | 0.846 | 0.800 | 0.887 |
| SRPC | 0.986 | 0.859 | 0.932 | 0.894 | 0.923 |
| SRRI | 0.972 | 0.903 | 0.872 | 0.887 | 0.798 |
| DC | 0.950 | 0.943 | 0.954 | 0.949 | 0.979 |
| Mean | 0.974 | 0.864 | 0.905 | 0.884 | 0.916 |
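In the per-class metrics table, the F1 Score column is the harmonic mean of precision and recall, F1 = 2PR / (P + R), and the Mean row is the unweighted (macro) average over the six classes. The minimal Python sketch below recomputes both from the reported values; the dictionary name `rows` and the helper `f1` are illustrative. Because the inputs are already rounded to three decimals, a recomputed value can differ from the table in the last digit (DC gives 0.948 rather than 0.949).

```python
# Per-class (recall, precision) as reported in the table above.
rows = {
    "SZ": (0.849, 0.938),
    "IRPC": (0.870, 0.891),
    "IRRI": (0.759, 0.846),
    "SRPC": (0.859, 0.932),
    "SRRI": (0.903, 0.872),
    "DC": (0.943, 0.954),
}


def f1(recall: float, precision: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


f1_scores = {name: f1(r, p) for name, (r, p) in rows.items()}
for name, score in f1_scores.items():
    print(f"{name}: F1 = {score:.3f}")

# Macro average: unweighted mean over classes, as in the "Mean" row.
mean_recall = sum(r for r, _ in rows.values()) / len(rows)
mean_precision = sum(p for _, p in rows.values()) / len(rows)
mean_f1 = sum(f1_scores.values()) / len(f1_scores)
print(f"mean recall = {mean_recall:.3f}, mean precision = {mean_precision:.3f}, mean F1 = {mean_f1:.3f}")
```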

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Brkić, I.; Ševrović, M.; Medak, D.; Miler, M. Utilizing High Resolution Satellite Imagery for Automated Road Infrastructure Safety Assessments. Sensors 2023, 23, 4405. https://doi.org/10.3390/s23094405

Brkić I, Ševrović M, Medak D, Miler M. Utilizing High Resolution Satellite Imagery for Automated Road Infrastructure Safety Assessments. Sensors. 2023; 23(9):4405. https://doi.org/10.3390/s23094405

Chicago/Turabian Style: Brkić, Ivan, Marko Ševrović, Damir Medak, and Mario Miler. 2023. "Utilizing High Resolution Satellite Imagery for Automated Road Infrastructure Safety Assessments" Sensors 23, no. 9: 4405. https://doi.org/10.3390/s23094405

APA Style: Brkić, I., Ševrović, M., Medak, D., & Miler, M. (2023). Utilizing High Resolution Satellite Imagery for Automated Road Infrastructure Safety Assessments. Sensors, 23(9), 4405. https://doi.org/10.3390/s23094405