Multi-Domain Feature Alignment for Face Anti-Spoofing

Abstract

1. Introduction

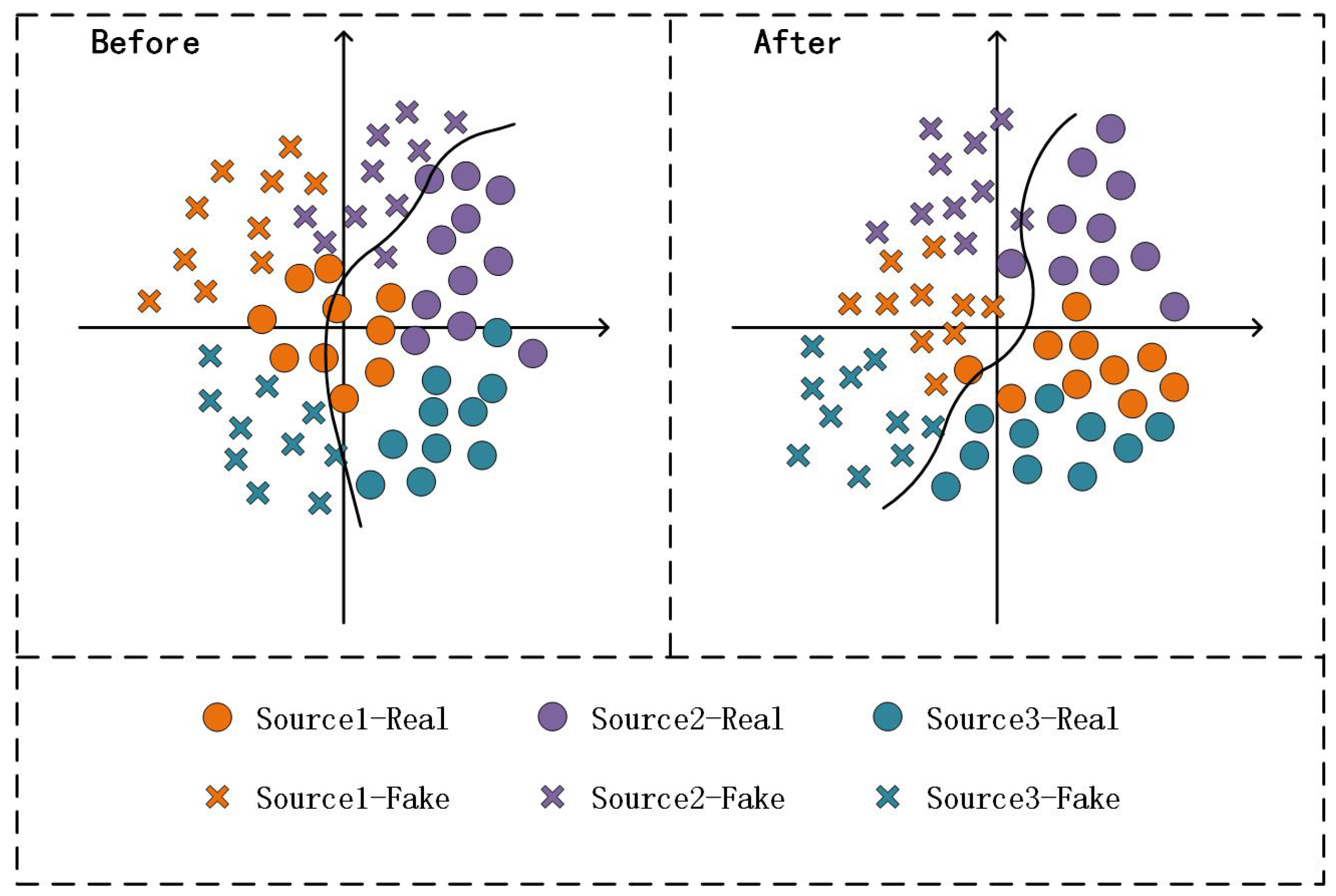

- We propose a novel source-domain-alignment domain generalization algorithm for face anti-spoofing (FAS) that combines margin disparity discrepancy (MDD) with adversarial learning. The goal is to significantly improve how well FAS models generalize to unseen target domains.

- For multi-domain problems, we devise two alignment strategies and modularize the alignment process. Experiments show that multi-domain alignment generalizes better than cross-domain alignment, which makes it the more suitable choice in multi-domain scenarios.

- We combine the new algorithm with multi-directional triplet mining and analyze the source-domain feature space. The result is a novel multi-domain feature alignment framework (MADG) that improves the classification accuracy of the FAS model.

- Extensive experiments and comparisons show that the proposed approach achieves state-of-the-art performance on most protocols.
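The first contribution combines margin disparity discrepancy (MDD) with adversarial learning. As a rough illustration only, the NumPy sketch below implements the MDD surrogate loss from Zhang et al. (ICML 2019, "Bridging theory and algorithm for domain adaptation"), applied between two source domains rather than between a source and a target domain. The function names, the margin factor `gamma` and its default, and the averaging over ordered domain pairs in `multi_domain_mdd` are hypothetical. How MADG actually pairs domains and weights this term is not reproduced here. During training, the adversarial head would minimize this loss, while a gradient reversal layer would make the feature generator maximize it.

```python
import itertools

import numpy as np


def softmax(z):
    """Row-wise softmax with max-subtraction for numerical stability."""
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def mdd_loss(logits_a, adv_logits_a, logits_b, adv_logits_b, gamma=4.0, eps=1e-6):
    """MDD surrogate between domain A (plays 'source') and domain B (plays 'target').

    logits_*     : main classifier head outputs, shape (N, C)
    adv_logits_* : adversarial head outputs on the same samples, shape (N, C)
    gamma        : margin factor (hypothetical default)
    """
    pseudo_a = logits_a.argmax(axis=1)  # main head's predictions act as labels
    pseudo_b = logits_b.argmax(axis=1)
    p_a = softmax(adv_logits_a)
    p_b = softmax(adv_logits_b)
    # Domain A: cross-entropy rewarding the adversarial head for agreeing with the main head.
    agree = -np.log(np.clip(p_a[np.arange(len(pseudo_a)), pseudo_a], eps, 1.0)).mean()
    # Domain B: modified log-loss rewarding the adversarial head for disagreeing.
    disagree = -np.log(np.clip(1.0 - p_b[np.arange(len(pseudo_b)), pseudo_b], eps, 1.0)).mean()
    return gamma * agree + disagree


def multi_domain_mdd(outputs, gamma=4.0):
    """Hypothetical multi-domain variant: average MDD over all ordered domain pairs.

    outputs: list of (logits, adv_logits) tuples, one per source domain.
    """
    pairs = list(itertools.permutations(range(len(outputs)), 2))
    total = sum(mdd_loss(*outputs[i], *outputs[j], gamma=gamma) for i, j in pairs)
    return total / len(pairs)
```

The two terms are asymmetric, so the result depends on which domain plays "source". For that reason, the sketch averages over ordered pairs rather than unordered ones.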
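The third contribution relies on multi-directional triplet mining. This excerpt does not spell out the exact mining directions, so the sketch below substitutes a generic batch-hard triplet loss in NumPy. The label scheme in `triplet_labels` merges real faces from all domains into one class and keeps spoof faces from each domain apart. That scheme is an assumption borrowed in spirit from the asymmetric triplet mining of SSDG [8]. The function names and the `margin` value are hypothetical.

```python
import numpy as np


def triplet_labels(is_real, domain):
    """Hypothetical labels: 0 for every real face, k + 1 for spoof faces from domain k."""
    return np.where(is_real, 0, domain + 1)


def batch_hard_triplet_loss(emb, labels, margin=0.1):
    """Batch-hard triplet loss on L2-normalized embeddings.

    For each anchor, take the farthest same-label sample and the closest
    different-label sample, then apply the hinge max(d_pos - d_neg + margin, 0).
    """
    emb = emb / np.linalg.norm(emb, axis=1, keepdims=True)
    sq = ((emb[:, None, :] - emb[None, :, :]) ** 2).sum(axis=-1)
    dist = np.sqrt(np.maximum(sq, 0.0))
    same = labels[:, None] == labels[None, :]
    losses = []
    for i in range(len(labels)):
        pos = same[i].copy()
        pos[i] = False  # an anchor is not its own positive
        neg = ~same[i]
        if not pos.any() or not neg.any():
            continue  # no valid triplet for this anchor
        hardest_pos = dist[i][pos].max()
        hardest_neg = dist[i][neg].min()
        losses.append(max(hardest_pos - hardest_neg + margin, 0.0))
    return float(np.mean(losses)) if losses else 0.0
```

With well-separated classes the hinge is inactive and the loss is zero. When real and spoof embeddings overlap, each anchor contributes roughly its hardest-positive distance plus the margin.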

2. Related Work

2.1. Face Anti-Spoofing Methods

2.2. Multi-Domain Learning

2.3. Domain Generalization

3. Proposed Approach

3.1. Overview

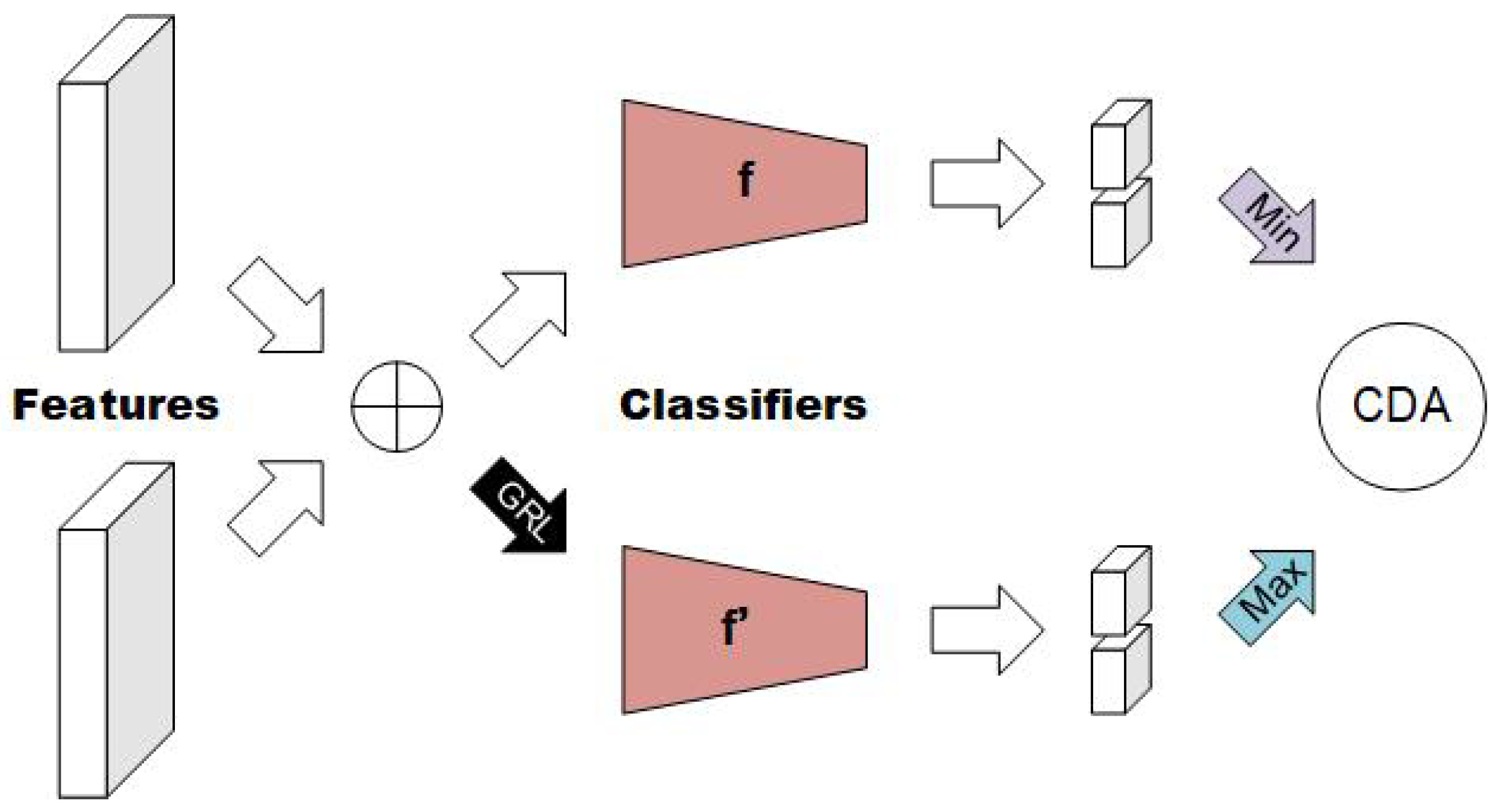

3.2. Multi-Domain Feature Alignment

3.3. Multi-Directional Triplet Mining

3.4. Loss Function

4. Experiments

4.1. Experimental Setting

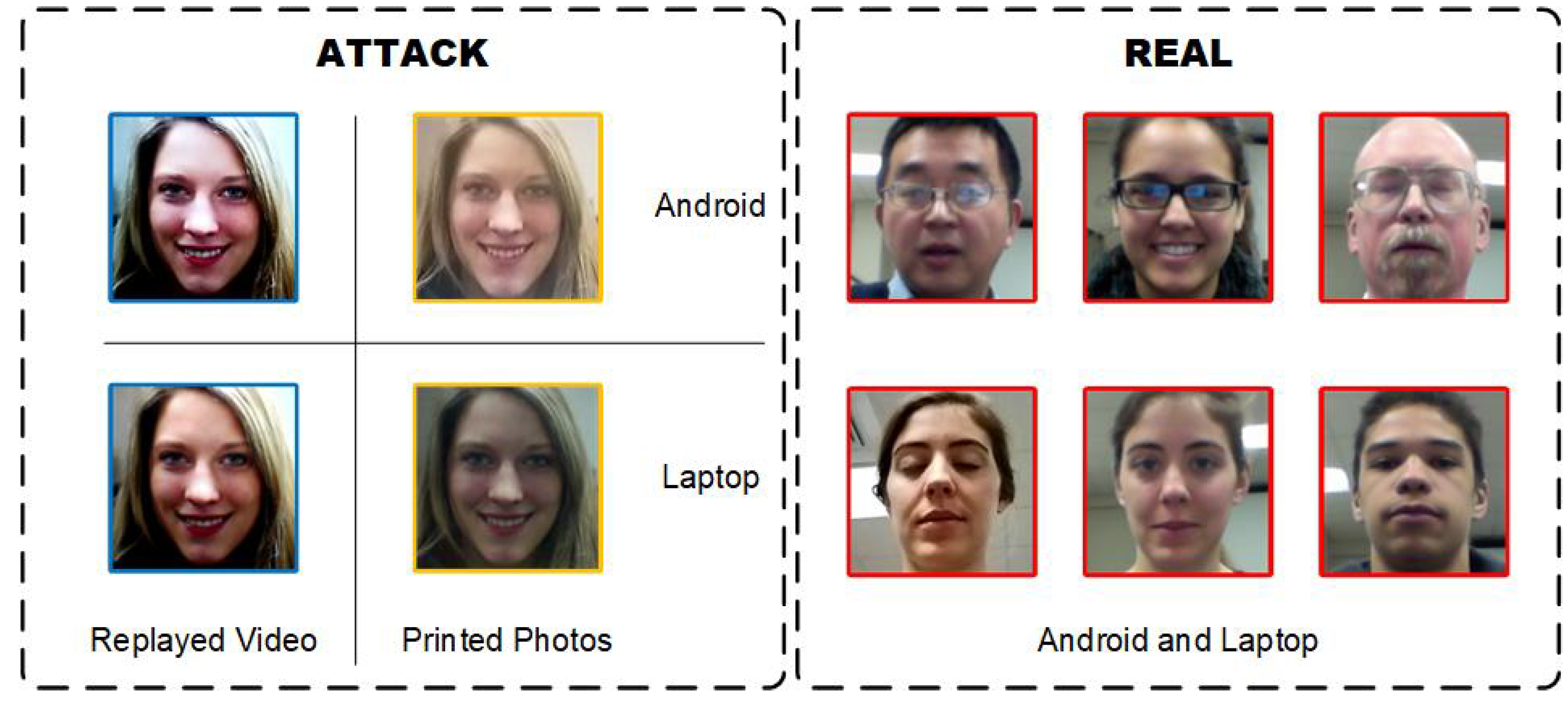

4.1.1. Databases and Protocols

4.1.2. Evaluation Metrics
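The result tables report the half total error rate (HTER) and the area under the ROC curve (AUC). The minimal sketch below computes both. It assumes that scores are predicted probabilities of the live (bona fide) class and that labels use 1 for live and 0 for spoof. HTER is the mean of the false acceptance rate (FAR, spoof faces accepted as live) and the false rejection rate (FRR, live faces rejected) at a given threshold. How the threshold is chosen is left to the caller and is an assumption here. AUC uses the Mann–Whitney formulation, with ties counted as one half.

```python
import numpy as np


def hter(scores, labels, threshold):
    """Half total error rate at a fixed threshold (1 = live, 0 = spoof)."""
    scores, labels = np.asarray(scores, float), np.asarray(labels)
    live, spoof = scores[labels == 1], scores[labels == 0]
    far = np.mean(spoof >= threshold)  # spoof faces accepted as live
    frr = np.mean(live < threshold)    # live faces rejected as spoof
    return float((far + frr) / 2)


def auc(scores, labels):
    """ROC AUC as the probability that a random live face outscores a random spoof face."""
    scores, labels = np.asarray(scores, float), np.asarray(labels)
    live = scores[labels == 1][:, None]
    spoof = scores[labels == 0][None, :]
    return float((live > spoof).mean() + 0.5 * (live == spoof).mean())


scores = [0.9, 0.8, 0.4, 0.3, 0.6, 0.2]
labels = [1, 1, 1, 0, 0, 0]
print(f"HTER = {100 * hter(scores, labels, 0.5):.2f}%")  # HTER = 33.33%
print(f"AUC  = {100 * auc(scores, labels):.2f}%")        # AUC  = 88.89%
```

At threshold 0.5, one of three spoof faces is accepted and one of three live faces is rejected, which gives an HTER of one third.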

4.2. Implementation Details

4.2.1. Data Preprocessing

4.2.2. Network Structure

4.2.3. Training Setting

4.2.4. Testing Setting

4.3. Discussion

4.3.1. Ablation Study

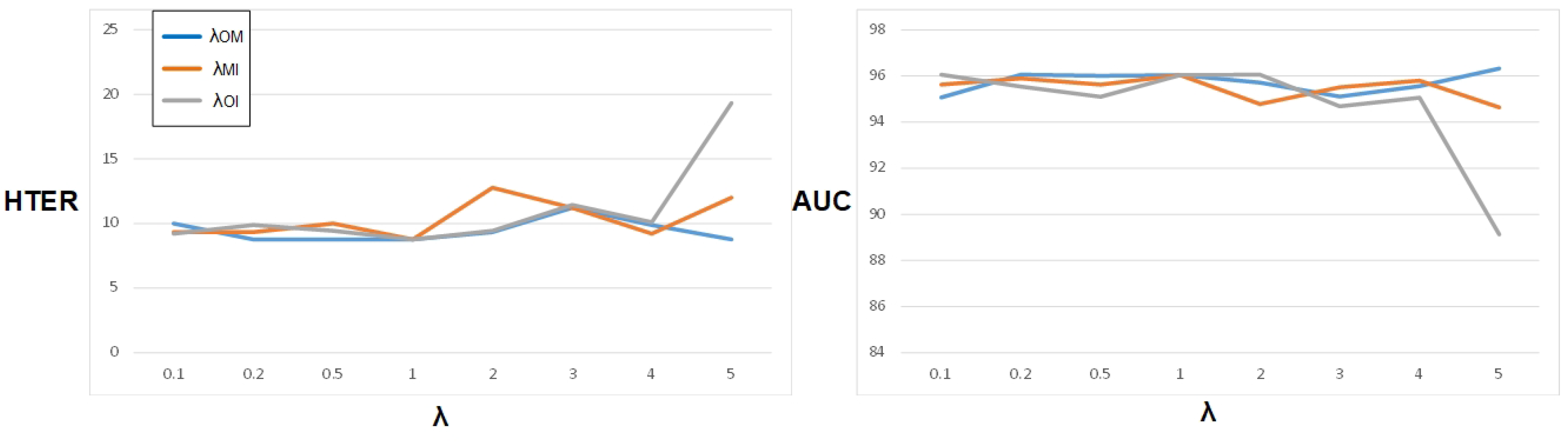

4.3.2. Comparisons of Different Alignment Weights

4.3.3. Experiments on Limited Source Domains

4.3.4. Comparison of Different Alignment Strategies

4.4. Comparison with State-of-the-Art Methods

4.4.1. Comparison on Limited Source Domains

4.4.2. Comparison with Baseline Methods

4.4.3. Comparison with Other SOTA Methods

4.5. Visualization and Analysis

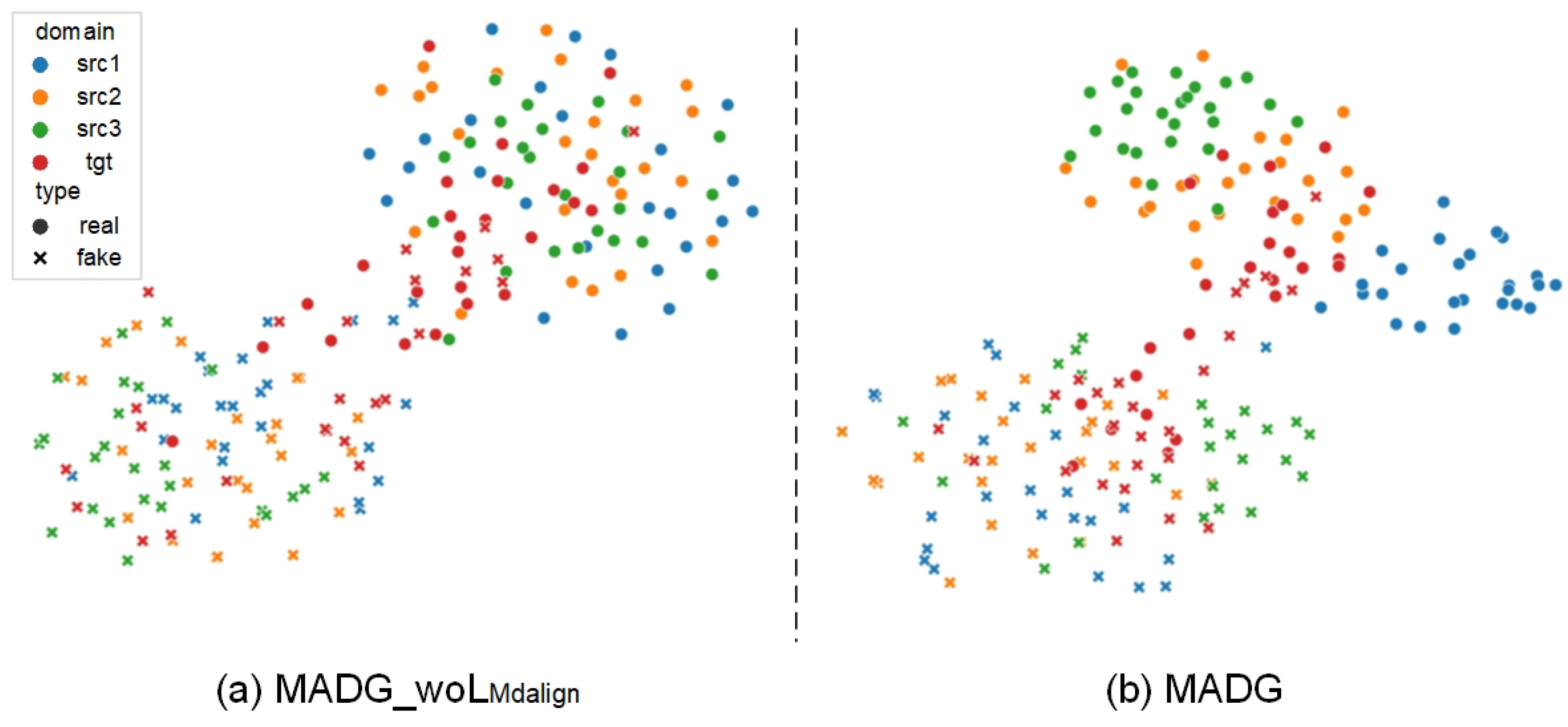

4.5.1. Feature Visualization

4.5.2. Analysis of Misclassified Samples

4.6. Conclusion of Experiments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Deng, J.; Guo, J.; Xue, N.; Zafeiriou, S. Arcface: Additive angular margin loss for deep face recognition. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4690–4699.
2. Wang, M.; Deng, W. Deep face recognition: A survey. Neurocomputing 2021, 429, 215–244.
3. Yu, Z.; Qin, Y.; Li, X.; Zhao, C.; Lei, Z.; Zhao, G. Deep learning for face anti-spoofing: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 5609–5631.
4. Benlamoudi, A.; Bekhouche, S.E.; Korichi, M.; Bensid, K.; Ouahabi, A.; Hadid, A.; Taleb-Ahmed, A. Face Presentation Attack Detection Using Deep Background Subtraction. Sensors 2022, 22, 3760.
5. Li, S.; Dutta, V.; He, X.; Matsumaru, T. Deep Learning Based One-Class Detection System for Fake Faces Generated by GAN Network. Sensors 2022, 22, 7767.
6. Wang, Z.; Wang, Z.; Yu, Z.; Deng, W.; Li, J.; Gao, T.; Wang, Z. Domain Generalization via Shuffled Style Assembly for Face Anti-Spoofing. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 4123–4133.
7. Shao, R.; Lan, X.; Li, J.; Yuen, P.C. Multi-adversarial discriminative deep domain generalization for face presentation attack detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 10023–10031.
8. Jia, Y.; Zhang, J.; Shan, S.; Chen, X. Single-side domain generalization for face anti-spoofing. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 8484–8493.
9. Määttä, J.; Hadid, A.; Pietikäinen, M. Face spoofing detection from single images using micro-texture analysis. In Proceedings of the 2011 International Joint Conference on Biometrics (IJCB), Washington, DC, USA, 11–13 October 2011; pp. 1–7.
10. Freitas Pereira, T.d.; Komulainen, J.; Anjos, A.; De Martino, J.M.; Hadid, A.; Pietikäinen, M.; Marcel, S. Face liveness detection using dynamic texture. EURASIP J. Image Video Process. 2014, 2014, 2.
11. Patel, K.; Han, H.; Jain, A.K. Secure face unlock: Spoof detection on smartphones. IEEE Trans. Inf. Forensics Secur. 2016, 11, 2268–2283.
12. Kollreider, K.; Fronthaler, H.; Faraj, M.I.; Bigun, J. Real-time face detection and motion analysis with application in “liveness” assessment. IEEE Trans. Inf. Forensics Secur. 2007, 2, 548–558.
13. Pan, G.; Sun, L.; Wu, Z.; Lao, S. Eyeblink-based anti-spoofing in face recognition from a generic webcamera. In Proceedings of the 2007 IEEE 11th International Conference on Computer Vision (ICCV), Rio de Janeiro, Brazil, 14–21 October 2007; pp. 1–8.
14. Sun, L.; Pan, G.; Wu, Z.; Lao, S. Blinking-based live face detection using conditional random fields. In Proceedings of the International Conference on Biometrics (ICB), Seoul, Republic of Korea, 27–29 August 2007; pp. 252–260.
15. Yang, J.; Lei, Z.; Li, S.Z. Learn convolutional neural network for face anti-spoofing. arXiv 2014, arXiv:1408.5601.
16. Jourabloo, A.; Liu, Y.; Liu, X. Face de-spoofing: Anti-spoofing via noise modeling. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 290–306.
17. Liu, Y.; Jourabloo, A.; Liu, X. Learning deep models for face anti-spoofing: Binary or auxiliary supervision. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 389–398.
18. Liu, S.; Yuen, P.C.; Zhang, S.; Zhao, G. 3D mask face anti-spoofing with remote photoplethysmography. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 85–100.
19. Liu, S.Q.; Lan, X.; Yuen, P.C. Remote photoplethysmography correspondence feature for 3D mask face presentation attack detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 558–573.
20. Yang, X.; Luo, W.; Bao, L.; Gao, Y.; Gong, D.; Zheng, S.; Li, Z.; Liu, W. Face anti-spoofing: Model matters, so does data. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 3507–3516.
21. Roy, K.; Hasan, M.; Rupty, L.; Hossain, M.S.; Sengupta, S.; Taus, S.N.; Mohammed, N. Bi-fpnfas: Bi-directional feature pyramid network for pixel-wise face anti-spoofing by leveraging fourier spectra. Sensors 2021, 21, 2799.
22. Liu, Y.; Stehouwer, J.; Jourabloo, A.; Liu, X. Deep tree learning for zero-shot face anti-spoofing. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4680–4689.
23. Liu, Y.; Stehouwer, J.; Liu, X. On disentangling spoof trace for generic face anti-spoofing. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 406–422.
24. Zhang, K.Y.; Yao, T.; Zhang, J.; Tai, Y.; Ding, S.; Li, J.; Huang, F.; Song, H.; Ma, L. Face anti-spoofing via disentangled representation learning. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 641–657.
25. Feng, L.; Po, L.M.; Li, Y.; Xu, X.; Yuan, F.; Cheung, T.C.H.; Cheung, K.W. Integration of image quality and motion cues for face anti-spoofing: A neural network approach. J. Vis. Commun. Image Represent. 2016, 38, 451–460.
26. Li, L.; Feng, X.; Boulkenafet, Z.; Xia, Z.; Li, M.; Hadid, A. An original face anti-spoofing approach using partial convolutional neural network. In Proceedings of the 2016 Sixth International Conference on Image Processing Theory, Tools and Applications (IPTA), Oulu, Finland, 12–15 December 2016; pp. 1–6.
27. Patel, K.; Han, H.; Jain, A.K. Cross-database face antispoofing with robust feature representation. In Proceedings of the Biometric Recognition: 11th Chinese Conference (CCBR), Chengdu, China, 14–16 October 2016; pp. 611–619.
28. Chingovska, I.; Anjos, A.; Marcel, S. On the effectiveness of local binary patterns in face anti-spoofing. In Proceedings of the 2012 BIOSIG-Proceedings of the International Conference of Biometrics Special Interest Group (BIOSIG), Darmstadt, Germany, 6–7 September 2012; pp. 1–7.
29. Zhang, Z.; Yan, J.; Liu, S.; Lei, Z.; Yi, D.; Li, S.Z. A face antispoofing database with diverse attacks. In Proceedings of the 2012 5th IAPR International Conference on Biometrics (ICB), New Delhi, India, 29 March–1 April 2012; pp. 26–31.
30. Wen, D.; Han, H.; Jain, A.K. Face spoof detection with image distortion analysis. IEEE Trans. Inf. Forensics Secur. 2015, 10, 746–761.
31. Boulkenafet, Z.; Komulainen, J.; Li, L.; Feng, X.; Hadid, A. OULU-NPU: A mobile face presentation attack database with real-world variations. In Proceedings of the 2017 12th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2017), Washington, DC, USA, 30 May–3 June 2017; pp. 612–618.
32. Rebuffi, S.A.; Bilen, H.; Vedaldi, A. Learning multiple visual domains with residual adapters. arXiv 2017, arXiv:1705.08045.
33. Li, H.; Li, W.; Cao, H.; Wang, S.; Huang, F.; Kot, A.C. Unsupervised domain adaptation for face anti-spoofing. IEEE Trans. Inf. Forensics Secur. 2018, 13, 1794–1809.
34. Mancini, M.; Ricci, E.; Caputo, B.; Rota Bulo, S. Adding new tasks to a single network with weight transformations using binary masks. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.
35. Rebuffi, S.A.; Bilen, H.; Vedaldi, A. Efficient parametrization of multi-domain deep neural networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8119–8127.
36. Tu, X.; Zhang, H.; Xie, M.; Luo, Y.; Zhang, Y.; Ma, Z. Deep transfer across domains for face antispoofing. J. Electron. Imaging 2019, 28, 043001.
37. Zhang, Y.; Liu, T.; Long, M.; Jordan, M. Bridging theory and algorithm for domain adaptation. In Proceedings of the International Conference on Machine Learning (ICML), Long Beach, CA, USA, 9–15 June 2019; pp. 7404–7413.
38. Wang, G.; Han, H.; Shan, S.; Chen, X. Improving cross-database face presentation attack detection via adversarial domain adaptation. In Proceedings of the 2019 International Conference on Biometrics (ICB), Crete, Greece, 4–7 June 2019; pp. 1–8.
39. Wang, G.; Han, H.; Shan, S.; Chen, X. Unsupervised adversarial domain adaptation for cross-domain face presentation attack detection. IEEE Trans. Inf. Forensics Secur. 2020, 16, 56–69.
40. Ariza-Colpas, P.; Piñeres-Melo, M.; Barceló-Martínez, E.; De la Hoz-Franco, E.; Benitez-Agudelo, J.; Gelves-Ospina, M.; Echeverri-Ocampo, I.; Combita-Nino, H.; Leon-Jacobus, A. Enkephalon-technological platform to support the diagnosis of alzheimer’s disease through the analysis of resonance images using data mining techniques. In Proceedings of the Advances in Swarm Intelligence: 10th International Conference, ICSI 2019, Chiang Mai, Thailand, 26–30 July 2019; pp. 211–220.
41. Liu, Z.; Gu, X.; Chen, J.; Wang, D.; Chen, Y.; Wang, L. Automatic recognition of pavement cracks from combined GPR B-scan and C-scan images using multiscale feature fusion deep neural networks. Autom. Constr. 2023, 146, 104698.
42. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
43. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.
44. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.
45. Yang, J.; Lan, G.; Xiao, S.; Li, Y.; Wen, J.; Zhu, Y. Enriching facial anti-spoofing datasets via an effective face swapping framework. Sensors 2022, 22, 4697.
46. Guo, X.; Liu, Y.; Jain, A.; Liu, X. Multi-domain Learning for Updating Face Anti-spoofing Models. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; pp. 230–249.
47. Motiian, S.; Piccirilli, M.; Adjeroh, D.A.; Doretto, G. Unified deep supervised domain adaptation and generalization. In Proceedings of the 2017 IEEE/CVF International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 5715–5725.
48. Ghifary, M.; Kleijn, W.B.; Zhang, M.; Balduzzi, D. Domain generalization for object recognition with multi-task autoencoders. In Proceedings of the 2015 IEEE/CVF International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 2551–2559.
49. Li, H.; Pan, S.J.; Wang, S.; Kot, A.C. Domain generalization with adversarial feature learning. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 5400–5409.
50. Saha, S.; Xu, W.; Kanakis, M.; Georgoulis, S.; Chen, Y.; Paudel, D.P.; Van Gool, L. Domain agnostic feature learning for image and video based face anti-spoofing. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Seattle, WA, USA, 13–19 June 2020; pp. 802–803.
51. Kim, T.; Kim, Y. Suppressing spoof-irrelevant factors for domain-agnostic face anti-spoofing. IEEE Access 2021, 9, 86966–86974.
52. Shao, R.; Lan, X.; Yuen, P.C. Regularized fine-grained meta face anti-spoofing. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11974–11981.
53. Chen, Z.; Yao, T.; Sheng, K.; Ding, S.; Tai, Y.; Li, J.; Huang, F.; Jin, X. Generalizable representation learning for mixture domain face anti-spoofing. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), Vancouver, BC, Canada, 2–9 February 2021; Volume 35, pp. 1132–1139.
54. Wang, J.; Zhang, J.; Bian, Y.; Cai, Y.; Wang, C.; Pu, S. Self-domain adaptation for face anti-spoofing. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), Vancouver, BC, Canada, 2–9 February 2021; Volume 35, pp. 2746–2754.
55. Zhang, K.; Zhang, Z.; Li, Z.; Qiao, Y. Joint face detection and alignment using multitask cascaded convolutional networks. IEEE Signal Process. Lett. 2016, 23, 1499–1503.
56. Wang, G.; Han, H.; Shan, S.; Chen, X. Cross-domain face presentation attack detection via multi-domain disentangled representation learning. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 6678–6687.
57. Liu, S.; Zhang, K.Y.; Yao, T.; Bi, M.; Ding, S.; Li, J.; Huang, F.; Ma, L. Adaptive normalized representation learning for generalizable face anti-spoofing. In Proceedings of the 29th ACM International Conference on Multimedia (ACM MM), Virtual Event, China, 20–24 October 2021; pp. 1469–1477.
58. Liu, S.; Zhang, K.Y.; Yao, T.; Sheng, K.; Ding, S.; Tai, Y.; Li, J.; Xie, Y.; Ma, L. Dual reweighting domain generalization for face presentation attack detection. arXiv 2021, arXiv:2106.16128.

Feature Generator

| Layer | Chan./Stri. | Resampling | Output Shape |
|---|---|---|---|
| Input | | | |
| image | | | |
| conv1-1 | 64/2 | None | |
| pool1 | -/2 | - | |
| layer1 | | | |
| conv1-2 | 64/1 | None | |
| conv2-1 | 64/1 | None | |
| conv1-3 | 64/1 | None | |
| conv2-2 | 64/1 | None | |
| layer2 | | | |
| conv1-4 | 128/2 | None | |
| conv2-3 | 128/1 | None | |
| conv2-4 | 128/2 | Down | |
| conv1-5 | 128/1 | None | |
| conv2-5 | 128/1 | None | |
| layer3 | | | |
| conv1-6 | 256/2 | None | |
| conv2-6 | 256/1 | None | |
| conv2-7 | 256/2 | Down | |
| conv1-7 | 256/1 | None | |
| conv2-8 | 256/1 | None | |
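The Output Shape column is empty for most rows. As a hedged sketch, the missing sizes can be derived from the Chan./Stri. column with the standard convolution output-size formula. The derivation assumes a 256 × 256 input and ResNet-18-style kernels and padding: a 7 × 7 kernel with padding 3 for conv1-1, 3 × 3 max-pooling with padding 1 for pool1, 3 × 3 convolutions with padding 1 elsewhere, and 1 × 1 unpadded convolutions for the "Down" shortcut rows. The table itself gives neither the kernel sizes nor the input resolution.

```python
def conv_out(size, kernel, stride, padding):
    """Spatial output size of a convolution or pooling layer (no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1


# (name, out_channels, stride, kernel, padding) along the main path of the table.
# Channels and strides come from the table; kernels and paddings are assumptions.
GENERATOR = [
    ("conv1-1", 64, 2, 7, 3),
    ("pool1", 64, 2, 3, 1),
    ("conv1-2", 64, 1, 3, 1), ("conv2-1", 64, 1, 3, 1),
    ("conv1-3", 64, 1, 3, 1), ("conv2-2", 64, 1, 3, 1),
    ("conv1-4", 128, 2, 3, 1), ("conv2-3", 128, 1, 3, 1),
    ("conv1-5", 128, 1, 3, 1), ("conv2-5", 128, 1, 3, 1),
    ("conv1-6", 256, 2, 3, 1), ("conv2-6", 256, 1, 3, 1),
    ("conv1-7", 256, 1, 3, 1), ("conv2-8", 256, 1, 3, 1),
]


def output_shapes(layers, size=256):
    """Return {layer name: (channels, height, width)} for a square input."""
    shapes = {}
    for name, channels, stride, kernel, padding in layers:
        size = conv_out(size, kernel, stride, padding)
        shapes[name] = (channels, size, size)
    return shapes


shapes = output_shapes(GENERATOR)
for name, shape in shapes.items():
    print(f"{name:8s} {shape}")

# The 1x1, stride-2 "Down" shortcuts (conv2-4, conv2-7) should match the main path.
assert conv_out(64, 1, 2, 0) == shapes["conv1-4"][1]
assert conv_out(32, 1, 2, 0) == shapes["conv1-6"][1]
```

Under these assumptions, the spatial size shrinks from 256 to 128 after conv1-1, then 64, 32, and 16 at the ends of pool1/layer1, layer2, and layer3.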

Feature Embedder and Classifier

| Layer | Chan./Stri. | Resampling | Output Shape |
|---|---|---|---|
| Input | | | |
| conv2-8 | | | |
| layer4 | | | |
| conv1-8 | 512/2 | None | |
| conv2-9 | 512/1 | None | |
| conv2-10 | 512/2 | Down | |
| conv1-9 | 512/1 | None | |
| conv2-11 | 512/1 | None | |
| bottleneck | | | |
| Average pooling | | | |
| fc1-1 | 512/1 | - | 512 |
| fc1-2 | 512/1 | - | 512 |
| fc2-2 | 2/1 | - | 2 |
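To make the data flow of this table concrete, the NumPy sketch below passes a layer4 output through the bottleneck and the binary classifier using random, untrained weights. It assumes several things not stated in the table. First, layer4 produces a 512 × 8 × 8 map, which follows from a 256 × 256 input. Second, ReLU sits between the fully connected layers. Third, fc2-2 outputs live/spoof logits. The weight dictionary `W` and the helper names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def dense(x, w, b, relu=True):
    """Fully connected layer, optionally followed by ReLU (activation is an assumption)."""
    y = x @ w + b
    return np.maximum(y, 0.0) if relu else y


def init(n_in, n_out):
    """Small random weights for illustration only (not trained)."""
    return rng.normal(0.0, 0.02, size=(n_in, n_out)), np.zeros(n_out)


W = {"fc1-1": init(512, 512), "fc1-2": init(512, 512), "fc2-2": init(512, 2)}


def embed_and_classify(layer4_out):
    """layer4_out: (N, 512, H, W) -> (embedding (N, 512), logits (N, 2))."""
    pooled = layer4_out.mean(axis=(2, 3))               # average pooling -> (N, 512)
    h = dense(pooled, *W["fc1-1"])                      # fc1-1 -> 512
    embedding = dense(h, *W["fc1-2"])                   # fc1-2 -> 512
    logits = dense(embedding, *W["fc2-2"], relu=False)  # fc2-2 -> 2 (live/spoof)
    return embedding, logits


feats = rng.normal(size=(4, 512, 8, 8))
emb, logits = embed_and_classify(feats)
print(emb.shape, logits.shape)  # (4, 512) (4, 2)
```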

Alignment Classifier

| Layer | Chan./Stri. | Output Shape |
|---|---|---|
| Input | | |
| conv2-11 | | |
| bottleneck | | |
| pool | -/1 | |
| fc3-1 | 1024/1 | 1024 |
| head | | |
| fc4-1 | 1024/1 | |
| fc5-1 | 2/1 | 2 |
| grl layer | | 1024 |
| adv head | | |
| fc4-2 | 1024/1 | |
| fc5-2 | 2/1 | 2 |
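The alignment classifier resembles an MDD-style discriminator. A shared bottleneck feeds a main head and an adversarial head, and a gradient reversal layer (the "grl layer" row) sits in front of the adversarial head. The sketch below uses hand-coded forward and backward passes in NumPy so that it runs without a deep learning framework. It shows only what the GRL does: the layer is the identity in the forward pass and multiplies incoming gradients by `-lam` in the backward pass. The class names, the coefficient `lam`, and the omission of activations between fc4-2 and fc5-2 are assumptions for illustration.

```python
import numpy as np


class GradientReversal:
    """Identity forward; multiplies the upstream gradient by -lam on the way back."""

    def __init__(self, lam=1.0):
        self.lam = lam

    def forward(self, x):
        return x

    def backward(self, grad_output):
        return -self.lam * grad_output


class Linear:
    """Fully connected layer with a manual input-gradient pass (weights kept fixed)."""

    def __init__(self, n_in, n_out, rng):
        self.w = rng.normal(0.0, 0.02, size=(n_in, n_out))
        self.b = np.zeros(n_out)

    def forward(self, x):
        return x @ self.w + self.b

    def backward(self, grad_output):
        return grad_output @ self.w.T  # gradient with respect to the input


rng = np.random.default_rng(0)
bottleneck = rng.normal(size=(4, 1024))  # stands in for the fc3-1 output
grl = GradientReversal(lam=0.5)
adv_head = [Linear(1024, 1024, rng), Linear(1024, 2, rng)]  # fc4-2, fc5-2

x = grl.forward(bottleneck)
for layer in adv_head:
    x = layer.forward(x)
adv_logits = x  # shape (4, 2)

# Backpropagate a dummy gradient from the adversarial logits to the bottleneck.
g = np.ones_like(adv_logits)
for layer in reversed(adv_head):
    g = layer.backward(g)
grad_to_bottleneck = grl.backward(g)
```

Frameworks such as PyTorch usually implement the same layer as a custom autograd function. The sign flip is the essential part: it lets the adversarial head minimize the alignment loss while the layers upstream of the GRL are pushed to maximize it.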

| Method | O&C&I to M HTER (%) | O&C&I to M AUC (%) | O&M&I to C HTER (%) | O&M&I to C AUC (%) | O&C&M to I HTER (%) | O&C&M to I AUC (%) | I&C&M to O HTER (%) | I&C&M to O AUC (%) |
|---|---|---|---|---|---|---|---|---|
| | 8.63 | 95.79 | 11.22 | 94.73 | 16.50 | 91.42 | 14.88 | 92.84 |
| | 8.40 | 96.72 | 8.56 | 96.95 | 22.14 | 77.60 | 19.88 | 87.53 |
| MADG | 7.20 | 97.57 | 8.78 | 96.33 | 15.64 | 92.53 | 13.99 | 94.19 |

| Protocol | SSDG-R [8] HTER (%) | SSDG-R [8] AUC (%) | MADG-M (Ours) HTER (%) | MADG-M (Ours) AUC (%) | MADG-S (Ours) HTER (%) | MADG-S (Ours) AUC (%) |
|---|---|---|---|---|---|---|
| O&M to C | 8.11 | 96.80 | 8.89 | 97.04 | 7.78 | 97.39 |
| O&I to C | 24.67 | 84.24 | 21.89 | 88.12 | 20.78 | 87.63 |
| M&I to C | 22.67 | 83.54 | 23.33 | 82.32 | 25.22 | 81.66 |
| C&M to O | 13.54 | 93.718 | 15.09 | 92.47 | 15.10 | 92.35 |
| C&I to O | 16.06 | 92.25 | 15.42 | 91.74 | 13.87 | 93.17 |
| M&I to O | 24.17 | 83.28 | 26.29 | 81.50 | 27.31 | 81.19 |
| C&O to M | 6.96 | 97.26 | 8.40 | 96.33 | 8.87 | 95.09 |
| C&I to M | 11.51 | 94.91 | 11.75 | 94.25 | 10.30 | 95.35 |
| I&O to M | 8.87 | 94.97 | 10.31 | 94.18 | 9.83 | 96.72 |
| C&M to I | 21.57 | 85.25 | 20.00 | 85.91 | 22.21 | 78.25 |
| C&O to I | 23.43 | 84.22 | 24.21 | 83.37 | 19.43 | 88.99 |
| O&M to I | 17.14 | 90.84 | 14.43 | 93.96 | 19.29 | 88.80 |

| Protocol | SSDG-R [8] HTER (%) | SSDG-R [8] AUC (%) | MADG-M (Ours) HTER (%) | MADG-M (Ours) AUC (%) | MADG-S (Ours) HTER (%) | MADG-S (Ours) AUC (%) |
|---|---|---|---|---|---|---|
| O&C&I to M | 7.38 | 97.17 | 7.43 | 96.40 | 7.20 | 97.57 |
| O&M&I to C | 10.44 | 95.94 | 8.78 | 96.33 | 9.33 | 95.35 |
| O&C&M to I | 11.71 | 96.59 | 17.14 | 91.78 | 15.64 | 92.53 |
| I&C&M to O | 15.61 | 91.54 | 13.99 | 94.19 | 15.50 | 92.76 |

| Method | M&I to C HTER (%) | M&I to C AUC (%) | M&I to O HTER (%) | M&I to O AUC (%) |
|---|---|---|---|---|
| MS LBP [9] | 51.16 | 52.09 | 43.63 | 58.07 |
| IDA [30] | 45.16 | 58.80 | 54.52 | 42.17 |
| LBP-TOP [10] | 45.27 | 54.88 | 47.26 | 50.21 |
| MADDG [7] | 41.02 | 64.33 | 39.35 | 65.10 |
| SSDG-M [8] | 31.89 | 71.29 | 36.01 | 66.88 |
| DR-MD-Net [56] | 31.67 | 75.23 | 34.02 | 72.65 |
| ANRL [57] | 31.06 | 72.12 | 30.73 | 74.10 |
| SSAN-M [6] | 30.00 | 76.20 | 29.44 | 76.62 |
| MADG (Ours) | 23.33 | 82.32 | 26.29 | 81.50 |
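As a worked check of the limited-source comparison above, the snippet below uses the HTER values from the table. For each protocol, it finds the strongest competing method and computes how far MADG (Ours) lies below it, both in absolute percentage points and in relative terms. Only numbers printed in the table are used. The dictionary layout and the helper name are illustrative choices.

```python
# HTER (%) from the M&I-to-C / M&I-to-O table: method -> (to C, to O)
HTER = {
    "MS LBP [9]": (51.16, 43.63),
    "IDA [30]": (45.16, 54.52),
    "LBP-TOP [10]": (45.27, 47.26),
    "MADDG [7]": (41.02, 39.35),
    "SSDG-M [8]": (31.89, 36.01),
    "DR-MD-Net [56]": (31.67, 34.02),
    "ANRL [57]": (31.06, 30.73),
    "SSAN-M [6]": (30.00, 29.44),
    "MADG (Ours)": (23.33, 26.29),
}


def gain_over_best_baseline(table, ours="MADG (Ours)"):
    """Per protocol: (protocol, best rival, HTER drop in points, relative drop in %)."""
    results = []
    for col, protocol in enumerate(["M&I to C", "M&I to O"]):
        rivals = ((m, v[col]) for m, v in table.items() if m != ours)
        name, best = min(rivals, key=lambda t: t[1])
        drop = best - table[ours][col]
        results.append((protocol, name, round(drop, 2), round(100 * drop / best, 1)))
    return results


for protocol, rival, points, percent in gain_over_best_baseline(HTER):
    print(f"{protocol}: {points} points ({percent}%) below {rival}")
```

SSAN-M [6] is the strongest competitor on both protocols. MADG's HTER is 6.67 points (22.2%) lower on M&I to C and 3.15 points (10.7%) lower on M&I to O.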

| Method | O&C&I to M HTER (%) | O&C&I to M AUC (%) | O&M&I to C HTER (%) | O&M&I to C AUC (%) | O&C&M to I HTER (%) | O&C&M to I AUC (%) | I&C&M to O HTER (%) | I&C&M to O AUC (%) |
|---|---|---|---|---|---|---|---|---|
| MS LBP [9] | 29.76 | 78.50 | 54.28 | 44.98 | 50.30 | 51.64 | 50.29 | 49.31 |
| Binary-CNN [15] | 29.25 | 82.87 | 34.88 | 71.94 | 34.47 | 65.88 | 29.61 | 77.54 |
| IDA [30] | 66.67 | 27.86 | 55.17 | 39.05 | 28.35 | 78.25 | 54.20 | 44.59 |
| Color Texture [31] | 28.09 | 78.47 | 30.58 | 76.89 | 40.40 | 62.78 | 63.59 | 32.71 |
| LBP-TOP [10] | 36.90 | 70.80 | 42.60 | 61.05 | 49.45 | 49.54 | 53.15 | 44.09 |
| Auxiliary (Depth) | 22.72 | 85.88 | 33.52 | 73.15 | 29.14 | 71.69 | 30.17 | 77.61 |
| Auxiliary [17] | - | - | 28.40 | - | 27.60 | - | - | - |
| MMD-AAE [49] | 27.08 | 83.19 | 44.59 | 58.29 | 31.58 | 75.18 | 40.98 | 63.08 |
| MADDG [7] | 17.69 | 88.06 | 24.50 | 84.51 | 22.19 | 84.99 | 27.89 | 80.02 |
| PAD-GAN [56] | 17.02 | 90.10 | 19.68 | 87.43 | 20.87 | 86.72 | 25.02 | 81.47 |
| RFM [52] | 13.89 | 93.98 | 20.27 | 88.16 | 17.30 | 90.48 | 16.45 | 91.16 |
| SSDG-M [8] | 16.67 | 90.47 | 23.11 | 85.45 | 18.21 | 94.61 | 25.17 | 81.83 |
| SSDG-R [8] | 7.38 | 97.17 | 10.44 | 95.94 | 11.71 | 96.59 | 15.61 | 91.54 |
| ANRL [57] | 16.03 | 91.04 | 10.83 | 96.75 | 17.85 | 89.26 | 15.67 | 91.90 |
| DRDG [58] | 15.56 | 91.79 | 12.43 | 95.81 | 19.05 | 88.79 | 15.63 | 91.75 |
| MADG (Ours) | 7.20 | 97.57 | 8.78 | 96.33 | 15.64 | 92.53 | 13.99 | 94.19 |
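To check the claim of state-of-the-art performance "on most protocols" against the leave-one-domain-out table above, the snippet below finds the method with the lowest HTER for each protocol. The numbers are copied from the table. Entries marked "-" are represented as `None` and skipped. The helper name is illustrative.

```python
PROTOCOLS = ["O&C&I to M", "O&M&I to C", "O&C&M to I", "I&C&M to O"]

# HTER (%) per protocol from the table; None marks a "-" entry.
HTER = {
    "MS LBP [9]": (29.76, 54.28, 50.30, 50.29),
    "Binary-CNN [15]": (29.25, 34.88, 34.47, 29.61),
    "IDA [30]": (66.67, 55.17, 28.35, 54.20),
    "Color Texture [31]": (28.09, 30.58, 40.40, 63.59),
    "LBP-TOP [10]": (36.90, 42.60, 49.45, 53.15),
    "Auxiliary (Depth)": (22.72, 33.52, 29.14, 30.17),
    "Auxiliary [17]": (None, 28.40, 27.60, None),
    "MMD-AAE [49]": (27.08, 44.59, 31.58, 40.98),
    "MADDG [7]": (17.69, 24.50, 22.19, 27.89),
    "PAD-GAN [56]": (17.02, 19.68, 20.87, 25.02),
    "RFM [52]": (13.89, 20.27, 17.30, 16.45),
    "SSDG-M [8]": (16.67, 23.11, 18.21, 25.17),
    "SSDG-R [8]": (7.38, 10.44, 11.71, 15.61),
    "ANRL [57]": (16.03, 10.83, 17.85, 15.67),
    "DRDG [58]": (15.56, 12.43, 19.05, 15.63),
    "MADG (Ours)": (7.20, 8.78, 15.64, 13.99),
}


def best_per_protocol(table):
    """Map each protocol to (method, HTER) with the lowest reported HTER."""
    best = {}
    for col, protocol in enumerate(PROTOCOLS):
        rows = [(method, vals[col]) for method, vals in table.items() if vals[col] is not None]
        best[protocol] = min(rows, key=lambda row: row[1])
    return best


for protocol, (method, value) in best_per_protocol(HTER).items():
    print(f"{protocol}: {method} ({value:.2f}%)")
```

With these values, MADG has the lowest HTER on three of the four protocols. SSDG-R [8] remains lower on O&C&M to I.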

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, S.; Nie, W. Multi-Domain Feature Alignment for Face Anti-Spoofing. Sensors 2023, 23, 4077. https://doi.org/10.3390/s23084077
