Analyzing Factors Influencing Situation Awareness in Autonomous Vehicles—A Survey

Abstract

1. Introduction

- Problems that can be addressed include pollutant emissions, road accidents, and traffic congestion;

- New possibilities emerge, such as reallocating driving time to other activities and transporting people with impaired mobility;

- New trends include mobility as a service (MaaS) consumption and the logistics revolution.

2. Roadmap of the Survey

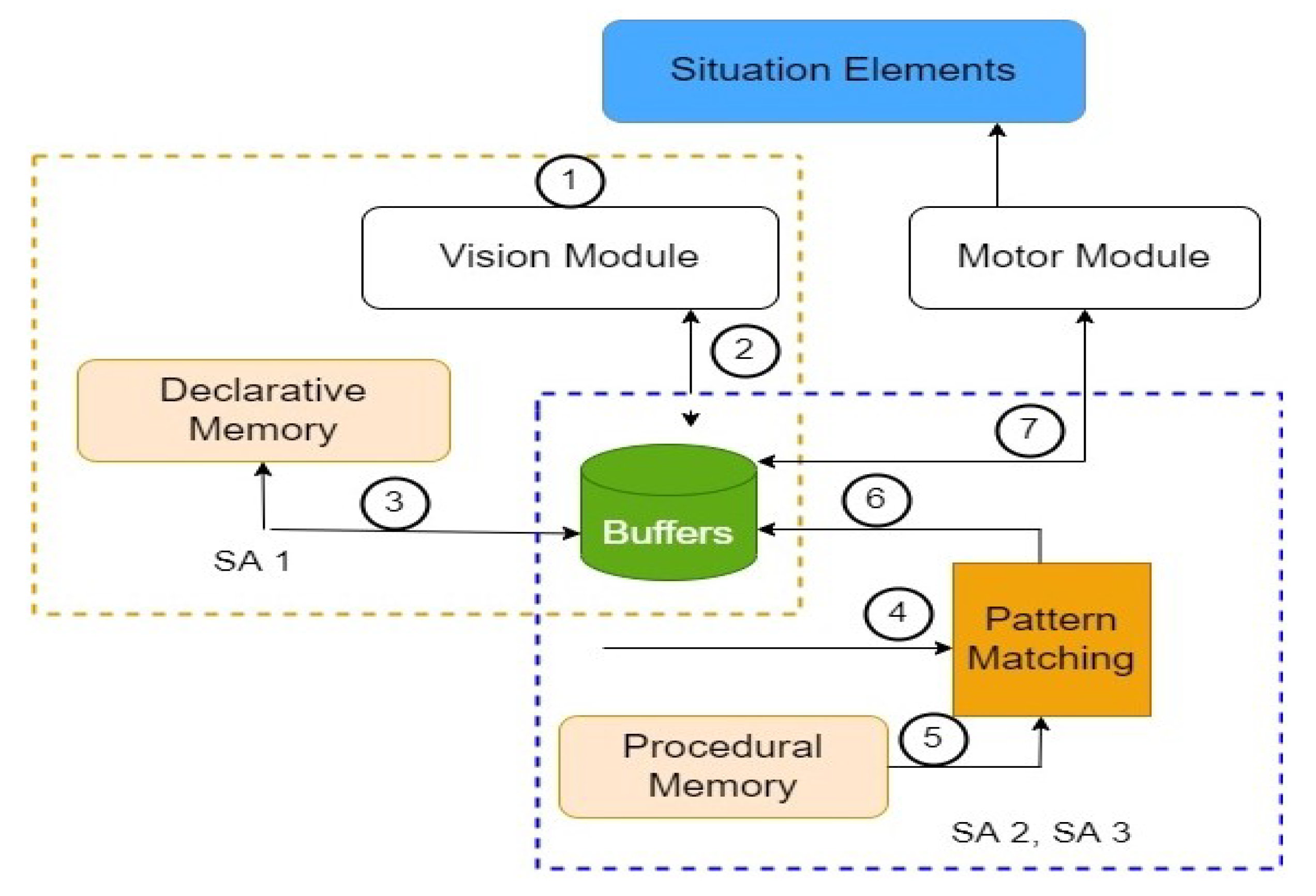

2.1. A Brief Overview about Situation Awareness

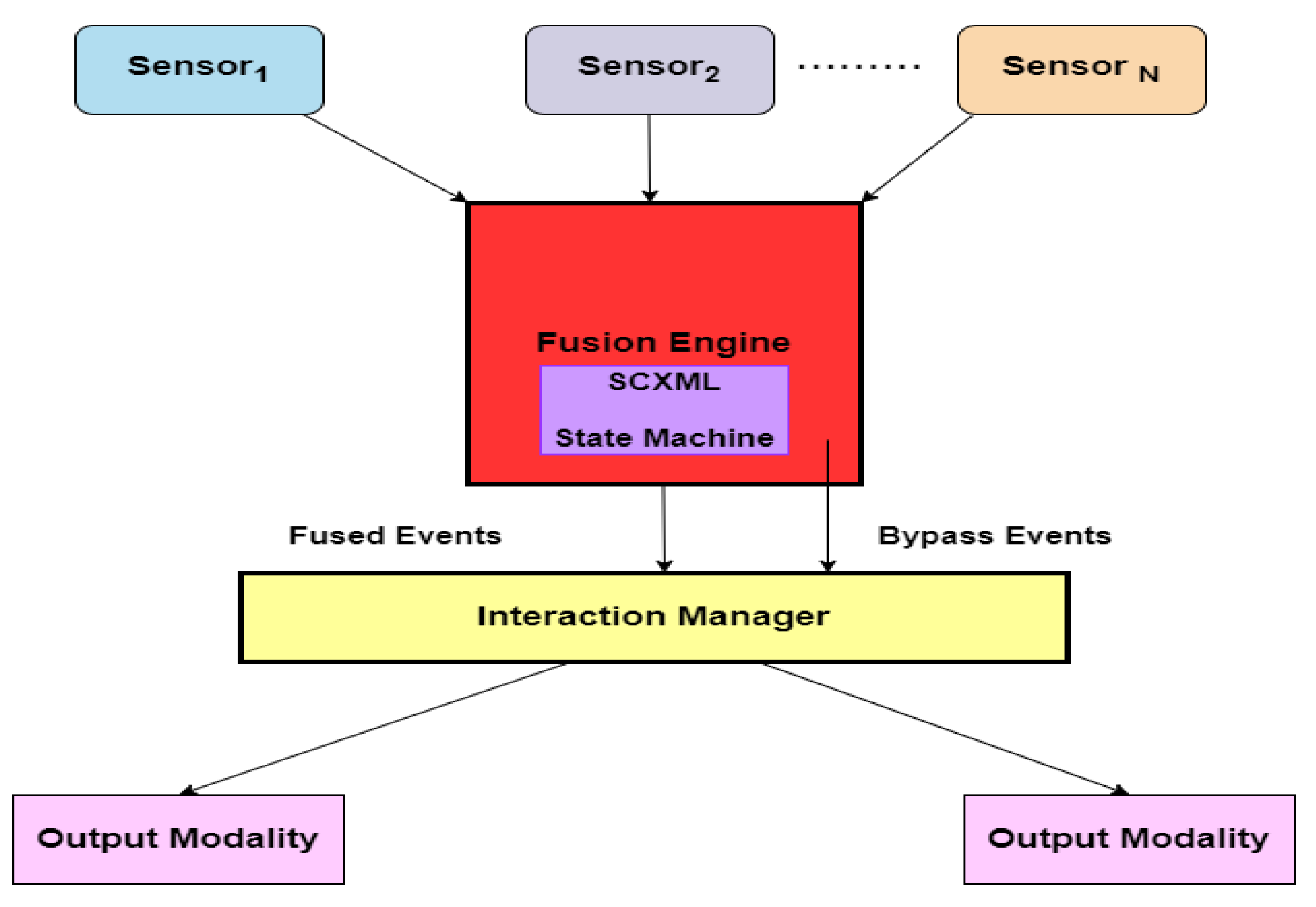

2.2. Basics of Data Fusion and the Perception System of AV

- Data from multiple sensors and sources are combined to produce information that is more intelligent, definite, intuitive, and precise than any single source can provide. The data from each sensor may not make much sense on its own;

- Fusing N independent observations yields a statistical benefit, provided that the data are combined in the best possible way;

- Manufacturing sensors with minimal power consumption, which reduces how often batteries must be replaced during the sensor's lifespan, is a key problem in IoT. This condition increases the demand for energy-efficient sensors in the market. It is well known that high-precision sensors use a lot of power;

- To address this problem, a set of low-accuracy sensors with very low power consumption can be deployed, and data fusion can then combine their outputs into highly accurate information;

- Data fusion can assist with IoT big data challenges because it condenses data from various sensors into more specific and reliable information;

- Another significant advantage of data fusion is that it helps conceal the essential data or semantics that are responsible for the fused outcomes. Examples include military applications, some important medical sections, and intelligence buildings.

- Artificial Intelligence (AI) based approaches such as classical machine learning, fuzzy logic, artificial neural networks (ANN), and genetic assessment;

- Probability-based methods such as Bayesian analysis, statistics, and recursive operators;

- Evidence-based data fusion strategies based on theory.
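The statistical benefit of fusing N independent observations can be illustrated with inverse-variance weighting, one common way to combine independent scalar readings. The sketch below is illustrative only: the function names `fuse_inverse_variance` and `simulate`, the Gaussian noise model, and all numeric values are assumptions and are not taken from the surveyed works. For N readings with equal variance σ², the fused variance is σ²/N, which is why a set of low-accuracy, low-power sensors can approach the accuracy of a single high-precision sensor.

```python
import random
import statistics


def fuse_inverse_variance(readings, variances):
    """Fuse independent scalar readings by inverse-variance weighting.

    Returns (fused_estimate, fused_variance). Each reading is weighted by
    1 / variance, so more precise sensors contribute more.
    """
    if not readings or len(readings) != len(variances):
        raise ValueError("need exactly one variance per reading")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    estimate = sum(w * r for w, r in zip(weights, readings)) / total
    return estimate, 1.0 / total


def simulate(true_value=20.0, sigma=2.0, n_sensors=16, trials=2000, seed=0):
    """Compare the empirical spread of one sensor with the fused estimate.

    All defaults are hypothetical; noise is assumed Gaussian and independent.
    Returns (std_dev_single_sensor, std_dev_fused).
    """
    rng = random.Random(seed)
    single, fused = [], []
    for _ in range(trials):
        readings = [rng.gauss(true_value, sigma) for _ in range(n_sensors)]
        estimate, _ = fuse_inverse_variance(readings, [sigma ** 2] * n_sensors)
        single.append(readings[0])
        fused.append(estimate)
    return statistics.pstdev(single), statistics.pstdev(fused)
```

Under these assumptions, fusing 16 sensors with a standard deviation of 2.0 each should give a fused standard deviation near 2.0/√16 = 0.5. The gain relies on the observations being independent; correlated sensor errors reduce it.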

2.2.1. History of Fusion

1. What is the issue with SDF?

2. Which SDF problem component requires ML, and why?

3. Which scenarios effectively use AI/ML techniques to solve fusion-related problems?

4. When does it become difficult to use machine learning for SDF?

2.2.2. Sensors in Fusion Perception System

2.2.3. Millimeter Wave Radar

2.2.4. Cameras

2.2.5. LiDAR

1. What are the recent trends adopted to pre-process heterogeneous vehicular data?

2. What level of data accuracy is obtained by the referred studies after pre-processing the data?

3. Do the initial pre-processing tasks improve the situation awareness of the AVs?

4. Do the referred decision-making models provide instant solutions for all roadside events?

5. Which type of evaluation criteria plays a vital role in the referred decision-making models: simulation-based or real-time implementation?

6. Does the survey identify the pitfalls in the existing literature?

7. What solution does this study propose for overcoming the identified drawbacks in the existing literature?

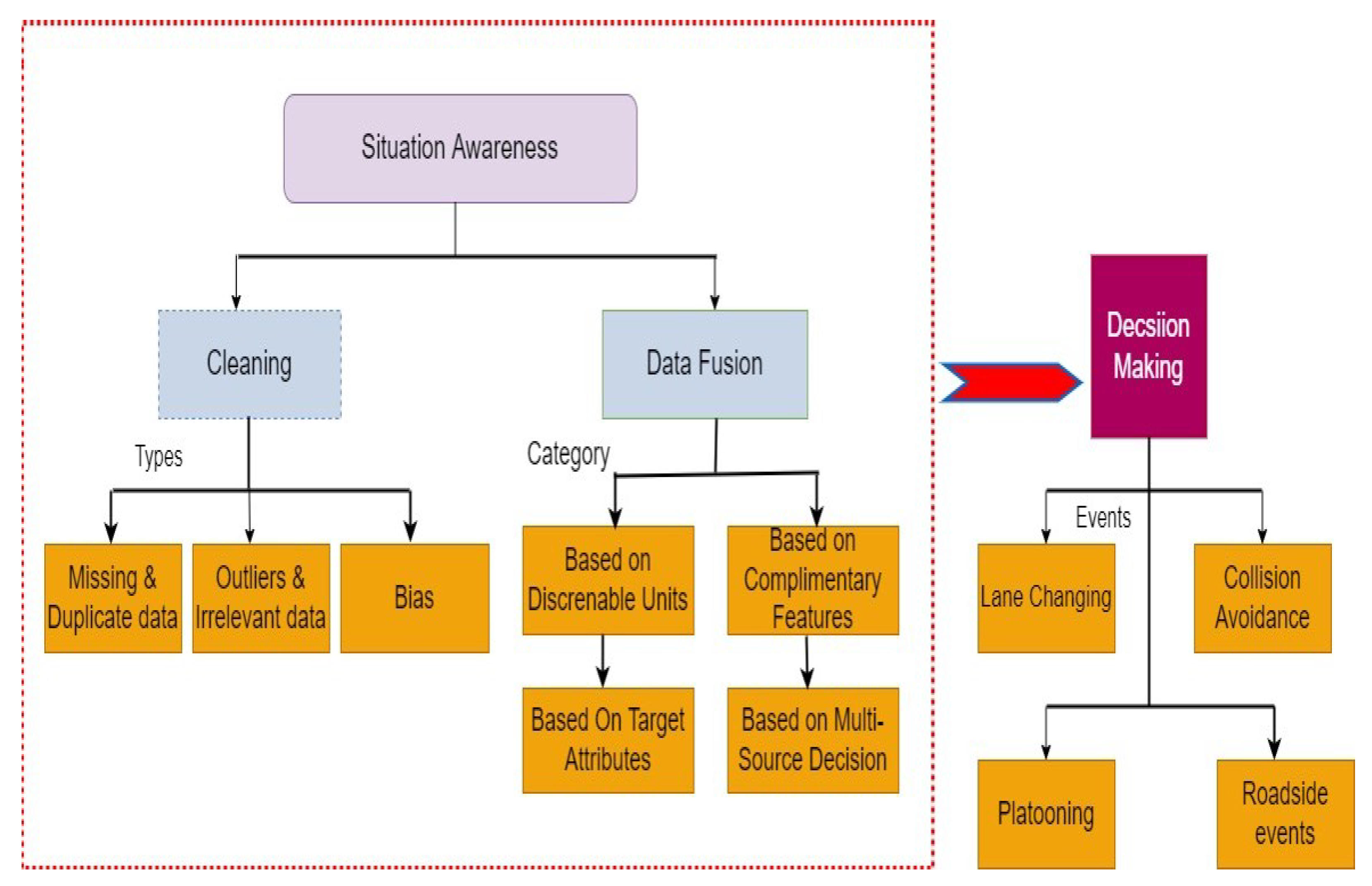

3. State of the Art in Data Fusion, Situation Awareness, and Decision-Making

3.1. Multimodal Fusion

1. Object Detection: AVs must perceive both stationary and moving objects. They use advanced algorithms to detect objects such as pedestrians and bicycles for effective decision-making. Traffic light detection and traffic sign detection are also part of object detection. Many algorithms use predefined bounding boxes to detect objects; 2D object detection uses parameters such as (x, y, h, w, c), whereas 3D methods use more parameters, such as (x, y, z, h, w, l, c, θ).

2. Semantic Segmentation: The main objective of semantic segmentation is to cluster the pixel values and 3D data points obtained from multiple sources into meaningful segments that fit the context.

3. Other tasks: Other vital tasks include object classification, depth completion, and prediction. Classification determines the category of an object from the point cloud data and image data fed to a model, while depth completion and prediction estimate the distance between every pixel of an image and the 3D point cloud data of a LiDAR sensor.
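The bounding-box parameter tuples used in object detection can be written down as small data structures. The following is a minimal sketch, not a format prescribed by any surveyed detector: the class names `Box2D` and `Box3D`, the centre-based convention for (x, y, z), the reading of `c` as a class label, and the eighth field `theta` (a yaw angle about the vertical axis) are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Box2D:
    """2D box (x, y, h, w, c); (x, y) is assumed to be the box centre in pixels."""
    x: float
    y: float
    h: float
    w: float
    c: str  # assumed to be the class label

    def corners(self):
        """Return (x_min, y_min, x_max, y_max)."""
        return (self.x - self.w / 2, self.y - self.h / 2,
                self.x + self.w / 2, self.y + self.h / 2)


@dataclass(frozen=True)
class Box3D:
    """3D box; (x, y, z) is the assumed centre, theta the assumed yaw in radians."""
    x: float
    y: float
    z: float
    h: float
    w: float
    l: float
    c: str
    theta: float = 0.0

    def volume(self):
        return self.h * self.w * self.l

    def footprint(self):
        """Corners of the l-by-w rectangle in the ground plane, rotated by theta."""
        cos_t, sin_t = math.cos(self.theta), math.sin(self.theta)
        half_l, half_w = self.l / 2, self.w / 2
        offsets = [(half_l, half_w), (half_l, -half_w),
                   (-half_l, -half_w), (-half_l, half_w)]
        return [(self.x + dx * cos_t - dy * sin_t,
                 self.y + dx * sin_t + dy * cos_t) for dx, dy in offsets]
```

The extra fields of the 3D box (depth coordinate, length, and heading) are what allow an AV to reason about where an object sits on the road rather than only where it appears in the image.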

3.1.1. Image Representation

1. Point-based Point Cloud Representation: The data acquired from LiDARs are in the form of 3D point clouds. The raw data from the majority of LiDARs is a four-component tuple (x, y, z, r), where r denotes the reflectance of each point. Different textures result in different reflectances, which provide additional information for a variety of tasks. Some methods use the 3D points obtained from the LiDARs directly, through point-based feature extraction. Because of the redundancy of information and the slow execution of methods that operate on these raw tuples, many researchers transform the 3D point cloud data to voxels or 2D projections before feeding them to the higher-level modules that perform the more complicated perception tasks in AVs;

2. Voxel-based Point Cloud Representation: In this method, the 3D data are discretized by transforming the 3D space into 3D voxels, a regular grid that CNN models can process. Each voxel is represented by a feature vector that combines the centroid of the voxelized cuboid with statistical information;

3. 2D mapping based Point Cloud Representation: In this approach, the 3D point cloud information is projected into a 2D space. Many works use a 2D CNN backbone to process the projected data. The drawback of this approach is the information lost in the 3D-to-2D transformation.
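The voxel-based and 2D-mapping representations can be sketched in a few lines of plain Python. This is an illustrative example under stated assumptions, not the method of any surveyed work: the function names `voxelize` and `project_bev`, the choice of point count and mean reflectance as the per-voxel statistics, the use of the mean of the contained points as the centroid, and the top-down (bird's-eye-view) maximum-height projection are all assumptions.

```python
import math
from collections import defaultdict


def voxelize(points, voxel_size):
    """Group (x, y, z, r) points into cubic voxels of edge length voxel_size.

    Returns {voxel_index: (centroid, point_count, mean_reflectance)}, where
    centroid is the mean position of the points that fall in the voxel.
    """
    buckets = defaultdict(list)
    for x, y, z, r in points:
        index = (math.floor(x / voxel_size),
                 math.floor(y / voxel_size),
                 math.floor(z / voxel_size))
        buckets[index].append((x, y, z, r))
    voxels = {}
    for index, members in buckets.items():
        n = len(members)
        centroid = tuple(sum(p[i] for p in members) / n for i in range(3))
        mean_r = sum(p[3] for p in members) / n
        voxels[index] = (centroid, n, mean_r)
    return voxels


def project_bev(points, cell_size, x_range, y_range):
    """Project points onto a top-down grid, keeping the maximum z per cell.

    Cells without points stay None. Everything except the highest point in a
    cell is discarded, which is the information loss of a 3D-to-2D mapping.
    """
    cols = math.ceil((x_range[1] - x_range[0]) / cell_size)
    rows = math.ceil((y_range[1] - y_range[0]) / cell_size)
    grid = [[None] * cols for _ in range(rows)]
    for x, y, z, _r in points:
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue  # point lies outside the mapped area
        col = min(cols - 1, int((x - x_range[0]) / cell_size))
        row = min(rows - 1, int((y - y_range[0]) / cell_size))
        if grid[row][col] is None or z > grid[row][col]:
            grid[row][col] = z
    return grid
```

In this sketch, two points that share a grid cell collapse into a single height value in the projection, whereas the voxel representation still keeps their count and mean reflectance.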

3.1.2. Fusion Strategy Based on Discernible Units (FSBDU)

3.1.3. Fusion Strategy Based on Complementary Features (FSBCF)

1. Target parameter extraction: This step extracts target information such as dimension, range, orientation, velocity, and acceleration from the pre-processed data. Many studies create regions of interest (ROIs) by translating the position of an object detected by the radar directly into the image to produce a region; they extract location characteristics from radar or LiDAR targets and use them to aid image identification;

2. Data feature extraction: The main objective of feature extraction is to separate the needed features from all formats of processed data in order to classify and detect objects. Examples include color, shape, edge, spectral frequency, texture, velocity, latitude, and longitude.
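The ROI creation step, in which a radar detection is translated into an image region, can be sketched with a pinhole camera model. The example below is a simplified illustration under strong assumptions: the radar and camera are taken to share the same origin and axes (no extrinsic calibration), the target is assumed to sit at the height of the optical axis, and the names `CameraIntrinsics` and `radar_target_to_roi` as well as the default target size of 2 m are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class CameraIntrinsics:
    """Pinhole camera parameters in pixels (values are supplied by the caller)."""
    fx: float
    fy: float
    cx: float
    cy: float
    width: int
    height: int


def radar_target_to_roi(range_m, azimuth_rad, cam,
                        target_width_m=2.0, target_height_m=2.0):
    """Map a radar target (range, azimuth) to an image ROI.

    Azimuth is assumed positive to the right of the optical axis.
    Returns (left, top, right, bottom) in pixels, or None when the target is
    behind the camera or falls entirely outside the image.
    """
    lateral = range_m * math.sin(azimuth_rad)  # offset to the right, metres
    depth = range_m * math.cos(azimuth_rad)    # distance along the optical axis
    if depth <= 0.0:
        return None
    u = cam.fx * lateral / depth + cam.cx      # projected image column
    v = cam.cy                                 # assumed: target at camera height
    half_w = 0.5 * cam.fx * target_width_m / depth
    half_h = 0.5 * cam.fy * target_height_m / depth
    left = max(0, round(u - half_w))
    top = max(0, round(v - half_h))
    right = min(cam.width, round(u + half_w))
    bottom = min(cam.height, round(v + half_h))
    if left >= right or top >= bottom:
        return None
    return left, top, right, bottom
```

A practical system would replace the shared-frame assumption with a calibrated radar-to-camera transform; the ROI would then be passed to an image classifier so that only the relevant region is examined.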

3.1.4. Fusion Strategy Based on Target Attributes (FSBTA)

3.1.5. Fusion Strategy Based on Multi-Source Decision (FSBMD)

3.2. Situation Awareness

3.3. Decision-Making

3.3.1. Lane Changing

3.3.2. Collision Avoidance

3.3.3. Multiple Decisions on Roadside Events

3.3.4. Surveys Related to Decision-Making

4. Pitfalls and Future Research Direction

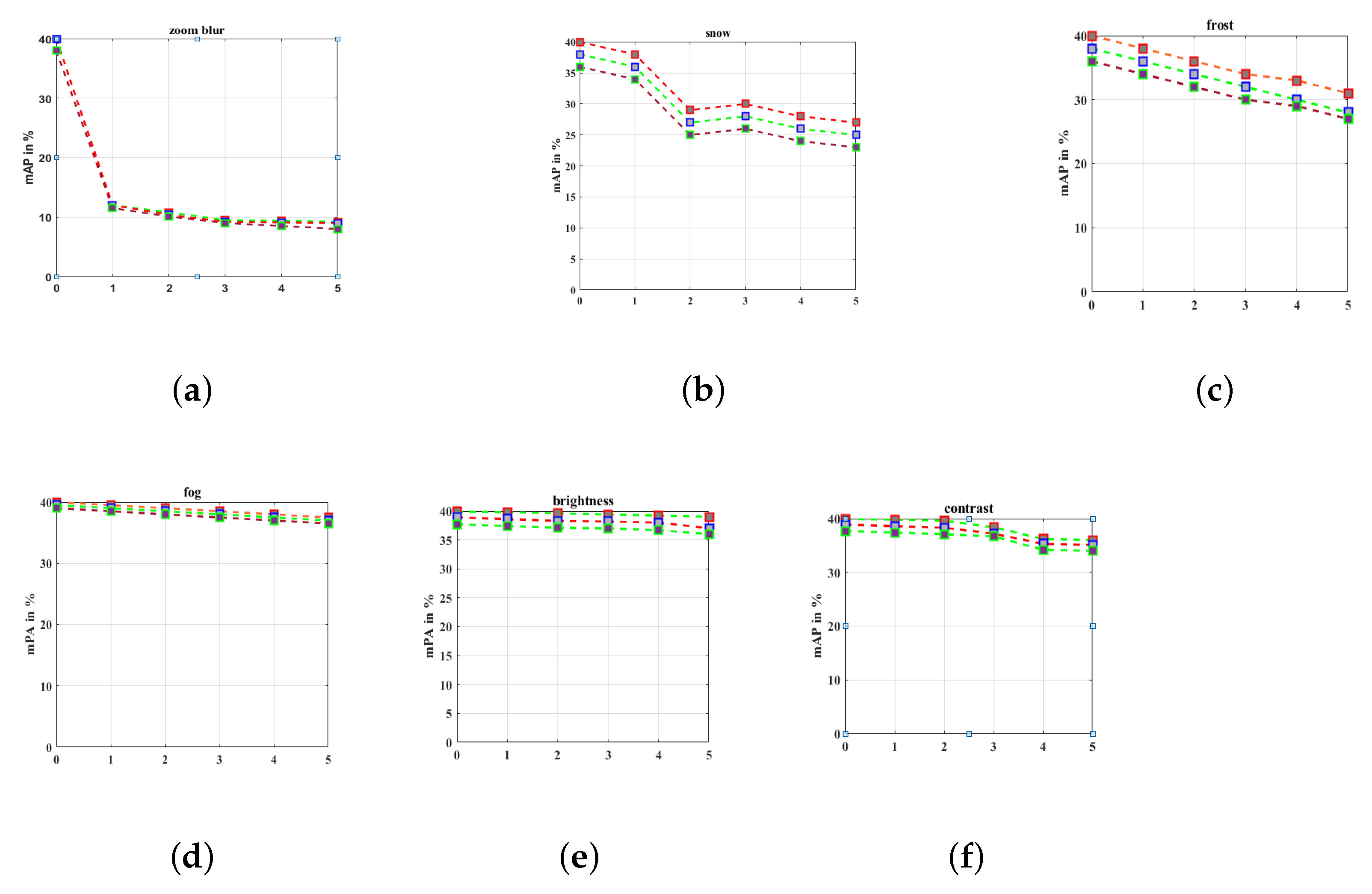

4.1. Analysis of Fusion Strategies and Perceived Results

4.2. Decision-Making Models

4.3. Influence of Data Fusion towards Situation Awareness

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Korosec, K. Aptiv’s Self-Driving Cars Have Given 100,000 Paid Rides on the Lyft App. 2020. Available online: https://utulsa.edu/aptivs-self-driving-cars-have-given-100000-paid-rides-on-lyft-app (accessed on 13 November 2022).

- Nissan Motor Corporation Businesses Need Smarter Tech in Their Fleets to Survive E-Commerce Boom. 2019. Available online: https://alliancernm.com/2019/02/26/businesses-need-smarter-tech-in-their-fleets-to-survive-e-commerce-boom (accessed on 13 November 2022).

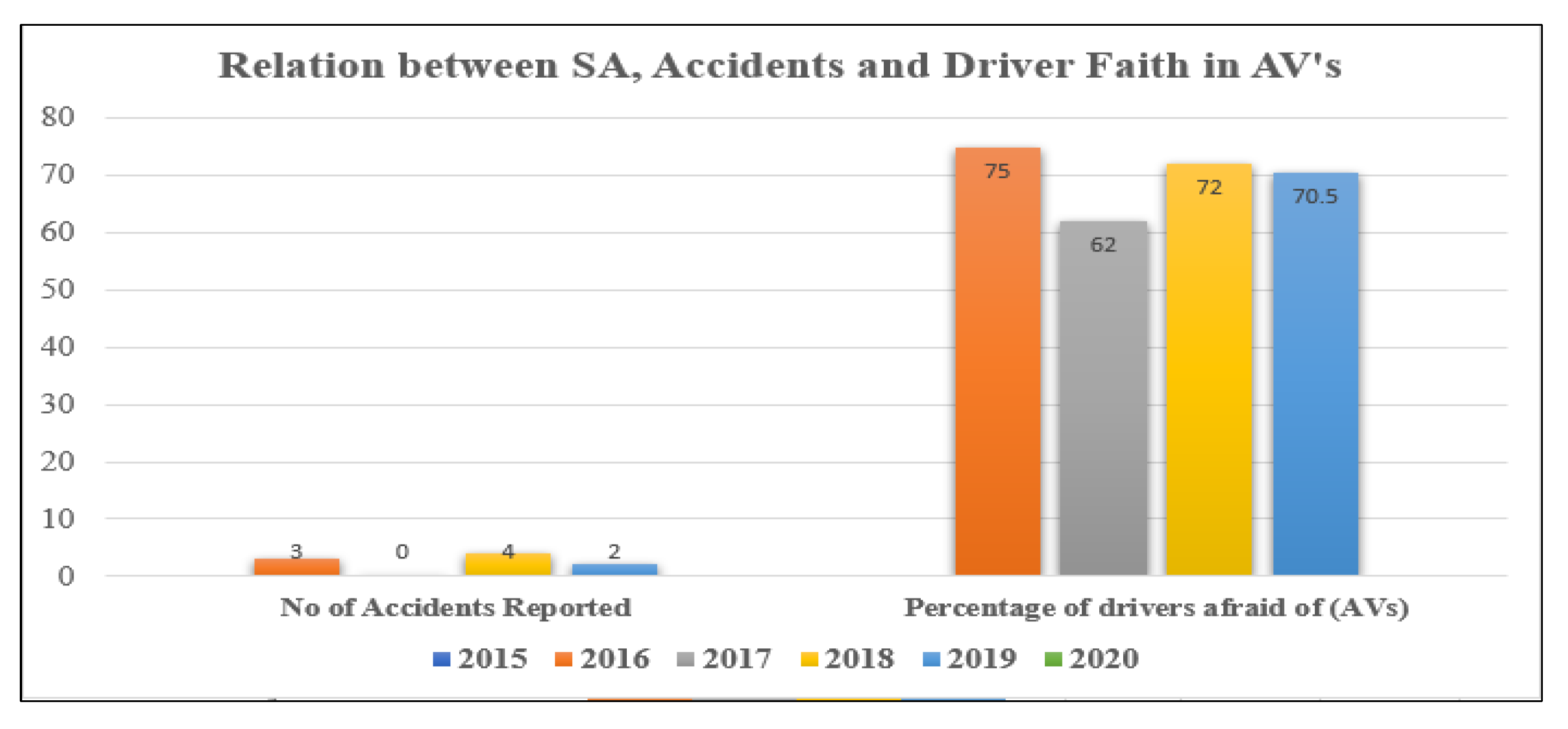

- Reinhart, R. Americans Hit the Brakes on Self-Driving Cars. 2020. Available online: https://news.gallup.com/poll/228032/americans-hit-brakes-self-driving-cars.aspx (accessed on 13 November 2022).

- Singh, S. Critical Reasons for Crashes Investigated in the National Motor Vehicle Crash Causation Survey; Technical Report; The National Academies of Sciences, Engineering, and Medicine: Washington, DC, USA, 2015. [Google Scholar]

- Crayton, T.J.; Meier, B.M. Autonomous vehicles: Developing a public health research agenda to frame the future of transportation policy. J. Transp. Health 2017, 6, 245–252. [Google Scholar] [CrossRef]

- Davies, A. Google’s Self-Driving Car Caused Its First Crash. Wired 2016. Available online: https://www.wired.com/2016/02/googles-self-driving-car-may-caused-first-crash (accessed on 13 November 2022).

- Rosique, F.; Navarro, P.J.; Fernández, C.; Padilla, A. A systematic review of perception system and simulators for autonomous vehicles research. Sensors 2019, 19, 648. [Google Scholar] [CrossRef] [PubMed]

- Yurtsever, E.; Lambert, J.; Carballo, A.; Takeda, K. A survey of autonomous driving: Common practices and emerging technologies. IEEE Access 2020, 8, 58443–58469. [Google Scholar] [CrossRef]

- Liu, S.; Liu, L.; Tang, J.; Yu, B.; Wang, Y.; Shi, W. Edge computing for autonomous driving: Opportunities and challenges. Proc. IEEE 2019, 107, 1697–1716. [Google Scholar] [CrossRef]

- Montgomery, W.D.; Mudge, R.; Groshen, E.L.; Helper, S.; MacDuffie, J.P.; Carson, C. America’s Workforce and the Self-Driving Future: Realizing Productivity Gains and Spurring Economic Growth; The National Academies of Sciences, Engineering, and Medicine: Washington, DC, USA, 2018; Available online: https://trid.trb.org/view/1516782 (accessed on 13 November 2022).

- UN DESA. World Population Prospects: The 2015 Revision, Key Findings and Advance Tables; Working PaperNo; United Nations Department of Economic and Social Affairs/Population Division: New York, NY, USA, 2015. [Google Scholar]

- Hoegaerts, L.; Schönenberger, B. The Automotive Digital Transformation and the Economic Impacts of Existing Data Access Models Reports Produced for the FIA (Research Paper); Schönenberger Advisory Services: Zürich, Switzerland, 2019. [Google Scholar]

- Razzaq, M.A.; Cleland, I.; Nugent, C.; Lee, S. Multimodal sensor data fusion for activity recognition using filtered classifier. Proceedings 2018, 2, 1262. [Google Scholar]

- Endsley, M.R. Design and evaluation for situation awareness enhancement. SAGE 1988, 32, 97–101. [Google Scholar] [CrossRef]

- Endsley, M.R. From here to autonomy: Lessons learned from human–automation research. Hum. Factors 2017, 59, 5–27. [Google Scholar] [CrossRef]

- Mutzenich, C.; Durant, S.; Helman, S.; Dalton, P. Updating our understanding of situation awareness in relation to remote operators of autonomous vehicles. Cogn. Res. Princ. Implic. 2021, 6, 1–17. [Google Scholar] [CrossRef]

- Kemp, I. Autonomy & motor insurance what happens next. In An RSA Report into Autonomous Vehicles & Experiences from the GATEway Project, Thatcham Research; 2018; pp. 1–31. Available online: https://static.rsagroup.com/rsa/news-and-insights/case-studies/rsa-report-autonomy-and-motor-insurance-what-happens-next.pdf (accessed on 13 November 2022).

- Koopman, P.; Wagner, M. Autonomous vehicle safety: An interdisciplinary challenge. IEEE Intell. Transp. Syst. Mag. 2017, 9, 90–96. [Google Scholar] [CrossRef]

- Endsley, M. Situation awareness in driving. In Handbook of Human Factors for Automated, Connected and Intelligent Vehicles; Taylor and Francis: London, UK, 2020. [Google Scholar]

- Gite, S.; Agrawal, H. On context awareness for multisensor data fusion in IoT. In Proceedings of the Second International Conference on Computer and Communication Technologies; Springer: New Delhi, India, 2016; pp. 85–93. [Google Scholar]

- Hall, D.; Llinas, J. Multisensor Data Fusion; CRC Press: Boca Raton, FL, USA, 2001. [Google Scholar]

- Nii, H.P.; Feigenbaum, E.A.; Anton, J.J. Signal-to-symbol transformation: HASP/SIAP case study. AI Mag. 1982, 3, 23. [Google Scholar]

- Franklin, J.E.; Carmody, C.L.; Keller, K.; Levitt, T.S.; Buteau, B.L. Expert system technology for the military: Selected samples. Proc. IEEE 1988, 76, 1327–1366. [Google Scholar] [CrossRef]

- Shadrin, S.S.; Varlamov, O.O.; Ivanov, A.M. Experimental autonomous road vehicle with logical artificial intelligence. J. Adv. Transp. 2017, 2017, 2492765. [Google Scholar] [CrossRef]

- Alam, F.; Mehmood, R.; Katib, I.; Albogami, N.N.; Albeshri, A. Data fusion and IoT for smart ubiquitous environments: A survey. IEEE Access 2017, 5, 9533–9554. [Google Scholar] [CrossRef]

- Choi, S.C.; Park, J.H.; Kim, J. A networking framework for multiple-heterogeneous unmanned vehicles in FANETs. In Proceedings of the 2019 Eleventh International Conference on Ubiquitous and Future Networks (ICUFN), Zagreb, Croatia, 2–5 July 2019; pp. 13–15. [Google Scholar]

- Wang, S.; Dai, X.; Xu, N.; Zhang, P. A review of environmetal sensing technology for autonomous vehicle. J. Chang. Univ. Sci. Technol. (Nat. Sci. Ed.) 2017, 40, 1672–9870. [Google Scholar]

- Duan, J.; Zheng, K.; Zhou, J. multilayer Lidar’s environmental sensing in autonomous vehicle. J. Beijing Univ. Technol. 2014, 40, 1891–1898. [Google Scholar]

- Ignatiousa, H.A.; Hesham-El-Sayeda, M.K.; Kharbasha, S.; Malika, S. A Novel Micro-services Cluster Based Framework for Autonomous Vehicles. J. Ubiquitous Syst. Pervasive Netw. 2021, 3, 1–10. [Google Scholar]

- Goodin, C.; Carruth, D.; Doude, M.; Hudson, C. Predicting the Influence of Rain on LIDAR in ADAS. Electronics 2019, 8, 89. [Google Scholar] [CrossRef]

- Vural, S.; Ekici, E. On multihop distances in wireless sensor networks with random node locations. IEEE Trans. Mob. Comput. 2009, 9, 540–552. [Google Scholar] [CrossRef]

- Raol, J.R. Data Fusion Mathematics: Theory and Practice; CRC Press: Boca Raton, FL, USA, 2015. [Google Scholar]

- Mandic, D.P.; Obradovic, D.; Kuh, A.; Adali, T.; Trutschell, U.; Golz, M.; De Wilde, P.; Barria, J.; Constantinides, A.; Chambers, J. Data fusion for modern engineering applications: An overview. In Proceedings of the International Conference on Artificial Neural Networks, Warsaw, Poland, 11–15 September 2005; pp. 715–721. [Google Scholar]

- Guerriero, M.; Svensson, L.; Willett, P. Bayesian data fusion for distributed target detection in sensor networks. IEEE Trans. Signal Process. 2010, 58, 3417–3421. [Google Scholar] [CrossRef]

- Biresaw, T.A.; Cavallaro, A.; Regazzoni, C.S. Tracker-level fusion for robust Bayesian visual tracking. IEEE Trans. Circuits Syst. Video Technol. 2014, 25, 776–789. [Google Scholar] [CrossRef]

- Zhu, X.; Kui, F.; Wang, Y. Predictive analytics by using Bayesian model averaging for large-scale Internet of Things. Int. J. Distrib. Sens. Netw. 2013, 9, 723260. [Google Scholar] [CrossRef]

- Kwan, C.; Chou, B.; Kwan, L.Y.M.; Larkin, J.; Ayhan, B.; Bell, J.F.; Kerner, H. Demosaicing enhancement using pixel-level fusion. Signal Image Video Process. 2018, 12, 749–756. [Google Scholar] [CrossRef]

- Harrer, F.; Pfeiffer, F.; Löffler, A.; Gisder, T.; Biebl, E. Synthetic aperture radar algorithm for a global amplitude map. In Proceedings of the 2017 14th Workshop on Positioning, Navigation and Communications (WPNC), Bremen, Germany, 25–26 October 2017; pp. 1–6. [Google Scholar]

- Weston, R.; Cen, S.; Newman, P.; Posner, I. Probably unknown: Deep inverse sensor modelling radar. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 5446–5452. [Google Scholar]

- Gao, F.; Yang, Y.; Wang, J.; Sun, J.; Yang, E.; Zhou, H. A deep convolutional generative adversarial networks (DCGANs)-based semi-supervised method for object recognition in synthetic aperture radar (SAR) images. Remote Sens. 2018, 10, 846. [Google Scholar] [CrossRef]

- Shi, X.; Zhou, F.; Yang, S.; Zhang, Z.; Su, T. Automatic target recognition for synthetic aperture radar images based on super-resolution generative adversarial network and deep convolutional neural network. Remote Sens. 2019, 11, 135. [Google Scholar] [CrossRef]

- Kim, B.; Kim, D.; Park, S.; Jung, Y.; Yi, K. Automated complex urban driving based on enhanced environment representation with GPS/map, radar, lidar and vision. IFAC-PapersOnLine 2016, 49, 190–195. [Google Scholar] [CrossRef]

- Caltagirone, L.; Bellone, M.; Svensson, L.; Wahde, M. LIDAR–camera fusion for road detection using fully convolutional neural networks. Robot. Auton. Syst. 2019, 111, 125–131. [Google Scholar] [CrossRef]

- Lekic, V.; Babic, Z. Automotive radar and camera fusion using generative adversarial networks. Comput. Vis. Image Underst. 2019, 184, 1–8. [Google Scholar] [CrossRef]

- Yektakhah, B.; Sarabandi, K. A method for detection of flat walls in through-the-wall SAR imaging. IEEE Geosci. Remote Sens. Lett. 2020, 18, 2102–2106. [Google Scholar] [CrossRef]

- Ouyang, Z.; Liu, Y.; Zhang, C.; Niu, J. A cgans-based scene reconstruction model using lidar point cloud. In Proceedings of the 2017 IEEE International Symposium on Parallel and Distributed Processing with Applications and 2017 IEEE International Conference on Ubiquitous Computing and Communications (ISPA/IUCC), Shenzhen, China, 15–17 December 2017; pp. 1107–1114. [Google Scholar]

- Jia, P.; Wang, X.; Zheng, K. Distributed clock synchronization based on intelligent clustering in local area industrial IoT systems. IEEE Trans. Ind. Inform. 2019, 16, 3697–3707. [Google Scholar] [CrossRef]

- Gao, H.; Cheng, B.; Wang, J.; Li, K.; Zhao, J.; Li, D. Object classification using CNN-based fusion of vision and LIDAR in autonomous vehicle environment. IEEE Trans. Ind. Inform. 2018, 14, 4224–4231. [Google Scholar] [CrossRef]

- Xiao, Y.; Codevilla, F.; Gurram, A.; Urfalioglu, O.; López, A.M. Multimodal end-to-end autonomous driving. IEEE Trans. Intell. Transp. Syst. 2020, 23, 537–547. [Google Scholar] [CrossRef]

- Liu, G.H.; Siravuru, A.; Prabhakar, S.; Veloso, M.; Kantor, G. Learning end-to-end multimodal sensor policies for autonomous navigation. In Proceedings of the Conference on Robot Learning, Mountain View, CA, USA, 13–15 November 2017; pp. 249–261. [Google Scholar]

- Narayanan, A.; Siravuru, A.; Dariush, B. Gated recurrent fusion to learn driving behavior from temporal multimodal data. IEEE Robot. Autom. Lett. 2020, 5, 1287–1294. [Google Scholar] [CrossRef]

- Crommelinck, S.; Bennett, R.; Gerke, M.; Nex, F.; Yang, M.Y.; Vosselman, G. Review of automatic feature extraction from high-resolution optical sensor data for UAV-based cadastral mapping. Remote Sens. 2016, 8, 689. [Google Scholar] [CrossRef]

- Srivastava, S.; Divekar, A.V.; Anilkumar, C.; Naik, I.; Kulkarni, V.; Pattabiraman, V. Comparative analysis of deep learning image detection algorithms. J. Big Data 2021, 8, 1–27. [Google Scholar] [CrossRef]

- Song, Y.; Hu, J.; Dai, Y.; Jin, T.; Zhou, Z. Estimation and mitigation of time-variant RFI in low-frequency ultra-wideband radar. IEEE Geosci. Remote Sens. Lett. 2018, 15, 409–413. [Google Scholar] [CrossRef]

- Wang, M.; Zhang, Y.D.; Cui, G. Human motion recognition exploiting radar with stacked recurrent neural network. Digit. Signal Process. 2019, 87, 125–131. [Google Scholar] [CrossRef]

- Savelonas, M.A.; Pratikakis, I.; Theoharis, T.; Thanellas, G.; Abad, F.; Bendahan, R. Spatially sensitive statistical shape analysis for pedestrian recognition from LIDAR data. Comput. Vis. Image Underst. 2018, 171, 1–9. [Google Scholar] [CrossRef]

- Han, X.; Lu, J.; Tai, Y.; Zhao, C. A real-time lidar and vision based pedestrian detection system for unmanned ground vehicles. In Proceedings of the 2015 3rd IAPR Asian Conference on Pattern Recognition (ACPR), Kuala Lumpur, Malaysia, 3–6 November 2015; pp. 635–639. [Google Scholar]

- Xiao, L.; Wang, R.; Dai, B.; Fang, Y.; Liu, D.; Wu, T. Hybrid conditional random field based camera-LIDAR fusion for road detection. Inf. Sci. 2018, 432, 543–558. [Google Scholar] [CrossRef]

- Malawade, A.V.; Mortlock, T.; Al Faruque, M.A. HydraFusion: Context-aware selective sensor fusion for robust and efficient autonomous vehicle perception. In Proceedings of the 2022 ACM/IEEE 13th International Conference on Cyber-Physical Systems (ICCPS), Milano, Italy, 4–6 May 2022; pp. 68–79. [Google Scholar]

- Rawashdeh, N.A.; Bos, J.P.; Abu-Alrub, N.J. Camera–Lidar sensor fusion for drivable area detection in winter weather using convolutional neural networks. Opt. Eng. 2022, 62, 031202. [Google Scholar] [CrossRef]

- Steinbaeck, J.; Steger, C.; Holweg, G.; Druml, N. Design of a low-level radar and time-of-flight sensor fusion framework. In Proceedings of the 2018 21st Euromicro Conference on Digital System Design (DSD), Prague, Czech Republic, 29–31 August 2018; pp. 268–275. [Google Scholar]

- Botha, F.J.; van Daalen, C.E.; Treurnicht, J. Data fusion of radar and stereo vision for detection and tracking of moving objects. In Proceedings of the 2016 Pattern Recognition Association of South Africa and Robotics and Mechatronics International Conference (PRASA-RobMech), Stellenbosch, South Africa, 30 November–2 December 2016; pp. 1–7. [Google Scholar]

- Sun, T.P.; Lu, Y.C.; Shieh, H.L. A novel readout integrated circuit with a dual-mode design for single-and dual-band infrared focal plane array. Infrared Phys. Technol. 2013, 60, 56–65. [Google Scholar] [CrossRef]

- Wang, Z.; Wu, Y.; Niu, Q. Multi-sensor fusion in automated driving: A survey. IEEE Access 2019, 8, 2847–2868. [Google Scholar] [CrossRef]

- Wang, C.; Sun, Q.; Li, Z.; Zhang, H.; Ruan, K. Cognitive competence improvement for autonomous vehicles: A lane change identification model for distant preceding vehicles. IEEE Access 2019, 7, 83229–83242. [Google Scholar] [CrossRef]

- Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multi-view 3d object detection network for autonomous driving. In Proceedings of the IEEE conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1907–1915. [Google Scholar]

- Patel, N.; Choromanska, A.; Krishnamurthy, P.; Khorrami, F. Sensor modality fusion with CNNs for UGV autonomous driving in indoor environments. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 1531–1536. [Google Scholar]

- Jian, Z.; Hongbing, C.; Jie, S.; Haitao, L. Data fusion for magnetic sensor based on fuzzy logic theory. In Proceedings of the 2011 Fourth International Conference on Intelligent Computation Technology and Automation, Shenzhen, China, 28–29 March 2011; Volume 1, pp. 87–92. [Google Scholar]

- Sun, D.; Huang, X.; Yang, K. A multimodal vision sensor for autonomous driving. In Proceedings of the Counterterrorism, Crime Fighting, Forensics, and Surveillance Technologies III, Strasbourg, France, 9–11 September 2019; Volume 11166, p. 111660L. [Google Scholar]

- Veselsky, J.; West, J.; Ahlgren, I.; Thiruvathukal, G.K.; Klingensmith, N.; Goel, A.; Jiang, W.; Davis, J.C.; Lee, K.; Kim, Y. Establishing trust in vehicle-to-vehicle coordination: A sensor fusion approach. In Proceedings of the 2022 2nd Workshop on Data-Driven and Intelligent Cyber-Physical Systems for Smart Cities Workshop (DI-CPS), Milan, Italy, 3–6 May 2022; pp. 7–13. [Google Scholar]

- Dasgupta, S.; Rahman, M.; Islam, M.; Chowdhury, M. A Sensor Fusion-based GNSS Spoofing Attack Detection Framework for Autonomous Vehicles. IEEE Trans. Intell. Transp. Syst. 2022, 23, 23559–23572. [Google Scholar] [CrossRef]

- Sun, L.; Zhang, Y.; Fu, Z.; Zheng, G.; He, Z.; Pu, J. An approach to multi-sensor decision fusion based on the improved jousselme evidence distance. In Proceedings of the 2018 International Conference on Control, Automation and Information Sciences (ICCAIS), Hangzhou, China, 24–27 October 2018; pp. 189–193. [Google Scholar]

- Asvadi, A.; Garrote, L.; Premebida, C.; Peixoto, P.; Nunes, U.J. Multimodal vehicle detection: Fusing 3D-LIDAR and color camera data. Pattern Recognit. Lett. 2018, 115, 20–29. [Google Scholar] [CrossRef]

- Zakaria, R.; Sheng, O.Y.; Wern, K.; Shamshirband, S.; Petković, D.; Pavlović, N.T. Adaptive neuro-fuzzy evaluation of the tapered plastic multimode fiber-based sensor performance with and without silver thin film for different concentrations of calcium hypochlorite. IEEE Sens. J. 2014, 14, 3579–3584. [Google Scholar] [CrossRef]

- Noh, S.M.C.; Shamshirband, S.; Petković, D.; Penny, R.; Zakaria, R. Adaptive neuro-fuzzy appraisal of plasmonic studies on morphology of deposited silver thin films having different thicknesses. Plasmonics 2014, 9, 1189–1196. [Google Scholar] [CrossRef]

- Hellmund, A.M.; Wirges, S.; Taş, Ö.Ş.; Bandera, C.; Salscheider, N.O. Robot operating system: A modular software framework for automated driving. In Proceedings of the 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC), Rio de Janeiro, Brazil, 1–4 November 2016; pp. 1564–1570. [Google Scholar]

- Kim, H.; Lee, I. LOCALIZATION OF A CAR BASED ON MULTI-SENSOR FUSION. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 42, 247–250. [Google Scholar] [CrossRef]

- Yahia, O.; Guida, R.; Iervolino, P. Weights based decision level data fusion of landsat-8 and sentinel-L for soil moisture content estimation. In Proceedings of the IGARSS 2018-2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 8078–8081. [Google Scholar]

- Salentinig, A.; Gamba, P. Multi-scale decision level data fusion by means of spatial regularization and image weighting. In Proceedings of the 2017 Joint Urban Remote Sensing Event (JURSE), Dubai, United Arab Emirates, 6–8 March 2017; pp. 1–4. [Google Scholar]

- Wang, H.; Wang, B.; Liu, B.; Meng, X.; Yang, G. Pedestrian recognition and tracking using 3D LiDAR for autonomous vehicle. Robot. Auton. Syst. 2017, 88, 71–78. [Google Scholar] [CrossRef]

- Knoll, A.K.; Clarke, D.C.; Zhang, F.Z. Vehicle detection based on LiDAR and camera fusion. In Proceedings of the 17th International IEEE Conference on Intelligent Transportation Systems (ITSC), Qingdao, China, 8–11 October 2014; pp. 1620–1625. [Google Scholar]

- Dimitrievski, M.; Veelaert, P.; Philips, W. Behavioral pedestrian tracking using a camera and lidar sensors on a moving vehicle. Sensors 2019, 19, 391. [Google Scholar] [CrossRef]

- Jha, H.; Lodhi, V.; Chakravarty, D. Object detection and identification using vision and radar data fusion system for ground-based navigation. In Proceedings of the 2019 6th International Conference on Signal Processing and Integrated Networks (SPIN), Noida, India, 7–8 March 2019; pp. 590–593. [Google Scholar]

- Alencar, F.A.; Rosero, L.A.; Massera Filho, C.; Osório, F.S.; Wolf, D.F. Fast metric tracking by detection system: Radar blob and camera fusion. In Proceedings of the 2015 12th Latin American Robotics Symposium and 2015 3rd Brazilian Symposium on Robotics (LARS-SBR), Uberlandia, Brazil, 29–31 October 2015; pp. 120–125. [Google Scholar]

- Wang, X.; Xu, L.; Sun, H.; Xin, J.; Zheng, N. On-road vehicle detection and tracking using MMW radar and monovision fusion. IEEE Trans. Intell. Transp. Syst. 2016, 17, 2075–2084. [Google Scholar] [CrossRef]

- Kim, K.E.; Lee, C.J.; Pae, D.S.; Lim, M.T. Sensor fusion for vehicle tracking with camera and radar sensor. In Proceedings of the 2017 17th International Conference on Control, Automation and Systems (ICCAS), Jeju, Republic of Korea, 18–21 October 2017; pp. 1075–1077. [Google Scholar]

- Wang, J.G.; Chen, S.J.; Zhou, L.B.; Wan, K.W.; Yau, W.Y. Vehicle detection and width estimation in rain by fusing radar and vision. In Proceedings of the 2018 15th International Conference on Control, Automation, Robotics and Vision (ICARCV), Singapore, 18–21 November 2018; pp. 1063–1068. [Google Scholar]

- Vickers, N.J. Animal communication: When i’m calling you, will you answer too? Curr. Biol. 2017, 27, R713–R715. [Google Scholar] [CrossRef] [PubMed]

- Xu, X.; Zhang, L.; Yang, J.; Cao, C.; Tan, Z.; Luo, M. Object detection based on fusion of sparse point cloud and image information. IEEE Trans. Instrum. Meas. 2021, 70, 1–12. [Google Scholar] [CrossRef]

- Ilic, V.; Marijan, M.; Mehmed, A.; Antlanger, M. Development of sensor fusion based ADAS modules in virtual environments. In Proceedings of the 2018 Zooming Innovation in Consumer Technologies Conference (ZINC), Novi Sad, Serbia, 30–31 May 2018; pp. 88–91. [Google Scholar]

- Hong, S.B.; Kang, C.M.; Lee, S.H.; Chung, C.C. Multi-rate vehicle side slip angle estimation using low-cost GPS/IMU. In Proceedings of the 2017 17th International Conference on Control, Automation and Systems (ICCAS), Jeju, Republic of Korea, 18–21 October 2017; pp. 35–40. [Google Scholar]

- Wahyudi; Listiyana, M.S.; Sudjadi; Ngatelan. Tracking Object based on GPS and IMU Sensor. In Proceedings of the 2018 5th International Conference on Information Technology, Computer, and Electrical Engineering (ICITACEE), Semarang, Indonesia, 26–28 September 2018; pp. 214–218. [Google Scholar]

- Zhang, Z.; Wang, H.; Chen, W. A real-time visual-inertial mapping and localization method by fusing unstable GPS. In Proceedings of the 2018 13th World Congress on Intelligent Control and Automation (WCICA), Changsha, China, 4–8 July 2018; pp. 1397–1402. [Google Scholar]

- Chen, M.; Zhan, X.; Tu, J.; Liu, M. Vehicle-localization-based and DSRC-based autonomous vehicle rear-end collision avoidance concerning measurement uncertainties. IEEJ Trans. Electr. Electron. Eng. 2019, 14, 1348–1358. [Google Scholar] [CrossRef]

- Cui, Y.; Xu, H.; Wu, J.; Sun, Y.; Zhao, J. Automatic vehicle tracking with roadside LiDAR data for the connected-vehicles system. IEEE Intell. Syst. 2019, 34, 44–51. [Google Scholar] [CrossRef]

- Jo, K.; Lee, M.; Kim, J.; Sunwoo, M. Tracking and behavior reasoning of moving vehicles based on roadway geometry constraints. IEEE Trans. Intell. Transp. Syst. 2016, 18, 460–476. [Google Scholar] [CrossRef]

- Mees, O.; Eitel, A.; Burgard, W. Choosing smartly: Adaptive multimodal fusion for object detection in changing environments. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; pp. 151–156. [Google Scholar]

- Lee, S.; Yoon, Y.J.; Lee, J.E.; Kim, S.C. Human–vehicle classification using feature-based SVM in 77-GHz automotive FMCW radar. IET Radar Sonar Navig. 2017, 11, 1589–1596. [Google Scholar] [CrossRef]

- Zhong, Z.; Liu, S.; Mathew, M.; Dubey, A. Camera radar fusion for increased reliability in ADAS applications. Electron. Imaging 2018, 2018, 1–4. [Google Scholar] [CrossRef]

- Bouain, M.; Berdjag, D.; Fakhfakh, N.; Atitallah, R.B. Multi-sensor fusion for obstacle detection and recognition: A belief-based approach. In Proceedings of the 2018 21st International Conference on Information Fusion (FUSION), Cambridge, UK, 10–13 July 2018; pp. 1217–1224. [Google Scholar]

- Han, S.; Wang, X.; Xu, L.; Sun, H.; Zheng, N. Frontal object perception for Intelligent Vehicles based on radar and camera fusion. In Proceedings of the 2016 35th Chinese Control Conference (CCC), Chengdu, China, 27–29 July 2016; pp. 4003–4008. [Google Scholar]

- Kocić, J.; Jovičić, N.; Drndarević, V. Sensors and sensor fusion in autonomous vehicles. In Proceedings of the 2018 26th Telecommunications Forum (TELFOR), Belgrade, Serbia, 20–21 November 2018; pp. 420–425. [Google Scholar]

- Zhao, J.; Xu, H.; Liu, H.; Wu, J.; Zheng, Y.; Wu, D. Detection and tracking of pedestrians and vehicles using roadside LiDAR sensors. Transp. Res. Part C Emerg. Technol. 2019, 100, 68–87. [Google Scholar] [CrossRef]

- Kang, Z.; Jia, F.; Zhang, L. A robust image matching method based on optimized BaySAC. Photogramm. Eng. Remote Sens. 2014, 80, 1041–1052. [Google Scholar] [CrossRef]

- Baftiu, I.; Pajaziti, A.; Cheok, K.C. Multi-mode surround view for ADAS vehicles. In Proceedings of the 2016 IEEE International Symposium on Robotics and Intelligent Sensors (IRIS), Tokyo, Japan, 17–20 December 2016; pp. 190–193. [Google Scholar]

- Baras, N.; Nantzios, G.; Ziouzios, D.; Dasygenis, M. Autonomous obstacle avoidance vehicle using lidar and an embedded system. In Proceedings of the 2019 8th International Conference on Modern Circuits and Systems Technologies (MOCAST), Thessaloniki, Greece, 13–15 May 2019; pp. 1–4. [Google Scholar]

- Reina, G.; Milella, A.; Rouveure, R. Traversability analysis for off-road vehicles using stereo and radar data. In Proceedings of the 2015 IEEE International Conference on Industrial Technology (ICIT), Seville, Spain, 17–19 March 2015; pp. 540–546. [Google Scholar]

- Zhou, Y.; Zhang, L.; Xing, C.; Xie, P.; Cao, Y. Target three-dimensional reconstruction from the multi-view radar image sequence. IEEE Access 2019, 7, 36722–36735. [Google Scholar] [CrossRef]

- Wei, P.; Cagle, L.; Reza, T.; Ball, J.; Gafford, J. LiDAR and camera detection fusion in a real-time industrial multi-sensor collision avoidance system. Electronics 2018, 7, 84. [Google Scholar] [CrossRef]

- Wulff, F.; Schäufele, B.; Sawade, O.; Becker, D.; Henke, B.; Radusch, I. Early fusion of camera and lidar for robust road detection based on U-Net FCN. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Suzhou, China, 26–30 June 2018; pp. 1426–1431. [Google Scholar]

- Bhadani, R.K.; Sprinkle, J.; Bunting, M. The cat vehicle testbed: A simulator with hardware in the loop for autonomous vehicle applications. arXiv 2018, arXiv:1804.04347. [Google Scholar] [CrossRef]

- Tsai, C.M.; Lai, Y.H.; Perng, J.W.; Tsui, I.F.; Chung, Y.J. Design and application of an autonomous surface vehicle with an AI-based sensing capability. In Proceedings of the 2019 IEEE Underwater Technology (UT), Kaohsiung, Taiwan, 16–19 April 2019; pp. 1–4. [Google Scholar]

- Oh, J.; Kim, K.S.; Park, M.; Kim, S. A comparative study on camera-radar calibration methods. In Proceedings of the 2018 15th International Conference on Control, Automation, Robotics and Vision (ICARCV), Singapore, 18–21 November 2018; pp. 1057–1062. [Google Scholar]

- Kim, J.; Han, D.S.; Senouci, B. Radar and vision sensor fusion for object detection in autonomous vehicle surroundings. In Proceedings of the 2018 Tenth International Conference on Ubiquitous and Future Networks (ICUFN), Prague, Czech Republic, 3–6 July 2018; pp. 76–78. [Google Scholar]

- Sim, S.; Sock, J.; Kwak, K. Indirect correspondence-based robust extrinsic calibration of LiDAR and camera. Sensors 2016, 16, 933. [Google Scholar] [CrossRef] [PubMed]

- Pusztai, Z.; Eichhardt, I.; Hajder, L. Accurate calibration of multi-lidar-multi-camera systems. Sensors 2018, 18, 2139. [Google Scholar] [CrossRef]

- Castorena, J.; Kamilov, U.S.; Boufounos, P.T. Autocalibration of lidar and optical cameras via edge alignment. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 2862–2866. [Google Scholar]

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361. [Google Scholar]

- Sun, P.; Kretzschmar, H.; Dotiwalla, X.; Chouard, A.; Patnaik, V.; Tsui, P.; Guo, J.; Zhou, Y.; Chai, Y.; Caine, B.; et al. Scalability in perception for autonomous driving: Waymo open dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 2446–2454. [Google Scholar]

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuScenes: A multimodal dataset for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11621–11631. [Google Scholar]

- Huang, X.; Cheng, X.; Geng, Q.; Cao, B.; Zhou, D.; Wang, P.; Lin, Y.; Yang, R. The apolloscape dataset for autonomous driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 954–960. [Google Scholar]

- Xiao, P.; Shao, Z.; Hao, S.; Zhang, Z.; Chai, X.; Jiao, J.; Li, Z.; Wu, J.; Sun, K.; Jiang, K.; et al. Pandaset: Advanced sensor suite dataset for autonomous driving. In Proceedings of the 2021 IEEE International Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19–22 September 2021; pp. 3095–3101. [Google Scholar]

- Yan, Z.; Sun, L.; Krajník, T.; Ruichek, Y. EU long-term dataset with multiple sensors for autonomous driving. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 10697–10704. [Google Scholar]

- Ligocki, A.; Jelinek, A.; Zalud, L. Brno urban dataset-the new data for self-driving agents and mapping tasks. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 3284–3290. [Google Scholar]

- Pham, Q.H.; Sevestre, P.; Pahwa, R.S.; Zhan, H.; Pang, C.H.; Chen, Y.; Mustafa, A.; Chandrasekhar, V.; Lin, J. A*3D dataset: Towards autonomous driving in challenging environments. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 2267–2273. [Google Scholar]

- Jiang, P.; Osteen, P.; Wigness, M.; Saripalli, S. Rellis-3d dataset: Data, benchmarks and analysis. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 1110–1116. [Google Scholar]

- Wang, Z.; Ding, S.; Li, Y.; Fenn, J.; Roychowdhury, S.; Wallin, A.; Martin, L.; Ryvola, S.; Sapiro, G.; Qiu, Q. Cirrus: A long-range bi-pattern lidar dataset. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 5744–5750. [Google Scholar]

- Mao, J.; Niu, M.; Jiang, C.; Liang, H.; Chen, J.; Liang, X.; Li, Y.; Ye, C.; Zhang, W.; Li, Z.; et al. One million scenes for autonomous driving: Once dataset. arXiv 2021, arXiv:2106.11037. [Google Scholar]

- Zhao, S. Remote sensing data fusion using support vector machine. In Proceedings of the IGARSS 2004, 2004 IEEE International Geoscience and Remote Sensing Symposium, Anchorage, AK, USA, 20–24 September 2004; Volume 4, pp. 2575–2578. [Google Scholar]

- Bigdeli, B.; Samadzadegan, F.; Reinartz, P. A decision fusion method based on multiple support vector machine system for fusion of hyperspectral and LIDAR data. Int. J. Image Data Fusion 2014, 5, 196–209. [Google Scholar] [CrossRef]

- El Gayar, N.; Schwenker, F.; Suen, C. Artificial Neural Networks in Pattern Recognition; Lecture Notes in Artificial Intelligence; Springer Nature: Cham, Switzerland, 2014; Volume 8774. [Google Scholar]

- Manjunatha, P.; Verma, A.; Srividya, A. Multi-sensor data fusion in cluster based wireless sensor networks using fuzzy logic method. In Proceedings of the 2008 IEEE Region 10 and the Third International Conference on Industrial and Information Systems, Kharagpur, India, 8–10 December 2008; pp. 1–6. [Google Scholar]

- Ran, G.L.; Wu, H.H. Fuzzy fusion approach for object tracking. In Proceedings of the 2010 Second WRI Global Congress on Intelligent Systems, Wuhan, China, 16–17 December 2010; Volume 3, pp. 219–222. [Google Scholar]

- Rashed, H.; El Sallab, A.; Yogamani, S.; ElHelw, M. Motion and depth augmented semantic segmentation for autonomous navigation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–20 June 2019. [Google Scholar]

- Feng, D.; Haase-Schuetz, C.; Rosenbaum, L.; Hertlein, H.; Glaeser, C.; Timm, F.; Wiesbeck, W.; Dietmayer, K. Deep multi-modal object detection and semantic segmentation for autonomous driving: Datasets, methods, and challenges. IEEE Trans. Intell. Transp. Syst. 2020, 22, 1341–1360. [Google Scholar] [CrossRef]

- Endsley, M.R. Situation awareness in future autonomous vehicles: Beware of the unexpected. In Proceedings of the Congress of the International Ergonomics Association, Florence, Italy, 26–30 August 2018; pp. 303–309. [Google Scholar]

- Stapel, J.; El Hassnaoui, M.; Riender, H. Measuring driver perception: Combining eye-tracking and automated road scene perception. Hum. Factors 2022, 64, 714–731. [Google Scholar] [CrossRef]

- Liang, N.; Yang, J.; Yu, D.; Prakah-Asante, K.O.; Curry, R.; Blommer, M.; Swaminathan, R.; Pitts, B.J. Using eye-tracking to investigate the effects of pre-takeover visual engagement on situation awareness during automated driving. Accid. Anal. Prev. 2021, 157, 106143. [Google Scholar] [CrossRef]

- Papandreou, A.; Kloukiniotis, A.; Lalos, A.; Moustakas, K. Deep multi-modal data analysis and fusion for robust scene understanding in CAVs. In Proceedings of the 2021 IEEE 23rd International Workshop on Multimedia Signal Processing (MMSP), Tampere, Finland, 6–8 October 2021; pp. 1–6. [Google Scholar]

- Rangesh, A.; Deo, N.; Yuen, K.; Pirozhenko, K.; Gunaratne, P.; Toyoda, H.; Trivedi, M.M. Exploring the situational awareness of humans inside autonomous vehicles. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 190–197. [Google Scholar]

- Mohseni-Kabir, A.; Isele, D.; Fujimura, K. Interaction-aware multi-agent reinforcement learning for mobile agents with individual goals. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 3370–3376. [Google Scholar]

- Liu, T.; Huang, B.; Deng, Z.; Wang, H.; Tang, X.; Wang, X.; Cao, D. Heuristics-oriented overtaking decision making for autonomous vehicles using reinforcement learning. IET Electr. Syst. Transp. 2020, 10, 417–424. [Google Scholar] [CrossRef]

- Liu, Y.; Wang, X.; Li, L.; Cheng, S.; Chen, Z. A novel lane change decision-making model of autonomous vehicle based on support vector machine. IEEE Access 2019, 7, 26543–26550. [Google Scholar] [CrossRef]

- Nie, J.; Zhang, J.; Ding, W.; Wan, X.; Chen, X.; Ran, B. Decentralized cooperative lane-changing decision-making for connected autonomous vehicles. IEEE Access 2016, 4, 9413–9420. [Google Scholar] [CrossRef]

- Wray, K.H.; Lange, B.; Jamgochian, A.; Witwicki, S.J.; Kobashi, A.; Hagaribommanahalli, S.; Ilstrup, D. POMDPs for Safe Visibility Reasoning in Autonomous Vehicles. In Proceedings of the 2021 IEEE International Conference on Intelligence and Safety for Robotics (ISR), Tokoname, Japan, 4–6 March 2021; pp. 191–195. [Google Scholar]

- Rhim, J.; Lee, G.b.; Lee, J.H. Human moral reasoning types in autonomous vehicle moral dilemma: A cross-cultural comparison of Korea and Canada. Comput. Hum. Behav. 2020, 102, 39–56. [Google Scholar] [CrossRef]

- Yuan, H.; Sun, X.; Gordon, T. Unified decision-making and control for highway collision avoidance using active front steer and individual wheel torque control. Veh. Syst. Dyn. 2019, 57, 1188–1205. [Google Scholar] [CrossRef]

- Iberraken, D.; Adouane, L.; Denis, D. Reliable risk management for autonomous vehicles based on sequential bayesian decision networks and dynamic inter-vehicular assessment. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 2344–2351. [Google Scholar]

- Xiao, D.; Xu, X.; Kang, S. Paving the Way for Evaluation of Connected and Autonomous Vehicles in Buses-Preliminary Analysis. IEEE Access 2020, 8, 6162–6167. [Google Scholar] [CrossRef]

- Papadoulis, A. Safety Impact of Connected and Autonomous Vehicles on Motorways: A Traffic Microsimulation Study. Ph.D. Thesis, Loughborough University, Loughborough, UK, 2019. [Google Scholar]

- Burns, C.G.; Oliveira, L.; Thomas, P.; Iyer, S.; Birrell, S. Pedestrian decision-making responses to external human-machine interface designs for autonomous vehicles. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 70–75. [Google Scholar]

- You, C.; Lu, J.; Filev, D.; Tsiotras, P. Highway traffic modeling and decision making for autonomous vehicle using reinforcement learning. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Suzhou, China, 26–30 June 2018; pp. 1227–1232. [Google Scholar]

- Fisac, J.F.; Bronstein, E.; Stefansson, E.; Sadigh, D.; Sastry, S.S.; Dragan, A.D. Hierarchical game-theoretic planning for autonomous vehicles. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 9590–9596. [Google Scholar]

- Ferrando, A.; Dennis, L.A.; Ancona, D.; Fisher, M.; Mascardi, V. Verifying and validating autonomous systems: Towards an integrated approach. In Proceedings of the International Conference on Runtime Verification, Limassol, Cyprus, 10–13 November 2018; pp. 263–281. [Google Scholar]

- Pourmohammad-Zia, N.; Schulte, F.; Negenborn, R.R. Platform-based Platooning to Connect Two Autonomous Vehicle Areas. In Proceedings of the 2020 IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), Rhodes, Greece, 20–23 September 2020; pp. 1–6. [Google Scholar]

- Zhao, W.; Ngoduy, D.; Shepherd, S.; Liu, R.; Papageorgiou, M. A platoon based cooperative eco-driving model for mixed automated and human-driven vehicles at a signalised intersection. Transp. Res. Part C Emerg. Technol. 2018, 95, 802–821. [Google Scholar] [CrossRef]

- Teixeira, M.; d’Orey, P.M.; Kokkinogenis, Z. Simulating collective decision-making for autonomous vehicles coordination enabled by vehicular networks: A computational social choice perspective. Simul. Model. Pract. Theory 2020, 98, 101983. [Google Scholar] [CrossRef]

- Jin, S.; Sun, D.H.; Liu, Z. The Impact of Spatial Distribution of Heterogeneous Vehicles on Performance of Mixed Platoon: A Cyber-Physical Perspective. KSCE J. Civ. Eng. 2021, 25, 303–315. [Google Scholar] [CrossRef]

- Mushtaq, A.; ul Haq, I.; un Nabi, W.; Khan, A.; Shafiq, O. Traffic Flow Management of Autonomous Vehicles Using Platooning and Collision Avoidance Strategies. Electronics 2021, 10, 1221. [Google Scholar] [CrossRef]

- Alves, G.V.; Dennis, L.; Fernandes, L.; Fisher, M. Reliable Decision-Making in Autonomous Vehicles. In Validation and Verification of Automated Systems; Springer: Berlin/Heidelberg, Germany, 2020; pp. 105–117. [Google Scholar]

- Chang, B.J.; Hwang, R.H.; Tsai, Y.L.; Yu, B.H.; Liang, Y.H. Cooperative adaptive driving for platooning autonomous self driving based on edge computing. Int. J. Appl. Math. Comput. Sci. 2019, 29, 213–225. [Google Scholar] [CrossRef]

- Kuderer, M.; Gulati, S.; Burgard, W. Learning driving styles for autonomous vehicles from demonstration. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 2641–2646. [Google Scholar]

- Aranjuelo, N.; Unzueta, L.; Arganda-Carreras, I.; Otaegui, O. Multimodal deep learning for advanced driving systems. In Proceedings of the International Conference on Articulated Motion and Deformable Objects, Palma de Mallorca, Spain, 12–13 July 2018; pp. 95–105. [Google Scholar]

- Oliveira, G.L.; Burgard, W.; Brox, T. Efficient deep models for monocular road segmentation. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; pp. 4885–4891. [Google Scholar]

- Ding, L.; Wang, Y.; Laganiere, R.; Huang, D.; Fu, S. Convolutional neural networks for multispectral pedestrian detection. Signal Process. Image Commun. 2020, 82, 115764. [Google Scholar] [CrossRef]

- Guo, Y.; Liu, Y.; Georgiou, T.; Lew, M.S. A review of semantic segmentation using deep neural networks. Int. J. Multimed. Inf. Retr. 2018, 7, 87–93. [Google Scholar] [CrossRef]

- Schwarting, W.; Alonso-Mora, J.; Rus, D. Planning and decision-making for autonomous vehicles. Annu. Rev. Control. Robot. Auton. Syst. 2018, 1, 187–210. [Google Scholar] [CrossRef]

- Hubmann, C.; Becker, M.; Althoff, D.; Lenz, D.; Stiller, C. Decision making for autonomous driving considering interaction and uncertain prediction of surrounding vehicles. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 1671–1678. [Google Scholar]

- Liu, W.; Kim, S.W.; Pendleton, S.; Ang, M.H. Situation-aware decision making for autonomous driving on urban road using online POMDP. In Proceedings of the 2015 IEEE Intelligent Vehicles Symposium (IV), Seoul, Republic of Korea, 28 June–1 July 2015; pp. 1126–1133. [Google Scholar]

- Zhou, B.; Schwarting, W.; Rus, D.; Alonso-Mora, J. Joint multi-policy behavior estimation and receding-horizon trajectory planning for automated urban driving. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 2388–2394. [Google Scholar]

- Brechtel, S.; Gindele, T.; Dillmann, R. Probabilistic decision-making under uncertainty for autonomous driving using continuous POMDPs. In Proceedings of the 17th international IEEE conference on intelligent transportation systems (ITSC), Qingdao, China, 8–11 October 2014; pp. 392–399. [Google Scholar]

- Markkula, G.; Romano, R.; Madigan, R.; Fox, C.W.; Giles, O.T.; Merat, N. Models of human decision-making as tools for estimating and optimizing impacts of vehicle automation. Transp. Res. Rec. 2018, 2672, 153–163. [Google Scholar] [CrossRef]

- Li, L.; Ota, K.; Dong, M. Humanlike driving: Empirical decision-making system for autonomous vehicles. IEEE Trans. Veh. Technol. 2018, 67, 6814–6823. [Google Scholar] [CrossRef]

- Jiménez, F.; Naranjo, J.E.; Gómez, Ó. Autonomous collision avoidance system based on accurate knowledge of the vehicle surroundings. IET Intell. Transp. Syst. 2015, 9, 105–117. [Google Scholar] [CrossRef]

- Sezer, V. Intelligent decision making for overtaking maneuver using mixed observable markov decision process. J. Intell. Transp. Syst. 2018, 22, 201–217. [Google Scholar] [CrossRef]

- Gu, X.; Han, Y.; Yu, J. A novel lane-changing decision model for autonomous vehicles based on deep autoencoder network and XGBoost. IEEE Access 2020, 8, 9846–9863. [Google Scholar] [CrossRef]

- Othman, K. Public acceptance and perception of autonomous vehicles: A comprehensive review. AI Ethics 2021, 1, 355–387. [Google Scholar] [CrossRef]

| | SDF Problem | Use of ML | Challenges of ML | New Research |
|---|---|---|---|---|
| Abdelzaher | Physics-based and human-driven IF | Big data analysis of edge sensing | Unlabeled data | Coordination of DL through multiple AI/ML networks |
| Basch | User augmentation | High-dimensional learning | Heterogeneous analysis | Model-based methods to address the unknown |
| Baines | Contextual support | Interpretable analysis | Determining various users | Explainable results |
| Chong | Data association | Training from data | Relevant models | Context-based AI |
| Koch | Perceiving and action | Data processing for object assessment | Combining data and models for situation assessment | Need common terms for ethical, social, and usable deployment |
| Leung | Image fusion | Change detection | Real-time labeling | Joint multimodal image data fusion |
| Pham | Multi-domain coordination | Rapidly learn, adapt, and reason to act | Interface in sparse and congested areas | Learning in the edge |

| Type | Advantages | Disadvantages | Max Working Distance |
|---|---|---|---|
| MMW-RADAR | 1. Long working distance 2. Radial velocity available 3. Applicable in all weather conditions | 1. Not suitable for static objects 2. Frequent false alarm generation | 5–200 m |
| Camera | 1. Excellent discernibility 2. Lateral velocity available 3. Color distribution available | 1. Heavy computation 2. Light interference 3. Weather susceptible | 250 m (depending on the lens) |
| LiDAR | 1. Wide field of view 2. High range resolution 3. High angle resolution | 1. Unsuitable for bad weather 2. High price | 200 m |
| Ultrasonic | 1. Inexpensive | 1. Low resolution 2. Inapplicable for high speed | 2 m |
| DSRC | 1. Applicable for high speed (up to 150 km/h) 2. Relatively mature technology 3. Low latency (0.2 ms) | 1. Low data rate 2. Small coverage | 300–100 m |
| LTE-V2X | 1. Long working distance 2. Relatively high data transmission rate (up to 300 Mbps) | 1. High latency over long distances (>1 s) 2. Inapplicable for time-critical events | Up to 2 km |
| 5G-V2X | 1. Ultra-high data transmission rate 2. Low latency (<80 ms) 3. High bandwidth 4. Applicable for high speed (up to 500 km/h) | 1. Immature application | 100–300 m |

| Scenario | Specific Task | Ref | Sensor Types |
|---|---|---|---|
| Perception of Moving Objects in Traffic Environment (Including pedestrians, bicycles, vehicles, etc.) | Pedestrians | [56,80] | L |
| | | [81] | RL |
| | | [57,82] | CL |
| | | [83] | RC |
| | Vehicle | [62,83,84,85,86,87] | RC |
| | | [88,89] | CL |
| | | [90] | RCLUV |
| | | [91,92] | GI |
| | | [93] | GL |
| | | [94] | VG |
| | | [95] | LV |
| | | [81] | RL |
| | | [96] | CLGI |
| | | [97] | RL |
| | Pedestrian and Vehicle | [98] | R |
| | | [88,99,100,101] | RC |
| | | [102] | RCLGI |
| | | [103] | LV |
| | | [47] | CL |
| | | [51] | CL |
| | Lane detection, Obstacle Detection, and Path Planning | [104] | RCLG |
| | | [105] | RCL |
| | | [58,82] | CL |
| | | [95] | VG |
| | | [77] | CG |
| | | [106] | L |
| Reconstruction and Visualization of the Front Area | Safety Zone Division | [107] | RC |
| | | [108] | RC |
| | Motion Analysis | [96] | CLGI |
| | | [82] | CL |
| | Visualization | [80] | L |
| | | [44] | RC |
| | | [69] | CL |
| Safety Zone Construction and Collision Warning | Obstacle Avoidance | [109] | CL |
| | | [87] | RC |
| | | [109] | C |
| | | [94] | VG |
| | Safety Zone Division | [107,110] | RC |
| | | [43] | LC |
| Multisensory Calibration and Data Fusion Platform | Data Fusion Platform | [76,111,112] | N/A |
| | | [112] | RCL |
| | | [69] | CLGI |
| | Multi sensor Calibration | [113,114] | RC |
| | | [115,116,117] | CL |
| Object Characteristics | Driver Behaviour | [51] | CL |
| Occupancy Probability | Parking Space | [68] | CL |

| Dataset | Year | Hours | Traffic Scenario | Diversity |
|---|---|---|---|---|
| KITTI [118] | 2012 | 1.5 | Urban, Suburban, Highway | - |
| Waymo [119] | 2019 | 6.4 | Urban, Suburban | Locations |
| nuScenes [120] | 2019 | 5.5 | Urban, Suburban, Highway | Locations, Weather |
| ApolloScape [121] | 2018 | 2 | Urban, Suburban, Highway | Weather, Locations |
| PandaSet [122] | 2021 | - | Urban | Locations |
| EU Long-term [123] | 2020 | 1 | Urban, Suburban | Seasons |
| Brno Urban [124] | 2020 | 10 | Urban, Highway | Weather |
| A*3D [125] | 2020 | 55 | Urban | Weather |
| RELLIS [126] | 2021 | - | Suburban | - |
| Cirrus [127] | 2021 | - | Urban | - |
| HUAWEI ONCE [128] | 2021 | 144 | Urban, Suburban | Weather, Locations |

| Citation | Model | Advantages | Disadvantages | Drawbacks Rectified from Previous Study (?) |
|---|---|---|---|---|
| [120] | MM | Uses a large 3D dataset for evaluation | Does not discuss image point-level semantic labels | ✓ |
| [49] | MM | Effectively handles 3D image data using CARLA | Not evaluated on multisensory data | ✓ |
| [50] | MM | Evaluates the model using a heterogeneous dataset | Not tested in a real-time robotic environment | ✓ |
| [129] | ML | Better at handling larger datasets in real-time scenarios | Accuracy below the expected level (74%) | ✓ |
| [130] | ML | Enhanced accuracy (84%) | Could use advanced fuzzy concepts to optimize the model | ✓ |
| [131] | ML | An effective optimization method for heterogeneous data | Difficulty in obtaining proper metrics | ✓ |
| [132] | ML | Involves more metrics to enhance the accuracy | Does not collect data from different sensor points | ✓ |
| [68] | ML | Uses different data points collected using magnetic sensors | Complicated fuzzy rules | ✓ |
| [133] | ML | Higher accuracy | Evaluated with a minimal dataset | ✓ |
| [66] | ML | Outperforms existing LiDAR and image-based fusion models | Consumes more optimization time | ✓ |
| [97] | ML | CNN framework improves optimization time of fusion | Few classes | ✓ |
| [67] | ML | Multiple classification | Needs changes in network topologies and training techniques | ✓ |
| [51] | ML | Eliminates the need to design and train separate network blocks | Missing complex multimodal context | ✓ |
| [134] | ML | Uses multiple inputs | Does not use structural restrictions | ✓ |
| [69] | ML | Uses a structured CNN architecture to fuse data | Lacks optimization problems | ✓ |
| [49] | ML | Better optimization of parameters using advanced statistics | Needs more real-world evaluation | ✓ |
| [135] | ML | Advanced image transformation using real-time data | Does not handle data missing from defective sensors | ✓ |

| Manufacturer | 2015 | 2016 | 2017 |
|---|---|---|---|
| Google/Waymo | 1244 | 5128 | 5596 |
| Nissan | 14 | 146 | 208 |
| Delphi | 41 | 17.5 | 22.4 |
| Mercedes Benz | 1.5 | 2 | 4.5 |

| Citation | Event | Advantages | Disadvantages | Drawbacks Rectified from Previous Study (?) |
|---|---|---|---|---|
| [141] | LC | Instant decision-making (intelligent agent) | More time-consuming to train new models | ✓ |
| [142] | LC | Minimizes training time | Missing detailed analysis of various lane-changing scenarios | ✓ |
| [143] | LC | Considers all lane-changing possibilities (85% accuracy) | Uses limited datasets to evaluate the model | ✓ |
| [144] | LC | Optimal decision-making | Efficiency of the model over many metrics not discussed properly | ✓ |
| [145] | CA | Makes effective decisions in limited-visibility scenarios | Requires more detailed analysis | ✓ |
| [146] | CA | More decision rules | Evaluated with a minimal dataset | ✓ |
| [147] | CA | Effective decision-making with constrained dynamic optimization | Needs adaptive optimal mechanisms to handle dynamic scenarios | ✓ |
| [94] | CA | Innovative DSRC-based model to avoid rear-end collisions | Needs more real-time scenarios to evaluate the model | ✓ |

| Citation | Event | Advantages | Disadvantages | Drawbacks Rectified from Previous Study (?) |
|---|---|---|---|---|
| [148] | ME | Frames multiple decision rules | Short response time; safety mechanism not included | ✓ |
| [149] | ME | Covers safety metrics. Uses various features for model evaluation | Uses a minimal dataset for model evaluation | ✓ |
| [150] | ME | Model works for both AVs and CAVs | Difficulty in accessing real-world CAV data | ✓ |
| [154] | ME | Uses real-time data for evaluation | No full-fledged data for evaluation (partially observed) | ✓ |
| [151] | ME | Discusses various possibilities of pedestrian behavior | Needs more research on the ideal communication method between AVs and pedestrians | ✓ |
| [152] | ME | Better optimized approach for overtaking and tailgating decisions | Fewer cases to evaluate the model | ✓ |
| [153] | ME | Model tested using real-time data | Efficiency of the model not discussed | ✓ |

| Citation | Event | Advantages | Disadvantages | Drawbacks Rectified from Previous Study (?) |
|---|---|---|---|---|
| [155] | PL | Simplifies the demand in resource pooling | Limited scenarios used for evaluation | ✓ |
| [156] | PL | Uses more evaluation scenarios | Cannot handle complex scenarios | ✓ |
| [157] | PL | Handles larger datasets | Time-consuming to optimize the model | ✓ |
| [158] | PL | Well-optimized model to handle spatial data | Needs to consider mixed platooning issues | ✓ |
| [159] | PL | Considers all platooning cases | Latency issues affecting accuracy | ✓ |
| [160] | PL | Can better handle emergency scenarios | Needs valid proof to verify decision-making | ✓ |

| Reference No. | Vehicle Type: AV | Vehicle Type: Manual | Category: Survey | Category: Model | Events: LC | Events: AC | Events: PC | Events: CC | Methodology: AI/ML | Methodology: MM |
|---|---|---|---|---|---|---|---|---|---|---|
| [167] | ✓ | × | ✓ | × | ✓ | × | × | × | × | ✓ |
| [144] | ✓ | × | × | ✓ | ✓ | × | × | × | × | ✓ |
| [153] | ✓ | × | × | ✓ | ✓ | ✓ | × | × | ✓ | × |
| [143] | ✓ | × | × | ✓ | × | × | × | × | × | ✓ |
| [148] | ✓ | × | × | ✓ | ✓ | ✓ | × | × | × | ✓ |
| [146] | ✓ | × | × | ✓ | ✓ | ✓ | × | × | ✓ | ✓ |
| [152] | ✓ | × | × | ✓ | ✓ | ✓ | ✓ | × | × | ✓ |
| [149] | × | ✓ | ✓ | × | ✓ | ✓ | × | × | × | × |
| [154] | ✓ | × | × | ✓ | ✓ | × | × | × | × | ✓ |
| [151] | ✓ | × | × | ✓ | × | × | ✓ | × | ✓ | × |
| [150] | ✓ | × | × | ✓ | × | ✓ | × | × | ✓ | × |
| [160] | ✓ | × | × | ✓ | ✓ | ✓ | ✓ | × | × | ✓ |
| [147] | ✓ | × | × | ✓ | ✓ | ✓ | × | ✓ | × | ✓ |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ignatious, H.A.; El-Sayed, H.; Khan, M.A.; Mokhtar, B.M. Analyzing Factors Influencing Situation Awareness in Autonomous Vehicles—A Survey. Sensors 2023, 23, 4075. https://doi.org/10.3390/s23084075
