Abstract

Critically ill patients often lack cognitive or communicative functions, making it challenging to assess their pain levels using self-reporting mechanisms. There is an urgent need for an accurate system that can assess pain levels without relying on patient-reported information. Blood volume pulse (BVP) is a relatively unexplored physiological measure with the potential to assess pain levels. This study aims to develop an accurate pain intensity classification system based on BVP signals through comprehensive experimental analysis. Twenty-two healthy subjects participated in the study, in which we analyzed the classification performance of BVP signals for various pain intensities using time, frequency, and morphological features with fourteen different machine learning classifiers. Three experiments were conducted using leave-one-subject-out cross-validation to examine the hidden signatures of BVP signals for pain level classification. No pain and high pain BVP signals were classified with 96.6% accuracy, 100% sensitivity, and 91.6% specificity using a combination of time, frequency, and morphological features with artificial neural networks (ANNs). The classification of no pain and low pain BVP signals yielded 83.3% accuracy using a combination of time and morphological features with the AdaBoost classifier. Finally, the multi-class experiment, which classified no pain, low pain, and high pain, achieved 69% overall accuracy using a combination of time and morphological features with an ANN. In conclusion, the experimental results suggest that BVP signals combined with machine learning can offer an objective and reliable assessment of pain levels in clinical settings.

1. Introduction

Pain acts as a biomarker of numerous injuries, disorders, and stress conditions, and it helps the brain respond to abnormalities in the body by acting as a warning signal [1]. Pain serves as an autonomic warning mechanism that helps avoid potentially dangerous situations; for example, headaches may indicate fatigue or stress, and chest pain may be a sign of heart disease. Pain detection and sensation are essential functions of the human body and depend on the brain, spinal cord, and peripheral nervous system (PNS) [2]. Pain-processing mechanisms help prevent potentially life-threatening conditions and are critical for survival [3]. These mechanisms include recording and analyzing nociceptive sensory information, shifting attention toward pain processing, holding pain-related information in working memory, communicating quickly with the motor system to prevent further harm, and avoiding future damage by encoding the current problem in memory [4].

Pain is a highly subjective experience, and there is a lack of clinically available diagnostic tools to assess it objectively. Pain sensation and pain tolerance vary among individuals. Two main methods are employed to assess pain in clinical practice: self-report and clinical assessment. In self-report approaches, the patient’s pain is measured through verbal communication or self-rating scales, such as verbal descriptor scales, the McGill pain questionnaire [5], or visual analog scales [6]. In contrast, clinical assessment relies on testing and on observing the type, significance, and context of the patient’s pain perception [7]. Self-report is considered the gold standard and provides the most valid assessment in clinical practice; clinical assessment is used when self-reports are unavailable or unreliable.

Self-report-based pain assessment furnishes significant clinical information and is valid in most cases, but it fails in certain scenarios [8]. For instance, patients with advanced dementia, communication disorders, or intellectual disabilities, and patients in a coma or a minimally conscious state, may not be able to provide sufficient and accurate self-reports of pain [9,10,11]. Inaccurate assessment of these patients may lead to suboptimal or improper pain management, which in turn can cause further clinical complications, such as depression and psychological distress [12]. In addition, self-reported pain assessment is highly subjective and very difficult to acquire continuously or in real time. Therefore, more objective metrics of human pain are needed in clinical practice to improve pain management, reduce risk factors, and support more valid and reliable diagnosis.

Previous studies have mainly focused on detecting and classifying pain by analyzing patterns of human behavior, such as facial expressions [13,14,15], body motions [16], and vocalizations [17]. Behavior-based assessment techniques are not suitable for patients with paralysis or other motor disorders that affect their behavior. Observation of an individual’s face can reveal significant information about their affective state, including pain. However, pain detection using facial expressions requires tracking a particular region of the subject’s face, which may be complex and tedious in some clinical environments. In addition, facial expression-based pain assessment is difficult to apply to subjects in a prone position, and head posture and movement tracking are additional issues to consider. Given these problems, research has also shifted toward physiological signal-based pain assessment approaches that do not depend on the individual’s behavior [18,19,20].

Existing research includes pain analysis through various physiological signals, such as electrodermal activity (EDA) [21], heart rate variability [22,23], electroencephalography (EEG) [24], electromyography (EMG) [25], and functional near-infrared spectroscopy (fNIRS) [4,7,26]. Most existing studies that employ physiological signals for pain assessment provide a qualitative analysis that differentiates between pain and no-pain conditions using machine learning [27]. In this context, machine learning has been fundamental to the successful use of physiological signals in pain research; for a comprehensive review of machine learning in pain research, the interested reader is referred to [28]. The results of these studies demonstrate that classifying and identifying human pain from physiological signals with machine learning is feasible.

Although current studies have shown promising results, there is still no accepted pain detection and classification method based on low-cost and convenient physiological signals. With the growing presence of wearable technology, it is possible to design a computer-aided diagnosis system for pain assessment using non-invasive wearable sensors. Photoplethysmography (PPG) is a widely available sensing modality for physiological monitoring that provides blood volume pulse (BVP) information. Compared to impedance plethysmography, PPG does not need skin contact [29]. BVP captures even slight variations induced by the modulation of skin tissue perfusion, using visible or infrared (IR) sensing technology [30]. Compared to multi-channel systems (e.g., EEG, fNIRS), PPG is computationally efficient, as it has only one channel. Another advantage of PPG is that it is less obtrusive than other sensing technologies (e.g., ECG and EMG) in clinical settings, as it can be placed on the earlobe or the fingers.

Therefore, the objective of this study is to explore variations in pain intensities using BVP signals to develop an efficient pain classification system. The main contributions of this study are listed below:

- A new BVP signal dataset featuring various pain intensities was recorded by inducing pain at different anatomical locations of healthy human subjects.

- The exploration of time, frequency, and morphological features extracted from BVP as a potential indicator of various pain levels.

- The comparison of a wide range of machine learning classification algorithms for recognition of pain in different experimental configurations.

- The experimental analysis demonstrates that the BVP signal is a strong candidate to be employed for pain assessment in clinical settings.

This article is structured as follows: Section 2 “Materials and methods” provides details about the pain dataset and developed methodologies for the assessment of pain BVP signals. Section 3 “Results” illustrates the experimental results of the BVP signal analysis. The discussion of results and comparison with previous studies is also provided in Section 4. Finally, Section 5 concludes this article and provides future research directions.

2. Materials and Methods

2.1. Participants

Twenty-two participants (12 F/10 M) took part in the experiment. Their age ranged from 19 to 36 years old (mean age std). No participants reported a prior history of neurological or psychiatric disorder, a current unstable medical condition, chronic pain, regularly taking medications, or being under medication at the time of testing. Participants were given a detailed explanation of the experimental procedures upon their arrival. Written informed consent was obtained before the start of the experiment. The experimental procedures involving human subjects described in this paper were approved by the University of Canberra’s Human Ethics Committee (number: 11837).

2.2. Experimental Procedure and Dataset

All experiments were conducted at the Human–Machine Interface Laboratory at the University of Canberra, Australia. The participants were seated on a chair in front of a table with both arms resting on the table. The blood volume pulse (BVP) sensor, made by Biosignal plux (Lisbon, Portugal) [31], was placed on the middle finger of the left hand. On the right arm, the electrodes of a transcutaneous electrical nerve stimulation (TENS) machine (Medihightec Medical Co., Ltd., Taiwan) were placed on the inner forearm and on the back of the hand. These two anatomical locations will be used in future work to explore the possibility of identifying the source of pain. The location and intensity of the pain stimuli were counterbalanced to avoid habituation to repeated experimental pain and to avoid confounding due to order effects.

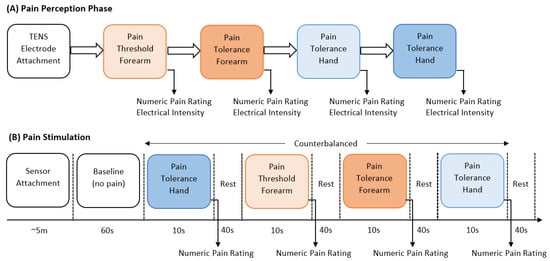

The experiment consisted of two main parts: the identification of individual pain perception and the pain stimulation part. Figure 1 presents a schematic representation of the experimental procedure. In the first part of the experiment, pain perception was assessed using the quantitative sensory testing (QST) protocol [32]; no sensor was used in this part. The QST protocol is used to determine an individual’s pain threshold and pain tolerance. We defined the pain threshold (low pain) as the lowest stimulus intensity at which stimulation becomes painful, and the pain tolerance (high pain) as the stimulus intensity at which the pain becomes unbearable [7]. The participants were exposed to a gradually increasing stimulus and were instructed to verbally rate the pain intensity (0 = ‘no pain’, 10 = ‘maximum pain’) when the stimulation became painful (pain threshold) and again when it became unbearable (pain tolerance). The TENS intensities at which the pain threshold and pain tolerance occurred were recorded and used as the stimulation intensities in the second part.

Figure 1.

Schematic representation of stimulation and perception of pain.

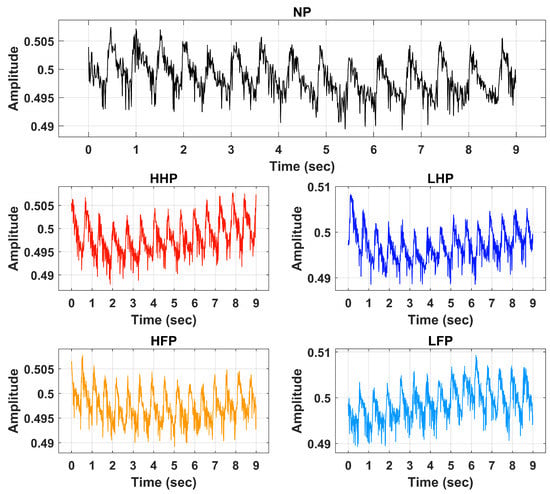

In the second part, pain intensity and anatomical location were studied. Before the start of the electrical pain stimulation sequence, the physiological sensor was placed on the participant’s hand. At the start of the experiment, a 60 s baseline period was recorded, during which the participants were instructed to remain calm; this baseline period served as the no-pain condition for the classification tasks. After that, the counterbalanced design alternated stimulus intensity (low or high) and location (forearm or hand). Six repetitions with a duration of 10 s for each stimulus were recorded. Immediately after each stimulus, the participants were asked to verbally rate the stimulation using the same scale (0 = no pain, 10 = maximum pain) used during the pain perception part. After rating the pain intensity, a 40 s rest period was provided to allow all physiological signals to return to baseline. Each experiment lasted approximately one hour (30 min for preparation and individual pain perception, and 30 min for the pain stimulation experiment). Table 1 summarizes the dataset employed in this study. Raw BVP signals of No Pain (NP), High Hand Pain (HHP), High Forearm Pain (HFP), Low Hand Pain (LHP), and Low Forearm Pain (LFP) are shown in Figure 2.

Table 1.

Summary of the dataset used for the detection and classification of pain.

Figure 2.

Raw BVP signals of No Pain (NP), High Hand Pain (HHP), High Forearm Pain (HFP), Low Hand Pain (LHP), and Low Forearm Pain (LFP).

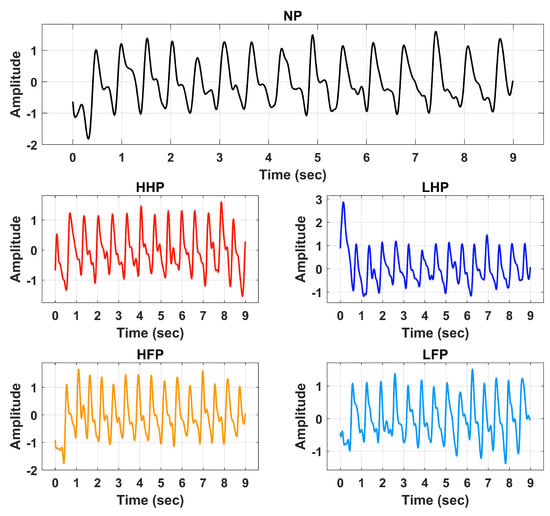

2.3. BVP Signal Preprocessing

Signal preprocessing is a crucial step in signal analysis and classification frameworks. Preprocessing removes unwanted frequency components and offsets introduced during data recording. In this research, the BVP signals of the various pain levels were first preprocessed with a 4th-order IIR band-pass filter with a passband of 0.5 Hz to 5 Hz. The filter suppresses the DC offset contained in the input signal and efficiently attenuates high-frequency components and harmonics. Figure 3 illustrates the preprocessed BVP signals of NP, HHP, HFP, LHP, and LFP.
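As an illustration, the band-pass step can be sketched in Python with SciPy. This is a minimal sketch under stated assumptions: the IIR family is not specified in the text, so a Butterworth design is assumed; the function name `bandpass_bvp` and the zero-phase (forward-backward) filtering are our choices; and the sampling rate must be supplied by the user because it is not given here. SciPy's `butter` doubles the order for band-pass designs, so `order=2` yields a 4th-order band-pass filter under that convention.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

def bandpass_bvp(x, fs, low_hz=0.5, high_hz=5.0, order=2):
    """Zero-phase Butterworth band-pass filter for a raw BVP trace (sketch).

    Assumption: SciPy doubles `order` for band-pass designs, so order=2
    gives a 4th-order band-pass filter.
    """
    # Second-order sections are numerically safer than (b, a) coefficients.
    sos = butter(order, [low_hz, high_hz], btype="bandpass", fs=fs, output="sos")
    # Forward-backward filtering removes phase distortion; the 0.5 Hz
    # lower edge removes the DC offset.
    return sosfiltfilt(sos, np.asarray(x, dtype=float))
```

Zero-phase filtering keeps pulse landmarks in place, which matters for shape-based features; a causal filter (e.g., `sosfilt`) would be needed for real-time use.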

Figure 3.

Processed signals of No Pain (NP), High Hand Pain (HHP), High Forearm Pain (HFP), Low Hand Pain (LHP), and Low Forearm Pain (LFP).

2.4. Feature Extraction

Features express the most important patterns of the signal data in binary, categorical, or continuous form. The objective of the feature extraction step is to represent the signal data in a simplified and compact manner while retaining as much information from the raw data as possible. Feature extraction is performed after the preprocessing and denoising operations. The extracted features are intended to show small differences within the same class and large differences between the centroids of different classes. In this study, we extracted several types of features from the BVP signal to better classify pain and its intensities: time, frequency, and morphological features (explained below). In general, pain causes sympathetic nervous system activation, leading to vasoconstriction and reduced blood flow to the affected area. This can result in a decrease in BVP amplitude in the affected area, indicating reduced blood volume. This variation in signal amplitude is better captured through time and morphological features. Frequency domain analysis of BVP signals can provide valuable insights into changes in the signal due to pain stimulation, particularly in terms of sympathetic and parasympathetic activity. For each classification task, the analysis is performed using each individual feature set as well as their different combinations in order to determine the optimal features.
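The search over individual feature sets and their combinations can be sketched as an enumeration of every non-empty subset of feature groups. The helper `feature_combinations` is hypothetical and assumes each group is a NumPy array with one row per BVP segment; for three groups it yields the seven sets Time, Frq, Mrp, Time+Frq, Time+Mrp, Frq+Mrp, and Time+Frq+Mrp. The group widths below are placeholders, not the study's feature counts.

```python
from itertools import combinations

import numpy as np

def feature_combinations(groups):
    """Yield (name, matrix) for every non-empty combination of feature groups.

    `groups` maps a group name (e.g. "Time") to an array of shape
    (n_segments, n_features_in_group); column-stacking keeps rows aligned.
    """
    names = list(groups)
    for r in range(1, len(names) + 1):
        for combo in combinations(names, r):
            yield "+".join(combo), np.hstack([groups[n] for n in combo])

# Placeholder group widths, chosen only for illustration.
n = 5
groups = {"Time": np.zeros((n, 2)), "Frq": np.zeros((n, 3)), "Mrp": np.zeros((n, 4))}
for name, X in feature_combinations(groups):
    print(name, X.shape)
```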

2.4.1. Time Features

The BVP waveform is normally divided into two phases. The rising part of the wave is called the anacrotic phase, and the falling edge of the pulse is known as the catacrotic phase. The first phase is related to systole, and the second phase is concerned with diastole and wave reflections from the periphery. A set of twelve time-domain features, namely mean (M), variance (V), skewness (S), kurtosis (K), crest factor (CF), shape factor (SF), impulse factor (IF), margin factor (MF), Shannon energy (SE), log energy (LE), mobility (Mob), and complexity (Comp), was extracted from the BVP signal to represent the pain and non-pain signals in a compact manner [33]. Table 2 provides a mathematical description of all time-domain features used in this work.
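Because the formulas of Table 2 are not reproduced in this text, the sketch below uses common textbook definitions of the twelve time-domain features; the exact normalizations in the study may differ (for example, Shannon and log energy are sometimes averaged rather than summed, and the margin factor is also called the clearance factor). The function name `time_features` and the `eps` guard against log(0) are assumptions. Mobility and complexity follow Hjorth's definitions.

```python
import numpy as np
from scipy.stats import kurtosis, skew

def _hjorth_mobility(sig):
    # Hjorth mobility: sqrt(var(first difference) / var(signal))
    return np.sqrt(np.var(np.diff(sig)) / np.var(sig))

def time_features(x, eps=1e-12):
    """Twelve time-domain descriptors of one BVP segment (common definitions)."""
    x = np.asarray(x, dtype=float)
    ax = np.abs(x)
    x2 = x ** 2
    rms = np.sqrt(np.mean(x2))
    peak = ax.max()
    mob = _hjorth_mobility(x)
    return {
        "M": np.mean(x),
        "V": np.var(x),
        "S": skew(x),
        "K": kurtosis(x, fisher=False),              # Pearson kurtosis (3 for Gaussian)
        "CF": peak / rms,                            # crest factor
        "SF": rms / np.mean(ax),                     # shape factor
        "IF": peak / np.mean(ax),                    # impulse factor
        "MF": peak / np.mean(np.sqrt(ax)) ** 2,      # margin (clearance) factor
        "SE": -np.sum(x2 * np.log(x2 + eps)),        # Shannon energy
        "LE": np.sum(np.log(x2 + eps)),              # log energy
        "Mob": mob,                                  # Hjorth mobility
        "Comp": _hjorth_mobility(np.diff(x)) / mob,  # Hjorth complexity
    }
```

For a pure sinusoid, the crest factor approaches √2, the shape factor approaches π/(2√2), and the complexity approaches 1, which makes a convenient sanity check.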

Table 2.

Mathematical description of time and spectral features used in this work.

2.4.2. Frequency Features

The Fourier transform maps the preprocessed BVP signal to the frequency domain and exposes its spectral information, which helps to extract discriminative features from BVP signals recorded under various pain conditions. In this study, we extracted a set of twelve frequency domain features, namely spectral flux (SpF), spectral crest (SpC), spectral flatness (SpFt), spectral centroid (SpCent), spectral kurtosis (SpK), spectral spread (SpSp), spectral roll-off (SpR), spectral slope (SpS), spectral decrease (SpD), spectral entropy (SpE), and mean frequency (SpM) [34]. The mathematical formulation of all spectral features is provided in Table 2.
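Similarly, a subset of the spectral features can be computed from a one-sided FFT spectrum. This sketch assumes commonly used definitions rather than the formulas of Table 2: the centroid is weighted by the magnitude spectrum, the mean frequency and spread by the power spectrum, the roll-off point is taken at 85% of the total power, and entropy is normalized to [0, 1]. Spectral flux is omitted because it requires a framed (short-time) spectrum. The name `spectral_features` is hypothetical.

```python
import numpy as np

def spectral_features(x, fs, rolloff=0.85, eps=1e-12):
    """A subset of spectral descriptors for one BVP segment (common definitions)."""
    x = np.asarray(x, dtype=float)
    mag = np.abs(np.fft.rfft(x))                # one-sided magnitude spectrum
    power = mag ** 2
    f = np.fft.rfftfreq(len(x), d=1.0 / fs)     # bin frequencies in Hz
    p = power / (power.sum() + eps)             # power as a probability distribution
    mean_freq = np.sum(f * p)                   # power-weighted mean frequency
    idx = min(int(np.searchsorted(np.cumsum(p), rolloff)), len(f) - 1)
    return {
        "SpCent": np.sum(f * mag) / (mag.sum() + eps),
        "SpM": mean_freq,
        "SpSp": np.sqrt(np.sum((f - mean_freq) ** 2 * p)),
        "SpFt": np.exp(np.mean(np.log(power + eps))) / (np.mean(power) + eps),
        "SpC": power.max() / (np.mean(power) + eps),
        "SpE": -np.sum(p * np.log2(p + eps)) / np.log2(len(p)),
        "SpR": f[idx],                          # frequency below which 85% of power lies
    }
```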

2.4.3. Morphological Features

Morphological features represent the shape characteristics of the BVP signal in the no-pain and various pain categories. Previous analysis has shown significant differences in the dicrotic notch portion of the BVP signal across various cardiac conditions [35]. In this study, we extracted a group of twelve morphological features. Table 3 provides complete details of the morphological features used in this study.
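Table 3 lists the actual morphological features; the sketch below only illustrates how pulse-level landmarks can be located with SciPy's `find_peaks` and turned into a few generic shape descriptors (peak-to-onset amplitude, rise time over the anacrotic phase, and inter-beat interval). The function name `pulse_morphology`, the 0.3 s minimum peak spacing, and the choice of descriptors are assumptions, and dicrotic notch detection is not attempted.

```python
import numpy as np
from scipy.signal import find_peaks

def pulse_morphology(x, fs, min_interval_s=0.3):
    """Generic pulse-shape descriptors from systolic peaks and preceding troughs."""
    x = np.asarray(x, dtype=float)
    dist = max(1, int(min_interval_s * fs))      # minimum samples between beats
    peaks, _ = find_peaks(x, distance=dist)      # systolic peaks
    troughs, _ = find_peaks(-x, distance=dist)   # pulse onsets (local minima)
    amps, rises = [], []
    for p in peaks:
        onsets = troughs[troughs < p]
        if onsets.size == 0:                     # no onset before the first peak
            continue
        t0 = onsets[-1]
        amps.append(x[p] - x[t0])                # peak-to-onset amplitude
        rises.append((p - t0) / fs)              # anacrotic rise time in seconds
    nan = float("nan")
    ibi = float(np.mean(np.diff(peaks)) / fs) if len(peaks) > 1 else nan
    return {
        "amplitude": float(np.mean(amps)) if amps else nan,
        "rise_time_s": float(np.mean(rises)) if rises else nan,
        "ibi_s": ibi,                            # mean inter-beat interval
        "pulse_rate_bpm": 60.0 / ibi if len(peaks) > 1 else nan,
    }
```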

Table 3.

Details of morphological features extracted from BVP signals.

2.5. Classification Methods

Detection and classification of pain using the extracted time, frequency, and morphological features were performed using shallow classifiers: support vector machines (SVM), AdaBoost (AB), random forest (RF), fine decision tree (Ftree), k-nearest neighbors (KNN), and different versions of artificial neural networks (ANNs). These algorithms are commonly employed for classification tasks involving biomedical signals [36,37,38].

KNN is a supervised machine learning model typically used for regression and classification applications [33]. KNN determines the class of an input by computing the distance between its features and those of previously seen (training) data points. The output class is predicted from the class labels of the nearest neighbors. KNN has no explicit training stage; all training data are stored and compared with the input features at prediction time, so its prediction cost grows with the size of the training set and can be high. The value of K sets the number of neighbors considered for output prediction. In this work, we employed Fine-KNN (FKNN), in which K is set to 1, and Weighted-KNN (WKNN), which weights each neighbor according to its distance from the input.
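A plain NumPy sketch of distance-weighted KNN voting is shown below. The default of 10 neighbors and the inverse-squared-distance weights are assumptions that mirror a common WKNN configuration; the settings actually used are listed in Table 4. Setting `k=1` reduces it to the Fine-KNN case. The function name `weighted_knn_predict` is hypothetical.

```python
import numpy as np

def weighted_knn_predict(X_train, y_train, X_test, k=10, eps=1e-12):
    """Predict labels by an inverse-squared-distance vote over the k nearest points."""
    X_train = np.asarray(X_train, dtype=float)
    y_train = np.asarray(y_train)
    classes = np.unique(y_train)
    preds = []
    for x in np.asarray(X_test, dtype=float):
        d = np.linalg.norm(X_train - x, axis=1)   # Euclidean distances to all training points
        nn = np.argsort(d)[:k]                    # indices of the k nearest neighbors
        w = 1.0 / (d[nn] ** 2 + eps)              # closer neighbors get larger weights
        votes = [w[y_train[nn] == c].sum() for c in classes]
        preds.append(classes[int(np.argmax(votes))])
    return np.array(preds)
```

Every prediction scans the full training set, which is the computational cost noted above.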

Support vector machine (SVM) is a supervised learning algorithm that uses a hyperplane to separate features of different categories. For two features, the hyperplane reduces to a separating line; in general, it is the decision boundary that SVM places to maximize the margin between two classes. Using the kernel trick, SVM can also separate classes that are not linearly separable: the data are implicitly mapped to a higher-dimensional space through non-linear kernel functions, such as quadratic, cubic, Gaussian, and radial basis functions. In this study, we used Linear-SVM (LSVM), Quadratic-SVM (QSVM), Cubic-SVM (CSVM), Gaussian-SVM (GSVM), and Radial-SVM (RSVM) [36,39,40].
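The kernel trick can be checked numerically for a quadratic kernel. Assuming the polynomial form (x·z + 1)² (one common convention; scaling conventions differ between libraries), the kernel value equals an ordinary dot product in an explicit six-dimensional feature space for two-dimensional inputs, so the SVM never needs to compute that mapping. A Gaussian kernel is included for comparison; its width `sigma` is an illustrative parameter, and all function names are hypothetical.

```python
import numpy as np

def quadratic_kernel(x, z):
    # Polynomial kernel of degree 2 with offset 1, computed in input space
    return (np.dot(x, z) + 1.0) ** 2

def quadratic_feature_map(v):
    # Explicit 6-D map for 2-D inputs whose dot product reproduces quadratic_kernel
    v1, v2 = v
    r2 = np.sqrt(2.0)
    return np.array([1.0, r2 * v1, r2 * v2, v1 ** 2, v2 ** 2, r2 * v1 * v2])

def gaussian_kernel(x, z, sigma=1.0):
    # Similarity that decays with squared Euclidean distance
    diff = np.asarray(x, dtype=float) - np.asarray(z, dtype=float)
    return np.exp(-np.dot(diff, diff) / (2.0 * sigma ** 2))

x, z = np.array([1.0, 2.0]), np.array([3.0, -1.0])
print(quadratic_kernel(x, z))                                      # implicit mapping
print(np.dot(quadratic_feature_map(x), quadratic_feature_map(z)))  # explicit mapping
```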

Ensemble classification algorithms combine numerous classifiers that learn multiple hypotheses for the same problem. RF is constructed from a set of decision tree classifiers, each trained on a random subset of the data and features, and the forest’s prediction is obtained by a majority vote over the trees [41]. The AB (adaptive boosting) ensemble learning algorithm used to classify pain BVP signals combines weak learners over numerous iterations into a single, powerful composite learner [42]. In each round, a new weak learner is added, and the sample-weight vector is adjusted so that the new learner focuses on the observations that were misclassified in the previous round. As a result, an ensemble of weak models is generated that has better overall performance. AB is often more resistant to overfitting than other machine learning classifiers.
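The reweighting loop described above can be sketched as discrete AdaBoost with decision stumps for labels in {-1, +1}. This is an illustrative implementation, not the one used in the study; the weak-learner type, the number of rounds, and the function names are assumptions.

```python
import numpy as np

def _stump_predict(X, j, thr, pol):
    # +1 on one side of the threshold on feature j, -1 on the other
    return np.where(pol * (X[:, j] - thr) >= 0, 1, -1)

def _fit_stump(X, y, w):
    # Exhaustive search for the stump with the lowest weighted error
    best = (0, 0.0, 1, np.inf)
    for j in range(X.shape[1]):
        for thr in np.unique(X[:, j]):
            for pol in (1, -1):
                err = w[_stump_predict(X, j, thr, pol) != y].sum()
                if err < best[3]:
                    best = (j, thr, pol, err)
    return best

def adaboost_fit(X, y, n_rounds=50):
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    w = np.full(len(y), 1.0 / len(y))            # start with uniform sample weights
    model = []
    for _ in range(n_rounds):
        j, thr, pol, err = _fit_stump(X, y, w)
        err = np.clip(err, 1e-12, 1 - 1e-12)
        alpha = 0.5 * np.log((1 - err) / err)    # vote weight of this weak learner
        pred = _stump_predict(X, j, thr, pol)
        w = w * np.exp(-alpha * y * pred)        # up-weight misclassified samples
        w = w / w.sum()
        model.append((alpha, j, thr, pol))
    return model

def adaboost_predict(model, X):
    X = np.asarray(X, dtype=float)
    score = sum(a * _stump_predict(X, j, t, p) for a, j, t, p in model)
    return np.where(score >= 0, 1, -1)           # sign of the weighted vote
```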

Artificial neural networks (ANNs) are used to model complex relationships between inputs and outputs or to find patterns in data [43,44]. Feed-forward neural networks consist of input, hidden, and output layers. The neurons in the input layer receive the features extracted from the BVP signal and pass them to fully connected hidden layers. During training, the weights of the network are adjusted until its loss function is minimized. Information from the hidden layers is passed to the output layer, whose size equals the number of classes in the given dataset. In this study, four neural network structures were used to analyze pain BVP signals. A narrow neural network (NN) consists of a single hidden layer with 10 neurons. A wide neural network (WNN) has a single hidden layer with 100 neurons. A bi-layered neural network (BLNN) has two hidden layers, each with 100 neurons. A tri-layered neural network (TLNN) has three hidden layers, each with 100 neurons. All networks were trained using a backpropagation algorithm that iteratively minimized the mean square error between the outputs of the feed-forward networks and the target labels. The rectified linear unit (ReLU) was used as the activation function, and the iteration limit was set to 1000. In total, 14 baseline ML models were evaluated for BVP-based pain assessment. Default parameters were maintained to avoid building models that were highly optimized for our specific dataset. For brevity, we report results for the five most consistent classification algorithms across all three learning tasks, i.e., QSVM, WKNN, AB, BLNN, and TLNN. The classification parameters of these models are shown in Table 4.
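To make the four architectures concrete, the sketch below lists their hidden-layer sizes, counts trainable parameters, and runs a ReLU forward pass. Training is omitted; the softmax output layer, the He-style random initialization, and all function names are assumptions for illustration only.

```python
import numpy as np

# Hidden-layer sizes of the four structures described in the text
ARCHITECTURES = {"NN": [10], "WNN": [100], "BLNN": [100, 100], "TLNN": [100, 100, 100]}

def n_parameters(n_inputs, hidden, n_classes):
    sizes = [n_inputs, *hidden, n_classes]
    # weights (a*b) plus biases (b) for every fully connected layer
    return sum(a * b + b for a, b in zip(sizes[:-1], sizes[1:]))

def init_mlp(n_inputs, hidden, n_classes, seed=0):
    rng = np.random.default_rng(seed)
    sizes = [n_inputs, *hidden, n_classes]
    return [(rng.normal(0.0, np.sqrt(2.0 / a), size=(a, b)), np.zeros(b))
            for a, b in zip(sizes[:-1], sizes[1:])]

def forward(params, X):
    h = np.asarray(X, dtype=float)
    for W, b in params[:-1]:
        h = np.maximum(0.0, h @ W + b)          # ReLU hidden layers
    W, b = params[-1]
    z = h @ W + b
    z = z - z.max(axis=1, keepdims=True)       # numerically stable softmax
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)    # class probabilities per row

for name, hidden in ARCHITECTURES.items():
    print(name, n_parameters(8, hidden, 3))
```

For example, with 8 input features and 3 classes, the narrow network has 123 parameters while the tri-layered network has 21,403, which illustrates how much capacity the wider and deeper variants add.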

Table 4.

Hyperparameters used for each classifier.

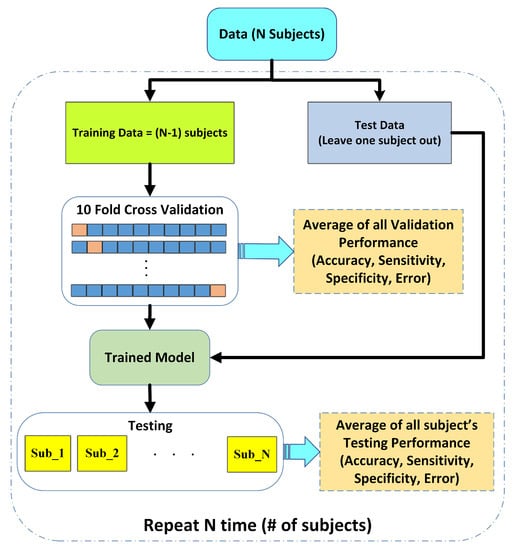

2.6. Leave-One-Subject-Out Cross-Validation

The performance of the proposed pain assessment system is evaluated using leave-one-subject-out cross-validation (LOSOCV). Figure 4 illustrates the complete flow of the LOSOCV scheme and the details of the performance analysis reported in this article. In LOSOCV, the features belonging to a single subject are used as the test set (Ts), while the data of the remaining subjects are combined to form the training set; the procedure is repeated until every subject has served as the test subject once. LOSOCV provides a reliable performance evaluation because the test subject’s data are never seen during the training stage. Mixing the same subject’s data in both the test and training sets gives the classification model prior knowledge of that subject and results in optimistically biased performance. Hence, LOSOCV provides a better assessment of how the algorithms generalize to unseen subjects. Validation is carried out using 10-fold cross-validation (CV).
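The splitting logic of LOSOCV can be sketched as a generator over unique subject IDs. The name `leave_one_subject_out` is hypothetical; scikit-learn's `LeaveOneGroupOut` implements the same scheme when the subject IDs are passed as `groups`.

```python
import numpy as np

def leave_one_subject_out(subject_ids):
    """Yield (subject, train_idx, test_idx) with one held-out subject per fold."""
    subject_ids = np.asarray(subject_ids)
    for s in np.unique(subject_ids):
        test_idx = np.flatnonzero(subject_ids == s)   # all segments of subject s
        train_idx = np.flatnonzero(subject_ids != s)  # every other subject
        yield s, train_idx, test_idx

ids = [1, 1, 2, 2, 2, 3]
for s, tr, te in leave_one_subject_out(ids):
    print(s, tr, te)
```

Because splits are made by subject rather than by segment, no segment of the test subject can leak into the training set.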

Figure 4.

Performance evaluation scheme using leave-one-subject-out cross-validation (LOSOCV).

2.7. Performance Evaluation

Experiments were performed by treating pain BVP signals as the ‘positive class’ and no-pain signals as the ‘negative class’. The overall accuracy (Acc) of the model is defined as follows:

$$\mathrm{Acc} = \frac{TP + TN}{TP + TN + FP + FN}$$

where true positive (TP) denotes pain signals correctly predicted as pain; false positive (FP), no-pain BVP signals misclassified as pain; true negative (TN), no-pain recordings correctly identified as no-pain; and false negative (FN), pain signals incorrectly predicted as no-pain. The standard performance measures of sensitivity (Sen), specificity (Sp), positive predictive value (PPV), negative predictive value (NPV), and F1-score were also used to consolidate the experimental analysis.
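The measures above follow directly from the four confusion-matrix counts. The sketch below uses the standard definitions (F1 written in its equivalent form 2TP/(2TP + FP + FN)); the function name and the NaN return for an empty denominator are assumptions, and the counts in the example are made up for illustration.

```python
def _ratio(num, den):
    # Return NaN instead of raising when a denominator is zero
    return num / den if den else float("nan")

def binary_metrics(tp, fp, tn, fn):
    return {
        "Acc": _ratio(tp + tn, tp + tn + fp + fn),
        "Sen": _ratio(tp, tp + fn),        # recall of the pain (positive) class
        "Sp": _ratio(tn, tn + fp),         # recall of the no-pain (negative) class
        "PPV": _ratio(tp, tp + fp),
        "NPV": _ratio(tn, tn + fn),
        "F1": _ratio(2 * tp, 2 * tp + fp + fn),
    }

print(binary_metrics(tp=8, fp=2, tn=6, fn=4))  # hypothetical counts
```

With the hypothetical counts TP = 8, FP = 2, TN = 6, FN = 4, the function returns Acc = 0.70, Sen ≈ 0.667, Sp = 0.75, PPV = 0.80, NPV = 0.60, and F1 ≈ 0.727.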

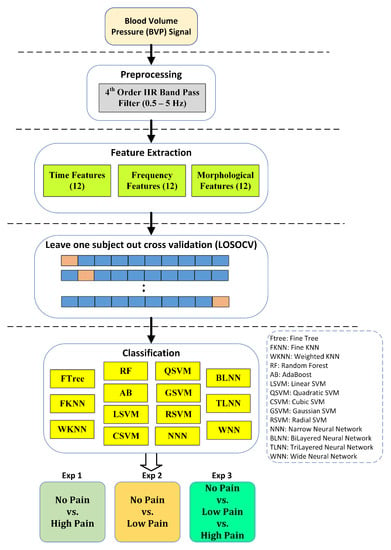

2.8. Overview

Finally, Figure 5 illustrates the flowchart of the steps adopted in this work to develop a pain classification mechanism. In the first step, the blood volume pulse (BVP) signal is denoised using an IIR band-pass filter with a passband of 0.5 Hz to 5 Hz. Next, the denoised BVP signal is passed to the feature extraction stage, where a set of time, frequency, and morphological features is computed. The feature dataset is then divided into training and testing sets using the leave-one-subject-out cross-validation (LOSOCV) strategy. Individual feature sets and their various combinations are used to train well-known classification methods, such as support vector machines, ensemble methods, neural networks, and k-nearest neighbors.

Figure 5.

Design of study for assessment of pain using BVP signatures. Performance results are reported using the five most consistent classifiers.

Three different experiments were performed for better assessment of BVP signals towards the identification of pain. Experiment 1 is a binary problem and aims to classify no pain against high pain. Experiment 2 aims to distinguish no pain vs. low pain classes of BVP signals. Experiment 3 is a multiclass problem and classifies no pain vs. low pain vs. high pain.

3. Results

Our motivation is to investigate the performance of time, frequency, and morphological features extracted from BVP signals with well-known classification techniques. A comprehensive analysis is conducted in three experimental configurations. All experiments were performed in MATLAB 2020 running on a personal laptop (Intel Core i5 with 16 GB RAM). The results of each experiment are discussed in detail in the following sections.

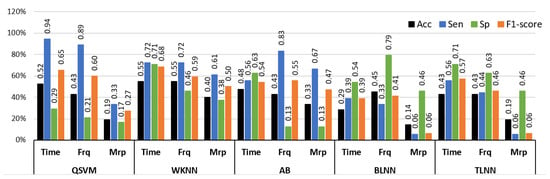

3.1. Experiment 1: No Pain vs. High Pain

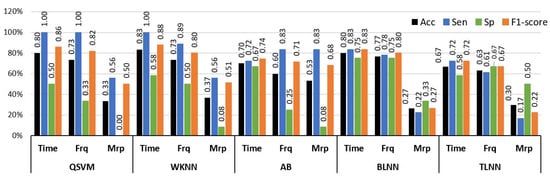

The binary class experiment was designed to distinguish no pain (NP) and high pain (HP) BVP signals, with the HP signals collected from both the hand and forearm stimulation sites. This makes the dataset of BVP signals more diverse and requires the algorithm to detect signatures of pain originating from multiple locations. Time, frequency, and morphological feature sets were first tested separately with well-known classification methods. Figure 6 shows a graphical comparison of the classifiers for each feature set. The best performance of 83.3% accuracy, 100% sensitivity, and 58.3% specificity was obtained with time domain features and the WKNN classifier. Frequency domain features achieved 76.7% accuracy with BLNN and 73.3% with both WKNN and QSVM. Morphological features alone performed worse than time or frequency features.

Figure 6.

Performance evaluation with time, frequency (Frq), and morphological (Mrp) features using different classifiers for Experiment 1 (no pain vs. high pain). (QSVM: Quadratic-SVM, WKNN: Weighted-KNN, AB: AdaBoost, BLNN: bi-layered neural network, TLNN: tri-layered neural network).

The analysis of single-domain features (Figure 6) showed that using these features independently was not adequate to provide discriminative information about pain from BVP signals. Therefore, the experimental analysis was extended to feature sets composed of attributes from different domains. Table 5 provides a comprehensive performance evaluation of these combined feature sets and classifiers for the classification of NP and HP BVP signals.

Table 5.

Performance evaluation with combinations of time, frequency (Frq), and morphological (Mrp) features using different classifiers for Experiment 1 (no pain vs. high pain). (QSVM: Quadratic-SVM, WKNN: Weighted-KNN, AB: AdaBoost, BLNN: Bi-layered Neural Network, TLNN: Tri-layered Neural Network). Bold text indicates the best results.

The highest performance of 96.6% accuracy, 100% sensitivity, and 91.6% specificity was obtained with a hybrid feature set that combines attributes computed from the time, frequency, and morphological domains and the TLNN classifier. The same feature set achieved 83.3% mean accuracy with WKNN and 80% with BLNN (Table 5). A combination of time and frequency features yielded 80% mean accuracy with both the QSVM and WKNN classifiers. Adding morphological features to the time and frequency features improved the accuracy of the TLNN model from 73.3% to 96.6%.

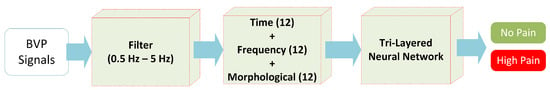

To summarize, Figure 7 shows a simplified view of the classification of HP and NP BVP signals using hybrid features (time+frequency+morphological) with the tri-layered neural network (TLNN).

Figure 7.

The proposed methodology for Experiment 1 (no pain vs. high pain).

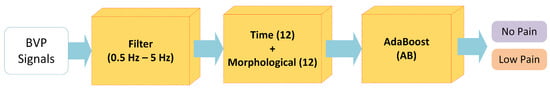

3.2. Experiment 2: No Pain vs. Low Pain

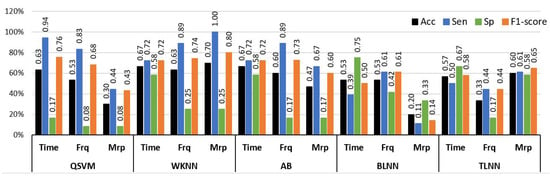

Experiment 2 was designed to analyze the difference between the no pain (NP) and low pain (LP) classes of BVP signals. The LP signals were recorded by stimulating two sites, i.e., the hand and the forearm, which increases the diversity of the data recorded from the same subjects and also makes the classification task more challenging. As in Experiment 1, a comprehensive analysis was performed using time, frequency, and morphological feature sets with a range of machine learning classifiers. The results obtained with individual feature sets are illustrated in Figure 8 in terms of accuracy, sensitivity, and specificity. The highest single-feature-set performance for distinguishing NP and LP BVP signals, 70% accuracy, was obtained with morphological features and the WKNN classifier. The time and frequency domain features achieved 66.6% and 63.3% accuracy with the AB and WKNN classifiers, respectively. Notably, although morphological features with WKNN achieved 70% accuracy and 100% sensitivity, the specificity dropped to 25%. This indicates that morphological features recognize the positive class (LP in our case) better than the negative class. A more balanced performance of 72.2% sensitivity and 58% specificity was obtained with time features and the AB classifier.

Figure 8.

Performance evaluation with time, frequency (Frq), and morphological (Mrp) features using different classifiers for Experiment 2 (No Pain vs. Low Pain). (QSVM: Quadratic-SVM, WKNN: Weighted-KNN, AB: AdaBoost, BLNN: bi-layered neural network, TLNN: tri-layered neural network).

The individual feature analysis (Figure 8) demonstrated that a single type of feature was not sufficient to capture the hidden distinguishing content of BVP signals for NP and LP conditions. Again, the experimental analysis was extended by validating the classification algorithms on hybrid features constructed by combining features from different domains. Table 6 provides a comprehensive comparison of different classifiers with combinations of time, frequency, and morphological features. The highest results of 86.6% accuracy, 100% sensitivity, and 66% specificity were obtained with the AB ensemble classifier and a hybrid feature set consisting of time, frequency, and morphological features of BVP signals. With the QSVM classifier, the time+morphological and frequency+morphological feature sets yielded 83.3% and 80% accuracy, respectively. The time+morphological feature set achieved 73.3% accuracy with the TLNN classifier.

Table 6.

Performance evaluation with combinations of time, frequency (Frq), and morphological (Mrp) features using different classifiers for Experiment 2 (no pain vs. low pain). (QSVM: Quadratic-SVM, WKNN: Weighted-KNN, AB: AdaBoost, BLNN: bi-layered neural network, TLNN: tri-layered neural network). Bold text indicates the best results.

The AB classifier achieved the highest results, outperforming the QSVM, WKNN, BLNN, and TLNN classifiers. Figure 9 summarizes the best-performing methodology for the classification of NP and LP BVP signals.
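The configuration of AB (weak learner, number of boosting rounds) is not described in this section. To illustrate the technique only, the following is a minimal sketch of textbook discrete AdaBoost with single-feature decision stumps as weak learners. The label encoding (NP = -1, LP = +1) and the toy data are hypothetical, and this is not the authors' implementation.

```python
import math


def stump_predict(row, j, thr, polarity):
    """Predict `polarity` when feature j reaches the threshold, else the opposite."""
    return polarity if row[j] >= thr else -polarity


def fit_stump(X, y, w):
    """Exhaustive search for the single-feature threshold stump with the
    lowest weighted error. Returns (error, feature, threshold, polarity)."""
    best = None
    for j in range(len(X[0])):
        for thr in sorted({row[j] for row in X}):
            for polarity in (1, -1):
                err = sum(wi for wi, row, yi in zip(w, X, y)
                          if stump_predict(row, j, thr, polarity) != yi)
                if best is None or err < best[0]:
                    best = (err, j, thr, polarity)
    return best


def adaboost_fit(X, y, n_rounds=10):
    """Discrete AdaBoost; labels in y must be -1 or +1."""
    w = [1.0 / len(X)] * len(X)
    ensemble = []
    for _ in range(n_rounds):
        err, j, thr, pol = fit_stump(X, y, w)
        err = min(max(err, 1e-10), 1 - 1e-10)  # keep the log finite
        if err >= 0.5:
            break  # weak learner no better than chance: stop boosting
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, j, thr, pol))
        # Up-weight misclassified samples, down-weight correct ones, renormalize.
        w = [wi * math.exp(-alpha * yi * stump_predict(row, j, thr, pol))
             for wi, row, yi in zip(w, X, y)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble


def adaboost_predict(ensemble, row):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(a * stump_predict(row, j, thr, pol) for a, j, thr, pol in ensemble)
    return 1 if score >= 0 else -1


# Toy 1-D data (hypothetical): +1 only inside an interval, which no single
# stump can represent but three boosted stumps can.
X_toy = [[0], [1], [2], [3], [4], [5]]
y_toy = [-1, -1, 1, 1, 1, -1]
model = adaboost_fit(X_toy, y_toy, n_rounds=3)
print([adaboost_predict(model, row) for row in X_toy])  # prints: [-1, -1, 1, 1, 1, -1]
```

Each round reweights the training samples so that the next stump concentrates on the samples the current ensemble gets wrong. This is why a combination of individually weak rules can fit a pattern that none of them captures alone.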

Figure 9.

The proposed methodology for Experiment 2 (no pain vs. low pain).

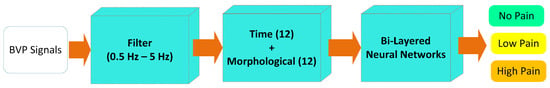

3.3. Experiment 3: No Pain vs. Low Pain vs. High Pain

This experiment was designed to distinguish between two pain levels (LP and HP) and the baseline (NP) using BVP signals. The HP class consists of BVP recordings of High Hand Pain (HHP) and High Forearm Pain (HFP), and the LP class consists of BVP recordings of Low Hand Pain (LHP) and Low Forearm Pain (LFP). As before, the preprocessed BVP signals for these categories were analyzed by computing time, frequency, and morphological features and classifying them with a range of machine learning models.
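The class definitions above pool two stimulation sites into each pain class. A minimal sketch of that label mapping follows. The condition codes are the abbreviations used in the text. The one-recording-per-condition layout in the usage line is a hypothetical assumption that this section does not state. Under that layout, pooling would give the LP and HP classes twice as many recordings as NP, which is worth checking when interpreting three-class accuracy.

```python
# Grouping taken from the text: LHP + LFP -> LP, HHP + HFP -> HP.
CONDITION_TO_CLASS = {
    "NP": "NP",    # baseline, no stimulation
    "LHP": "LP",   # low hand pain
    "LFP": "LP",   # low forearm pain
    "HHP": "HP",   # high hand pain
    "HFP": "HP",   # high forearm pain
}


def class_counts(conditions):
    """Count recordings per pooled class (NP, LP, HP)."""
    counts = {"NP": 0, "LP": 0, "HP": 0}
    for condition in conditions:
        counts[CONDITION_TO_CLASS[condition]] += 1
    return counts


# Hypothetical: one recording per condition for a single subject.
print(class_counts(["NP", "LHP", "LFP", "HHP", "HFP"]))
# prints: {'NP': 1, 'LP': 2, 'HP': 2}
```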

Figure 10 compares the individual feature sets across the QSVM, WKNN, AB, BLNN, and TLNN classifiers. The highest accuracy, 54.7%, was obtained with time features and the WKNN classifier. Time features with QSVM gave comparable results, with 52.3% accuracy for identifying NP, LP, and HP BVP signals. Frequency domain features matched the best time-domain result, also achieving 54.7% accuracy with the WKNN classifier. The individual feature analysis showed that time and frequency domain features have better recognition capability than morphological features.
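WKNN is a k-nearest-neighbour classifier in which closer neighbours carry more weight in the vote. This section states neither the number of neighbours nor the weighting function. The minimal sketch below therefore assumes Euclidean distance and squared-inverse-distance weights, and the default k=10 is a hypothetical choice. The toy example shows the practical difference from a plain majority vote.

```python
import math
from collections import defaultdict


def weighted_knn_predict(train, query, k=10):
    """Distance-weighted KNN: each of the k nearest neighbours votes for its
    label with weight 1 / d**2 (squared-inverse Euclidean distance)."""
    neighbours = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    scores = defaultdict(float)
    for features, label in neighbours:
        d = math.dist(features, query)
        if d == 0.0:
            return label  # query coincides with a training point
        scores[label] += 1.0 / d ** 2
    return max(scores, key=scores.get)


# Two close class-A points outvote three distant class-B points, whereas an
# unweighted 5-NN majority vote would return "B".
train = [((0.0, 0.1), "A"), ((0.1, 0.0), "A"),
         ((1.0, 1.0), "B"), ((1.1, 1.0), "B"), ((1.0, 1.1), "B")]
print(weighted_knn_predict(train, (0.2, 0.2), k=5))  # prints: A
```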

Figure 10.

Performance evaluation with time, frequency (Frq), and morphological (Mrp) features using different classifiers for Experiment 3 (no pain vs. low pain vs. high pain). (QSVM: Quadratic-SVM, WKNN: Weighted-KNN, AB: AdaBoost, BLNN: bi-layered neural network, TLNN: tri-layered neural network).

To improve the multiclass classification of NP, LP, and HP BVP signals, time, frequency, and morphological features were further examined as hybrid features combining multiple domains. Table 7 summarizes the results for multilevel pain assessment using combinations of two or more feature sets with the QSVM, WKNN, AB, BLNN, and TLNN classifiers. The best overall performance, 69% accuracy, 83.3% sensitivity, and 75% specificity, was obtained using the combination of time and morphological features with the BLNN classifier. The combination of time+frequency+morphological features gave somewhat lower accuracy: 64% with WKNN and 61% with TLNN.
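Sensitivity and specificity are binary notions, and this section does not state how they were computed for the three-class problem. One common convention, assumed here, is one-vs-rest: each class in turn is treated as positive, and the per-class values are macro-averaged. The confusion matrix below is hypothetical and is not taken from Table 7.

```python
def one_vs_rest_metrics(cm, labels):
    """Accuracy plus macro-averaged one-vs-rest sensitivity and specificity.

    cm[i][j] = number of segments of true class i predicted as class j.
    """
    total = sum(sum(row) for row in cm)
    per_class = {}
    for k, label in enumerate(labels):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                    # true k, predicted another class
        fp = sum(row[k] for row in cm) - tp     # predicted k, true another class
        tn = total - tp - fn - fp
        per_class[label] = (tp / (tp + fn), tn / (tn + fp))
    accuracy = sum(cm[k][k] for k in range(len(labels))) / total
    macro_sens = sum(s for s, _ in per_class.values()) / len(labels)
    macro_spec = sum(p for _, p in per_class.values()) / len(labels)
    return accuracy, macro_sens, macro_spec, per_class


# Hypothetical confusion matrix; rows/columns ordered NP, LP, HP.
cm = [[8, 2, 0],
      [2, 6, 2],
      [0, 3, 7]]
acc, sens, spec, per_class = one_vs_rest_metrics(cm, ["NP", "LP", "HP"])
print(f"acc={acc:.3f} macro_sens={sens:.3f} macro_spec={spec:.3f}")
```

Because each one-vs-rest "negative" class pools two of the three classes, per-class specificity tends to be higher than sensitivity even when overall accuracy is modest.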

Table 7.

Performance evaluation with combinations of time, frequency (Frq), and morphological (Mrp) features using different classifiers for Experiment 3 (No Pain vs. Low Pain vs. High Pain). (QSVM: Quadratic-SVM, WKNN: Weighted-KNN, AB: AdaBoost, BLNN: bi-layered neural network, TLNN: tri-layered neural network). Bold text indicates the best results.

Figure 11 illustrates the best-performing framework overall, which consists of the filtering stage, the combination of time and morphological features, and the BLNN classifier.

Figure 11.

The proposed methodology for Experiment 3 (no pain vs. low pain vs. high pain).

4. Discussion

In this study, we employed different experimental configurations for the assessment of pain intensities using BVP signals. BVP signals were analyzed with various time, frequency, and morphological features using a diverse range of classification methods. A summary of the best results for all experiments is provided in Table 8.

Table 8.

Summary of best-performing features and classification methods for all experiments. Experiment 1: no pain vs. high pain, Experiment 2: no pain vs. low pain, Experiment 3: no pain vs. low pain vs. high pain. Bold text indicates the best results.

In Experiment 1 (NP vs. HP), the best result of 96.6% accuracy was obtained using a hybrid of time, frequency, and morphological features with the TLNN classifier. Direct comparisons with other studies are difficult because of differences in experimental conditions (e.g., thermal, mechanical, or electrical stimuli), BVP acquisition systems, population demographics, validation methods, and classification models. Nevertheless, our results compare favorably with those of similar studies. For example, Cao et al. [45] classified no pain versus high-intensity pain using PPG signals and reported an accuracy of 79.4%, using empirical mode decomposition-based features combined with an SVM classifier. Thiam et al. [46] reported 66% accuracy for classifying no pain (baseline) against high-intensity pain using respiratory signals. Our framework's 96.6% accuracy is therefore 17.2 and 30.6 percentage points higher than the results reported in [45] and [46], respectively. Notably, [46] used 65 features, whereas our framework used 36.
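The gains over [45] and [46] are absolute differences in accuracy, so they are expressed most precisely in percentage points. Relative gains (the difference divided by the earlier result) are a different quantity. The short calculation below makes both explicit using only the accuracies quoted in the preceding paragraph.

```python
def improvement(ours, theirs):
    """Absolute gain in percentage points and relative gain in percent."""
    points = ours - theirs
    relative = 100 * points / theirs
    return round(points, 1), round(relative, 1)


ours_exp1 = 96.6  # Experiment 1 (NP vs. HP), hybrid features + TLNN
for name, theirs in [("Cao et al. [45]", 79.4), ("Thiam et al. [46]", 66.0)]:
    pts, rel = improvement(ours_exp1, theirs)
    print(f"{name}: +{pts} points (+{rel}% relative)")
# prints:
# Cao et al. [45]: +17.2 points (+21.7% relative)
# Thiam et al. [46]: +30.6 points (+46.4% relative)
```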

Experiment 2 was designed to discriminate between the NP and low pain (LP) states using BVP signal features. The proposed method achieved 86.6% accuracy using a combination of time, frequency, and morphological features with the AdaBoost (AB) classifier. Among published studies, Cao et al. [45] reported 81.4% accuracy for no pain versus low-intensity pain using an SVM classifier, and Thiam et al. [46] obtained 50% accuracy for low-intensity pain assessment based on respiratory signals. For the binary classification of no pain and low pain using BVP signals, the proposed method therefore outperformed both studies.

Experiment 3 was designed to explore the discriminating power of BVP signals across multiple pain intensity levels and the baseline (no pain). The proposed method achieved 69% accuracy for the three-class problem (NP vs. LP vs. HP) using a combination of time and morphological features with a BLNN classifier. This is lower than in the two binary experiments; however, this was expected, as the chance-level accuracy for a balanced three-class problem is 1/3 (33.3%), compared with 1/2 (50%) for a balanced binary problem. The lower accuracy may also reflect similarities between BVP signals at different pain intensity levels. To the best of our knowledge, multi-class pain analysis is lacking in the existing research; most studies have followed a binary classification approach in which the baseline (no pain) is compared with various pain levels [45,47].
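Because chance level differs between the binary and three-class problems, raw accuracies are not directly comparable across experiments. The minimal sketch below rescales accuracy as (acc - chance) / (1 - chance) with chance = 1/K for K classes. It assumes balanced classes, which this section does not confirm (the LP and HP classes each pool two stimulation sites). When the true classes are balanced, this rescaling equals Cohen's kappa. Under the same assumption, the 50% binary accuracy reported in [46] for low-intensity pain corresponds to a chance-corrected value of 0.

```python
def chance_corrected(accuracy, n_classes):
    """(acc - chance) / (1 - chance), with chance = 1 / n_classes.

    Equals Cohen's kappa when the true classes are balanced.
    """
    chance = 1.0 / n_classes
    return (accuracy - chance) / (1.0 - chance)


for label, acc, k in [("NP vs. HP", 0.966, 2),
                      ("NP vs. LP", 0.866, 2),
                      ("NP vs. LP vs. HP", 0.69, 3)]:
    print(f"{label}: chance={1 / k:.3f}, "
          f"chance-corrected={chance_corrected(acc, k):.3f}")
```

On this scale, the three-class result (about 0.535) sits closer to the binary results than the raw accuracies suggest.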

Previous research on PPG-based pain assessment has not produced models capable of distinguishing between different pain signatures. This is a crucial gap, given that pain varies in origin, intensity, and duration (e.g., peripheral, visceral, emotional, and phantom pain) [4]. Each pain type exhibits a unique signature and is transmitted to the central nervous system through distinct sensory receptors. Therefore, machine learning models that can differentiate between multiple pain signatures of varying intensity would be more practical in real-life scenarios. This is particularly important for patients who are unable to communicate verbally, such as those in a coma or with advanced dementia, or when the source of pain is unclear [48].

The choice of features and machine learning models strongly affects classification performance. In our research, the best-performing models in all experiments included time and morphological features, highlighting their contribution. Pain typically activates the sympathetic nervous system, which induces vasoconstriction and reduces peripheral blood flow. Consequently, the amplitude of the BVP signal decreases, reflecting a reduction in blood volume. Such amplitude changes are captured more directly by the time and morphological features of the BVP signal, which is consistent with the findings of this study.
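To make the amplitude argument concrete, the sketch below computes two representative features from an idealized pulse waveform: the mean peak-to-trough amplitude (a morphological feature) and the mean inter-beat interval (a time-domain feature). Several assumptions apply. The sinusoidal waveform, the modelling of vasoconstriction as a pure 40% amplitude reduction at an unchanged heart rate, and the 64 Hz sampling rate are all hypothetical simplifications. The study's actual feature set is not listed in this section.

```python
import math


def synthetic_bvp(amplitude, fs=64.0, duration_s=10.0, heart_rate_hz=1.2):
    """Idealized BVP: a pure sinusoid (real pulse waves are not sinusoidal)."""
    n = int(fs * duration_s)
    return [amplitude * math.sin(2 * math.pi * heart_rate_hz * i / fs)
            for i in range(n)]


def pulse_features(x, fs=64.0):
    """Mean peak-to-trough amplitude and mean inter-beat interval (seconds)."""
    peaks = [i for i in range(1, len(x) - 1) if x[i - 1] < x[i] >= x[i + 1]]
    troughs = [i for i in range(1, len(x) - 1) if x[i - 1] > x[i] <= x[i + 1]]
    amplitude = (sum(x[i] for i in peaks) / len(peaks)
                 - sum(x[i] for i in troughs) / len(troughs))
    intervals = [(b - a) / fs for a, b in zip(peaks, peaks[1:])]
    return amplitude, sum(intervals) / len(intervals)


baseline_amp, baseline_ibi = pulse_features(synthetic_bvp(1.0))
# Model vasoconstriction as a 40% amplitude drop at the same heart rate.
pain_amp, pain_ibi = pulse_features(synthetic_bvp(0.6))
print(f"amplitude ratio = {pain_amp / baseline_amp:.2f}")
print(f"inter-beat interval: {baseline_ibi:.3f} s vs {pain_ibi:.3f} s")
```

Because the simulated pain segment differs only in amplitude, the amplitude feature falls to 0.60 of baseline while the inter-beat interval is unchanged. A spectrum-based feature that ignores scale would miss this difference.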

The preliminary results presented in this study are promising; however, the study has some limitations that should be addressed in future research. Confounding factors, such as stress or anxiety, can affect data quality, in particular through anticipation of the upcoming pain stimulus. In future experiments, we will therefore try to minimize participants’ stress as much as possible, for example by asking subjects to keep their eyes closed to avoid pain anticipation [49]. Another limitation is the short duration of the pain stimulation (∼9 s) used in this experiment. In clinical contexts, pain may have variable onset dynamics, so employing stimuli of different durations in future research will be more realistic. In the present study, baseline (untuned) ML classification algorithms were used for the analysis; in future work, we will analyze prediction performance after tuning the classifiers’ hyperparameters. Finally, we will validate the extracted features in more realistic scenarios, examine their physiological significance, and include participants from a broader age range so that the learning model generalizes better to different populations.
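One way to tune hyperparameters without leaking information across subjects is nested leave-one-subject-out (LOSO) validation. For each held-out subject, the hyperparameter is chosen by LOSO on the remaining subjects only, and the held-out subject is used solely for testing. The sketch below illustrates this with a plain k-nearest-neighbour classifier and a hypothetical grid of k values as stand-ins. It is not the authors' pipeline, and the toy data are invented and deliberately well separated.

```python
import math
from collections import Counter


def knn_predict(train, query, k):
    """Plain majority-vote k-nearest-neighbour prediction (Euclidean distance)."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def loso_accuracy(samples, k):
    """Leave-one-subject-out accuracy; samples are (subject, features, label)."""
    correct = 0
    for held_out in sorted({s for s, _, _ in samples}):
        train = [(x, y) for s, x, y in samples if s != held_out]
        test = [(x, y) for s, x, y in samples if s == held_out]
        correct += sum(knn_predict(train, x, k) == y for x, y in test)
    return correct / len(samples)


def nested_loso(samples, k_grid):
    """Outer LOSO for evaluation; inner LOSO (test subject excluded) selects k,
    so no data from the held-out subject influences tuning."""
    correct, chosen = 0, {}
    for held_out in sorted({s for s, _, _ in samples}):
        inner = [item for item in samples if item[0] != held_out]
        best_k = max(k_grid, key=lambda k: loso_accuracy(inner, k))
        chosen[held_out] = best_k
        train = [(x, y) for _, x, y in inner]
        test = [(x, y) for s, x, y in samples if s == held_out]
        correct += sum(knn_predict(train, x, best_k) == y for x, y in test)
    return correct / len(samples), chosen


# Hypothetical toy data: 4 subjects, 2 segments per class, well separated.
samples = []
for subject in range(4):
    samples += [
        (subject, (0.1 * subject, 0.0), "NP"), (subject, (0.1 * subject, 0.2), "NP"),
        (subject, (5.0 + 0.1 * subject, 5.0), "HP"), (subject, (5.0 + 0.1 * subject, 5.2), "HP"),
    ]
accuracy, chosen_k = nested_loso(samples, k_grid=(1, 3, 5))
print(accuracy, chosen_k)  # prints: 1.0 {0: 1, 1: 1, 2: 1, 3: 1}
```

The outer accuracy is an estimate for the whole tuning procedure, not for a single fixed k, which is why the selected k may differ between folds on real data.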

5. Conclusions

In this study, we aimed to assess pain and its intensity using BVP signals in a subject-independent setting. To this end, we collected a new dataset of BVP recordings from healthy individuals who experienced various levels of pain induced at different body locations (hand and forearm). Our findings suggest that BVP signals can achieve good classification performance and may offer a favorable trade-off between computational cost and accuracy compared with other modalities. We conducted several experiments with different pain configurations, using a wide range of features and classifiers. The experiments yielded classification accuracies of 96.6% (no pain vs. high pain), 86.6% (no pain vs. low pain), and 69% (no pain vs. low pain vs. high pain). These findings demonstrate the potential of BVP signals for pain assessment tasks.

In the future, our research group aims to explore more advanced feature descriptors using BVP and other modalities. We will also explore more advanced classification techniques (e.g., deep learning) that may further improve the current results [50]. Our future work will also target pain assessment in non-communicative patients and newborns. We also plan to design a portable embedded system for accurate pain-level classification using wearables.

Author Contributions

M.U.K.: Design of study, Methodology, Software, Analysis, Interpretation of data, Writing—original draft. S.A.: Design of study, Writing—original draft, Methodology, Software, Validation, Investigation. N.H.: Acquisition of data, Data Curation, Resources, Writing—Proofread. C.J.: Data Curation, Resources, Acquisition of data, Project administration. J.L.: Resources, Data Curation, Writing—Proofread. R.F.-R.: Conceptualization, Acquisition of data, Supervision, Project administration, Writing—review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The experimental procedures involving human subjects described in this paper were approved by the University of Canberra’s Human Ethics Committee (Number: 11837).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data that support the findings of this study are available from R.F.-R. upon reasonable request.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- Jain, K.K.; Jain, K.K. The Handbook of Biomarkers; Springer: Berlin/Heidelberg, Germany, 2010.

- Marieb, E.N.; Hoehn, K. Human Anatomy & Physiology; Pearson Education: Upper Saddle River, NJ, USA, 2007.

- Bornhövd, K.; Quante, M.; Glauche, V.; Bromm, B.; Weiller, C.; Büchel, C. Painful stimuli evoke different stimulus–response functions in the amygdala, prefrontal, insula and somatosensory cortex: A single-trial fMRI study. Brain 2002, 125, 1326–1336.

- Fernandez Rojas, R.; Huang, X.; Ou, K.L. A machine learning approach for the identification of a biomarker of human pain using fNIRS. Sci. Rep. 2019, 9, 5645.

- Melzack, R. The McGill pain questionnaire. Anesthesiology 2005, 103, 199–202.

- McCarthy, M., Jr.; Chang, C.H.; Pickard, A.S.; Giobbie-Hurder, A.; Price, D.D.; Jonasson, O.; Gibbs, J.; Fitzgibbons, R.; Neumayer, L. Visual analog scales for assessing surgical pain. J. Am. Coll. Surg. 2005, 201, 245–252.

- Rojas, R.F.; Huang, X.; Ou, K.L. Toward a functional near-infrared spectroscopy-based monitoring of pain assessment for nonverbal patients. J. Biomed. Opt. 2017, 22, 106013.

- Brown, J.E.; Chatterjee, N.; Younger, J.; Mackey, S. Towards a physiology-based measure of pain: Patterns of human brain activity distinguish painful from non-painful thermal stimulation. PLoS ONE 2011, 6, e24124.

- Li, D.; Puntillo, K.; Miaskowski, C. A review of objective pain measures for use with critical care adult patients unable to self-report. J. Pain 2008, 9, 2–10.

- Herr, K.; Bjoro, K.; Decker, S. Tools for assessment of pain in nonverbal older adults with dementia: A state-of-the-science review. J. Pain Symptom Manag. 2006, 31, 170–192.

- Schnakers, C.; Zasler, N.D. Pain assessment and management in disorders of consciousness. Curr. Opin. Neurol. 2007, 20, 620–626.

- Roulin, M.J.; Ramelet, A.S. Pain indicators in brain-injured critical care adults: An integrative review. Aust. Crit. Care 2012, 25, 110–118.

- Lucey, P.; Cohn, J.F.; Matthews, I.; Lucey, S.; Sridharan, S.; Howlett, J.; Prkachin, K.M. Automatically detecting pain in video through facial action units. IEEE Trans. Syst. Man Cybern. Part B (Cybernetics) 2010, 41, 664–674.

- Virrey, R.A.; Liyanage, C.D.S.; Petra, M.I.b.P.H.; Abas, P.E. Visual data of facial expressions for automatic pain detection. J. Vis. Commun. Image Represent. 2019, 61, 209–217.

- Hassan, T.; Seuß, D.; Wollenberg, J.; Weitz, K.; Kunz, M.; Lautenbacher, S.; Garbas, J.U.; Schmid, U. Automatic detection of pain from facial expressions: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1815–1831.

- Young, J.; Siffleet, J.; Nikoletti, S.; Shaw, T. Use of a Behavioural Pain Scale to assess pain in ventilated, unconscious and/or sedated patients. Intensive Crit. Care Nurs. 2006, 22, 32–39.

- Puntillo, K.A.; Morris, A.B.; Thompson, C.L.; Stanik-Hutt, J.; White, C.A.; Wild, L.R. Pain behaviors observed during six common procedures: Results from Thunder Project II. Crit. Care Med. 2004, 32, 421–427.

- Shankar, K.; Subbiah, B.V.; Jackson, D. An empirical approach for objective pain measurement using dermal and cardiac parameters. In Proceedings of the 13th International Conference on Biomedical Engineering, Singapore, 3–6 December 2008; Springer: Berlin/Heidelberg, Germany, 2009; pp. 678–681.

- Treister, R.; Kliger, M.; Zuckerman, G.; Aryeh, I.G.; Eisenberg, E. Differentiating between heat pain intensities: The combined effect of multiple autonomic parameters. Pain® 2012, 153, 1807–1814.

- Chen, J.; Abbod, M.; Shieh, J.S. Pain and stress detection using wearable sensors and devices—A review. Sensors 2021, 21, 1030.

- Kong, Y.; Posada-Quintero, H.F.; Chon, K.H. Real-Time High-Level Acute Pain Detection Using a Smartphone and a Wrist-Worn Electrodermal Activity Sensor. Sensors 2021, 21, 3956.

- Rommel, D.; Nandrino, J.; Jeanne, M.; Logier, R. Heart rate variability analysis as an index of emotion regulation processes: Interest of the Analgesia Nociception Index (ANI). In Proceedings of the 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Diego, CA, USA, 28 August–1 September 2012; IEEE: New York, NY, USA, 2012; pp. 3432–3435.

- Faye, P.M.; De Jonckheere, J.; Logier, R.; Kuissi, E.; Jeanne, M.; Rakza, T.; Storme, L. Newborn infant pain assessment using heart rate variability analysis. Clin. J. Pain 2010, 26, 777–782.

- Chen, D.; Zhang, H.; Kavitha, P.T.; Loy, F.L.; Ng, S.H.; Wang, C.; Phua, K.S.; Tjan, S.Y.; Yang, S.Y.; Guan, C. Scalp EEG-Based Pain Detection Using Convolutional Neural Network. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 30, 274–285.

- Oliveira, M.; Machado, A.R.; Chagas, V.; Granado, T.C.; Pereira, A.A.; Andrade, A.O. On the use of evoked potentials for quantification of pain. In Proceedings of the 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Diego, CA, USA, 28 August–1 September 2012; IEEE: New York, NY, USA, 2012; pp. 1578–1581.

- Rojas, R.F.; Huang, X.; Romero, J.; Ou, K.L. FNIRS approach to pain assessment for non-verbal patients. In Proceedings of the International Conference on Neural Information Processing, Long Beach, CA, USA, 4–9 December 2017; Springer: New York, NY, USA, 2017; pp. 778–787.

- Chu, Y.; Zhao, X.; Han, J.; Su, Y. Physiological signal-based method for measurement of pain intensity. Front. Neurosci. 2017, 11, 279.

- Lötsch, J.; Ultsch, A. Machine learning in pain research. Pain 2018, 159, 623.

- Ling, P.; Siyuan, Y.; Wei, W.; Quan, G.; Bo, G. Assessment of postoperative pain intensity by using photoplethysmography. J. Anesth. 2014, 28, 846–853.

- Argüello-Prada, E.J.; Valencia, R.D.M. On the use of indexes derived from photoplethysmographic (PPG) signals for postoperative pain assessment: A narrative review. Biomed. Signal Process. Control 2023, 80, 104335.

- Hirachan, N.; Mathews, A.; Romero, J.; Rojas, R.F. Measuring cognitive workload using multimodal sensors. In Proceedings of the 2022 44th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Glasgow, UK, 11–15 July 2022; IEEE: New York, NY, USA, 2022; pp. 4921–4924.

- Rolke, R.; Magerl, W.; Campbell, K.A.; Schalber, C.; Caspari, S.; Birklein, F.; Treede, R.D. Quantitative sensory testing: A comprehensive protocol for clinical trials. Eur. J. Pain 2006, 10, 77–88.

- Khan, M.U.; Aziz, S.; Iqtidar, K.; Zaher, G.F.; Alghamdi, S.; Gull, M. A two-stage classification model integrating feature fusion for coronary artery disease detection and classification. Multimed. Tools Appl. 2022, 81, 13661–13690.

- Khan, M.U.; Aziz, S.; Akram, T.; Amjad, F.; Iqtidar, K.; Nam, Y.; Khan, M.A. Expert hypertension detection system featuring pulse plethysmograph signals and hybrid feature selection and reduction scheme. Sensors 2021, 21, 247.

- Elgendi, M. PPG Signal Analysis: An Introduction Using MATLAB®; CRC Press: Boca Raton, FL, USA, 2020.

- Khan, M.U.; Aziz, S.; Iqtidar, K.; Fernandez-Rojas, R. Computer-aided diagnosis system for cardiac disorders using variational mode decomposition and novel cepstral quinary patterns. Biomed. Signal Process. Control 2023, 81, 104509.

- Aziz, S.; Khan, M.U.; Iqtidar, K.; Ali, S.; Remete, A.N.; Javid, M.A. Pulse plethysmograph signal analysis method for classification of heart diseases using novel local spectral ternary patterns. Expert Syst. 2022, 39, e13011.

- Iqtidar, K.; Qamar, U.; Aziz, S.; Khan, M.U. Phonocardiogram signal analysis for classification of Coronary Artery Diseases using MFCC and 1D adaptive local ternary patterns. Comput. Biol. Med. 2021, 138, 104926.

- Khan, M.U.; Aziz, S. A novel pulse plethysmograph signal analysis method for identification of myocardial infarction, dilated cardiomyopathy, and hypertension. Turk. J. Electr. Eng. Comput. Sci. 2021, 29, 962–977.

- Aziz, S.; Awais, M.; Khan, M.U.; Iqtidar, K.; Qamar, U. Classification of cardiac disorders using 1D local ternary patterns based on pulse plethysmograph signals. Expert Syst. 2021, 38, e12664.

- Yang, P.; Wang, D.; Zhao, W.B.; Fu, L.H.; Du, J.L.; Su, H. Ensemble of kernel extreme learning machine based random forest classifiers for automatic heartbeat classification. Biomed. Signal Process. Control 2021, 63, 102138.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Prakarsha, K.R.; Sharma, G. Time series signal forecasting using artificial neural networks: An application on ECG signal. Biomed. Signal Process. Control 2022, 76, 103705.

- Khan, A.; Aziz, S.; Bashir, M.; Khan, M.U. IoT and wireless sensor network based autonomous farming robot. In Proceedings of the 2020 International Conference on Emerging Trends in Smart Technologies (ICETST), Karachi, Pakistan, 26–27 March 2020; IEEE: New York, NY, USA, 2020; pp. 1–5.

- Cao, R.; Aqajari, S.A.H.; Naeini, E.K.; Rahmani, A.M. Objective pain assessment using wrist-based ppg signals: A respiratory rate based method. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Guadalajara, Mexico, 1–5 November 2021; IEEE: New York, NY, USA, 2021; pp. 1164–1167.

- Thiam, P.; Schwenker, F. Multi-modal data fusion for pain intensity assessment and classification. In Proceedings of the 2017 Seventh International Conference on Image Processing Theory, Tools and Applications (IPTA), Montreal, QC, Canada, 28 November–1 December 2017; IEEE: New York, NY, USA, 2017; pp. 1–6.

- Subramaniam, S.D.; Dass, B. Automated nociceptive pain assessment using physiological signals and a hybrid deep learning network. IEEE Sens. J. 2020, 21, 3335–3343.

- Rohel, A.; Bouffard, J.; Patricio, P.; Mavromatis, N.; Billot, M.; Roy, J.S.; Bouyer, L.; Mercier, C.; Masse-Alarie, H. The effect of experimental pain on the excitability of the corticospinal tract in humans: A systematic review and meta-analysis. Eur. J. Pain 2021, 25, 1209–1226.

- Fernandez Rojas, R.; Liao, M.; Romero, J.; Huang, X.; Ou, K.L. Cortical network response to acupuncture and the effect of the hegu point: An fNIRS study. Sensors 2019, 19, 394.

- Rojas, R.F.; Romero, J.; Lopez-Aparicio, J.; Ou, K.L. Pain assessment based on fnirs using bi-lstm rnns. In Proceedings of the 2021 10th International IEEE/EMBS Conference on Neural Engineering (NER), Virtual, Italy, 4–6 May 2021; IEEE: New York, NY, USA, 2021; pp. 399–402.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).