An Indoor Fingerprint Positioning Algorithm Based on WKNN and Improved XGBoost

Abstract

1. Introduction

- To address the shortcomings of the traditional KNN positioning algorithm in practical applications, this study fuses the WKNN algorithm with the XGBoost algorithm to form a positioning algorithm based on WKNN and XGBoost, which reduces the large ranging error induced by external interference.

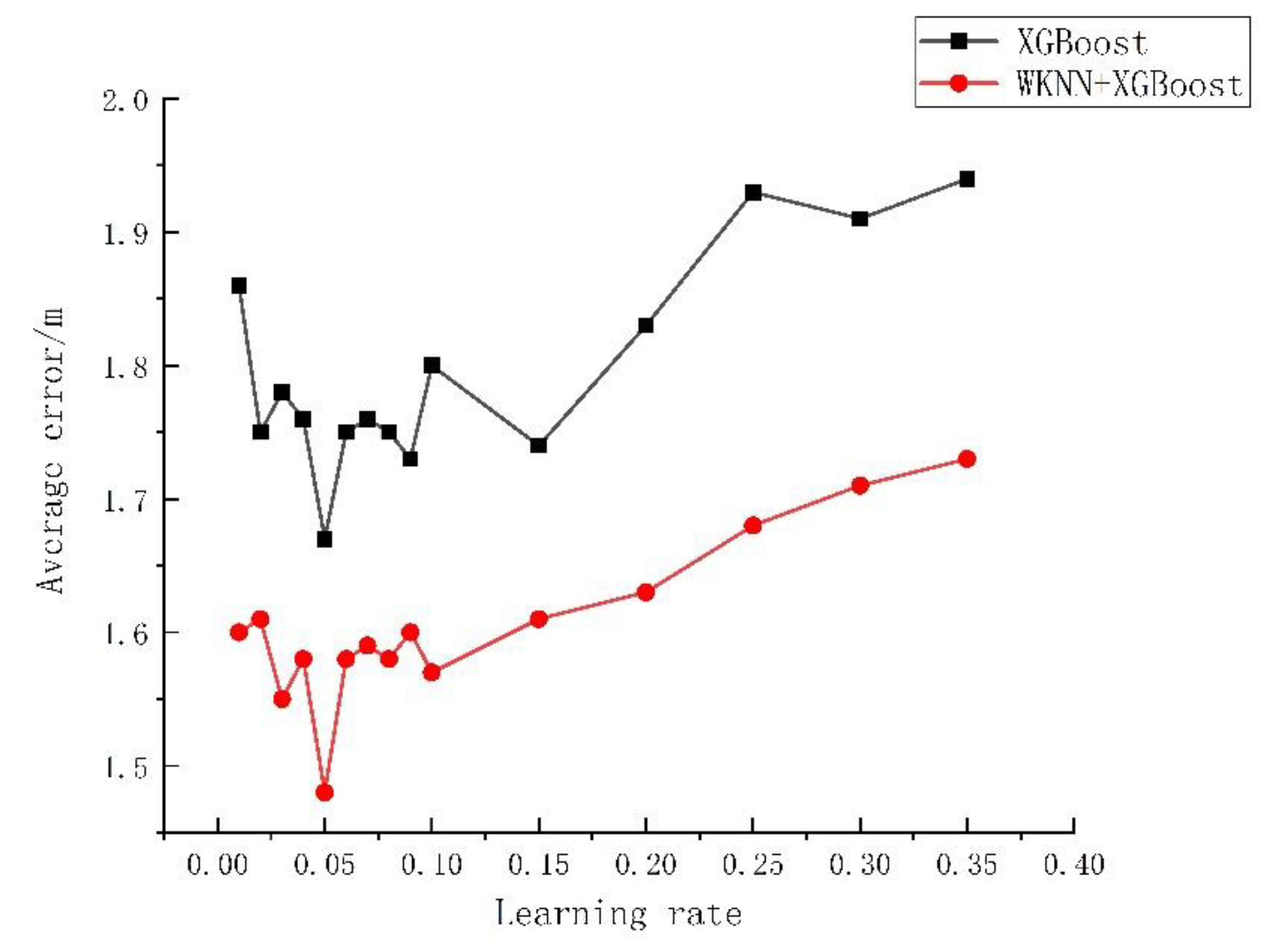

- The influence of the K value, the number of regression trees, the depth of the decision trees, and the learning rate of the XGBoost algorithm was investigated. The number of regression trees, the depth of the decision trees, and the learning rate were then optimized using a genetic algorithm (GA).

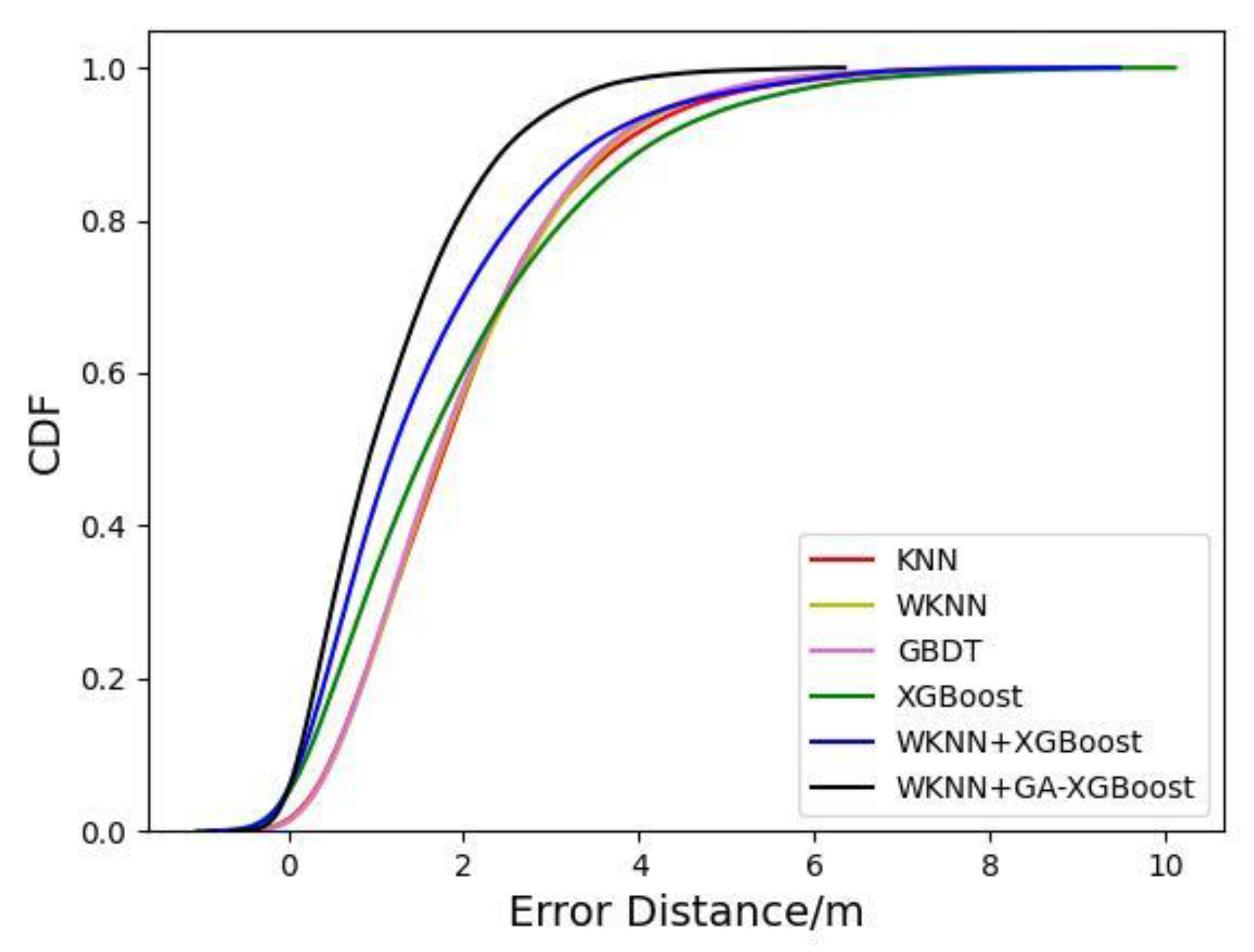

- A verification experiment was designed. The proposed algorithm was then compared with the KNN, WKNN, XGBoost, and WKNN+XGBoost algorithms from the literature in terms of positioning performance, which was evaluated using the positioning error, root-mean-square error (RMSE), mean absolute error (MAE), and cumulative distribution function (CDF) of the error. The results verified the superiority of the proposed algorithm.
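The evaluation metrics named in the last contribution can be sketched as follows. This is a minimal illustration, assuming that RMSE and MAE are computed over the per-point Euclidean positioning error and that the CDF is the empirical fraction of test points within a given error threshold; the function names are hypothetical and not taken from the paper.

```python
import math


def positioning_errors(true_xy, pred_xy):
    """Euclidean distance between each true and predicted position."""
    return [math.dist(t, p) for t, p in zip(true_xy, pred_xy)]


def rmse(errors):
    """Root-mean-square of the positioning errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def mae(errors):
    """Mean of the (non-negative) positioning errors."""
    return sum(errors) / len(errors)


def empirical_cdf(errors, threshold):
    """Fraction of test points whose error is at most `threshold`."""
    return sum(e <= threshold for e in errors) / len(errors)


if __name__ == "__main__":
    # Made-up positions, used only to exercise the functions.
    truth = [(0.0, 0.0), (0.0, 0.0)]
    preds = [(3.0, 4.0), (0.0, 0.0)]
    errs = positioning_errors(truth, preds)
    print(rmse(errs), mae(errs), empirical_cdf(errs, 1.0))
```

A CDF curve that rises faster means that a larger share of test points falls below any given error threshold.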

2. Introduction of Relevant Basic Algorithms

2.1. KNN and WKNN Algorithms

2.2. XGBoost Algorithm

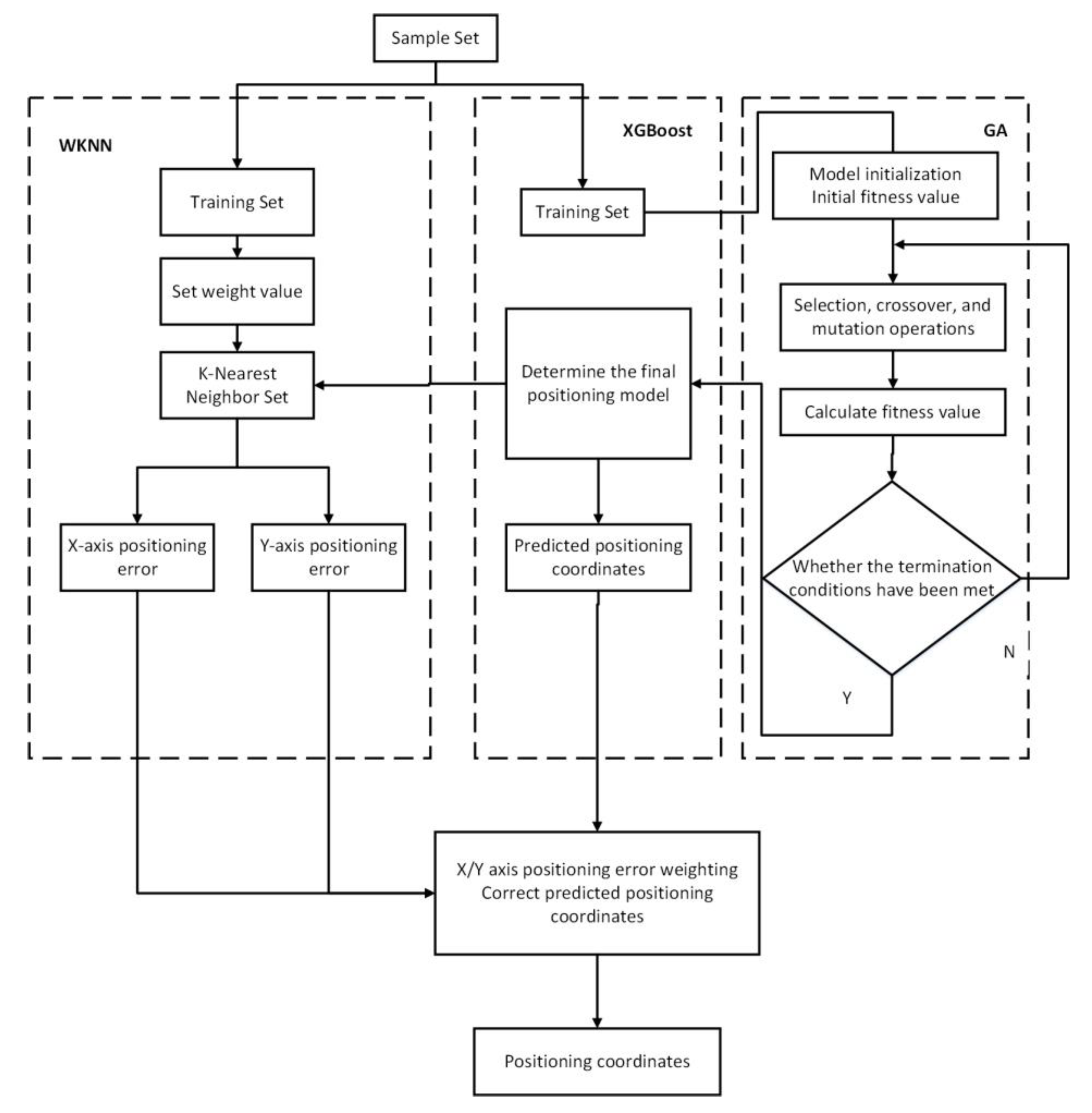

3. Design of Indoor-Fingerprint-Positioning Algorithm Based on WKNN and GA-Optimized XGBoost

3.1. GA

3.2. Implementation of Indoor-Fingerprint-Positioning Algorithm Based on WKNN and GA-Optimized XGBoost

4. Experiments and Result Analysis

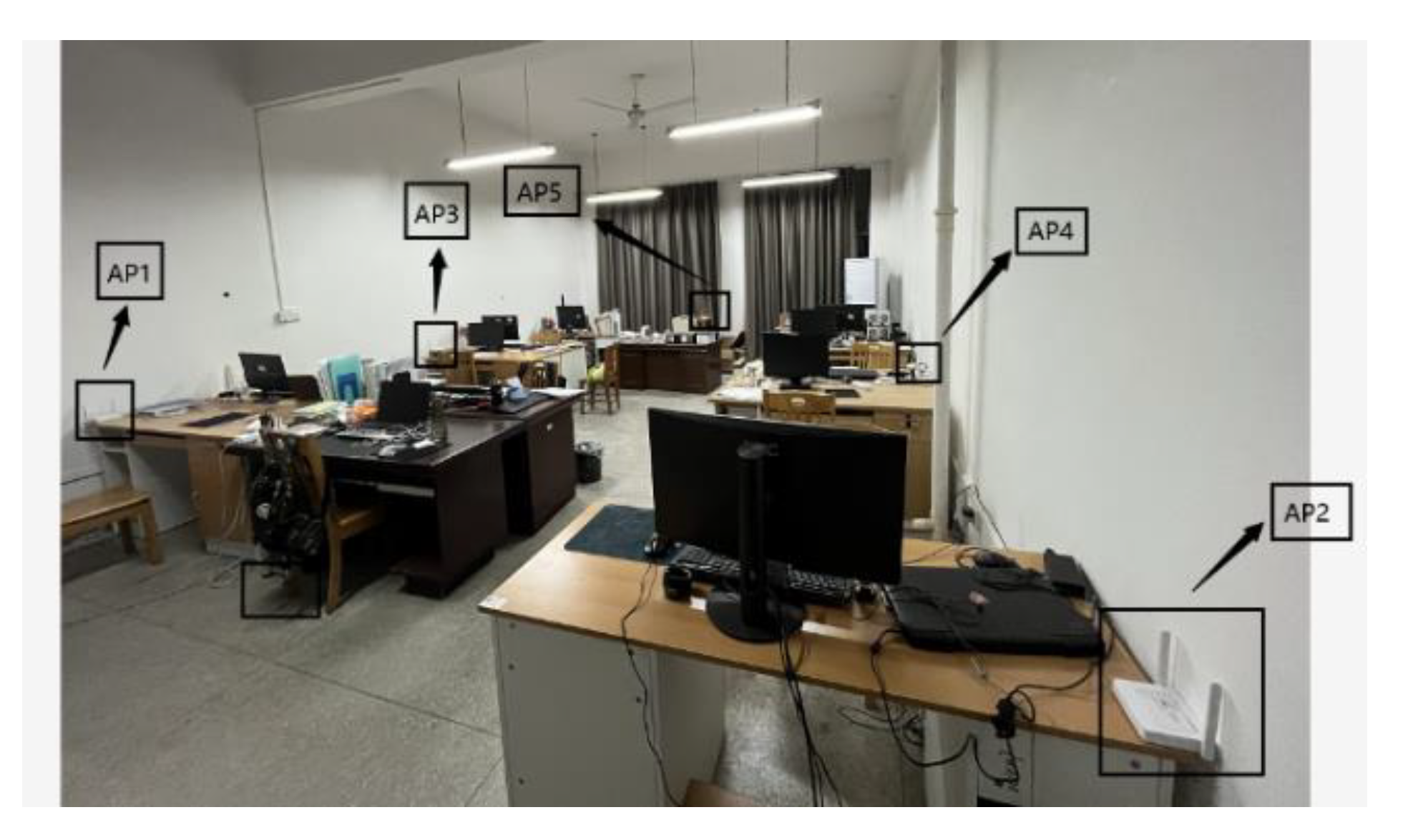

4.1. Acquisition of WiFi Fingerprint Information

4.2. Data Preprocessing of the Fingerprint Database

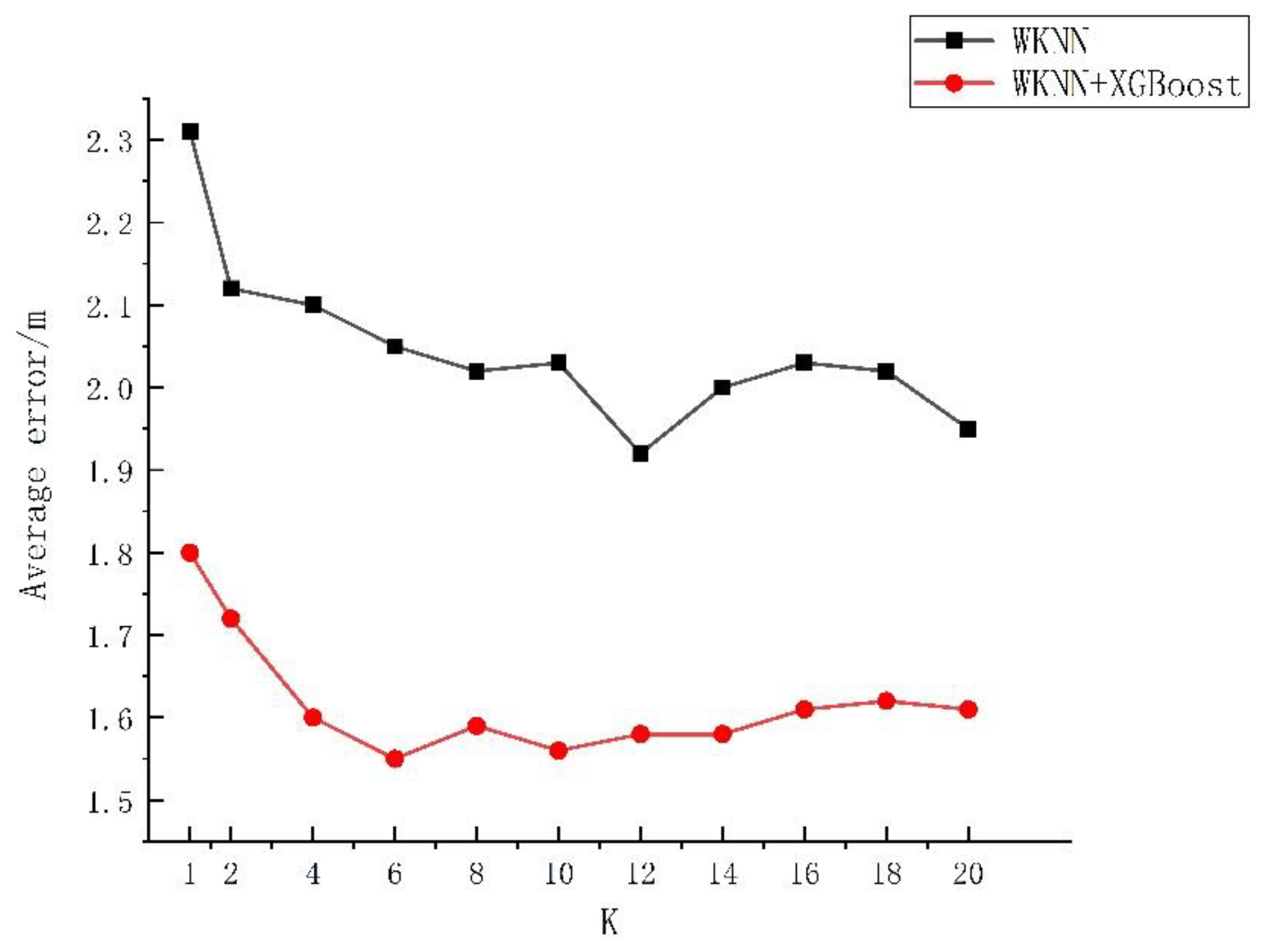

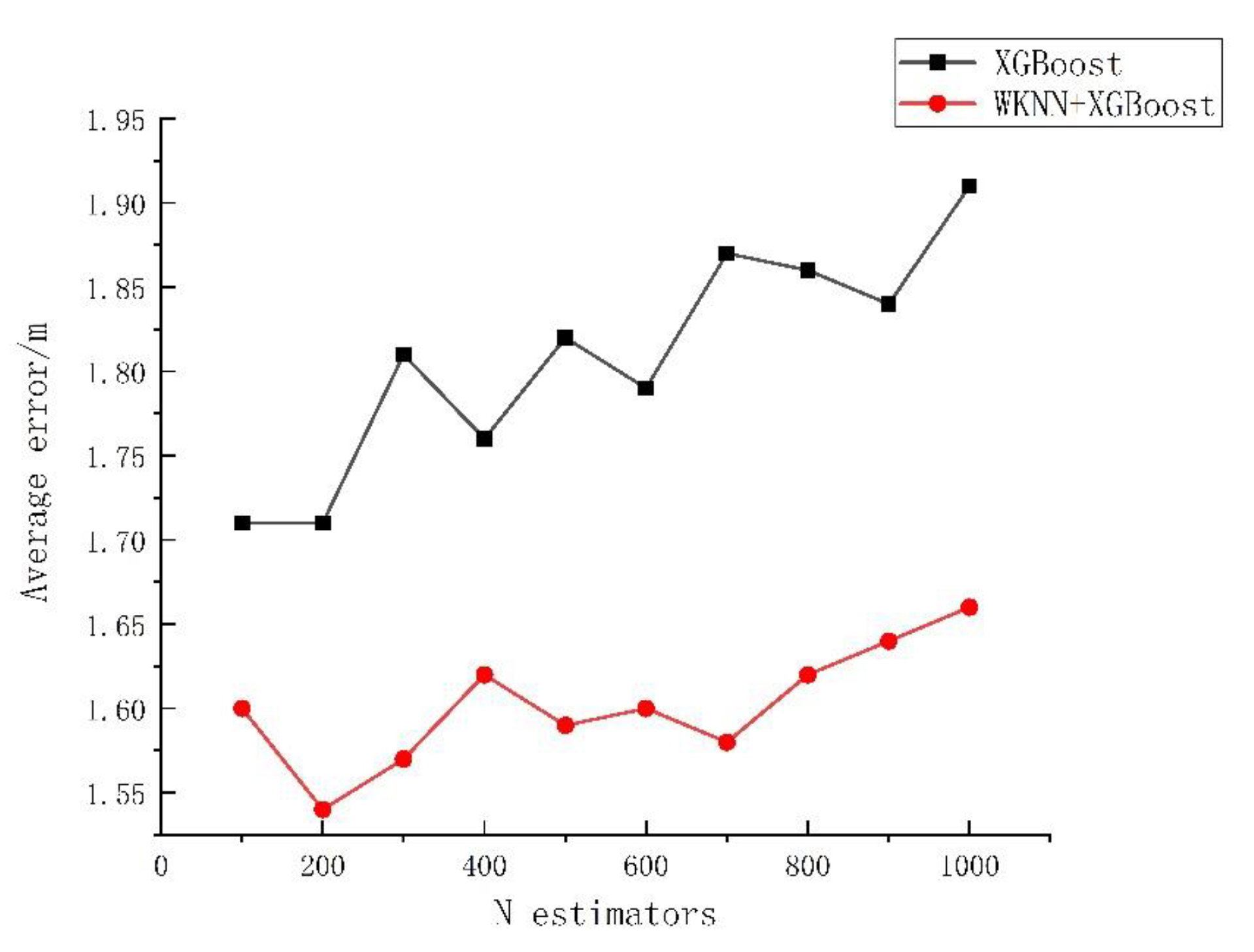

4.3. Research on Algorithm Parameters

4.4. Positioning Error and Experimental Analysis

5. Conclusions

- In this study, the parameters of the XGBoost algorithm were optimized via GA. The experiments showed that different parameters affect the algorithm to different degrees, with the K value and the learning rate exerting the greatest influence on performance.

- By fusing the WKNN and XGBoost algorithms, this study proposed an indoor-fingerprint-positioning algorithm based on WKNN+GA-XGBoost. The experimental results showed that, compared with the other five algorithms, the proposed algorithm reduced the positioning error by 20.26–45.58% and reduced both the RMSE and the MAE by over 25%. Moreover, the CDF curve of the proposed algorithm converged faster.

- The proposed algorithm has certain advantages in positioning accuracy and stability, but it also has limitations. The fingerprint database was acquired manually offline, which consumed considerable manpower and material resources and prevented real-time updating; how to simplify the fingerprint database while maintaining high positioning accuracy remains to be explored. The errors in the experimental results are also slightly high, mainly because the AP devices had weak performance and poor anti-interference ability; this will be addressed in future research. Finally, only the positioning of fixed targets in the indoor environment was investigated; future research will focus on the positioning of moving targets in indoor environments.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| ID | AP1 | AP2 | AP3 | AP4 | AP5 | x | y |
|---|---|---|---|---|---|---|---|
| RP1 | −37.2 | −57.7 | −68.2 | −70.4 | −55.2 | 10 | 10 |
| RP2 | −34.7 | −53.7 | −57.5 | −57.4 | −57.5 | 20 | 10 |
| RP3 | −32.9 | −65.3 | −71.2 | −53.9 | −55.2 | 30 | 10 |
| … | … | … | … | … | … | … | … |
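To illustrate how a fingerprint database with this layout can be used, the following is a minimal WKNN sketch over the three reference points listed in the table. It assumes Euclidean distance in signal space and inverse-distance weights, which is a common WKNN formulation; the exact weighting used in the paper is not shown here, and `wknn_locate` and `eps` are hypothetical names.

```python
import math

# Rows copied from the fingerprint table: (RSSI from AP1..AP5, (x, y)).
FINGERPRINTS = [
    ((-37.2, -57.7, -68.2, -70.4, -55.2), (10.0, 10.0)),  # RP1
    ((-34.7, -53.7, -57.5, -57.4, -57.5), (20.0, 10.0)),  # RP2
    ((-32.9, -65.3, -71.2, -53.9, -55.2), (30.0, 10.0)),  # RP3
]


def wknn_locate(rssi, fingerprints, k, eps=1e-6):
    """Estimate (x, y) as the weighted mean of the k nearest reference points.

    Assumption: weights are the inverse of the signal-space distance;
    `eps` avoids division by zero on an exact match.
    """
    # Rank reference points by Euclidean distance between RSSI vectors.
    ranked = sorted(fingerprints, key=lambda fp: math.dist(rssi, fp[0]))[:k]
    weights = [1.0 / (math.dist(rssi, fp[0]) + eps) for fp in ranked]
    total = sum(weights)
    x = sum(w * fp[1][0] for w, fp in zip(weights, ranked)) / total
    y = sum(w * fp[1][1] for w, fp in zip(weights, ranked)) / total
    return x, y


if __name__ == "__main__":
    # A made-up online measurement, used only for demonstration.
    print(wknn_locate((-35.0, -55.0, -60.0, -60.0, -56.0), FINGERPRINTS, k=3))
```

Setting all weights equal would reduce this to plain KNN, which averages the coordinates of the k nearest reference points.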

| Algorithm | Parameter | Value |
|---|---|---|
| WKNN | K | 12 |
| KNN | K | 12 |
| XGBoost | n_estimators | 200 |
| XGBoost | max_depth | 5 |
| XGBoost | learning_rate | 0.05 |
| WKNN+XGBoost | K | 12 |
| WKNN+XGBoost | n_estimators | 200 |
| WKNN+XGBoost | max_depth | 5 |
| WKNN+XGBoost | learning_rate | 0.05 |

| Algorithm | Parameter | Value |
|---|---|---|
| GA | ps | 60 |
| GA | pc | 0.5 |
| GA | pm | 0.01 |
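A GA search with these settings might be sketched as below. This is not the paper's implementation: it assumes that ps, pc, and pm denote population size, crossover probability, and mutation probability, and the search bounds, tournament selection, uniform crossover, elitism, generation count, and the `toy_fitness` stand-in are all hypothetical choices made for illustration. In practice the fitness would be a validation error of an XGBoost model trained with the candidate parameters.

```python
import random

PS, PC, PM = 60, 0.5, 0.01  # values from the GA parameter table

# Hypothetical search ranges; the bounds actually used are not stated here.
BOUNDS = {
    "n_estimators": (50, 300),
    "max_depth": (2, 10),
    "learning_rate": (0.01, 0.3),
}


def random_individual(rng):
    """Draw one candidate parameter set uniformly within BOUNDS."""
    return {
        "n_estimators": rng.randint(*BOUNDS["n_estimators"]),
        "max_depth": rng.randint(*BOUNDS["max_depth"]),
        "learning_rate": rng.uniform(*BOUNDS["learning_rate"]),
    }


def tournament(pop, scores, rng):
    """Pick the better (lower-score) of two randomly chosen individuals."""
    a, b = rng.sample(range(len(pop)), 2)
    return pop[a] if scores[a] <= scores[b] else pop[b]


def crossover(p1, p2, rng):
    """Uniform crossover: each gene comes from either parent."""
    return {key: (p1[key] if rng.random() < 0.5 else p2[key]) for key in p1}


def mutate(ind, rng):
    """Resample each gene with probability PM."""
    fresh = random_individual(rng)
    return {k: (fresh[k] if rng.random() < PM else v) for k, v in ind.items()}


def run_ga(fitness, generations=30, seed=0):
    """Minimize `fitness` over BOUNDS; returns the best individual found."""
    rng = random.Random(seed)
    pop = [random_individual(rng) for _ in range(PS)]
    for _ in range(generations):
        scores = [fitness(ind) for ind in pop]
        children = [pop[scores.index(min(scores))]]  # elitism: keep the best
        while len(children) < PS:
            p1, p2 = tournament(pop, scores, rng), tournament(pop, scores, rng)
            child = crossover(p1, p2, rng) if rng.random() < PC else dict(p1)
            children.append(mutate(child, rng))
        pop = children
    return min(pop, key=fitness)


def toy_fitness(ind):
    """Arbitrary stand-in with a known minimum; NOT a real model error."""
    return (abs(ind["n_estimators"] - 200) / 250
            + abs(ind["max_depth"] - 6) / 8
            + abs(ind["learning_rate"] - 0.1) / 0.29)


if __name__ == "__main__":
    print(run_ga(toy_fitness))
```

Because the best individual of each generation is copied unchanged into the next, the best fitness value never gets worse from one generation to the next.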

| Parameter | Value |
|---|---|
| K | 6 |
| n_estimators | 210 |
| max_depth | 5 |
| learning_rate | 0.05 |

| Algorithm | RMSE | Test Set Size |
|---|---|---|
| KNN | 2.712 | 1000 |
| WKNN | 2.673 | 1000 |
| XGBoost | 2.687 | 1000 |
| GBDT | 2.712 | 1000 |
| WKNN+XGBoost | 2.226 | 1000 |
| WKNN+GA-XGBoost | 1.655 | 1000 |

| Algorithm | MAE | Test Set Size |
|---|---|---|
| KNN | 2.292 | 1000 |
| WKNN | 2.243 | 1000 |
| XGBoost | 2.102 | 1000 |
| GBDT | 2.112 | 1000 |
| WKNN+XGBoost | 1.745 | 1000 |
| WKNN+GA-XGBoost | 1.305 | 1000 |
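The claim that the RMSE and MAE were reduced by over 25% can be checked directly from the two tables. The short sketch below computes the relative reduction of the proposed algorithm against each baseline, using only the tabulated values; the fifth baseline is labelled WKNN+XGBoost, following the RMSE table, and `reduction_percent` is a hypothetical helper name.

```python
PROPOSED = "WKNN+GA-XGBoost"

# Values copied from the RMSE and MAE tables.
RMSE = {"KNN": 2.712, "WKNN": 2.673, "XGBoost": 2.687, "GBDT": 2.712,
        "WKNN+XGBoost": 2.226, PROPOSED: 1.655}
MAE = {"KNN": 2.292, "WKNN": 2.243, "XGBoost": 2.102, "GBDT": 2.112,
       "WKNN+XGBoost": 1.745, PROPOSED: 1.305}


def reduction_percent(baseline, proposed):
    """Relative reduction of `proposed` with respect to `baseline`, in %."""
    return 100.0 * (baseline - proposed) / baseline


def reductions(table):
    """Reduction of the proposed algorithm against every baseline."""
    return {name: reduction_percent(value, table[PROPOSED])
            for name, value in table.items() if name != PROPOSED}


if __name__ == "__main__":
    for label, table in (("RMSE", RMSE), ("MAE", MAE)):
        for name, pct in reductions(table).items():
            print(f"{label} vs {name}: {pct:.2f}%")
```

The smallest reductions are those against WKNN+XGBoost, and both come out just above 25%, which is consistent with the stated result.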


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lu, H.; Zhang, L.; Chen, H.; Zhang, S.; Wang, S.; Peng, H.; Zou, J. An Indoor Fingerprint Positioning Algorithm Based on WKNN and Improved XGBoost. Sensors 2023, 23, 3952. https://doi.org/10.3390/s23083952
