Towards an Evolved Immersive Experience: Exploring 5G- and Beyond-Enabled Ultra-Low-Latency Communications for Augmented and Virtual Reality

Abstract

1. Introduction

1.1. Background and Motivation

1.2. Vision and Insight

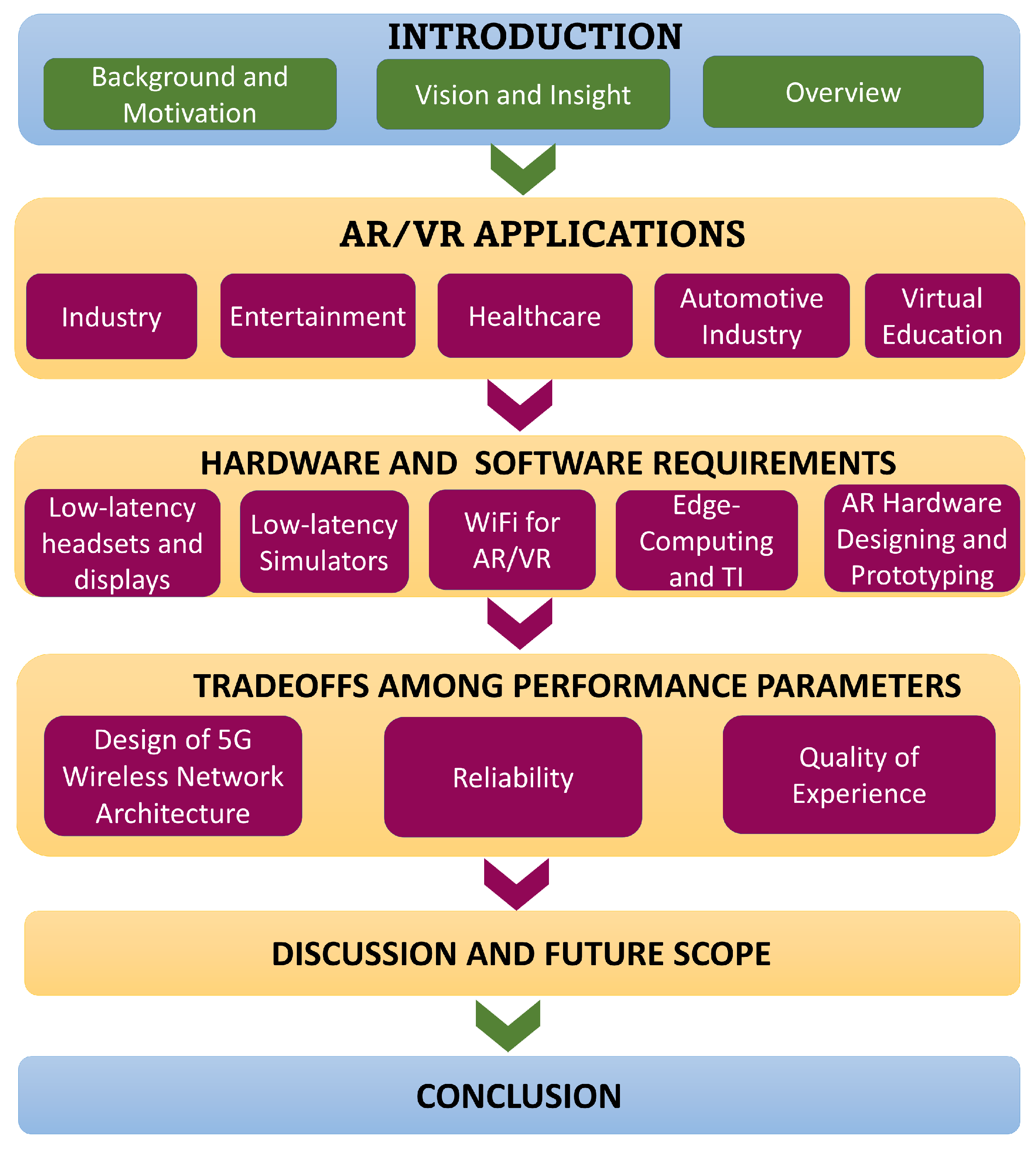

1.3. Paper Overview

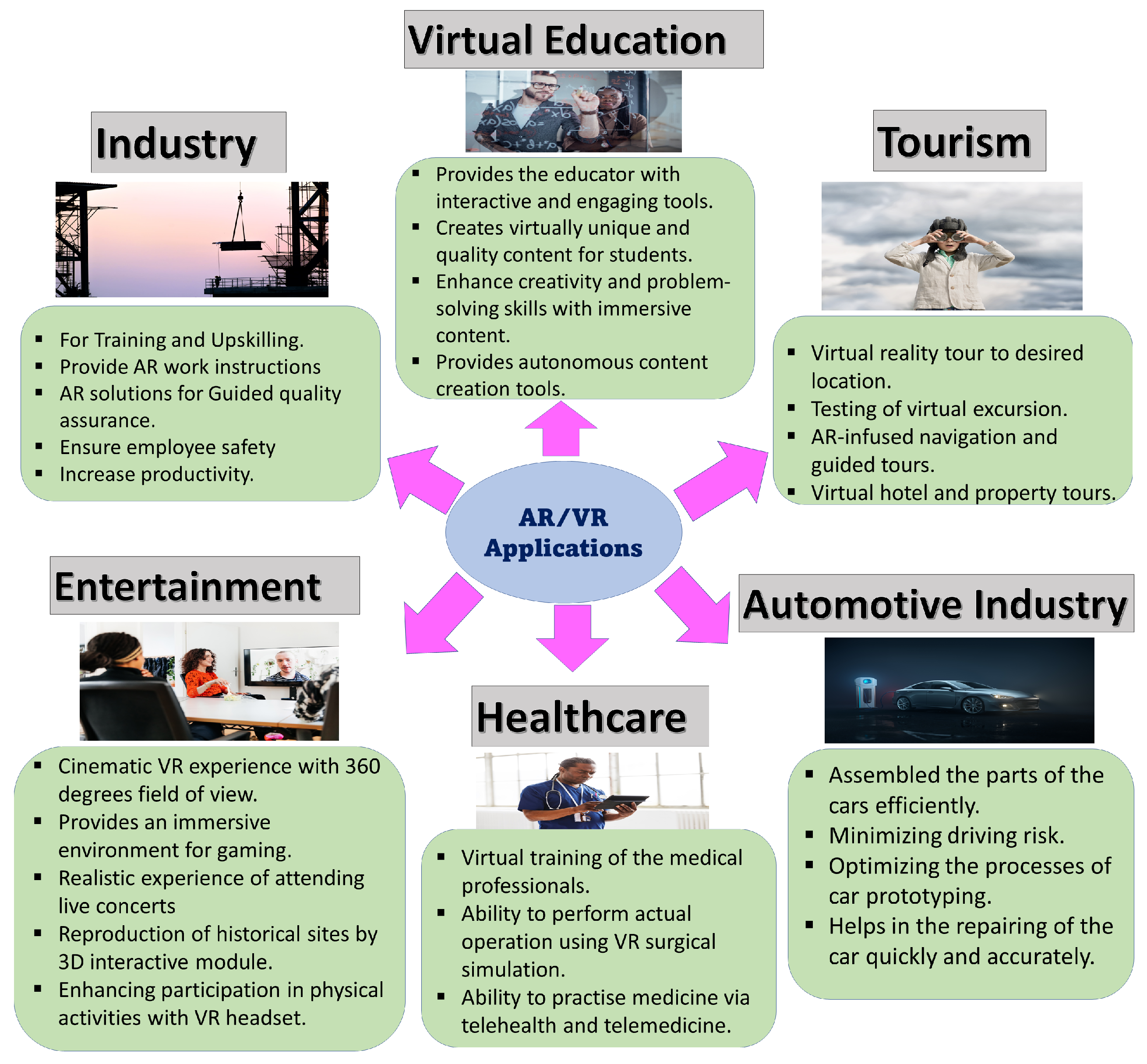

2. AR/VR Applications

2.1. Industry 4.0

2.2. Entertainment

2.3. Healthcare

2.4. Automotive Industry

2.5. Travel and Tourism

2.6. Virtual Education

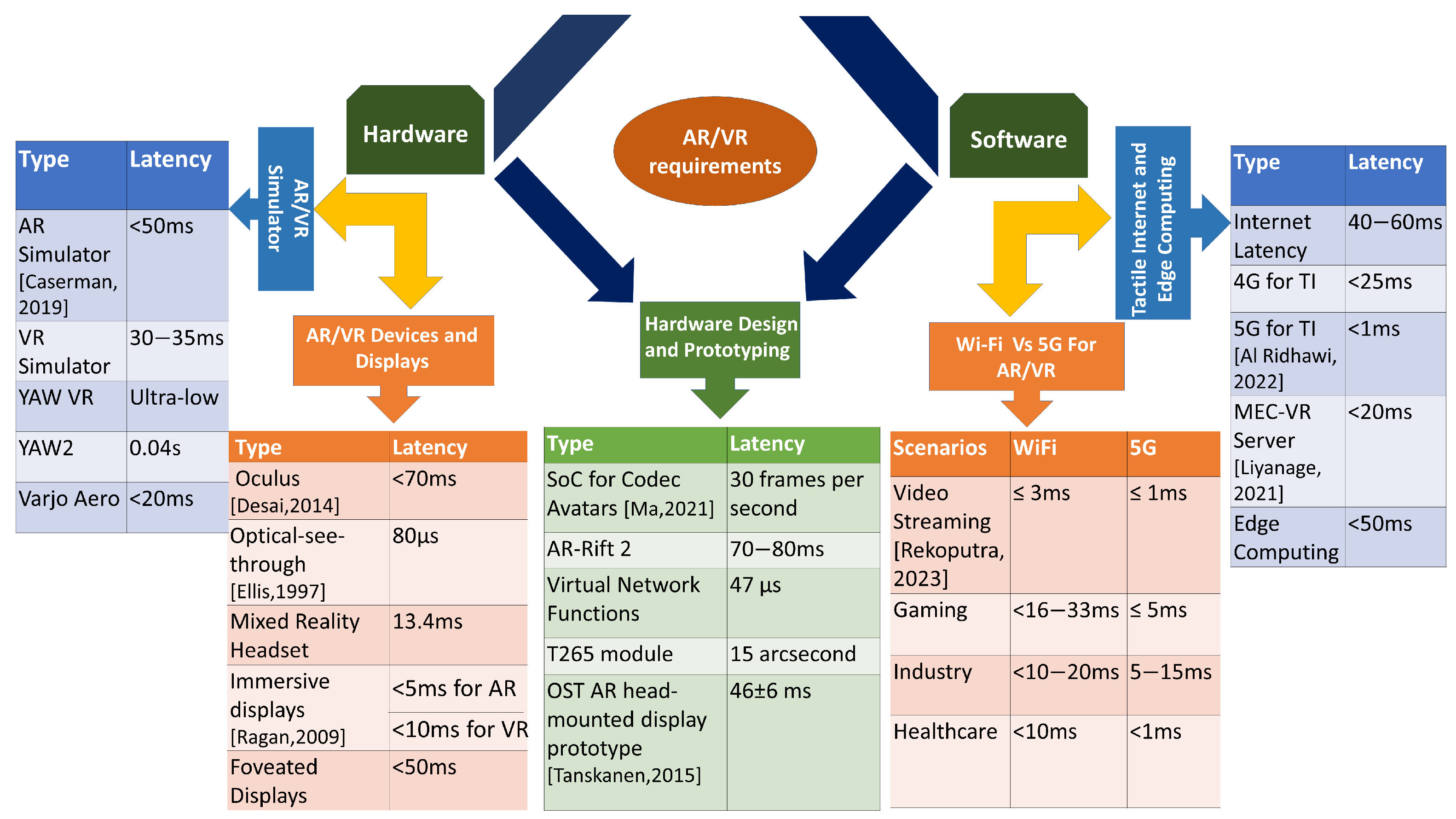

3. AR/VR Hardware and Software Requirements

3.1. Low-Latency Displays and Headsets for AR/VR

3.2. Designing Low-Latency AR/VR Simulators

3.3. AR/VR Hardware: Designing and Prototyping

3.4. WiFi for AR/VR

3.5. Edge Computing and the Tactile Internet

4. Trade-Offs among Performance Parameters

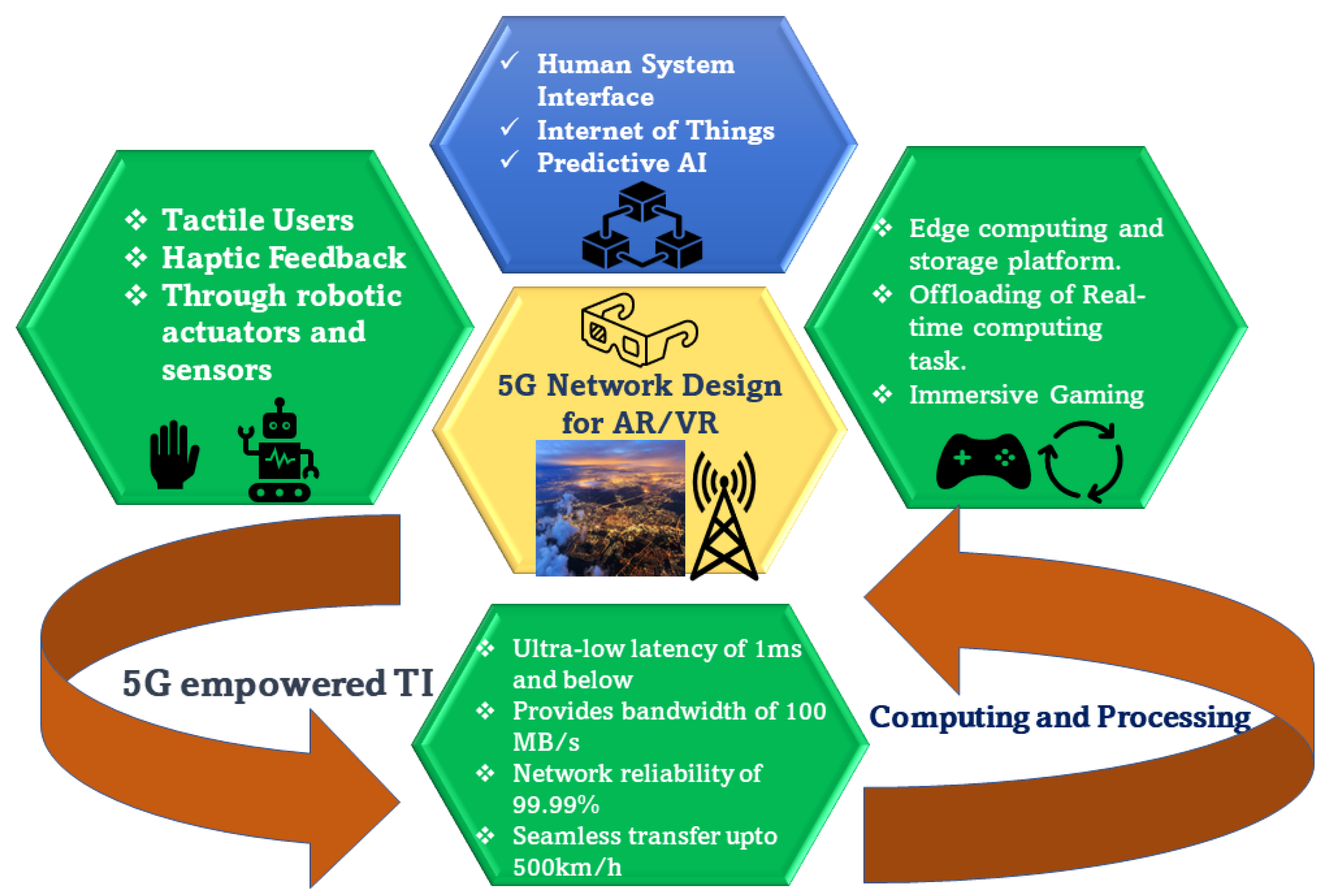

4.1. Design of 5G Wireless Network Architecture

4.2. Reliability

4.3. Quality of Experience

5. Discussion and Future Scope

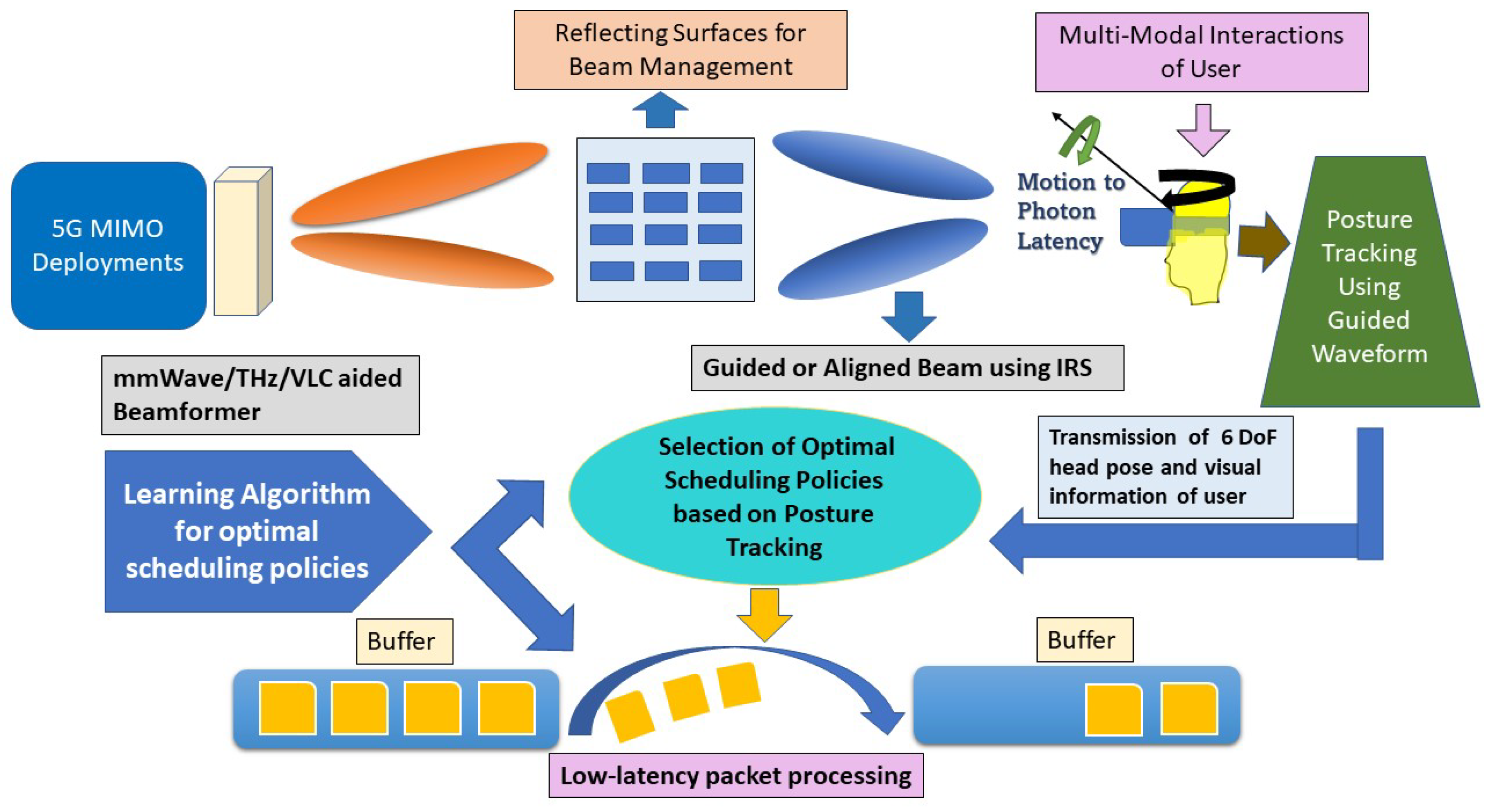

- We divide the environment into simple scenarios (watching movies, attending virtual meetings, etc.) and hard scenarios (playing games, virtual training, etc.) based on the movement of the user’s HMD.

- For simple scenarios, we can consider reinforcement learning [125], where the environment is defined by highly focused directional beams that track the posture of the HMD. The action is the selection of the optimal beam for data transmission, learned by exploiting the user’s environment, i.e., the tracked posture of the user. Finally, the reward is determined by the action and corresponds to an optimal scheduling policy that minimizes the delay during data delivery.

- For hard scenarios, we can apply a combination of reinforcement learning and supervised learning, known as imitation learning (IL) [126], to tackle the rapid loss of buffered packets caused by the frequent multi-modal interactions in such scenarios. IL includes a preprocessing step in which an expert dataset is generated through supervised training, based on the possible outcomes of the guided beams given that the tracked pose (feedback) is known. The action is then obtained by learning a policy through behavior cloning [127], which maps the multi-sensory information to the respective optimal beam according to the pre-trained dataset. The reward is determined from the action so as to select the best scheduling policy for low-latency data delivery. Hence, IL makes it possible to achieve the trade-off between beam alignment and data transmission that low-latency AR/VR communication requires in such scenarios.
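The two learning strategies outlined above can be illustrated with a minimal sketch. It is not the authors' implementation: the beam codebook size, the quantized HMD poses, the toy delay model `delay_ms`, and the noise level are all hypothetical values chosen for illustration. The simple scenario is reduced to a one-step (contextual-bandit) form of reinforcement learning with state = pose, action = beam, and reward = negative delay. The hard scenario is approximated by tabular behavior cloning, where an expert that knows the true pose labels noisy observations with the delay-minimizing beam.

```python
import random
from collections import Counter, defaultdict

NUM_BEAMS = 8   # hypothetical beam codebook size
NUM_POSES = 8   # hypothetical number of quantized HMD poses (equal to NUM_BEAMS here)


def delay_ms(pose: int, beam: int) -> float:
    """Toy delay model (assumption): delay grows with circular beam misalignment."""
    misalignment = min((pose - beam) % NUM_BEAMS, (beam - pose) % NUM_BEAMS)
    return 1.0 + 2.0 * misalignment


def train_rl(episodes: int = 5000, epsilon: float = 0.1,
             alpha: float = 0.5, seed: int = 0) -> list:
    """Simple scenario: epsilon-greedy value learning over (pose, beam) pairs."""
    rng = random.Random(seed)
    q = [[0.0] * NUM_BEAMS for _ in range(NUM_POSES)]
    for _ in range(episodes):
        pose = rng.randrange(NUM_POSES)               # observed HMD posture
        if rng.random() < epsilon:                    # explore a random beam
            beam = rng.randrange(NUM_BEAMS)
        else:                                         # exploit the current estimate
            beam = max(range(NUM_BEAMS), key=lambda b: q[pose][b])
        reward = -delay_ms(pose, beam)                # lower delay, higher reward
        q[pose][beam] += alpha * (reward - q[pose][beam])
    return q


def greedy_policy(q: list) -> list:
    """Beam with the highest learned value for each pose."""
    return [max(range(NUM_BEAMS), key=lambda b: row[b]) for row in q]


def clone_policy(samples: int = 4000, noise: float = 0.1, seed: int = 1) -> dict:
    """Hard scenario: behavior cloning from an expert dataset.

    The expert knows the true pose and picks the delay-minimizing beam;
    the learner only sees a noisy pose reading and copies the majority label.
    """
    rng = random.Random(seed)
    votes = defaultdict(Counter)
    for _ in range(samples):
        pose = rng.randrange(NUM_POSES)
        expert_beam = min(range(NUM_BEAMS), key=lambda b: delay_ms(pose, b))
        observed = pose
        if rng.random() < noise:                      # sensor reports a neighboring pose
            observed = (pose + rng.choice((-1, 1))) % NUM_POSES
        votes[observed][expert_beam] += 1
    return {obs: counts.most_common(1)[0][0] for obs, counts in votes.items()}


if __name__ == "__main__":
    print("RL beam per pose:    ", greedy_policy(train_rl()))
    cloned = clone_policy()
    print("Cloned beam per pose:", [cloned[o] for o in range(NUM_POSES)])
```

In this toy setting both learners should recover the aligned beam for every pose. A practical system would replace the lookup tables with function approximators and the synthetic delay model with measured scheduling delay.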

6. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Henrique, P.S.R.; Prasad, R. 6G: The Road to the Future Wireless Technologies 2030; CRC Press: Boca Raton, FL, USA, 2022. [Google Scholar]

- Physical Layer Enhancements for NR Ultra-Reliable and Low Latency Communication (URLLC), Document 3GPP RP-191584, TSG-RAN#84, 2019. Rel-16. Available online: https://portal.3gpp.org/desktopmodules/Specifications/SpecificationDetails.aspx?specificationId=3498 (accessed on 12 February 2023).

- Popovski, P.; Stefanović, Č.; Nielsen, J.J.; De Carvalho, E.; Angjelichinoski, M.; Trillingsgaard, K.F.; Bana, A.S. Wireless access in ultra-reliable low-latency communication (URLLC). IEEE Trans. Commun. 2019, 67, 5783–5801. [Google Scholar] [CrossRef]

- Park, J.; Samarakoon, S.; Shiri, H.; Abdel-Aziz, M.K.; Nishio, T.; Elgabli, A.; Bennis, M. Extreme ultra-reliable and low-latency communication. Nat. Electron. 2022, 5, 133–141. [Google Scholar] [CrossRef]

- Rekoputra, N.M.; Tseng, C.W.; Wang, J.T.; Liang, S.H.; Cheng, R.G.; Li, Y.F.; Yang, W.H. Implementation and Evaluation of 5G MEC-Enabled Smart Factory. Electronics 2023, 12, 1310. [Google Scholar] [CrossRef]

- Oughton, E.J.; Lehr, W.; Katsaros, K.; Selinis, I.; Bubley, D.; Kusuma, J. Revisiting wireless Internet connectivity: 5G vs. WiFi 6. Telecommun. Policy 2021, 45, 102127. [Google Scholar] [CrossRef]

- Zreikat, A. Performance evaluation of 5G/WiFi-6 coexistence. Int. J. Circuits Syst. Signal Process. NAUN 2020, 14, 904–913. [Google Scholar] [CrossRef]

- Qualcomm Technologies, Inc. VR and AR Pushing Connectivity Limits. October, 2018. Available online: https://www.qualcomm.com/content/dam/qcomm-martech/dm-assets/documents/presentation_-_vr_and_ar_are_pushing_connectivity_limits_-web_0.pdf (accessed on 11 February 2023).

- Garcia-Rodriguez, A.; López-Pérez, D.; Galati-Giordano, L.; Geraci, G. IEEE 802.11be: WiFi 7 Strikes Back. IEEE Commun. Mag. 2021, 59, 102–108. [Google Scholar] [CrossRef]

- Bonci, A.; Caizer, E.; Giannini, M.C.; Giuggioloni, F.; Prist, M. Ultra Wide Band communication for condition-based monitoring, a bridge between edge and cloud computing. Procedia Comput. Sci. 2023, 217, 1670–1677. [Google Scholar] [CrossRef]

- Ding, C.; Sun, H.H.; Zhu, H.; Guo, Y.J. Achieving wider bandwidth with full-wavelength dipoles for 5G base stations. IEEE Trans. Antennas Propag. 2019, 68, 1119–1127. [Google Scholar] [CrossRef]

- Levanen, T.; Pirskanen, J.; Valkama, M. Radio interface design for ultra-low latency millimeter-wave communications in 5G era. In Proceedings of the 2014 IEEE Globecom Workshops (GC Wkshps), Austin, TX, USA, 8–12 December 2014; pp. 1420–1426. [Google Scholar]

- Bansal, D.; Kaur, M.; Kumar, P.; Kumar, A. Design of a wide bandwidth terahertz MEMS Ohmic switch for 6G communication applications. Microsyst. Technol. 2023, 29, 271–277. [Google Scholar] [CrossRef]

- Torres Vega, M.; Liaskos, C.; Abadal, S.; Papapetrou, E.; Jain, A.; Mouhouche, B.; Kalem, G.; Ergut, S.; Mach, M.; Sabol, T.; et al. Immersive Interconnected Virtual and Augmented Reality: A 5G and IoT Perspective. J. Netw. Syst. Manag. 2020, 28, 796–826. [Google Scholar] [CrossRef]

- Rubin, P. The Inside Story of Oculus Rift and How Virtual Reality Became Reality. 20 May 2014. Available online: https://www.wired.com/2014/05/oculus-rift-4/ (accessed on 2 August 2022).

- Zabels, R.; Smukulis, R.; Fenuks, R.; Kučiks, A.; Linina, E.; Osmanis, K.; Osmanis, I. Reducing motion to photon latency in multi-focal augmented reality near-eye display. In Proceedings of the Optical Architectures for Displays and Sensing in Augmented, Virtual, and Mixed Reality (AR, VR, MR) II, Virtual, 28–31 March 2021; Kress, B.C., Peroz, C., Eds.; International Society for Optics and Photonics, SPIE: Bellingham, WA, USA, 2021; Volume 11765, p. 117650W. [Google Scholar] [CrossRef]

- Van Waveren, J.M.P. The Asynchronous Time Warp for Virtual Reality on Consumer Hardware. In Proceedings of the 22nd ACM Conference on Virtual Reality Software and Technology, VRST′16, Munich, Germany, 2–4 November 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 37–46. [Google Scholar] [CrossRef]

- Smit, F.A.; van Liere, R.; Fröhlich, B. An Image-Warping VR-Architecture: Design, Implementation and Applications. In Proceedings of the 2008 ACM Symposium on Virtual Reality Software and Technology, VRST′08, Bordeaux, France, 27–29 October 2008; Association for Computing Machinery: New York, NY, USA, 2008; pp. 115–122. [Google Scholar] [CrossRef]

- Holzwarth, V.; Gisler, J.; Hirt, C.; Kunz, A. Comparing the accuracy and precision of steamvr tracking 2.0 and oculus quest 2 in a room scale setup. In Proceedings of the International Conference on Virtual and Augmented Reality Simulations, Melbourne, VIC, Australia, 20–22 March 2021; pp. 42–46. [Google Scholar]

- Sejan, M.A.S.; Rahman, M.H.; Aziz, M.A.; Kim, D.S.; You, Y.H.; Song, H.K. A Comprehensive Survey on MIMO Visible Light Communication: Current Research, Machine Learning and Future Trends. Sensors 2023, 23, 739. [Google Scholar] [CrossRef] [PubMed]

- Ślusarczyk, B. Industry 4.0-Are We Ready? Pol. J. Manag. Stud. 2018, 17, 232–248. [Google Scholar] [CrossRef]

- Choudhari, A.; Talkar, S.; Rayar, P.; Rane, A. Design and Manufacturing of Compact and Portable Smart CNC Machine. In Proceedings of the International Conference on Intelligent Manufacturing and Automation, Wuhan, China, 16–18 October 2020; Vasudevan, H., Kottur, V.K.N., Raina, A.A., Eds.; Springer: Singapore, 2020; pp. 201–210. [Google Scholar]

- Gupta, A.; Choudhari, A.; Kadaka, T.; Rayar, P. Design and analysis of vertical vacuum fryer. In Proceedings of the International Conference on Intelligent Manufacturing and Automation, Zhuhai, China, 17–19 May 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 133–149. [Google Scholar]

- Dalenogare, L.S.; Benitez, G.B.; Ayala, N.F.; Frank, A.G. The expected contribution of Industry 4.0 technologies for industrial performance. Int. J. Prod. Econ. 2018, 204, 383–394. [Google Scholar] [CrossRef]

- Gonzalez, W. How Augmented and Virtual Reality Are Shaping a Variety of Industries. Available online: https://www.forbes.com/sites/forbesbusinesscouncil/2021/07/02/how-augmented-and-virtual-reality-are-shaping-a-variety-of-industries/ (accessed on 2 July 2021).

- Khan, A.; Sepasgozar, S.; Liu, T.; Yu, R. Integration of BIM and Immersive Technologies for AEC: A Scientometric-SWOT Analysis and Critical Content Review. Buildings 2021, 11, 126. [Google Scholar] [CrossRef]

- Yousefpour, A.; Fung, C.; Nguyen, T.; Kadiyala, K.; Jalali, F.; Niakanlahiji, A.; Kong, J.; Jue, J.P. All one needs to know about fog computing and related edge computing paradigms: A complete survey. J. Syst. Archit. 2019, 98, 289–330. [Google Scholar] [CrossRef]

- Yin, J.H.; Chng, C.B.; Wong, P.M.; Ho, N.; Chua, M.; Chui, C.K. VR and AR in human performance research―An NUS experience. Virtual Real. Intell. Hardw. 2020, 2, 381–393. [Google Scholar] [CrossRef]

- Nahavandi, S. Industry 5.0—A Human-Centric Solution. Sustainability 2019, 11, 4371. [Google Scholar] [CrossRef]

- Size, G.M. Video Streaming Market Size, Share & Trends Analysis Report by Streaming Type, by Solution, by Platform, by Service, by Revenue Model, by Deployment Type, by User and Segment Forecasts, 2020–2027; Grand View Research: Pune, India, 2020. [Google Scholar]

- McLellan, H. Virtual realities. Handbook of Research for Educational Communications and Technology; Springer: Berlin/Heidelberg, Germany, 1996; pp. 457–487. [Google Scholar]

- Narin, N.G. A content analysis of the metaverse articles. J. Metaverse 2021, 1, 17–24. [Google Scholar]

- Karuzaki, E.; Partarakis, N.; Patsiouras, N.; Zidianakis, E.; Katzourakis, A.; Pattakos, A.; Kaplanidi, D.; Baka, E.; Cadi, N.; Magnenat-Thalmann, N.; et al. Realistic virtual humans for cultural heritage applications. Heritage 2021, 4, 4148–4171. [Google Scholar] [CrossRef]

- Oriti, D.; Manuri, F.; De Pace, F.; Sanna, A. Harmonize: A shared environment for extended immersive entertainment. Virtual Real. 2021, 1–14. [Google Scholar] [CrossRef]

- Sukhmani, S.; Sadeghi, M.; Erol-Kantarci, M.; El Saddik, A. Edge Caching and Computing in 5G for Mobile AR/VR and Tactile Internet. IEEE Multimed. 2019, 26, 21–30. [Google Scholar] [CrossRef]

- Zukin, S. The Innovation Complex: Cities, Tech, and the New Economy; Oxford University Press: Oxford, UK, 2020. [Google Scholar]

- Dascal, J.; Reid, M.W.; Ishak, W.W.; Spiegel, B.M.R.; Recacho, J.; Rosen, B.; Danovitch, I. Virtual Reality and Medical Inpatients: A Systematic Review of Randomized, Controlled Trials. Innov. Clin. Neurosci. 2017, 14, 14–21. [Google Scholar] [PubMed]

- van Krevelen, D.W.F.; Poelman, R. A Survey of Augmented Reality Technologies, Applications and Limitations. Int. J. Virtual Real. 2010, 9, 1–20. [Google Scholar] [CrossRef]

- Lee, S.; Lee, J.; Lee, A.; Park, N.; Lee, S.; Song, S.; Seo, A.; Lee, H.; Kim, J.I.; Eom, K. Augmented reality intravenous injection simulator based 3D medical imaging for veterinary medicine. Vet. J. 2013, 196, 197–202. [Google Scholar] [CrossRef]

- Daniels, J.; Schwartz, J.N.; Voss, C.; Haber, N.; Fazel, A.; Kline, A.; Washington, P.; Feinstein, C.; Winograd, T.; Wall, D.P. Exploratory study examining the at-home feasibility of a wearable tool for social-affective learning in children with autism. NPJ Digit. Med. 2018, 1, 1–10. [Google Scholar] [CrossRef]

- Wright, J.L.; Hoffman, H.G.; Sweet, R.M. Virtual reality as an adjunctive pain control during transurethral microwave thermotherapy. Urology 2005, 66, 1320.e1–1320.e3. [Google Scholar] [CrossRef]

- Eckhoff, D.; Sandor, C.; Cheing, G.L.Y.; Schnupp, J.; Cassinelli, A. Thermal pain and detection threshold modulation in augmented reality. Front. Virtual Real. 2022, 3, 952637. [Google Scholar] [CrossRef]

- Shanu, S.; Narula, D.; Pathak, L.K.; Mahato, S. AR/VR Technology for Autonomous Vehicles and Knowledge-Based Risk Assessment. In Virtual and Augmented Reality for Automobile Industry: Innovation Vision and Applications; Springer: Berlin/Heidelberg, Germany, 2022; pp. 87–109. [Google Scholar]

- Narzt.; Pomberger.; Ferscha.; Kolb.; Muller.; Wieghardt.; Hortner.; Lindinger. Pervasive information acquisition for mobile AR-navigation systems. In Proceedings of the Fifth IEEE Workshop on Mobile Computing Systems and Applications, Monterey, CA, USA, 9–10 October 2003; pp. 13–20. [Google Scholar] [CrossRef]

- Yang, H.; Shen, Y.; Hasan, M.; Perez, D.; Shull, J. Framework for Interactive M3 Visualization of Microscopic Traffic Simulation. Transp. Res. Rec. 2018, 2672, 62–71. [Google Scholar] [CrossRef]

- Perez, D.; Hasan, M.; Shen, Y.; Yang, H. AR-PED: A framework of augmented reality enabled pedestrian-in-the-loop simulation. Simul. Model. Pract. Theory 2019, 94, 237–249. [Google Scholar] [CrossRef]

- Schwebel, D.C.; Gaines, J.; Severson, J. Validation of virtual reality as a tool to understand and prevent child pedestrian injury. Accid. Anal. Prev. 2008, 40, 1394–1400. [Google Scholar] [CrossRef]

- Banducci, S.E.; Ward, N.; Gaspar, J.G.; Schab, K.R.; Crowell, J.A.; Kaczmarski, H.; Kramer, A.F. The Effects of Cell Phone and Text Message Conversations on Simulated Street Crossing. Hum. Factors 2016, 58, 150–162. [Google Scholar] [CrossRef] [PubMed]

- Rosselló, J.; Santana-Gallego, M.; Awan, W. Infectious disease risk and international tourism demand. Health Policy Plan. 2017, 32, 538–548. [Google Scholar] [CrossRef] [PubMed]

- Koohang, A.; Nord, J.; Ooi, K.; Tan, G.; Al-Emran, M.; Aw, E.; Baabdullah, A.; Buhalis, D.; Cham, T.; Dennis, C.; et al. Shaping the metaverse into reality: Multidisciplinary perspectives on opportunities, challenges, and future research. J. Comput. Inf. Syst. 2023. [Google Scholar]

- Guttentag, D.A. Virtual reality: Applications and implications for tourism. Tour. Manag. 2010, 31, 637–651. [Google Scholar] [CrossRef]

- Arlati, S.; Spoladore, D.; Baldassini, D.; Sacco, M.; Greci, L. VirtualCruiseTour: An AR/VR Application to Promote Shore Excursions on Cruise Ships. In Proceedings of the Augmented Reality, Virtual Reality, and Computer Graphics, Otranto, Italy, 24–27 June 2018; De Paolis, L.T., Bourdot, P., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 133–147. [Google Scholar]

- Çeltek, E. 12 Gamification: Augmented Reality, Virtual Reality Games and Tourism Marketing Applications. Gamification Tour. 2021, 92, 237–260. [Google Scholar]

- Ardiny, H.; Khanmirza, E. The Role of AR and VR Technologies in Education Developments: Opportunities and Challenges. In Proceedings of the 2018 6th RSI International Conference on Robotics and Mechatronics (IcRoM), Tehran, Iran, 23–25 October 2018; pp. 482–487. [Google Scholar] [CrossRef]

- Chen, C.H.; Yang, J.C.; Shen, S.; Jeng, M.C. A Desktop Virtual Reality Earth Motion System in Astronomy Education. J. Educ. Technol. Soc. 2007, 10, 289–304. [Google Scholar]

- Puggioni, M.P.; Frontoni, E.; Paolanti, M.; Pierdicca, R.; Malinverni, E.S.; Sasso, M. A content creation tool for ar/vr applications in education: The scoolar framework. In Proceedings of the International Conference on Augmented Reality, Virtual Reality and Computer Graphics, Lecce, Italy, 7–10 September 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 205–219. [Google Scholar]

- Ardito, C.; Buono, P.; Costabile, M.F.; Lanzilotti, R.; Piccinno, A. Enabling interactive exploration of cultural heritage: An experience of designing systems for mobile devices. Knowl. Technol. Policy 2009, 22, 79–86. [Google Scholar] [CrossRef]

- Kljun, M.; Geroimenko, V.; Čopič Pucihar, K. Augmented reality in education: Current status and advancement of the field. In Augmented Reality in Education: A New Technology for Teaching and Learning; Springer: Berlin/Heidelberg, Germany, 2020; pp. 3–21. [Google Scholar]

- Lele, A. Virtual reality and its military utility. J. Ambient. Intell. Humaniz. Comput. 2013, 4, 17–26. [Google Scholar] [CrossRef]

- Caserman, P.; Garcia-Agundez, A.; Göbel, S. A survey of full-body motion reconstruction in immersive virtual reality applications. IEEE Trans. Vis. Comput. Graph. 2019, 26, 3089–3108. [Google Scholar] [CrossRef]

- Lincoln, P.; Blate, A.; Singh, M.; Whitted, T.; State, A.; Lastra, A.; Fuchs, H. From Motion to Photons in 80 Microseconds: Towards Minimal Latency for Virtual and Augmented Reality. IEEE Trans. Vis. Comput. Graph. 2016, 22, 1367–1376. [Google Scholar] [CrossRef]

- Holloway, R.L. Registration Error Analysis for Augmented Reality. Presence Teleoperators Virtual Environ. 1997, 6, 413–432. [Google Scholar] [CrossRef]

- NVIDIA Reflex Now Reducing System Latency in Shadow Warrior 3 & Ready or Not. 15 March 2022. Available online: https://www.nvidia.com/en-us/geforce/news/march-2022-reflex-game-hardware-updates/ (accessed on 13 February 2023).

- Heckbert, P. Color image quantization for frame buffer display. ACM Siggraph Comput. Graph. 1982, 16, 297–307. [Google Scholar] [CrossRef]

- Jiang, H.; Padebettu, R.R.; Sakamoto, K.; Bastani, B. Architecture of Integrated Machine Learning in Low Latency Mobile VR Graphics Pipeline. In SIGGRAPH Asia 2019 Technical Briefs; Association for Computing Machinery: New York, NY, USA, 2019; pp. 41–44. [Google Scholar]

- Ellis, S.; Breant, F.; Manges, B.; Jacoby, R.; Adelstein, B. Factors influencing operator interaction with virtual objects viewed via head-mounted see-through displays: Viewing conditions and rendering latency. In Proceedings of the IEEE 1997 Annual International Symposium on Virtual Reality, Albuquerque, NM, USA, 1–5 March 1997; pp. 138–145. [Google Scholar] [CrossRef]

- Mack, J.; Arda, S.E.; Ogras, U.Y.; Akoglu, A. Performant, multi-objective scheduling of highly interleaved task graphs on heterogeneous system on chip devices. IEEE Trans. Parallel Distrib. Syst. 2021, 33, 2148–2162. [Google Scholar] [CrossRef]

- Gruen, R.; Ofek, E.; Steed, A.; Gal, R.; Sinclair, M.; Gonzalez-Franco, M. Measuring System Visual Latency through Cognitive Latency on Video See-Through AR devices. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Atlanta, GA, USA, 22–26 March 2020; pp. 791–799. [Google Scholar] [CrossRef]

- Blissing, B.; Bruzelius, F.; Eriksson, O. Effects of visual latency on vehicle driving behavior. ACM Trans. Appl. Percept. TAP 2016, 14, 1–12. [Google Scholar] [CrossRef]

- Shajin, F.H.; Rajesh, P. Trusted secure geographic routing protocol: Outsider attack detection in mobile ad hoc networks by adopting trusted secure geographic routing protocol. Int. J. Pervasive Comput. Commun. 2022, 18, 603–621. [Google Scholar] [CrossRef]

- Eriksen, B.A.; Eriksen, C.W. Effects of noise letters upon the identification of a target letter in a nonsearch task. Percept. Psychophys. 1974, 16, 143–149. [Google Scholar] [CrossRef]

- Ragan, E.; Wilkes, C.; Bowman, D.A.; Hollerer, T. Simulation of Augmented Reality Systems in Purely Virtual Environments. In Proceedings of the 2009 IEEE Virtual Reality Conference, Lafayette, LA, USA, 14–18 March 2009; pp. 287–288. [Google Scholar] [CrossRef]

- Nabiyouni, M.; Scerbo, S.; Bowman, D.A.; Höllerer, T. Relative effects of real-world and virtual-world latency on an augmented reality training task: An ar simulation experiment. Front. ICT 2017, 3, 34. [Google Scholar] [CrossRef]

- Ma, S.; Simon, T.; Saragih, J.; Wang, D.; Li, Y.; De La Torre, F.; Sheikh, Y. Pixel codec avatars. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 64–73. [Google Scholar]

- Lombardi, S.; Saragih, J.; Simon, T.; Sheikh, Y. Deep appearance models for face rendering. ACM Trans. Graph. ToG 2018, 37, 1–13. [Google Scholar] [CrossRef]

- Zhang, X.; Wang, D.; Chuang, P.; Ma, S.; Chen, D.; Li, Y. F-CAD: A Framework to Explore Hardware Accelerators for Codec Avatar Decoding. In Proceedings of the 2021 58th ACM/IEEE Design Automation Conference (DAC), San Francisco, CA, USA, 5–9 December 2021; pp. 763–768. [Google Scholar] [CrossRef]

- Abrash, M. Creating the future: Augmented reality, the next human-machine interface. In Proceedings of the 2021 IEEE International Electron Devices Meeting (IEDM), San Francisco, CA, USA, 11–15 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–2. [Google Scholar]

- Sumbul, H.E.; Wu, T.F.; Li, Y.; Sarwar, S.S.; Koven, W.; Murphy-Trotzky, E.; Cai, X.; Ansari, E.; Morris, D.H.; Liu, H.; et al. System-Level Design and Integration of a Prototype AR/VR Hardware Featuring a Custom Low-Power DNN Accelerator Chip in 7 nm Technology for Codec Avatars. In Proceedings of the 2022 IEEE Custom Integrated Circuits Conference (CICC), Newport Beach, CA, USA, 24–27 April 2022; pp. 1–8. [Google Scholar] [CrossRef]

- Mandal, D.K.; Jandhyala, S.; Omer, O.J.; Kalsi, G.S.; George, B.; Neela, G.; Rethinagiri, S.K.; Subramoney, S.; Hacking, L.; Radford, J.; et al. Visual inertial odometry at the edge: A hardware-software co-design approach for ultra-low latency and power. In Proceedings of the 2019 Design, Automation & Test in Europe Conference & Exhibition (DATE), Florence, Italy, 25–29 March 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 960–963. [Google Scholar]

- Einicke, G.A.; White, L.B. Robust extended Kalman filtering. IEEE Trans. Signal Process. 1999, 47, 2596–2599. [Google Scholar] [CrossRef]

- Tanskanen, P.; Naegeli, T.; Pollefeys, M.; Hilliges, O. Semi-direct EKF-based monocular visual-inertial odometry. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 6073–6078. [Google Scholar] [CrossRef]

- Šoberl, D.; Zimic, N.; Leonardis, A.; Krivic, J.; Moškon, M. Hardware implementation of FAST algorithm for mobile applications. J. Signal Process. Syst. 2015, 79, 247–256. [Google Scholar] [CrossRef]

- Avallone, S.; Imputato, P.; Redieteab, G.; Ghosh, C.; Roy, S. Will OFDMA improve the performance of 802.11 WiFi networks? IEEE Wirel. Commun. 2021, 28, 100–107. [Google Scholar] [CrossRef]

- Lang, B. Qualcomm Says New WiFi 6E Chips Support VR-Class Low Latency for VR Streaming. 2020. Available online: https://www.roadtovr.com/qualcomm-wifi-6e-fastconnect-vr-streaming-latency/ (accessed on 12 February 2023).

- Deng, C.; Fang, X.; Han, X.; Wang, X.; Yan, L.; He, R.; Long, Y.; Guo, Y. IEEE 802.11 be WiFi 7: New challenges and opportunities. IEEE Commun. Surv. Tutorials 2020, 22, 2136–2166. [Google Scholar] [CrossRef]

- Du, R.; Xie, H.; Hu, M.; Narengerile; Xin, Y.; McCann, S.; Montemurro, M.; Han, T.X.; Xu, J. An Overview on IEEE 802.11bf: WLAN Sensing. arXiv 2022, arXiv:2207.04859. [Google Scholar] [CrossRef]

- Zhang, J.; Chen, B.; Zhao, Y.; Cheng, X.; Hu, F. Data security and privacy-preserving in edge computing paradigm: Survey and open issues. IEEE Access 2018, 6, 18209–18237. [Google Scholar] [CrossRef]

- Andrae, A.S. Comparison of several simplistic high-level approaches for estimating the global energy and electricity use of ICT networks and data centers. Int. J. 2019, 5, 51. [Google Scholar] [CrossRef]

- Hu, Y.C.; Patel, M.; Sabella, D.; Sprecher, N.; Young, V. Mobile edge computing—A key technology towards 5G. ETSI White Pap. 2015, 11, 1–16. [Google Scholar]

- Liyanage, M.; Porambage, P.; Ding, A.Y.; Kalla, A. Driving forces for multi-access edge computing (MEC) IoT integration in 5G. ICT Express 2021, 7, 127–137. [Google Scholar] [CrossRef]

- Sachs, J.; Andersson, L.A.; Araújo, J.; Curescu, C.; Lundsjö, J.; Rune, G.; Steinbach, E.; Wikström, G. Adaptive 5G low-latency communication for tactile Internet services. Proc. IEEE 2018, 107, 325–349. [Google Scholar] [CrossRef]

- Fettweis, G.P. The Tactile Internet: Applications and Challenges. IEEE Veh. Technol. Mag. 2014, 9, 64–70. [Google Scholar] [CrossRef]

- Mourtzis, D.; Angelopoulos, J.; Panopoulos, N. Smart Manufacturing and Tactile Internet Based on 5G in Industry 4.0: Challenges, Applications and New Trends. Electronics 2021, 10, 3175. [Google Scholar] [CrossRef]

- Al Ridhawi, I.; Aloqaily, M.; Karray, F.; Guizani, M.; Debbah, M. Realizing the tactile Internet through intelligent zero touch networks. IEEE Netw. 2022, 36, 243–250. [Google Scholar] [CrossRef]

- Elbamby, M.S.; Perfecto, C.; Bennis, M.; Doppler, K. Toward Low-Latency and Ultra-Reliable Virtual Reality. IEEE Netw. 2018, 32, 78–84. [Google Scholar] [CrossRef]

- Ahmad, M.; Jafri, S.U.; Ikram, A.; Qasmi, W.N.A.; Nawazish, M.A.; Uzmi, Z.A.; Qazi, Z.A. A low latency and consistent cellular control plane. In Proceedings of the Annual Conference of the ACM, Special Interest Group on Data Communication on the Applications, Technologies, Architectures, and Protocols for Computer Communication, Virtual Event, 10–14 August 2020; pp. 648–661. [Google Scholar]

- Tanenbaum, A.S.; Steen, M.V. Distributed Systems: Principles and Paradigms, 1st ed.; Prentice Hall PTR: Hoboken, NJ, USA, 2001. [Google Scholar]

- Li, Y.; Yuan, Z.; Peng, C. A Control-Plane Perspective on Reducing Data Access Latency in LTE Networks. In Proceedings of the 23rd Annual International Conference on Mobile Computing and Networking, MobiCom ′17, Snowbird, UT, USA, 16–20 October 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 56–69. [Google Scholar] [CrossRef]

- Masaracchia, A.; Li, Y.; Nguyen, K.K.; Yin, C.; Khosravirad, S.R.; Da Costa, D.B.; Duong, T.Q. UAV-enabled ultra-reliable low-latency communications for 6G: A comprehensive survey. IEEE Access 2021, 9, 137338–137352. [Google Scholar] [CrossRef]

- Viitanen, M.; Vanne, J.; Hämäläinen, T.D.; Kulmala, A. Low Latency Edge Rendering Scheme for Interactive 360 Degree Virtual Reality Gaming. In Proceedings of the 2018 IEEE 38th International Conference on Distributed Computing Systems (ICDCS), Vienna, Austria, 2–5 July 2018; pp. 1557–1560. [Google Scholar] [CrossRef]

- Shi, J.; Xu, Z.; Niu, W.; Li, D.; Wu, X.; Li, Z.; Zhang, J.; Shen, C.; Wang, G.; Wang, X.; et al. Si-substrate vertical-structure InGaN/GaN micro-LED-based photodetector for beyond 10 Gbps visible light communication. Photonics Res. 2022, 10, 2394–2404. [Google Scholar] [CrossRef]

- Hu, F.; Chen, S.; Li, G.; Zou, P.; Zhang, J.; Hu, J.; Zhang, J.; He, Z.; Yu, S.; Jiang, F.; et al. Si-substrate LEDs with multiple superlattice interlayers for beyond 24 Gbps visible light communication. Photonics Res. 2021, 9, 1581–1591. [Google Scholar] [CrossRef]

- Qi, Y.; Li, J.; Wei, C.; Wu, B. Free-space optical stealth communication based on wide-band spontaneous emission. Opt. Contin. 2022, 1, 2298–2307. [Google Scholar] [CrossRef]

- Li, W.; Yu, J.; Wang, Y.; Wang, F.; Zhu, B.; Zhao, L.; Zhou, W.; Yu, J.; Zhao, F. OFDM-PS-256QAM signal delivery at 47.45 Gb/s over 4.6-kilometers wireless distance at the W band. Opt. Lett. 2022, 47, 4072–4075. [Google Scholar] [CrossRef]

- Shi, S.; Gupta, V.; Hwang, M.; Jana, R. Mobile VR on Edge Cloud: A Latency-Driven Design. In Proceedings of the 10th ACM Multimedia Systems Conference, MMSys ′19, Amherst, MA, USA, 18–21 June 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 222–231. [Google Scholar] [CrossRef]

- Wang, Y.; Yu, T.; Sakaguchi, K. Context-Based MEC Platform for Augmented-Reality Services in 5G Networks. In Proceedings of the 2021 IEEE 94th Vehicular Technology Conference (VTC2021-Fall), Virtual Conference, 27 September–28 October 2021; pp. 1–5. [Google Scholar] [CrossRef]

- Hałaburda, H. Unravelling in two-sided matching markets and similarity of preferences. Games Econ. Behav. 2010, 69, 365–393. [Google Scholar] [CrossRef]

- Vlahovic, S.; Suznjevic, M.; Skorin-Kapov, L. A survey of challenges and methods for Quality of Experience assessment of interactive VR applications. J. Multimodal User Interfaces 2022, 16, 257–291. [Google Scholar] [CrossRef]

- Brunnström, K.; Dima, E.; Qureshi, T.; Johanson, M.; Andersson, M.; Sjöström, M. Latency impact on Quality of Experience in a virtual reality simulator for remote control of machines. Signal Process. Image Commun. 2020, 89, 116005. [Google Scholar] [CrossRef]

- Desai, P.R.; Desai, P.N.; Ajmera, K.D.; Mehta, K. A review paper on oculus rift-a virtual reality headset. arXiv 2014, arXiv:1408.1173. [Google Scholar]

- Hsu, C.H. MEC-Assisted FoV-Aware and QoE-Driven Adaptive 360° Video Streaming for Virtual Reality. In Proceedings of the 2020 16th International Conference on Mobility, Sensing and Networking (MSN), Tokyo, Japan, 17–19 December 2020; pp. 291–298. [Google Scholar] [CrossRef]

- Feng, D.; Lai, L.; Luo, J.; Zhong, Y.; Zheng, C.; Ying, K. Ultra-reliable and low-latency communications: Applications, opportunities and challenges. Sci. China Inf. Sci. 2021, 64, 1–12. [Google Scholar] [CrossRef]

- Chen, M.; Dreibholz, T.; Zhou, X.; Yang, X. Improvement and implementation of a multi-path management algorithm based on MPTCP. In Proceedings of the 2020 IEEE 45th Conference on Local Computer Networks (LCN), Sydney, Australia, 16–19 November 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 134–143. [Google Scholar]

- Milovanovic, D.; Bojkovic, Z.; Indoonundon, M.; Fowdur, T.P. 5G Low-latency communication in Virtual Reality services: Performance requirements and promising solutions. WSEAS Trans. Commun. 2021, 20, 77–81. [Google Scholar] [CrossRef]

- Coutinho, R.W.; Boukerche, A. Design of Edge Computing for 5G-Enabled Tactile Internet-Based Industrial Applications. IEEE Commun. Mag. 2022, 60, 60–66. [Google Scholar] [CrossRef]

- Wang, C.; Yu, X.; Xu, L.; Wang, Z.; Wang, W. Multimodal semantic communication accelerated bidirectional caching for 6G MEC. Future Gener. Comput. Syst. 2023, 140, 225–237. [Google Scholar] [CrossRef]

- Lokumarambage, M.; Gowrisetty, V.; Rezaei, H.; Sivalingam, T.; Rajatheva, N.; Fernando, A. Wireless End-to-End Image Transmission System using Semantic Communications. arXiv 2023, arXiv:2302.13721. [Google Scholar]

- Manolova, A.; Tonchev, K.; Poulkov, V.; Dixir, S.; Lindgren, P. Context-aware holographic communication based on semantic knowledge extraction. Wirel. Pers. Commun. 2021, 120, 2307–2319. [Google Scholar] [CrossRef]

- Huang, M.; Zeng, Y.; Chen, L.; Sun, B. Optimisation of mobile intelligent terminal data pre-processing methods for crowd sensing. CAAI Trans. Intell. Technol. 2018, 3, 101–113. [Google Scholar] [CrossRef]

- Bawazir, S.S.; Sofotasios, P.C.; Muhaidat, S.; Al-Hammadi, Y.; Karagiannidis, G.K. Multiple access for visible light communications: Research challenges and future trends. IEEE Access 2018, 6, 26167–26174. [Google Scholar] [CrossRef]

- Almadani, Y.; Plets, D.; Bastiaens, S.; Joseph, W.; Ijaz, M.; Ghassemlooy, Z.; Rajbhandari, S. Visible light communications for industrial applications—Challenges and potentials. Electronics 2020, 9, 2157. [Google Scholar] [CrossRef]

- Huang, C.; Hu, S.; Alexandropoulos, G.C.; Zappone, A.; Yuen, C.; Zhang, R.; Di Renzo, M.; Debbah, M. Holographic MIMO surfaces for 6G wireless networks: Opportunities, challenges, and trends. IEEE Wirel. Commun. 2020, 27, 118–125. [Google Scholar] [CrossRef]

- Zhang, S.; Zhu, D. Towards artificial intelligence enabled 6G: State of the art, challenges, and opportunities. Comput. Netw. 2020, 183, 107556. [Google Scholar] [CrossRef]

- Taleb, T.; Nadir, Z.; Flinck, H.; Song, J. Extremely interactive and low-latency services in 5G and beyond mobile systems. IEEE Commun. Stand. Mag. 2021, 5, 114–119. [Google Scholar] [CrossRef]

- Arulkumaran, K.; Deisenroth, M.P.; Brundage, M.; Bharath, A.A. Deep reinforcement learning: A brief survey. IEEE Signal Process. Mag. 2017, 34, 26–38. [Google Scholar] [CrossRef]

- Hussein, A.; Gaber, M.M.; Elyan, E.; Jayne, C. Imitation learning: A survey of learning methods. ACM Comput. Surv. CSUR 2017, 50, 1–35. [Google Scholar] [CrossRef]

- Bloem, M.; Bambos, N. Ground delay program analytics with behavioral cloning and inverse reinforcement learning. J. Aerosp. Inf. Syst. 2015, 12, 299–313. [Google Scholar] [CrossRef]

- Angjelichinoski, M.; Trillingsgaard, K.F.; Popovski, P. A Statistical Learning Approach to Ultra-Reliable Low Latency Communication. IEEE Trans. Commun. 2019, 67, 5153–5166. [Google Scholar] [CrossRef]

| Acronym | Definition | Acronym | Definition |
|---|---|---|---|
| 5GB | 5G and Beyond | MEMS | Micro-electromechanical systems |
| AR | Augmented reality | MTP | Motion-to-photon |
| AV | Autonomous vehicles | NCC | Normalized cross-correlation |
| DNN | Deep neural network | NLOS | Non-line-of-sight |
| DoF | Degree of freedom | OFDM | Orthogonal frequency-division multiplexing |
| EKF | Extended Kalman filter | OST | Optical see-through |
| eMBB | Enhanced Mobile Broadband | PDCP | Packet Data Convergence Protocol |
| FOV | Field of view | PER | Packet error rate |
| fps | Frames per second | QoE | Quality of experience |
| HARQ | Hybrid automatic repeat request | QoS | Quality of service |
| HDD | Heads down display | RTT | Round-trip time |
| HDR | High dynamic range | SOC | System-on-a-chip |
| HMD | Head-mounted display | TI | Tactile Internet |
| HUD | Heads up display | URLLC | Ultra-reliable low-latency communication |
| IoT | Internet of Things | UWB | Ultra-wideband |
| IMU | Inertial measurement unit | VIO | Visual–inertial odometry |
| KPI | Key performance indicator | VLC | Visible-light communication |
| MAC | Multiply–accumulate | VR | Virtual reality |
| MAR | Mobile augmented reality | WLAN | Wireless local area network |
| MEC | Mobile edge computing | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hazarika, A.; Rahmati, M. Towards an Evolved Immersive Experience: Exploring 5G- and Beyond-Enabled Ultra-Low-Latency Communications for Augmented and Virtual Reality. Sensors 2023, 23, 3682. https://doi.org/10.3390/s23073682
