SS-CPGAN: Self-Supervised Cut-and-Pasting Generative Adversarial Network for Object Segmentation

Abstract

1. Introduction

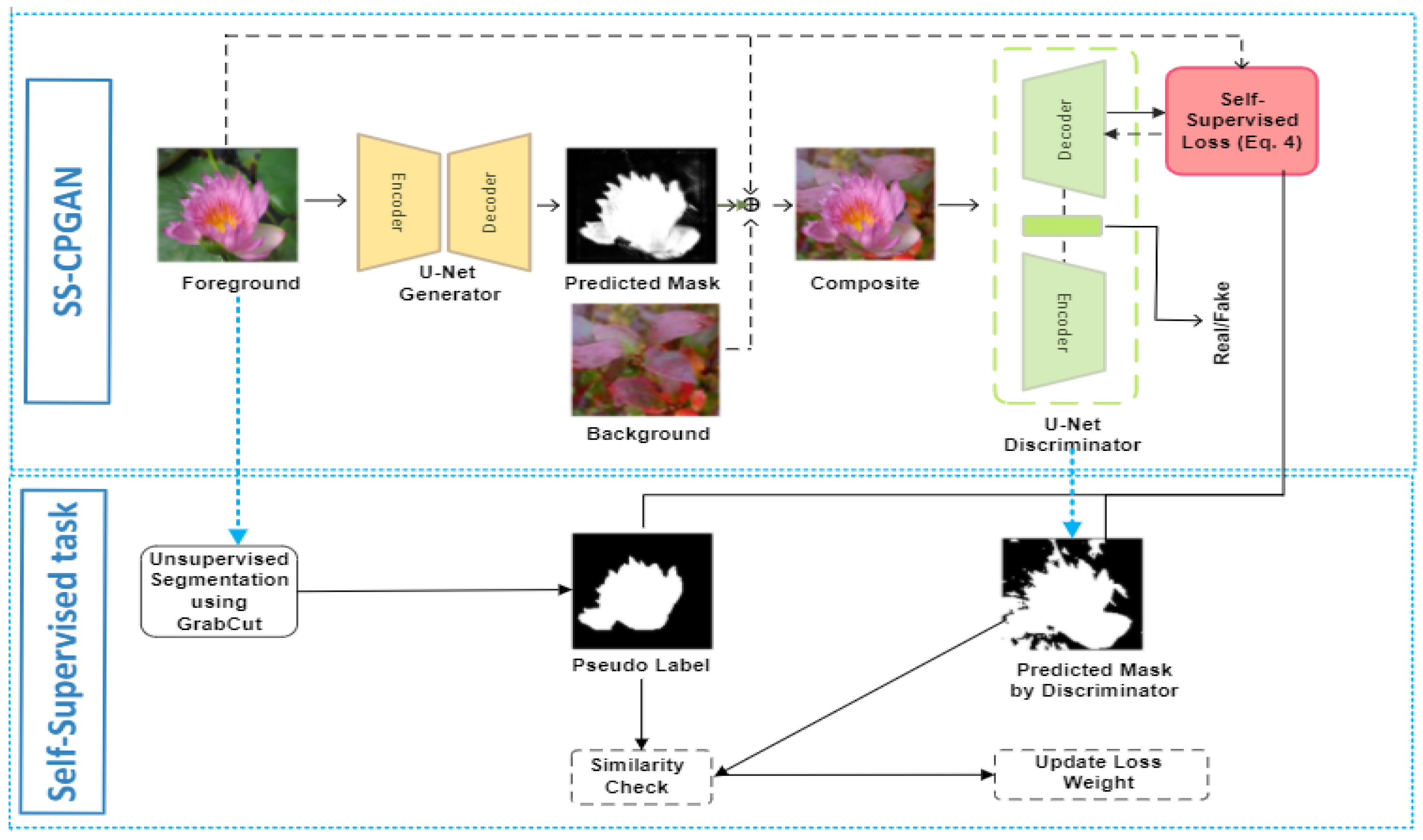

- This paper proposes a novel Self-Supervised Cut-and-Paste GAN (SS-CPGAN), which unifies cut-and-paste adversarial training with a self-supervised segmentation task. SS-CPGAN leverages unlabeled data to improve segmentation performance while generating highly realistic composite images.

- The proposed self-supervised task improves the discriminator’s representation ability by strengthening structure learning through global and local feedback. This provides the generator with additional discriminative signals, which helps it achieve superior results and stabilizes the training process.

- This paper comprehensively evaluates the proposed method on benchmark datasets and compares it with baseline methods.
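The contributions above rest on two mechanisms: compositing a masked foreground onto a background (cut-and-paste), and a discriminator that returns both an image-level (global) and a per-pixel (local) prediction. The NumPy sketch below illustrates both under stated assumptions and is not the authors' implementation. It assumes soft masks in [0, 1], binary cross-entropy losses, and that pixels outside the pasted region are labelled real because they come from a real background image. The names `cut_and_paste`, `bce`, and `discriminator_loss_fake` are hypothetical.

```python
import numpy as np


def cut_and_paste(foreground, background, mask):
    """Alpha-composite a foreground onto a background with a soft mask.

    foreground, background: float arrays of shape (H, W, C) in [0, 1].
    mask: float array of shape (H, W) in [0, 1]; 1 marks object pixels.
    """
    alpha = mask[..., None]  # broadcast the mask over the channel axis
    return alpha * foreground + (1.0 - alpha) * background


def bce(pred, target, eps=1e-7):
    """Mean binary cross-entropy between predicted probabilities and targets."""
    pred = np.clip(pred, eps, 1.0 - eps)  # avoid log(0)
    return float(np.mean(-(target * np.log(pred) + (1.0 - target) * np.log(1.0 - pred))))


def discriminator_loss_fake(global_prob, local_prob, mask):
    """Hypothetical global + local discriminator loss on one composite image.

    global_prob: scalar probability (encoder head) that the image is real.
    local_prob:  (H, W) per-pixel probability map (decoder head).
    mask:        (H, W) paste mask used to build the composite.
    """
    # Global feedback: the composite as a whole is labelled fake (0).
    global_term = bce(np.array([global_prob]), np.array([0.0]))
    # Local feedback (assumption): pasted pixels are fake, untouched background pixels are real.
    local_target = 1.0 - mask
    local_term = bce(local_prob, local_target)
    return global_term + local_term


rng = np.random.default_rng(0)
fg = rng.random((4, 4, 3))
bg = rng.random((4, 4, 3))
m = np.zeros((4, 4))
m[1:3, 1:3] = 1.0  # a 2 x 2 "object" in the centre
composite = cut_and_paste(fg, bg, m)
```

Because the paste mask is known when the composite is built, it can supply per-pixel targets for the discriminator at no labelling cost; this is one way a segmentation-style self-supervised signal can be obtained from unlabeled data.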

2. Related Works

2.1. Unsupervised Object Segmentation via GANs

2.2. Self-Supervised Learning

3. Method

3.1. Adversarial Training

3.2. Encoder–Decoder Discriminator

3.3. Self-Supervised Cut-and-Paste GAN (SS-CPGAN)

4. Experimentation

4.1. Datasets

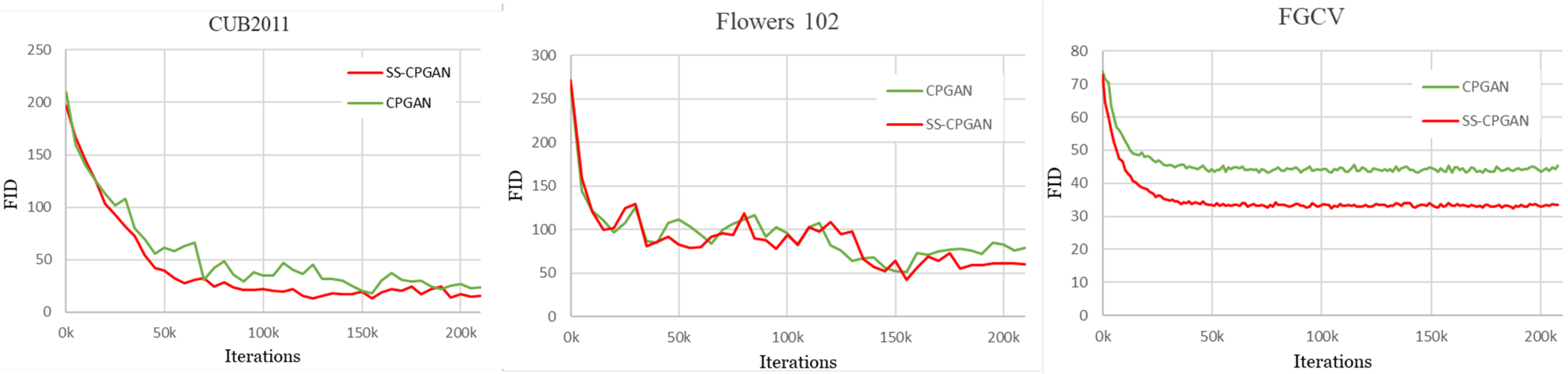

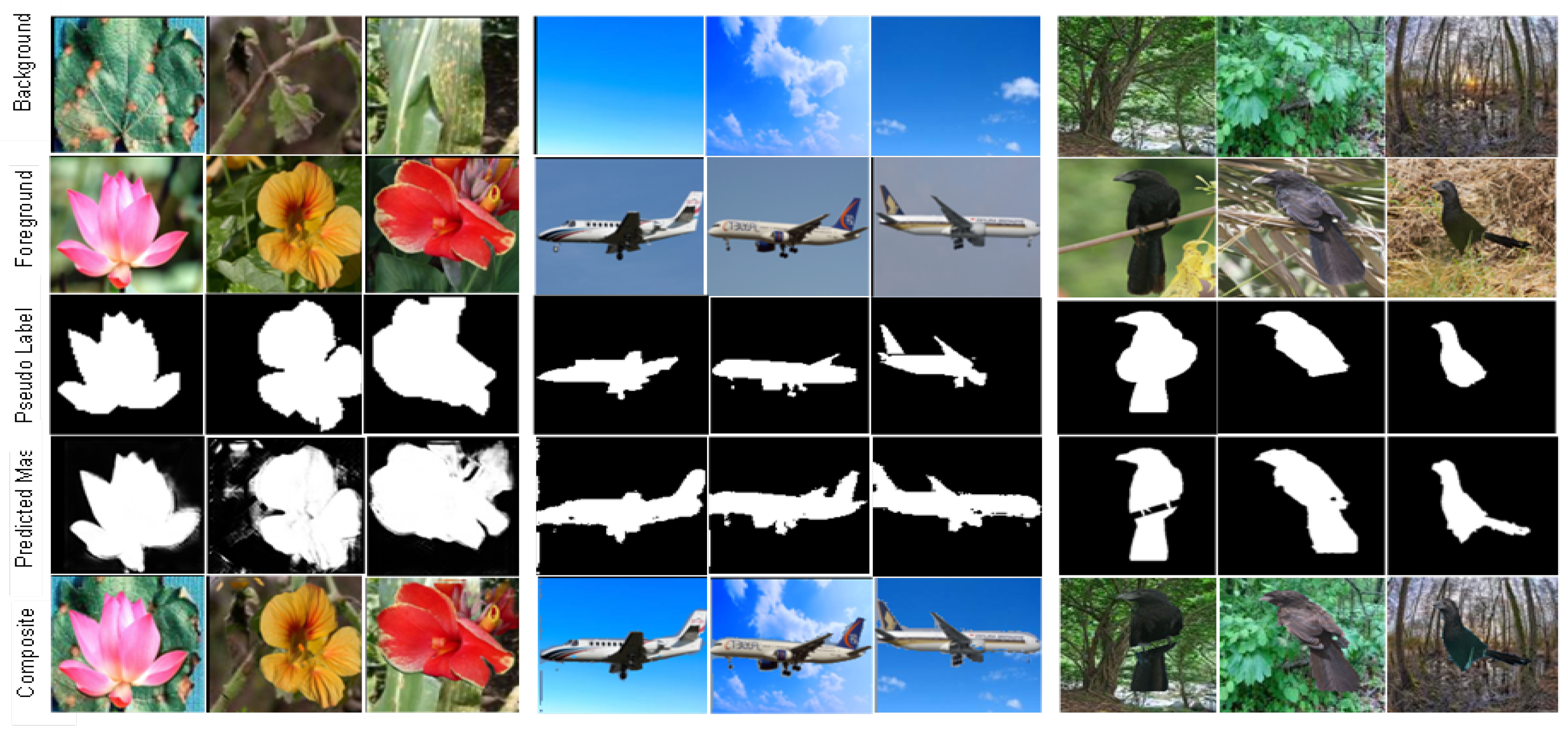

- Caltech-UCSD Birds (CUB) 200-2011 is a frequently used benchmark for unsupervised image segmentation. It consists of 11,788 images from 200 bird species.

- Oxford 102 Flowers consists of 8189 images from 102 flower classes.

- FGVC Aircraft (Airplanes) contains 102 different aircraft model variants with 100 images each. This dataset was originally introduced for fine-grained visual categorization.

- MIT Places2 is a scene-centric dataset with more than 10 million images spanning over 400 unique scene classes. In our experiments, we use only a subset: the rainforest, forest, sky, and swamp classes serve as the background set for the Caltech-UCSD Birds dataset, and the herb garden class serves as the background set for Oxford 102 Flowers.

- Singapore Whole-sky IMaging CATegories (SWIMCAT) contains 784 images in five categories: patterned clouds, clear sky, thick dark clouds, veil clouds, and thick white clouds. We use SWIMCAT as the background set for the FGVC Aircraft dataset.
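The foreground/background pairings listed above can be captured in a small configuration table. The sketch below is illustrative only: the dictionary keys and class spellings are hypothetical identifiers rather than official dataset names, using all five SWIMCAT categories is an assumption, and uniform sampling over background classes is an assumption because the text does not say how backgrounds are drawn.

```python
import random

# Foreground dataset -> (background dataset, background classes), as described above.
# Keys and class spellings are illustrative, not official dataset identifiers.
BACKGROUND_SETS = {
    "CUB-200-2011": ("Places2", ["rainforest", "forest", "sky", "swamp"]),
    "Oxford-102-Flowers": ("Places2", ["herb garden"]),
    # Assumption: all five SWIMCAT categories are used.
    "FGVC-Aircraft": ("SWIMCAT", [
        "patterned clouds", "clear sky", "thick dark clouds",
        "veil clouds", "thick white clouds",
    ]),
}


def sample_background_class(foreground_dataset, rng=random):
    """Pick a (background dataset, class) pair for one training composite.

    Uniform sampling over classes is an assumption, not a documented detail.
    """
    source, classes = BACKGROUND_SETS[foreground_dataset]
    return source, rng.choice(classes)


pair = sample_background_class("FGVC-Aircraft", random.Random(0))
```

Keeping the pairing in one table makes it easy to swap background sets per foreground dataset without touching the training loop.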

4.2. Experimental Settings

4.3. Hyper-Parameter Range

4.4. Results

4.5. Comparison with the State-of-the-Art

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; Volume 27.

- Brock, A.; Donahue, J.; Simonyan, K. Large scale GAN training for high fidelity natural image synthesis. arXiv 2018, arXiv:1809.11096.

- Chaturvedi, K.; Braytee, A.; Vishwakarma, D.K.; Saqib, M.; Mery, D.; Prasad, M. Automated Threat Objects Detection with Synthetic Data for Real-Time X-ray Baggage Inspection. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021; pp. 1–8.

- Chen, M.; Artières, T.; Denoyer, L. Unsupervised object segmentation by redrawing. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Volume 32.

- Bielski, A.; Favaro, P. Emergence of object segmentation in perturbed generative models. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Volume 32.

- Abdal, R.; Zhu, P.; Mitra, N.J.; Wonka, P. Labels4free: Unsupervised segmentation using StyleGAN. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 13970–13979.

- Arandjelović, R.; Zisserman, A. Object discovery with a copy-pasting GAN. arXiv 2019, arXiv:1905.11369.

- Zhang, Q.; Ge, L.; Hensley, S.; Isabel Metternicht, G.; Liu, C.; Zhang, R. PolGAN: A deep-learning-based unsupervised forest height estimation based on the synergy of PolInSAR and LiDAR data. ISPRS J. Photogramm. Remote Sens. 2022, 186, 123–139.

- Zhan, C.; Dai, Z.; Samper, J.; Yin, S.; Ershadnia, R.; Zhang, X.; Wang, Y.; Yang, Z.; Luan, X.; Soltanian, M.R. An integrated inversion framework for heterogeneous aquifer structure identification with single-sample generative adversarial network. J. Hydrol. 2022, 610, 127844.

- Zhou, G.; Song, B.; Liang, P.; Xu, J.; Yue, T. Voids Filling of DEM with Multiattention Generative Adversarial Network Model. Remote Sens. 2022, 14, 1206.

- Li, W.; Tang, Y.M.; Yu, K.M.; To, S. SLC-GAN: An automated myocardial infarction detection model based on generative adversarial networks and convolutional neural networks with single-lead electrocardiogram synthesis. Inf. Sci. 2022, 589, 738–750.

- Fu, L.; Li, J.; Zhou, L.; Ma, Z.; Liu, S.; Lin, Z.; Prasad, M. Utilizing Information from Task-Independent Aspects via GAN-Assisted Knowledge Transfer. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–6.

- Zhang, L.; Li, J.; Huang, T.; Ma, Z.; Lin, Z.; Prasad, M. GAN2C: Information Completion GAN with Dual Consistency Constraints. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–8.

- Chen, T.; Zhai, X.; Ritter, M.; Lucic, M.; Houlsby, N. Self-supervised GANs via auxiliary rotation loss. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 12154–12163.

- Patel, P.; Kumari, N.; Singh, M.; Krishnamurthy, B. LT-GAN: Self-supervised GAN with latent transformation detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 3189–3198.

- Huang, R.; Xu, W.; Lee, T.Y.; Cherian, A.; Wang, Y.; Marks, T. FX-GAN: Self-supervised GAN learning via feature exchange. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 3194–3202.

- Hou, L.; Shen, H.; Cao, Q.; Cheng, X. Self-Supervised GANs with Label Augmentation. In Proceedings of the Advances in Neural Information Processing Systems, Online, 6–12 December 2021; Volume 34.

- Shi, Y.; Xu, X.; Xi, J.; Hu, X.; Hu, D.; Xu, K. Learning to Detect 3D Symmetry From Single-View RGB-D Images With Weak Supervision. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 4882–4896.

- Li, Y.; Che, P.; Liu, C.; Wu, D.; Du, Y. Cross-scene pavement distress detection by a novel transfer learning framework. Comput.-Aided Civ. Infrastruct. Eng. 2021, 36, 1398–1415.

- Liu, Y.; Zhang, Z.; Liu, X.; Wang, L.; Xia, X. Efficient image segmentation based on deep learning for mineral image classification. Adv. Powder Technol. 2021, 32, 3885–3903.

- Dong, C.; Li, Y.; Gong, H.; Chen, M.; Li, J.; Shen, Y.; Yang, M. A Survey of Natural Language Generation. ACM Comput. Surv. 2022, 55, 173.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

- Zhang, H.; Luo, G.; Li, J.; Wang, F.Y. C2FDA: Coarse-to-Fine Domain Adaptation for Traffic Object Detection. IEEE Trans. Intell. Transp. Syst. 2022, 23, 12633–12647.

- Yang, B.; Gu, S.; Zhang, B.; Zhang, T.; Chen, X.; Sun, X.; Chen, D.; Wen, F. Paint by Example: Exemplar-based Image Editing with Diffusion Models. arXiv 2022, arXiv:2211.13227.

- Xie, B.; Li, S.; Lv, F.; Liu, C.H.; Wang, G.; Wu, D. A Collaborative Alignment Framework of Transferable Knowledge Extraction for Unsupervised Domain Adaptation. IEEE Trans. Knowl. Data Eng. 2022. Early Access.

- Dang, W.; Guo, J.; Liu, M.; Liu, S.; Yang, B.; Yin, L.; Zheng, W. A Semi-Supervised Extreme Learning Machine Algorithm Based on the New Weighted Kernel for Machine Smell. Appl. Sci. 2022, 12, 9213.

- Ericsson, L.; Gouk, H.; Loy, C.C.; Hospedales, T.M. Self-Supervised Representation Learning: Introduction, advances, and challenges. IEEE Signal Process. Mag. 2022, 39, 42–62.

- Feng, J.; Zhao, N.; Shang, R.; Zhang, X.; Jiao, L. Self-Supervised Divide-and-Conquer Generative Adversarial Network for Classification of Hyperspectral Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5536517.

- Baykal, G.; Unal, G. DeshuffleGAN: A self-supervised GAN to improve structure learning. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 25–28 October 2020; pp. 708–712.

- Thanh-Tung, H.; Tran, T. Catastrophic forgetting and mode collapse in GANs. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–10.

- Mao, Q.; Lee, H.Y.; Tseng, H.Y.; Ma, S.; Yang, M.H. Mode seeking generative adversarial networks for diverse image synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1429–1437.

- Tran, N.T.; Tran, V.H.; Nguyen, B.N.; Yang, L.; Cheung, N.M.M. Self-supervised GAN: Analysis and improvement with multi-class minimax game. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Volume 32.

- Xie, B.; Li, S.; Li, M.; Liu, C.; Huang, G.; Wang, G. SePiCo: Semantic-Guided Pixel Contrast for Domain Adaptive Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 1–17.

- Yang, D.; Zhu, T.; Wang, S.; Wang, S.; Xiong, Z. LFRSNet: A robust light field semantic segmentation network combining contextual and geometric features. Front. Environ. Sci. 2022, 10, 1443.

- Sheng, H.; Cong, R.; Yang, D.; Chen, R.; Wang, S.; Cui, Z. UrbanLF: A Comprehensive Light Field Dataset for Semantic Segmentation of Urban Scenes. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 7880–7893.

- Chen, Y.; Wei, Y.; Wang, Q.; Chen, F.; Lu, C.; Lei, S. Mapping post-earthquake landslide susceptibility: A U-Net like approach. Remote Sens. 2020, 12, 2767.

- Tran, L.A.; Le, M.H. Robust U-Net-based road lane markings detection for autonomous driving. In Proceedings of the 2019 International Conference on System Science and Engineering (ICSSE), Dong Hoi, Vietnam, 20–21 July 2019; pp. 62–66.

- Huang, H.; Lin, L.; Tong, R.; Hu, H.; Zhang, Q.; Iwamoto, Y.; Han, X.; Chen, Y.W.; Wu, J. UNet 3+: A Full-Scale Connected UNet for Medical Image Segmentation. In Proceedings of the 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 1055–1059.

- Welinder, P.; Branson, S.; Mita, T.; Wah, C.; Schroff, F.; Belongie, S.; Perona, P. Caltech-UCSD Birds 200. 2010. Available online: https://www.vision.caltech.edu/datasets/cub_200_2011/ (accessed on 4 November 2020).

- Nilsback, M.E.; Zisserman, A. Automated Flower Classification over a Large Number of Classes. 2008. Available online: https://www.robots.ox.ac.uk/~vgg/data/flowers/102/ (accessed on 4 November 2020).

- Maji, S.; Rahtu, E.; Kannala, J.; Blaschko, M.; Vedaldi, A. Fine-Grained Visual Classification of Aircraft. 2013. Available online: https://www.robots.ox.ac.uk/~vgg/data/fgvc-aircraft/ (accessed on 4 November 2020).

- Zhou, B.; Lapedriza, A.; Khosla, A.; Oliva, A.; Torralba, A. Places: A 10 Million Image Database for Scene Recognition. 2017. Available online: http://places2.csail.mit.edu/download.html (accessed on 4 November 2020).

- Dev, S.; Lee, Y.H.; Winkler, S. Categorization of Cloud Image Patches Using an Improved Texton-Based Approach. 2015. Available online: https://stefan.winkler.site/Publications/icip2015cat.pdf (accessed on 4 November 2020).

- Rother, C.; Kolmogorov, V.; Blake, A. “GrabCut” interactive foreground extraction using iterated graph cuts. ACM Trans. Graph. (TOG) 2004, 23, 309–314.

- Zhang, H.; Xu, T.; Li, H.; Zhang, S.; Wang, X.; Huang, X.; Metaxas, D.N. StackGAN++: Realistic image synthesis with stacked generative adversarial networks. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 1947–1962.

- Benny, Y.; Wolf, L. OneGAN: Simultaneous unsupervised learning of conditional image generation, foreground segmentation, and fine-grained clustering. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 514–530.

- Yang, J.; Kannan, A.; Batra, D.; Parikh, D. LR-GAN: Layered recursive generative adversarial networks for image generation. arXiv 2017, arXiv:1703.01560.

- Yang, Y.; Bilen, H.; Zou, Q.; Cheung, W.Y.; Ji, X. Unsupervised Foreground-Background Segmentation with Equivariant Layered GANs. arXiv 2021, arXiv:2104.00483.

- Singh, K.K.; Ojha, U.; Lee, Y.J. FineGAN: Unsupervised hierarchical disentanglement for fine-grained object generation and discovery. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–19 June 2019; pp. 6490–6499.

- Mo, S.; Kang, H.; Sohn, K.; Li, C.L.; Shin, J. Object-aware contrastive learning for debiased scene representation. In Proceedings of the Advances in Neural Information Processing Systems, Online, 6–12 December 2021; Volume 34.

- Kim, W.; Kanezaki, A.; Tanaka, M. Unsupervised learning of image segmentation based on differentiable feature clustering. IEEE Trans. Image Process. 2020, 29, 8055–8068.

- Ji, X.; Henriques, J.F.; Vedaldi, A. Invariant information clustering for unsupervised image classification and segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9865–9874.

- Melas-Kyriazi, L.; Rupprecht, C.; Laina, I.; Vedaldi, A. Finding an unsupervised image segmenter in each of your deep generative models. arXiv 2021, arXiv:2105.08127.

- Voynov, A.; Morozov, S.; Babenko, A. Object segmentation without labels with large-scale generative models. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 10596–10606.

SSIM ↑ for different values of λ

| λ | 0.1 | 0.5 | 1 | 10 | 100 |
|---|---|---|---|---|---|
| SSIM | 0.657 | 0.834 | 0.450 | 0.421 | 0.125 |
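To make the metric in the λ sweep concrete, the sketch below evaluates the SSIM expression from whole-image means, variances, and covariance. This is a deliberate simplification: standard SSIM implementations average the same expression over local sliding windows, and the text does not state which SSIM implementation or constants were used (the common defaults K1 = 0.01 and K2 = 0.03 are assumed here). The function name `global_ssim` is hypothetical. The last lines simply pick the λ with the highest SSIM from the values reported in the table.

```python
import numpy as np


def global_ssim(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """SSIM computed from whole-image statistics (a single global window).

    Standard SSIM averages this expression over local windows; using one
    global window is a simplification for illustration.
    """
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    c1 = (k1 * data_range) ** 2  # stabilises the luminance term
    c2 = (k2 * data_range) ** 2  # stabilises the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(num / den)


# SSIM values reported in the table; choose the lambda with the highest score.
lambdas = [0.1, 0.5, 1, 10, 100]
ssim_scores = [0.657, 0.834, 0.450, 0.421, 0.125]
best_lambda = max(zip(lambdas, ssim_scores), key=lambda pair: pair[1])[0]
```

With the reported values, the selection returns λ = 0.5, the column with SSIM 0.834.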

FID ↓

| Method | Image Size | Caltech-UCSD Birds 200 | FGVC Aircraft | Oxford 102 Flowers |
|---|---|---|---|---|
| CPGAN | 64 × 64 | 26.724 | 43.353 | 81.724 |
| CPGAN | 128 × 128 | 23.002 | 39.674 | 44.825 |
| CPGAN | 256 × 256 | 21.346 | 44.825 | 51.218 |
| SS-CPGAN | 64 × 64 | 22.342 | 39.578 | 63.343 |
| SS-CPGAN | 128 × 128 | 15.634 | 37.756 | 54.982 |
| SS-CPGAN | 256 × 256 | 13.113 | 33.149 | 49.181 |
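FID compares Gaussian fits to feature statistics of real and generated images: the squared distance between the means plus a trace term over the covariances. The NumPy sketch below computes only that Fréchet distance; extracting the features (conventionally Inception-v3 pooling features) is out of scope. Obtaining the trace of the matrix square root from eigenvalues is a choice made here in place of the `scipy.linalg.sqrtm` call many implementations use. The name `frechet_distance` is hypothetical.

```python
import numpy as np


def frechet_distance(mu1, sigma1, mu2, sigma2):
    """Frechet distance between Gaussians N(mu1, sigma1) and N(mu2, sigma2).

    d^2 = ||mu1 - mu2||^2 + Tr(sigma1 + sigma2 - 2 (sigma1 sigma2)^{1/2})
    """
    mu1, mu2 = np.asarray(mu1, float), np.asarray(mu2, float)
    sigma1, sigma2 = np.asarray(sigma1, float), np.asarray(sigma2, float)
    diff = mu1 - mu2
    # For PSD covariances, sigma1 @ sigma2 has the same eigenvalues as the PSD
    # matrix sigma1^{1/2} sigma2 sigma1^{1/2}, so they are real and non-negative;
    # the trace of the square root is the sum of their square roots.
    eigvals = np.linalg.eigvals(sigma1 @ sigma2)
    tr_covmean = np.sum(np.sqrt(np.clip(eigvals.real, 0.0, None)))
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_covmean)


# One-dimensional check: N(0, 1) vs N(0, 4) gives 0 + 1 + 4 - 2 * 2 = 1.
d_1d = frechet_distance([0.0], [[1.0]], [0.0], [[4.0]])
```

Lower values mean the generated feature distribution is closer to the real one, which is why the table marks FID with ↓.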

mIoU ↑

| Setting | Image Size | Caltech-UCSD Birds 200 | Oxford 102 Flowers |
|---|---|---|---|
| w/o Self-Supervision | 64 × 64 | 0.537 | 0.632 |
| w/o Self-Supervision | 128 × 128 | 0.492 | 0.674 |
| w/o Self-Supervision | 256 × 256 | 0.484 | 0.779 |
| w/ Self-Supervision | 64 × 64 | 0.571 | 0.625 |
| w/ Self-Supervision | 128 × 128 | 0.543 | 0.719 |
| w/ Self-Supervision | 256 × 256 | 0.518 | 0.791 |
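The mIoU columns can be made concrete with a short sketch. The text here does not define the exact evaluation protocol, so the code assumes one common convention for foreground/background segmentation: the IoU of the foreground class and of the background class are averaged for a single image, and a dataset score would then be the mean over images. The function name `binary_miou` and the empty-class convention are hypothetical choices.

```python
import numpy as np


def binary_miou(pred, gt):
    """Mean IoU over the foreground and background classes of binary masks."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    ious = []
    for p, g in ((pred, gt), (~pred, ~gt)):  # foreground, then background
        union = np.logical_or(p, g).sum()
        inter = np.logical_and(p, g).sum()
        # Convention (assumption): a class absent from both masks scores 1.0.
        ious.append(1.0 if union == 0 else inter / union)
    return float(np.mean(ious))


# Foreground IoU = 1/2, background IoU = 2/3, so mIoU = 7/12.
score = binary_miou([1, 1, 0, 0], [1, 0, 0, 0])
```

Averaging both classes rewards correct background pixels as well, so scores under this convention are not directly comparable to foreground-only IoU.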

| Method | FID |
|---|---|
| StackGANv2 | 21.4 |
| FineGAN | 23.0 |
| OneGAN | 20.5 |
| LR-GAN | 34.91 |
| ELGAN | 15.7 |
| SS-CPGAN | 13.11 |

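| Dataset | Method | mIoU |
|---|---|---|
| Caltech-UCSD Birds 200 | PerturbGAN | 0.380 |
| Caltech-UCSD Birds 200 | ContraCAM | 0.460 |
| Caltech-UCSD Birds 200 | ReDO | 0.426 |
| Caltech-UCSD Birds 200 | UISB | 0.442 |
| Caltech-UCSD Birds 200 | IIC-seg | 0.365 |
| Caltech-UCSD Birds 200 | SS-CPGAN | 0.571 |
| Oxford 102 Flowers | ReDO | 0.764 |
| Oxford 102 Flowers | Melas-Kyriazi et al. | 0.541 |
| Oxford 102 Flowers | Voynov et al. | 0.540 |
| Oxford 102 Flowers | SS-CPGAN | 0.791 |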

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chaturvedi, K.; Braytee, A.; Li, J.; Prasad, M. SS-CPGAN: Self-Supervised Cut-and-Pasting Generative Adversarial Network for Object Segmentation. Sensors 2023, 23, 3649. https://doi.org/10.3390/s23073649
