Abstract

Home appliances are considered to account for a large portion of smart homes’ energy consumption, owing to the abundant use of IoT devices. Various home appliances, such as heaters, dishwashers, and vacuum cleaners, are used every day, and proper control of these appliances is thought to reduce energy use significantly. For this purpose, optimization techniques focusing mainly on energy reduction are used. Current optimization techniques reduce energy use to some extent but overlook user convenience, which was the main goal of introducing home appliances. Therefore, there is a need for an optimization method that effectively addresses the trade-off between energy saving and user convenience. Such techniques should also include weather metrics other than temperature and humidity to effectively optimize the energy cost of maintaining the user’s desired indoor setting in a smart home. This research presents an optimization technique that addresses the trade-off between energy saving and user convenience and incorporates air pressure, dew point, and wind speed. To test the optimization, a hybrid approach utilizing GWO and PSO was modeled. This work also enables proactive energy optimization using appliance energy prediction, for which an LSTM model was designed. Through predictions and optimized control, smart home appliances can be controlled proactively and effectively. First, we evaluated the RMSE score of the predictive model and found that the proposed model yields low RMSE values. Second, we conducted several simulations and found that the proposed optimization reduces the energy cost of the appliance control needed to regulate the desired indoor setting of the smart home. The energy cost reductions were evaluated over seasonal and monthly data patterns for verification. Hence, the proposed work is considered a better candidate solution for proactively optimizing the energy of smart homes.

1. Introduction

Home appliances are considered to account for a large portion of smart home energy usage because appliances have increased in number in recent years. This is due to the introduction of many new home appliances that help consumers enjoy a comfortable lifestyle [1]. In fact, this comfort comes with the costs associated with home appliances used to support modern and ever-evolving technologies. Some of these IoT devices [2] are dishwashers, refrigerators, microwaves, and smart cars [3].

Due to the increase in appliances, energy efficiency has become a major challenge for many organizations. With an ever-growing population, increasing demand for energy, and the need to reduce energy consumption in smart homes, it is increasingly important to find ways to make energy consumption more efficient and sustainable. However, this can be challenging due to the complexity of the processes and systems involved. Using data to predict energy efficiency can be an effective solution to this problem. Data can be used to address many different types of inefficiencies.

The IoT [4] in the energy field is rapidly expanding as it becomes more affordable and efficient. Recently, some startups have focused on developing small home-appliance monitors to assist users at home. These devices can monitor weather conditions, appliance conditions, and other environmental information and send the data to a monitoring server. This technology enables energy systems to monitor smart homes without having to make house calls. In the future, IoT devices will enable users to deliver the remote control of energy settings around the world. This will allow us to create an eco-friendly environment.

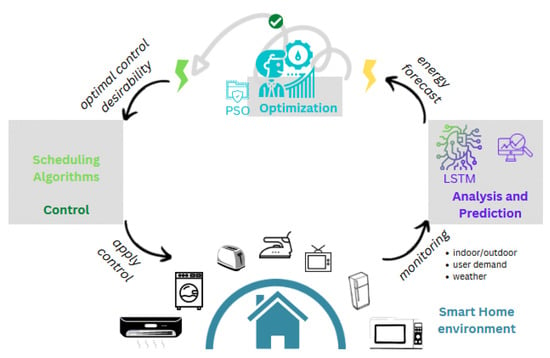

CPS systems [5] are based on prediction, analysis, optimization, control, and scheduling, and are considered to be the solution to this domain [6]. An exemplary CPS architecture shown in Figure 1 is needed because of the diversity and variation involved in area and user-dependent energy data. In such systems, many smart home appliances could be monitored remotely using technologies such as wearables and smart-home devices. In addition, if intelligence is embedded into these movable devices/appliances [7,8], it is possible to enhance the performances of energy systems in many ways, e.g., energy optimization based on real-time monitoring. This could also help cut costs in smart homes by reducing the requirement for the user to stay at home for controlling the home appliances.

Figure 1.

Complex Problem-Solving (CPS) environment: analysis, prediction, optimization, scheduling, and control.

The CPS problems are divided into layers, such that there is a clear relationship between each entity of a layer. This enables an open design where dependency is removed by following and employing a service-oriented architecture. The main components involved in an energy-optimization system that are meant to solve complex problems are the predictor, optimizer, scheduler, and controller, which can be visualized in Figure 1.

Monitoring [9] of smart home and healthcare appliances could be done through the use of technologies such as wearables and smart devices [10], as depicted in Figure 1. It enables the energy systems to get more insights into the data patterns, which could help in planning the future efficiently. This could also help cut costs in smart homes by reducing the requirement for the user to stay at home by controlling the home appliances, thereby reducing the other complications associated with the optimization of smart home energy control systems. It could also help maintain a healthy environment with fewer errors and streamline administrative tasks. In this work, we have used the appliance energy data [11,12] and the information related to energy-system considerations [13,14].

Optimization is an important part of saving resources [15]. Regarding energy, a variety of optimization methods can be performed by tweaking a few settings in the home, office, or hospital [16]. Some of these settings are manually adjusted, such as controlling temperature and humidity by carefully switching appliances on and off at the correct times of the day. For this purpose, optimization techniques are used in machine learning techniques to train the model.

Current optimization techniques somewhat reduce energy usage but overlook user convenience, which is the main goal of introducing home appliances. Therefore, there is a need for an optimization method that effectively addresses the trade-off between energy saving and user convenience. In current optimization techniques, the inclusion of weather metrics other than temperature and humidity is also needed to effectively optimize the energy cost of controlling user-desired room settings. This research involves work for an optimization technique that addresses the trade-off between energy saving and user convenience and includes air pressure, dew point, and wind speed. To test the optimization, a hybrid approach utilizing GWO [17] and PSO [18] was modeled for this purpose. In addition, this work involves using appliance energy prediction [19] to enable proactive energy optimization. The LSTM model was designed for energy prediction. Both prediction and optimized control allow the proactive and effective control of smart home appliances, and can be evaluated through the results provided.

The optimization layer shown in Figure 1 refers to finding an optimal solution to the problem under consideration. This can be related to minimizing or maximizing the target variable. For this, an objective function was designed, implemented, and evaluated, which helps in selecting the best candidate algorithm for optimization. It allows the system to make decisions about system scheduling and control so that the problem is solved accordingly. This layer, i.e., optimization, uses many algorithmic techniques, such as PSO, GWO, GA, and Bayesian optimization.

Prediction is a way of knowing the future. From Figure 1, provision of better control over energy systems can be realized in the domain of CPS. Making better use of data in this way can also help to improve the reliability and availability of the system, which can be an important benefit in environments where resources may be limited. Being able to predict [20] and prevent many problems ahead of time can make it easier to implement changes and improvements as necessary, saving time and increasing productivity overall.

Many companies are already using predictive data analytics to improve their energy efficiency and reduce costs. IBM is an example of a company that is applying this approach in many areas, including energy management systems, supply-chain optimization, and urban infrastructure management. IBM has worked with a wide range of clients, including large government agencies and private companies, such as Walmart and FedEx. The company has also developed a number of its products that use predictive data analytics to improve energy efficiency and reduce costs.

The prediction layer is responsible for providing relevant predictions about the environmental metrics involved in the system. For this, machine learning techniques are used, e.g., LSTM [21,22,23]. For data patterns that change over time, RNN techniques are used. Similarly, many techniques can be applied according to data attributes.

Scheduling [24] the appliances is very important, as it may affect the energy consumption and device performance. Thus, it must be carefully planned. The information being used in decisions for control devices has turned out to be very handy, as it provides knowledge and the state of the environment. This includes both operational data and information about existing systems, and data about current patterns of energy use and information about future requirements. When used effectively, data can be used to predict and prevent many of these problems. This can help to improve efficiency and reduce operating costs. It can also help reduce the environmental impact of operations by improving the sustainability of the system and reducing the use of energy resources in an optimized way. For example, data collected during the testing of new equipment can be used to create a system model that can be used to accurately predict the performance of new equipment when it is installed into an existing system.

Scheduling and controlling [25,26] are the next steps after finding the best solution to the identified complex problem and can be visualized in Figure 1. These techniques give us the opportunity to apply the optimal appliance configurations and their settings to save energy in smart homes. These steps allow the system to implement optimal solutions and control the environment, such that the system’s behavior can be analyzed before and after. To schedule tasks and control behavior, an IoT “app store” is used, in which tasks are mapped, assigned execution times, and deployed accordingly.

In addition, machine learning in smart applications [27] can be used to process and analyze vast amounts of data and improve energy utilization by taking control of the appliances and optimizing smart homes’ energy use. The advancement in technology has also made it possible to deploy AI-powered robots [28] in the operating room to perform control tasks while allowing the user to operate remotely using a control appliance attached to the robot. These movable devices can also assist with monitoring vital signs while functioning so that the time to change control parameters of the smart home can respond proactively to ensure the comfort of the user and optimization of energy at the same time.

This paper aims to provide a solution to the problem of maximizing the energy efficiency of a particular home through advanced machine learning algorithms. To attain this goal, we first discuss the various models that are used to build energy-optimization models for homes and the challenges that arise when implementing these models in the real world. We also introduce techniques that can be used to optimize energy usage based on machine learning. Thereafter, we compare the performances of these techniques and finally present our results. Following this, cost analyses are performed for identifying the monetary costs associated with achieving optimal energy efficiency from a particular home. Finally, we discuss these findings in the context of energy saving by providing energy management recommendations that will minimize energy costs and also improve the efficiency of these homes. This paper has a list of contributions to this domain of research.

- Weather data analytics: We found the importance of each feature and estimated its impact on the target variable, i.e., appliance energy.

- Energy-optimization model: We modeled the energy-optimization model not only based on temperature and humidity but several other weather factors too, i.e., air pressure, dew point, and wind speed. We integrated their weight factors, which were calculated based on the importance of each feature.

- Energy-forecasting model: It was modeled to include the weight factors of each feature in LSTM. It was evaluated over different months, seasons, etc.

In Section 2, insights about the existing literature are provided. The shortcomings of current mechanisms are highlighted, which the proposal aims to address through a proactive approach. In Section 3, details of the proposed system are provided, in which the prediction model and optimization algorithm are formulated. In Section 4, details about the proposed system’s implementation, experimentation, and evaluation are provided. The dataset, i.e., AEP, is also explained. Finally, the concluding remarks are made and highlighted in Section 6.

2. Literature Review

Home appliances are thought to account for a large portion of the energy consumption of smart homes. This is due to the abundant use of IoT devices [29]. Various home appliances, such as heaters, dishwashers, and vacuum cleaners, are used every day. It is believed that a significant amount of energy use can be reduced through proper control of such home appliances. For this purpose, optimization [30] techniques focusing mainly on energy reduction are used. In addition, predictive techniques [21,22,23] are also used for the proactive control in smart homes. This section highlights the shortcomings of existing studies and then briefs the reader on the challenges.

For the optimization of energy, several works [30,31,32,33] have been proposed. In these works, different energy saving mechanisms and techniques have been described, proposed, and evaluated through the provision of justifiable results.

One author proposed a PSO-based optimization technique [31] that considers temperature and humidity metrics. This work also evaluates the proposed technique and tends to save a justifiable amount of energy. However, it does not consider other weather metrics such as air pressure, dew point, and wind speed. Considering these metrics is important because the cost of energy varies by season and depends on multiple factors, which could have a huge impact on the scope of the optimization technique. Based on this understanding, we enhanced the optimization technique by utilizing a hybrid optimization technique, i.e., PSO-GWO.

Various optimization models [30,31] have been proposed, and the best model was proposed to improve the optimization performance. This includes the comfort factors in the optimization procedure. This involves the addition of user-desired temperature and humidity. By following this approach, the trade-off between user comfort and energy-cost saving could be made. However, the evaluation of this optimization model also does not consider other weather metrics.

The authors from the articles [30,31] proposed a system consisting of an optimal technique to save energy of appliances in the smart-home environment. They utilized the PSO-based optimization technique to save in the energy use of appliances. This includes the use of two parameters for the overall house. However, there could be an enhancement in terms of bringing proactiveness to this process. This will not only enable before-hand optimal control of smart-home energy, but also enhance the monitoring systems of smart homes’ alarm notifications. The algorithms can be utilized by advanced energy systems [34,35] to manage the energy efficiently. Based on these studies, it has been found that the predictions enable proactiveness in controlling the room’s conditions according to the user-desired settings. The proposed solution enables this by providing energy optimization for the energy forecasts instead, which not only enables proactiveness, but allows the system to be prepared for alerting the consumer.

The authors of the article [32] proposed a system comprising PSO-based ensembled models for the energy forecast. This includes the feature-selection approach for enhancing the forecast accuracy. However, the analysis could be improved by extending it to different seasons, months, areas, weekends, weekdays, and times of the day. To this end, our proposal tends to include this evaluation in the energy forecasts by considering the weight factor of each feature, rather than just selecting the features. This evaluation was required to enhance the prediction model’s diversity. We performed a thorough examination of the data comprising 29 features, of which a few were selected based on the co-variance with appliance energy consumption. By doing so, we utilized these weight factors in the optimization model as well. The predictions considering the weight factors allowed us to improve the RMSE score.

Energy optimization can be achieved in many ways [36]. However, this paper focuses on analyzing the data and finding the importance of each feature for the optimization of energy use using machine learning techniques, and on minimizing the cost of energy consumption required for controlling the appliances. To achieve this, it is necessary to utilize certain machine learning techniques, such as PSO and GWO, that can be used for building the model to achieve optimal efficiency by altering the energy demand in a particular home. The model trained with these techniques was then validated using the data [11,12] in different cases to achieve the optimal level of energy conservation in a home. In addition, energy forecasting is also discussed to give an idea of the better control it provides, to save the energy in advance.

For the prediction of appliance energy, existing forecasting models [21,22,23] take into account the latest techniques. In these works, time-series forecasting methodologies, mechanisms, and techniques are described, proposed, and evaluated through the provision of justifiable results.

In [21], an LSTM ensemble network was trained to learn the adaptive weighting mechanism. Some techniques [22] utilize deep learning to improve the performance, whereas some of them [23] make short-term forecasts. However, the seasonal factor is missing in these works, which could be accounted for in the training process after a thorough analysis of seasonal data. Based on this analysis, they could be assigned weights varying over the season, month, weekdays, weekends, etc. We aim to improve the accuracy of the forecasting model [37] in the proposed system by evaluating the RMSE score of our model applied over the given datasets described in Section 4.2.

Overall, the proposed system tends to enhance the optimization for better control of energy use in smart homes. Firstly, for better control, a prediction model was designed. This was designed in such a way that it performs better in various weather conditions due to the consideration of weight factors of the selected features. This includes the addition of other weather metric weight factors for air pressure, dew point, and wind speed. Secondly, it enables proactiveness in the optimization problem of energy systems in smart homes. In addition, it includes evaluation factors that make the prediction/forecast model diverse in nature and applicable to many regions, which is the secondary objective of this work. Conclusively, according to the comparison of our results with the current literature [30,31,33], the other above-mentioned weather factors play important roles in saving energy better, as they also impact energy consumption due to the fact that each season’s energy consumption is different, thereby highlighting the requirement of such an optimization technique to cater to them. The proposed system’s results regarding prediction and optimization together show that it outperforms the existing energy-optimization systems and enables better control over smart-home energy use.

3. Proposed System

This section is divided into three parts, i.e., preprocessing, prediction, and optimization. Details on preprocessing the data and extracting the feature weight factors are described in Section 3.1. A description of appliance energy forecasting can be found in Section 3.2. The technique for controlling the appliances to save energy in an optimal way is explained in Section 3.3.

3.1. Preprocessing

In this section, we describe the steps we followed to find the importance of features and to calculate the weights representing the impacts of features. This was required to enhance the performances of prediction and optimization models. In prediction, the weights were passed as an input during the training process, whereas the weights were applied while calculating the energy cost to control the appliances in the optimization simulations.

The preprocessing shown in Section 4.2 included the removal of nulls, zeros, and unnecessary features, and the evaluation of the importance of each feature against the target variable, i.e., appliance energy. These actions are required for enhancing the performance of the proposed time-series forecasting model.

Table 1 describes the variables used for scaling the dataset.

Table 1.

Data and variables used in scaling.

Firstly, the unnecessary features, i.e., those which do not have any impact or may contain redundant data, must be removed. For this purpose, we utilized the covariance matrix values. Based on this analysis, some of the temperature and humidity features were removed from the dataset. The lights column was also removed due to the abundance of zeros. All the filters were applied after thorough analysis, based on the findings regarding correlation, zeros, nulls, etc.

Secondly, scaling dataset features is a required step [38] before training the model. This is because the existing models perform well over a smaller range of numbers. From the perspective of optimizers, the learning rate can be determined more easily, which provides a convenient environment for experimentation. Standard and min-max scalers are widely used for this purpose. Equation (1) depicts the scaling of the dataset, a preprocessing step required before training. $x'$ refers to a value of the scaled dataset. It is obtained by applying min-max scaling to bring the data into a bounded range, i.e., between −1 and 1. The scalar value $x$ refers to a value from a feature vector $X$; $X_{min}$ and $X_{max}$ refer to the minimum and maximum scalar values from feature vector $X$, respectively. With the use of Equation (1), the dataset’s features are scaled as follows:

$$x' = 2\,\frac{x - X_{min}}{X_{max} - X_{min}} - 1 \tag{1}$$
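The min-max scaling into the range −1 to 1 described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation; the function name `min_max_scale` and the sample temperatures are assumptions introduced here.

```python
def min_max_scale(values, low=-1.0, high=1.0):
    """Linearly rescale a feature vector so its minimum maps to `low` and its maximum to `high`."""
    v_min, v_max = min(values), max(values)
    if v_max == v_min:
        # A constant feature cannot be rescaled; map it to the middle of the range.
        return [(low + high) / 2.0 for _ in values]
    span = v_max - v_min
    return [low + (high - low) * (v - v_min) / span for v in values]


# Hypothetical indoor temperatures in degrees Celsius (not taken from the dataset).
temperatures = [18.0, 20.0, 22.0, 26.0]
scaled = min_max_scale(temperatures)
print(scaled)
```

In a scikit-learn based pipeline, `MinMaxScaler(feature_range=(-1, 1))` performs the same per-feature transformation.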

Thirdly, weight factors of each feature are calculated. This is accomplished by applying the scaled data to multiple models for the selection of the best model configurations and training settings.

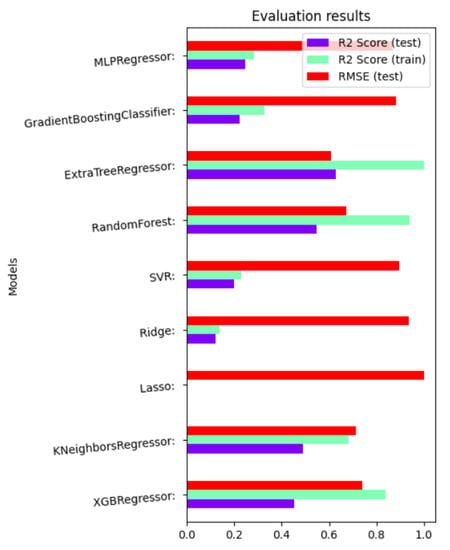

To get the best hyper-parameter [11] configurations for a model, we applied multiple models, e.g., MLP regressor, GBC, XTR, RF, SVR, Ridge, Lasso, k-NN regressor, and XGB regressor. Among all these models, the extra tree regressor produced the best results, with the lowest RMSE score and the highest R2 score, as shown in Figure 2. Based on these results, we applied the Boruta algorithm and GSCV to get the weight coefficients, which refer to the importance of each feature represented by a scalar value. The weight coefficients can be retrieved from the fitted model. The importance values are then scaled to the range between 0 and 1 and used in the prediction and optimization problems.

Figure 2.

Root Mean Square Error (RMSE) and R2 scores of multiple models.
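The two scores used to compare the candidate models can be computed as in the following sketch. The energy readings and the two sets of predictions are hypothetical values chosen for illustration, and the names `model_a` and `model_b` do not correspond to any model evaluated in this work.

```python
import math


def rmse(actual, predicted):
    """Root mean square error between two equally long sequences."""
    n = len(actual)
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / n)


def r2_score(actual, predicted):
    """Coefficient of determination: 1 minus residual over total sum of squares."""
    mean_a = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_a) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


# Hypothetical appliance energy readings (Wh) and predictions from two models.
actual = [60.0, 50.0, 70.0, 80.0]
predictions = {
    "model_a": [58.0, 52.0, 69.0, 83.0],
    "model_b": [70.0, 40.0, 60.0, 90.0],
}

scores = {name: (rmse(actual, p), r2_score(actual, p)) for name, p in predictions.items()}
# The preferred model has the lowest RMSE (and, here, also the highest R2).
best_model = min(scores, key=lambda name: scores[name][0])
print(best_model, scores[best_model])
```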

The Boruta algorithm was used to identify the feature importance, i.e., weight coefficients. Based on all features’ importance values, the weight factor of each was calculated and assigned to each feature so that they could be applied in prediction and optimization mechanisms for better performance.
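As a minimal sketch of how raw importance values could be turned into weight factors between 0 and 1, the example below divides each importance by the total. The normalization by the sum is an assumption made here (the text only states that the values are scaled to the range 0 to 1), and the raw importance values are hypothetical.

```python
def importance_to_weights(importances):
    """Normalize raw feature importances so each weight lies in [0, 1] and all weights sum to 1."""
    total = sum(importances.values())
    if total == 0:
        raise ValueError("at least one feature must have a non-zero importance")
    return {name: value / total for name, value in importances.items()}


# Hypothetical raw importance values, e.g., as reported by a tree-based regressor.
raw_importances = {
    "temperature": 6.0,
    "humidity": 5.0,
    "air_pressure": 4.0,
    "dew_point": 3.0,
    "wind_speed": 2.0,
}

weights = importance_to_weights(raw_importances)
print(weights)
```

Normalizing by the sum keeps every feature's weight non-zero, whereas min-max scaling would assign a weight of exactly 0 to the least important feature.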

3.2. Prediction

Table 2 describes the variables used in the prediction problem.

Table 2.

Data and variables used in prediction.

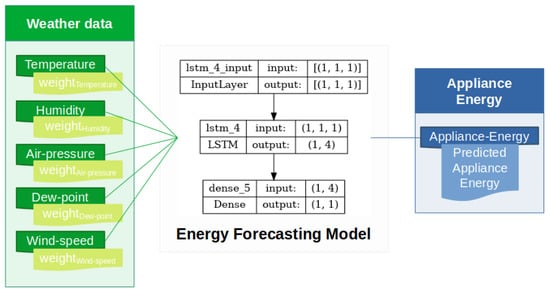

The proposed appliance-energy-prediction model is shown in Figure 3. It shows an LSTM-based model that comprises input, hidden, and output layers, along with its LSTM units. As the figure shows, the temperature, humidity, air pressure, dew point, wind speed, etc., are passed in along with their calculated weights. The weight factor added to each feature represents the importance of that feature, i.e., its impact on the target variable, appliance energy.

Figure 3.

Proposed appliance energy prediction system.

For the prediction, we utilize an LSTM layer followed by a fully connected dense layer. Applying features and their calculated weight factors enhances the performance of prediction over the temporal variation. The configuration of the proposed LSTM model also involves setting the numbers of layers, neurons, and epochs (50), and the learning rate (0.01), for better training. The data features used as an input for prediction of appliance energy are temperature, humidity, air pressure, dew point, and wind speed, along with their weight factors. The formulation of the proposed time-series prediction model based on LSTM is explained in the following section.

Problem Formulation

The prediction problem is formulated so that it can be applied to both the daily and hourly data for evaluation, such that the performance remains stable. The main components of the LSTM prediction module are the input gate, forget gate, and output gate.

The LSTM model input gate is formulated in Equation (2). This gate decides what relevant data need to be added from the LSTM unit.

The LSTM model forget gate is formulated in Equation (3). This gate decides which data need to be retained and which can be forgotten.

The LSTM model output gate is formulated in Equation (4). This gate finalizes the next hidden state.

The LSTM model regulator is formulated in Equation (5). It is used to regulate the vector values between −1 and 1.

The LSTM model memory cell is formulated in Equation (6); it is retained and transferred to the next LSTM unit.

The LSTM model unit’s hidden state is formulated in Equation (7). It decides whether the hidden state will be used in the next LSTM unit or not.

Collectively, Equations (2)–(7) describe a network of LSTM units and their inter-connectivity. The configuration of LSTM also involves setting the number of layers, neurons, epochs, and learning rate for better training. The proposed time-series prediction model, i.e., LSTM, is modeled below.
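For reference, the standard textbook form of the six LSTM relations is sketched below, numbered in the same order as the gates described above. The notation ($x_t$ for the input at time step $t$, $h_t$ for the hidden state, $C_t$ for the memory cell, $W$ and $b$ for trainable weights and biases, $\sigma$ for the logistic sigmoid, $\odot$ for the element-wise product) is an assumption introduced here and may differ from the notation used by the authors. How the feature weight factors enter the model is not specified in the text, so they are not included.

```latex
\begin{align}
i_t &= \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) \tag{2} \\
f_t &= \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right) \tag{3} \\
o_t &= \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right) \tag{4} \\
\tilde{C}_t &= \tanh\left(W_C\,[h_{t-1}, x_t] + b_C\right) \tag{5} \\
C_t &= f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \tag{6} \\
h_t &= o_t \odot \tanh\left(C_t\right) \tag{7}
\end{align}
```

The $\tanh$ in the regulator keeps the candidate values between −1 and 1, while the sigmoid gates take values between 0 and 1 and therefore act as soft switches on what is added, retained, and emitted.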

3.3. Optimization

Table 3 describes the variables used in the optimization problem.

Table 3.

Data and variables used in optimization.

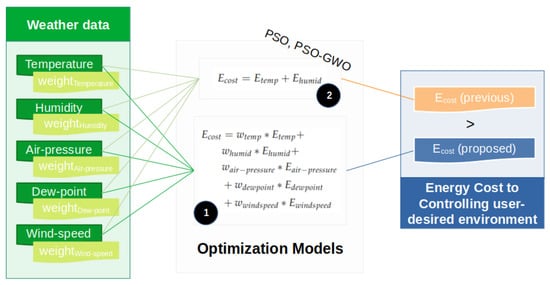

In this section, the optimization model is described, including an explanation of its internals and its formulation. In Figure 4, it can be seen that the proposed optimization makes use of a PSO-GWO hybrid approach and involves additional weather metrics; the full set is temperature, humidity, air pressure, dew point, and wind speed. In contrast, the previous approach only makes use of temperature and humidity. Along with this, the proposed approach also makes use of weight factors calculated through feature importance, as described in Section 3.1.

Figure 4.

Proposed energy-optimization system.

An optimization model is defined for the optimal control of the energy use of smart homes. The dataset [11,39] contains 29 features, from which only the most important features were selected, and the following formulation is applied.

Problem Formulation

The optimization is formulated as per Equation (8). All dataset features are applied to the optimization formula to optimally reduce appliance energy consumption by considering the weights associated with each feature based on their coefficients.

Equation (9) refers to the basic energy-optimization method, which does not consider environmental parameters other than temperature and humidity. In it, the cost associated with controlling the energy is expressed through the energy costs of controlling temperature and humidity.

Equation (10) refers to the proposed energy-optimization method, which considers weather conditions along with the temperature and humidity factors. The proposal introduces a weight factor for each optimization parameter, based on the data analysis performed on the features: one weight each is associated with temperature, humidity, air pressure, dew point, and wind speed. The goal of this formula is to provide a minimal cost for controlling the appliance energy. Based on this cost, we assume that these metrics reduce the energy of an appliance.
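One possible reading of Equations (9) and (10) is sketched below. The additive form and all symbols are assumptions introduced here for illustration: $E_T$, $E_H$, $E_P$, $E_D$, and $E_W$ denote the energy costs attributed to temperature, humidity, air pressure, dew point, and wind speed, and $w_T$, $w_H$, $w_P$, $w_D$, and $w_W$ denote the corresponding weight factors. Only the list of metrics and the use of one weight per metric come from the description above.

```latex
% Assumed form of the basic method (Equation (9)): temperature and humidity only
E_{basic} = E_T + E_H

% Assumed form of the proposed method (Equation (10)): weighted sum over five metrics
\min \; E_{prop} = w_T E_T + w_H E_H + w_P E_P + w_D E_D + w_W E_W
```

Under this reading, setting $w_T = w_H = 1$ and the remaining weights to 0 recovers the basic method as a special case of the proposed one.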

In Algorithm 1, the current state of the environment is fetched. The number of particles in a swarm is the number of iterations, and the optimal solution is found accordingly. This approach considers the minimization function explained in Equation (10). The user-desired settings, which are temperature and humidity ranges, are also considered in the optimization. Based on this information, the updated cost is calculated and its minimum value is found, as the goal of this work is to minimize the cost spent on controlling the appliances. The total cost is compared with that of previous work, and the proposed Algorithm 1 shows better performance in terms of optimizing the energy cost of the provided environment.

Algorithm 1: Energy-optimization algorithm.
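Because the body of Algorithm 1 is not reproduced in this text, the following Python sketch shows one simple way a PSO-GWO hybrid can be arranged; it is not the authors' algorithm. The three best agents act as GWO leaders (alpha, beta, delta), and a PSO-style velocity update pulls each agent toward the point suggested by those leaders. The coefficient values, the cost function `control_cost`, the current readings, and the weights are all hypothetical.

```python
import random


def hybrid_pso_gwo(cost, bounds, n_agents=20, n_iter=100, seed=0):
    """Minimize cost(x) inside box `bounds` with a simple PSO-GWO hybrid (illustrative)."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_agents)]
    vel = [[0.0] * dim for _ in range(n_agents)]
    inertia, accel = 0.5, 1.5  # assumed PSO coefficients
    best_pos, best_val = None, float("inf")

    for it in range(n_iter):
        # GWO step: rank the agents and keep the three best as alpha, beta, delta.
        leaders = [list(p) for p in sorted(pos, key=cost)[:3]]
        if cost(leaders[0]) < best_val:
            best_pos, best_val = leaders[0], cost(leaders[0])
        a = 2.0 * (1.0 - it / n_iter)  # exploration factor decays from 2 towards 0

        for i in range(n_agents):
            for d in range(dim):
                lo, hi = bounds[d]
                guided = 0.0
                for leader in leaders:
                    A = a * (2.0 * rng.random() - 1.0)
                    C = 2.0 * rng.random()
                    guided += leader[d] - A * abs(C * leader[d] - pos[i][d])
                guided /= len(leaders)
                # PSO step: the velocity pulls the agent towards the leader-guided point.
                vel[i][d] = inertia * vel[i][d] + accel * rng.random() * (guided - pos[i][d])
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)  # stay in the desired range

    final = min(pos, key=cost)
    if cost(final) < best_val:
        best_pos, best_val = list(final), cost(final)
    return best_pos, best_val


# Hypothetical current readings and feature weights (not taken from the paper).
current = {"temperature": 30.0, "humidity": 70.0}
weights = {"temperature": 0.6, "humidity": 0.4}


def control_cost(setpoint):
    """Weighted effort to move the room from its current state to the setpoint."""
    t, h = setpoint
    return (weights["temperature"] * abs(current["temperature"] - t)
            + weights["humidity"] * abs(current["humidity"] - h))


# User-desired ranges: 22-26 degrees Celsius and 40-60 % relative humidity.
best_setpoint, best_cost = hybrid_pso_gwo(control_cost, [(22.0, 26.0), (40.0, 60.0)])
print(best_setpoint, best_cost)
```

For this toy cost, the true optimum is the corner of the desired ranges closest to the current state, i.e., 26 degrees Celsius and 60 % humidity, with a cost of 6.4; the search should settle at or very near that point. Terms for air pressure, dew point, and wind speed could be added to the cost in the same weighted manner.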

Overall, the proactiveness and optimization shown in Section 3.2 enable the proactive control, optimization, and scheduling of energy-optimization systems for smart homes.

4. Experiment Results

In this section, the process of setting up the testing environment is explained. The details about dataset and its preprocessing are explained. At the end, the evaluations are made in terms of RMSE for the predictions and actual energy costs when controlling appliances for energy optimization.

4.1. Test Environment

The environment used for experimentation is shown in Table 4. All experiments were performed on an Ubuntu operating system. Python was used for designing the appliance energy forecast model and the simulation of optimization. Other packages and libraries were used, including Conda, Keras, TensorFlow, scikit-learn, Fuzzy-Module, Matplotlib, NumPy, and pandas.

Table 4.

System environment and software packages.

4.2. Dataset

The dataset [11] shown in Table 5 was used in the study [39], and it contains 19,735 records and 29 columns. The data are categorized as indoor and outdoor data. These categories include temperature and humidity, represented by T and H, for rooms inside the home and for a nearby weather station. The dataset also contains some information about the light, visibility, air pressure, wind speed, dew point, etc., from the nearest weather station at Chievres Airport, Belgium. The dataset’s accuracy [40] shows that the data are valid and ready to use for analysis and contain useful insights regarding monthly, weekly, daily, and hourly energy usage patterns.

Table 5.

Appliance energy dataset reprinted/adapted with permission from Ref. [11]. 2023, Kaggle-GoKagglers.

The second dataset [12] shown in Table 6 covers all four seasons, i.e., 12 months of data. It contains temperature and humidity values at 10 min intervals, which are associated with the energy consumption of the house. The optimization was also evaluated using this dataset, and it resulted in better performance in terms of energy saving. The experiments performed on these data were based on seasonal, monthly, and daily energy usage patterns.

Table 6.

Appliance energy dataset (South Korea) [12].

The preceding dataset was used for experimentation for the following reasons:

- The dataset has been used in previous studies in our laboratory. It was used to provide optimal control parameters with the use of PSO based on temperature and humidity, and user-desired environmental conditions in the house.

- The dataset contains four seasons’ data representing different weather conditions. It also contains information other than temperature and humidity, such as air pressure, dew point, and wind speed. This type of data makes it possible to assess the performance more thoroughly.

4.3. Evaluation

This section provides insights about the evaluation of the appliance-energy-prediction model and energy-optimization technique.

4.3.1. Preprocessing

This section describes the process of preprocessing the dataset. This is required to enhance the performances of both prediction and optimization models.

The correlation formula is shown in Equation (11), which results in the correlation analysis shown in Figure 5. In this equation, $x$ represents a feature to be compared with $y$ from the dataset, whereas $\bar{x}$ and $\bar{y}$ represent their respective means.
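To make the correlation analysis concrete, the following sketch computes a correlation coefficient between one feature and the appliance energy and keeps only the features whose absolute score reaches a threshold. It assumes that Equation (11) is the standard Pearson coefficient; the function names, the toy values, and the threshold of 0.5 are hypothetical and are not taken from the dataset.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equally long sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    covariation = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sum((a - mean_x) ** 2 for a in x)
    spread_y = sum((b - mean_y) ** 2 for b in y)
    return covariation / math.sqrt(spread_x * spread_y)


def select_features(features, target, threshold):
    """Keep feature names whose |correlation| with the target reaches the threshold."""
    return [name for name, values in features.items()
            if abs(pearson(values, target)) >= threshold]


# Toy values for illustration only.
energy = [50.0, 60.0, 70.0, 80.0]
features = {
    "feature_a": [1.0, 2.0, 3.0, 4.0],   # rises with the target
    "feature_b": [5.0, 1.0, 4.0, 2.0],   # weakly related to the target
}
kept = select_features(features, energy, threshold=0.5)
```

Using the absolute value keeps strongly negative correlations as well as strongly positive ones; whether the original analysis did the same is not stated.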

Figure 5.

Feature covariance(s).

Based on the analysis of the correlation graph shown in Figure 5, we concluded that some of the features needed to be dropped. Thus, several room temperature and humidity features were dropped, along with the outdoor temperature, the light feature, and both random-variable features, due to their low impact on the appliance energy and the abundance of zero values in them. The columns that were dropped overall were , , , , , , , , and . The column was dropped due to the high number of zeros in it, which could impact a model’s performance. was removed as it was redundant and contained almost the same values as . The other columns having lower scores than were removed in this work.

For experimentation, we utilized two popular scaling techniques, i.e., the minmax scaler and the standard scaler. From the results shown in Table 7, we found that the results obtained with the minmax scaler are better than those obtained with the standard scaler. The minimum and maximum values set for scaling were −1 and 1, respectively.
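The difference between the two scaling techniques can be illustrated with a short, dependency-free sketch. The helper names below are assumptions for illustration; in practice, scikit-learn’s `MinMaxScaler(feature_range=(-1, 1))` and `StandardScaler` provide the equivalent transformations.

```python
import math


def minmax_scale(values, low=-1.0, high=1.0):
    """Linearly map values so that the minimum becomes `low` and the maximum `high`."""
    v_min, v_max = min(values), max(values)
    span = v_max - v_min
    if span == 0:
        # A constant column carries no information; map it to the lower bound.
        return [low for _ in values]
    return [low + (v - v_min) * (high - low) / span for v in values]


def standard_scale(values):
    """Shift to zero mean and scale to unit (population) standard deviation."""
    n = len(values)
    mean = sum(values) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


readings = [10.0, 20.0, 30.0]
scaled_minmax = minmax_scale(readings)      # bounded in [-1, 1]
scaled_standard = standard_scale(readings)  # zero mean, not bounded
```

Minmax scaling guarantees a fixed output range, whereas standard scaling does not bound the values, which is one way the two techniques can lead to different model behavior.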

Table 7.

RMSE comparison.

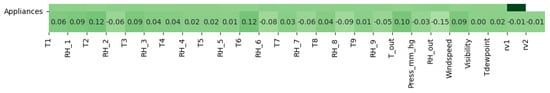

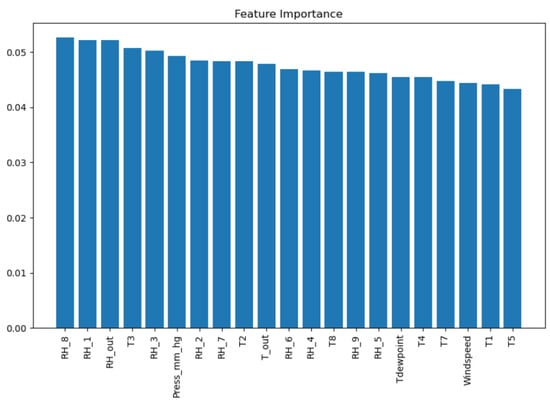

Utilizing the filtered feature set, the importance of each feature was calculated, which provided more insight into the weights to be associated with the features initially in the training process. The feature importance values are shown in Figure 6. These values are also termed coefficients in this manuscript and are treated as the weights associated with each feature used in the proposed system. The terms coefficient and level of importance are used interchangeably in this manuscript to denote the weight w of each feature and its level of impact on the appliance energy.

Figure 6.

Filtered features’ levels of importance.

Overall, based on the preprocessing results, the training of the prediction model and the simulation of the optimization model can be evaluated. The effects of the added weight factors on the models’ performances are evaluated in the following sections.

4.3.2. Prediction

In this section, the appliance energy forecast model is evaluated by its RMSE score over time. The performance of this model was tested using the dataset shown in Table 5.

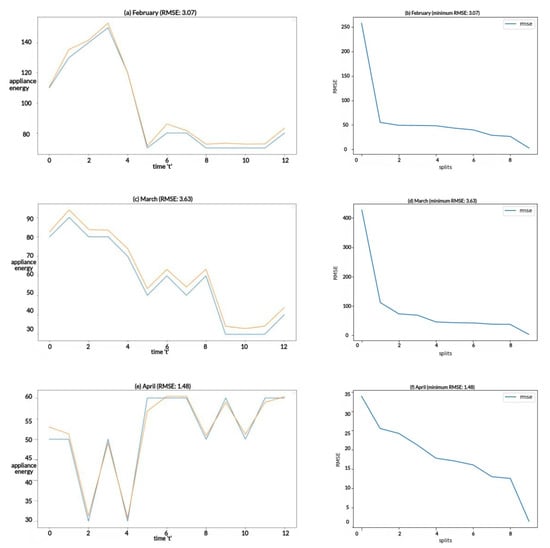

The RMSEs obtained with other approaches [41] are compared to our RMSE results. The RMSE values shown in Table 7 are for three different months, i.e., February, March, and April. It can be seen that the evaluations vary for each day of the month, indicating that each day of the month has different requirements. This highlights the need for a model that can deal with the patterns of data from different months, days, weekdays, and weekends.

The results for the energy forecast model evaluation are visualized in Figure 7, which reflects the performance of the predictions. The orange and blue colors refer to the forecast and actual appliance energy, respectively. Figure 7a–f show the prediction comparisons and RMSE scores for the months of February, March, and April.

Figure 7.

Appliance energy forecasts and RMSE score of the model.

In the evaluations shown in Table 7, it can be seen that the RMSE is low, which agrees with the predictions shown in Figure 7. We found that 10 splits give the best performance if scaling is used with minimum and maximum values of −1 and 1, respectively. Performance was analyzed and evaluated for all months, and the results for the months of February, March, and April are shown in Table 7.
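For reference, the RMSE score and a 10-split evaluation can be sketched as follows. The text does not state which splitting scheme was used; the expanding-window splitter below, in which training data always precede test data, is an assumption chosen because it suits time-series data, and both function names are hypothetical.

```python
import math


def rmse(actual, forecast):
    """Root mean square error between actual and forecast values."""
    if len(actual) != len(forecast):
        raise ValueError("actual and forecast must have the same length")
    squared = sum((a - f) ** 2 for a, f in zip(actual, forecast))
    return math.sqrt(squared / len(actual))


def expanding_window_splits(n_samples, n_splits=10):
    """Yield (train, test) index ranges; each fold trains on all earlier samples.

    Samples left over after the last full fold are not used.
    """
    fold = n_samples // (n_splits + 1)
    for k in range(1, n_splits + 1):
        yield range(0, k * fold), range(k * fold, (k + 1) * fold)


splits = list(expanding_window_splits(110, n_splits=10))
example_error = rmse([0.0, 0.0], [3.0, 4.0])  # sqrt((9 + 16) / 2)
```

A per-fold RMSE is then obtained by fitting the forecast model on each training range and scoring it with `rmse` on the matching test range.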

4.3.3. Optimization

In this section, the energy optimization model is evaluated by its energy cost when controlling the appliances in consideration of user-desired room settings. Simulations comparing the proposed hybrid optimization approach, i.e., PSO-GWO, with PSO-based optimization reliant on temperature and humidity metrics [30] showed that the proposed approach saves more energy cost when controlling the appliances in a smart home.

For evaluation, we used the two optimization techniques specified in Table 8. The PSO-based method, our previous work, makes use of temperature and humidity metrics as per Equation (9). The proposed optimization model, the PSO-GWO approach, makes use of additional metrics, i.e., it considers temperature, humidity, air pressure, dew point, and wind speed, as formulated in Equation (10). In addition, weight factors based on the analysis are applied in the proposed optimization model.
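The structural difference between the two configurations, two unweighted metrics versus five weighted metrics, can be illustrated with a generic weighted-sum cost. This sketch does not reproduce Equation (9) or Equation (10); the functional form, the deviation values, and the weights are all hypothetical and serve only to show how per-metric weights enter such a cost.

```python
def weighted_cost(deviations, weights=None):
    """Sum of absolute per-metric deviations, each scaled by its weight.

    Metrics without an explicit weight default to a weight of 1.0.
    """
    weights = weights or {}
    return sum(weights.get(name, 1.0) * abs(value)
               for name, value in deviations.items())


# Hypothetical deviations between the current and the targeted conditions.
two_metric = {"temperature": 5.0, "humidity": 4.0}
five_metric = {"temperature": 5.0, "humidity": 4.0,
               "air_pressure": 2.0, "dew_point": 1.0, "wind_speed": 3.0}

# Hypothetical weight factors (not the values of Table 15).
example_weights = {"temperature": 0.4, "humidity": 0.3,
                   "air_pressure": 0.1, "dew_point": 0.1, "wind_speed": 0.1}

cost_two_metric = weighted_cost(two_metric)
cost_five_metric = weighted_cost(five_metric, example_weights)
```

Because the metric names and weights are plain dictionaries, a configuration such as those in Table 8 amounts to choosing which keys and weights are passed in.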

Table 8.

Different model configurations used for comparison.

The energy savings of the previous approach [31], which uses PSO as the model and two factors, temperature and humidity, are compared with the results of the proposed optimization technique, which also uses other weather factors in its cost function. The model configurations are shown in Table 8.

The criteria for the user-desired temperature and humidity were set to 25 and between 53 and 56, respectively, as shown in Table 9. If the temperature is cool or hot, the defined criteria keep the user-desired temperature by taking appropriate actions. The same holds for the humidity level.
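The criteria can be read as simple threshold rules. The sketch below uses the set-points stated above (25 for temperature, 53 to 56 for humidity); the action labels are hypothetical placeholders, since the actual actions are those listed in Table 9.

```python
def temperature_action(temperature, target=25.0):
    """Return a control action that moves the room toward the target temperature."""
    if temperature < target:
        return "heat"   # room is cooler than desired
    if temperature > target:
        return "cool"   # room is hotter than desired
    return "hold"


def humidity_action(humidity, low=53.0, high=56.0):
    """Return a control action that keeps humidity inside the desired band."""
    if humidity < low:
        return "humidify"
    if humidity > high:
        return "dehumidify"
    return "hold"


actions = (temperature_action(28.0), humidity_action(50.0))
```

In an optimized controller, rules of this kind only decide the direction of the action, while the optimization determines how much control effort to spend.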

Table 9.

Criteria for controlling the temperature and humidity.

Temperature and humidity are the user-desired metrics used in this work. The other metrics, i.e., air pressure, dew point, and wind speed, show their effects in Table 10 through their inclusion in the cost function of the optimization procedure formulated in Equation (10). The costs associated with controlling the appliances according to the user-desired settings are compared between the proposed PSO-GWO-based and the PSO-based optimizations [31]. They differ in their cost functions, given in Equations (10) and (9), respectively. The fluctuation of energy consumption across seasons might affect the performance of the optimization model. Therefore, a season-wise performance comparison was tabulated to verify the credibility of the proposed optimization technique, which uses weather factors other than temperature and humidity together with their weights.

Table 10.

Season-wise energy-cost comparison.

It is also known that energy consumption fluctuates among the months, which might affect the performance of the optimization model. Even in different months, the optimization performs well, which supports the credibility of the weight of each factor. Owing to this performance, smart home management systems can keep the optimal control configured for as long as desired.

The total number of saved kW units accumulated for all seasons was 17,094.95, in comparison to the PSO-based approach without the outdoor weather metrics. Table 10 shows that the proposed optimization technique works well across the different seasons.

In Table 11, a month-wise comparison is provided for the evaluation of the proposed optimization technique, i.e., the PSO-GWO approach. Comparing the energy cost of controlling the appliances between the proposed optimization technique and the previous approach [31] shows that the proposed technique provides better results. It is also known that energy consumption fluctuates by month, which might affect the performance of the optimization model. Even in different months, the optimization performs well, which supports the credibility of the weight of each factor. Owing to this performance, smart home management systems can keep the optimal control configured for as long as desired.

Table 11.

Month-wise energy-cost comparison for dataset [39].

The proposed optimization model was also applied to the second dataset [12]. The relevant results are shown in Table 12, which reflects the optimization in the form of minimizing the cost associated with controlling the appliances according to the user-desired environmental settings of the room.

Table 12.

Month-wise energy-cost comparison for dataset [12] (Table 6).

The total number of saved kW units accumulated for the period of 12 months was 17,091.20, in comparison to the PSO-based approach without the outdoor weather metrics. Table 11 shows that the proposed optimization technique performs better for all months as well.

In Table 13, a comparison of the energy cost by increasing the number of months is provided. It can be seen that the energy cost of the proposed optimization technique, i.e., PSO-GWO, is very low as compared to that of the previous approach [31]. It can also be seen that an increase in the number of months does not affect the performance, which allows the stakeholder to configure the optimal control for as long as desired.
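A comparison over an increasing number of months amounts to accumulating the monthly costs of each method and taking their difference. The following sketch shows this bookkeeping; the monthly values are made-up placeholders, not figures from Table 13, and the function names are hypothetical.

```python
from itertools import accumulate


def cumulative_costs(monthly_costs):
    """Running total of the energy cost after 1, 2, ..., n months."""
    return list(accumulate(monthly_costs))


def cumulative_savings(baseline_costs, proposed_costs):
    """Accumulated saving of the proposed method over the baseline, month by month."""
    return [b - p for b, p in zip(cumulative_costs(baseline_costs),
                                  cumulative_costs(proposed_costs))]


# Placeholder monthly costs for illustration only.
baseline = [100.0, 120.0, 90.0]
proposed = [80.0, 95.0, 70.0]
savings = cumulative_savings(baseline, proposed)
```

If the proposed method is cheaper in every month, the accumulated saving grows monotonically with the number of months considered.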

Table 13.

Energy-cost comparison when increasing the number of months.

Similar results for dataset [12] are shown in Table 14. The energy-cost optimization is better when the proposed methodology is used, as it results in greater savings in the cost of controlling the appliances.

Table 14.

Energy-cost comparison when increasing the number of months for dataset [12].

The experiments have shown that the data requirements change by season, month, day, weekday or weekend status, and time of day. This highlights the need for a model that can first identify these factors and then apply time-series forecasting. This can be validated by the use of different datasets representing variations across different regions. In this work, we utilized two different datasets, i.e., Chievres Airport, Belgium [11], and Cheonan, South Korea [12]. This enhanced the performance of prediction and optimization in terms of the RMSE of the predictions and the energy-cost saving when controlling the appliances. In addition, the GWO- and PSO-based optimizations were formulated together, which can be utilized and applied to the appliance energy forecasts. The experimental results indicate that each weather factor has an important role to play in the prediction and optimization of energy because of the geographical, seasonal, and day-type (weekend, weekday) variations, among others. Based on the results, it is concluded that the inclusion of air pressure, dew point, and wind speed enhances the performance and reduces the energy costs involved in controlling the smart home appliances.

5. Discussion

In this section, the findings of the current work are discussed. Current energy systems have the limitation of performing with different levels of efficacy depending on the season or month. This is because they do not account for the level of impact that features related to the time of year have on the energy consumption. The analysis and supporting results from Section 4 showed that our energy-optimization scheme works well in all of the seasons and months.

The energy-saving results shown in Tables 10, 11, and 13 also support the idea of considering weight coefficients in existing optimization techniques to enhance their performance in particular seasons and months. The values that we used in this work for the dataset are shown in Table 15.

Table 15.

Coefficients and weight values.

The primary findings of this work are that the inclusion of properly analyzed weight coefficients could enhance optimization techniques and result in energy savings. They also play an important role in the prediction of appliance energy use.

The secondary focus of this work was to consider the level of impact of a specific feature on the energy consumption. For this purpose, the weight coefficients are included in both the energy prediction and optimization models.

From a physical point of view, the energy savings can be explained as follows: if the appliances’ optimal control parameters are not frequently updated, then their energy efficiency is significantly reduced. The energy wasted where the appliances were unnecessarily turned on could be saved for that specific dataset/area.

It can also be deduced from the seasonal and month-wise comparison results that the weight factors play an important role in the optimization problem. In this work, we primarily focused on finding the best weight factors for the region used in this study. We expect that if the same technique used to estimate the weight factors is applied carefully to another dataset representing different regions with their own weather conditions, the optimization model will perform comparably. This is due to the use of weight factors, which reflect different regional weather conditions.

6. Conclusions

From the previous results, we can conclude that the proposed system can enable proactiveness and efficiency in current energy-optimization systems. This enhancement is provided by the inclusion of weight coefficients that refer to the levels of impact of weather metrics on the appliance energy. This not only enhances the performance of the optimization technique but also that of the prediction technique. With the use of properly estimated weight factors, this optimization approach could be applied to multi-regional datasets. The second interesting point of this work is that it considered the level of impact of each feature, which again indicates that this optimization has a broader scope in terms of its application by using a variety of features.

Further enhancements to the optimization module by optimizing, scheduling, and controlling appliance energy based on season, month, day, week, weekend, and time using GWO-PSO-based optimization showed that the proposed system can provide optimal control parameters through the use of weather metrics other than just temperature and humidity. This addition broadens the scope of both the prediction and optimization models and enhances them to perform better in various seasons, months, and day types.

Author Contributions

Conceptualization, A.M.; methodology, A.M.; validation, K.-T.L.; formal analysis, A.M.; investigation, D.-H.K.; resources, K.-T.L.; data curation, A.M.; writing—original draft, A.M.; writing–review & editing, A.M. and K.-T.L.; visualization, D.-H.K.; supervision, K.-T.L. and D.-H.K.; funding acquisition, K.-T.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by National Research Foundation of Korea (NRF)—Ministry of Education grant number 2020R1I1A3070744 and the APC was funded by 2020R1I1A3070744.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Dataset 1: https://www.kaggle.com/datasets/loveall/appliances-energy-prediction (accessed on 26 March 2023). Dataset 2: https://doi.org/10.21227/9m0k-cm61 (accessed on 26 March 2023).

Acknowledgments

This work was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF), funded by the Ministry of Education under Grant 2020R1I1A3070744.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial Intelligence |
| CPS | Complex Problem-Solving |
| GA | Genetic Algorithm |
| GBC | Gradient Boosting Classifier |
| GSCV | Grid Search Cross Validation |
| GWO | Grey Wolf Optimizer |
| IBM | International Business Machines |
| IoT | Internet of Things |
| k-NN | K-Neighbors |
| kW | Kilowatt |
| LSTM | Long Short-Term Memory |
| MLP | Multi-layer Perceptron |
| PSO | Particle Swarm Optimizer |
| RF | Random Forest |
| RNN | Recurrent Neural Network |
| RMSE | Root Mean Square Error |
| SVR | Support Vector Regression |
| XGB | Extreme Gradient Boosting |
| XTR | Extra Tree Regressor |

References

1. Candanedo, L.M.; Feldheim, V.; Deramaix, D. Data driven prediction models of energy use of appliances in a low-energy house. Energy Build. 2017, 140, 81–97.

2. Mehmood, F.; Ahmad, S.; Ullah, I.; Jamil, F.; Kim, D. Towards a dynamic virtual iot network based on user requirements. Comput. Mater. Contin. 2021, 69, 2231–2244.

3. Shewale, A.; Mokhade, A.; Funde, N.; Bokde, N.D. An overview of demand response in smart grid and optimization techniques for efficient residential appliance scheduling problem. Energies 2020, 13, 4266.

4. Mocrii, D.; Chen, Y.; Musilek, P. IoT-based smart homes: A review of system architecture, software, communications, privacy and security. Internet Things 2018, 1, 81–98.

5. Graesser, A.; Kuo, B.C.; Liao, C.H. Complex problem solving in assessments of collaborative problem solving. J. Intell. 2017, 5, 10.

6. Funke, J. Complex problem solving. In Encyclopedia of the Sciences of Learning (682–685); Springer: Berlin/Heidelberg, Germany, 2012.

7. Sepasgozar, S.; Karimi, R.; Farahzadi, L.; Moezzi, F.; Shirowzhan, S.; Ebrahimzadeh, S.M.; Hui, F.; Aye, L. A systematic content review of artificial intelligence and the internet of things applications in smart home. Appl. Sci. 2020, 10, 3074.

8. Stadler, M.; Becker, N.; Gödker, M.; Leutner, D.; Greiff, S. Complex problem solving and intelligence: A meta-analysis. Intelligence 2015, 53, 92–101.

9. Pandya, S.; Ghayvat, H.; Kotecha, K.; Awais, M.; Akbarzadeh, S.; Gope, P.; Mukhopadhyay, S.C.; Chen, W. Smart home anti-theft system: A novel approach for near real-time monitoring and smart home security for wellness protocol. Appl. Syst. Innov. 2018, 1, 42.

10. Ahmad, S.; Mehmood, F.; Khan, F.; Whangbo, T.K. Architecting Intelligent Smart Serious Games for Healthcare Applications: A Technical Perspective. Sensors 2022, 22, 810.

11. Kaggle, G. Appliance Energy Prediction. Available online: https://www.kaggle.com/datasets/loveall/appliances-energy-prediction (accessed on 26 March 2023).

12. Mehmood, A.; Mehmood, F. Appliance Energy in Cheonan, South Korea. 2023. IEEE DataPort. Available online: https://ieee-dataport.org/documents/appliance-energy-cheonan-south-korea (accessed on 26 March 2023).

13. Energy.Gov. Energy Data Facts. Available online: https://rpsc.energy.gov/energy-data-facts/ (accessed on 13 January 2023).

14. Energy Consumption By Sector. Available online: https://www.eia.gov/totalenergy/data/monthly/pdf/sec2.pdf (accessed on 13 January 2023).

15. Naz, M.; Iqbal, Z.; Javaid, N.; Khan, Z.A.; Abdul, W.; Almogren, A.; Alamri, A. Efficient power scheduling in smart homes using hybrid grey wolf differential evolution optimization technique with real time and critical peak pricing schemes. Energies 2018, 11, 384.

16. Mehmood, A.; Mehmood, F.; Song, W.C. Cloud based E-Prescription management system for healthcare services using IoT devices. In Proceedings of the 2019 International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 16–18 October 2019; pp. 1380–1386.

17. Abualigah, L.; Gandomi, A.H.; Elaziz, M.A.; Hussien, A.G.; Khasawneh, A.M.; Alshinwan, M.; Houssein, E.H. Nature-inspired optimization algorithms for text document clustering—A comprehensive analysis. Algorithms 2020, 13, 345.

18. Abushawish, A.; Jarndal, A. Hybrid particle swarm optimization-grey wolf optimization based small-signal modeling applied to GaN devices. Int. J. RF Microw. Comput. Aided Eng. 2022, 32, e23081.

19. Khan, P.W.; Kim, Y.; Byun, Y.C.; Lee, S.J. Influencing Factors Evaluation of Machine Learning-Based Energy Consumption Prediction. Energies 2021, 14, 7167.

20. Arghira, N.; Hawarah, L.; Ploix, S.; Jacomino, M. Prediction of appliances energy use in smart homes. Energy 2012, 48, 128–134.

21. Choi, J.Y.; Lee, B. Combining LSTM network ensemble via adaptive weighting for improved time series forecasting. Math. Probl. Eng. 2018, 2018.

22. Zhu, W.; Lan, C.; Xing, J.; Zeng, W.; Li, Y.; Shen, L.; Xie, X. Co-occurrence feature learning for skeleton based action recognition using regularized deep LSTM networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30.

23. Zhao, Z.; Chen, W.; Wu, X.; Chen, P.C.; Liu, J. LSTM network: A deep learning approach for short-term traffic forecast. IET Intell. Transp. Syst. 2017, 11, 68–75.

24. Iqbal, M.M.; Sajjad, M.I.A.; Amin, S.; Haroon, S.S.; Liaqat, R.; Khan, M.F.N.; Waseem, M.; Shah, M.A. Optimal scheduling of residential home appliances by considering energy storage and stochastically modelled photovoltaics in a grid exchange environment using hybrid grey wolf genetic algorithm optimizer. Appl. Sci. 2019, 9, 5226.

25. Mehmood, F.; Ullah, I.; Ahmad, S.; Kim, D.H. A novel approach towards the design and implementation of virtual network based on controller in future iot applications. Electronics 2020, 9, 604.

26. Shafqat, W.; Lee, K.T.; Kim, D.H. A Comprehensive Predictive-Learning Framework for Optimal Scheduling and Control of Smart Home Appliances Based on User and Appliance Classification. Sensors 2023, 23, 127.

27. Saba, D.; Cheikhrouhou, O.; Alhakami, W.; Sahli, Y.; Hadidi, A.; Hamam, H. Intelligent Reasoning Rules for Home Energy Management (IRRHEM): Algeria Case Study. Appl. Sci. 2022, 12, 1861.

28. Mumtaz, N.; Nazar, M. Artificial intelligence robotics in agriculture: See & spray. J. Intell. Pervasive Soft Comput. 2022, 1, 21–24.

29. Bezawada, B.; Bachani, M.; Peterson, J.; Shirazi, H.; Ray, I.; Ray, I. Behavioral fingerprinting of iot devices. In Proceedings of the 2018 Workshop on Attacks and Solutions in Hardware Security, Toronto, ON, Canada, 15–19 October 2018; pp. 41–50.

30. Malik, S.; Shafqat, W.; Lee, K.T.; Kim, D.H. A Feature Selection-Based Predictive-Learning Framework for Optimal Actuator Control in Smart Homes. Actuators 2021, 10, 84.

31. Malik, S.; Lee, K.; Kim, D. Optimal control based on scheduling for comfortable smart home environment. IEEE Access 2020, 8, 218245–218256.

32. Shafqat, W.; Malik, S.; Lee, K.T.; Kim, D.H. PSO based optimized ensemble learning and feature selection approach for efficient energy forecast. Electronics 2021, 10, 2188.

33. Shaheen, M.A.; Hasanien, H.M.; Alkuhayli, A. A novel hybrid GWO-PSO optimization technique for optimal reactive power dispatch problem solution. Ain Shams Eng. J. 2021, 12, 621–630.

34. Mehmood, A.; Muhammad, A.; Khan, T.A.; Rivera, J.J.D.; Iqbal, J.; Islam, I.U.; Song, W.C. Energy-efficient auto-scaling of virtualized network function instances based on resource execution pattern. Comput. Electr. Eng. 2020, 88, 106814.

35. Mehmood, A.; Khan, T.A.; Rivera, J.D.; Wang-Cheol, S. An intent-based mechanism to create a network slice using contracts. J. Korean Commun. Assoc. 2018, 180–181.

36. Makhadmeh, S.N.; Khader, A.T.; Al-Betar, M.A.; Naim, S.; Abasi, A.K.; Alyasseri, Z.A.A. Optimization methods for power scheduling problems in smart home: Survey. Renew. Sustain. Energy Rev. 2019, 115, 109362.

37. Khan, P.W.; Byun, Y.C. Adaptive error curve learning ensemble model for improving energy consumption forecasting. CMC-Comput. Mater. Contin 2021, 69, 1893–1913.

38. Ahsan, M.M.; Mahmud, M.P.; Saha, P.K.; Gupta, K.D.; Siddique, Z. Effect of data scaling methods on machine learning algorithms and model performance. Technologies 2021, 9, 52.

39. Hora, S.K.; Poongodan, R.; de Prado, R.P.; Wozniak, M.; Divakarachari, P.B. Long short-term memory network-based metaheuristic for effective electric energy consumption prediction. Appl. Sci. 2021, 11, 11263.

40. Candanedo, L.M.; Feldheim, V. Accurate occupancy detection of an office room from light, temperature, humidity and CO2 measurements using statistical learning models. Energy Build. 2016, 112, 28–39.

41. Pranolo, A.; Mao, Y.; Wibawa, A.P.; Utama, A.B.P.; Dwiyanto, F.A. Robust LSTM with Tuned-PSO and Bifold-Attention Mechanism for Analyzing Multivariate Time-Series. IEEE Access 2022, 10, 78423–78434.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).