CLIP-Based Adaptive Graph Attention Network for Large-Scale Unsupervised Multi-Modal Hashing Retrieval

Abstract

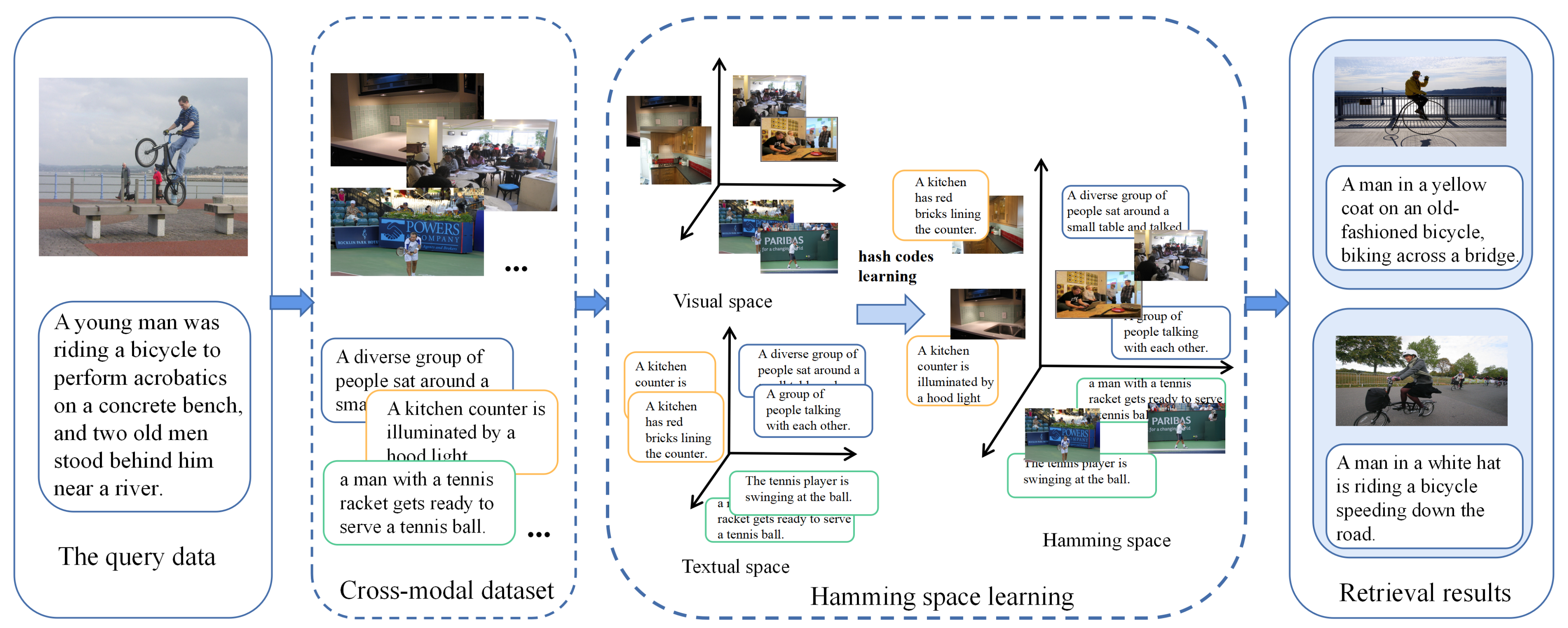

1. Introduction

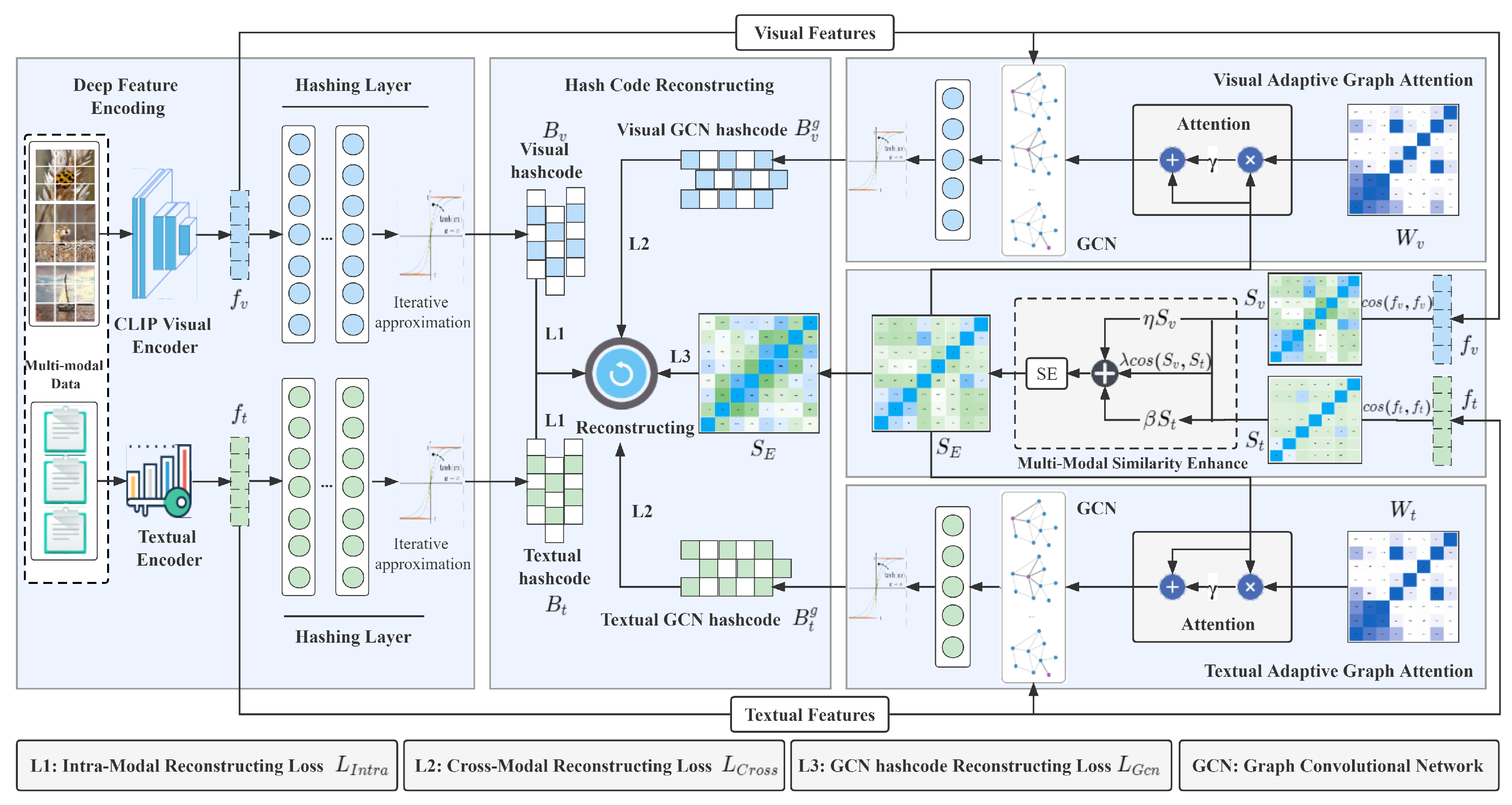

- We propose a novel unsupervised cross-modal hashing method that uses the multi-modal model CLIP (Contrastive Language-Image Pre-Training) [21,22] to extract cross-modal features. We also design a cross-modal similarity enhancement module that integrates similarity information from different modalities, which provides a better supervisory signal for hash code learning.

- To alleviate unbalanced multi-modal learning, we design an adaptive graph attention module that serves as an auxiliary network for learning the hash functions. The module uses an attention mechanism to enhance similar information and suppress irrelevant information, and it mines graph-neighborhood correlations through graph convolutional networks.

- In addition, we adopt an iterative approximation optimization strategy to reduce the information loss incurred when hash codes are binarized. Extensive experiments on three benchmark datasets show that the proposed method outperforms other state-of-the-art deep unsupervised cross-modal hashing methods.
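The similarity-enhancement and graph-attention ideas in the contributions above can be illustrated with a small sketch. It assumes pre-extracted CLIP image and text features for one mini-batch, fuses the two cosine-similarity matrices with a hypothetical weight `alpha`, and then performs one parameter-free, attention-weighted aggregation over each sample's top-`k` neighbors. The fusion rule, `alpha`, `k`, and the softmax weighting are illustrative assumptions, not the exact formulation used in CAGAN, whose module is learned with graph convolutional networks.

```python
import math


def cosine_similarity_matrix(features):
    """Pairwise cosine similarities for a list of feature vectors."""
    norms = [math.sqrt(sum(x * x for x in f)) or 1.0 for f in features]  # guard zero vectors
    unit = [[x / n for x in f] for f, n in zip(features, norms)]
    return [[sum(a * b for a, b in zip(u, v)) for v in unit] for u in unit]


def fused_similarity(img_feats, txt_feats, alpha=0.5):
    """Hypothetical fusion: weighted sum of image and text similarity matrices."""
    s_img = cosine_similarity_matrix(img_feats)
    s_txt = cosine_similarity_matrix(txt_feats)
    return [[alpha * a + (1 - alpha) * b for a, b in zip(ri, rt)]
            for ri, rt in zip(s_img, s_txt)]


def graph_attention_aggregate(features, sim, k=2):
    """Aggregate each sample's top-k most similar samples (itself included)
    using softmax attention weights computed from the similarity row."""
    out = []
    for i, row in enumerate(sim):
        neighbors = sorted(range(len(row)), key=lambda j: row[j], reverse=True)[:k]
        weights = [math.exp(row[j]) for j in neighbors]
        total = sum(weights)
        weights = [w / total for w in weights]
        dim = len(features[i])
        out.append([sum(w * features[j][d] for w, j in zip(weights, neighbors))
                    for d in range(dim)])
    return out


# Toy mini-batch: samples 0 and 1 are semantically close, sample 2 is not.
img = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
txt = [[1.0, 0.0], [1.0, 0.2], [0.1, 1.0]]
S = fused_similarity(img, txt)
aggregated = graph_attention_aggregate(img, S, k=2)
```

Under this hypothetical fusion, `alpha=1.0` keeps only the image similarity and `alpha=0.0` only the text similarity, which loosely mirrors the CAGAN-1 and CAGAN-2 ablation variants.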

2. Related Work

2.1. Deep Unsupervised Multi-Modal Hashing

2.2. Attention-Based Methods

2.3. Graph-Based Methods

3. Methodology

3.1. Notation and Problem Definition

3.2. Framework Overview

3.3. Objective Function and Optimization

Algorithm 1. CLIP-based Adaptive Graph Attention Hashing

Input: the training set; the number of iterations n; the mini-batch size m; the hash code length c; the hyper-parameters.

Output: the parameters of the whole network, namely the parameters of the visual and textual networks and the parameters of the graph convolutional networks.
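Algorithm 1 iterates n times over mini-batches of size m to learn c-bit codes. The details of the iterative approximation optimization are not preserved here, so the sketch below shows one common way to realize the idea, purely as an assumption: the non-differentiable sign(·) is replaced by tanh(βx), and β grows over iterations so that the relaxed codes approach binary values. The schedule parameters `beta0` and `growth` are hypothetical.

```python
import math


def approx_sign(values, beta):
    """Smooth, differentiable surrogate for sign(x): tanh(beta * x)."""
    return [math.tanh(beta * v) for v in values]


def binarize(values):
    """Exact binary codes in {-1, +1}; sign(0) is mapped to +1 here by convention."""
    return [1.0 if v >= 0 else -1.0 for v in values]


def quantization_gap(values, beta):
    """Mean absolute difference between the relaxed codes and the exact binary codes."""
    relaxed = approx_sign(values, beta)
    exact = binarize(values)
    return sum(abs(r - e) for r, e in zip(relaxed, exact)) / len(values)


def beta_schedule(n_iters, beta0=1.0, growth=2.0):
    """Hypothetical continuation schedule: beta_t = beta0 * growth**t for t = 0..n_iters-1."""
    return [beta0 * growth ** t for t in range(n_iters)]


# Relaxed network outputs for one c = 4 bit code, evaluated as beta grows over n = 5 iterations.
z = [0.3, -0.8, 0.05, -0.02]
gaps = [quantization_gap(z, beta) for beta in beta_schedule(5)]
```

Starting with a small β keeps gradients informative early in training, while the shrinking gap later reduces the mismatch between the relaxed codes used for optimization and the binary codes used for retrieval.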

4. Experiments

4.1. Datasets

4.2. Evaluation Metrics

4.3. Implementation Details

4.4. Comparison Results and Discussions

4.5. Ablation Study

- CAGAN-1: This variant uses only visual similarity; the similarity matrix that serves as the supervisory signal is built solely from the cosine similarity of image features.

- CAGAN-2: This variant uses only textual similarity; the similarity matrix that serves as the supervisory signal is built solely from the cosine similarity of text features.

- CAGAN-3: This variant removes the adaptive graph attention module, which in the full model further aggregates information from similar data to produce consistent hash codes.

- CAGAN-4: To alleviate information loss during binarization, the full model generates hash codes with an iterative approximate optimization strategy. CAGAN-4 does not use this strategy.

- CAGAN-5: This variant removes the attention mechanism, to test whether the attention mechanism helps learn similarities between data from different modalities.

- Table 4 shows that each module contributes significantly to the overall model. CAGAN-2 shows the largest performance drop in most settings, likely because text is condensed, human-refined information, so the similarity matrix constructed from text alone is sparse. In contrast, CAGAN-1, which builds the similarity matrix from image features alone, degrades less; one possible reason is that images contain richer, fine-grained semantic information. Together, the results of CAGAN-1 and CAGAN-2 demonstrate the effectiveness of the proposed multi-modal similarity enhancement module.

- The adaptive graph attention module also affects the performance of CAGAN. Specifically, comparing CAGAN-3 and CAGAN-5 with the full model shows that both the graph convolutional network and the attention mechanism contribute: removing either one lowers MAP in every setting of Table 4, often by about 1.5–2.5 percentage points, although the gap varies with the dataset and code length.
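The size of these contributions can be checked directly against the I→T columns of Table 4. The short script below uses values copied from that table and computes each variant's MAP drop relative to the full model in percentage points; only CAGAN-3 and CAGAN-5 are included, and the T→I rows could be added the same way.

```python
# I→T MAP values copied from Table 4: (32 bits, 128 bits) per dataset.
FULL = {
    "MIRFLICKR-25K": (0.8195, 0.8341),
    "NUS-WIDE": (0.7890, 0.8059),
    "MS COCO": (0.9205, 0.9322),
}
VARIANTS = {
    "CAGAN-3": {"MIRFLICKR-25K": (0.7965, 0.8107), "NUS-WIDE": (0.7776, 0.7998), "MS COCO": (0.8981, 0.9137)},
    "CAGAN-5": {"MIRFLICKR-25K": (0.7969, 0.8225), "NUS-WIDE": (0.7738, 0.7875), "MS COCO": (0.9045, 0.9152)},
}


def map_drops(full, variant):
    """Absolute MAP drop of a variant relative to the full model, in percentage points."""
    return {ds: tuple(round(100 * (f - v), 2) for f, v in zip(full[ds], variant[ds]))
            for ds in full}


drops = {name: map_drops(FULL, values) for name, values in VARIANTS.items()}
```

With these values, CAGAN-3's drops range from about 0.6 points (NUS-WIDE, 128 bits) to about 2.3 points (MIRFLICKR-25K), and CAGAN-5's from about 1.2 to 2.3 points.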

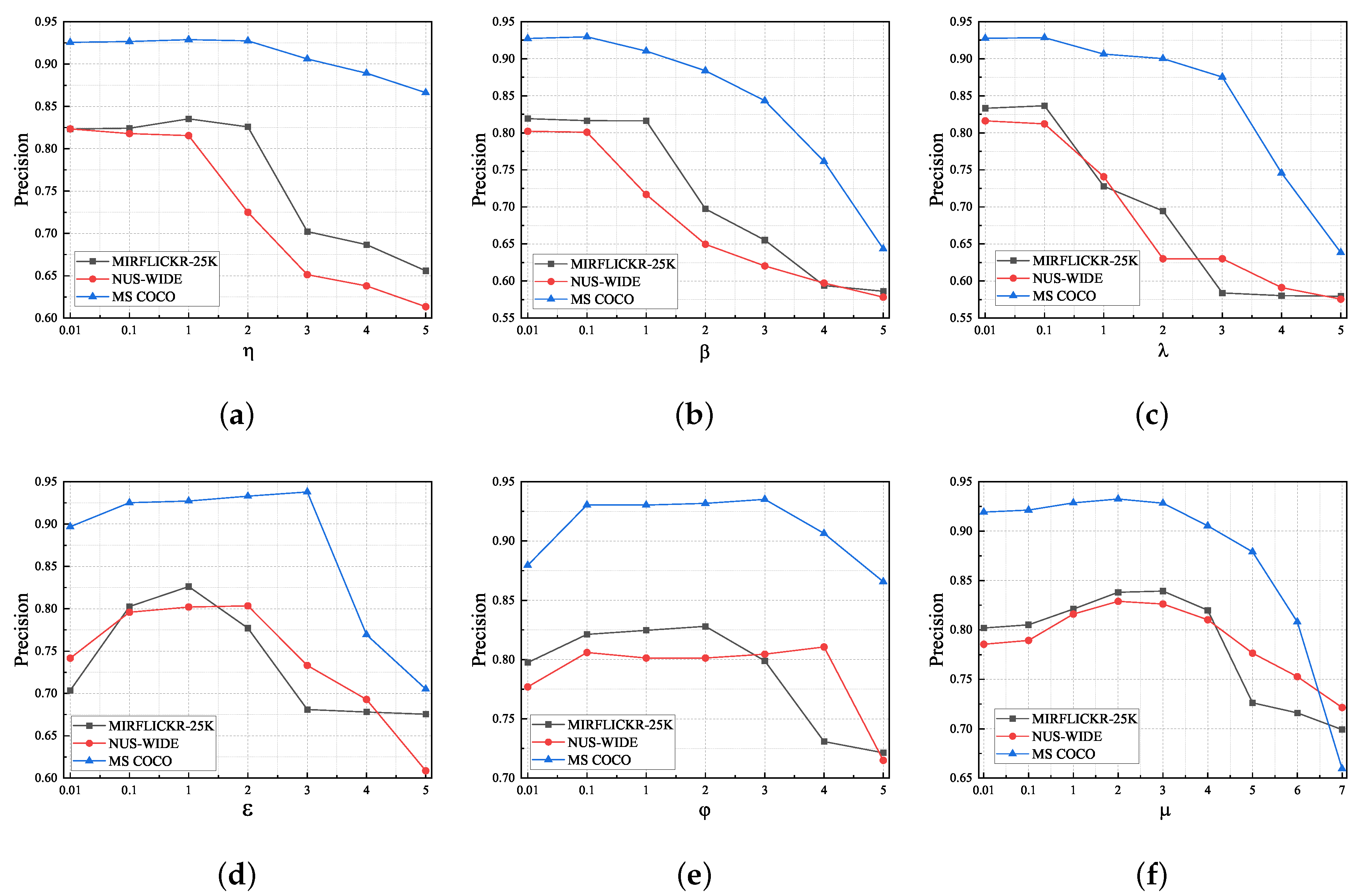

4.6. Parameter Sensitivity Analysis

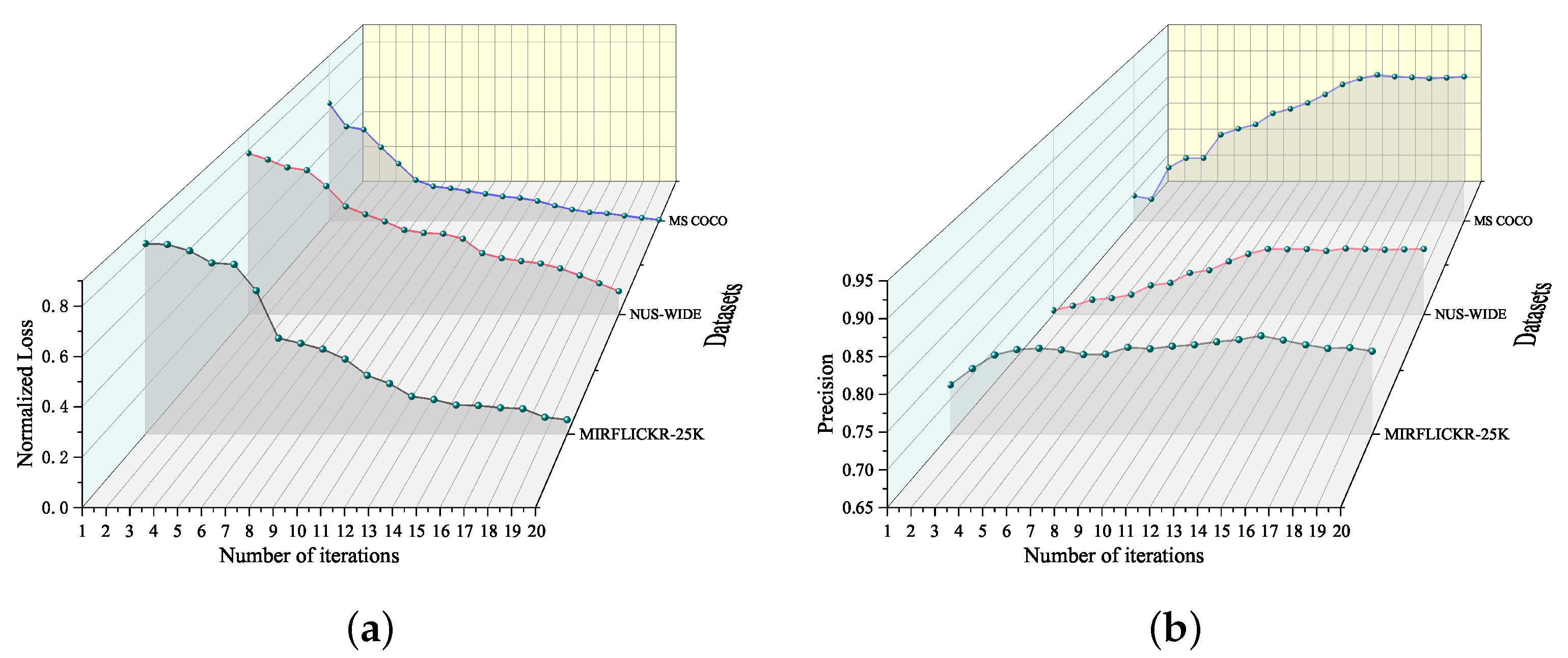

4.7. Training Efficiency and Convergence Testing

4.8. Visualization

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Cui, H.; Zhu, L.; Li, J.; Cheng, Z.; Zhang, Z. Two-pronged Strategy: Lightweight Augmented Graph Network Hashing for Scalable Image Retrieval. In Proceedings of the 29th ACM International Conference on Multimedia, Virtual Event, 20–24 October 2021; pp. 1432–1440.

- Yang, W.; Wang, L.; Cheng, S. Deep parameter-free attention hashing for image retrieval. Sci. Rep. 2022, 12, 7082.

- Gong, Q.; Wang, L.; Lai, H.; Pan, Y.; Yin, J. ViT2Hash: Unsupervised Information-Preserving Hashing. arXiv 2022, arXiv:2201.05541.

- Zhan, Y.W.; Luo, X.; Wang, Y.; Xu, X.S. Supervised hierarchical deep hashing for cross-modal retrieval. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 3386–3394.

- Duan, Y.; Chen, N.; Zhang, P.; Kumar, N.; Chang, L.; Wen, W. MS2GAH: Multi-label semantic supervised graph attention hashing for robust cross-modal retrieval. Pattern Recognit. 2022, 128, 108676.

- Wu, G.; Lin, Z.; Han, J.; Liu, L.; Ding, G.; Zhang, B.; Shen, J. Unsupervised Deep Hashing via Binary Latent Factor Models for Large-scale Cross-modal Retrieval. In Proceedings of the IJCAI, Stockholm, Sweden, 13–19 July 2018; Volume 1, p. 5.

- Jiang, Q.Y.; Li, W.J. Deep cross-modal hashing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3232–3240.

- Qu, L.; Liu, M.; Wu, J.; Gao, Z.; Nie, L. Dynamic modality interaction modeling for image-text retrieval. In Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual, 11–15 July 2021; pp. 1104–1113.

- Zhang, D.; Wu, X.J.; Xu, T.; Kittler, J. WATCH: Two-stage Discrete Cross-media Hashing. IEEE Trans. Knowl. Data Eng. 2022.

- Su, S.; Zhong, Z.; Zhang, C. Deep joint-semantics reconstructing hashing for large-scale unsupervised cross-modal retrieval. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 28 October–2 November 2019; pp. 3027–3035.

- Liu, S.; Qian, S.; Guan, Y.; Zhan, J.; Ying, L. Joint-modal distribution-based similarity hashing for large-scale unsupervised deep cross-modal retrieval. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Xi’an, China, 25–30 July 2020; pp. 1379–1388.

- Cheng, M.; Jing, L.; Ng, M.K. Robust unsupervised cross-modal hashing for multimedia retrieval. ACM Trans. Inf. Syst. (TOIS) 2020, 38, 1–25.

- Zhang, J.; Peng, Y. Multi-pathway generative adversarial hashing for unsupervised cross-modal retrieval. IEEE Trans. Multimed. 2019, 22, 174–187.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

- Yu, J.; Zhou, H.; Zhan, Y.; Tao, D. Deep graph-neighbor coherence preserving network for unsupervised cross-modal hashing. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; Volume 35, pp. 4626–4634.

- Yang, D.; Wu, D.; Zhang, W.; Zhang, H.; Li, B.; Wang, W. Deep semantic-alignment hashing for unsupervised cross-modal retrieval. In Proceedings of the 2020 International Conference on Multimedia Retrieval, Dublin, Ireland, 8–11 June 2020; pp. 44–52.

- Wang, X.; Ke, B.; Li, X.; Liu, F.; Zhang, M.; Liang, X.; Xiao, Q. Modality-Balanced Embedding for Video Retrieval. In Proceedings of the 45th International ACM SIGIR Conference on Research and Development in Information Retrieval, Madrid, Spain, 11–15 July 2022; pp. 2578–2582.

- Wu, N.; Jastrzebski, S.; Cho, K.; Geras, K.J. Characterizing and overcoming the greedy nature of learning in multi-modal deep neural networks. In Proceedings of the International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022; pp. 24043–24055.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Online, 18–24 July 2021; pp. 8748–8763.

- Guzhov, A.; Raue, F.; Hees, J.; Dengel, A. AudioCLIP: Extending CLIP to image, text and audio. In Proceedings of the ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 976–980.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Khan, S.; Naseer, M.; Hayat, M.; Zamir, S.W.; Khan, F.S.; Shah, M. Transformers in vision: A survey. ACM Comput. Surv. (CSUR) 2022, 54, 1–41.

- Zhang, P.F.; Luo, Y.; Huang, Z.; Xu, X.S.; Song, J. High-order nonlocal Hashing for unsupervised cross-modal retrieval. World Wide Web 2021, 24, 563–583.

- Shi, Y.; Zhao, Y.; Liu, X.; Zheng, F.; Ou, W.; You, X.; Peng, Q. Deep Adaptively-Enhanced Hashing with Discriminative Similarity Guidance for Unsupervised Cross-modal Retrieval. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 7255–7268.

- Wang, M.; Xing, J.; Liu, Y. ActionCLIP: A new paradigm for video action recognition. arXiv 2021, arXiv:2109.08472.

- Zhuo, Y.; Li, Y.; Hsiao, J.; Ho, C.; Li, B. CLIP4Hashing: Unsupervised Deep Hashing for Cross-Modal Video-Text Retrieval. In Proceedings of the 2022 International Conference on Multimedia Retrieval, Newark, NJ, USA, 27–30 June 2022; pp. 158–166.

- Wang, X.; Zou, X.; Bakker, E.M.; Wu, S. Self-constraining and attention-based hashing network for bit-scalable cross-modal retrieval. Neurocomputing 2020, 400, 255–271.

- Shen, X.; Zhang, H.; Li, L.; Liu, L. Attention-Guided Semantic Hashing for Unsupervised Cross-Modal Retrieval. In Proceedings of the 2021 IEEE International Conference on Multimedia and Expo (ICME), Shenzhen, China, 5–9 July 2021; pp. 1–6.

- Yao, H.L.; Zhan, Y.W.; Chen, Z.D.; Luo, X.; Xu, X.S. TEACH: Attention-Aware Deep Cross-Modal Hashing. In Proceedings of the 2021 International Conference on Multimedia Retrieval, Taipei, Taiwan, 21–24 August 2021; pp. 376–384.

- Chen, S.; Wu, S.; Wang, L.; Yu, Z. Self-attention and adversary learning deep hashing network for cross-modal retrieval. Comput. Electr. Eng. 2021, 93, 107262.

- Zhang, X.; Lai, H.; Feng, J. Attention-aware deep adversarial hashing for cross-modal retrieval. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 591–606.

- Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. arXiv 2016, arXiv:1609.02907.

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903.

- Zhang, P.F.; Li, Y.; Huang, Z.; Xu, X.S. Aggregation-based graph convolutional hashing for unsupervised cross-modal retrieval. IEEE Trans. Multimed. 2021, 24, 466–479.

- Wang, W.; Shen, Y.; Zhang, H.; Yao, Y.; Liu, L. Set and rebase: Determining the semantic graph connectivity for unsupervised cross-modal hashing. In Proceedings of the 29th International Conference on International Joint Conferences on Artificial Intelligence, Yokohama, Japan, 7–15 January 2021; pp. 853–859.

- Xu, R.; Li, C.; Yan, J.; Deng, C.; Liu, X. Graph Convolutional Network Hashing for Cross-Modal Retrieval. In Proceedings of the IJCAI, Macao, China, 10–16 August 2019; Volume 2019, pp. 982–988.

- Lu, X.; Zhu, L.; Liu, L.; Nie, L.; Zhang, H. Graph Convolutional Multi-modal Hashing for Flexible Multimedia Retrieval. In Proceedings of the 29th ACM International Conference on Multimedia, Virtual Event, China, 20–24 October 2021; pp. 1414–1422.

- Peng, X.; Wei, Y.; Deng, A.; Wang, D.; Hu, D. Balanced Multimodal Learning via On-the-fly Gradient Modulation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 8238–8247.

- He, M.; Wei, Z.; Huang, Z.; Xu, H. BernNet: Learning arbitrary graph spectral filters via Bernstein approximation. Adv. Neural Inf. Process. Syst. 2021, 34, 14239–14251.

- Zhou, J.; Ding, G.; Guo, Y. Latent semantic sparse hashing for cross-modal similarity search. In Proceedings of the 37th International ACM SIGIR Conference on Research & Development in Information Retrieval, Gold Coast, QLD, Australia, 6–11 July 2014; pp. 415–424.

- Song, J.; Yang, Y.; Yang, Y.; Huang, Z.; Shen, H.T. Inter-media hashing for large-scale retrieval from heterogeneous data sources. In Proceedings of the 2013 ACM SIGMOD International Conference on Management of Data, New York, NY, USA, 22–27 June 2013; pp. 785–796.

- Ding, G.; Guo, Y.; Zhou, J.; Gao, Y. Large-scale cross-modality search via collective matrix factorization hashing. IEEE Trans. Image Process. 2016, 25, 5427–5440.

- Wang, D.; Wang, Q.; Gao, X. Robust and flexible discrete hashing for cross-modal similarity search. IEEE Trans. Circuits Syst. Video Technol. 2017, 28, 2703–2715.

- Zhang, J.; Peng, Y.; Yuan, M. Unsupervised generative adversarial cross-modal hashing. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

- Hu, H.; Xie, L.; Hong, R.; Tian, Q. Creating something from nothing: Unsupervised knowledge distillation for cross-modal hashing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3123–3132.

- Mikriukov, G.; Ravanbakhsh, M.; Demir, B. Deep Unsupervised Contrastive Hashing for Large-Scale Cross-Modal Text-Image Retrieval in Remote Sensing. arXiv 2022, arXiv:2201.08125.

- Huiskes, M.J.; Lew, M.S. The MIR Flickr retrieval evaluation. In Proceedings of the 1st ACM International Conference on Multimedia Information Retrieval, Vancouver, BC, Canada, 30–31 October 2008; pp. 39–43.

- Chua, T.S.; Tang, J.; Hong, R.; Li, H.; Luo, Z.; Zheng, Y. NUS-WIDE: A real-world web image database from National University of Singapore. In Proceedings of the ACM International Conference on Image and Video Retrieval, Santorini Island, Greece, 8–10 July 2009; pp. 1–9.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

| Datasets | MIRFLICKR-25K | NUS-WIDE | MS COCO |
|---|---|---|---|
| Database | 25,000 | 186,577 | 123,287 |
| Training | 5000 | 5000 | 10,000 |
| Testing | 2000 | 2000 | 5000 |
| Labels | 24 | 10 | 91 |

| Task | Method | MIRFLICKR-25K 16 bits | MIRFLICKR-25K 32 bits | MIRFLICKR-25K 64 bits | MIRFLICKR-25K 128 bits | NUS-WIDE 16 bits | NUS-WIDE 32 bits | NUS-WIDE 64 bits | NUS-WIDE 128 bits | MS COCO 16 bits | MS COCO 32 bits | MS COCO 64 bits | MS COCO 128 bits |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| I→T | LSSH [42] | 0.6756 | 0.6777 | 0.6821 | 0.6844 | 0.6780 | 0.7060 | 0.7029 | 0.6947 | 0.8127 | 0.8320 | 0.8386 | 0.8481 |
| | IMH [43] | 0.6816 | 0.6594 | 0.6434 | 0.6333 | 0.6072 | 0.6237 | 0.6193 | 0.5910 | 0.7372 | 0.6869 | 0.6807 | 0.6591 |
| | RFDH [45] | 0.6366 | 0.6483 | 0.6587 | 0.6814 | 0.5511 | 0.5724 | 0.6083 | 0.6496 | 0.6902 | 0.7106 | 0.7493 | 0.7826 |
| | CMFH [44] | 0.6868 | 0.6925 | 0.7011 | 0.7187 | 0.6353 | 0.6641 | 0.6997 | 0.7317 | 0.7252 | 0.7576 | 0.7776 | 0.8162 |
| | DJSRH [10] | 0.6729 | 0.7015 | 0.7304 | 0.7443 | 0.5872 | 0.6715 | 0.7177 | 0.7437 | 0.7542 | 0.8156 | 0.8614 | 0.8614 |
| | JDSH [11] | 0.7254 | 0.7312 | 0.7524 | 0.7615 | 0.6781 | 0.7248 | 0.7434 | 0.7565 | 0.6905 | 0.7584 | 0.8884 | 0.8902 |
| | DSAH [18] | 0.6395 | 0.7663 | 0.7793 | 0.7898 | 0.7243 | 0.7530 | 0.7720 | 0.7780 | 0.8507 | 0.8813 | 0.9007 | 0.9005 |
| | HNH [25] | 0.7305 | 0.7449 | 0.7385 | 0.7211 | 0.6843 | 0.7215 | 0.7405 | 0.7374 | 0.8305 | 0.8552 | 0.8686 | 0.8502 |
| | DGCPN [17] | 0.7599 | 0.7815 | 0.7796 | 0.7880 | 0.7158 | 0.7456 | 0.7559 | 0.7538 | 0.8805 | 0.9020 | 0.9021 | 0.9063 |
| | DUCH [48] | 0.6670 | 0.6887 | 0.7064 | 0.7230 | 0.6866 | 0.7144 | 0.7282 | 0.7469 | 0.8472 | 0.8666 | 0.8767 | 0.8837 |
| | DAEH [26] | 0.7826 | 0.7940 | 0.8004 | 0.8047 | 0.7309 | 0.7542 | 0.7728 | 0.7794 | 0.8946 | 0.9029 | 0.9058 | 0.9104 |
| | CAGAN | 0.7902 | 0.8195 | 0.8248 | 0.8341 | 0.7565 | 0.7890 | 0.7950 | 0.8059 | 0.9166 | 0.9205 | 0.9290 | 0.9322 |
| T→I | LSSH [42] | 0.6482 | 0.6535 | 0.6623 | 0.6602 | 0.5669 | 0.5878 | 0.6240 | 0.6286 | 0.7085 | 0.7452 | 0.7795 | 0.8003 |
| | IMH [43] | 0.6812 | 0.6673 | 0.6543 | 0.6399 | 0.6260 | 0.6442 | 0.6384 | 0.6175 | 0.7682 | 0.7172 | 0.7152 | 0.6942 |
| | RFDH [45] | 0.6251 | 0.6460 | 0.6544 | 0.6637 | 0.5512 | 0.5684 | 0.5927 | 0.6304 | 0.7012 | 0.7172 | 0.7413 | 0.7774 |
| | CMFH [44] | 0.6611 | 0.6699 | 0.6799 | 0.6953 | 0.6092 | 0.6418 | 0.6726 | 0.6966 | 0.7577 | 0.7895 | 0.8099 | 0.8382 |
| | DJSRH [10] | 0.6756 | 0.6909 | 0.6985 | 0.7124 | 0.6010 | 0.6567 | 0.7076 | 0.7197 | 0.7593 | 0.8326 | 0.8621 | 0.8697 |
| | JDSH [11] | 0.6989 | 0.7192 | 0.7241 | 0.7354 | 0.6749 | 0.7155 | 0.7115 | 0.7181 | 0.7581 | 0.8296 | 0.8949 | 0.8952 |
| | DSAH [18] | 0.6462 | 0.7540 | 0.7593 | 0.7586 | 0.6688 | 0.7167 | 0.7484 | 0.7457 | 0.8546 | 0.8868 | 0.8904 | 0.8919 |
| | HNH [25] | 0.7234 | 0.7204 | 0.7060 | 0.7002 | 0.6711 | 0.6996 | 0.6962 | 0.6931 | 0.8398 | 0.8635 | 0.8669 | 0.8517 |
| | DGCPN [17] | 0.7273 | 0.7507 | 0.7571 | 0.7575 | 0.7023 | 0.7230 | 0.7426 | 0.7362 | 0.8807 | 0.8978 | 0.8991 | 0.9015 |
| | DUCH [48] | 0.6521 | 0.6684 | 0.6818 | 0.6972 | 0.6619 | 0.6943 | 0.7097 | 0.7130 | 0.8607 | 0.8855 | 0.8980 | 0.9032 |
| | DAEH [26] | 0.7607 | 0.7676 | 0.7743 | 0.7814 | 0.7132 | 0.7335 | 0.7485 | 0.7510 | 0.8882 | 0.8988 | 0.9007 | 0.9033 |
| | CAGAN | 0.7790 | 0.8018 | 0.8160 | 0.8272 | 0.7350 | 0.7472 | 0.7676 | 0.7697 | 0.9048 | 0.9057 | 0.9072 | 0.9172 |

| Configurations | Method | I→T 16 bits | I→T 32 bits | I→T 64 bits | I→T 128 bits | T→I 16 bits | T→I 32 bits | T→I 64 bits | T→I 128 bits |
|---|---|---|---|---|---|---|---|---|---|
| MAP@50 in [6] | UDCMH [6] | 0.689 | 0.698 | 0.714 | 0.717 | 0.692 | 0.704 | 0.718 | 0.733 |
| | AGCH [36] | 0.865 | 0.887 | 0.892 | 0.912 | 0.829 | 0.849 | 0.852 | 0.880 |
| | CAGAN | 0.904 | 0.929 | 0.928 | 0.941 | 0.882 | 0.889 | 0.898 | 0.901 |
| MAP@All in [46] | UGACH [46] | 0.676 | 0.693 | 0.702 | 0.706 | 0.676 | 0.692 | 0.703 | 0.707 |
| | SRCH [37] | 0.680 | 0.691 | 0.699 | * | 0.697 | 0.708 | 0.715 | * |
| | UKD [47] | 0.700 | 0.706 | 0.709 | 0.707 | 0.704 | 0.705 | 0.714 | 0.712 |
| | CAGAN | 0.708 | 0.723 | 0.714 | 0.725 | 0.715 | 0.722 | 0.731 | 0.743 |

| Configurations | Method | I→T 16 bits | I→T 32 bits | I→T 64 bits | I→T 128 bits | T→I 16 bits | T→I 32 bits | T→I 64 bits | T→I 128 bits |
|---|---|---|---|---|---|---|---|---|---|
| MAP@50 in [6] | UDCMH [6] | 0.511 | 0.519 | 0.524 | 0.558 | 0.637 | 0.653 | 0.695 | 0.716 |
| | AGCH [36] | 0.809 | 0.830 | 0.831 | 0.852 | 0.769 | 0.780 | 0.798 | 0.802 |
| | CAGAN | 0.816 | 0.827 | 0.845 | 0.862 | 0.782 | 0.791 | 0.804 | 0.815 |
| MAP@All in [46] | UGACH [46] | 0.613 | 0.623 | 0.628 | 0.631 | 0.603 | 0.614 | 0.640 | 0.641 |
| | SRCH [37] | 0.544 | 0.556 | 0.567 | * | 0.553 | 0.567 | 0.575 | * |
| | UKD [47] | 0.584 | 0.578 | 0.586 | 0.613 | 0.587 | 0.599 | 0.599 | 0.615 |
| | CAGAN | 0.628 | 0.641 | 0.647 | 0.656 | 0.632 | 0.643 | 0.658 | 0.662 |
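The tables above report MAP under two settings, MAP@50 following [6] and MAP@All following [46]. The sketch below shows how such numbers are typically computed for hashing retrieval: database items are ranked by Hamming distance to the query code, and AP is accumulated over the top k items. It is a hedged illustration, not the evaluation code used here; the relevance rule (sharing at least one label with the query), tie-breaking by database order, and normalizing AP by the number of relevant items found within the top k are assumptions, since conventions differ between papers.

```python
def hamming(a, b):
    """Hamming distance between two equal-length codes with entries in {-1, +1}."""
    return sum(1 for x, y in zip(a, b) if x != y)


def average_precision(query_code, query_labels, db_codes, db_labels, k=None):
    """AP over the top-k Hamming-ranked database items (all items if k is None).

    An item counts as relevant if it shares at least one label with the query (assumed convention).
    """
    # sorted() is stable, so ties in Hamming distance keep database order.
    order = sorted(range(len(db_codes)), key=lambda j: hamming(query_code, db_codes[j]))
    if k is not None:
        order = order[:k]
    hits, precision_sum = 0, 0.0
    for rank, j in enumerate(order, start=1):
        if query_labels & db_labels[j]:
            hits += 1
            precision_sum += hits / rank  # precision at this relevant position
    return precision_sum / hits if hits else 0.0


def mean_average_precision(queries, database, k=None):
    """MAP@k; queries and database are lists of (code, label_set) pairs,
    e.g. image-query codes against text-database codes for I→T."""
    db_codes = [code for code, _ in database]
    db_labels = [labels for _, labels in database]
    aps = [average_precision(qc, ql, db_codes, db_labels, k) for qc, ql in queries]
    return sum(aps) / len(aps)


# Toy 4-bit codes with label sets.
database = [((1, 1, -1, -1), {0}), ((1, -1, -1, -1), {1}), ((-1, -1, 1, 1), {1})]
queries = [((1, 1, -1, -1), {0}), ((1, -1, -1, -1), {1})]
```

Here MAP over the whole database corresponds to the MAP@All setting, and passing `k=50` would give the MAP@50 setting; at scale, implementations usually pack codes into integers and compute Hamming distance with XOR and popcount instead of element-wise comparison.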

| Task | Method | Configuration | MIRFLICKR-25K 32 bits | MIRFLICKR-25K 128 bits | NUS-WIDE 32 bits | NUS-WIDE 128 bits | MS COCO 32 bits | MS COCO 128 bits |
|---|---|---|---|---|---|---|---|---|
| I→T | CAGAN-1 | Visual similarity only | 0.7660 | 0.8155 | 0.7415 | 0.7954 | 0.9030 | 0.9266 |
| | CAGAN-2 | Textual similarity only | 0.7578 | 0.7975 | 0.7495 | 0.7722 | 0.8643 | 0.9036 |
| | CAGAN-3 | w/o adaptive graph attention module | 0.7965 | 0.8107 | 0.7776 | 0.7998 | 0.8981 | 0.9137 |
| | CAGAN-4 | w/o iterative approximate optimization | 0.7960 | 0.8123 | 0.7711 | 0.7910 | 0.9036 | 0.9121 |
| | CAGAN-5 | w/o attention mechanism | 0.7969 | 0.8225 | 0.7738 | 0.7875 | 0.9045 | 0.9152 |
| | CAGAN | ALL | 0.8195 | 0.8341 | 0.7890 | 0.8059 | 0.9205 | 0.9322 |
| T→I | CAGAN-1 | Visual similarity only | 0.7603 | 0.8053 | 0.7339 | 0.7553 | 0.8754 | 0.9098 |
| | CAGAN-2 | Textual similarity only | 0.7561 | 0.7787 | 0.7411 | 0.7551 | 0.8600 | 0.8867 |
| | CAGAN-3 | w/o adaptive graph attention module | 0.7954 | 0.8041 | 0.7451 | 0.7654 | 0.8537 | 0.8876 |
| | CAGAN-4 | w/o iterative approximate optimization | 0.7830 | 0.8131 | 0.7531 | 0.7647 | 0.8852 | 0.9053 |
| | CAGAN-5 | w/o attention mechanism | 0.7757 | 0.8000 | 0.7352 | 0.7550 | 0.8867 | 0.9042 |
| | CAGAN | ALL | 0.8018 | 0.8272 | 0.7472 | 0.7697 | 0.9057 | 0.9172 |

| Backbone (MIRFLICKR-25K) | I→T 32 bits | I→T 128 bits | T→I 32 bits | T→I 128 bits |
|---|---|---|---|---|
| AlexNet [15] | 0.7763 | 0.7951 | 0.7622 | 0.7894 |
| DenseNet [23] | 0.8212 | 0.8313 | 0.7982 | 0.8132 |
| ResNet-50 [14] | 0.7905 | 0.8122 | 0.7774 | 0.8036 |
| ResNet-152 [14] | 0.8230 | 0.8267 | 0.8033 | 0.8274 |
| ViT-B/16 [24] | 0.7780 | 0.8131 | 0.7714 | 0.8066 |
| CLIP-B/16 [21] | 0.8196 | 0.8342 | 0.8014 | 0.8272 |

| Method | Parameters | Training time, 16 bits | Training time, 32 bits | Training time, 64 bits | Training time, 128 bits | Query time, 16 bits | Query time, 32 bits | Query time, 64 bits | Query time, 128 bits |
|---|---|---|---|---|---|---|---|---|---|
| UDCMH [6] | ∼364M | 1101.55 | 1129.36 | 1157.24 | 1149.12 | 58.22 | 56.48 | 59.13 | 59.28 |
| DJSRH [10] | ∼368M | 995.58 | 1029.27 | 1032.88 | 1025.11 | 57.51 | 59.30 | 59.96 | 58.47 |
| AGCH [36] | ∼385M | 1122.18 | 1124.43 | 1130.68 | 1139.86 | 59.46 | 58.88 | 59.29 | 59.58 |
| CAGAN (ours) | ∼393M | 1110.50 | 1115.42 | 1125.78 | 1130.25 | 59.48 | 59.56 | 58.72 | 59.60 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Y.; Ge, M.; Li, M.; Li, T.; Xiang, S. CLIP-Based Adaptive Graph Attention Network for Large-Scale Unsupervised Multi-Modal Hashing Retrieval. Sensors 2023, 23, 3439. https://doi.org/10.3390/s23073439
