A Sliding Scale Signal Quality Metric of Photoplethysmography Applicable to Measuring Heart Rate across Clinical Contexts with Chest Mounting as a Case Study

Abstract

1. Introduction

2. Materials and Methods

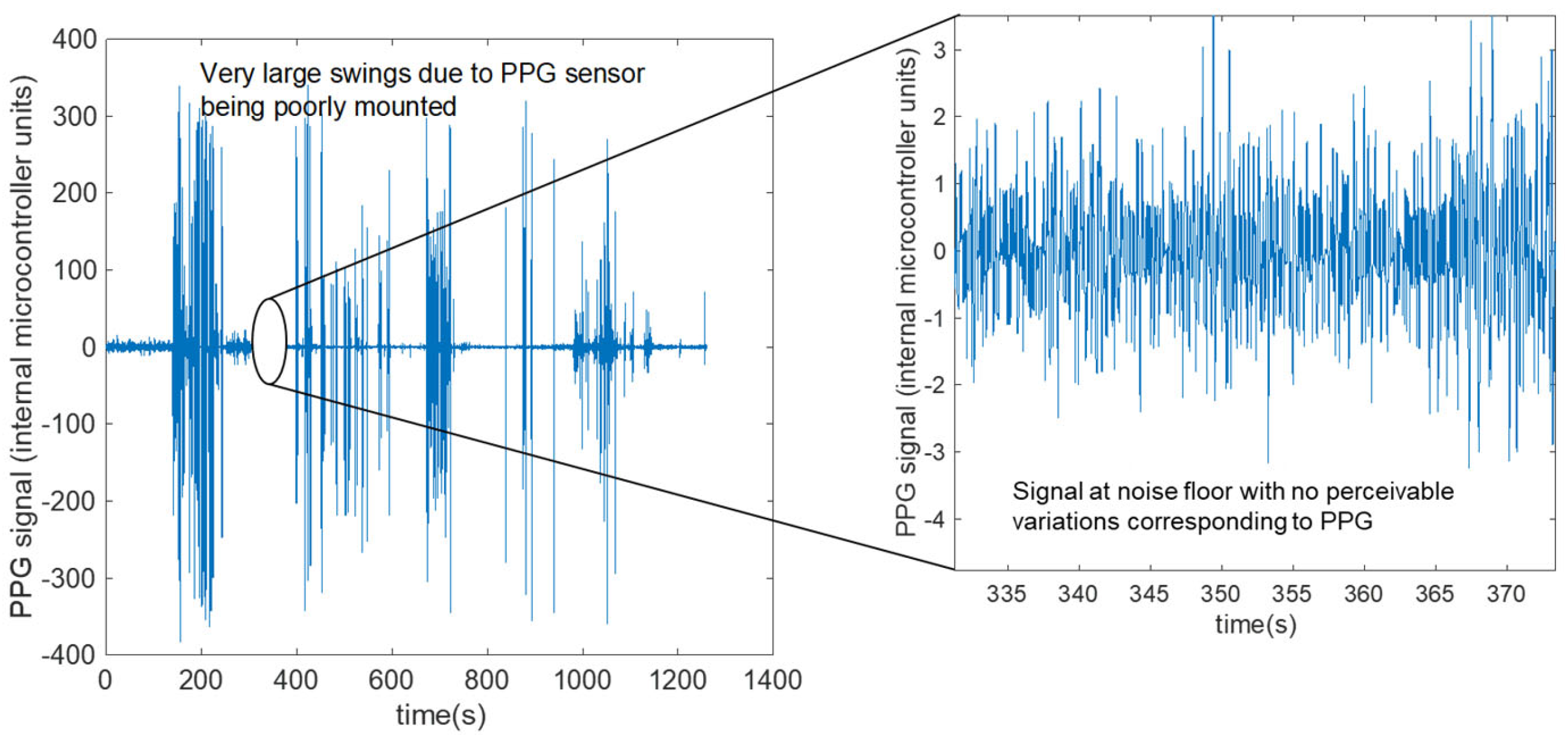

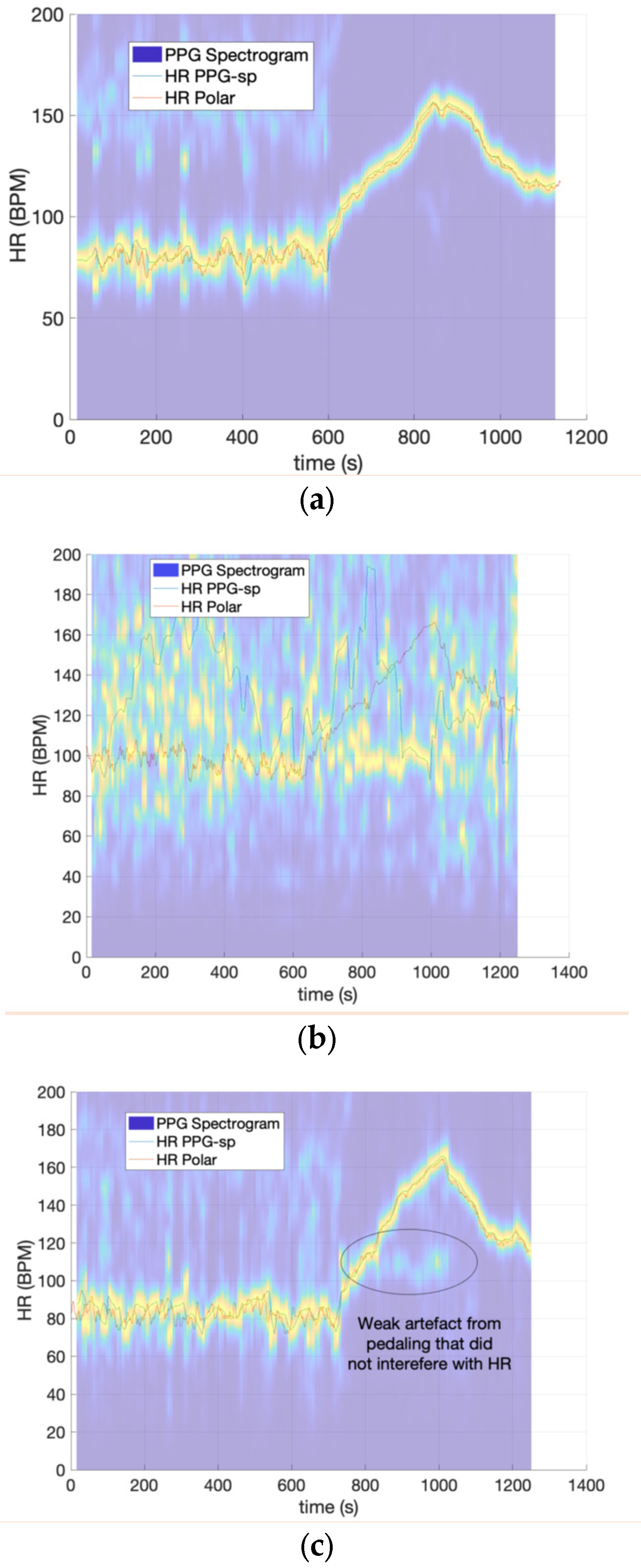

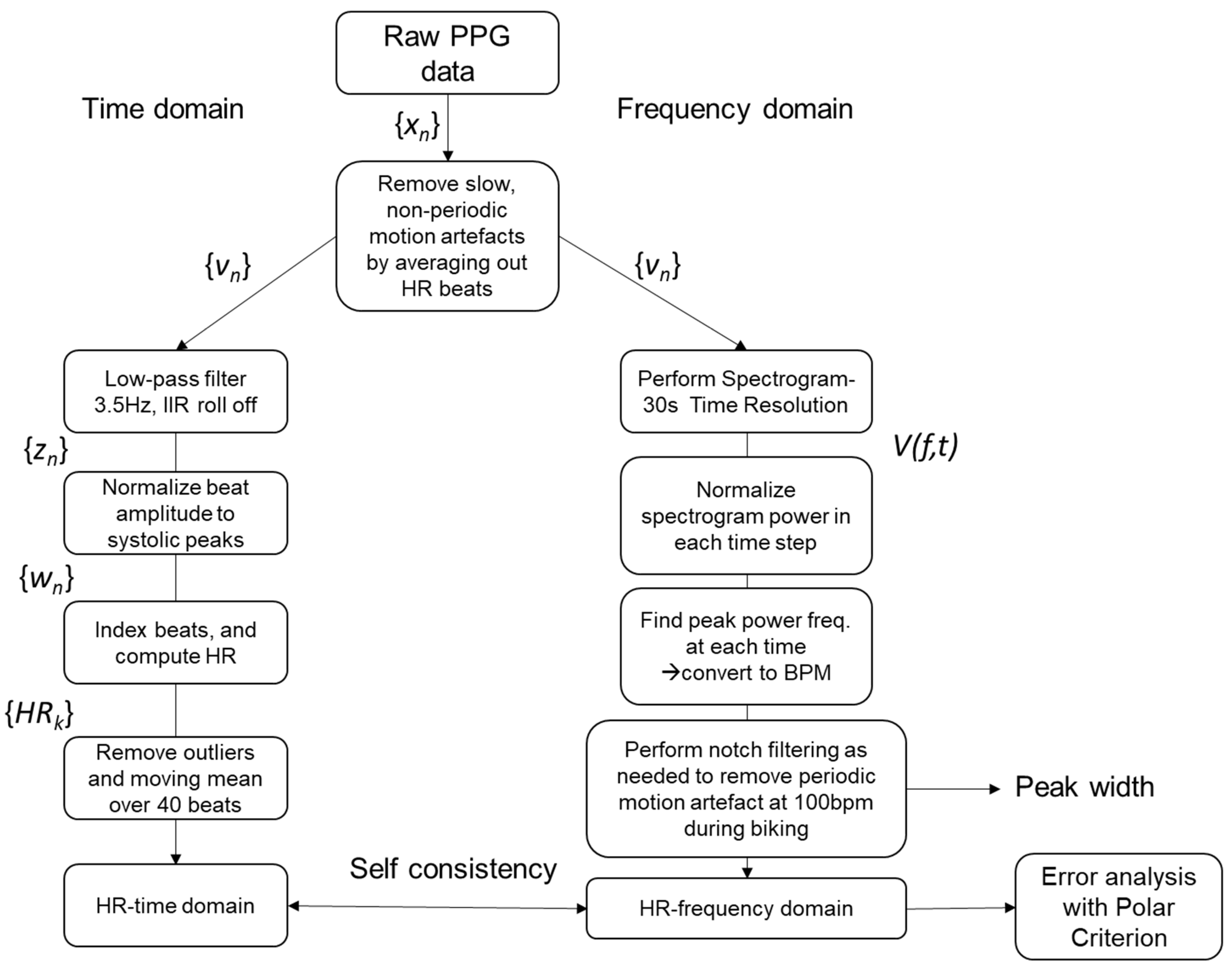

2.1. Signal Processing

2.2. Statistical Analysis

3. Results

3.1. Part 1—Signal Quality Agreement

3.2. Part 2—Associations between Signal Quality and Heart Rate Accuracy

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Session | ID | Accuracy | Self-Consistency | STD-Width | RMSE | MAE (BPM) | MAE (%) |
|---|---|---|---|---|---|---|---|
| 1 | 2 | 14.38% | 23.2 | 11.63 | 26.38 | 26.34 | 24.90% |
| 2 | 3 | 12.38% | 26.2 | 11.86 | 41.78 | 35.33 | 36.70% |
| 3 | 3 | 15.43% | 25.91 | 11.19 | 39.76 | 34.32 | 30.91% |
| 4 | 1 | 93.13% | 94.91 | 4.07 | 2.32 | 1.74 | 1.89% |
| 5 | 1 | 96.57% | 98.26 | 3.05 | 1.66 | 1.4 | 1.45% |
| 6 | 1 | 93.74% | 73.95 | 5.02 | 2.23 | 1.62 | 1.99% |
| 7 | 1 | 93.22% | 93.37 | 3.86 | 2.28 | 1.78 | 1.97% |
| 8 | 3 | 75.72% | 33.43 | 9.06 | 5.58 | 3.71 | 4.06% |
| 9 | 9 | 90.54% | 67.35 | 4.61 | 2.7 | 2.03 | 1.79% |
| 10 | 5 | 14.48% | 12.05 | 12.82 | 41.26 | 32.6 | 30.94% |
| 11 | 2 | 62.83% | 58.14 | 8.56 | 10.43 | 6.3 | 5.27% |
| 12 | 6 | 91.43% | 98.03 | 4.18 | 2.62 | 2.09 | 1.91% |
| 13 | 7 | 87.86% | 96.57 | 5.49 | 2.56 | 2.07 | 2.19% |
| 14 | 10 | 34.59% | 28.04 | 12.66 | 21.12 | 15.71 | 15.13% |
| 15 | 13 | 69.51% | 44.38 | 6.58 | 8.82 | 5.76 | 5.80% |
| 16 | 7 | 79.02% | 81.69 | 5.13 | 3.89 | 2.93 | 3.27% |
| 17 | 10 | 78.20% | 41.46 | 3.90 | 15.46 | 6.58 | 5.83% |
| 18 | 11 | 77.73% | 38.73 | 8.96 | 5.1 | 3.52 | 3.58% |
| 19 | 14 | 89.67% | 83.96 | 3.92 | 2.95 | 2.11 | 1.81% |
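The error columns in the per-session table (RMSE, MAE in BPM, MAE in %) can be illustrated with a minimal sketch. It uses the standard textbook definitions and assumes that MAE (%) is the mean absolute error relative to the reference heart rate; the source does not spell out the exact formulas here, so the function name `heart_rate_errors` and the sample values are hypothetical.

```python
import math


def heart_rate_errors(estimated, reference):
    """Return (RMSE, MAE in BPM, MAE in %) for paired heart-rate samples.

    Assumption: MAE (%) is the absolute error divided by the reference
    value, averaged over samples. This is a common convention, not a
    formula confirmed by the source.
    """
    if len(estimated) != len(reference) or not reference:
        raise ValueError("series must be non-empty and of equal length")
    diffs = [e - r for e, r in zip(estimated, reference)]
    n = len(diffs)
    rmse = math.sqrt(sum(d * d for d in diffs) / n)
    mae = sum(abs(d) for d in diffs) / n
    mae_pct = 100.0 * sum(abs(d) / r for d, r in zip(diffs, reference)) / n
    return rmse, mae, mae_pct


# Hypothetical PPG estimates against a hypothetical reference, in BPM.
rmse, mae, mae_pct = heart_rate_errors([72, 75, 80], [70, 75, 84])
```

Because RMSE squares each error before averaging, a session with a few large misses can show an RMSE well above its MAE, as session 17 does in the table (RMSE 15.46 against MAE 6.58).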

| Metric | Adequate Signal (N = 14): Mean | Adequate Signal: Min | Adequate Signal: Max | Adequate Signal: Std. Deviation | Poor Signal (N = 5): Mean | Poor Signal: Min | Poor Signal: Max | Poor Signal: Std. Deviation | p < 0.01 |
|---|---|---|---|---|---|---|---|---|---|
| Accuracy (%) | 84.23 | 62.83 | 96.57 | 10.34 | 18.25 | 12.38 | 34.59 | 9.20 | * |
| RMSE (BPM) | 4.90 | 1.66 | 15.46 | 4.02 | 34.06 | 21.12 | 41.78 | 9.62 | * |
| MAE (BPM) | 3.12 | 1.40 | 6.58 | 1.82 | 28.86 | 15.71 | 35.33 | 8.14 | * |
| MAE (%) | 3.06 | 1.45 | 5.83 | 1.59 | 27.72 | 15.13 | 36.70 | 8.18 | * |
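The group summary can be cross-checked against the per-session values. The sketch below recomputes the Accuracy row, assuming that the five poor-signal sessions are sessions 1, 2, 3, 10, and 14. That grouping is inferred from the reported minimum, maximum, and mean, and is not stated explicitly in the tables. It also assumes the reported spread is the sample standard deviation (n - 1 denominator).

```python
from statistics import mean, stdev

# Per-session accuracy (%) copied from the per-session table.
accuracy = {
    1: 14.38, 2: 12.38, 3: 15.43, 4: 93.13, 5: 96.57, 6: 93.74, 7: 93.22,
    8: 75.72, 9: 90.54, 10: 14.48, 11: 62.83, 12: 91.43, 13: 87.86,
    14: 34.59, 15: 69.51, 16: 79.02, 17: 78.20, 18: 77.73, 19: 89.67,
}

# Assumption: inferred grouping, not given explicitly in the source.
poor_sessions = {1, 2, 3, 10, 14}


def summarize(values):
    """Mean, min, max, and sample standard deviation of a list of values."""
    return {
        "mean": mean(values),
        "min": min(values),
        "max": max(values),
        "std": stdev(values),  # sample standard deviation (n - 1)
    }


poor = summarize([v for s, v in accuracy.items() if s in poor_sessions])
adequate = summarize([v for s, v in accuracy.items() if s not in poor_sessions])

for label, stats in (("adequate", adequate), ("poor", poor)):
    print(label, {k: round(v, 2) for k, v in stats.items()})
```

Under these assumptions the recomputed values round to the Accuracy row of the summary table (84.23, 62.83, 96.57, 10.34 for adequate signal; 18.25, 12.38, 34.59, 9.20 for poor signal). The significance test behind the `p < 0.01` column is not identified in this section, so it is not reproduced here.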


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

McLean, M.K.; Weaver, R.G.; Lane, A.; Smith, M.T.; Parker, H.; Stone, B.; McAninch, J.; Matolak, D.W.; Burkart, S.; Chandrashekhar, M.V.S.; et al. A Sliding Scale Signal Quality Metric of Photoplethysmography Applicable to Measuring Heart Rate across Clinical Contexts with Chest Mounting as a Case Study. Sensors 2023, 23, 3429. https://doi.org/10.3390/s23073429


APA Style: McLean, M. K., Weaver, R. G., Lane, A., Smith, M. T., Parker, H., Stone, B., McAninch, J., Matolak, D. W., Burkart, S., Chandrashekhar, M. V. S., & Armstrong, B. (2023). A Sliding Scale Signal Quality Metric of Photoplethysmography Applicable to Measuring Heart Rate across Clinical Contexts with Chest Mounting as a Case Study. Sensors, 23(7), 3429. https://doi.org/10.3390/s23073429