Facial Expression Recognition Using Local Sliding Window Attention

Abstract

1. Introduction

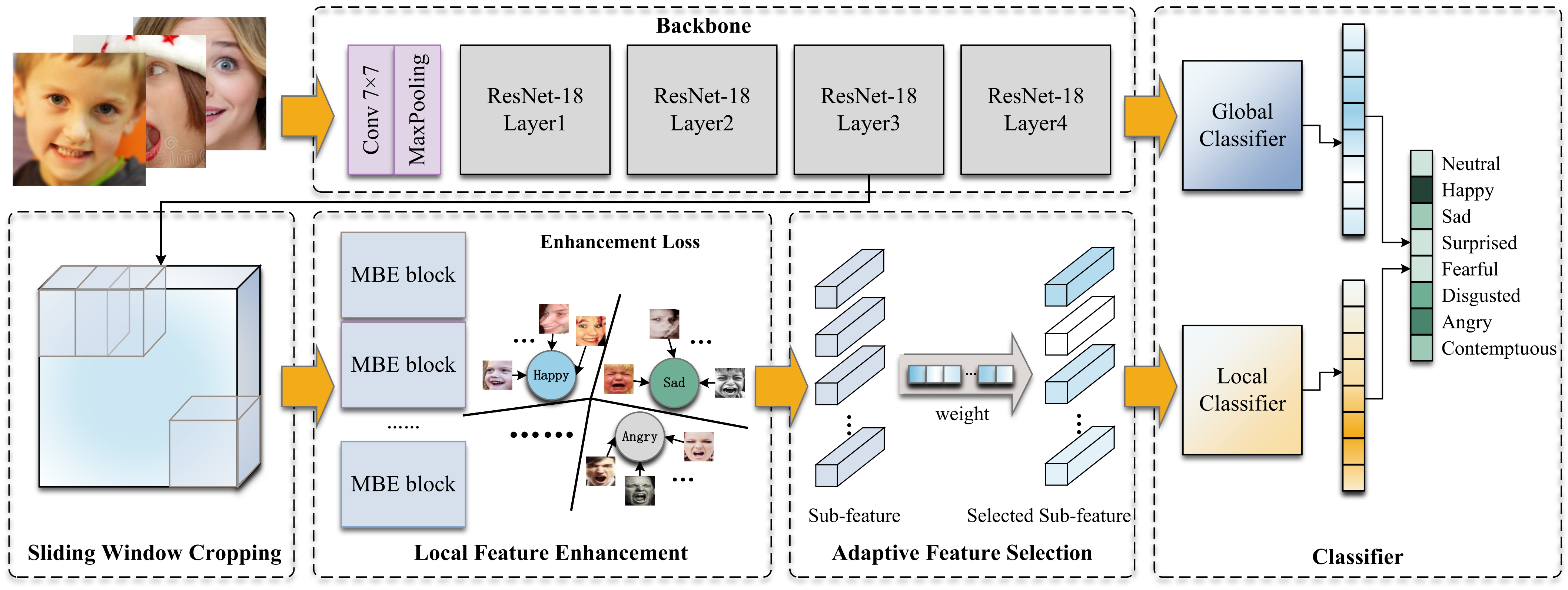

- We propose a Sliding Window Cropping strategy that crops out all local regions to alleviate the negative effects of occlusion and pose variation encountered in the wild. The strategy requires no complex preprocessing and preserves the integrity of the features.

- We propose the Local Feature Enhancement module to extract more fine-grained features containing semantic information.

- We introduce the Adaptive Feature Selection module to help the model autonomously find discriminative features in the local information.

- We conduct comprehensive experiments on widely used in-the-wild datasets and on specialized occlusion and pose datasets to verify the superiority of SWA-Net. The results show that our method can handle occlusion and pose variation in the wild.
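
The sliding-window cropping idea in the first contribution can be illustrated with a minimal sketch. Everything concrete here is an assumption made for illustration: the function name `sliding_window_crops`, the 14 × 14 single-channel input, the square window, and the absence of padding are not taken from the paper. Only the two parameters, window scale and stride, mirror the quantities varied in the ablation study.

```python
from typing import List, Tuple

Grid = List[List[float]]


def sliding_window_crops(grid: Grid, scale: int, stride: int) -> List[Tuple[int, int, Grid]]:
    """Return (top, left, crop) for every scale x scale window that fits in the grid.

    Assumption: no padding, so windows that would cross the border are skipped.
    """
    height, width = len(grid), len(grid[0])
    if scale > height or scale > width:
        raise ValueError("window is larger than the input")
    crops = []
    for top in range(0, height - scale + 1, stride):
        for left in range(0, width - scale + 1, stride):
            # Slice the rows first, then the columns of each selected row.
            crop = [row[left:left + scale] for row in grid[top:top + scale]]
            crops.append((top, left, crop))
    return crops


# Hypothetical 14 x 14 single-channel map; the size is chosen only for the example.
feature_map = [[float(r * 14 + c) for c in range(14)] for r in range(14)]
crops = sliding_window_crops(feature_map, scale=7, stride=2)
print(len(crops))  # 4 window positions per axis, so 16 crops
```

Because the windows overlap whenever the stride is smaller than the scale, every location of the input is covered by at least one crop, which is one way to read the claim that all local regions are cropped without destroying the features.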

2. Related Work

2.1. Facial Expression Recognition in the Wild

2.2. Facial Expression Recognition Based on Local Features

3. Proposed Method

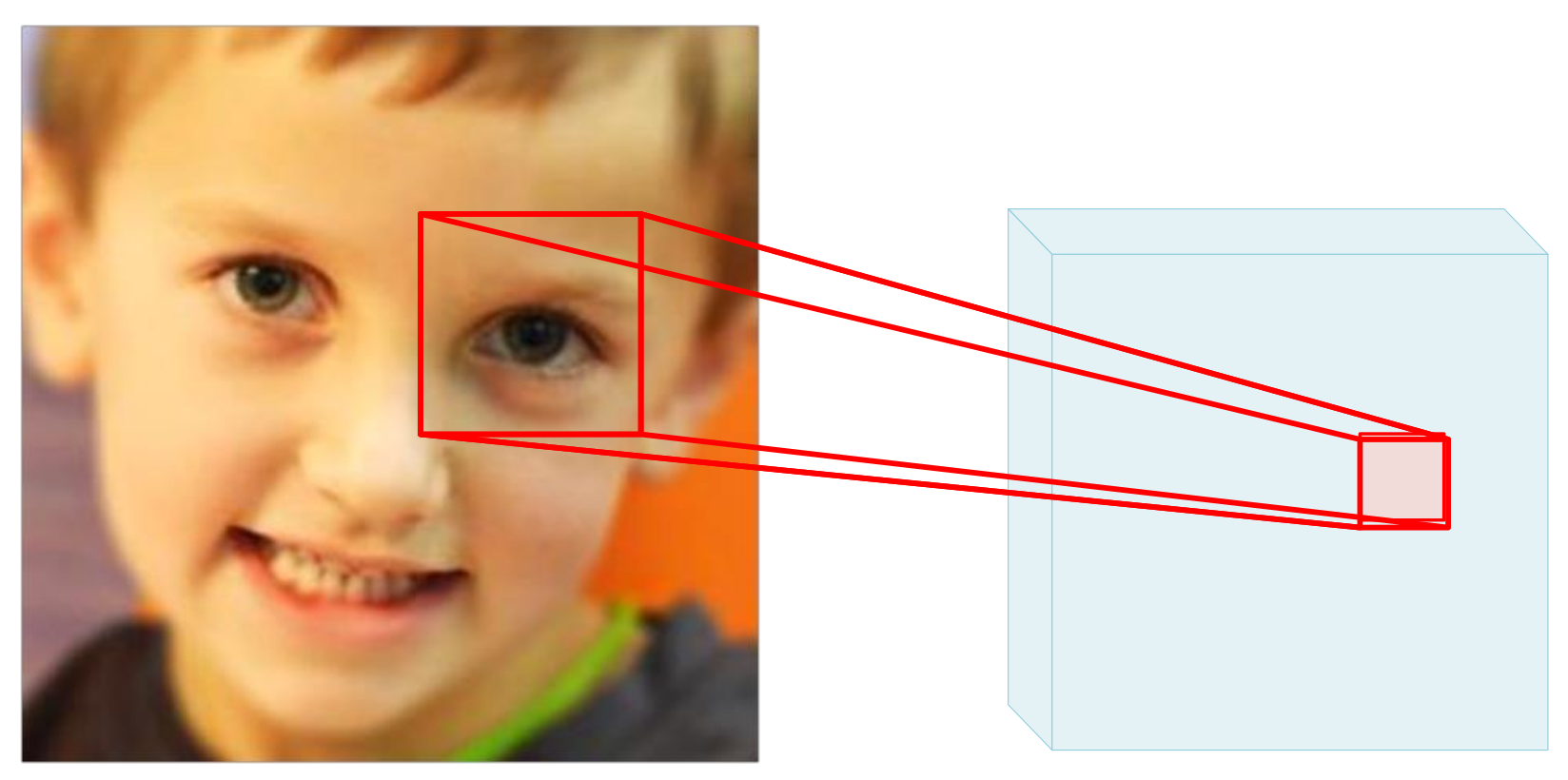

3.1. Sliding Window Cropping Module

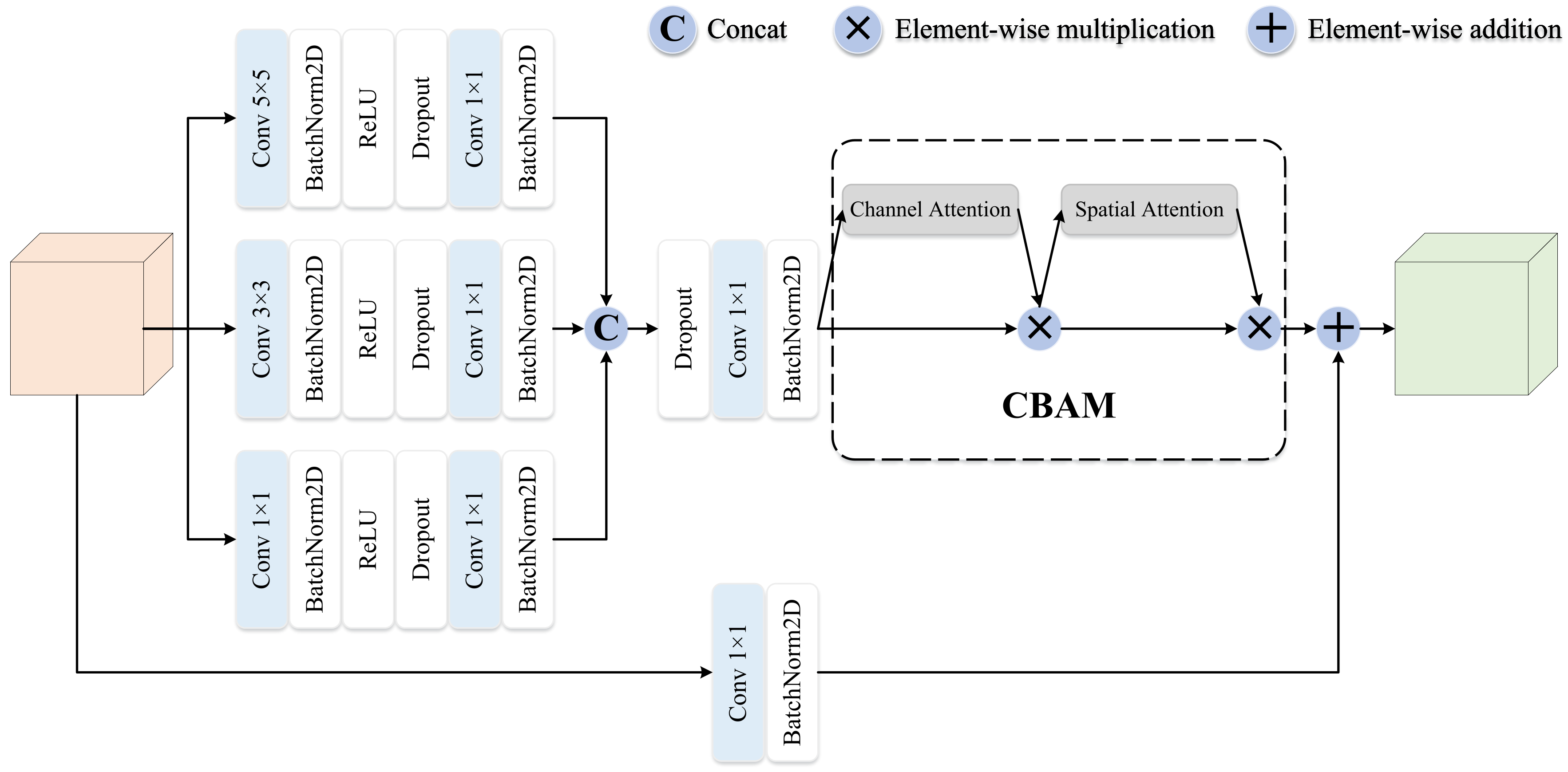

3.2. Local Feature Enhancement Module

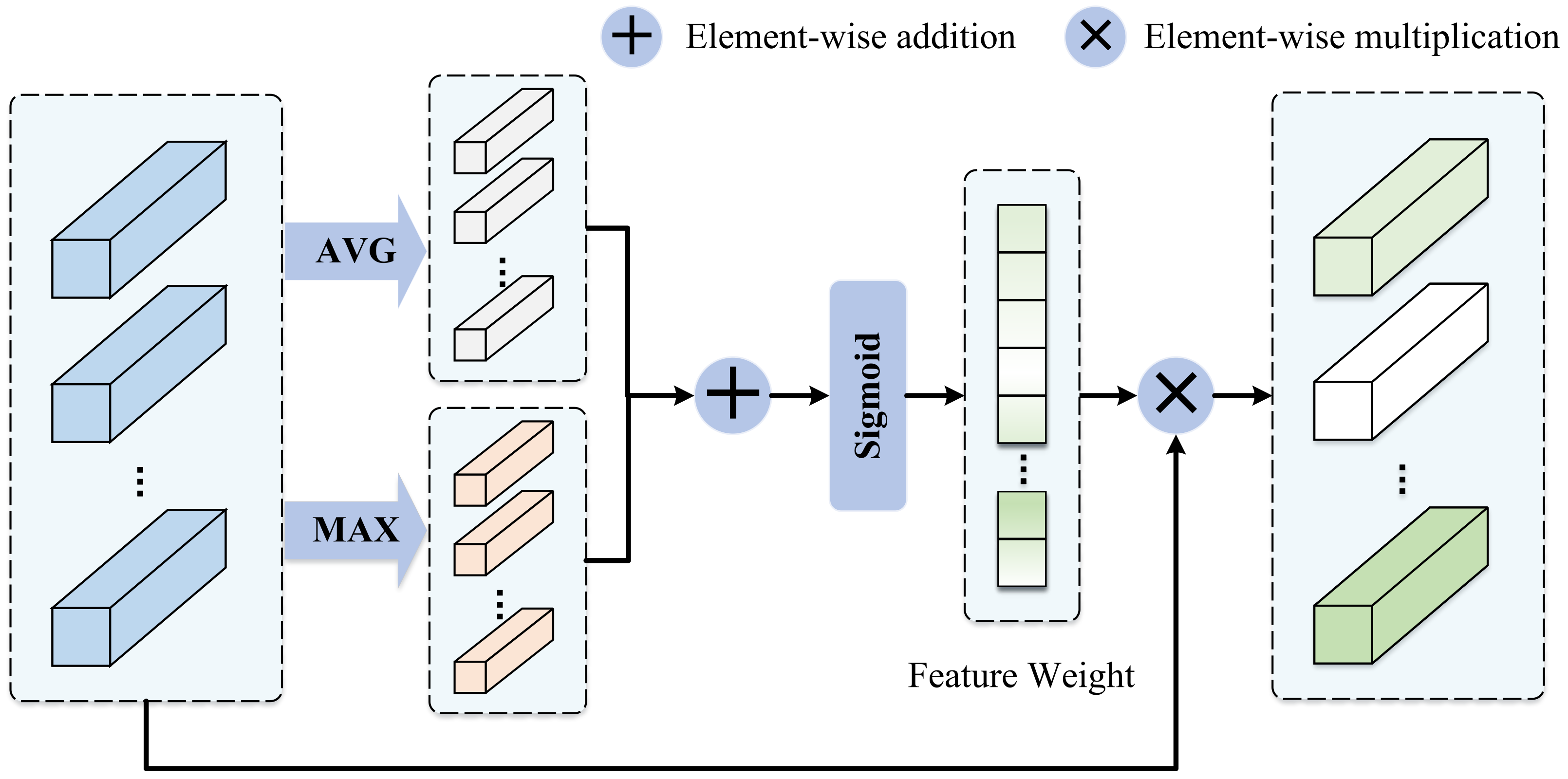

3.3. Adaptive Feature Selection Module

3.4. Fusion Strategy

4. Experimental Results

4.1. Datasets & Evaluation Criteria

4.1.1. Datasets

4.1.2. Evaluation Criteria

4.2. Implementation Details

4.3. Ablation Study

4.3.1. Sliding Window Cropping Module

4.3.2. Local Feature Enhancement Module

4.3.3. Adaptive Feature Selection Module

4.3.4. Fusion Strategy

4.3.5. The & Hyperparameters

4.4. Comparisons with State-of-the-Art Methods

4.4.1. Comparisons on the RAF-DB Dataset

4.4.2. Comparisons on the FERPlus Dataset

4.4.3. Comparisons on the AffectNet Dataset

4.5. Experiments on Realistic Occlusion and Pose Variation

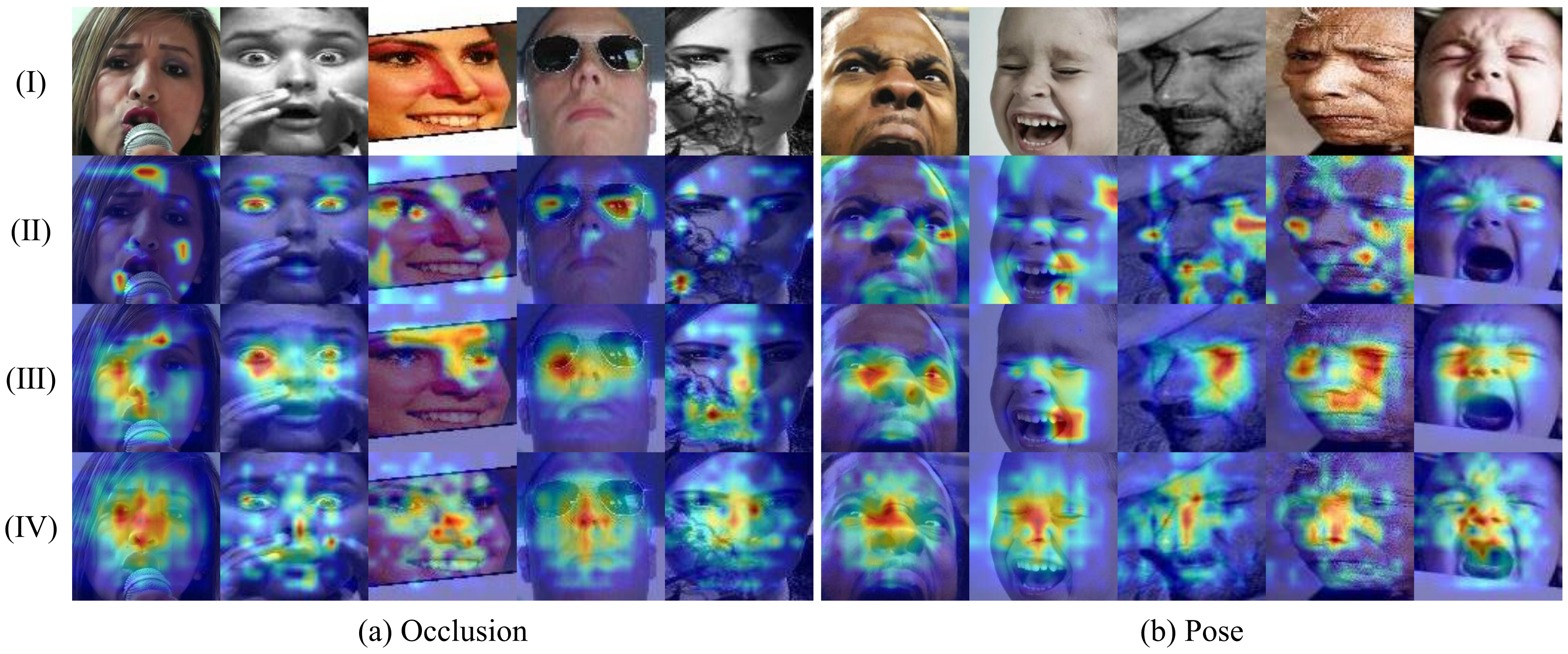

4.6. Visualizations

4.7. Failure Case Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Chowdary, M.K.; Nguyen, T.N.; Hemanth, D.J. Deep learning-based facial emotion recognition for human–computer interaction applications. Neural Comput. Appl. 2021, 1–18.

2. Zhang, Y.; Hua, C. Driver fatigue recognition based on facial expression analysis using local binary patterns. Optik 2015, 126, 4501–4505.

3. Bisogni, C.; Castiglione, A.; Hossain, S.; Narducci, F.; Umer, S. Impact of deep learning approaches on facial expression recognition in healthcare industries. IEEE Trans. Ind. Inform. 2022, 18, 5619–5627.

4. Ruiz-Garcia, A.; Webb, N.; Palade, V.; Eastwood, M.; Elshaw, M. Deep learning for real time facial expression recognition in social robots. In Proceedings of the International Conference on Neural Information Processing (ICONIP); Springer: Cham, Switzerland, 2018; pp. 392–402.

5. Karnati, M.; Seal, A.; Yazidi, A.; Krejcar, O. FLEPNet: Feature Level Ensemble Parallel Network for Facial Expression Recognition. IEEE Trans. Affect. Comput. 2022, 13, 2058–2070.

6. Ruan, D.; Yan, Y.; Lai, S.; Chai, Z.; Shen, C.; Wang, H. Feature Decomposition and Reconstruction Learning for Effective Facial Expression Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Virtual, 19–25 June 2021; pp. 7656–7665.

7. Lucey, P.; Cohn, J.F.; Kanade, T.; Saragih, J.; Ambadar, Z.; Matthews, I. The Extended Cohn-Kanade Dataset (CK+): A complete dataset for action unit and emotion-specified expression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), San Francisco, CA, USA, 13–18 June 2010; pp. 94–101.

8. Pantic, M.; Valstar, M.; Rademaker, R.; Maat, L. Web-based database for facial expression analysis. In Proceedings of the IEEE International Conference on Multimedia and Expo (ICME), Amsterdam, The Netherlands, 6–9 July 2005; p. 5.

9. Lyons, M.; Akamatsu, S.; Kamachi, M.; Gyoba, J. Coding facial expressions with Gabor wavelets. In Proceedings of the IEEE International Conference on Automatic Face and Gesture Recognition (FG), Nara, Japan, 14–16 April 1998; pp. 200–205.

10. Goodfellow, I.J.; Erhan, D.; Carrier, P.L.; Courville, A.; Mirza, M.; Hamner, B.; Cukierski, W.; Tang, Y.; Thaler, D.; Lee, D.H.; et al. Challenges in representation learning: A report on three machine learning contests. In Proceedings of the International Conference on Neural Information Processing (ICONIP), Daegu, Republic of Korea, 3–7 November 2013; pp. 117–124.

11. Li, S.; Deng, W.; Du, J. Reliable Crowdsourcing and Deep Locality-Preserving Learning for Expression Recognition in the Wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2584–2593.

12. Mollahosseini, A.; Hasani, B.; Mahoor, M.H. AffectNet: A Database for Facial Expression, Valence, and Arousal Computing in the Wild. IEEE Trans. Affect. Comput. 2019, 10, 18–31.

13. Zhang, Y.; Wang, C.; Ling, X.; Deng, W. Learn from all: Erasing attention consistency for noisy label facial expression recognition. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; pp. 418–434.

14. Xue, F.; Wang, Q.; Guo, G. TransFER: Learning Relation-aware Facial Expression Representations with Transformers. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Virtual Event, 11–17 October 2021; pp. 3581–3590.

15. Li, Y.; Gao, Y.; Chen, B.; Zhang, Z.; Lu, G.; Zhang, D. Self-Supervised Exclusive-Inclusive Interactive Learning for Multi-Label Facial Expression Recognition in the Wild. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 3190–3202.

16. Liu, H.; Cai, H.; Lin, Q.; Li, X.; Xiao, H. Adaptive Multilayer Perceptual Attention Network for Facial Expression Recognition. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 6253–6266.

17. Wang, K.; Peng, X.; Yang, J.; Meng, D.; Qiao, Y. Region Attention Networks for Pose and Occlusion Robust Facial Expression Recognition. IEEE Trans. Image Process. 2020, 29, 4057–4069.

18. Li, Y.; Zeng, J.; Shan, S.; Chen, X. Occlusion Aware Facial Expression Recognition Using CNN With Attention Mechanism. IEEE Trans. Image Process. 2019, 28, 2439–2450.

19. Zhao, Z.; Liu, Q.; Wang, S. Learning Deep Global Multi-Scale and Local Attention Features for Facial Expression Recognition in the Wild. IEEE Trans. Image Process. 2021, 30, 6544–6556.

20. Ng, P.C.; Henikoff, S. SIFT: Predicting amino acid changes that affect protein function. Nucleic Acids Res. 2003, 31, 3812–3814.

21. Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Diego, CA, USA, 20–26 June 2005; Volume 1, pp. 886–893.

22. Liu, C.; Wechsler, H. Gabor feature based classification using the enhanced fisher linear discriminant model for face recognition. IEEE Trans. Image Process. 2002, 11, 467–476.

23. Fasel, B. Robust face analysis using convolutional neural networks. In Proceedings of the 2002 International Conference on Pattern Recognition, Quebec City, QC, Canada, 11–15 August 2002; Volume 2, pp. 40–43.

24. Tang, Y. Deep learning using linear support vector machines. arXiv 2013, arXiv:1306.0239.

25. Kahou, S.E.; Pal, C.; Bouthillier, X.; Froumenty, P.; Gülçehre, Ç.; Memisevic, R.; Vincent, P.; Courville, A.; Bengio, Y.; Ferrari, R.C.; et al. Combining modality specific deep neural networks for emotion recognition in video. In Proceedings of the 15th ACM International Conference on Multimodal Interaction (ICMI), Sydney, Australia, 9–13 December 2013; pp. 543–550.

26. Li, S.; Deng, W. Deep facial expression recognition: A survey. IEEE Trans. Affect. Comput. 2020, 13, 1195–1215.

27. Zhang, Y.; Wang, C.; Deng, W. Relative Uncertainty Learning for Facial Expression Recognition. Adv. Neural Inf. Process. Syst. 2021, 34, 17616–17627.

28. Zou, X.; Yan, Y.; Xue, J.H.; Chen, S.; Wang, H. Learn-to-Decompose: Cascaded Decomposition Network for Cross-Domain Few-Shot Facial Expression Recognition. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; pp. 683–700.

29. Fan, Y.; Li, V.O.; Lam, J.C. Facial expression recognition with deeply-supervised attention network. IEEE Trans. Affect. Comput. 2020, 13, 1057–1071.

30. Jiang, J.; Deng, W. Disentangling Identity and Pose for Facial Expression Recognition. IEEE Trans. Affect. Comput. 2022, 13, 1868–1878.

31. Krithika, L.; Priya, G.L. MAFONN-EP: A minimal angular feature oriented neural network based emotion prediction system in image processing. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 1320–1329.

32. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

33. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

34. Wen, Y.; Zhang, K.; Li, Z.; Qiao, Y. A discriminative feature learning approach for deep face recognition. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 499–515.

35. Emery, A.E.; Muntoni, F.; Quinlivan, R. Duchenne Muscular Dystrophy; Number 67; Oxford Monographs on Medical Genetics: Oxford, UK, 2015.

36. Martinez, B.; Valstar, M.F.; Jiang, B.; Pantic, M. Automatic analysis of facial actions: A survey. IEEE Trans. Affect. Comput. 2017, 10, 325–347.

37. Barsoum, E.; Zhang, C.; Ferrer, C.C.; Zhang, Z. Training deep networks for facial expression recognition with crowd-sourced label distribution. In Proceedings of the 18th ACM International Conference on Multimodal Interaction (ICMI), Tokyo, Japan, 12–16 November 2016; pp. 279–283.

38. Guo, Y.; Zhang, L.; Hu, Y.; He, X.; Gao, J. MS-Celeb-1M: A dataset and benchmark for large-scale face recognition. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 87–102.

39. Zeng, J.; Shan, S.; Chen, X. Facial expression recognition with inconsistently annotated datasets. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 222–237.

40. Wang, K.; Peng, X.; Yang, J.; Lu, S.; Qiao, Y. Suppressing uncertainties for large-scale facial expression recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 6897–6906.

41. She, J.; Hu, Y.; Shi, H.; Wang, J.; Shen, Q.; Mei, T. Dive into ambiguity: Latent distribution mining and pairwise uncertainty estimation for facial expression recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Virtual, 19–25 June 2021; pp. 6248–6257.

42. Ma, F.; Sun, B.; Li, S. Facial Expression Recognition with Visual Transformers and Attentional Selective Fusion. IEEE Trans. Affect. Comput. 2021, 1–13.

43. Zeng, D.; Lin, Z.; Yan, X.; Liu, Y.; Wang, F.; Tang, B. Face2Exp: Combating Data Biases for Facial Expression Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; pp. 20291–20300.

44. Zhang, J.; Yu, H. Improving the Facial Expression Recognition and Its Interpretability via Generating Expression Pattern-map. Pattern Recognit. 2022, 129, 108737.

45. Parkhi, O.M.; Vedaldi, A.; Zisserman, A. Deep face recognition. In Proceedings of the British Machine Vision Conference 2015, Swansea, UK, 7–10 September 2015.

46. Arnaud, E.; Dapogny, A.; Bailly, K. THIN: Throwable information networks and application for facial expression recognition in the wild. IEEE Trans. Affect. Comput. 2022.

47. Fan, X.; Deng, Z.; Wang, K.; Peng, X.; Qiao, Y. Learning discriminative representation for facial expression recognition from uncertainties. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Virtual, 25–28 October 2020; pp. 903–907.

48. Farzaneh, A.H.; Qi, X. Discriminant distribution-agnostic loss for facial expression recognition in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 13–19 June 2020; pp. 406–407.

49. Li, Y.; Lu, Y.; Chen, B.; Zhang, Z.; Li, J.; Lu, G.; Zhang, D. Learning informative and discriminative features for facial expression recognition in the wild. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 3178–3189.

50. Xie, W.; Wu, H.; Tian, Y.; Bai, M.; Shen, L. Triplet loss with multistage outlier suppression and class-pair margins for facial expression recognition. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 690–703.

51. Hayale, W.; Negi, P.S.; Mahoor, M. Deep Siamese neural networks for facial expression recognition in the wild. IEEE Trans. Affect. Comput. 2021.

52. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626.

| SWC | LFE | AFS | Acc. (%) |
|---|---|---|---|
| — | — | — | 87.13 |
| ✓ | — | — | 88.92 |
| ✓ | ✓ | — | 89.37 |
| ✓ | ✓ | ✓ | 90.03 |

| Window stride | Window scale | Acc. (%) |
|---|---|---|
| 2 | 3 | 88.85 |
| 2 | 5 | 89.42 |
| 2 | 7 | 90.03 |
| 2 | 9 | 88.95 |
| 2 | 11 | 88.69 |
| 3 | 3 | 89.55 |
| 3 | 5 | 88.68 |
| 3 | 7 | 89.18 |
| 3 | 9 | 89.37 |
| 3 | 11 | 88.53 |

| Fusion Strategy | Acc. (%) |
|---|---|
| Feature-Level Fusion | 89.12 |
| Decision-Level Fusion | 90.03 |
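
The difference between the two fusion strategies compared above can be sketched in a few lines. This is a generic illustration, not the paper's implementation: the helper names, the toy linear classifier, the choice of plain averaging as the fusion operator, and all numeric values are assumptions. Feature-level fusion merges the branch features before a single classifier; decision-level fusion classifies each branch separately and merges the class probabilities.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def linear(features: List[float], weights: List[List[float]]) -> List[float]:
    """Toy classifier head: one weight row per class, no bias (hypothetical)."""
    return [sum(w * f for w, f in zip(row, features)) for row in weights]


def feature_level_fusion(branch_features: List[List[float]],
                         weights: List[List[float]]) -> List[float]:
    """Average the branch feature vectors first, then classify once."""
    n = len(branch_features)
    fused = [sum(col) / n for col in zip(*branch_features)]
    return softmax(linear(fused, weights))


def decision_level_fusion(branch_features: List[List[float]],
                          branch_weights: List[List[List[float]]]) -> List[float]:
    """Classify each branch separately, then average the class probabilities."""
    probs = [softmax(linear(f, w)) for f, w in zip(branch_features, branch_weights)]
    n = len(probs)
    return [sum(col) / n for col in zip(*probs)]


# Toy two-class example with a global and a local branch (values are made up).
global_feat, local_feat = [1.0, 0.0], [0.0, 1.0]
head = [[2.0, 0.0], [0.0, 1.0]]
p_feature = feature_level_fusion([global_feat, local_feat], head)
p_decision = decision_level_fusion([global_feat, local_feat], [head, head])
print(p_feature, p_decision)
```

With decision-level fusion each branch keeps its own classifier, so a branch degraded by occlusion can be outvoted by the others instead of contaminating a shared feature vector.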

| The Values of | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 |
|---|---|---|---|---|---|---|---|---|---|
| Acc. (%) | 89.28 | 89.61 | 89.71 | 89.80 | 90.03 | 89.57 | 89.15 | 88.98 | 88.95 |

| The Values of | 0.1 | 0.01 | 0.001 | 0.0001 | 0.00001 | 0.000001 | 0.0000001 |
|---|---|---|---|---|---|---|---|
| Acc. (%) | 86.41 | 86.51 | 87.56 | 90.03 | 89.57 | 89.31 | 89.24 |

| Methods | Backbone | Year | Acc. (%) |
|---|---|---|---|
| IPA2LT [39] | ResNet (80 layers) | 2018 | 86.77 |
| gACNN [18] | ResNet-18 | 2019 | 85.07 |
| RAN [17] | ResNet-18 | 2019 | 86.90 |
| SCN [40] | ResNet-18 | 2020 | 87.03 |
| MA-Net [19] | ResNet-18 | 2021 | 88.40 |
| DMUE [41] | ResNet-18 | 2021 | 88.76 |
| VTFF [42] | ResNet-18 | 2021 | 88.14 |
| RUL [27] | ResNet-18 | 2021 | 88.98 |
| Face2Exp [43] | ResNet-50 | 2022 | 88.54 |
| EEE [44] | VGG-Face [45] | 2022 | 87.10 |
| IPD-FER [30] | ResNet-18 | 2022 | 88.89 |
| THIN [46] | VGG-16 | 2022 | 87.81 |
| SWA-Net (Ours) | ResNet-18 | — | 90.03 |

| Methods | Backbone | Year | Acc. (%) |
|---|---|---|---|
| PLD [37] | VGG-13 | 2016 | 85.10 |
| RAN [17] | ResNet-18 | 2019 | 88.55 |
| SCN [40] | ResNet-18 | 2020 | 88.01 |
| LDR [47] | ResNet-18 | 2020 | 87.60 |
| RUL [27] | ResNet-18 | 2021 | 88.75 |
| DMUE [41] | ResNet-18 | 2021 | 88.61 |
| VTFF [42] | ResNet-18 | 2021 | 88.81 |
| IPD-FER [30] | ResNet-18 | 2022 | 88.42 |
| SWA-Net (Ours) | ResNet-18 | — | 89.22 |

| Methods | Backbone | Year | Acc. (%) |
|---|---|---|---|
| gACNN [18] | ResNet-18 | 2019 | 58.78 |
| RAN [17] | ResNet-18 | 2019 | 59.50 |
| DDA Loss [48] | ResNet-18 | 2020 | 62.34 |
| IDFL [49] | ResNet-50 | 2021 | 59.20 |
| T21DST [50] | ResNet-18 | 2021 | 60.12 |
| SDW [51] | ResNet-18 | 2021 | 61.11 |
| DMUE [41] | ResNet-18 | 2021 | 62.84 |
| DMUE [41] | Res-50IBN | 2021 | 63.11 |
| EEE [44] | VGG-Face | 2022 | 62.10 |
| IPD-FER [30] | ResNet-18 | 2022 | 62.23 |
| SWA-Net (Ours) | ResNet-18 | — | 63.97 |

| Datasets | Methods | Year | Occlusion | Pose ≥ 30° | Pose ≥ 45° |
|---|---|---|---|---|---|
| RAF-DB | RAN | 2019 | 82.72 | 86.74 | 85.20 |
| RAF-DB | MA-Net | 2021 | 83.65 | 89.66 | 87.99 |
| RAF-DB | SWA-Net | — | 87.62 | 89.71 | 89.61 |
| FERPlus | RAN | 2019 | 83.63 | 82.23 | 80.40 |
| FERPlus | MA-Net | 2021 | — | — | — |
| FERPlus | SWA-Net | — | 85.79 | 88.80 | 87.05 |
| AffectNet | RAN | 2019 | 58.50 | 53.90 | 53.19 |
| AffectNet | MA-Net | 2021 | 59.59 | 57.51 | 57.78 |
| AffectNet | SWA-Net | — | 60.81 | 59.92 | 61.19 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Qiu, S.; Zhao, G.; Li, X.; Wang, X. Facial Expression Recognition Using Local Sliding Window Attention. Sensors 2023, 23, 3424. https://doi.org/10.3390/s23073424
