Unsupervised Video Summarization Based on Deep Reinforcement Learning with Interpolation

Abstract

1. Introduction

2. Background and Related Work

2.1. Video Summarization

2.2. Policy Gradient Method

3. Method

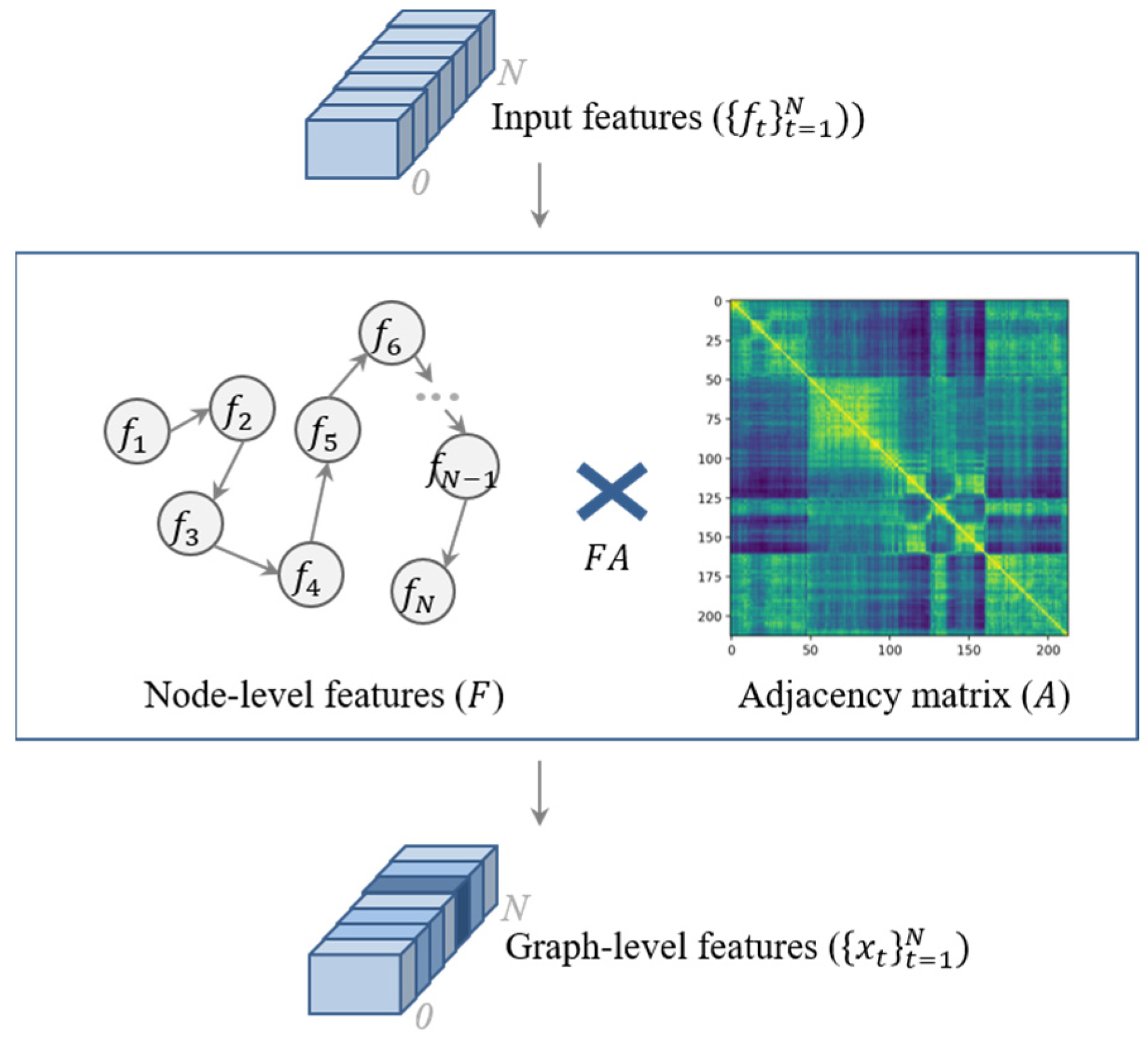

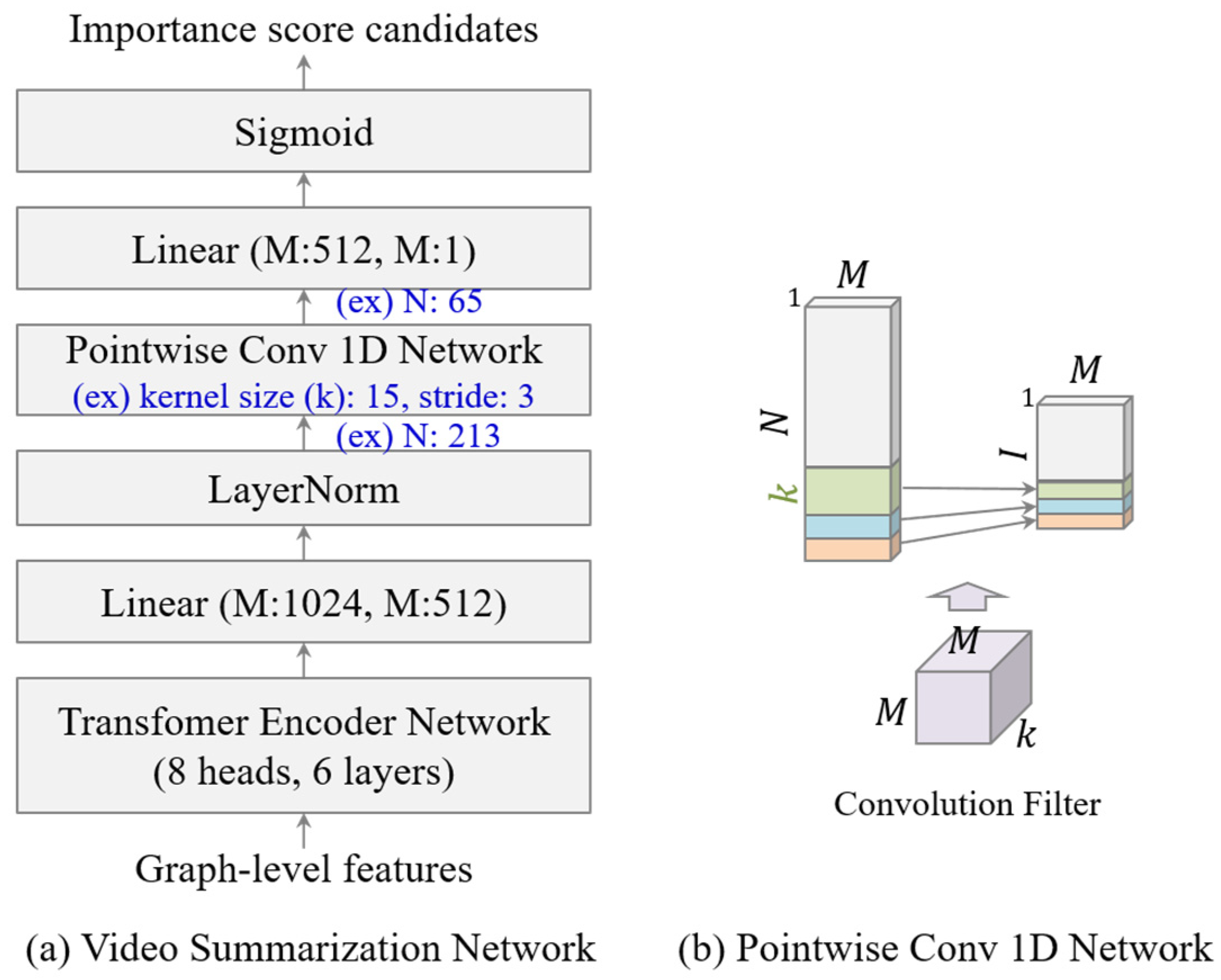

3.1. Video Summarization Network

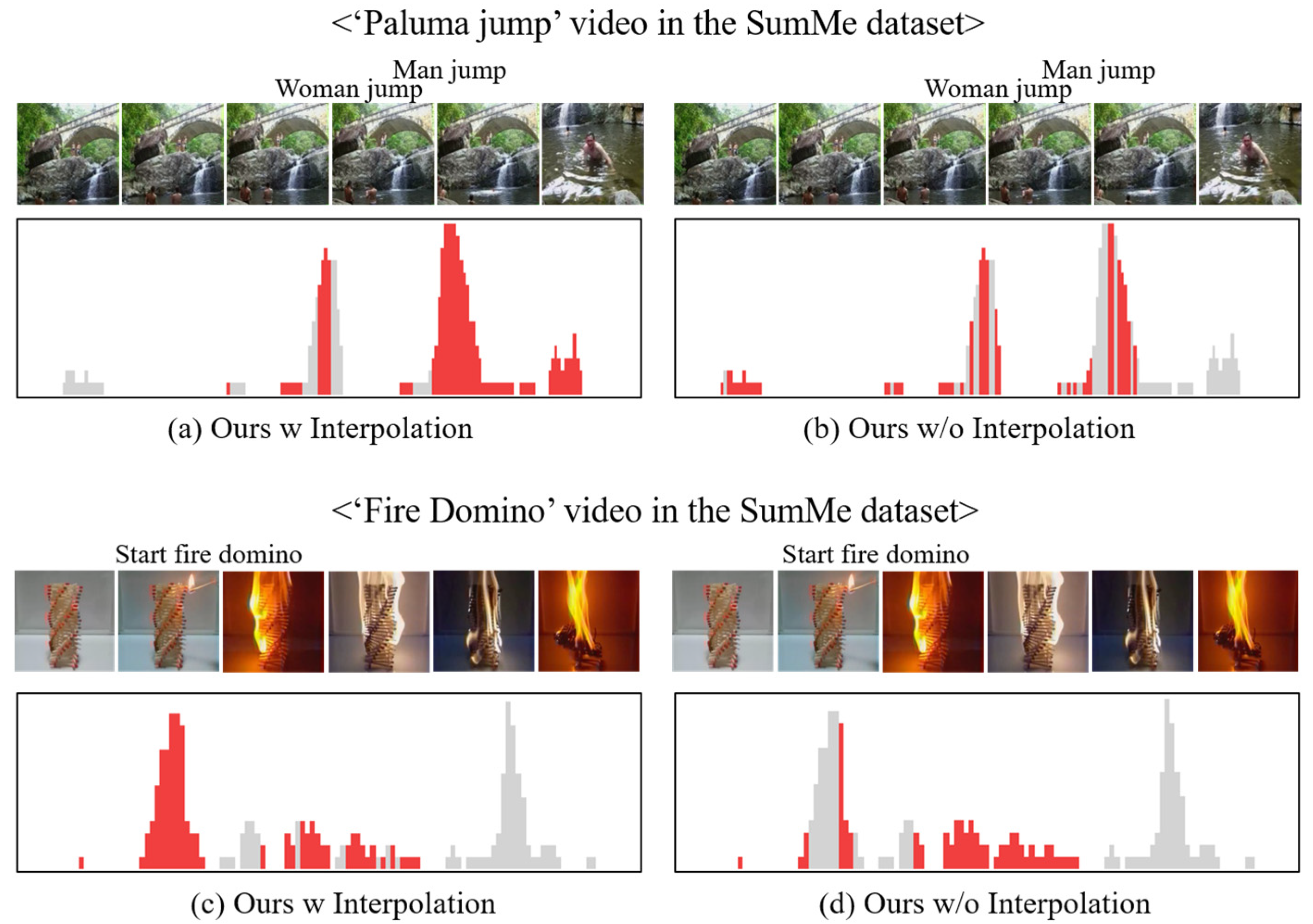

3.2. Piecewise Linear Interpolation

3.3. Reward Functions

3.4. Training with Policy Gradient

3.5. Regularization

| Algorithm 1. Training Video Summarization Network |
|---|
| 1: Input: Graph-level features of the video () |
| 2: Output: Proposed network’s parameters () |
| 3: |
| 4: for the number of iterations do |
| 6: Network() % Generate importance score candidate |
| 7: Piecewise linear interpolation of |
| 8: Bernoulli Distribution() % Action from the score |
| 9: % Calculate Reward Functions and A Loss Function using and |
| 10: % Minimization |
| 11: % Update the network using the policy gradient method: |
| 12: end for |
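The loop in Algorithm 1 (score candidate, piecewise linear interpolation, Bernoulli action, policy-gradient update) can be illustrated with a minimal NumPy sketch. It is an illustration under stated assumptions, not the authors' implementation: the function names `interpolate_scores`, `sample_actions`, and `reinforce_surrogate` are hypothetical, the candidate scores are assumed to be evenly spaced over the video, and the reward is a placeholder number because the reward terms are not defined in this section.

```python
import numpy as np


def interpolate_scores(candidate, num_frames):
    """Stretch a short importance-score candidate to one score per frame.

    Assumption: candidate scores sit at evenly spaced frame positions,
    with the first and last candidates on the first and last frames.
    """
    candidate = np.asarray(candidate, dtype=float)
    knots = np.linspace(0, num_frames - 1, num=len(candidate))
    return np.interp(np.arange(num_frames), knots, candidate)


def sample_actions(scores, rng):
    """Draw one keep/drop action per frame, a_t ~ Bernoulli(score_t)."""
    return (rng.random(scores.shape) < scores).astype(int)


def reinforce_surrogate(scores, actions, reward, baseline=0.0):
    """REINFORCE-style surrogate loss: -(R - b) * sum_t log p(a_t).

    Minimizing this value with respect to the parameters that produced
    `scores` follows the policy gradient. `reward` is a placeholder here.
    """
    eps = 1e-8  # keeps the logarithms finite at scores of exactly 0 or 1
    log_prob = actions * np.log(scores + eps) + (1 - actions) * np.log(1 - scores + eps)
    return -(reward - baseline) * log_prob.sum()


rng = np.random.default_rng(0)
candidate = [0.2, 0.8, 0.4]           # hypothetical network output
scores = interpolate_scores(candidate, num_frames=9)
actions = sample_actions(scores, rng)
loss = reinforce_surrogate(scores, actions, reward=0.5)  # placeholder reward
```

In this sketch the network only has to emit a short candidate sequence, and interpolation supplies the per-frame scores from which actions are sampled. A real training loop would compute `scores` inside an automatic-differentiation framework so that the surrogate loss can be backpropagated to the network parameters.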

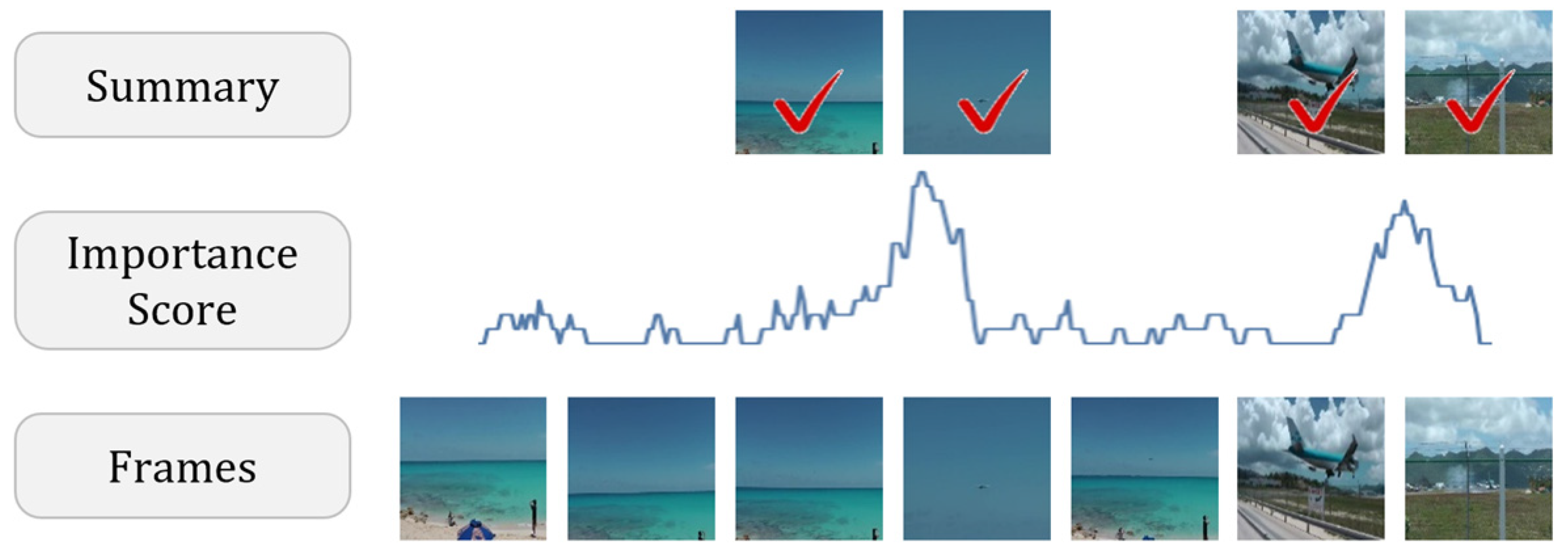

3.6. Generating a Video Summary

4. Experiments

4.1. Dataset

4.2. Evaluation Setup

4.3. Implementation Details

4.4. Performance Evaluation

4.4.1. Quantitative Evaluation

4.4.2. Qualitative Evaluation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Ejaz, N.; Mehmood, I.; Baik, S.W. Efficient visual attention based framework for extracting key frames from videos. J. Image Commun. 2013, 28, 34–44.
2. Gygli, M.; Grabner, H.; Riemenschneider, H.; Gool, L.V. Creating summaries from user videos. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 505–520.
3. Yoon, U.N.; Hong, M.D.; Jo, G.S. Interp-SUM: Unsupervised Video Summarization with Piecewise Linear Interpolation. Sensors 2021, 21, 4562.
4. Apostolidis, E.; Adamantidou, E.; Metsai, A.; Mezaris, V.; Patras, I. Unsupervised Video Summarization via Attention-Driven Adversarial Learning. In Proceedings of the International Conference on Multimedia Modeling (MMM), Daejeon, Korea, 5–8 January 2020; pp. 492–504.
5. Jung, Y.J.; Cho, D.Y.; Kim, D.H.; Woo, S.H.; Kweon, I.S. Discriminative feature learning for unsupervised video summarization. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 8537–8544.
6. Zhou, K.; Qiao, Y.; Xiang, T. Deep Reinforcement Learning for Unsupervised Video Summarization with Diversity-Representativeness Reward. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32, pp. 7582–7589.
7. Song, Y.; Vallmitjana, J.; Stent, A.; Jaimes, A. TVSum: Summarizing web videos using titles. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 5179–5187.
8. Feng, L.; Li, Z.; Kuang, Z.; Zhang, W. Extractive Video Summarizer with Memory Augmented Neural Networks. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Republic of Korea, 22–26 October 2018; pp. 976–983.
9. Zhang, K.; Chao, W.L.; Sha, F.; Grauman, K. Video Summarization with Long Short-term Memory. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016; pp. 766–782.
10. Zhang, Y.; Kampffmeyer, M.; Zhao, X.; Tan, M. DTR-GAN: Dilated Temporal Relational Adversarial Network for Video Summarization. In Proceedings of the ACM Turing Celebration Conference (ACM TURC), Shanghai, China, 19–20 May 2018; pp. 1–6.
11. Ji, J.; Xiong, K.; Pang, Y.; Li, X. Video Summarization with Attention-Based Encoder-Decoder Networks. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 1709–1717.
12. Mahasseni, B.; Lam, M.; Todorovic, S. Unsupervised Video Summarization with Adversarial LSTM Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 202–211.
13. Yuan, L.; Tay, F.E.; Li, P.; Zhou, L.; Feng, F. Cycle-SUM: Cycle-consistent Adversarial LSTM Networks for Unsupervised Video Summarization. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 9143–9150.
14. Kaufman, D.; Levi, G.; Hassner, T.; Wolf, L. Temporal Tessellation: A Unified Approach for Video Analysis. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 94–104.
15. Rochan, M.; Ye, L.; Wang, Y. Video Summarization Using Fully Convolutional Sequence Networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 347–363.
16. Silver, D.; Lever, G.; Heess, N.; Degris, T.; Wierstra, D.; Riedmiller, M. Deterministic Policy Gradient Algorithms. In Proceedings of the 31st International Conference on Machine Learning (ICML), Beijing, China, 21–26 June 2014; pp. 387–395.
17. Yu, Y. Towards Sample Efficient Reinforcement Learning. In Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence (IJCAI), Stockholm, Sweden, 13–19 July 2018; pp. 5739–5743.
18. Lehnert, L.; Laroche, R.; Seijen, H.V. On Value Function Representation of Long Horizon Problems. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 3457–3465.
19. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.
20. Wu, Y.; Omar, B.F.E.; Xi, L.; Fei, W. Adaptive Graph Representation Learning for Video Person Re-Identification. IEEE Trans. Image Process. 2020, 29, 8821–8830.
21. Nachum, O.; Norouzi, M.; Schuurmans, D. Improving Policy Gradient by Exploring Under-Appreciated Rewards. arXiv 2016, arXiv:1611.09321.
22. Potapov, D.; Douze, M.; Harchaoui, Z.; Schmid, C. Category-specific video summarization. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 540–555.
23. Rochan, M.; Wang, Y. Video Summarization by Learning from Unpaired Data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 7902–7911.
24. Yunjae, J.; Donghyeon, C.; Sanghyun, W.; Inso, K. Global-and-Local Relative Position Embedding for Unsupervised Video Summarization. In Proceedings of the European Conference on Computer Vision (ECCV), Virtual, 23–28 August 2020; Volume 12370, pp. 167–183.
25. Evlampios, A.; Eleni, A.; Alexandros, M.I.; Vasileios, M.; Ioannis, P. AC-SUM-GAN: Connecting Actor-Critic and Generative Adversarial Networks for Unsupervised Video Summarization. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 3278–3292.
26. Aniwat, P.; Yi, G.; Fangli, Y.; Wentian, X.; Zheng, Z. Self-Attention Recurrent Summarization Network with Reinforcement Learning for Video Summarization Task. In Proceedings of the IEEE International Conference on Multimedia and Expo (ICME), Virtual, 5–9 July 2021; pp. 1–6.
27. Xu, W.; Yujie, L.; Haoyu, W.; Longzhao, H.; Shuxue, D. A Video Summarization Model Based on Deep Reinforcement Learning with Long-Term Dependency. Sensors 2022, 22, 7689.

| Kernel Size | Stride Size | SumMe | TVSum |
|---|---|---|---|
| 3 | 3 | 50.78 | 59.76 |
| 5 | 3 | 51.58 | 60.08 |
| 10 | 3 | 49.74 | 59.54 |
| 15 | 3 | 51.66 | 59.86 |
| 15 | 5 | 50.70 | 59.62 |
| 15 | 10 | 47.88 | 56.54 |
| 20 | 3 | 49.16 | 59.06 |
| 25 | 3 | 46.22 | 56.46 |

| Method | SumMe | TVSum |
|---|---|---|
| Ours w/o 1D Conv Network and w/o Interpolation | 50.02 | 58.70 |
| Ours w/o Temporal Consistency Reward | 49.88 | 60.58 |
| Ours w/o Graph-level Features | 50.46 | 57.26 |
| Ours | 51.66 | 59.86 |

| Method | SumMe | TVSum |
|---|---|---|
| SUM-GAN [12] | 39.1 (−) | 51.7 (−) |
| SUM-FCN [15] | 41.5 (−) | 52.7 (−) |
| DR-DSN [6] | 41.4 (−) | 57.6 (−) |
| Cycle-SUM [13] | 41.9 (−) | 57.6 (−) |
| CSNet [5] | 51.3 (−) | 58.8 (−) |
| UnpairedVSN [23] | 47.5 (−) | 55.6 (−) |
| SUM-GAN-AAE [4] | 48.9 (−) | 58.3 (−) |
| CSNet+GL+RPE [24] | 50.2 (−) | 59.1 (−) |
| AC-SUM-GAN [25] | 50.8 (−) | 60.6 (+) |
| DSR-RL-GRU [26] | 50.3 (−) | 60.2 (+) |
| AuDSN-SD [27] | 47.7 (−) | 59.8 (−) |
| Interp-SUM [3] | 47.68 (−) | 59.14 (−) |
| Ours | 51.66 | 59.86 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yoon, U.N.; Hong, M.D.; Jo, G.-S. Unsupervised Video Summarization Based on Deep Reinforcement Learning with Interpolation. Sensors 2023, 23, 3384. https://doi.org/10.3390/s23073384
