Formal Analysis of Trust and Reputation for Service Composition in IoT

Abstract

1. Introduction

2. Formal Methods

2.1. Formal Definitions

2.2. HOL Syntax and Semantics

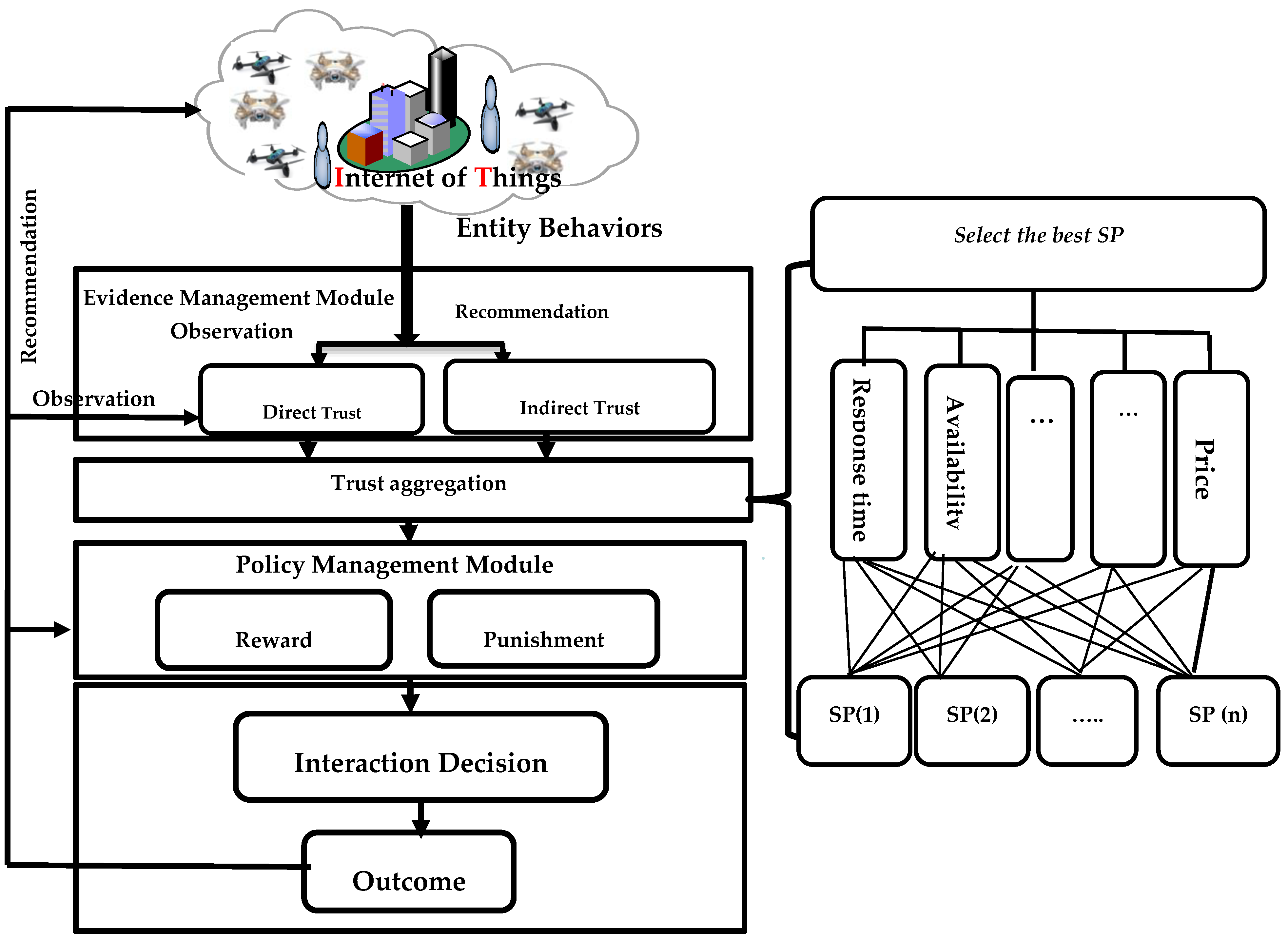

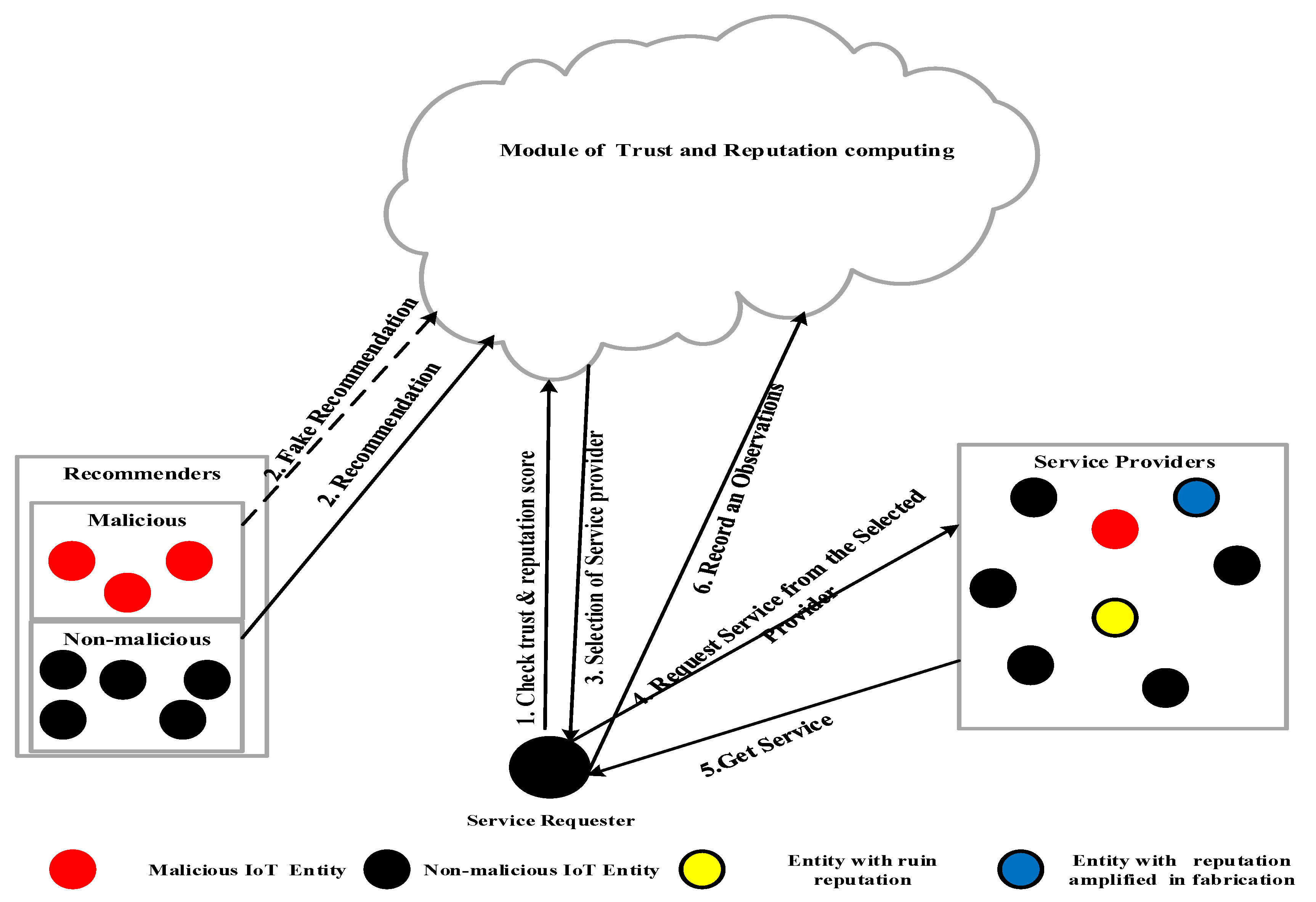

3. Formal Representation of Trust-Based IoT System

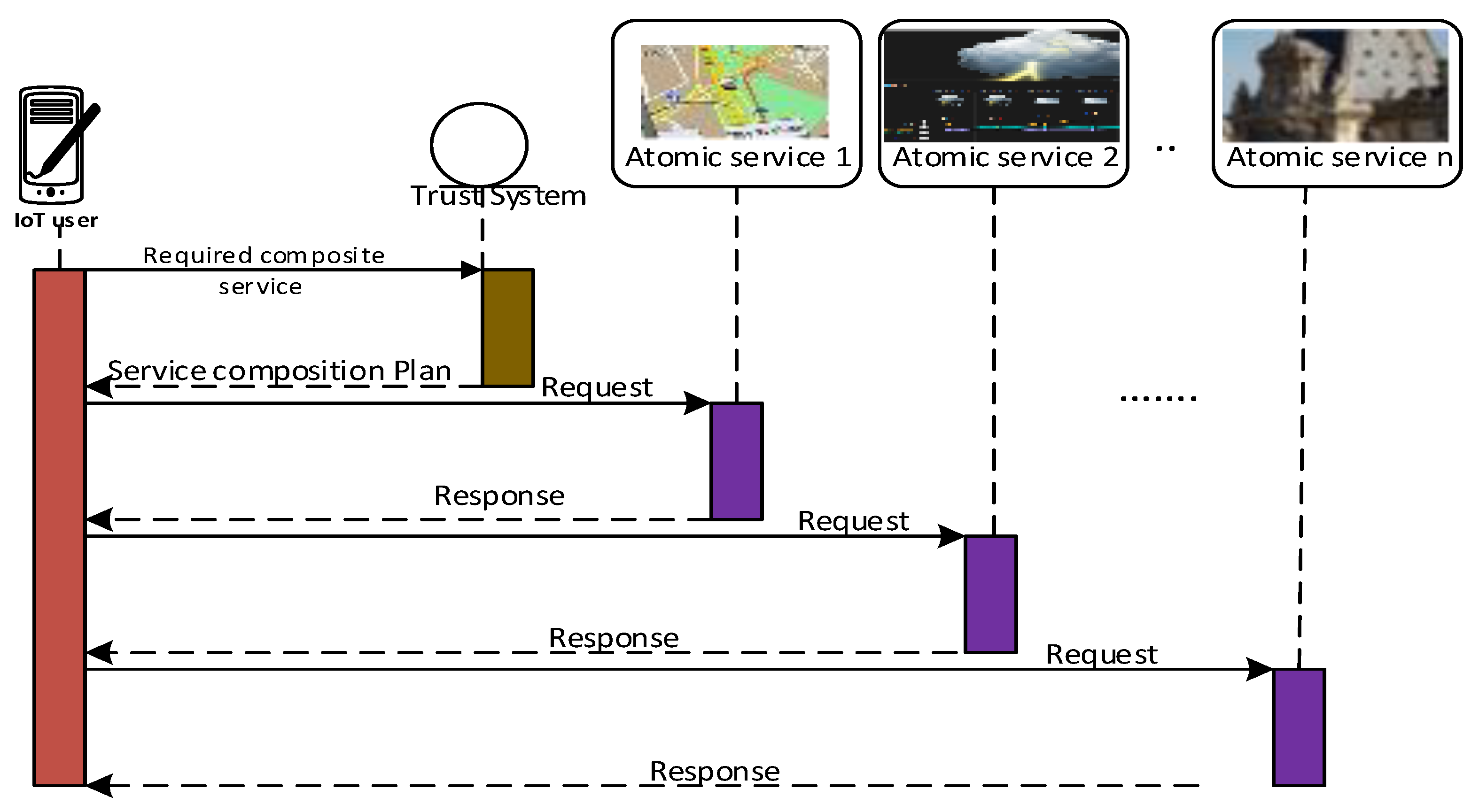

3.1. Transactions in SOA-IoT System

3.2. Formal Representation of Trust-Based Service Composition

4. Formal Representation of Trust System

4.1. Formal Representation of Trust Model

- Maintaining self-observation (direct trust);

- Providing recommendations (indirect trust) to other SRs;

- Computing the overall trust value.

4.1.1. Recommendation (Indirect Trust)

4.1.2. Self-Observation (Direct Trust)

4.2. Formal Representation of Entities’ Behavior Model

4.2.1. Formal Representation of Honest Model

4.2.2. Formal Representation of Attacker Models

- Atomic actions

- Attacker action

- The formal representation of rewards and punishments in the trust system

5. Execution Semantics

6. Performance Metrics of Trust System

6.1. Accuracy

6.2. Resiliency

6.3. Convergence

7. Service Composition: A Case Study

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Asghari, P.; Rahmani, A.M.; Javadi, H.H.S. Service composition approaches in IoT: A systematic review. J. Netw. Comput. Appl. 2018, 120, 61–77.

2. Wang, Y.; Chen, I.R.; Cho, J.H.; Tsai, J.J. A Comparative Analysis of Trust-based Service Composition Algorithms in Service-Oriented Ad Hoc Networks. In Proceedings of the 2017 International Conference on Information System and Data Mining, Charleston, SC, USA, 1–3 April 2017.

3. Cho, J.H.; Swami, A.; Chen, R. A survey on trust management for mobile ad hoc networks. IEEE Commun. Surv. Tutor. 2011, 13, 562–583.

4. Scott, J.A. Integrating Trust-Based Adaptive Security Framework with Risk Mitigation to Enhance SaaS User Identity and Access Control Based on User Behavior; Luleå University of Technology: Luleå, Sweden, 2022.

5. Meghanathan, N.; Boumerdassi, S.; Chaki, N.; Nagamalai, D. Recent Trends in Network Security and Applications. In Proceedings of the Third International Conference, CNSA 2010, Chennai, India, 23–25 July 2010; Springer: Berlin/Heidelberg, Germany, 2010; Volume 89.

6. Packer, H.S.; Drăgan, L.; Moreau, L. An Auditable Reputation Service for Collective Adaptive Systems. In Social Collective Intelligence; Springer: Berlin/Heidelberg, Germany, 2014; pp. 159–184.

7. Yaich, R.; Boissier, O.; Jaillon, P.; Picard, G. An adaptive and socially-compliant trust management system for virtual communities. In Proceedings of the 27th Annual ACM Symposium on Applied Computing, Trento, Italy, 26–30 March 2012; ACM: New York, NY, USA, 2012.

8. Ganeriwal, S.; Balzano, L.K.; Srivastava, M.B. Reputation-based framework for high integrity sensor networks. ACM Trans. Sens. Netw. 2008, 4, 15.

9. Han, G.; Jiang, J.; Shu, L.; Niu, J.; Chao, H.C. Management and applications of trust in Wireless Sensor Networks: A survey. J. Comput. Syst. Sci. 2014, 80, 602–617.

10. Tarable, A.; Nordio, A.; Leonardi, E.; Marsan, M.A. The importance of being earnest in crowdsourcing systems. In Proceedings of the 2015 IEEE Conference on Computer Communications (INFOCOM), Hong Kong, China, 26 April–1 May 2015; IEEE: Piscataway, NJ, USA, 2015.

11. Yu, Y.; Li, K.; Zhou, W.; Li, P. Trust mechanisms in wireless sensor networks: Attack analysis and countermeasures. J. Netw. Comput. Appl. 2012, 35, 867–880.

12. Bidgoly, A.J.; Ladani, B.T. Modeling and quantitative verification of trust systems against malicious attackers. Comput. J. 2016, 59, 1005–1027.

13. Drawel, N.; Bentahar, J.; Laarej, A.; Rjoub, G. Formal verification of group and propagated trust in multi-agent systems. Auton. Agents Multi-Agent Syst. 2022, 36, 1–31.

14. Hoffman, K.; Zage, D.; Nita-Rotaru, C. A survey of attack and defense techniques for reputation systems. ACM Comput. Surv. 2009, 42, 1–31.

15. Jøsang, A. Robustness of trust and reputation systems: Does it matter? In Proceedings of the IFIP International Conference on Trust Management, Surat, India, 21–25 May 2012; Springer: Berlin/Heidelberg, Germany, 2012.

16. Jøsang, A.; Golbeck, J. Challenges for robust trust and reputation systems. In Proceedings of the 5th International Workshop on Security and Trust Management (STM 2009), Saint Malo, France, 24–25 September 2009.

17. Kerr, R.; Cohen, R. Smart cheaters do prosper: Defeating trust and reputation systems. In Proceedings of the 8th International Conference on Autonomous Agents and Multiagent Systems-Volume 2, Budapest, Hungary, 10–15 May 2009; International Foundation for Autonomous Agents and Multiagent Systems: Pullman, WA, USA, 2009.

18. Mármol, F.G.; Pérez, G.M. Security threats scenarios in trust and reputation models for distributed systems. Comput. Secur. 2009, 28, 545–556.

19. Sun, Y.; Liu, Y. Security of online reputation systems: The evolution of attacks and defenses. IEEE Signal Process. Mag. 2012, 29, 87–97.

20. Zhang, L.; Jiang, S.; Zhang, J.; Ng, W.K. Robustness of trust models and combinations for handling unfair ratings. In Proceedings of the IFIP International Conference on Trust Management, Surat, India, 21–25 May 2012; Springer: Berlin/Heidelberg, Germany, 2012.

21. Souri, A.; Rahmani, A.M.; Navimipour, N.J.; Rezaei, R. A hybrid formal verification approach for QoS-aware multi-cloud service composition. Clust. Comput. 2020, 23, 2453–2470.

22. Ghannoudi, M.; Chainbi, W. Formal verification for web service composition: A model-checking approach. In Proceedings of the 2015 International Symposium on Networks, Computers and Communications (ISNCC), Yasmine Hammamet, Tunisia, 13–15 May 2015; IEEE: Piscataway, NJ, USA, 2015.

23. Kil, H.; Nam, W. Semantic Web service composition using formal verification techniques. In Proceedings of the Computer Applications for Database, Education, and Ubiquitous Computing, Gangneung, Korea, 16–19 December 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 72–79.

24. Chen, J.; Huang, L. Formal verification of service composition in pervasive computing environments. In Proceedings of the First Asia-Pacific Symposium on Internetware, Beijing, China, 17–18 October 2009; ACM: New York, NY, USA, 2009.

25. Van Benthem, J.; Doets, K. Higher-order logic. In Handbook of Philosophical Logic; Springer: Berlin/Heidelberg, Germany, 1983; pp. 275–329.

26. Andrews, P. Church’s Type Theory; Stanford University: Stanford, CA, USA, 2008.

27. Chen, R.; Guo, J.; Bao, F. Trust management for SOA-based IoT and its application to service composition. IEEE Trans. Serv. Comput. 2016, 9, 482–495.

28. Guo, J.; Chen, R.; Tsai, J.J. A survey of trust computation models for service management in internet of things systems. Comput. Commun. 2017, 97, 1–14.

29. Chen, R.; Bao, F.; Guo, J. Trust-based service management for social internet of things systems. IEEE Trans. Dependable Secur. Comput. 2016, 13, 684–696.

30. Ahmed, A.I.A.; Khan, S.; Gani, A.; Ab Hamid, S.H.; Guizani, M. Entropy-based fuzzy AHP model for trustworthy service provider selection in Internet of Things. In Proceedings of the 2018 IEEE 43rd Conference on Local Computer Networks (LCN), Chicago, IL, USA, 1–4 October 2018; IEEE: Piscataway, NJ, USA, 2018.

31. Chen, R.; Guo, J.; Wang, D.C.; Tsai, J.J.; Al-Hamadi, H.; You, I. Trust-based service management for mobile cloud IoT systems. IEEE Trans. Netw. Serv. Manag. 2019, 16, 246–263.

32. Yin, M.; Wortman Vaughan, J.; Wallach, H. Understanding the effect of accuracy on trust in machine learning models. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, Scotland, 4–9 May 2019; ACM: New York, NY, USA, 2019.

33. Khan, Z.A.; Ullrich, J.; Voyiatzis, A.G.; Herrmann, P. A trust-based resilient routing mechanism for the internet of things. In Proceedings of the 12th International Conference on Availability, Reliability and Security, Reggio Calabria, Italy, 29 August–1 September 2017; ACM: New York, NY, USA, 2017.

34. Rao, J.; Su, X. A survey of automated web service composition methods. In Proceedings of the International Workshop on Semantic Web Services and Web Process Composition, San Diego, CA, USA, 6 July 2004; Springer: Berlin/Heidelberg, Germany, 2004.

| Study | Trust-Based | Model/Logic | Application Domain |
|---|---|---|---|
| This study | Yes | Higher-order logic-based | IoT |
| A hybrid formal verification approach for QoS-aware multi-cloud service composition [21] | No | Multi-labeled transition system-based model checking and Pi-calculus-based process algebra | Cloud computing |
| Formal verification for web service composition: A model-checking approach [22] | No | Temporal logic and model-checking approach for verifying service composition | General |
| Semantic Web service composition using formal verification techniques [23] | No | Semantic matchmaking and formal verification techniques: Boolean satisfiability solving and symbolic model checking | General |
| Formal verification of service composition in pervasive computing environments [24] | No | Labeled transition system, obtained by transforming concurrent regular expressions into Finite State Process notation | General |

| Symbols | Meaning | Explanations |
|---|---|---|
|  | Exist |  |
|  | Conjunction |  |
|  | Disjunction |  |
|  | Implication | States the truth of X if either Y or Z is true. |
|  | Bi-conditional | Asserts X if and only if Y. |
|  | Negation | States that X does not yield X. |
|  | Equality |  |
|  | Lambda function |  |
|  | Replacement statement |  |
|  | Summation function in the range of 0 to k |  |

| Category | Symbol | Description |
|---|---|---|
| Trust model |  | Trust score (history) |
| Trust model |  | Update function |
| Trust model |  | Recommendation function |
| Honest model |  | Trust function |
| Honest model |  | Honest entity |
| Honest model |  | Behavioral function |
| Honest model |  | Decision function |
| Attackers/malicious model |  | Attacker |
| Attackers/malicious model |  | Atomic action |
| Attackers/malicious model |  | Intermediate cost |
| Attackers/malicious model |  | Intermediate gain |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ahmed, A.I.A.; Hamid, S.H.A.; Gani, A.; Abdelaziz, A.; Abaker, M. Formal Analysis of Trust and Reputation for Service Composition in IoT. Sensors 2023, 23, 3192. https://doi.org/10.3390/s23063192
