Large Displacement Detection Using Improved Lucas–Kanade Optical Flow

Abstract

1. Introduction

2. Methods

2.1. General Description

2.2. Camera Calibration and World Coordinate

2.3. Image Smoothing

2.4. Image Derivative

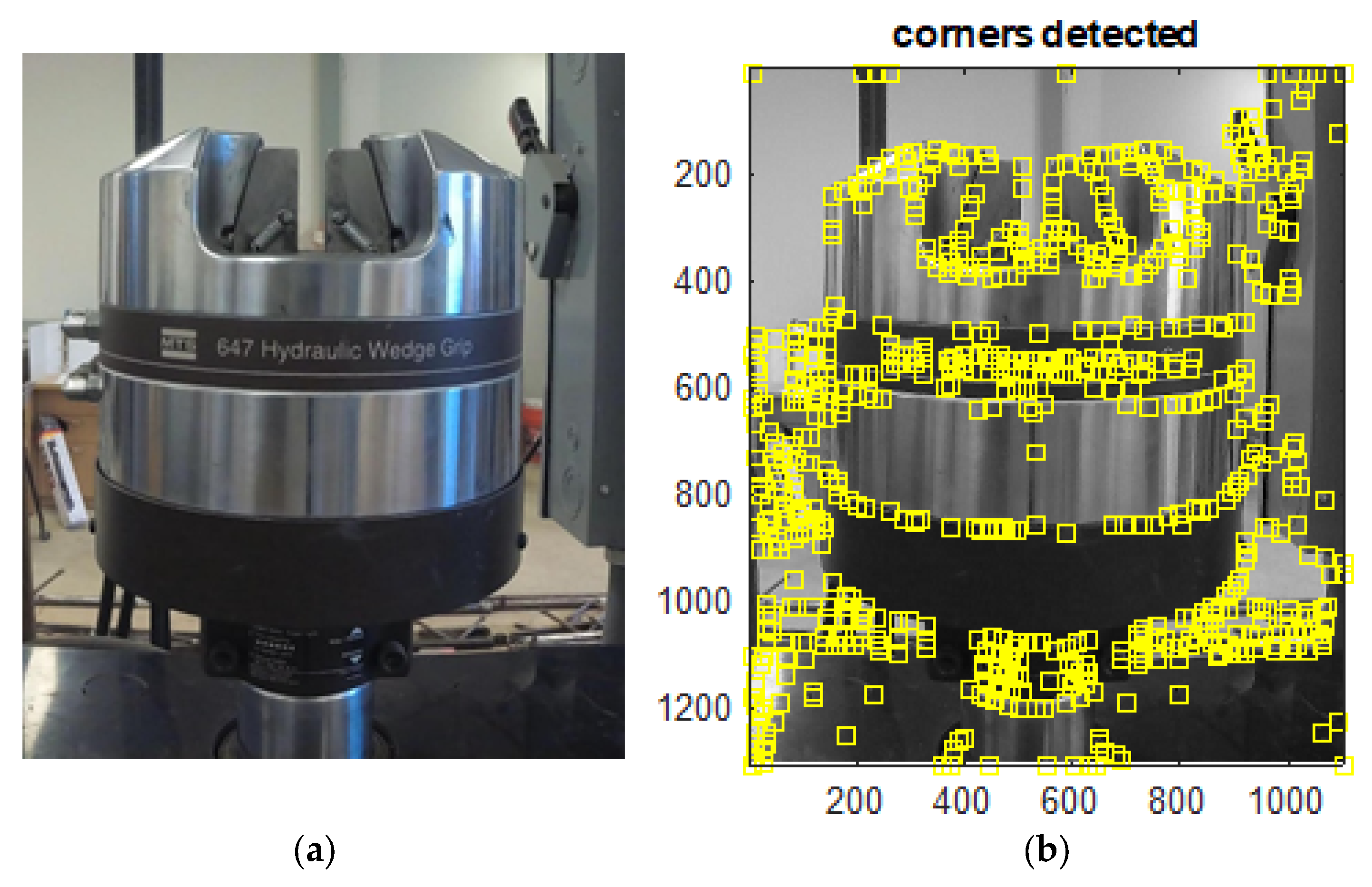

2.5. Feature Points Selection

2.6. Target Tracking

2.6.1. Optical Flow Method

2.6.2. Pyramid Optical Flow for Large Displacement Tracking

2.6.3. Warp Optical Flow for Large Displacement Tracking

2.7. Template Matching Method

3. Experiments and Results

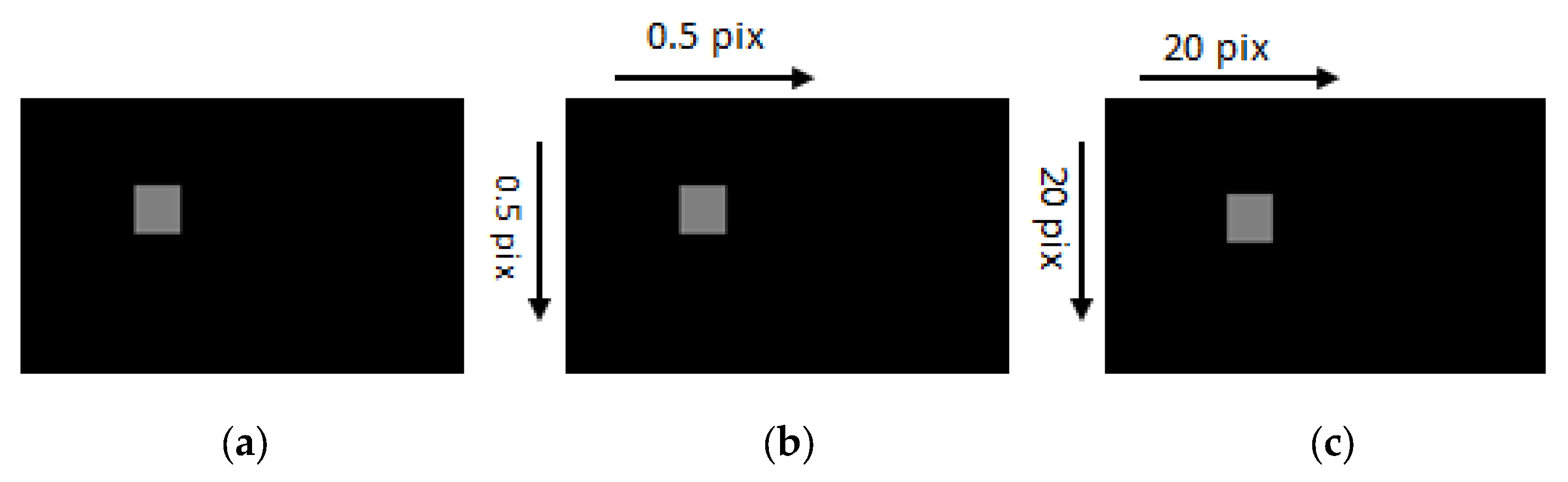

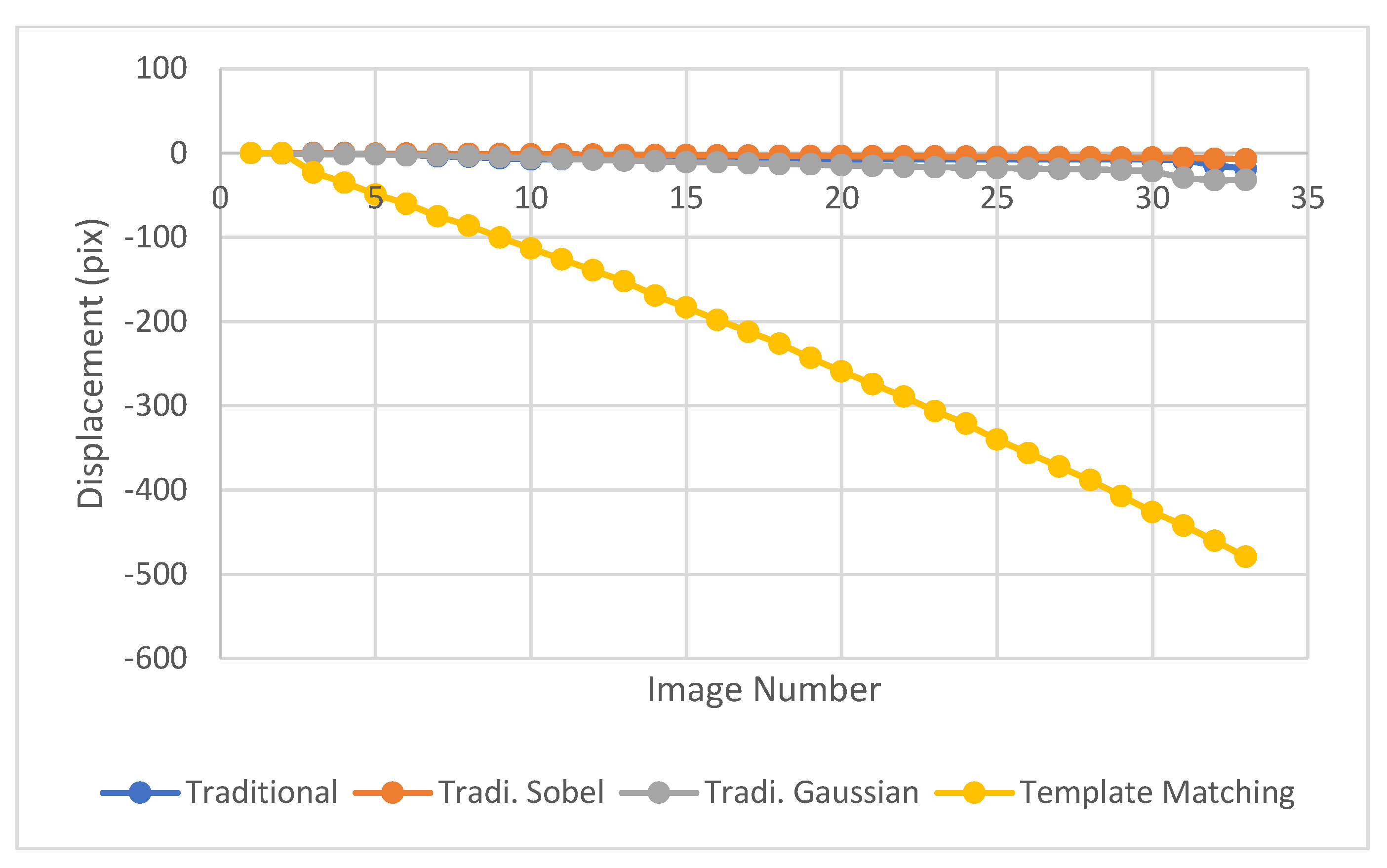

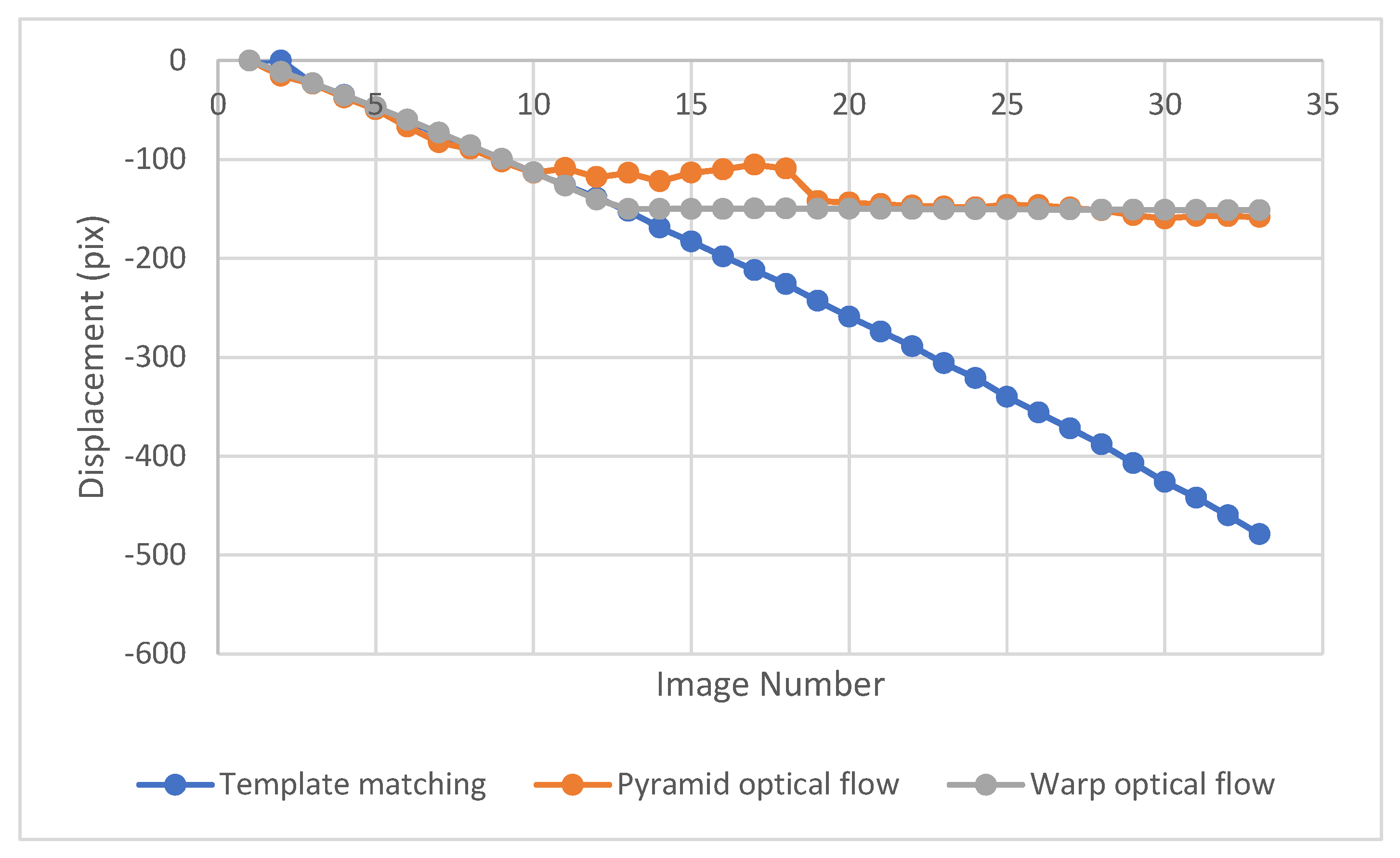

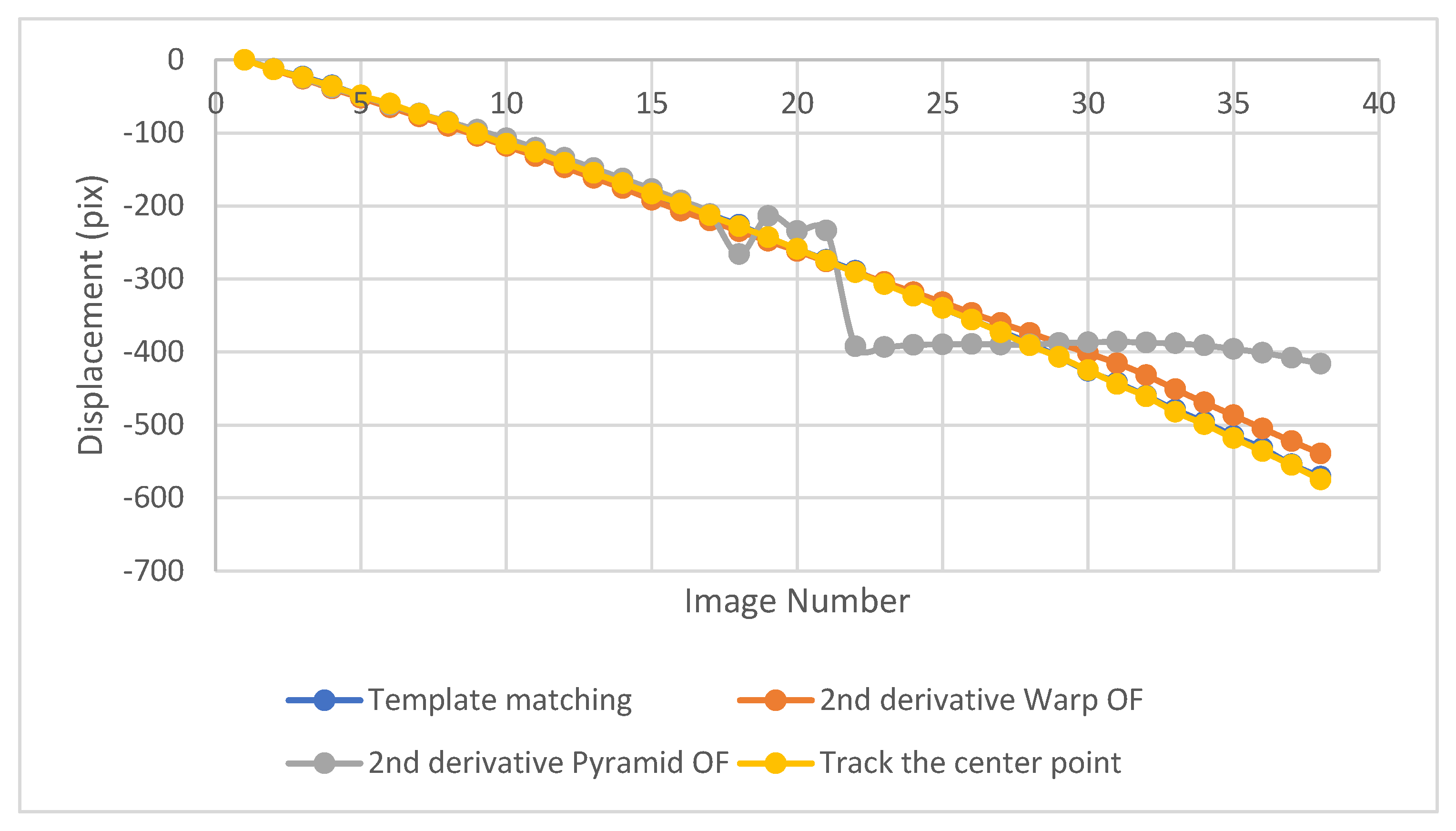

3.1. Method Verification

3.2. Experimental Setup

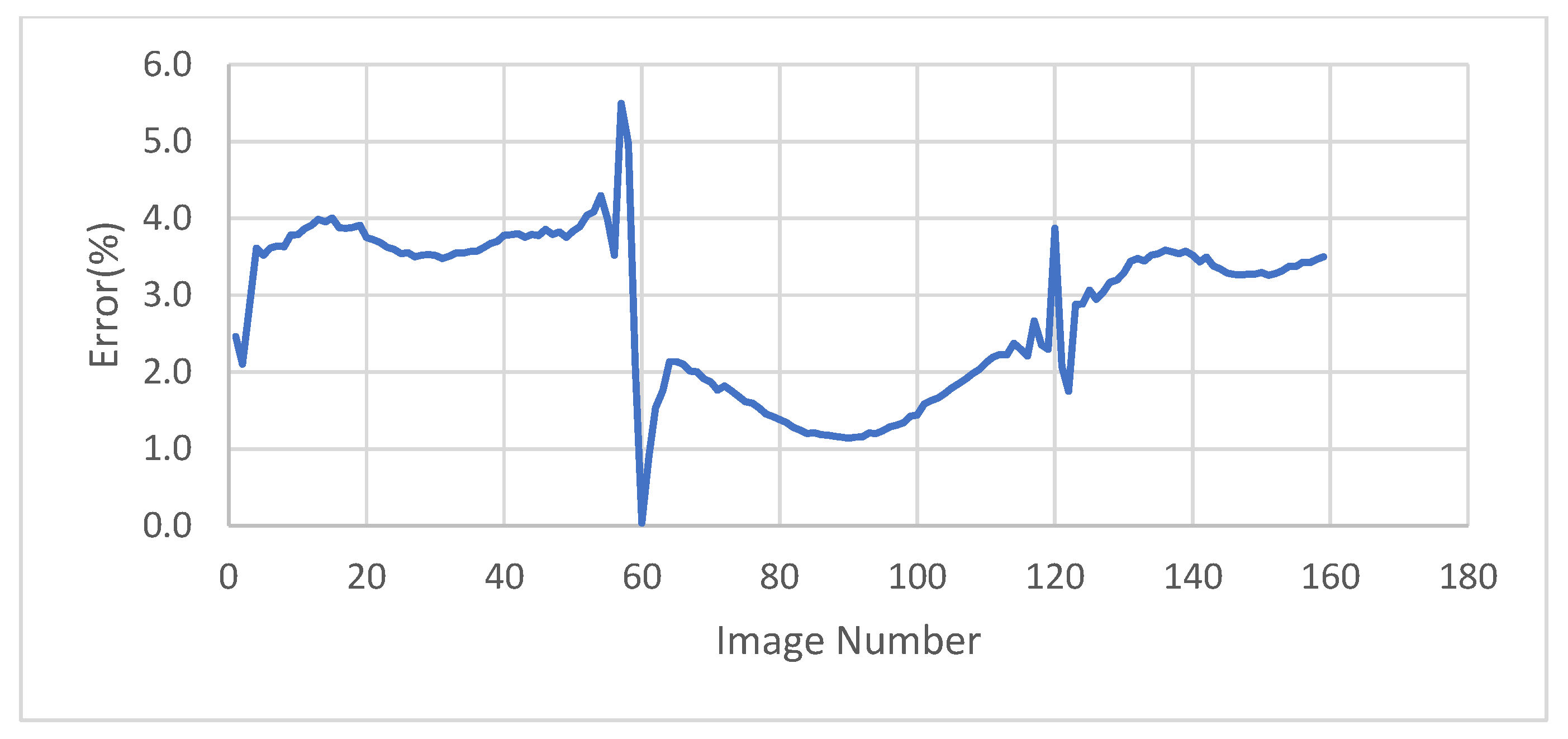

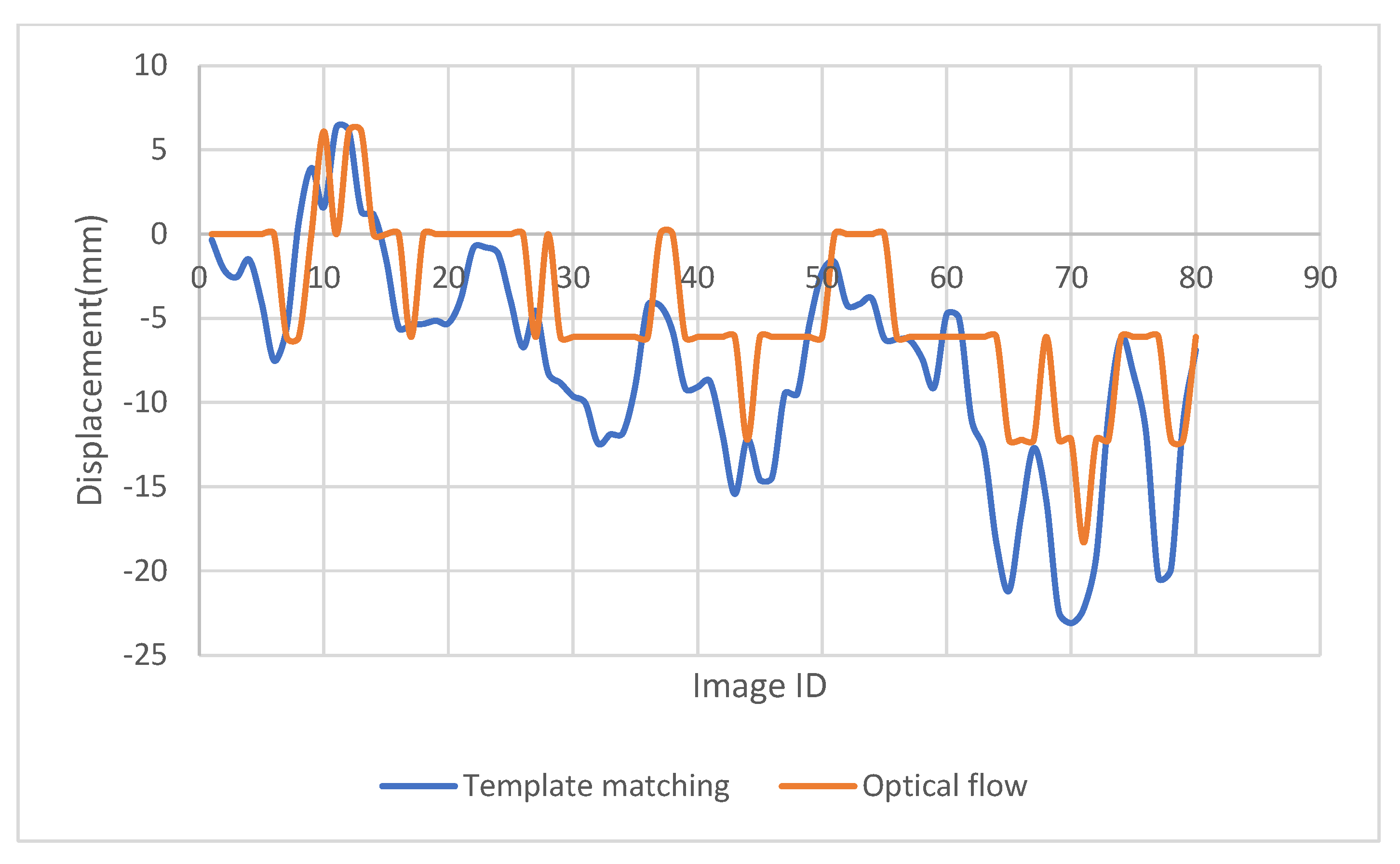

3.3. Measurement and Analysis Results

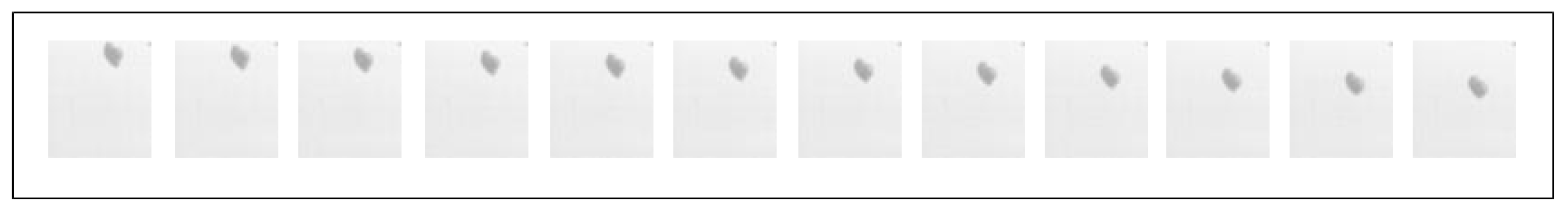

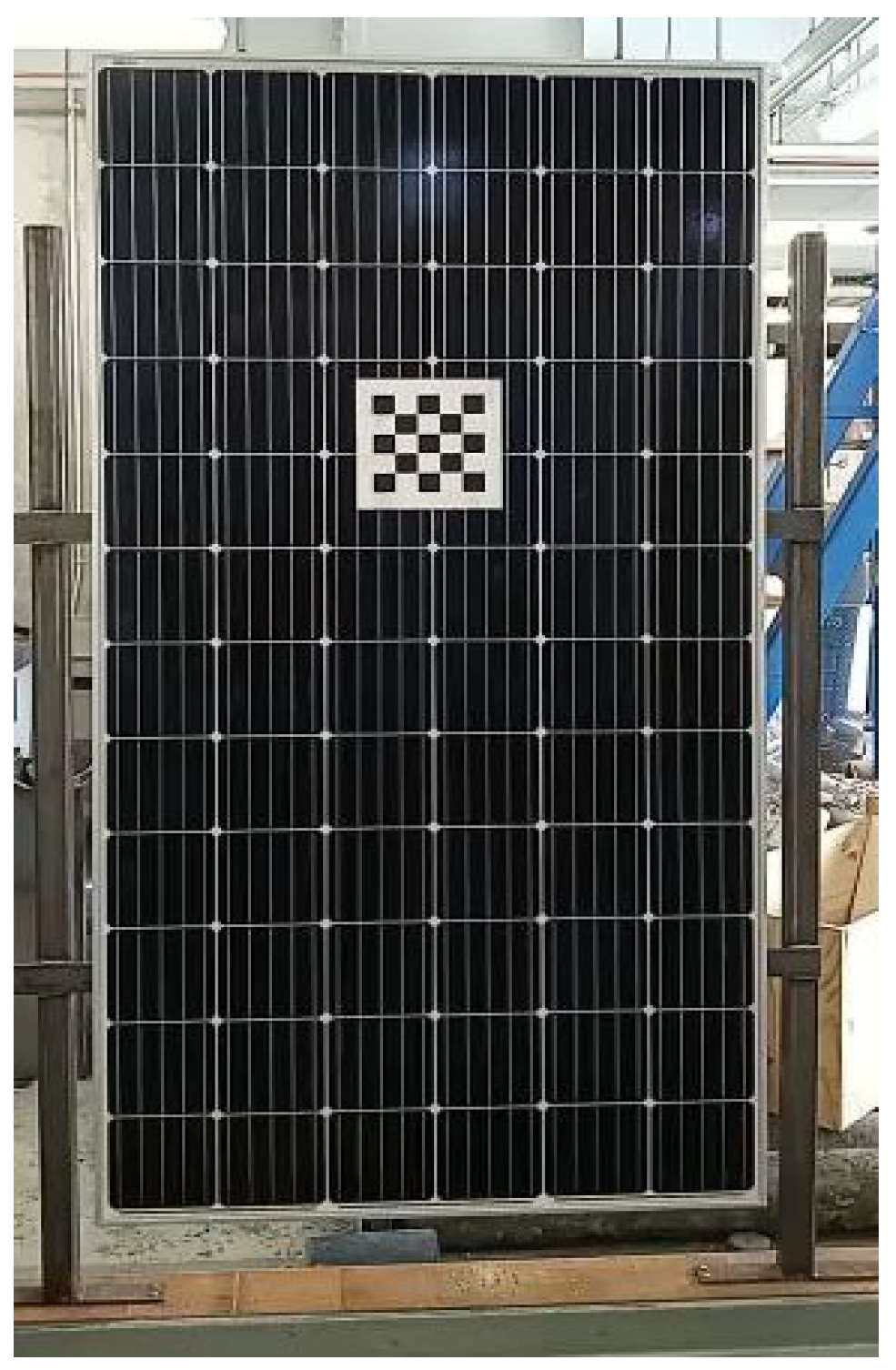

4. Application of the Method in Monitoring a Solar Frame

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Item | Specification |
|---|---|
| Main Processor | Ambarella A95E chipset, 32-MPixel image sensor pipeline, and an advanced encoder with 4K resolution |
| Image Sensor | Sony IMX377, 1/2.3″, 12-megapixel CMOS image sensor |
| LCD Screen | 2.19″, 640 × 360 resolution, 330 ppi, 250 cd/m² brightness, 30 FPS, 160° FOV, 16:9 |
| Lens | F2.8 aperture, 155° wide-angle lens, 7G, f = 2.66 ± 5% mm |
| Lens distortion correction | 100% of the wide-angle lens distortion correction |

| Test | True displacement, x | True displacement, y | Traditional OF, x | Traditional OF, y | Pyramid OF, x | Pyramid OF, y | Warp OF, x | Warp OF, y |
|---|---|---|---|---|---|---|---|---|
| First test | 0.5 pix | 0.5 pix | 0.5 pix | 0.51 pix | --- | --- | --- | --- |
| Second test | 20 pix | 20 pix | 2.6 pix | 2.6 pix | 22 pix | 24 pix | 20 pix | 20 pix |
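
To make the comparison in the verification table above easier to read, the measured displacements can be converted to relative errors against the true displacement. The following is a minimal sketch, not part of the original article: the names `TRUE`, `MEASURED`, `relative_error_percent`, and `summarize` are hypothetical, and the numbers are copied from the table as given.

```python
# Values copied from the method-verification table (all in pixels).
# The dictionary layout and function names are illustrative assumptions.
TRUE = {"First test": (0.5, 0.5), "Second test": (20.0, 20.0)}
MEASURED = {
    "First test": {"Traditional OF": (0.5, 0.51)},
    "Second test": {
        "Traditional OF": (2.6, 2.6),
        "Pyramid OF": (22.0, 24.0),
        "Warp OF": (20.0, 20.0),
    },
}


def relative_error_percent(measured, true):
    """Absolute relative error of one measurement, in percent."""
    return abs(measured - true) / true * 100.0


def summarize():
    """Return {(test, method): (x_error_percent, y_error_percent)}."""
    rows = {}
    for test, methods in MEASURED.items():
        true_x, true_y = TRUE[test]
        for method, (meas_x, meas_y) in methods.items():
            rows[(test, method)] = (
                round(relative_error_percent(meas_x, true_x), 1),
                round(relative_error_percent(meas_y, true_y), 1),
            )
    return rows


if __name__ == "__main__":
    for (test, method), (err_x, err_y) in summarize().items():
        print(f"{test:12s} {method:15s} x: {err_x:5.1f}%  y: {err_y:5.1f}%")
```

Under these numbers, the traditional method is off by 87% in both directions for the 20-pixel displacement, the pyramid method by 10% (x) and 20% (y), and the warp method reproduces the true value.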

| Item | Specification |
|---|---|
| Sensor | 12-bit CMOS sensor with 5 µm square pixels |
| Max resolution | 1920 × 1080 |
| Light sensitivity | 1600 to 12,800 ISO monochrome, 800 to 6400 ISO color |
| Shutter | 3 µs to 41.654 ms |

| POI | x-Inter. 1 | Min pt. 1 | x-Inter. 2 | Max. pt. | x-Inter. 2 | Min pt. 2 |
|---|---|---|---|---|---|---|
| Accuracy | 97.5 | 96.5 | 95.0 | 98.8 | 96.1 | 96.7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Al-Qudah, S.; Yang, M. Large Displacement Detection Using Improved Lucas–Kanade Optical Flow. Sensors 2023, 23, 3152. https://doi.org/10.3390/s23063152
