General-Purpose Deep Learning Detection and Segmentation Models for Images from a Lidar-Based Camera Sensor

Abstract

1. Introduction

2. Related Work

2.1. Lidar-Based Perception

2.2. Deep Learning-Based Object Detection

2.3. Deep Learning-Based Instance and Semantic Segmentation

3. Methodology

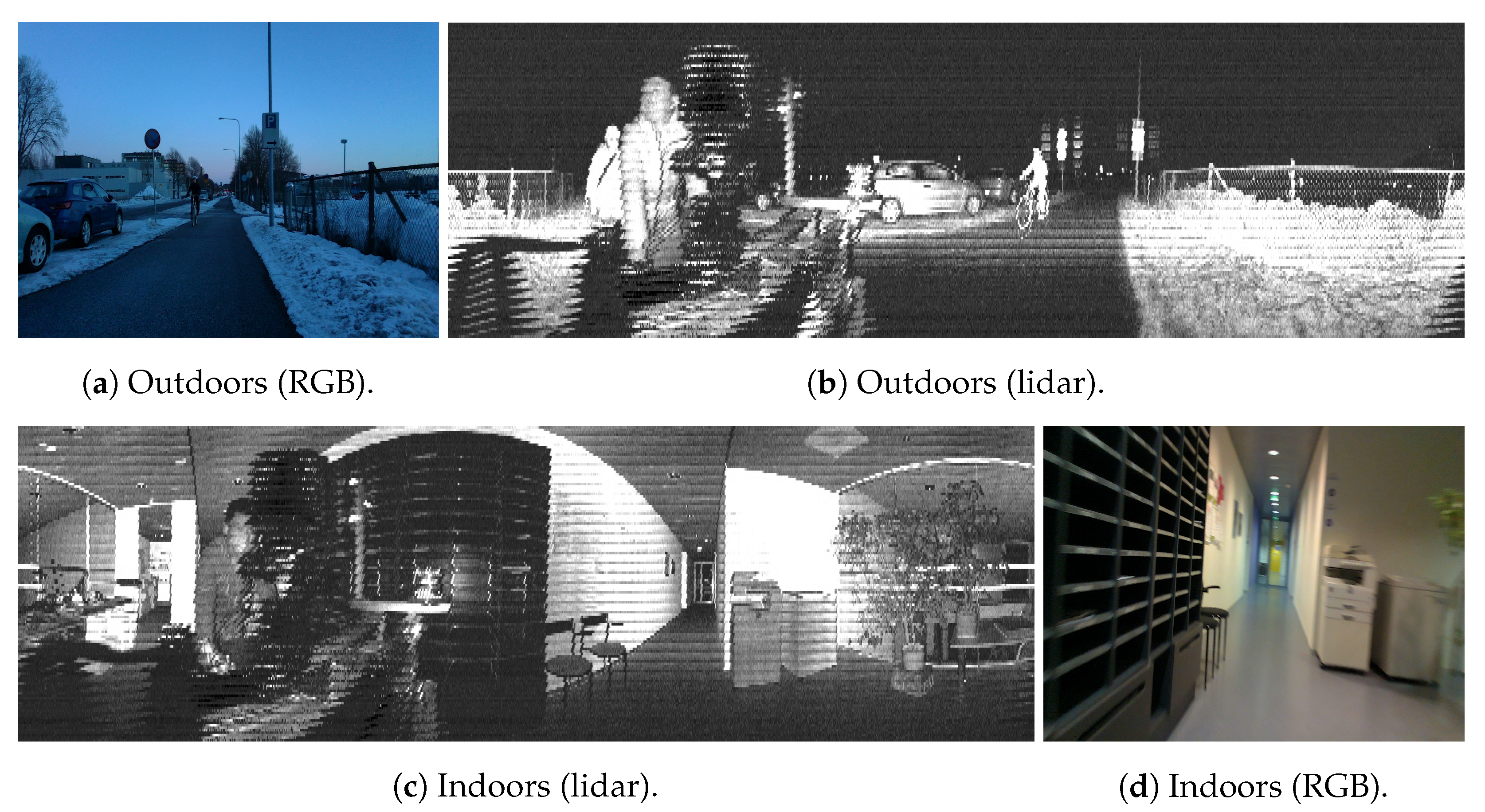

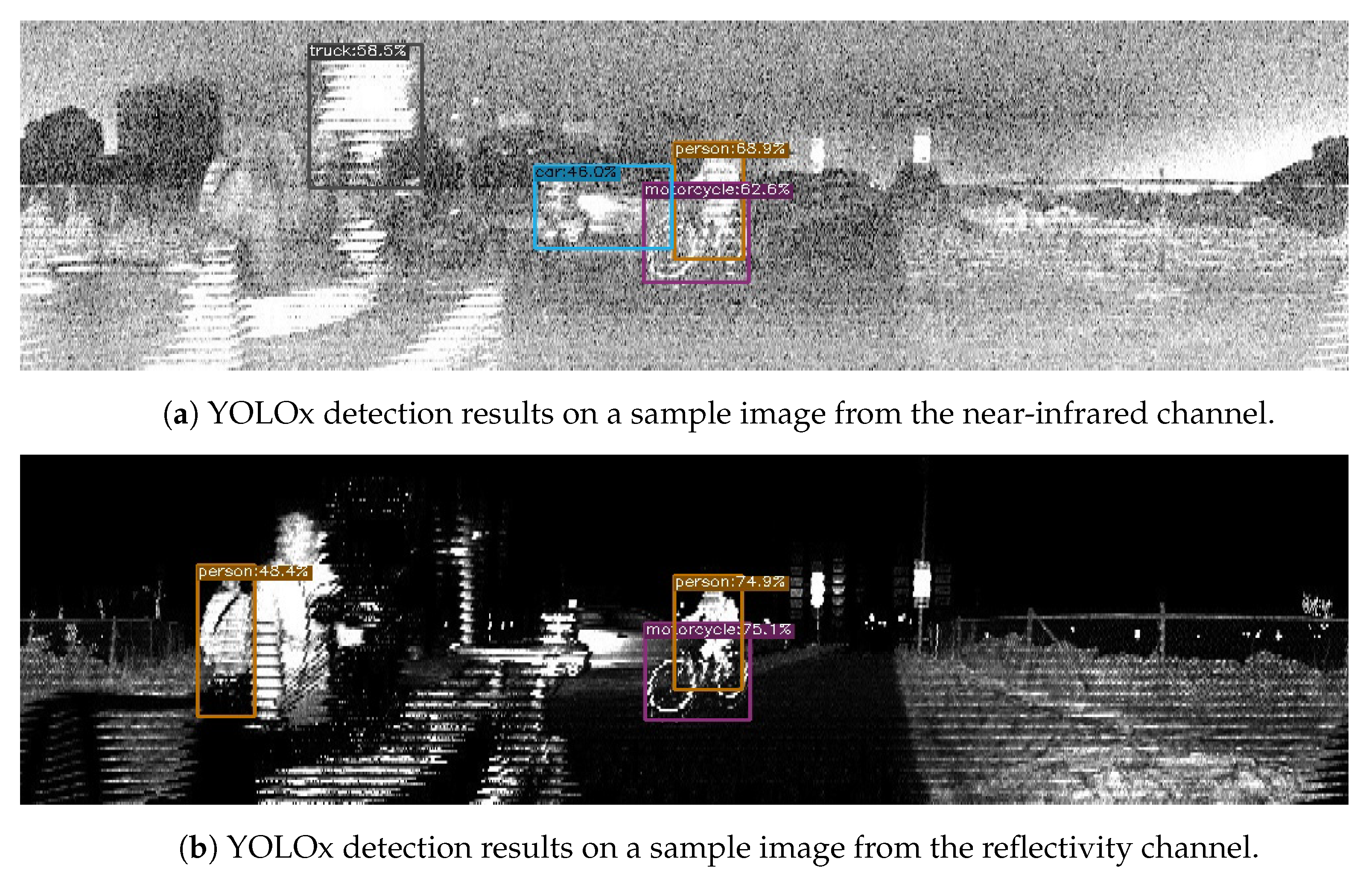

3.1. Hardware

3.2. Data Acquisition

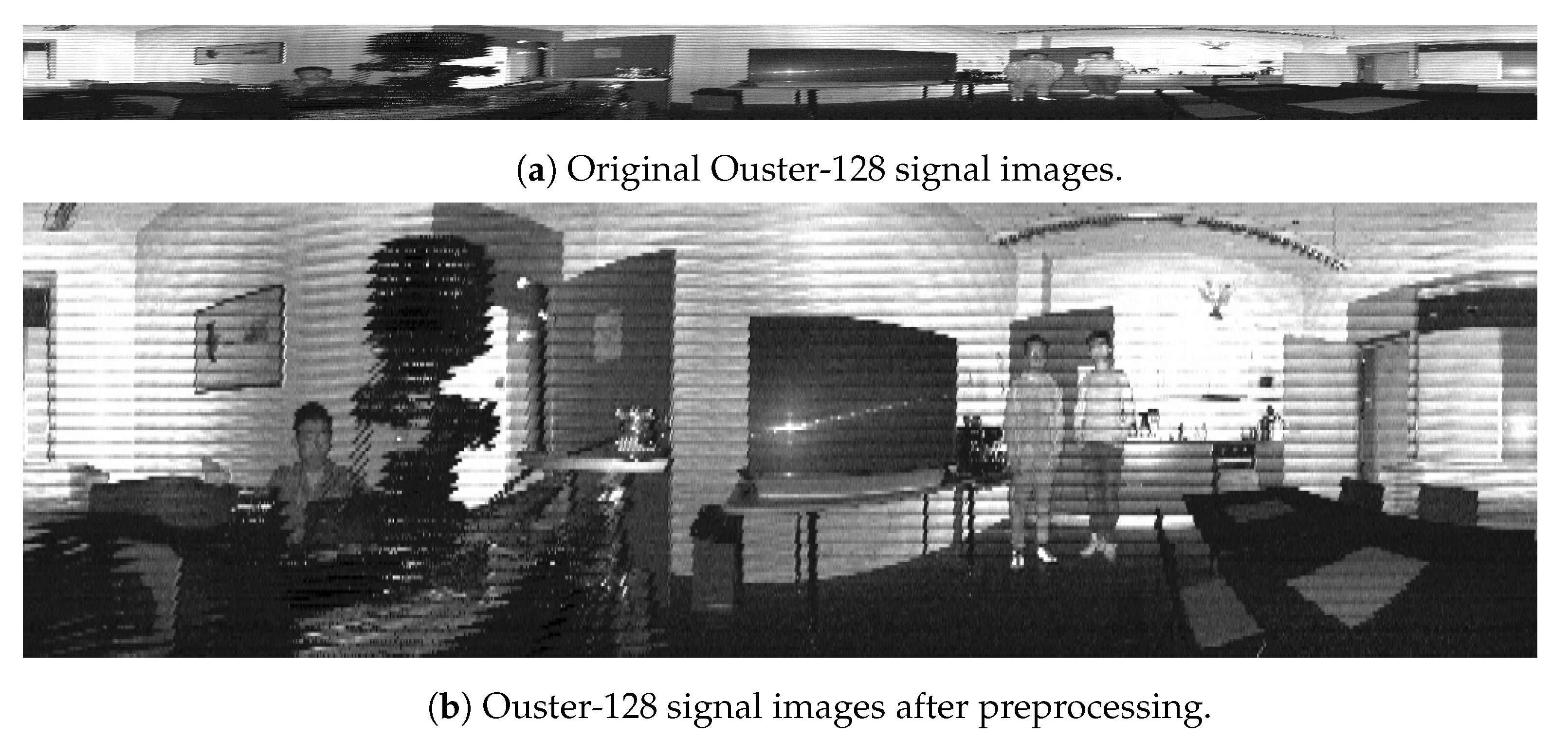

3.3. Data Preprocessing

3.4. Object Detection Approaches

3.4.1. Two-Stage Object Detection

3.4.2. One-Stage Object Detection

3.5. Image Segmentation Approaches

4. Experimental Results

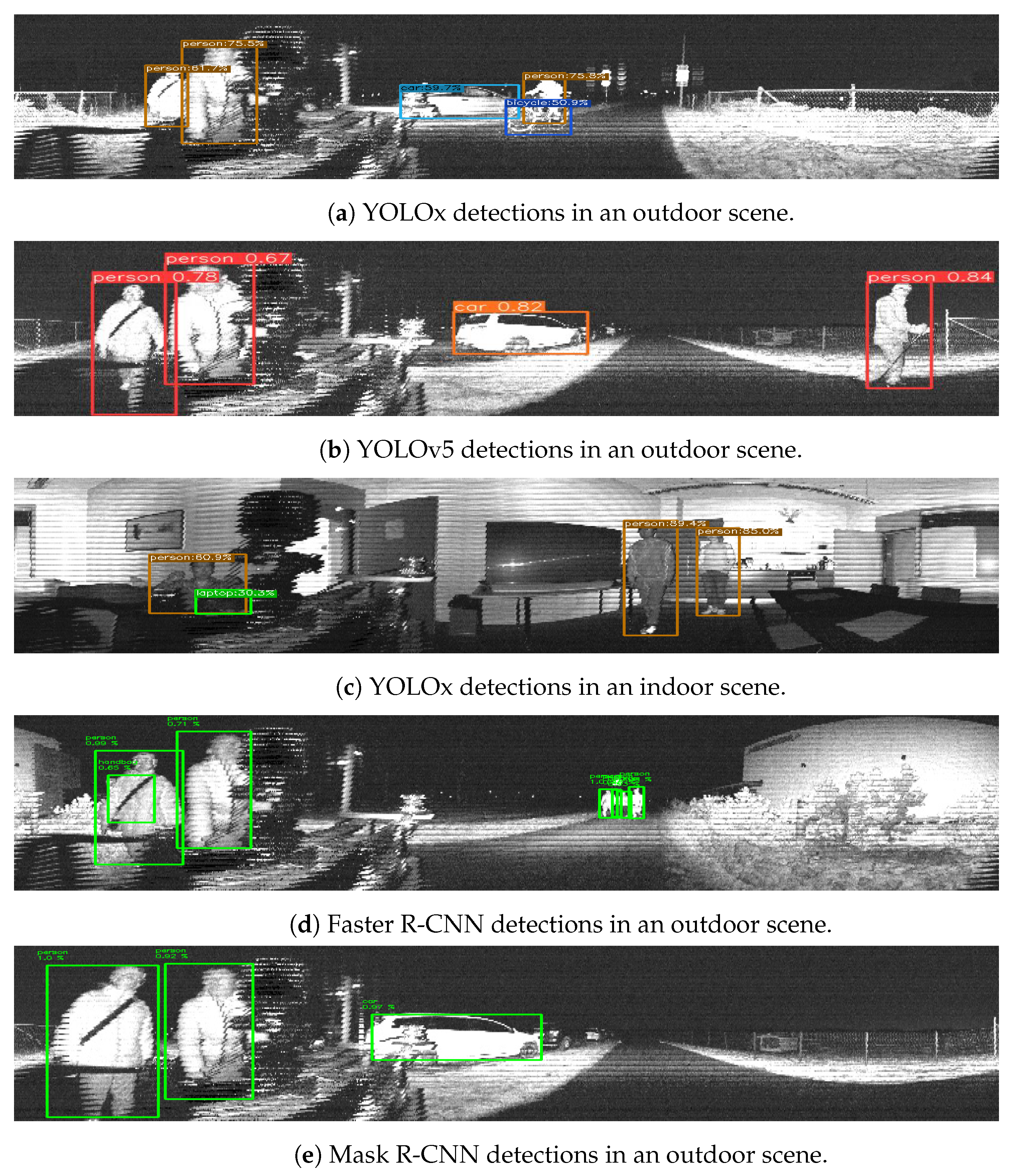

4.1. Detection Results

4.2. Segmentation Results

4.3. Real-Time Performance Evaluation

5. Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Sensor | Channels | FoV | Range | Frequency | Image Resolution |
|---|---|---|---|---|---|
| Ouster OS1-64 | 64 | 360° × 45° | 120 m | 10 Hz | 2048 × 64 |
| Ouster OS0-128 | 128 | 360° × 90° | 50 m | 10 Hz | 2048 × 128 |
| RealSense L515 | N/A | 70° × 55° | 9 m | 30 Hz | 1920 × 1080 |
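The sensor specifications above imply a per-pixel angular resolution for the lidar images. The following is a minimal sketch of that arithmetic, not a calculation taken from the paper. It assumes one image row per lidar channel, uniformly spaced beams, and 2048 columns per 360° sweep. The `LidarSpec` class and its field names are hypothetical and used only for illustration.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class LidarSpec:
    """Hypothetical container for the spinning-lidar rows of the table."""
    name: str
    channels: int      # number of vertical beams (assumed: one image row each)
    h_fov_deg: float   # horizontal field of view in degrees
    v_fov_deg: float   # vertical field of view in degrees
    columns: int       # horizontal image width in pixels

    def deg_per_pixel(self) -> Tuple[float, float]:
        """Return (horizontal, vertical) degrees covered by one pixel,
        assuming uniform angular spacing in both directions."""
        return self.h_fov_deg / self.columns, self.v_fov_deg / self.channels


SENSORS = [
    LidarSpec("Ouster OS1-64", 64, 360.0, 45.0, 2048),
    LidarSpec("Ouster OS0-128", 128, 360.0, 90.0, 2048),
]

for sensor in SENSORS:
    h_res, v_res = sensor.deg_per_pixel()
    print(f"{sensor.name}: {h_res:.3f} deg/px horizontal, "
          f"{v_res:.3f} deg/px vertical")
```

Under these assumptions both Ouster sensors have the same vertical angular spacing, because the OS0-128 doubles both the channel count and the vertical field of view. Real beam spacing may not be uniform, so the figures are approximate.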

| | Indoors: Person | Indoors: Chair | Outdoors: Person | Outdoors: Car | Outdoors: Bike |
|---|---|---|---|---|---|
| Instances | 43 | 42 | 103 | 37 | 14 |

| Environment | Class | Faster R-CNN | Mask R-CNN | YOLOv5 | YOLOx |
|---|---|---|---|---|---|
| Indoors | Person | 0.837 | 0.837 | 0.924 | 0.953 |
| Indoors | Chair | 0.357 | 0.333 | 0.398 | 0.515 |
| Outdoors | Person | 0.524 | 0.485 | 0.630 | 0.633 |
| Outdoors | Car | 0.865 | 0.811 | 0.893 | 0.866 |
| Outdoors | Bike | 0.357 | 0.643 | 0.143 | 0.571 |

| Environment | Class | Faster R-CNN Precision | Faster R-CNN Recall | Mask R-CNN Precision | Mask R-CNN Recall | YOLOv5 Precision | YOLOv5 Recall | YOLOx Precision | YOLOx Recall |
|---|---|---|---|---|---|---|---|---|---|
| Indoors | Person | 0.72 | 0.837 | 0.95 | 0.905 | 0.976 | 0.930 | 1.0 | 0.953 |
| Indoors | Chair | 1.0 | 0.115 | 0.57 | 0.826 | 1.0 | 0.115 | 1.0 | 0.315 |
| Outdoors | Person | 0.912 | 0.505 | 0.957 | 0.464 | 0.872 | 0.854 | 0.969 | 0.653 |
| Outdoors | Car | 0.943 | 0.688 | 0.712 | 0.627 | 0.919 | 0.829 | 0.825 | 0.618 |
| Outdoors | Bike | 0.357 | 1.00 | 0.643 | 1.00 | 0.143 | 1.00 | 0.571 | 1.00 |
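Precision and recall pairs such as those in the table above are often summarized with the F1 score, the harmonic mean of the two. The source does not report F1, so the sketch below is an illustrative derived metric rather than a result from the paper. The `f1_score` helper and the `INDOOR_PERSON` dictionary are hypothetical names, and the values are copied from the indoor "Person" row.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) for the indoor "Person" class, copied from the table.
INDOOR_PERSON = {
    "Faster R-CNN": (0.72, 0.837),
    "Mask R-CNN": (0.95, 0.905),
    "YOLOv5": (0.976, 0.930),
    "YOLOx": (1.0, 0.953),
}

# Derived F1 per model (not a figure reported in the source).
f1_by_model = {name: f1_score(p, r) for name, (p, r) in INDOOR_PERSON.items()}

for name, f1 in sorted(f1_by_model.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: F1 = {f1:.3f}")
```

A single combined number makes it easier to rank models whose precision and recall diverge, such as the "Chair" rows where perfect precision coincides with very low recall.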

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yu, X.; Salimpour, S.; Queralta, J.P.; Westerlund, T. General-Purpose Deep Learning Detection and Segmentation Models for Images from a Lidar-Based Camera Sensor. Sensors 2023, 23, 2936. https://doi.org/10.3390/s23062936
