Pedestrian Crossing Intention Forecasting at Unsignalized Intersections Using Naturalistic Trajectories

Abstract

1. Introduction

- Use of a random forest (RF) for detailed pedestrian crossing intention forecasting from naturalistic trajectories captured by a drone;

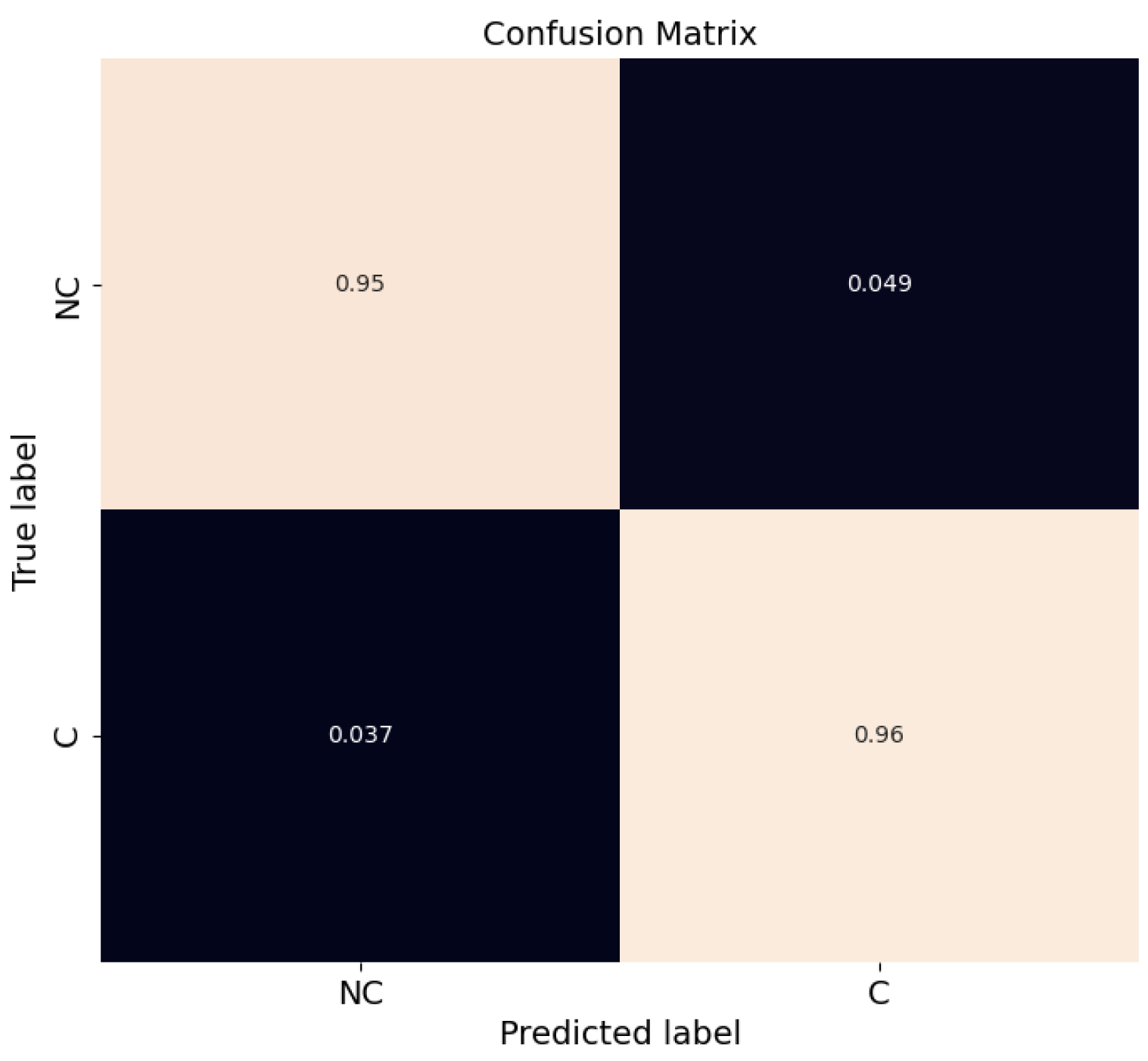

- An algorithm that automatically adds pedestrian crossing intention labels to an existing dataset (see Section 4.1);

- Extensive validation and evaluation on pedestrian trajectories extracted from real data, showing that the proposed model is applicable at different crossing locations rather than only within a predefined region of interest (ROI), such as a crosswalk. Scenarios in which the crossing intention is not clear (e.g., a pedestrian slowing down before crossing) are also investigated;

- A comparison between the RF and a feed-forward neural network (NN) for pedestrian intention forecasting, showing that the proposed RF approach outperforms the NN.
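The labeling algorithm mentioned in the second contribution is described in Section 4.1. Purely as an illustration, and not as the authors' algorithm, the sketch below shows one simple way crossing intention labels could be derived from trajectories. It assumes that each track is a list of (x, y) positions sampled at a fixed frame rate, that the road surface is available as a polygon, and that a frame is labeled C when the pedestrian is on the road at that frame or within the next `horizon` frames, and NC otherwise. The functions `point_in_polygon` and `label_crossing_intent` and the `horizon` parameter are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def point_in_polygon(p: Point, polygon: Sequence[Point]) -> bool:
    """Ray-casting test: True if point p lies inside the polygon."""
    x, y = p
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Toggle when a horizontal ray from p to the right crosses this edge.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def label_crossing_intent(track: Sequence[Point], road: Sequence[Point],
                          horizon: int) -> List[str]:
    """Hypothetical rule: label frame t 'C' if the pedestrian is on the road
    at any frame t..t+horizon, otherwise 'NC'."""
    on_road = [point_in_polygon(p, road) for p in track]
    labels = []
    for t in range(len(track)):
        window = on_road[t:t + horizon + 1]  # current frame plus look-ahead
        labels.append("C" if any(window) else "NC")
    return labels


if __name__ == "__main__":
    road = [(0.0, 0.0), (10.0, 0.0), (10.0, 4.0), (0.0, 4.0)]  # toy road strip
    track = [(5.0, 8.0), (5.0, 7.0), (5.0, 6.0), (5.0, 5.0), (5.0, 3.0), (5.0, 2.0)]
    print(label_crossing_intent(track, road, horizon=2))
    # ['NC', 'NC', 'C', 'C', 'C', 'C']
```

A look-ahead rule of this kind labels the frames shortly before a pedestrian steps onto the road as C, which is what makes the labels usable as a forecasting target; the horizon length controls how early that target starts.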

2. Related Work

2.1. On-Board Sensors

2.2. Infrastructure Sensors

3. Methodology

3.1. Random Forest

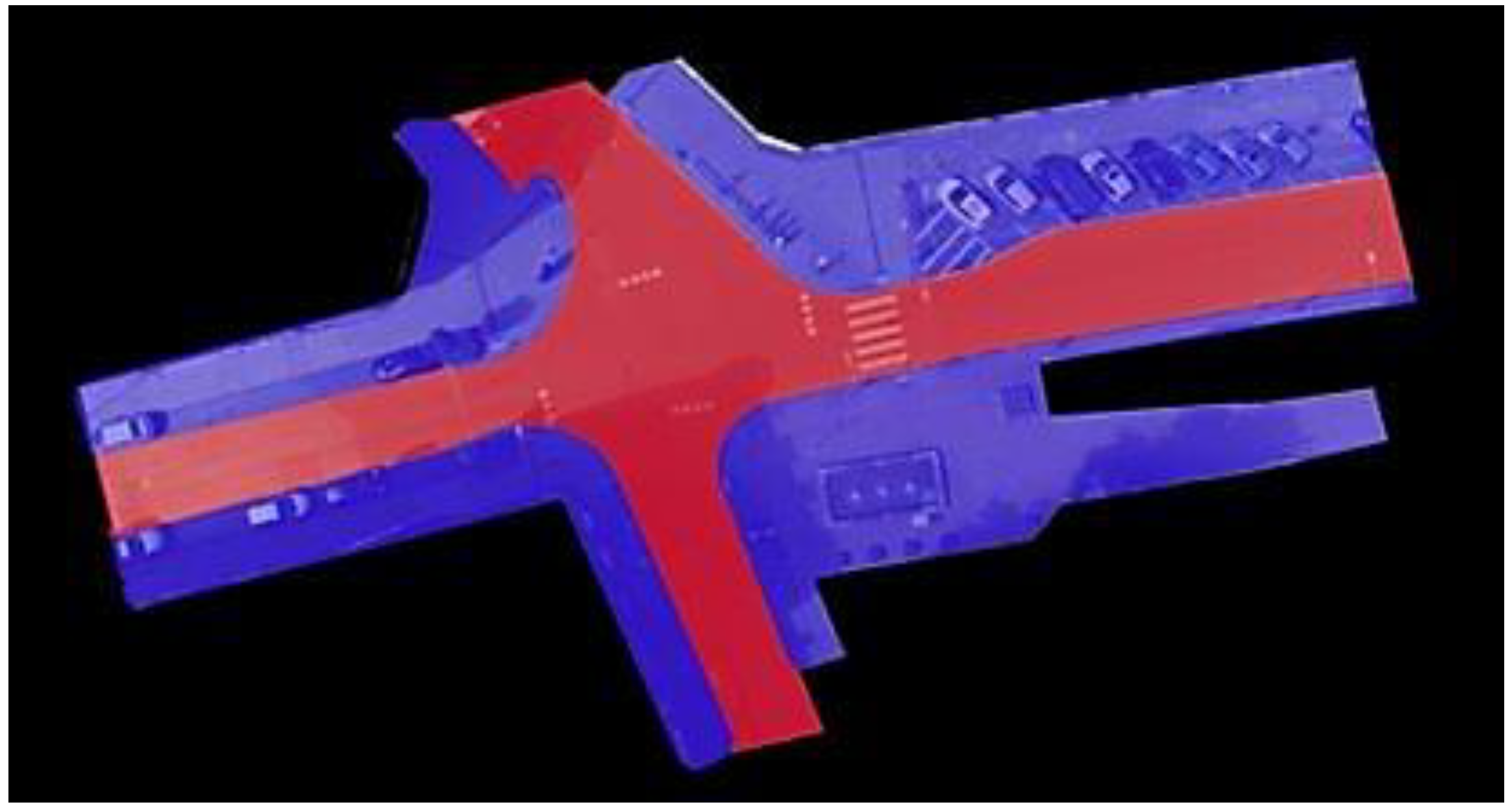

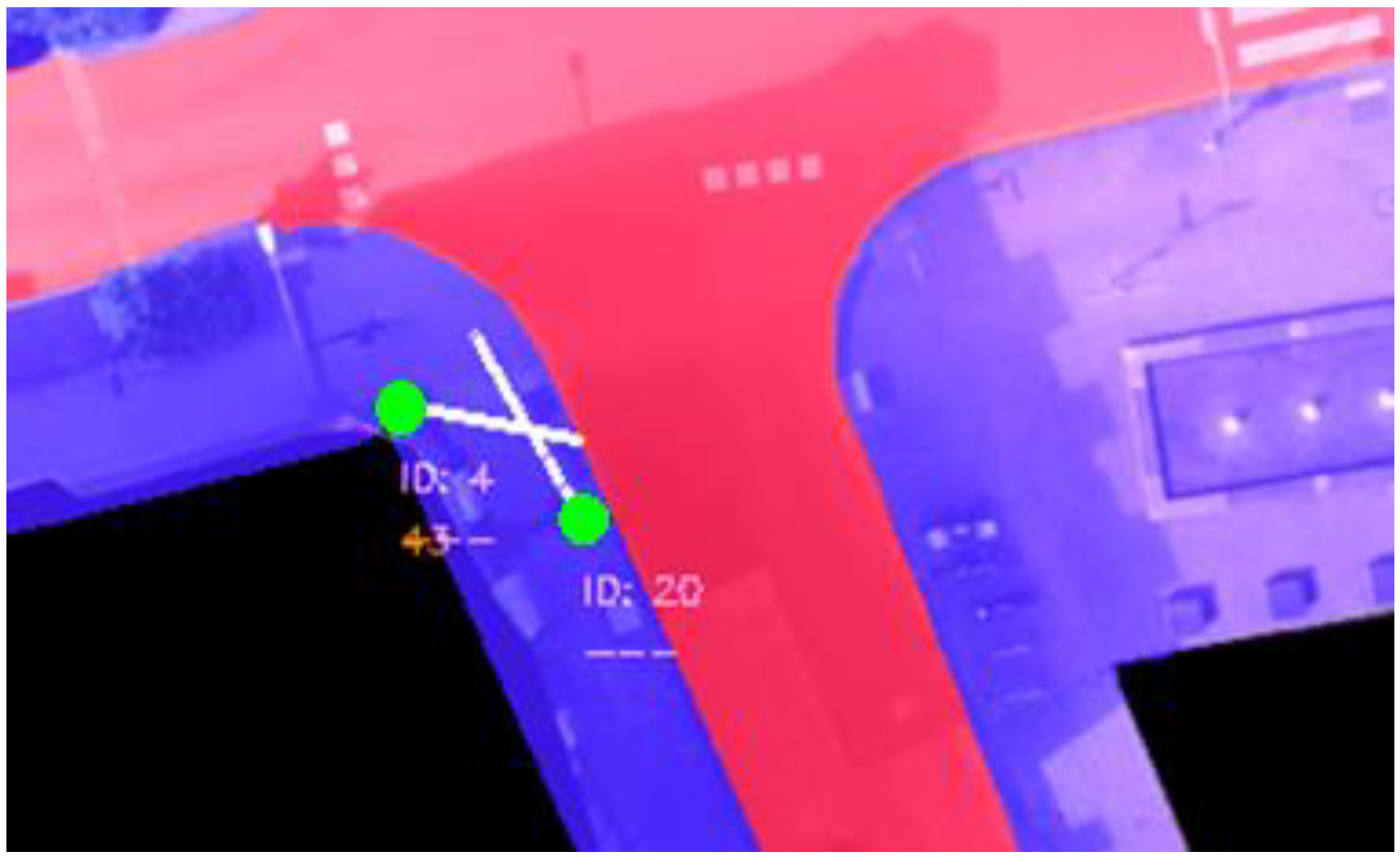
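Section 3.1 concerns the random forest classifier listed among the contributions. The snippet below is a minimal sketch, not the authors' pipeline: it trains scikit-learn's `RandomForestClassifier` on synthetic per-frame features. The three features (distance to the road edge, velocity component toward the road, walking speed), the rule that generates the synthetic C/NC ground truth, and all numeric settings are assumptions chosen only for illustration.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
n = 1000

# Hypothetical per-frame features that could be derived from a trajectory.
dist_to_road = rng.uniform(0.0, 10.0, n)     # meters to the road edge
speed_to_road = rng.uniform(-1.5, 1.5, n)    # m/s, positive = approaching the road
speed = np.abs(rng.normal(1.3, 0.3, n))      # walking speed in m/s (uninformative here)
X = np.column_stack([dist_to_road, speed_to_road, speed])

# Synthetic ground truth: 1 = crossing (C), 0 = not crossing (NC).
y = ((speed_to_road > 0.3) & (dist_to_road < 4.0)).astype(int)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# An ensemble of decision trees, each fit on a bootstrap sample of the data.
rf = RandomForestClassifier(n_estimators=100, random_state=0)
rf.fit(X_train, y_train)

accuracy = rf.score(X_test, y_test)
p_cross = rf.predict_proba(X_test)[:, 1]     # estimated probability of class C
print(f"test accuracy: {accuracy:.3f}")
print("feature importances:", rf.feature_importances_.round(3))
```

`predict_proba` returns the fraction of trees voting for each class, which gives a graded crossing-intention score rather than a hard label; the impurity-based `feature_importances_` offer a rough view of which inputs the forest relied on.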

3.2. Dataset

4. Experiments and Results

4.1. Data Preprocessing

4.2. Training

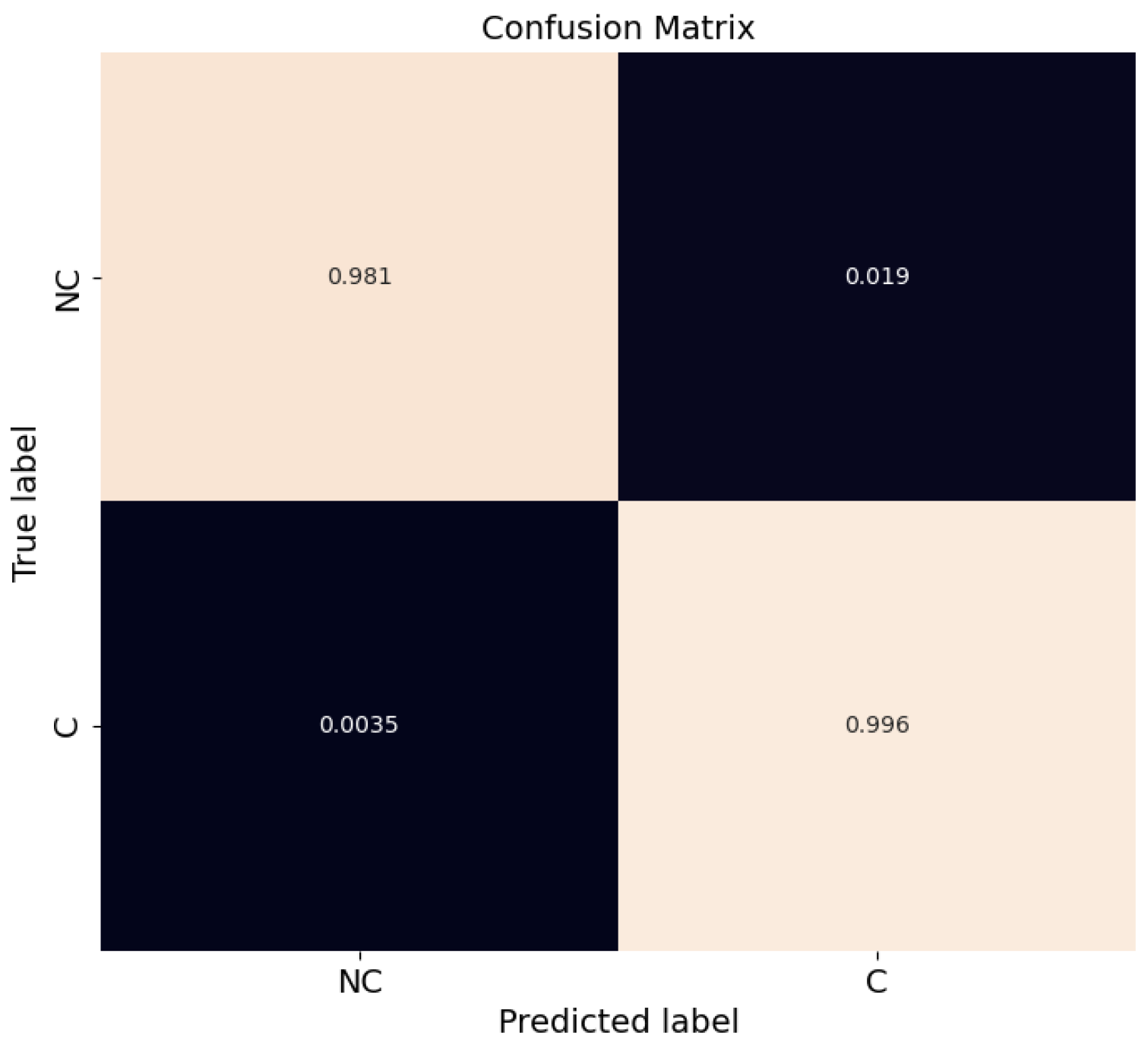

4.3. Evaluation

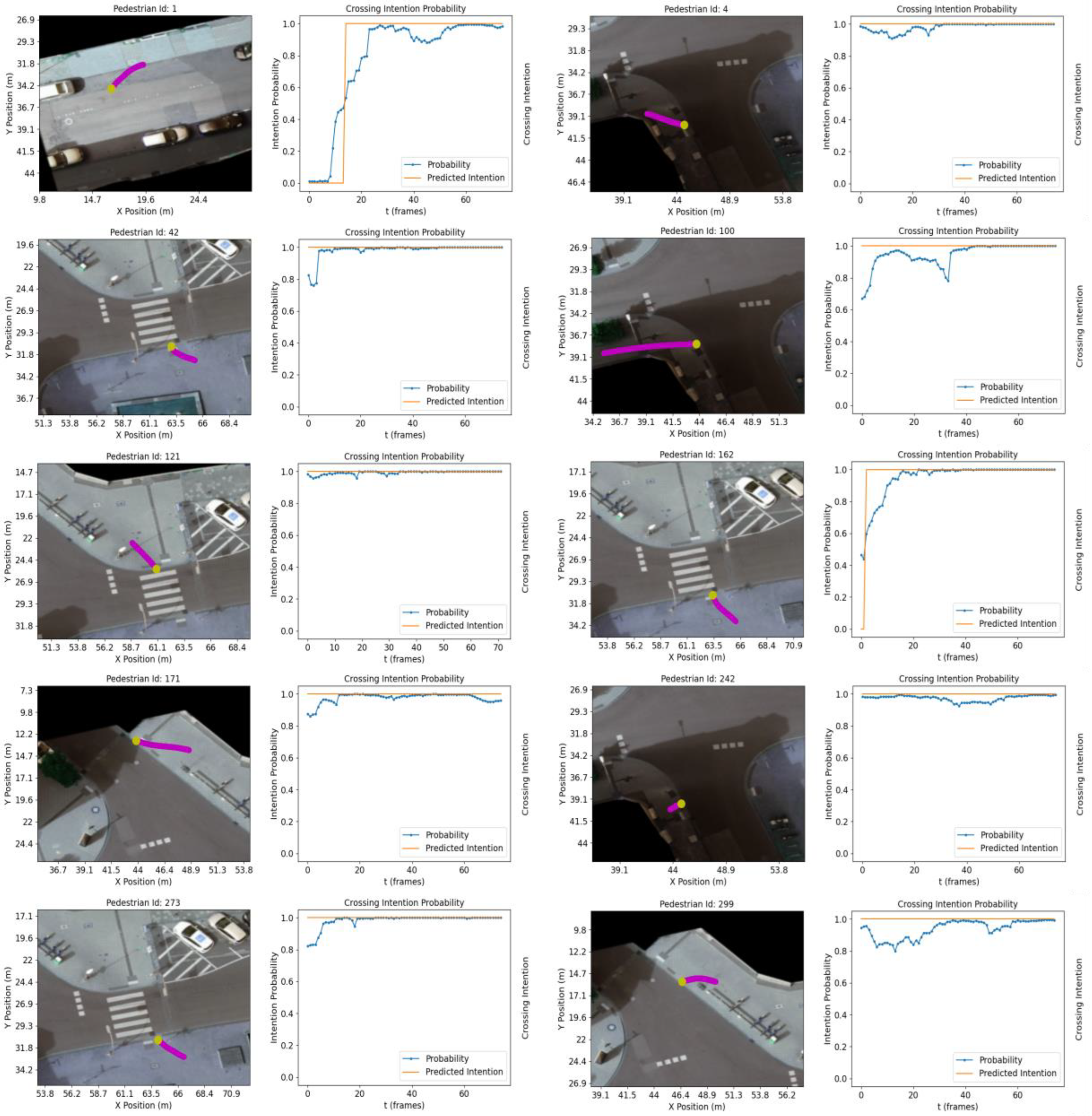

- Pedestrians with C intention at the zebra crossing, IDs: 42, 121, 162, 273;

- Pedestrians with C intention at the bottom arm of the intersection, IDs: 4, 100, 242;

- Pedestrians with C intention in less frequently walked areas (left and top arms of the intersection), IDs: 1, 171, 299.

- Pedestrians walking on the sidewalk with NC intention, IDs: 193, 369;

- Pedestrians with NC intention while walking in front of the zebra crossing, IDs: 63, 77, 148, 183, 203_1, 242;

- Pedestrian walking away from the crosswalk, ID: 248;

- Pedestrian turning in front of the road with NC intention, ID: 203_2.

4.4. When Pedestrian Intention Is Not Clear

5. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest


| Hyperparameter | Value |
|---|---|
| Number of hidden layers | 3 |
| Number of units per layer | 1000 |
| Activation function | ReLU |
| Solver | Adam |
| Alpha | 0.05 |
| Learning rate | Constant |
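The table lists the hyperparameters of the feed-forward NN. Its row names (solver, alpha, constant learning rate) match the argument names of scikit-learn's `MLPClassifier`, so one plausible reading is the configuration below. The mapping onto `MLPClassifier` is an assumption, since the table does not name the library; the classifier is only constructed here, not trained.

```python
from sklearn.neural_network import MLPClassifier

# Assumed mapping of the table rows onto MLPClassifier arguments.
nn = MLPClassifier(
    hidden_layer_sizes=(1000, 1000, 1000),  # 3 hidden layers, 1000 units each
    activation="relu",                      # ReLU activation
    solver="adam",                          # Adam optimizer
    alpha=0.05,                             # L2 regularization strength
    learning_rate="constant",               # learning-rate schedule
)

params = nn.get_params()
print(params["hidden_layer_sizes"], params["solver"], params["alpha"])
```

One caveat under this reading: in scikit-learn the `learning_rate` schedule is only used when `solver="sgd"`, so with Adam the step size is set by `learning_rate_init` (default 0.001) and the "Constant" entry has no practical effect.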

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Moreno, E.; Denny, P.; Ward, E.; Horgan, J.; Eising, C.; Jones, E.; Glavin, M.; Parsi, A.; Mullins, D.; Deegan, B. Pedestrian Crossing Intention Forecasting at Unsignalized Intersections Using Naturalistic Trajectories. Sensors 2023, 23, 2773. https://doi.org/10.3390/s23052773
