1. Introduction

Modern products face increasing demands for sustainable manufacturing and operation. These demands are addressed by research in a variety of technological fields; among these, lightweighting of a product's mechanical structure is frequently applied. High-performance materials, such as fiber-reinforced polymer composites, combined with modern numerical design methods, such as the finite element (FE) method, enable a high degree of optimization of mechanical structures. However, a highly optimized structure always carries the risk of critical failure, e.g., when loads are underestimated, when unexpected manufacturing defects exist, or when damage occurs during operation. These uncertainties are a significant issue in industries that demand the highest structural reliability, such as the transportation industries. In aviation engineering, e.g., they are addressed by the damage tolerance design philosophy in combination with maintenance programs, which produces high operational costs due to downtime and labor. An emerging approach to reducing maintenance costs while reliably ensuring structural integrity is structural health monitoring (SHM) [1,2].

SHM is the continuous on-board monitoring of the condition of a mechanical structure during operation by online, integrated sensor systems, and can be classified into five levels: (i) damage detection; (ii) damage localization; (iii) damage quantification; (iv) damage typification; and (v) structural integrity assessment. Numerous active and passive SHM methods already exist, achieving up to level four under laboratory conditions [3,4,5]. These methods use sensor systems to measure different physical effects and continuously evaluate the collected data for potential damage. Among them, acoustic methods, such as the passive acoustic emission (AE) method and the active guided wave (GW) and electromechanical impedance (EMI) methods, attract the greatest research interest, due to their sensitivity to structural change and their potential for damage identification up to level four [2,3,5,6,7,8,9,10,11]. Furthermore, the commonly used piezoelectric wafer active sensors (PWAS) are lightweight and easy to apply; however, because of the high sensitivity of acoustic methods, they are also very susceptible to environmental influences, the measurement equipment, etc. This makes their application under realistic operational conditions extremely challenging and has, to date, prevented their widespread industrial application. This is particularly true for the EMI method, which evaluates the dynamic response of a structure of interest (as part of an electromechanical system) to continuous harmonic excitation, i.e., it is a vibration-based method. Consequently, the evaluation of potential damage is extremely complicated, due to reflections from more distant structural regions, e.g., boundaries, and due to correlated disturbances [12]. Classical statistical damage metrics typically do not allow reliable damage identification [9]. Recently, however, with the development and increasing dissemination of novel statistical methods such as machine learning (ML), and the availability of large computational power, the scientific community has identified new potential for solving this issue [13]. Avci [14] reported similar findings for vibration-based methods in a review of a number of selected journals. Furthermore, numerous recent review articles have highlighted the application and advances of ML-based measurement data evaluation for vibration-based damage monitoring methods, such as the EMI method [13,14,15,16]. Fan [13] classified EMI measurement data evaluation methods into data-based and physics-based methods.
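To make the notion of a classical damage metric concrete, the following minimal sketch computes the root-mean-square deviation (RMSD), one widely used EMI damage index. It is an illustrative choice, not necessarily the metric used in the cited studies; the function name and the assumption that both spectra are sampled at identical frequency bins are ours.

```python
import numpy as np


def rmsd_damage_index(baseline, current):
    """Root-mean-square deviation (in %) of a current EMI spectrum from a
    baseline spectrum sampled at the same frequency bins."""
    baseline = np.asarray(baseline, dtype=float)
    current = np.asarray(current, dtype=float)
    if baseline.shape != current.shape:
        raise ValueError("both spectra must have the same number of bins")
    return 100.0 * np.sqrt(np.sum((current - baseline) ** 2) / np.sum(baseline ** 2))


# Identical spectra give 0 %; any deviation, whatever its cause, increases the index.
unchanged = rmsd_damage_index([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])
changed = rmsd_damage_index([1.0, 1.0, 1.0, 1.0], [1.0, 1.0, 1.0, 3.0])
```

Because the index condenses the entire frequency range into a single number, a shift caused by, e.g., temperature or boundary reflections raises it in the same way as actual damage does, which illustrates why such metrics alone rarely allow reliable damage identification.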

Significant advances in data-based methods, which rely solely on real-world measurement data, are currently being achieved through the application of novel ML algorithms, in light of the increasing number of damage indicators. Lopes [17] used an artificial neural network (ANN) to evaluate EMI measurements for monitoring the loosening of bolted joints in a scaled steel bridge section. The algorithm evaluated the data of four PWAS in two steps, and succeeded in detecting and locating loose joints and in identifying the severity of the loosening (one, two, or three loose bolts per joint). The training was performed on the same structure, by systematically loosening and tightening bolts. Giurgiutiu [11] demonstrated, under laboratory conditions, the superiority of a probabilistic neural network (PNN) over conventional damage-metric-based EMI measurement data evaluation, using artificially introduced cracks in an aging aircraft panel. He used a number of the largest resonance frequencies as features, and classified unknown data as pristine or non-pristine (i.e., damaged). Only pristine-state data were used for training. While the classical damage metric was only capable of detecting a crack within a very narrow region, the PNN-based evaluation identified all cracks, even at a distance of 100 mm. Another demonstration of PNN-based EMI measurement data evaluation for damage identification was reported by Palomino [18], who investigated a number of different damage scenarios under laboratory conditions and was able to detect, localize, and classify cracks and rivet losses in large aluminum aircraft fuselage components by means of a sensor array of eight PWAS. Park [19] demonstrated the potential of principal component analysis (PCA) to extract relevant features for damage monitoring from EMI measurement data: using a bolted metal–metal single-lap shear joint, with bolt loosening and re-tightening as damage, he clearly showed that PCA-based feature extraction improved the differentiation between a loose and a re-tightened joint by a simple damage metric, and he also demonstrated the applicability of the k-means clustering algorithm to damage identification using only two principal components. Min [20] addressed the sensitivity of EMI damage metrics to the considered frequency range, and solved this issue by training an ANN with damage metric values of discretized frequency spectra to identify five different damage scenarios in a building structure (zero to four loose bolts at a joint). The same approach also performed well in an experimental study on the detection of loose bolts and cracks induced in a steel bridge. Oliveira [21] first proposed a convolutional neural network (CNN)-based evaluation method for damage detection using EMI measurements, and demonstrated its superiority over other ML methods on an aluminum plate equipped with three PWAS, with nuts adhesively bonded to it as artificial damage. Rezende [22] used aluminum beams with added weights as damage under varying temperature conditions, and demonstrated CNN-based evaluation including temperature compensation. A similar demonstration was performed by Li [23] for the more complex use case of a cracked concrete cube under varying temperature conditions, using a CNN in combination with Pearson correlation coefficient indices of sub-ranges of the measured electromechanical admittance spectra to identify both the crack length and the applied temperature. Furthermore, Li [23] used the orthogonal matching pursuit algorithm to synthetically generate data from measurement data, to reduce the training costs of the CNN. Other successful applications of CNN models were reported by Ai [24,25], who demonstrated the identification of concurrent compressive stresses and damage (crack number and width), as well as minor mass loss, in a concrete specimen. Alazzawi [26] demonstrated the application of a deep residual network to EMI-based damage evaluation, and its potential to overcome the need for programmer-dependent preprocessing, by directly evaluating time-domain impedance data for crack quantification and localization in a steel beam. This potential for evaluating raw electromechanical admittance data was also found for a deep neural network by Nguyen [27], who applied it to EMI-based prestress loss monitoring of a post-tensioned reinforced concrete girder under laboratory conditions. Singh [28] successfully used unsupervised self-organizing maps (SOM) to fuse multi-sensor data for the identification of hole sizes in a large aluminum plate. Furthermore, he found that reducing the measured EMI spectra by PCA is not only more efficient, but also improved the data quality in the realized experiments. The latter is also supported by the findings of Lopes [17], who stated that feature engineering, i.e., the selection of meaningful features from the raw data, often (i) highlights the dynamic characteristics relevant to the evaluation objective, and (ii) strongly reduces the required amount of training data. For a summary of the key contributions to data-driven and ML-based EMI measurement data evaluation, see Fan [13].
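The PCA-plus-clustering idea reported by Park [19] can be sketched as follows, assuming scikit-learn, spectra stored row-wise in a NumPy array, and two clusters (e.g., loose vs. re-tightened joint). The function name, the toy data, and all parameter values are hypothetical and do not reproduce the original study.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.decomposition import PCA


def cluster_spectra(spectra, n_components=2, n_clusters=2, seed=0):
    """Project EMI spectra (one per row) onto their leading principal
    components and group the resulting scores with k-means."""
    scores = PCA(n_components=n_components).fit_transform(np.asarray(spectra, dtype=float))
    labels = KMeans(n_clusters=n_clusters, n_init=10, random_state=seed).fit_predict(scores)
    return scores, labels


# Hypothetical toy data: a resonance that shifts slightly between two structural states.
rng = np.random.default_rng(0)
f = np.linspace(0.0, 1.0, 200)
state_a = np.exp(-((f - 0.40) / 0.02) ** 2) + 0.01 * rng.standard_normal((20, 200))
state_b = np.exp(-((f - 0.45) / 0.02) ** 2) + 0.01 * rng.standard_normal((20, 200))
scores, labels = cluster_spectra(np.vstack([state_a, state_b]))
```

Note that k-means cluster labels are arbitrary; mapping a cluster to a structural state still requires at least one labeled reference measurement.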

Physics-based methods use physical models of the monitored structure of interest and the applied sensor system to evaluate real-world EMI measurement data. Analytical or numerical models are used, e.g., to find sensitive frequency ranges or specific sensitive features and their responses to a considered damage, its location, and its properties [29,30]. Another physics-based approach is model updating, whereby predefined model parameters representing damage, temperature changes, etc. are optimized until the model's simulation results match the EMI measurement data. Albakri [31], e.g., used a length-varying spectral element mesh to efficiently model a beam with a single crack-like opening, whose parameters were subsequently optimized to fit the EMI measurement data of an equivalent physical beam with a single unknown opening, thereby enabling the identification of the opening's location, width, and severity. However, due to the large parameter space of more complex mechanical structures and their large number of possible damage modes, the computational effort for model updating is large, and fast data evaluation is challenging. Ezzat [32] addressed this issue by increasing the efficiency of model updating through surrogate models and a multi-stage statistical calibration framework. The framework used a pre-screening step to reduce the parameter space by identifying probable damage locations before the model updating and signal matching steps, and therefore strongly reduced the overall computational effort for damage identification. Another approach to accelerating data evaluation is to pre-simulate impedance spectra for varying damage parameters of the structure of interest. Computing the typically large database is computationally expensive; however, it can be done prior to the operational life of the monitored structure. To reduce this effort, numerical surrogate models (e.g., ML models) can be used to lower the number of required simulations by interpolation; such an approach was recently demonstrated by Humer [33] for the accurate interpolation of simulated guided wave signals. The available simulated signals (and their known, correlated damage parameters) enable very fast damage identification by simply comparing their similarity to measured EMI spectra. Shuai [34] and Kralovec [9] demonstrated this approach by identifying the crack location and severity in a simple metallic beam and the debonding size in an aluminum sandwich panel, respectively. However, physics-based methods are still limited to low frequency ranges or simple mechanical structures (mostly beams), as computational accuracy and efficiency remain issues [13].
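The similarity-based lookup in a pre-simulated database can be illustrated by a minimal sketch that, as an assumption, uses the Pearson correlation coefficient as the similarity measure; the cited works may use other measures. The names `identify_from_database`, `simulated` (one simulated spectrum per row), and `params` (the damage parameter of each row), as well as the toy database, are hypothetical.

```python
import numpy as np


def identify_from_database(measured, simulated, params):
    """Return the damage parameter whose pre-simulated spectrum (one per row
    of `simulated`) correlates best with the measured spectrum, together
    with the Pearson correlation coefficient of that match."""
    measured = np.asarray(measured, dtype=float)
    simulated = np.asarray(simulated, dtype=float)
    # Standardize each spectrum; the mean of the element-wise product of two
    # standardized vectors equals their Pearson correlation coefficient.
    m = (measured - measured.mean()) / measured.std()
    s = (simulated - simulated.mean(axis=1, keepdims=True)) / simulated.std(axis=1, keepdims=True)
    corr = s @ m / measured.size
    best = int(np.argmax(corr))
    return params[best], float(corr[best])


# Hypothetical database: the resonance position depends on the debonding radius.
f = np.linspace(0.0, 1.0, 300)
radii = np.array([5.0, 10.0, 15.0, 20.0])
simulated = np.exp(-((f[None, :] - (0.2 + 0.02 * radii)[:, None]) / 0.03) ** 2)
measured = np.exp(-((f - 0.5) / 0.03) ** 2) + 0.01 * np.random.default_rng(1).standard_normal(300)
radius, r = identify_from_database(measured, simulated, radii)
```

Since the database is computed before operation, the online cost reduces to one matrix-vector product per measurement; the accuracy, however, is limited by how closely the simulated spectra reproduce the real structure.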

Nevertheless, ML methods are generally found to be superior to traditional evaluation methods when the vibration signal is fuzzy and noise-contaminated [14,21]. To date, the ML algorithms applied to vibration-based monitoring methods, such as EMI, are predominantly supervised learning algorithms trained on real-world measurement data. Avci [14] concludes that the latest ML-based damage evaluation approaches increasingly use signal features other than modal parameters, as the latter are often very sensitive to environmental conditions, e.g., temperature. Furthermore, the curation of experimental data is typically very costly. Thus, approaches using synthetic data (e.g., simulated by an FE model) are utilized to reduce the cost of data acquisition [35,36]. Similarly, Fan [13] highlighted the significant potential of physics-based EMI data evaluation (i.e., based on synthetic data from physical models), and the need for efficient numerical modeling.

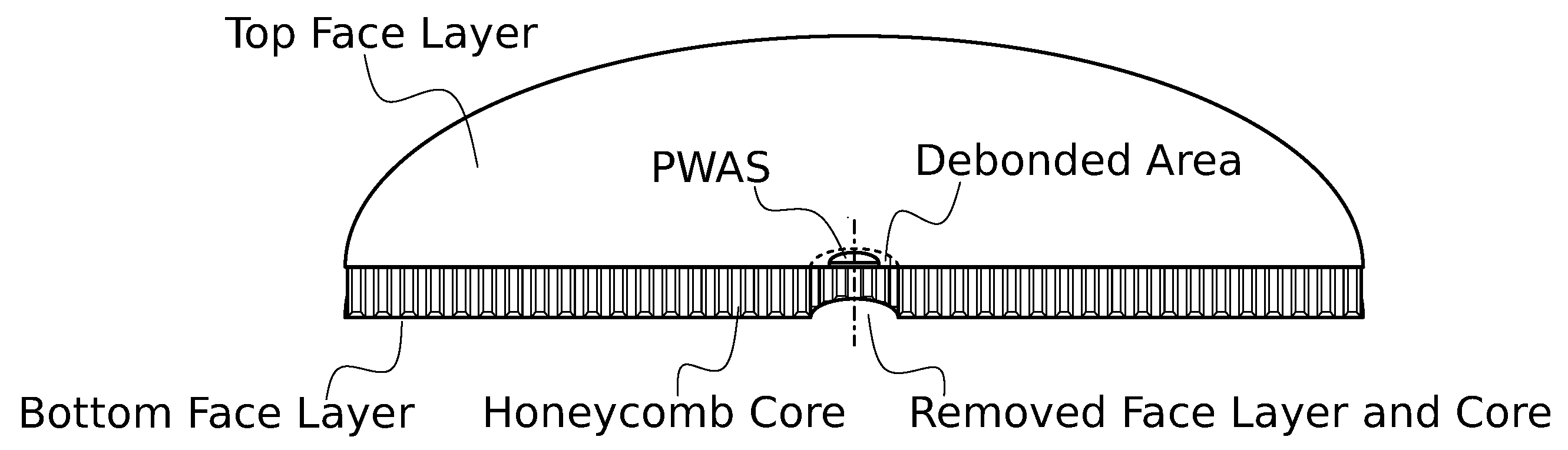

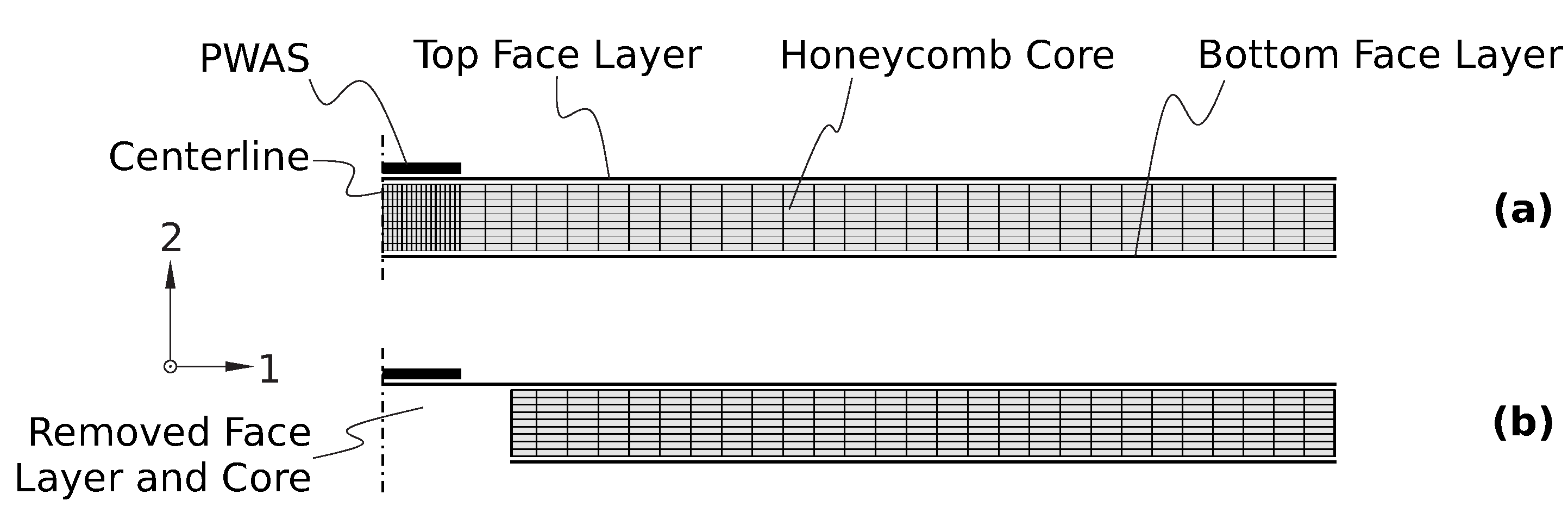

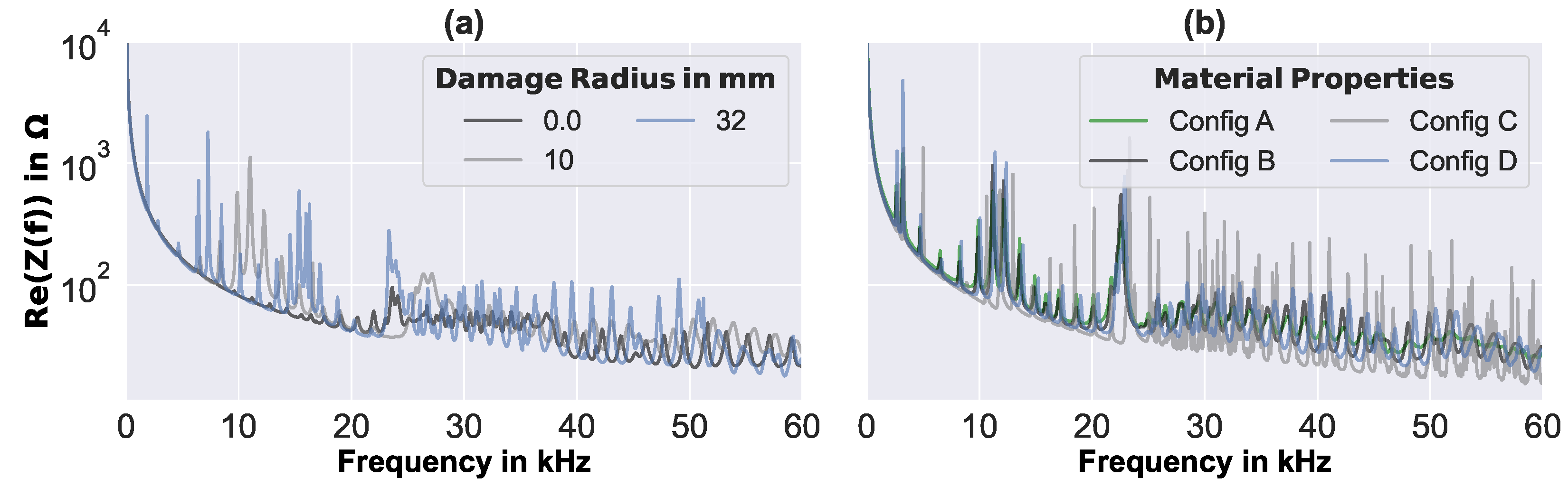

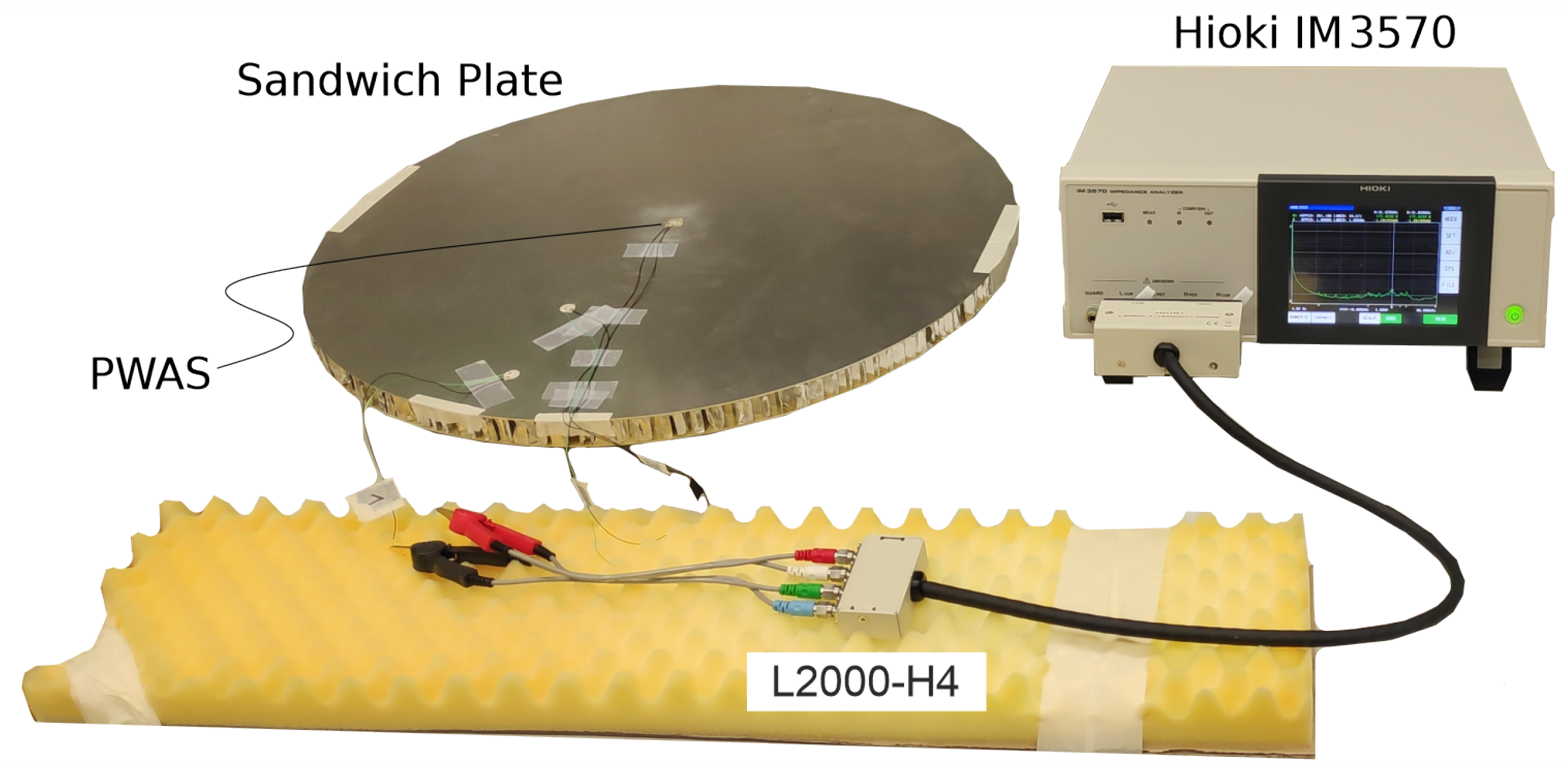

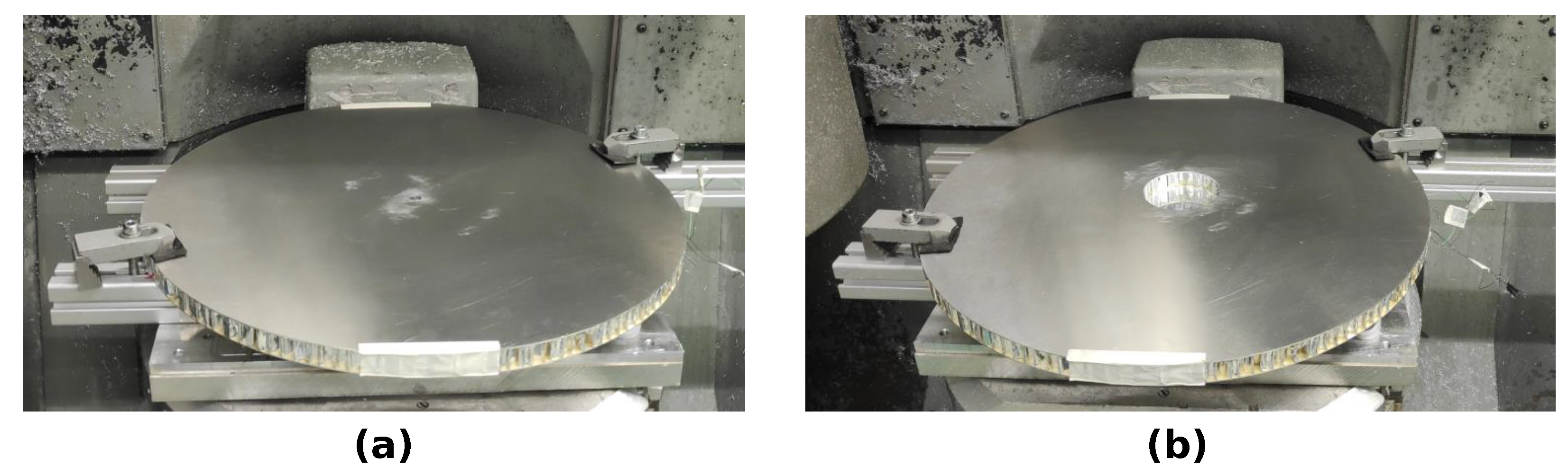

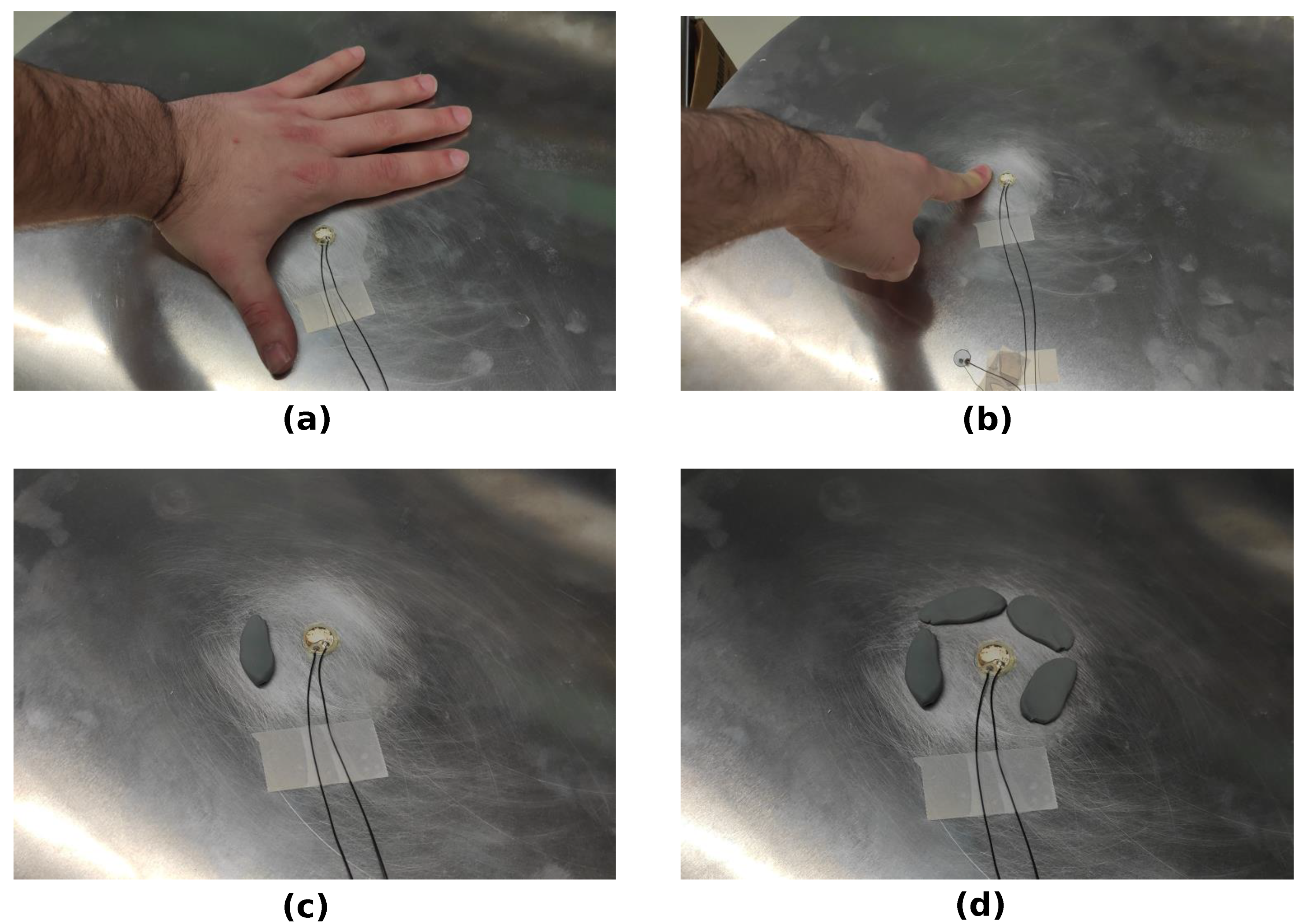

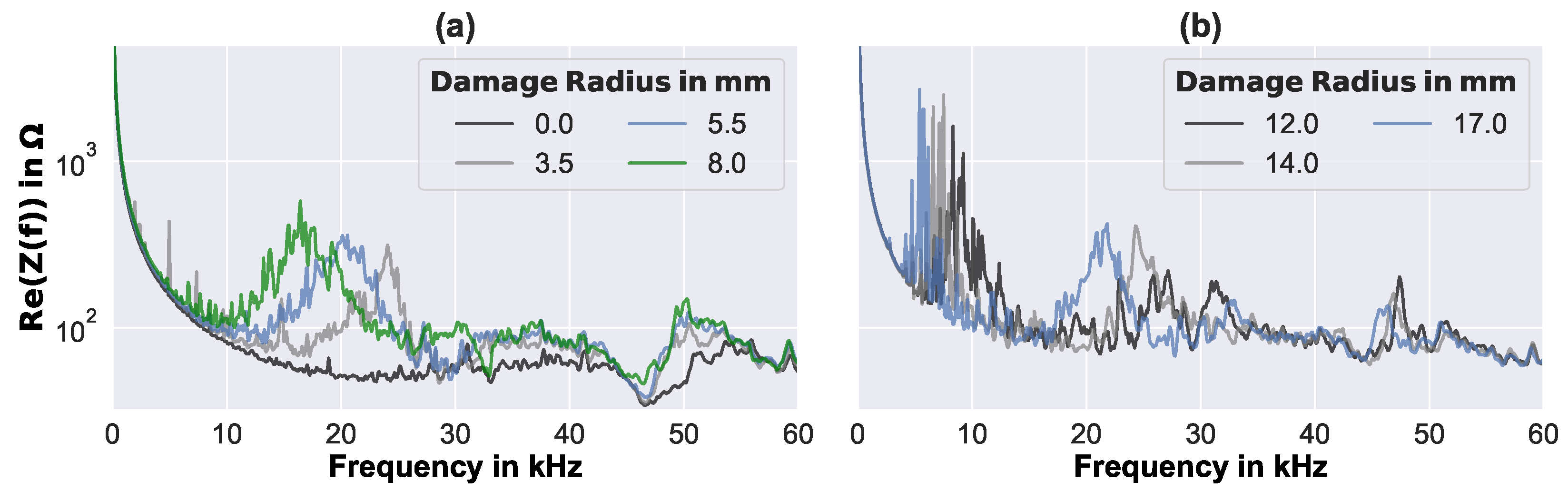

The present research contributes to the field of EMI data evaluation for structural damage identification by proposing a novel two-step physics- and ML-based EMI data evaluation approach for sandwich debonding detection and size estimation. The proposed approach addresses the above-mentioned research shortcomings through (i) very fast and accurate damage identification by supervised ML models, (ii) the replacement of costly real-world measurement data for training with synthetic, FE model-based data, and (iii) the engineering of EMI signal features that are robust against simplifications of the FE model used to generate the training data, environmental disturbances, and small variations in debonding shape (in reality, debondings are not perfectly circular). As a case example, a comparatively large and complex mechanical structure was used: a circular sandwich panel with aluminum face layers and an aluminum honeycomb core. A single PWAS located at the center of the sandwich, directly above the considered idealized face layer debonding, was used for the EMI measurements. In contrast to many other EMI studies, the FE model used to simulate the artificial EMI data was strongly simplified and was not intended to reflect all features of the real structural response perfectly. On the one hand, this enabled a very efficient simulation of the artificial EMI data, which may be highly relevant for larger structural components or more complex damage modes; on the other hand, it represented a test of the proposed method's robustness against incomplete training data. The considered idealized face layer debonding was enlarged stepwise by milling, with EMI measurements taken at each step, and these measurements were evaluated by the proposed physics-based ML approach. The approach was tested for the consistency, accuracy, reliability, and robustness of its evaluation results, and was benchmarked against a previous method.

3. Data Preprocessing

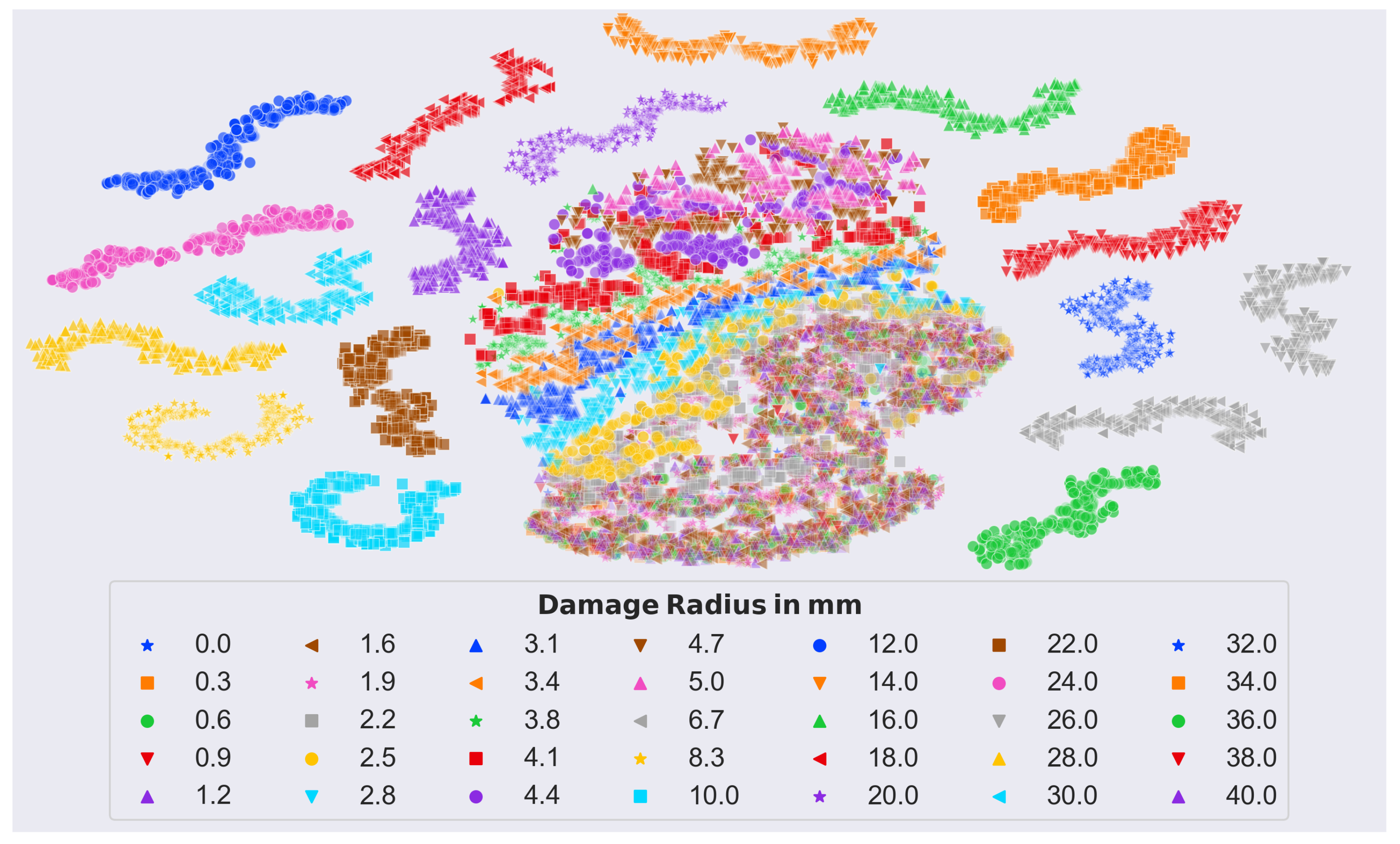

A three-step approach was implemented for preprocessing the input data for the ML-based evaluation, in order to decrease its dimensionality and, at the same time, improve the robustness of the overall method: first, relevant frequencies were identified through analysis of the frequency spectrum, allowing unnecessary information to be eliminated; second, a log-scaled filterbank was applied to the data, yielding a representation that is more robust to slight variations in resonance frequencies; and third, a discrete cosine transform (DCT) was applied to the processed data for further data compression and smoothing of the spectrum. After each step, it was important to confirm that enough relevant information was still present in the data. To ensure this, a well-known data exploration technique, t-SNE (t-distributed stochastic neighbor embedding), was applied. t-SNE is a non-linear, unsupervised technique for visualizing high-dimensional data in 2D or 3D maps that preserves the local data structure: data points close to each other in the high-dimensional space end up close to each other in the low-dimensional space. This makes it easier to identify patterns and to confirm that relevant information has been preserved. In this way, the dimensionality of the original data representation was reduced from 801 to only 31.
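A minimal sketch of such a t-SNE check is given below, assuming scikit-learn's `TSNE` and a feature matrix with one row per spectrum. The perplexity cap, the other settings, and the toy data are illustrative assumptions, not the values used in this study; plotting (e.g., coloring the embedded points by debonding radius) is omitted.

```python
import numpy as np
from sklearn.manifold import TSNE


def tsne_embedding(features, perplexity=30.0, seed=0):
    """Embed feature vectors (one row per spectrum) in 2D for visual
    inspection of their cluster structure."""
    features = np.asarray(features, dtype=float)
    # The perplexity must be smaller than the number of samples; cap it for small data sets.
    perplexity = min(perplexity, (features.shape[0] - 1) / 3.0)
    tsne = TSNE(n_components=2, perplexity=perplexity, init="pca", random_state=seed)
    return tsne.fit_transform(features)


# Hypothetical example: two groups of 31-dimensional feature vectors.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, (30, 31)), rng.normal(5.0, 1.0, (30, 31))])
embedding = tsne_embedding(X)  # shape (60, 2); scatter-plot it, colored by damage size
```

Because t-SNE preserves local rather than global structure, distances between well-separated clusters in the map should not be over-interpreted; the check is mainly whether samples of similar damage size stay together.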

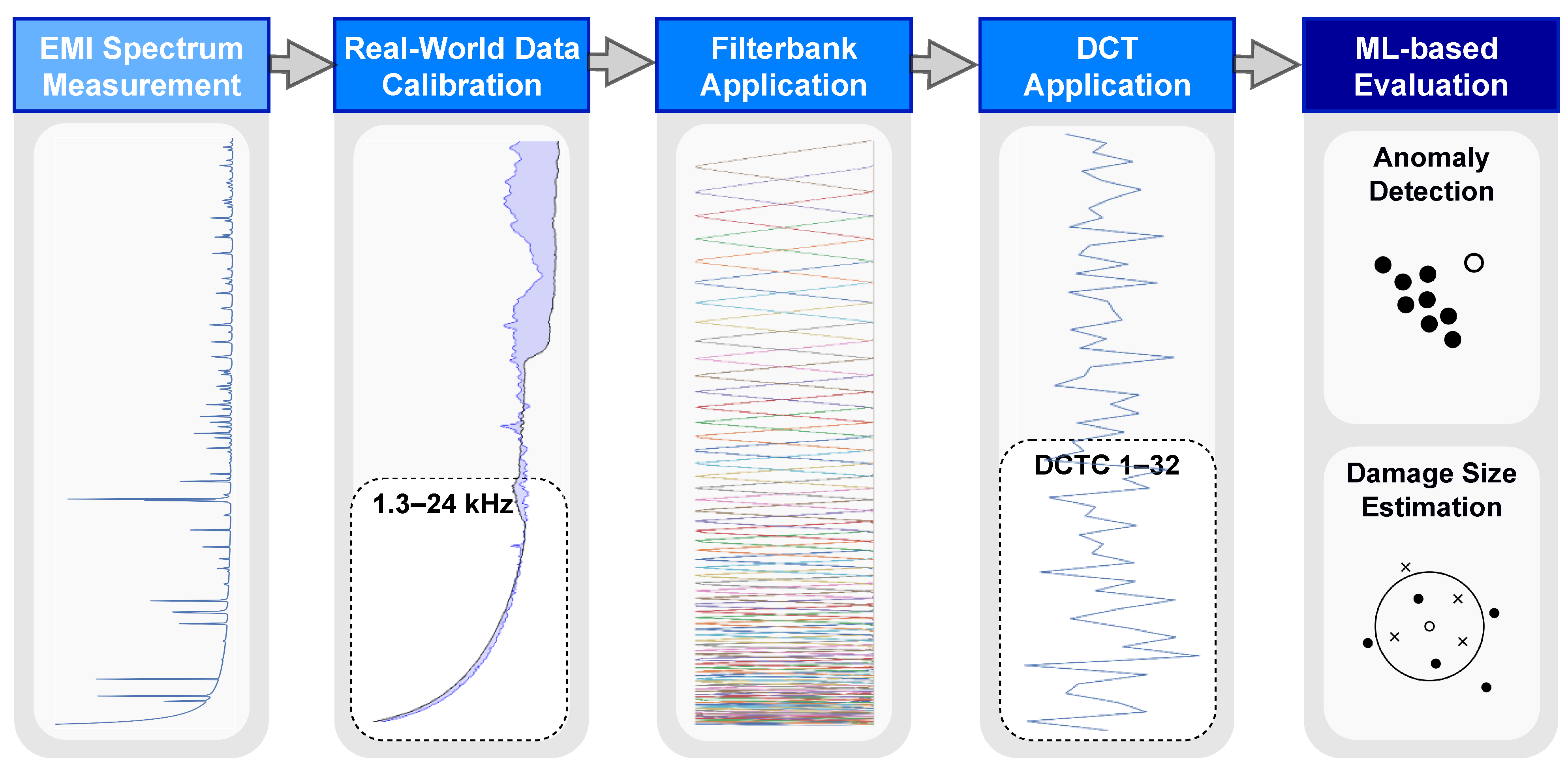

The features obtained through the preprocessing steps were then extracted from both the synthetic and the real-world EMI data before conducting the ML-based damage evaluation experiments described in Section 4. The real-world EMI data were additionally calibrated to the synthetic data to compensate for the strong simplifications of the FE model (see Section 2.3) used to generate the synthetic data. For a better overview, the final preprocessing pipeline for the ML-based damage evaluation of real-world EMI data is depicted in Figure 9. The preprocessing of synthetic EMI data was identical, except that no calibration was applied.

3.1. Feature Engineering

The goal of feature engineering is to improve the performance and robustness of ML-based algorithms: to this end, the dimensionality of data can be reduced for denoising purposes, and features can be developed to extract relevant information, while at the same time suppressing irrelevant information. The features used in this analysis were obtained through the process of feature engineering using exclusively synthetic data.

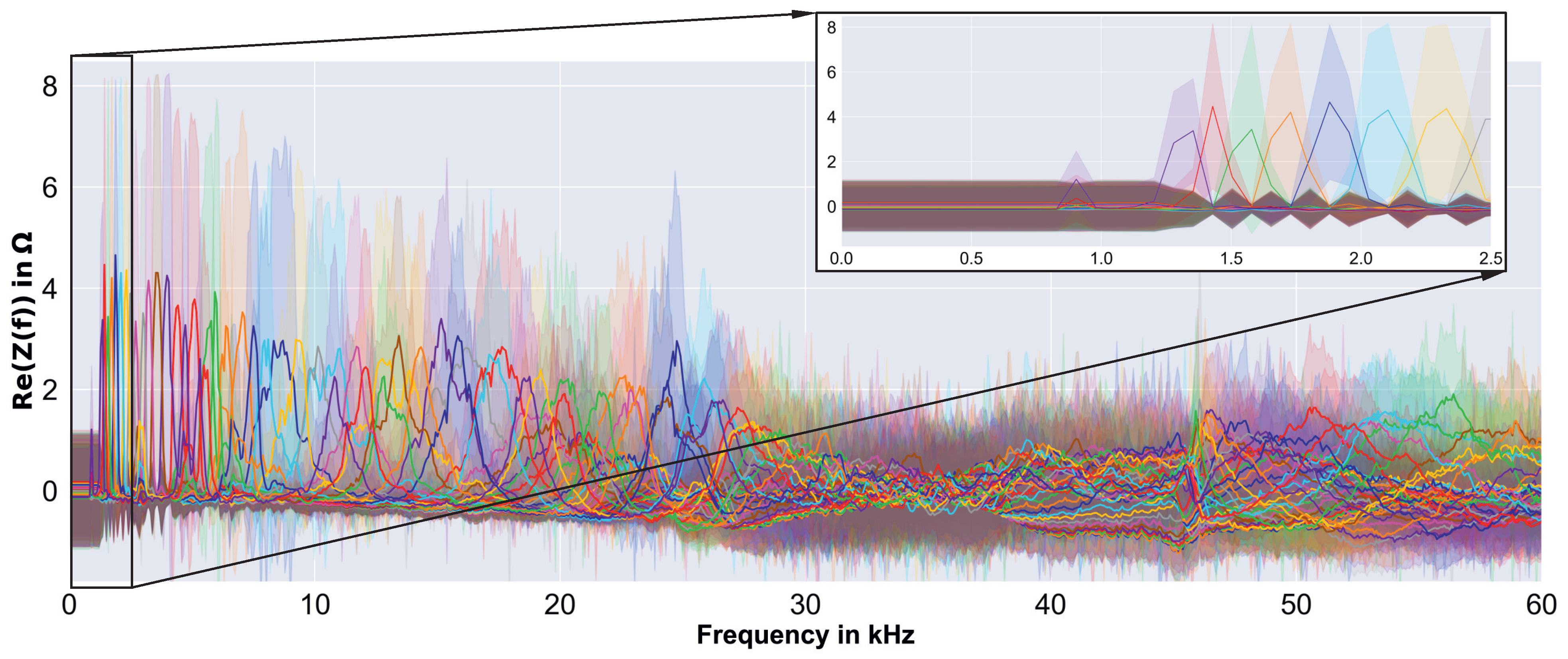

We started by exploring the raw synthetic spectra, looking for damage size-specific characteristics, to identify the most informative frequency range. Figure 10 shows the mean and standard deviation values for each damage size individually.

Note that we scaled the spectra to zero mean and unit standard deviation for better clarity. In general, different damage sizes caused peaks (i.e., resonances) at different frequencies. However, frequencies below 1.3 kHz were found to be less informative (see Figure 10, detail), and were therefore excluded. Furthermore, the size-specific damage peaks were less pronounced between 28 kHz and 46 kHz. Given our knowledge from previous work that the precision of the simulation results decreases with increasing frequency, and the clear objective of finding an efficient evaluation method, we restricted the considered frequency range to 1.3 kHz to 28 kHz, thereby directly reducing the dimensionality of the data to less than half. With the help of the previously mentioned t-SNE plots, the frequency range was further optimized to 1.3 kHz to 24 kHz, i.e., only 38% of the available data. Figure 11 shows the clear structure of the t-SNE plot for the finally selected frequency range.

The central cluster consisted of comparatively small damage sizes up to a radius of 5 mm. Furthermore, there was some overlap for very small damage sizes (debonding radius mm) in the lower part.
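The frequency range selection amounts to a boolean mask over the frequency axis, as in the following sketch. The function name is hypothetical, and the linear axis of 801 bins from 0 kHz to 60 kHz used in the example is a purely hypothetical placeholder, since the measured range is not stated in this section.

```python
import numpy as np


def select_band(freqs_hz, spectra, f_lo=1.3e3, f_hi=24e3):
    """Keep only the frequency bins within [f_lo, f_hi]; `spectra` may be a
    single spectrum or a 2D array with one spectrum per row."""
    freqs_hz = np.asarray(freqs_hz, dtype=float)
    mask = (freqs_hz >= f_lo) & (freqs_hz <= f_hi)
    return freqs_hz[mask], np.asarray(spectra)[..., mask]


# Purely hypothetical frequency axis: 801 linearly spaced bins from 0 to 60 kHz.
freqs = np.linspace(0.0, 60e3, 801)
spectra = np.random.default_rng(0).random((10, 801))
band_freqs, band_spectra = select_band(freqs, spectra)
```

With this placeholder axis, the mask keeps roughly 38% of the bins; for the real data, the kept fraction depends on the actual frequency axis.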

After the relevant frequencies had been identified, a filterbank was applied, consisting of triangular filters that overlap by 50% and are uniformly spaced on a logarithmic frequency scale. The overlap of the filters allowed for a more fine-grained representation of the frequency spectrum, while the logarithmic spacing made the filterbank more sensitive to lower than to higher frequencies. This led to a representation that is more robust to slight variations in resonance frequencies, while at the same time compressing the frequency axis of the spectrum. Additionally, log-scaling the magnitude compressed the dynamic range of the spectrum, which is useful for handling signals with wide variations in amplitude. Given the frequency range obtained in the previous step and the filterbank configuration, a log-scaled spectrum with 66 bins was computed.
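The filterbank step can be sketched as follows. The sketch assumes non-negative magnitude spectra, places the filter edges with `np.geomspace` so that neighboring triangles share one half of their log-frequency span (50% overlap), and applies the logarithm after filtering; the edge placement, the small constant `eps`, the function names, and the toy data are assumptions rather than the exact implementation used in this study.

```python
import numpy as np


def log_triangular_filterbank(freqs_hz, n_filters=66, f_lo=1.3e3, f_hi=24e3):
    """Triangular filters whose edges are uniformly spaced on a logarithmic
    frequency axis; neighboring filters overlap by 50 %.
    Returns a weight matrix of shape (n_filters, n_bins)."""
    freqs_hz = np.asarray(freqs_hz, dtype=float)
    # n_filters + 2 log-spaced edges: filter k rises from edges[k] to
    # edges[k + 1] and falls back to zero at edges[k + 2].
    edges = np.geomspace(f_lo, f_hi, n_filters + 2)
    weights = np.zeros((n_filters, freqs_hz.size))
    for k in range(n_filters):
        left, center, right = edges[k], edges[k + 1], edges[k + 2]
        rising = (freqs_hz - left) / (center - left)
        falling = (right - freqs_hz) / (right - center)
        weights[k] = np.clip(np.minimum(rising, falling), 0.0, None)
    return weights


def log_filterbank_spectrum(freqs_hz, spectra, n_filters=66, eps=1e-12):
    """Apply the filterbank to non-negative magnitude spectra and log-scale
    the result to compress the dynamic range."""
    freqs_hz = np.asarray(freqs_hz, dtype=float)
    weights = log_triangular_filterbank(freqs_hz, n_filters, freqs_hz[0], freqs_hz[-1])
    return np.log(np.asarray(spectra, dtype=float) @ weights.T + eps)


# Hypothetical band-limited data: 400 bins between 1.3 kHz and 24 kHz.
freqs = np.linspace(1.3e3, 24e3, 400)
spectra = 1.0 + np.abs(np.random.default_rng(0).standard_normal((5, 400)))
log_spec = log_filterbank_spectrum(freqs, spectra)  # shape (5, 66)
```

With coarse frequency sampling, the narrowest low-frequency filters may cover only one or two bins, so the number of filters has to be chosen with the bin spacing in mind.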

Finally, the log-scaled spectrum obtained through the previous steps was transformed using the DCT [39]. The DCT is a mathematical technique used to decorrelate data and transform it into a new coordinate system spanned by orthogonal cosine basis vectors of specific frequencies. The transformed data are a set of largely uncorrelated coefficients, each representing a different frequency component of the original data. This step can further improve the robustness and efficiency of the data representation by removing dependencies within the data; furthermore, keeping only the lower-order DCT coefficients yields a signal that is more compact and less affected by irrelevant information. This is based on the assumption that a signal can be separated, at least to a certain extent, into low-order components comprising the information relevant to the ML model's task, and high-order components that are irrelevant to it. In other words, the lower DCT coefficients represent a low-pass-filtered version of the spectrum that approximates its envelope. Furthermore, ignoring the 0th DCT coefficient increases the robustness of the method even further, by eliminating the dependency on the overall energy level of the signal.

The final feature vector consisted of 31 DCT coefficients computed on a log-scaled spectrum covering the frequency range from 1.3 kHz to 24 kHz. This compact and robust feature vector captured the essential spectral information relevant to damage identification. For real-world data, an additional calibration step was required, which is discussed in the next section.
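The DCT step, including discarding the 0th coefficient, can be sketched with SciPy's `scipy.fft.dct`. Using the orthonormal type-II DCT and keeping coefficients 1 to 31 are assumptions consistent with the description above, not confirmed implementation details; the function name and the toy data are hypothetical.

```python
import numpy as np
from scipy.fft import dct


def dct_features(log_spectra, n_coeffs=31):
    """Orthonormal DCT-II along the frequency axis; coefficient 0 (overall
    level) is discarded and the next n_coeffs low-order coefficients are kept."""
    coeffs = dct(np.asarray(log_spectra, dtype=float), type=2, norm="ortho", axis=-1)
    return coeffs[..., 1:n_coeffs + 1]


# Hypothetical 66-bin log-scaled spectra, one per row.
log_spectra = np.random.default_rng(0).standard_normal((5, 66))
features = dct_features(log_spectra)  # shape (5, 31)
# A global gain change is an additive constant in the log domain.
gain_invariant = np.allclose(features, dct_features(log_spectra + 3.0))  # True
```

Adding a constant to the log-spectrum (i.e., a global gain change) alters only the 0th coefficient of the orthonormal DCT-II, so the returned features are unaffected by the overall energy level.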

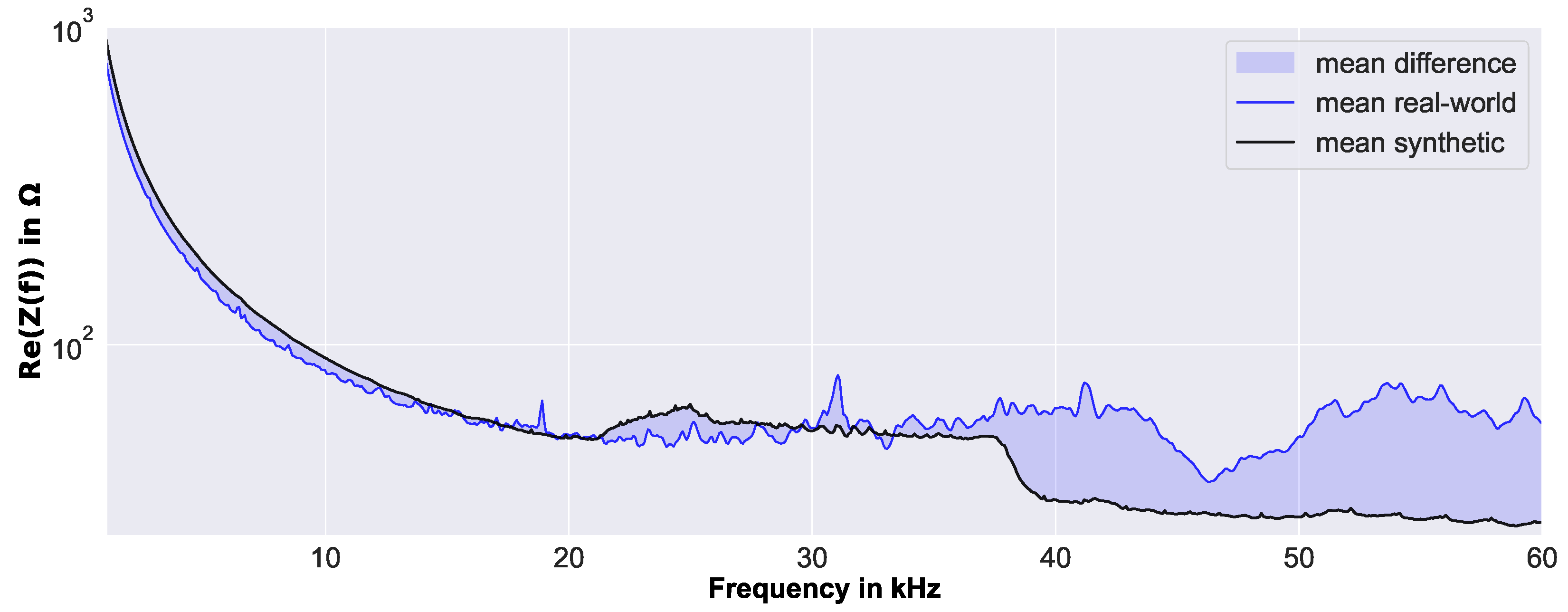

3.2. Calibration of Real-World EMI Data

The real-world data (i.e., the measured EMI spectra) were calibrated to the synthetic data (i.e., the simulated EMI spectra) to increase their comparability. This made it possible to evaluate real-world EMI spectra with features and ML models developed on synthetic data. This preprocessing step was required because the FE model used to simulate the synthetic EMI spectra was strongly simplified, to increase efficiency and robustness. The applied calibration was a simple shift of the real-world EMI spectra $S^{\mathrm{real}}$ by the difference between the frequency-dependent mean values of the real-world pristine data, $\bar{S}^{\mathrm{real}}_{\mathrm{pristine}}$, and the synthetic pristine data, $\bar{S}^{\mathrm{syn}}_{\mathrm{pristine}}$, thereby aligning the pristine spectra of the real-world measurements and the synthetic simulations. For illustration, Figure 12 shows the mean real-world EMI spectrum $\bar{S}^{\mathrm{real}}_{\mathrm{pristine}}$ of all measurements on the pristine sandwich panel, and the mean synthetic EMI spectrum $\bar{S}^{\mathrm{syn}}_{\mathrm{pristine}}$ of all simulations with the pristine FE model.

Furthermore, the difference between the two mean spectra, which was used to shift and thus calibrate the real-world data, is highlighted. This difference was used to calculate the calibrated real-world EMI spectra $S^{\mathrm{cal}}$ by

$$S^{\mathrm{cal}}_i = S^{\mathrm{real}}_i - \left( \bar{S}^{\mathrm{real}}_{\mathrm{pristine},i} - \bar{S}^{\mathrm{syn}}_{\mathrm{pristine},i} \right),$$

where $i$ denotes the bin of the spectrum (i.e., one of the 801 discrete frequency steps).
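The bin-wise shift described above maps directly onto an array operation. In this minimal sketch, spectra are assumed to be stored row-wise (one spectrum of 801 bins per row); the function name and the toy data, including the linear "model bias", are hypothetical.

```python
import numpy as np


def calibrate(real_spectra, real_pristine, synthetic_pristine):
    """Shift measured spectra (one per row) by the bin-wise difference between
    the mean measured and the mean simulated pristine spectra."""
    offset = np.mean(real_pristine, axis=0) - np.mean(synthetic_pristine, axis=0)
    return np.asarray(real_spectra, dtype=float) - offset


# Hypothetical data: real pristine spectra deviate from the simulation by a bin-dependent bias.
rng = np.random.default_rng(0)
synthetic_pristine = rng.normal(size=(8, 801))
real_pristine = rng.normal(size=(6, 801)) + np.linspace(-1.0, 1.0, 801)
calibrated = calibrate(real_pristine, real_pristine, synthetic_pristine)
```

After calibration, the mean measured and the mean simulated pristine spectra coincide bin by bin; damaged-state measurements are shifted by the same offset, so their deviations from the pristine state are preserved.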

5. Discussion

5.1. Evaluation Experiments

In the present research, great care was taken to select an appropriate cross-validation setup for each task individually, as this crucial step is overlooked in many studies. As a result, each experimental setup with cross-validation produced consistent results when repeated. Interestingly, the ranking of the models was preserved between synthetic and real-world data for both tasks. This demonstrates that the synthetic data, in combination with the experimental setup, were sufficiently accurate for developing methods for a specific task and for determining their relative performance in real-world settings.

5.2. Preprocessing

Although exclusively synthetic data were used to set up the complete preprocessing pipeline and the ML models for evaluation, it is important to note that real-world data were still needed for calibration. In practice, however, this was not a significant issue, as the calibration only required pristine baseline samples, which can easily be collected during the installation of the sensor network and the corresponding data acquisition system. Nevertheless, we recognize the value of further investigating more advanced preprocessing methods that could eliminate the need for calibration altogether.

5.3. Data

Operating mechanical structures are subject to numerous disturbing influences on EMI measurements, which challenge the robustness of damage evaluation methods. The present research does not reflect all of these. Among them, measurement disturbances caused by ambient structural vibrations should be highlighted, as the proposed two-step evaluation approach uses comparatively low frequencies, and recent studies clearly show the relevance of such disturbances [38,46].

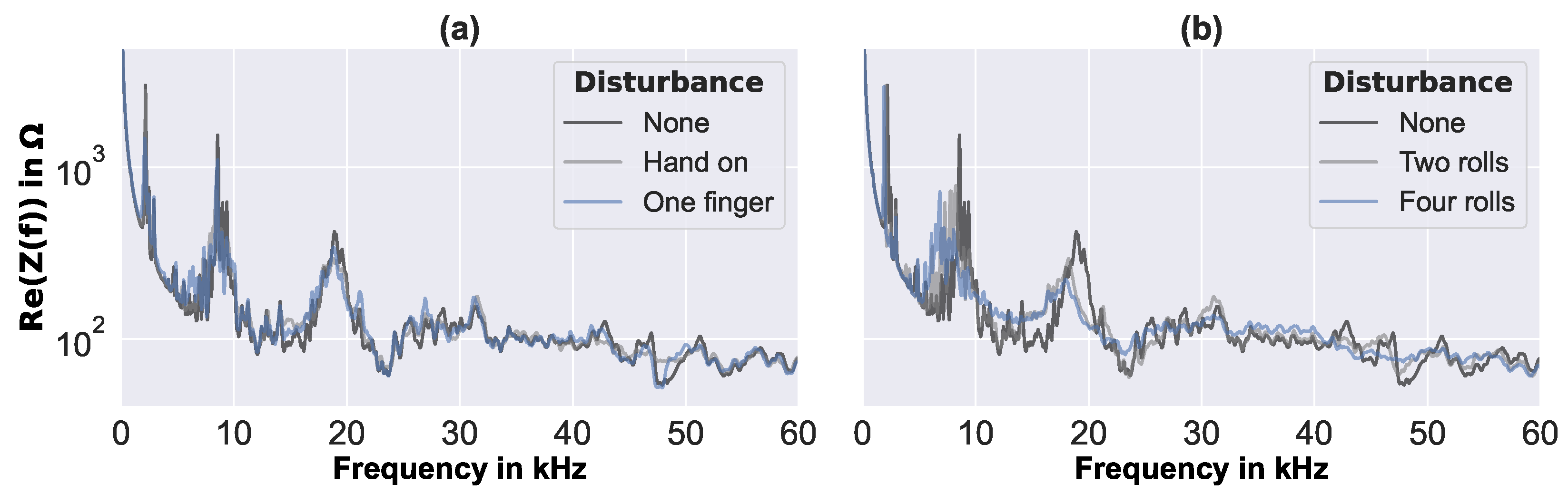

Thus, future research will address the approach's robustness against ambient structural vibrations. Temperature and moisture, two further frequently reported influences on EMI measurements, were not directly addressed, as they are not expected to significantly affect aluminum sandwich panels. However, other important lightweight design materials, e.g., fiber-reinforced polymer composites, are well known to be sensitive to temperature and moisture. The authors expect that an FE parameter study that also considers the density change of the face layers will reflect all parameters affected by temperature and moisture, and will thus also allow damage evaluation in composite materials. This expectation is supported by the fact that the real-world EMI data used for model testing already include measurements of unknown elongated debondings and artificial disturbances, which may have effects similar to those of temperature and moisture on composite properties. However, extended studies should also include composite materials and examine other, non-idealized types of damage, such as delaminations and cracks. The proposed physics-based two-step approach is expected to be applicable to all these cases, but may require FE models so detailed that they become computationally inefficient.

The debonding considered in the FE model is a strong idealization, and the results of the real-world measurements may also be interpreted as the identification of a hole in the back side of the sandwich panel. However, unpublished simulations with the same FE model, but including the remaining core and back-side face layer, show very similar EMI spectrum characteristics. This is also supported by previous results of the authors [9], who found a clear correlation between the resonances in the EMI spectra and the debonded face layer area. Furthermore, damage distant from the sensor is expected to be very challenging for the proposed approach, due to the strongly increased parameter space. Nevertheless, today's computational power and the fusion of data from a sensor array are considered promising for solving this issue.

It is noteworthy that, while the real-world data used in this study provided valuable insights, the sample size was limited; therefore, the conclusions drawn from it should be considered within the scope of this limitation. Further research, with a larger and more diverse set of real-world data, is necessary, to fully validate the results and conclusions of this study.

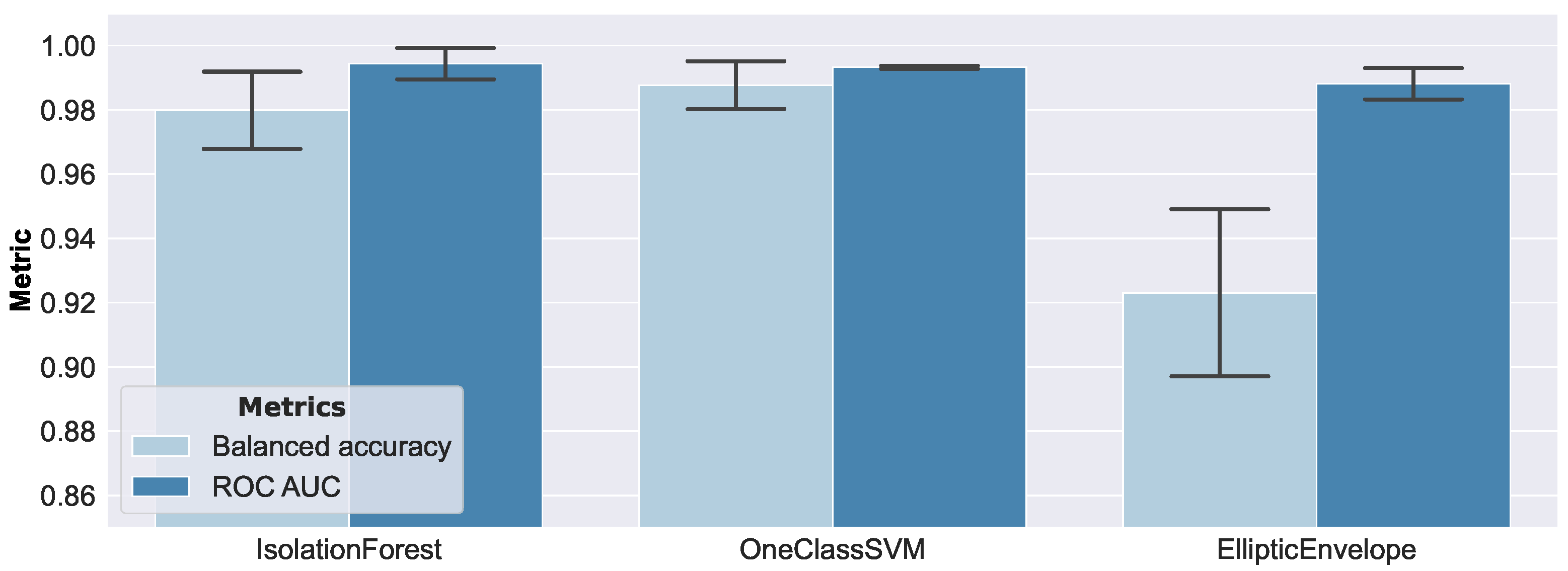

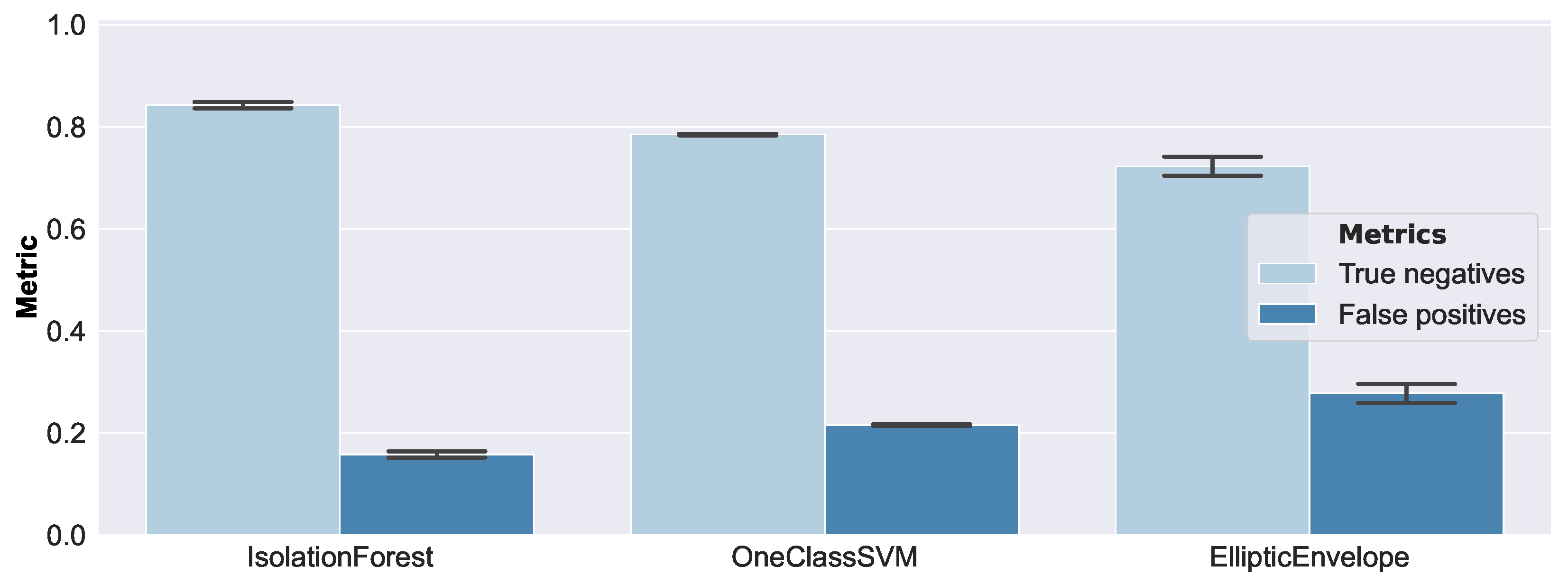

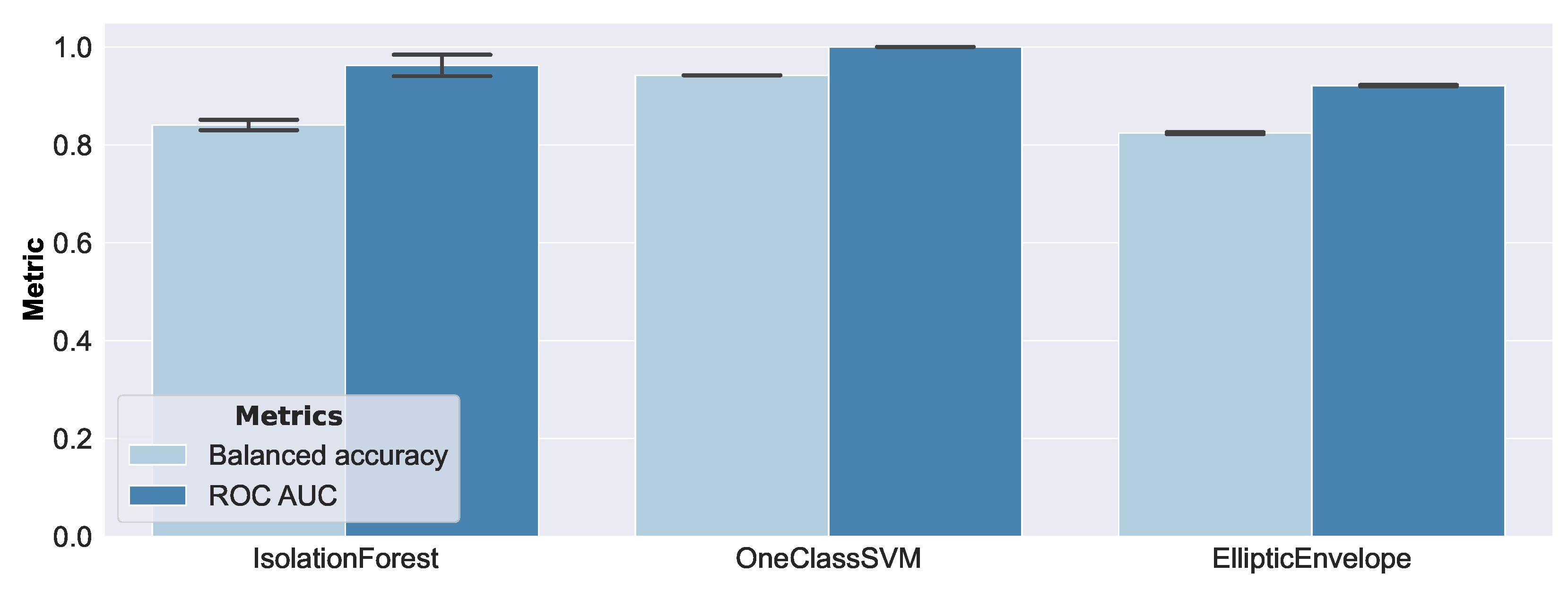

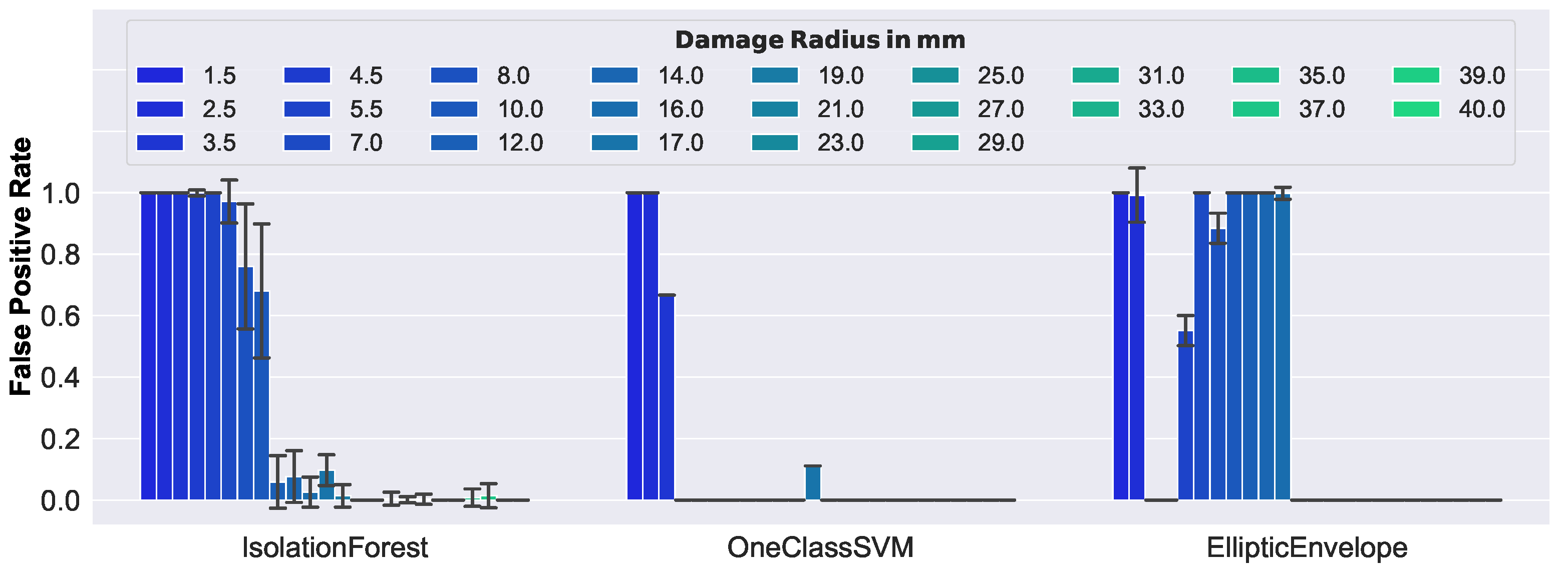

5.4. Debonding Detection by Anomaly Detection

Depending on the material properties, small damage may only be detectable if a relatively high rate of false positives (i.e., normal examples classified as anomalous) is accepted. However, by monitoring over a longer period of time and evaluating summary statistics, satisfactory results may still be obtained. For the real-world specimen used in this study, it might not be considered practical to investigate debonding sizes smaller than the honeycomb core cells. However, the ability of the proposed method to identify even smaller damage could be useful in certain applications, such as predictive maintenance. Such applications may also benefit from the short data evaluation times of the considered ML models, which are in the magnitude of (for damage detection) and (for damage size estimation) of the duration of a real-world EMI measurement.

Overall, the results are promising, and provide valuable insights into the use of anomaly detection models for identifying damage in sandwich panels.
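The detection step can be sketched with scikit-learn's `OneClassSVM`, the model type named in the Conclusions, trained on features of pristine spectra only. Feature standardization, the RBF kernel, `gamma="scale"`, `nu=0.05`, the function names, and the toy data are assumptions for illustration, not the settings used in this study.

```python
import numpy as np
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import OneClassSVM


def fit_detector(pristine_features, nu=0.05):
    """Fit a One-Class SVM on feature vectors of pristine (undamaged) spectra only."""
    model = make_pipeline(StandardScaler(), OneClassSVM(kernel="rbf", gamma="scale", nu=nu))
    return model.fit(pristine_features)


def is_damaged(detector, features):
    """OneClassSVM.predict returns +1 for inliers and -1 for outliers;
    outliers are flagged as (potentially) damaged."""
    return detector.predict(features) == -1


# Hypothetical 31-dimensional features: pristine training data and clearly shifted "damaged" data.
rng = np.random.default_rng(0)
pristine = rng.normal(0.0, 1.0, (200, 31))
damaged = rng.normal(6.0, 1.0, (20, 31))
detector = fit_detector(pristine)
flags = is_damaged(detector, damaged)
```

The parameter `nu` is an upper bound on the fraction of training samples treated as outliers, so it directly controls the trade-off between false alarms on pristine data and sensitivity to small debondings discussed above.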

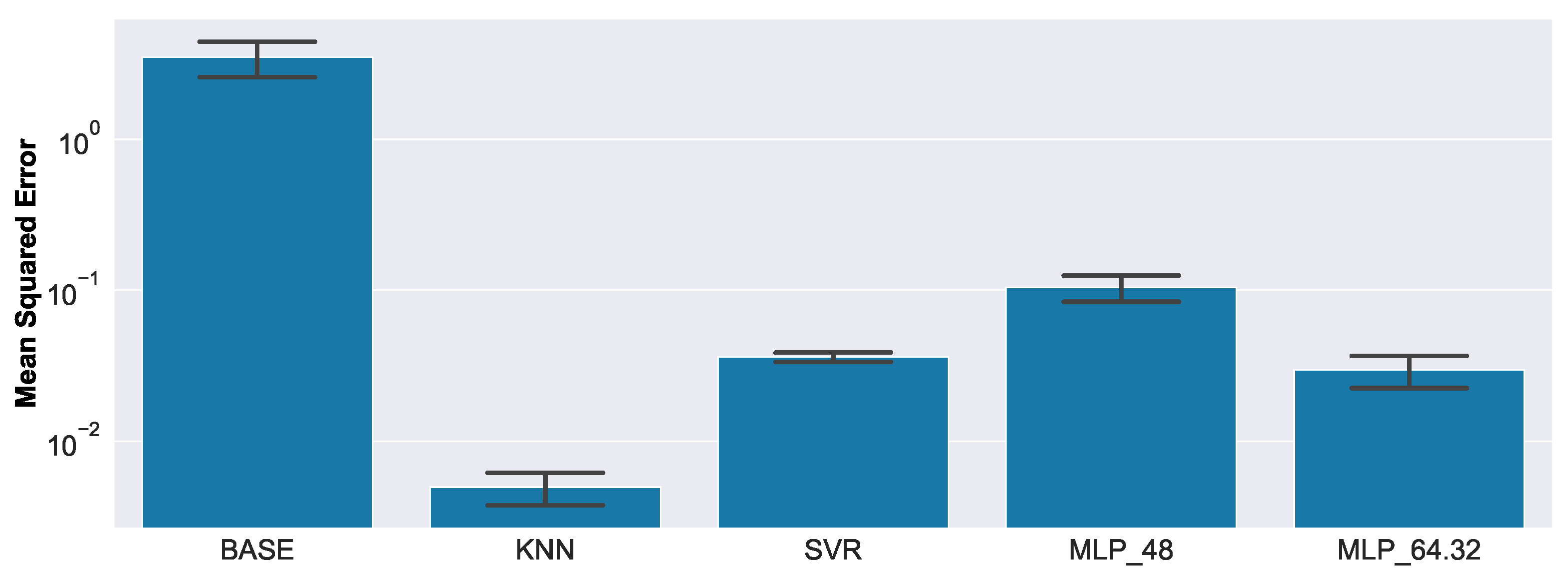

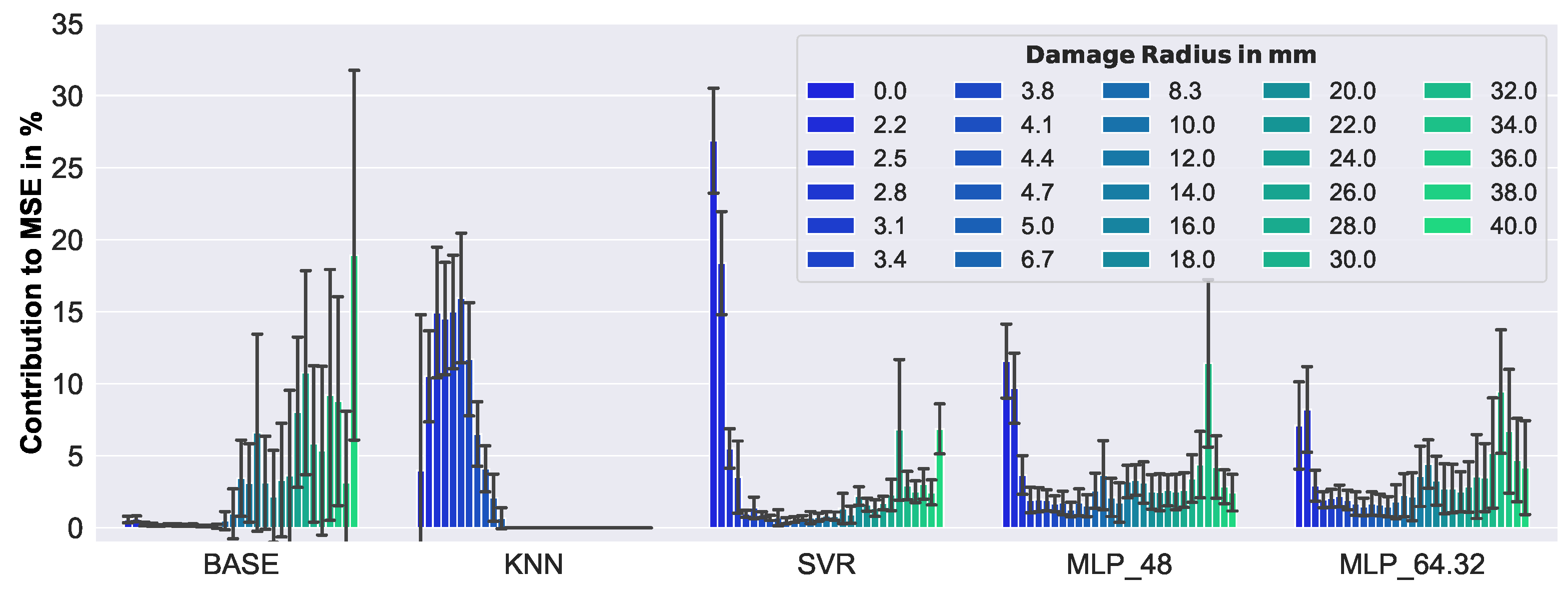

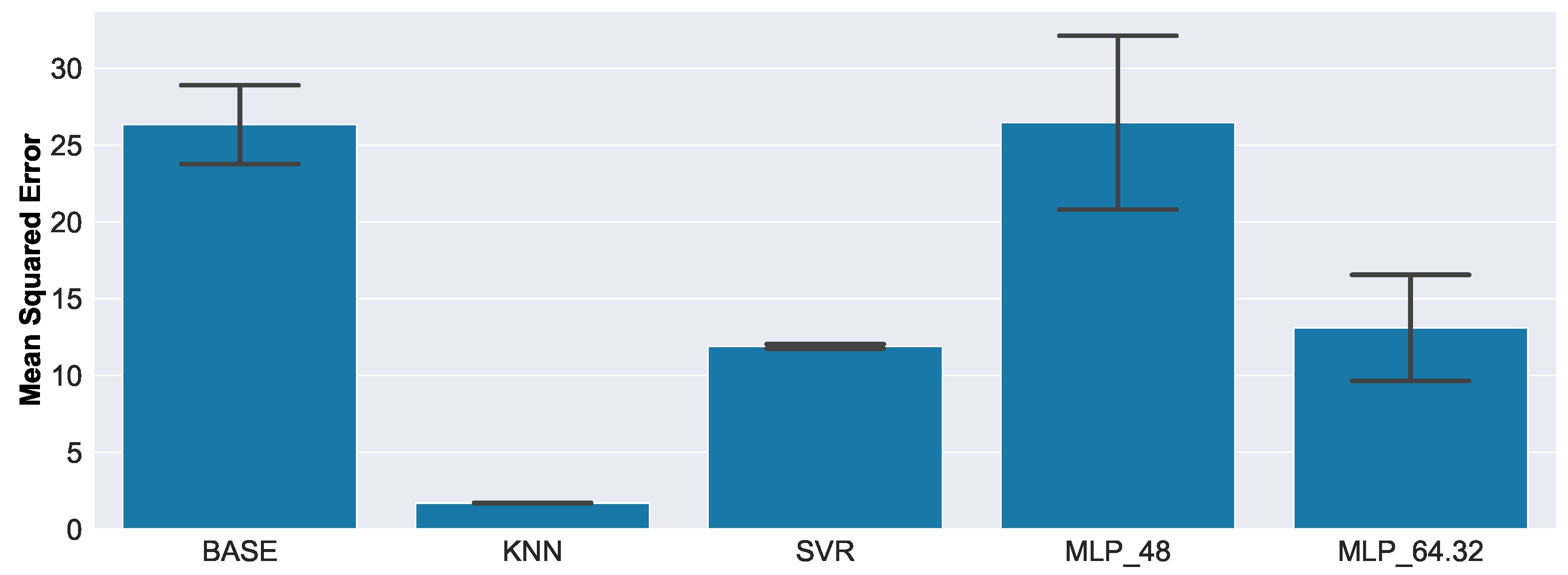

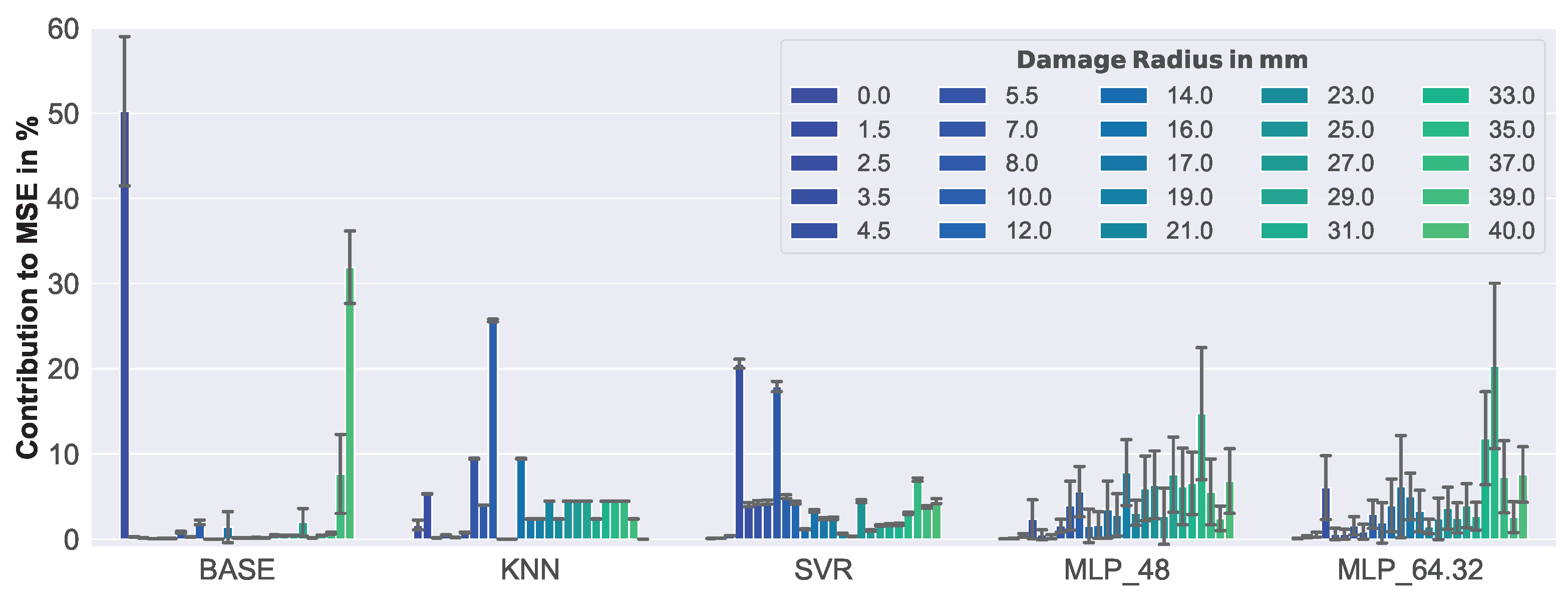

5.5. Debonding Size Estimation by Regression

One of the main disadvantages of the KNN approach is that it requires a traversal of the entire training data set during inference; the running time of a (brute-force) KNN search is therefore directly proportional to the number of examples in the data set, so the larger the data set, the more time-consuming the inference. This can lead to increased computational time and memory usage, particularly for large data sets. Where real-time or near-real-time predictions are needed, the KNN approach may therefore not be the most efficient choice. If computational efficiency is less important, the KNN results may be further improved by increasing K, the number of considered nearest neighbors (NNs). However, as the NNs mostly correspond to the same target value, little improvement is expected. The ML models considered in the present research showed short data evaluation times in the magnitude of of the duration of a real-world EMI measurement, and thus were hardly constrained in their possible applications. This efficiency may even call into question the need for the preceding anomaly detection step. However, anomaly detection is (i) believed to be more robust in evaluating data that strongly deviate from the training data, and (ii) 100 times faster, which appears meaningful for applications in which predominantly normal data (data from the pristine structure) are evaluated.
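The size estimation step can be sketched with scikit-learn's `KNeighborsRegressor`. Setting `algorithm="brute"` reproduces the exhaustive traversal of the training set discussed above, and `n_neighbors` corresponds to K; the value of K, the function name, and the toy data are assumptions.

```python
import numpy as np
from sklearn.neighbors import KNeighborsRegressor


def fit_size_estimator(features, radii_mm, k=3):
    """KNN regression from feature vectors to debonding radius (mm);
    algorithm="brute" compares each query with every training example."""
    return KNeighborsRegressor(n_neighbors=k, algorithm="brute").fit(features, radii_mm)


# Hypothetical training set: features that vary systematically with the radius.
rng = np.random.default_rng(0)
radii = np.repeat([2.5, 5.0, 10.0, 15.0, 20.0], 10)
X = radii[:, None] * np.ones((1, 31)) + 0.1 * rng.standard_normal((50, 31))
model = fit_size_estimator(X, radii)
estimate = model.predict(10.0 * np.ones((1, 31)) + 0.1 * rng.standard_normal((1, 31)))
```

Tree-based neighbor searches (`algorithm="kd_tree"` or `"ball_tree"`) can reduce the query cost for low-dimensional features, although their advantage tends to shrink as the feature dimension grows.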

6. Conclusions

The presented results clearly demonstrate the applicability of the proposed two-step physics- and ML-based EMI spectra evaluation approach for sandwich debonding detection and size estimation. The approach is capable of evaluating measured real-world EMI spectra for debonding detection (step one) and debonding size estimation (step two) exclusively on the basis of synthetic, FE-simulated EMI spectra. In addition, the feature engineering and the development of the evaluation models are conducted exclusively on the basis of synthetic, FE-simulated EMI spectra. Therefore, the approach is very economical compared to training with measured real-world data. Once trained, the ML models applied for damage evaluation are approximately -times (for damage detection) and -times (for damage size estimation) faster than the EMI measurement itself. This also enables quasi-real-time applications and edge computing on small, lightweight local processing units.

The best damage detection performance on real-world EMI data was achieved by combining the developed data preprocessing with a One-Class SVM anomaly detection model. Misclassifications occurred only for radial debonding sizes below 2.5 mm, i.e., for debondings smaller than the honeycomb core's cell size. The best damage size estimation performance on real-world EMI data (1.7 mm MSE and 0.01 mm standard deviation) was achieved by combining the developed data preprocessing with a KNN regression model, which clearly outperformed a previous method used as a benchmark. Both the damage detection and the size estimation models were cross-validated and showed highly consistent results. Furthermore, the physics-based two-step approach demonstrated robustness against unknown artificial disturbances applied during real-world data acquisition. Data preprocessing was identified as the key factor in the performance of the proposed two-step evaluation approach; after comprehensive data exploration, a frequency range between 1.3 kHz and 24 kHz was found to be sufficient. This strongly reduced the ML model complexity and allowed for a less finely discretized FE model, leading to a significant reduction in the simulation costs of the synthetic data.

However, applying synthetic data-based features to real-world EMI measurement data still requires calibration to baseline measurements, to overcome the strong simplifications of the FE model used; this drawback shall be addressed in future research. Future research must also investigate the applicability of the proposed approach to other important materials (e.g., composites) and their specific damage types, as well as damage assessment with more distant sensors and the extension of the approach to data fusion across a sensor network.

The present work is also intended to promote future research in the field, by providing the complete EMI spectra data and the full code for data exploration, data preprocessing, and data evaluation (access see: Data Availability Statement).