Multi-Stage Network for Event-Based Video Deblurring with Residual Hint Attention

Abstract

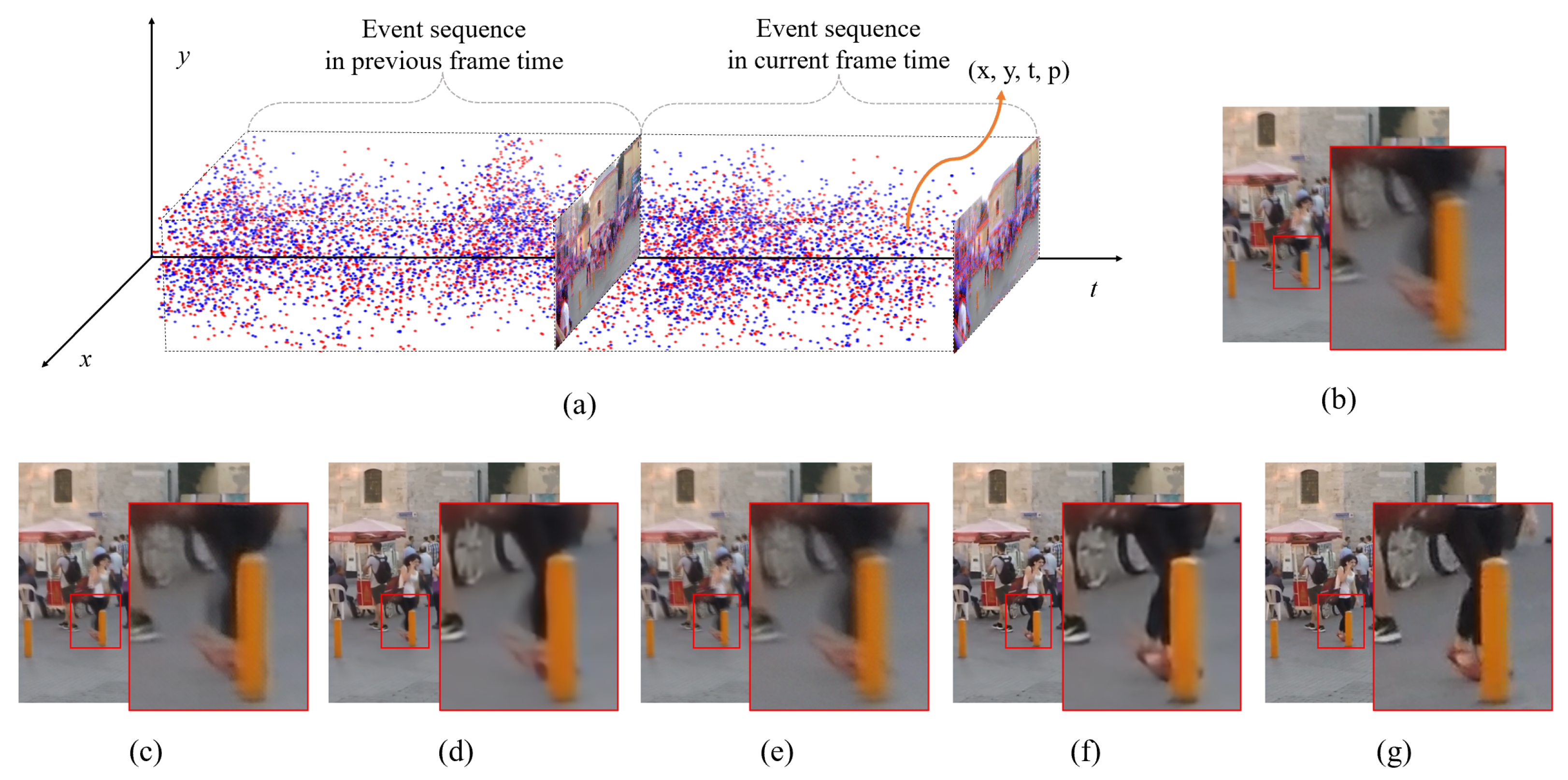

1. Introduction

- In contrast to existing methods that use a single-stage deep learning network, a multi-stage event-based video deblurring network (MEVDNet) is proposed. The first stage estimates a coarse output through event-based video deblurring, and the second stage refines that coarse output using all the available frame information.

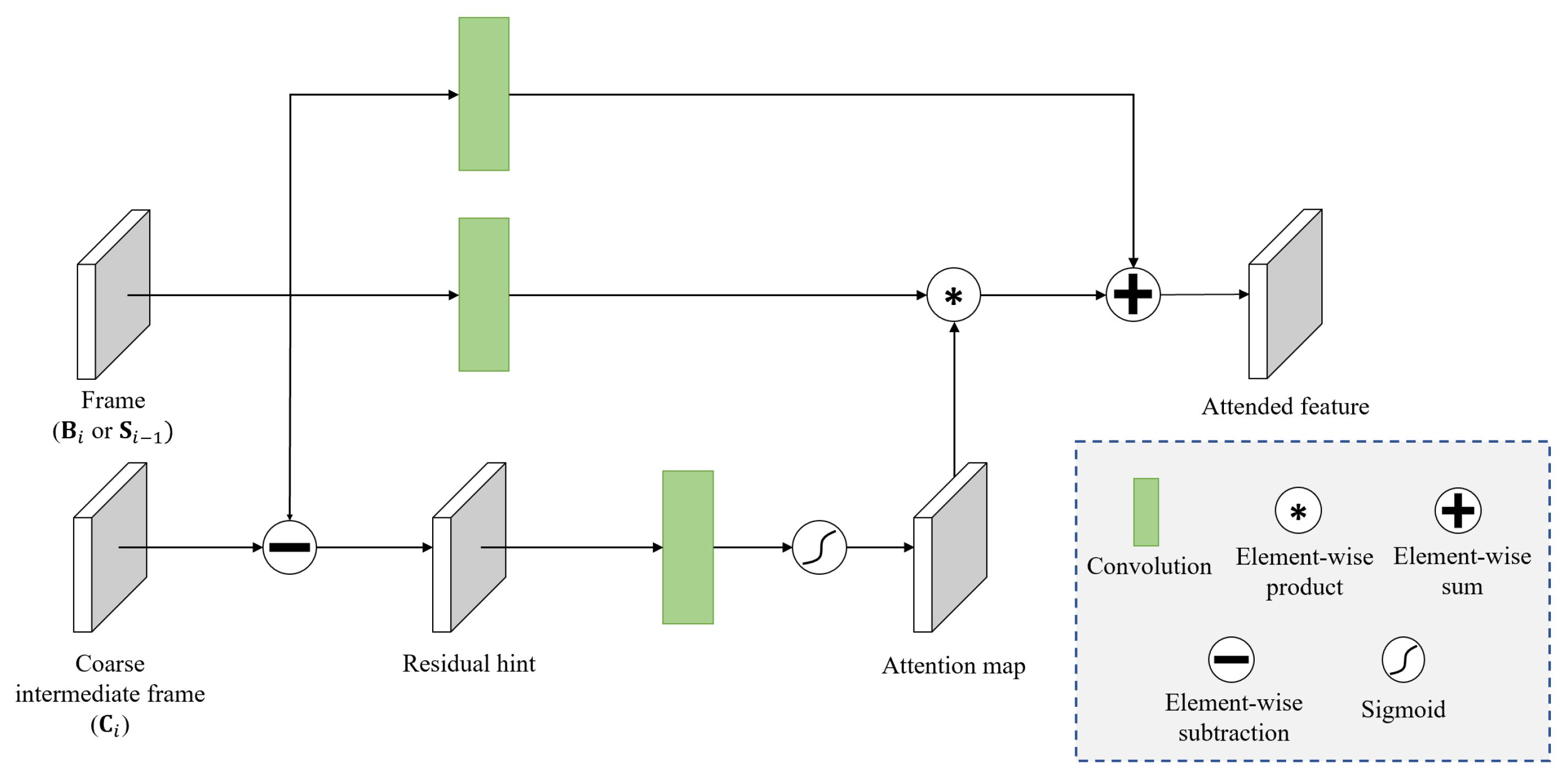

- To connect the first and second stages, a residual hint attention (RHA) module is also proposed. It extracts attention information that is useful for the refinement from all the available frame information.
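The data flow described in the two contributions above can be sketched as follows. This is only an illustration of how the pieces connect, not the authors' architecture: the function names, the flattened-list `Frame` type, and the three `toy_*` stand-ins are all hypothetical placeholders for the real networks, whose internals are not described in this section.

```python
from typing import Callable, List, Sequence

Frame = List[float]  # flattened grayscale frame; a simplification for this sketch


def two_stage_deblur(
    blurry: Frame,
    events: Frame,
    neighbors: Sequence[Frame],
    coarse_net: Callable[[Frame, Frame], Frame],
    hint_attention: Callable[[Frame, Sequence[Frame]], Frame],
    refine_net: Callable[[Frame, Sequence[Frame], Frame], Frame],
) -> Frame:
    """Compose stage 1, the attention module, and stage 2 (hypothetical interfaces)."""
    frames = [blurry, *neighbors]                 # all available frame information
    coarse = coarse_net(blurry, events)           # stage 1: event-based coarse output
    attention = hint_attention(coarse, frames)    # attention connecting the stages
    return refine_net(coarse, frames, attention)  # stage 2: refinement


def _mean_frame(frames: Sequence[Frame]) -> Frame:
    # Per-pixel average over the given frames.
    return [sum(px) / len(frames) for px in zip(*frames)]


# Toy stand-ins (assumptions for illustration only, not the paper's networks).
def toy_coarse(blurry: Frame, events: Frame) -> Frame:
    return [b + e for b, e in zip(blurry, events)]


def toy_attention(coarse: Frame, frames: Sequence[Frame]) -> Frame:
    mean = _mean_frame(frames)
    # Weight is 1 where the coarse output agrees with the frames, lower elsewhere.
    return [1.0 / (1.0 + abs(c - m)) for c, m in zip(coarse, mean)]


def toy_refine(coarse: Frame, frames: Sequence[Frame], attention: Frame) -> Frame:
    mean = _mean_frame(frames)
    # Pull low-attention pixels toward the frame average.
    return [c + (1.0 - a) * (m - c) for c, m, a in zip(coarse, mean, attention)]


restored = two_stage_deblur(
    [0.5, 0.5], [0.1, -0.1], [[0.6, 0.4]], toy_coarse, toy_attention, toy_refine
)
```

Passing the three components as callables keeps the composition separate from any particular network design, so each stand-in could be swapped for a trained model without changing `two_stage_deblur`.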

2. Related Work

2.1. Frame-Based Video Deblurring

2.2. Event-Based Video Deblurring

3. Method

3.1. Stage 1: Coarse Network

3.2. Residual Hint Attention (RHA)

3.3. Stage 2: Refinement Network

3.4. Loss Function

4. Experiments and Results

4.1. Experiment Settings

4.2. Qualitative Results

4.3. Quantitative Results

4.4. Effect of the Attention Module

4.5. Effect of the Two Stages

4.6. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Su, S.; Delbracio, M.; Wang, J.; Sapiro, G.; Heidrich, W.; Wang, O. Deep video deblurring for hand-held cameras. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1279–1288.

2. Kim, T.H.; Lee, K.M.; Scholkopf, B.; Hirsch, M. Online video deblurring via dynamic temporal blending network. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4038–4047.

3. Kim, T.H.; Sajjadi, M.S.; Hirsch, M.; Scholkopf, B. Spatio-temporal transformer network for video restoration. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 106–122.

4. Shen, Z.; Wang, W.; Lu, X.; Shen, J.; Ling, H.; Xu, T.; Shao, L. Human-aware motion deblurring. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5572–5581.

5. Zhou, S.; Zhang, J.; Pan, J.; Xie, H.; Zuo, W.; Ren, J. Spatio-temporal filter adaptive network for video deblurring. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 2482–2491.

6. Nah, S.; Son, S.; Lee, K.M. Recurrent neural networks with intra-frame iterations for video deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8102–8111.

7. Zhang, X.; Jiang, R.; Wang, T.; Wang, J. Recursive neural network for video deblurring. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 3025–3036.

8. Pan, J.; Bai, H.; Tang, J. Cascaded deep video deblurring using temporal sharpness prior. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3043–3051.

9. Wu, J.; Yu, X.; Liu, D.; Chandraker, M.; Wang, Z. DAVID: Dual-attentional video deblurring. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 2376–2385.

10. Zhong, Z.; Gao, Y.; Zheng, Y.; Zheng, B. Efficient spatio-temporal recurrent neural network for video deblurring. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 191–207.

11. Ji, B.; Yao, A. Multi-scale memory-based video deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 1919–1928.

12. Lin, S.; Zhang, J.; Pan, J.; Jiang, Z.; Zou, D.; Wang, Y.; Chen, J.; Ren, J. Learning event-driven video deblurring and interpolation. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 695–710.

13. Shang, W.; Ren, D.; Zou, D.; Ren, J.S.; Luo, P.; Zuo, W. Bringing events into video deblurring with non-consecutively blurry frames. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 4531–4540.

14. Lichtsteiner, P.; Posch, C.; Delbruck, T. A 128 × 128 120 dB 15 μs latency asynchronous temporal contrast vision sensor. IEEE J. Solid-State Circuits 2008, 43, 566–576.

15. Brandli, C.; Berner, R.; Yang, M.; Liu, S.C.; Delbruck, T. A 240 × 180 130 dB 3 μs latency global shutter spatiotemporal vision sensor. IEEE J. Solid-State Circuits 2014, 49, 2333–2341.

16. Wang, L.; Kim, T.K.; Yoon, K.J. EventSR: From asynchronous events to image reconstruction, restoration, and super-resolution via end-to-end adversarial learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8315–8325.

17. Ahmed, S.H.; Jang, H.W.; Uddin, S.N.; Jung, Y.J. Deep event stereo leveraged by event-to-image translation. In Proceedings of the AAAI Conference on Artificial Intelligence, Pomona, CA, USA, 24–28 October 2022; Volume 35, pp. 882–890.

18. Tulyakov, S.; Gehrig, D.; Georgoulis, S.; Erbach, J.; Gehrig, M.; Li, Y.; Scaramuzza, D. Time lens: Event-based video frame interpolation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 16155–16164.

19. Uddin, S.N.; Ahmed, S.H.; Jung, Y.J. Unsupervised deep event stereo for depth estimation. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 7489–7504.

20. Pan, L.; Scheerlinck, C.; Yu, X.; Hartley, R.; Liu, M.; Dai, Y. Bringing a blurry frame alive at high frame-rate with an event camera. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6820–6829.

21. Rebecq, H.; Ranftl, R.; Koltun, V.; Scaramuzza, D. High speed and high dynamic range video with an event camera. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1964–1980.

22. Nah, S.; Kim, T.H.; Lee, K.M. Deep multi-scale convolutional neural network for dynamic scene deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3883–3891.

23. Stoffregen, T.; Scheerlinck, C.; Scaramuzza, D.; Drummond, T.; Barnes, N.; Kleeman, L.; Mahony, R.E. Reducing the Sim-to-Real Gap for Event Cameras. In Proceedings of the 2020 European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 534–549.

24. Tao, X.; Gao, H.; Shen, X.; Wang, J.; Jia, J. Scale-recurrent network for deep image deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8174–8182.

25. Li, L.; Pan, J.; Lai, W.S.; Gao, C.; Sang, N.; Yang, M.H. Learning a discriminative prior for blind image deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6616–6625.

26. Aittala, M.; Durand, F. Burst image deblurring using permutation invariant convolutional neural networks. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 731–747.

27. Zhang, H.; Dai, Y.; Li, H.; Koniusz, P. Deep stacked hierarchical multi-patch network for image deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5978–5986.

28. Kupyn, O.; Budzan, V.; Mykhailych, M.; Mishkin, D.; Matas, J. DeblurGAN: Blind motion deblurring using conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8183–8192.

29. Kupyn, O.; Martyniuk, T.; Wu, J.; Wang, Z. DeblurGAN-v2: Deblurring (orders-of-magnitude) faster and better. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8878–8887.

30. Zhang, K.; Luo, W.; Zhong, Y.; Ma, L.; Stenger, B.; Liu, W.; Li, H. Deblurring by realistic blurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2737–2746.

31. Truong, N.Q.; Lee, Y.W.; Owais, M.; Nguyen, D.T.; Batchuluun, G.; Pham, T.D.; Park, K.R. SlimDeblurGAN-based motion deblurring and marker detection for autonomous drone landing. Sensors 2020, 20, 3918.

32. Chen, L.; Zhang, J.; Pan, J.; Lin, S.; Fang, F.; Ren, J.S. Learning a non-blind deblurring network for night blurry images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 10542–10550.

33. Dong, J.; Roth, S.; Schiele, B. Learning spatially-variant MAP models for non-blind image deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 4886–4895.

34. Chen, L.; Zhang, J.; Lin, S.; Fang, F.; Ren, J.S. Blind deblurring for saturated images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 6308–6316.

35. Tran, P.; Tran, A.T.; Phung, Q.; Hoai, M. Explore image deblurring via encoded blur kernel space. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 11956–11965.

36. Suin, M.; Rajagopalan, A. Gated spatio-temporal attention-guided video deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7802–7811.

37. Li, D.; Xu, C.; Zhang, K.; Yu, X.; Zhong, Y.; Ren, W.; Suominen, H.; Li, H. ARVo: Learning all-range volumetric correspondence for video deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7721–7731.

38. Wang, J.; Wang, Z.; Yang, A. Iterative dual CNNs for image deblurring. Mathematics 2022, 10, 3891.

39. Xu, F.; Yu, L.; Wang, B.; Yang, W.; Xia, G.S.; Jia, X.; Qiao, Z.; Liu, J. Motion deblurring with real events. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 2583–2592.

40. Sun, L.; Sakaridis, C.; Liang, J.; Jiang, Q.; Yang, K.; Sun, P.; Ye, Y.; Wang, K.; Gool, L.V. Event-based fusion for motion deblurring with cross-modal attention. In Proceedings of the Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; pp. 412–428.

41. Li, J.; Gong, W.; Li, W. Combining motion compensation with spatiotemporal constraint for video deblurring. Sensors 2018, 18, 1774.

42. Jia, X.; De Brabandere, B.; Tuytelaars, T.; Gool, L.V. Dynamic filter networks. Adv. Neural Inf. Process. Syst. 2016, 29, 667–675.

43. Niklaus, S.; Mai, L.; Liu, F. Video frame interpolation via adaptive convolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 670–679.

44. Mildenhall, B.; Barron, J.T.; Chen, J.; Sharlet, D.; Ng, R.; Carroll, R. Burst denoising with kernel prediction networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2502–2510.

45. Wang, X.; Yu, K.; Dong, C.; Loy, C.C. Recovering realistic texture in image super-resolution by deep spatial feature transform. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 606–615.

46. Wang, L.; Ho, Y.S.; Yoon, K.J. Event-based high dynamic range image and very high frame rate video generation using conditional generative adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 10081–10090.

47. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

48. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 3–19.

49. Uddin, S.N.; Jung, Y.J. Global and local attention-based free-form image inpainting. Sensors 2020, 20, 3204.

50. Yoon, H.; Uddin, S.N.; Jung, Y.J. Multi-scale attention-guided non-local network for HDR image reconstruction. Sensors 2022, 22, 7044.

51. Rebecq, H.; Gehrig, D.; Scaramuzza, D. ESIM: An open event camera simulator. In Proceedings of the Conference on Robot Learning. PMLR, Zürich, Switzerland, 29–31 October 2018; pp. 969–982.

52. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 2019, 32, 8024–8035.

53. Shi, X.; Chen, Z.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.C. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. Adv. Neural Inf. Process. Syst. 2015, 28, 802–810.

54. Mehri, A.; Ardakani, P.B.; Sappa, A.D. MPRNet: Multi-path residual network for lightweight image super resolution. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 2704–2713.

| Category | Method | Description |
|---|---|---|
| Frame-based video deblurring | STFAN [5] | Frame-based deblurring method that performs the alignment using a kernel prediction network. |
| | CDVD-TSP [8] | Frame-based deblurring method that utilizes the adjacent frames aligned with the optical flow in an image sequence. |
| | ESTRNN [10] | Recurrent neural network (RNN) that performs temporal feature fusion. |
| Event-based video deblurring | EDI [20] | Handcrafted method using the event-main approach that directly computes the residual frame from event data. |
| | LEDVDI [12] | CNN model of the event-main approach that predicts the residual frame from event data. |
| | D2Net [13] | CNN model of the frame-main approach that uses a frame-based deblurring framework and fuses the image features with event features extracted from event data. |

| Metric | E2VID [21] | STFAN [5] | CDVD-TSP [8] | ESTRNN [10] | LEDVDI [12] | D2Net [13] | MEVDNet |
|---|---|---|---|---|---|---|---|
| PSNR (dB) | 31.06 | 31.60 | 33.76 | 33.51 | 35.14 | 35.69 | 36.66 |
| SSIM | 0.904 | 0.900 | 0.925 | 0.912 | 0.941 | 0.950 | 0.957 |

| Metric | E2VID [21] | STFAN [5] | CDVD-TSP [8] | ESTRNN [10] | LEDVDI [12] | D2Net [13] | MEVDNet |
|---|---|---|---|---|---|---|---|
| PSNR (dB) | 27.54 | 22.48 | 22.39 | 23.23 | 30.77 | 29.33 | 31.01 |
| SSIM | 0.829 | 0.679 | 0.694 | 0.688 | 0.905 | 0.880 | 0.910 |
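PSNR in the tables above is the standard peak signal-to-noise ratio, $10 \log_{10}(\mathrm{MAX}^2 / \mathrm{MSE})$ in dB. As a reminder of what the numbers measure, here is a minimal sketch of the computation. It assumes 8-bit intensities (`max_value=255`) and flattened pixel sequences; the evaluation settings actually used for these tables (value range, color handling) are not stated in this section, and `psnr` is an illustrative helper rather than the authors' evaluation code.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], estimate: Sequence[float],
         max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences."""
    if len(reference) != len(estimate) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy example: every pixel is off by one intensity level, so MSE = 1.
sharp = [0.0, 255.0, 128.0, 64.0]
restored = [1.0, 254.0, 129.0, 63.0]
value_db = psnr(sharp, restored)
```

Because the scale is logarithmic, a gain of about 3 dB corresponds to roughly halving the mean squared error.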

| Metric | No Attention Module | SAM | RHA |
|---|---|---|---|
| PSNR (dB) | 30.65 | 35.14 | 36.66 |
| SSIM | 0.900 | 0.941 | 0.957 |

| Metric | Without Coarse Stage | Without Refinement Stage | Our Model |
|---|---|---|---|
| PSNR (dB) | 30.65 | 35.14 | 36.66 |
| SSIM | 0.900 | 0.941 | 0.957 |
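The ablation gains can be read directly off the two tables above. The snippet below is only arithmetic on the reported values; `gain` is a hypothetical helper name, and the rounding is there to hide floating-point noise.

```python
def gain(full: float, ablated: float, digits: int = 2) -> float:
    """Difference between the full model's score and an ablated variant's score."""
    return round(full - ablated, digits)


# Reported scores of the full model (RHA, both stages).
PSNR_FULL, SSIM_FULL = 36.66, 0.957

# Second column of each ablation table (SAM / without refinement stage).
psnr_gain_mid = gain(PSNR_FULL, 35.14)
ssim_gain_mid = gain(SSIM_FULL, 0.941, digits=3)

# First column of each ablation table (no attention module / without coarse stage).
psnr_gain_low = gain(PSNR_FULL, 30.65)
ssim_gain_low = gain(SSIM_FULL, 0.900, digits=3)
```

With the reported values this gives PSNR gains of 1.52 dB and 6.01 dB, and SSIM gains of 0.016 and 0.057, respectively.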


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, J.; Jung, Y.J. Multi-Stage Network for Event-Based Video Deblurring with Residual Hint Attention. Sensors 2023, 23, 2880. https://doi.org/10.3390/s23062880
