Three-Dimensional Digital Zooming of Integral Imaging under Photon-Starved Conditions

Abstract

1. Introduction

2. Related Work

2.1. Zooming

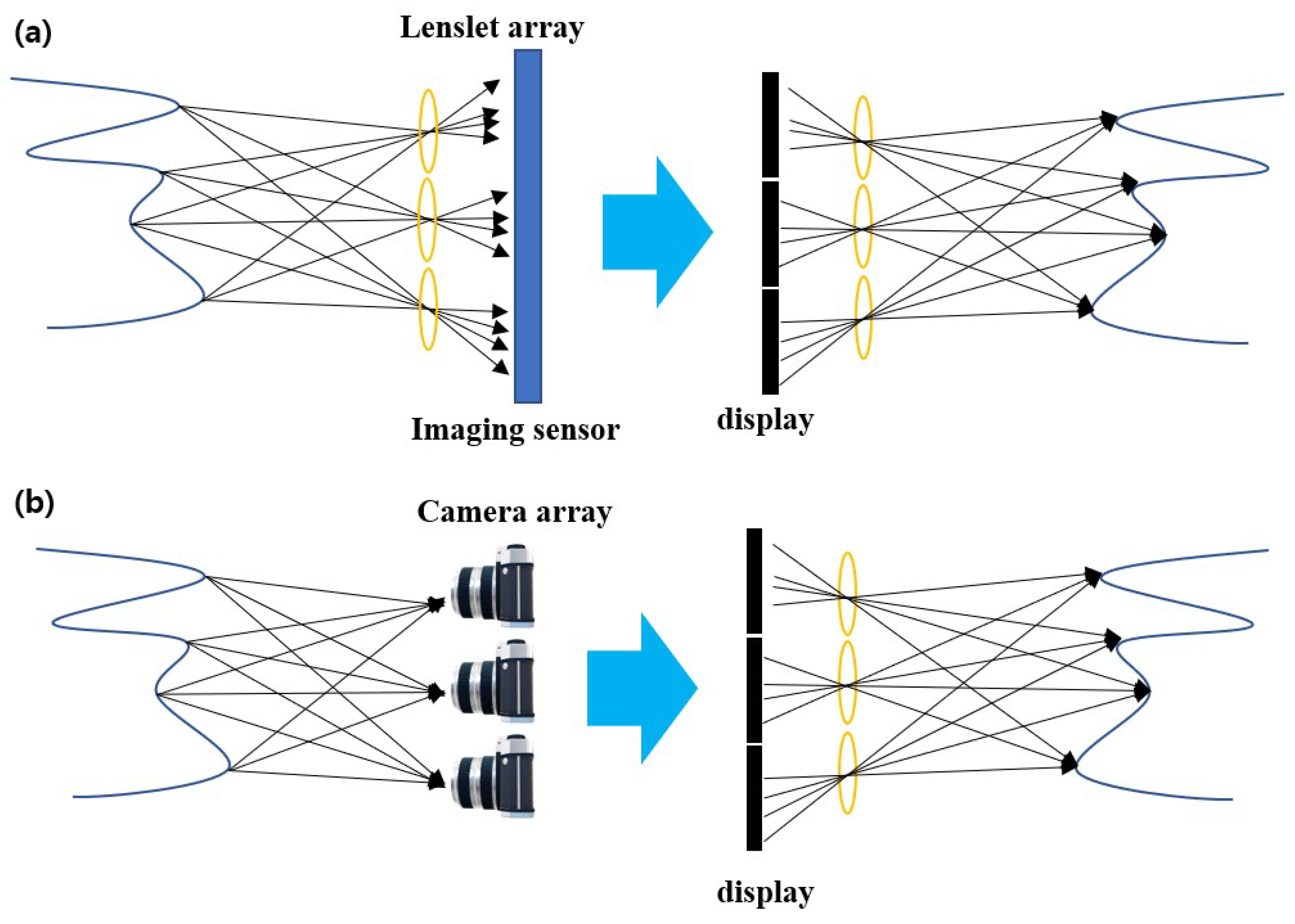

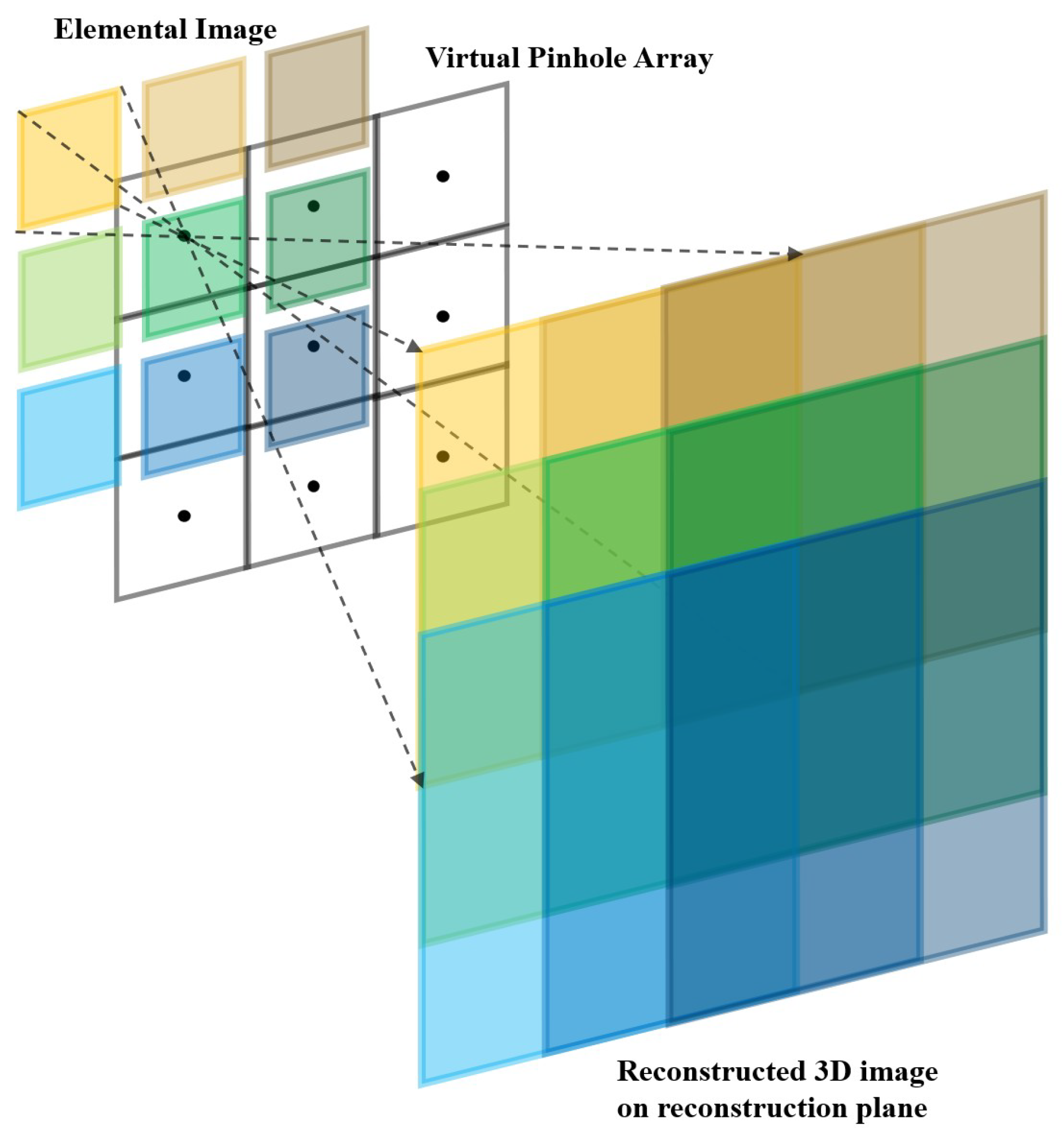

2.2. Integral Imaging

3. Three-Dimensional Visualization of Objects at Long Distances under Photon-Starved Conditions

3.1. Computational Photon Counting Imaging

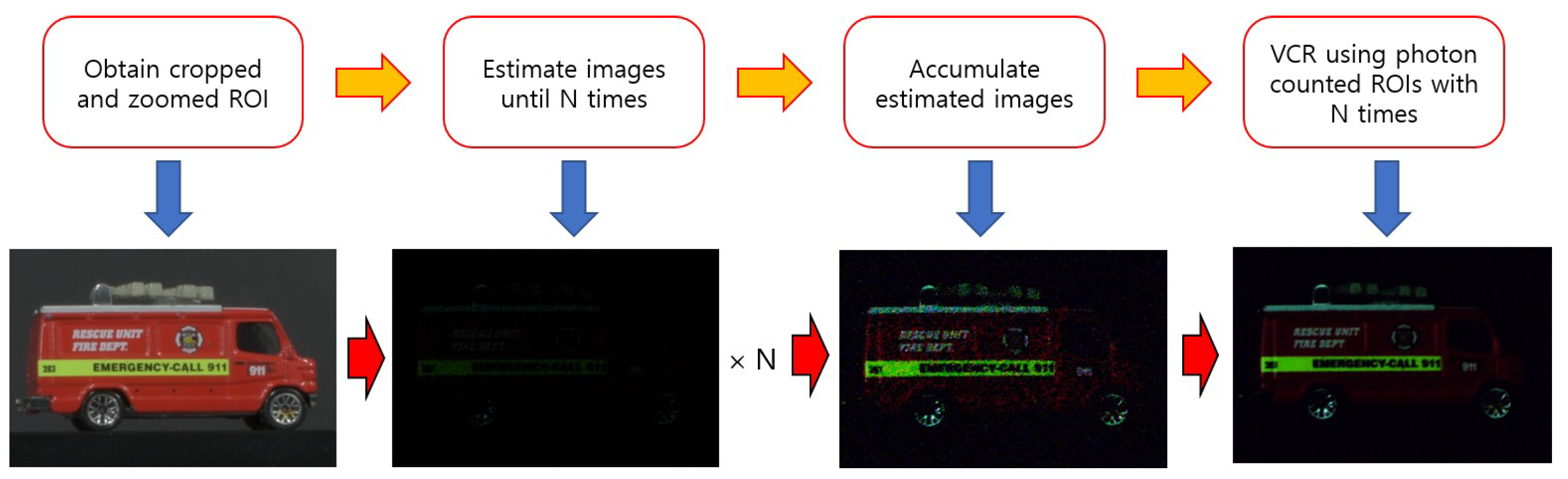

3.2. Three-Dimensional Digital Zooming of Integral Imaging and N Observation Photon Counting Integral Imaging under Photon-Starved Conditions

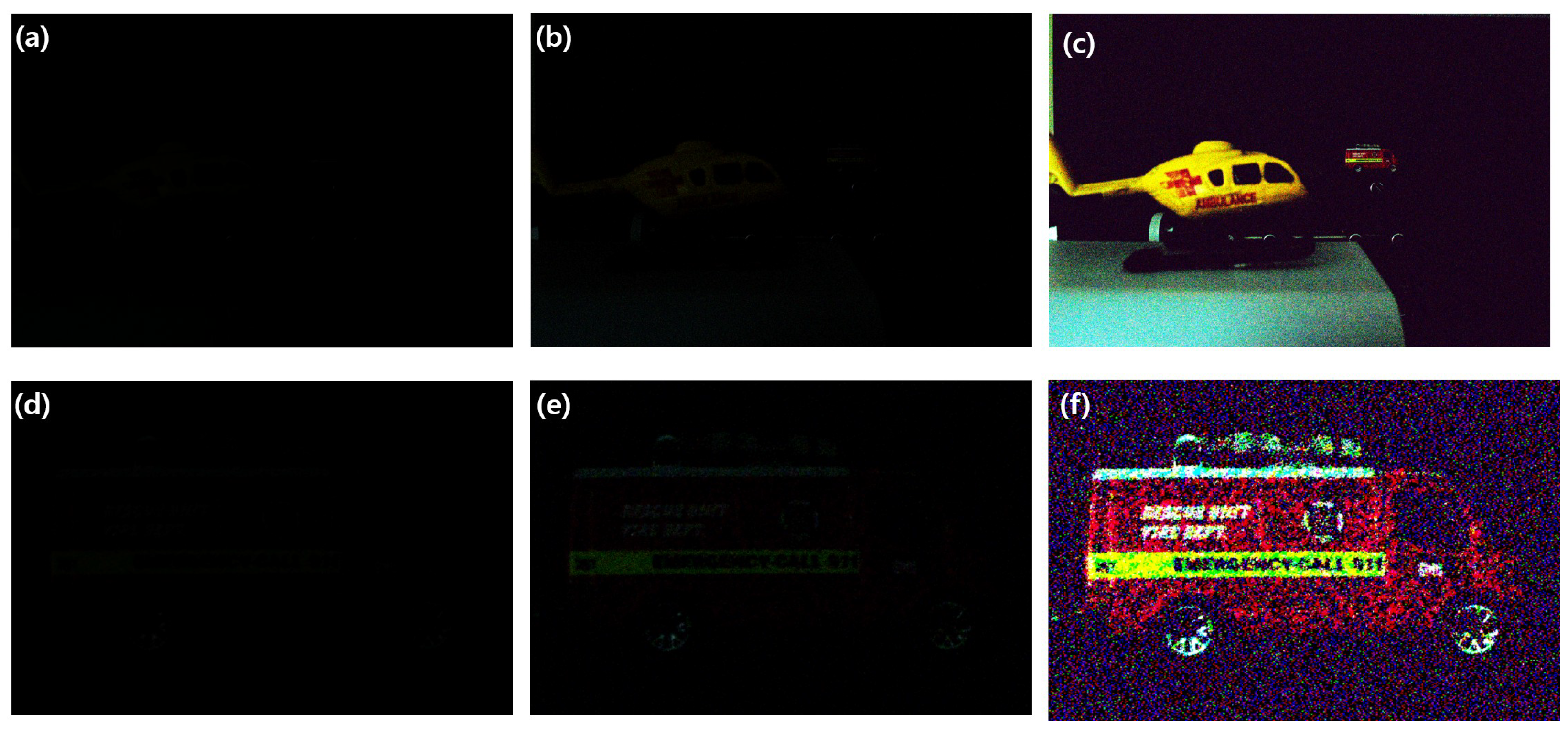

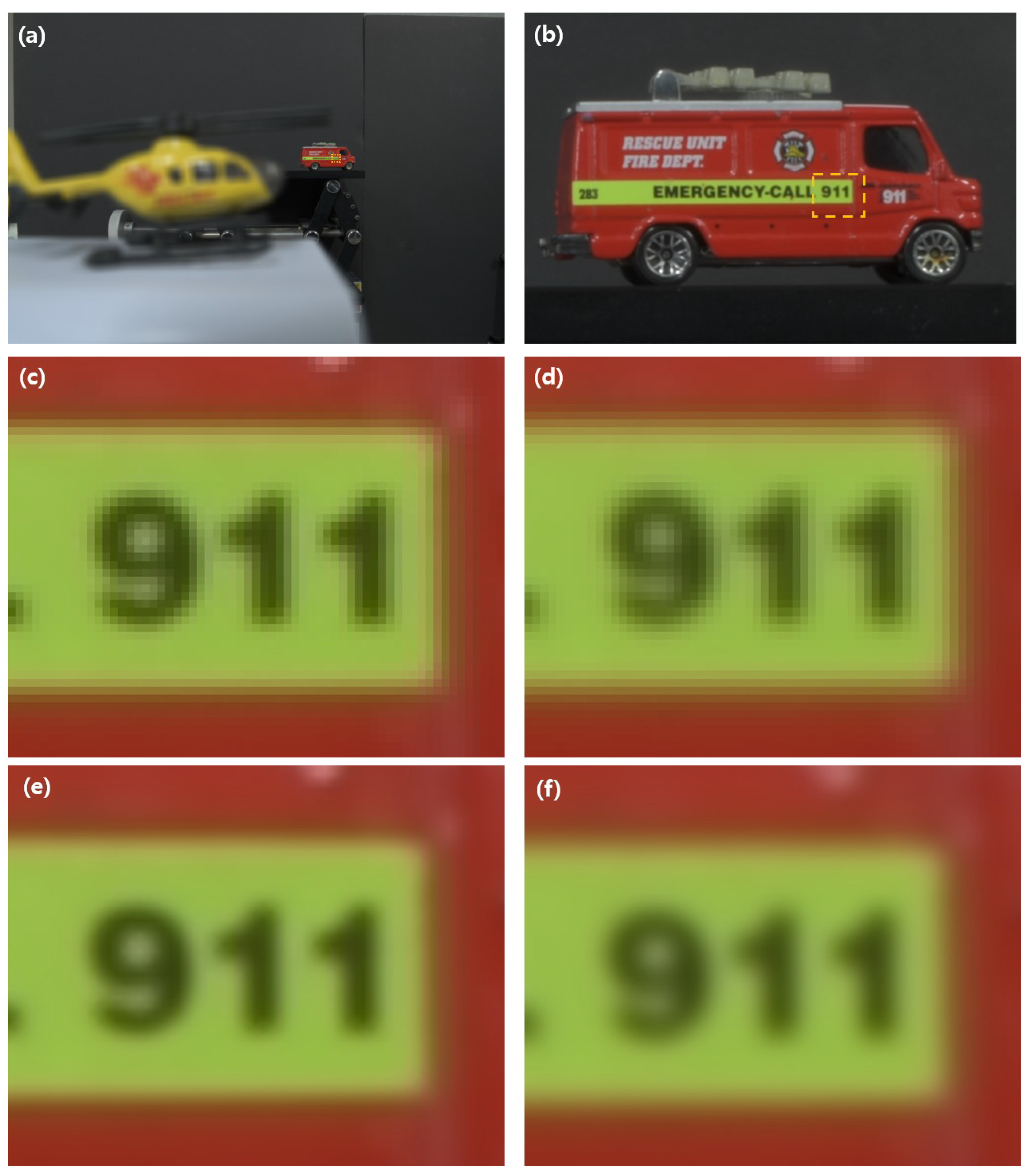

4. Simulation and Experimental Results

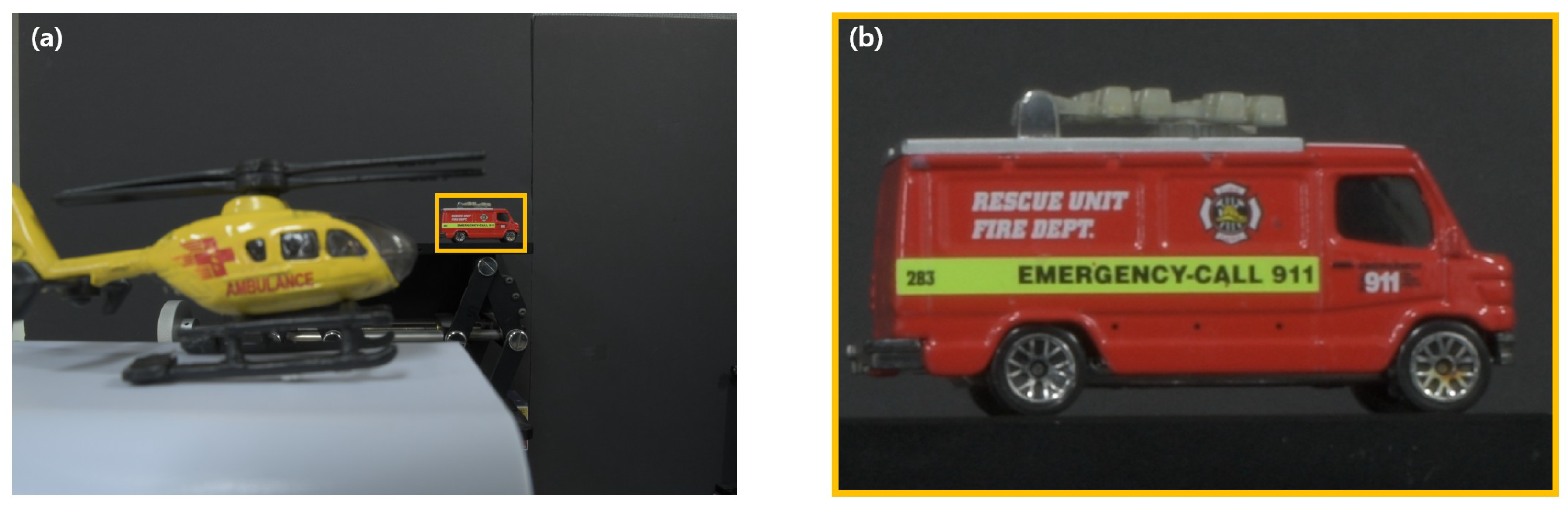

4.1. Simulation and Experimental Setup

4.2. Experimental Result

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 3D | Three-dimensional |
| 2D | Two-dimensional |
| ROI | Region of interest |
| VCR | Volumetric computational reconstruction |
| SAII | Synthetic aperture integral imaging |
| MLE | Maximum likelihood estimation |
| PSR | Peak sidelobe ratio |


| | Nearest | Bilinear | Bicubic |
|---|---|---|---|
| CPU Time (Photon-counting X) | 1.538 s | 1.539 s | 1.563 s |
| PSR (Photon-counting X) | 267.625 | 281.502 | 283.708 |

| Depth | Bicubic | Bilinear | Nearest |
|---|---|---|---|
| 136.36 | 686.4 | 684.88 | 666.03 |
| 141.36 | 694.05 | 688.82 | 657.41 |
| 146.36 | 701.53 | 697.89 | 672.88 |
| 151.36 | 708.06 | 702.32 | 677.2 |
| 156.36 | 706.54 | 703.33 | 670.42 |
| 161.36 | 723.68 | 713.62 | 669.73 |
| 166.36 | 721.34 | 716.03 | 647.86 |
| 171.36 | 723.47 | 713.39 | 532.76 |
| 176.36 | 727.69 | 718.97 | 629.1 |
| 181.36 | 727.29 | 719.26 | 672.78 |
| 186.36 | 727.47 | 718.81 | 705.19 |
| 191.36 | 721.75 | 718.71 | 700.6 |
| 196.36 | 725.19 | 720.57 | 677.61 |
| 201.36 | 725.17 | 718.62 | 684.69 |
| 206.36 | 721.15 | 714.82 | 675.91 |
| 211.36 | 717.69 | 714.12 | 671.25 |
| 216.36 | 715.21 | 712.53 | 681.92 |
| 221.36 | 715.05 | 707.95 | 677.67 |
| 226.36 | 710.48 | 705.72 | 664.27 |

| Depth | Bicubic | Bilinear | Nearest |
|---|---|---|---|
| 37 mm | 60.369 | 55.347 | 56.645 |
| 37.5 mm | 56.623 | 56.79 | 54.915 |
| 38 mm | 57.572 | 54.012 | 55.894 |
| 38.5 mm | 58.496 | 54.822 | 57.674 |
| 39 mm | 59.836 | 57.243 | 57.977 |
| 39.5 mm | 60.894 | 55.426 | 55.376 |
| 40 mm | 55.991 | 54.728 | 55.693 |
| 40.5 mm | 57.311 | 54.661 | 57.142 |
| 41 mm | 58.025 | 58.222 | 60.222 |
| 41.5 mm | 57.470 | 57.132 | 56.611 |
| 42 mm | 57.428 | 57.582 | 53.567 |
| 42.5 mm | 55.767 | 58.363 | 54.942 |
| 43 mm | 57.045 | 60.997 | 54.663 |
| 43.5 mm | 58.734 | 56.593 | 57.633 |
| 44 mm | 58.733 | 56.037 | 56.383 |
| 44.5 mm | 58.865 | 58.117 | 53.274 |
| 45 mm | 57.778 | 58.805 | 53.966 |
| 45.5 mm | 58.506 | 58.003 | 52.855 |
| 46 mm | 58.043 | 56.135 | 52.302 |
| 46.5 mm | 58.627 | 56.737 | 55.504 |
| 47 mm | 59.309 | 56.073 | 55.979 |
| 47.5 mm | 56.762 | 53.066 | 53.567 |
| 48 mm | 57.923 | 53.987 | 52.069 |
| 48.5 mm | 56.267 | 53.361 | 52.403 |
| 49 mm | 57.754 | 52.126 | 54.06 |
| 49.5 mm | 53.562 | 53.546 | 50.501 |

| Depth (Conventional) | Conventional Method | With Digital Zooming | Our Method |
|---|---|---|---|
| 37 mm (296 mm) | 27.783 | 34.7 | 55.347 |
| 37.5 mm (300 mm) | 27.783 | 36.812 | 56.79 |
| 38 mm (304 mm) | 25.731 | 33.67 | 54.012 |
| 38.5 mm (308 mm) | 25.731 | 35.507 | 54.822 |
| 39 mm (312 mm) | 27.162 | 34.907 | 57.243 |
| 39.5 mm (316 mm) | 27.162 | 34.956 | 55.426 |
| 40 mm (320 mm) | 30.89 | 34.225 | 54.728 |
| 40.5 mm (324 mm) | 30.89 | 35.7 | 54.661 |
| 41 mm (328 mm) | 26.249 | 35.94 | 58.222 |
| 41.5 mm (332 mm) | 26.249 | 36.793 | 57.132 |
| 42 mm (336 mm) | 26.249 | 34.21 | 57.582 |
| 42.5 mm (340 mm) | 26.249 | 38.861 | 58.363 |
| 43 mm (344 mm) | 26.249 | 41.706 | 60.997 |
| 43.5 mm (348 mm) | 26.249 | 36.669 | 56.593 |
| 44 mm (352 mm) | 31.809 | 35.317 | 56.037 |
| 44.5 mm (356 mm) | 32.183 | 33.931 | 58.117 |
| 45 mm (360 mm) | 32.183 | 33.377 | 58.805 |
| 45.5 mm (364 mm) | 25.502 | 33.653 | 58.003 |
| 46 mm (368 mm) | 25.502 | 33.412 | 56.135 |
| 46.5 mm (372 mm) | 25.502 | 33.185 | 56.737 |
| 47 mm (376 mm) | 28.709 | 34.474 | 56.073 |
| 47.5 mm (380 mm) | 28.709 | 33.81 | 56.066 |
| 48 mm (384 mm) | 28.709 | 33.698 | 53.987 |
| 48.5 mm (388 mm) | 30.993 | 32.317 | 53.361 |
| 49 mm (392 mm) | 30.993 | 33.493 | 52.126 |
| 49.5 mm (396 mm) | 30.993 | 34.092 | 53.546 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yeo, G.; Cho, M. Three-Dimensional Digital Zooming of Integral Imaging under Photon-Starved Conditions. Sensors 2023, 23, 2645. https://doi.org/10.3390/s23052645
