Underwater Object Detection Using TC-YOLO with Attention Mechanisms

Abstract

1. Introduction

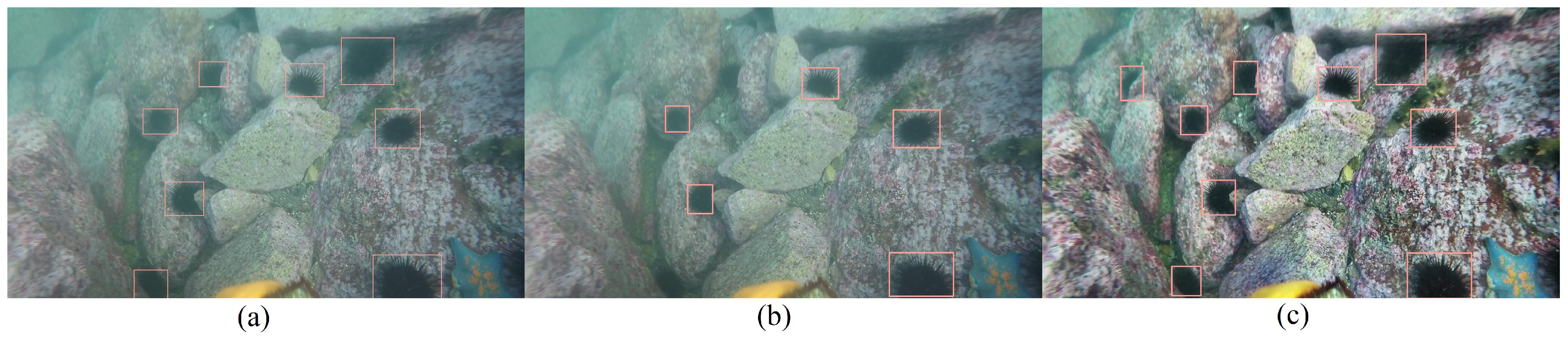

2. Related Work

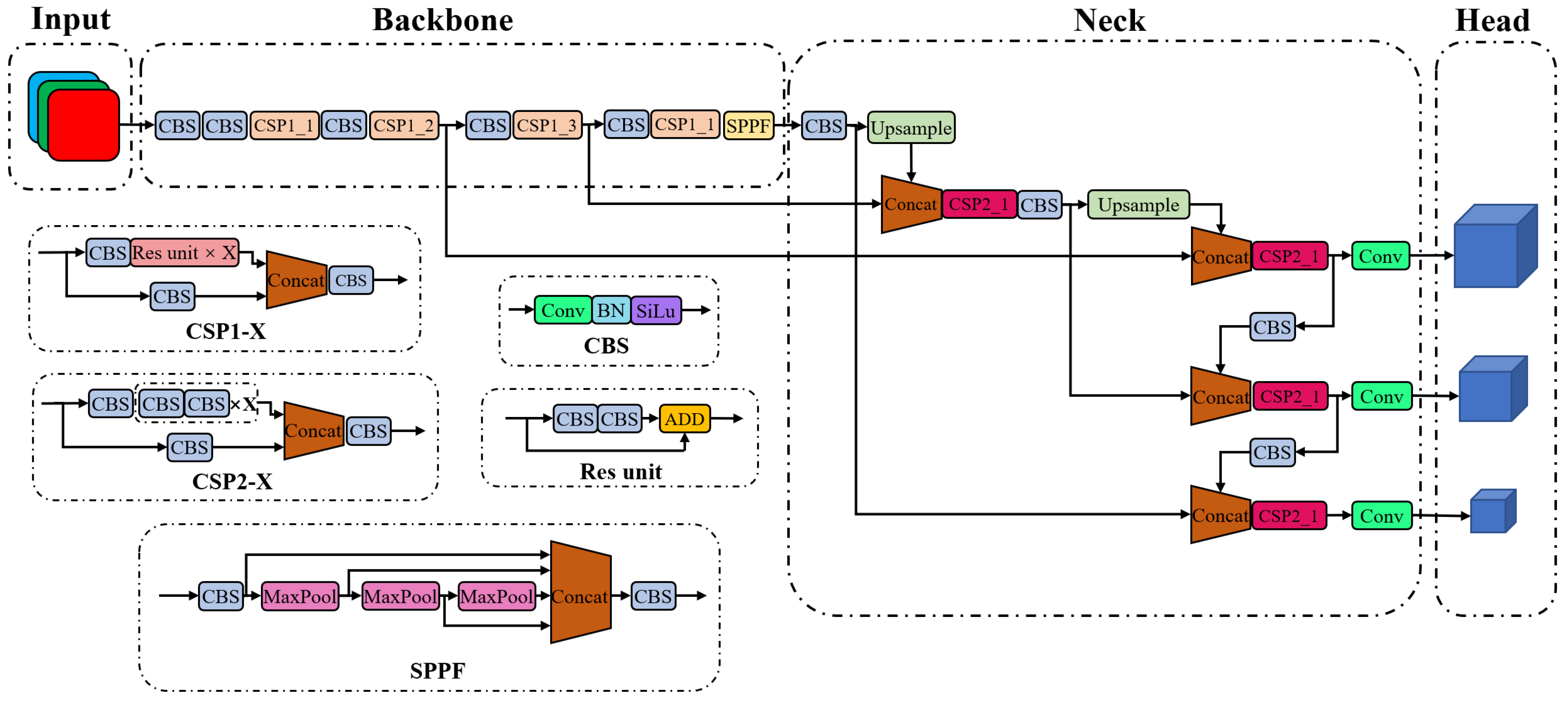

2.1. YOLOv5

2.2. Attention Mechanism

2.3. Label Assignment

2.4. Underwater Image Enhancement

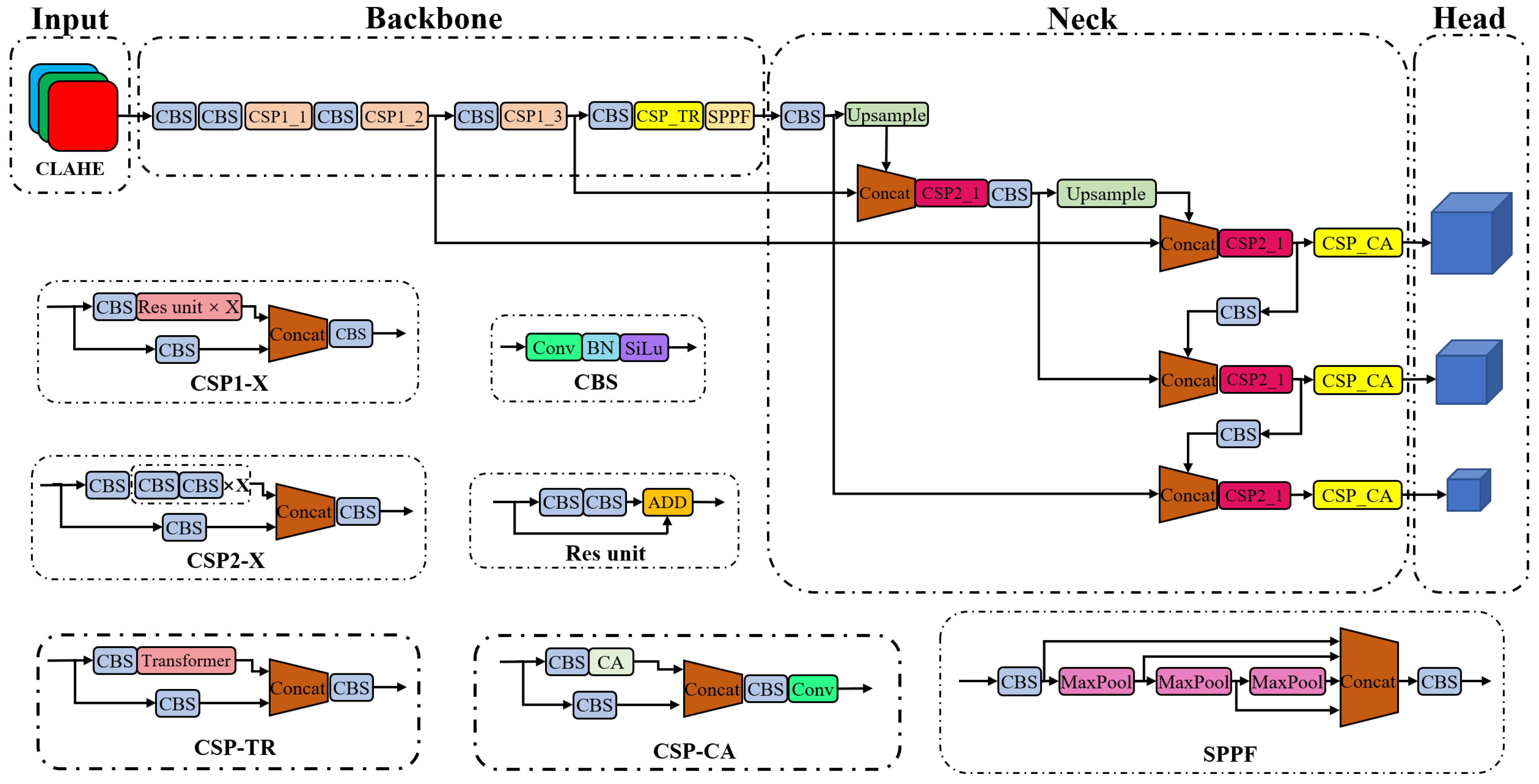

3. Proposed Approach

- Attention mechanisms were integrated into YOLOv5 by adding Transformer and CA modules to develop a new network named TC-YOLO;

- OTA was used to improve label assignment in training for object detection;

- A CLAHE algorithm was employed for underwater image enhancement.
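To make the contrast-limiting idea behind CLAHE concrete, the following is a minimal pure-Python sketch of the clip-and-equalize step applied to a single grayscale tile. It is not the implementation used in this work: the function name `clipped_equalize`, the `clip_limit` parameter, and the rule for spreading leftover counts over the first bins are illustrative assumptions. A full CLAHE additionally splits the image into tiles and interpolates between the per-tile mappings, which is omitted here.

```python
from typing import List


def clipped_equalize(tile: List[List[int]], clip_limit: int,
                     levels: int = 256) -> List[List[int]]:
    """Clip-limited histogram equalization of ONE grayscale tile.

    Hypothetical helper for illustration only; tiling and the
    interpolation between tiles used by full CLAHE are not included.
    """
    if not tile or not tile[0]:
        return [row[:] for row in tile]

    # Histogram of the intensities found in the tile.
    hist = [0] * levels
    for row in tile:
        for value in row:
            hist[value] += 1

    # Contrast limiting: clip every bin at clip_limit and spread the
    # clipped excess evenly over all bins (leftovers go to the first bins,
    # so a bin may end slightly above the limit in this simplified version).
    excess = sum(max(0, count - clip_limit) for count in hist)
    hist = [min(count, clip_limit) for count in hist]
    bonus, remainder = divmod(excess, levels)
    hist = [count + bonus + (1 if i < remainder else 0)
            for i, count in enumerate(hist)]

    # Cumulative histogram, rescaled to the output range, gives the mapping.
    total = sum(hist)  # equals the number of pixels in the tile
    lookup, running = [], 0
    for count in hist:
        running += count
        lookup.append(round((levels - 1) * running / total))

    return [[lookup[value] for value in row] for row in tile]
```

With a very large `clip_limit` the function reduces to ordinary histogram equalization (HE); lowering the limit flattens the mapping and restrains the over-amplification of contrast and noise that HE and AHE can produce. In practice a library routine such as OpenCV's `cv2.createCLAHE` (with its `clipLimit` and `tileGridSize` parameters) would normally be used instead of hand-written code.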

3.1. Dataset

3.2. TC-YOLO

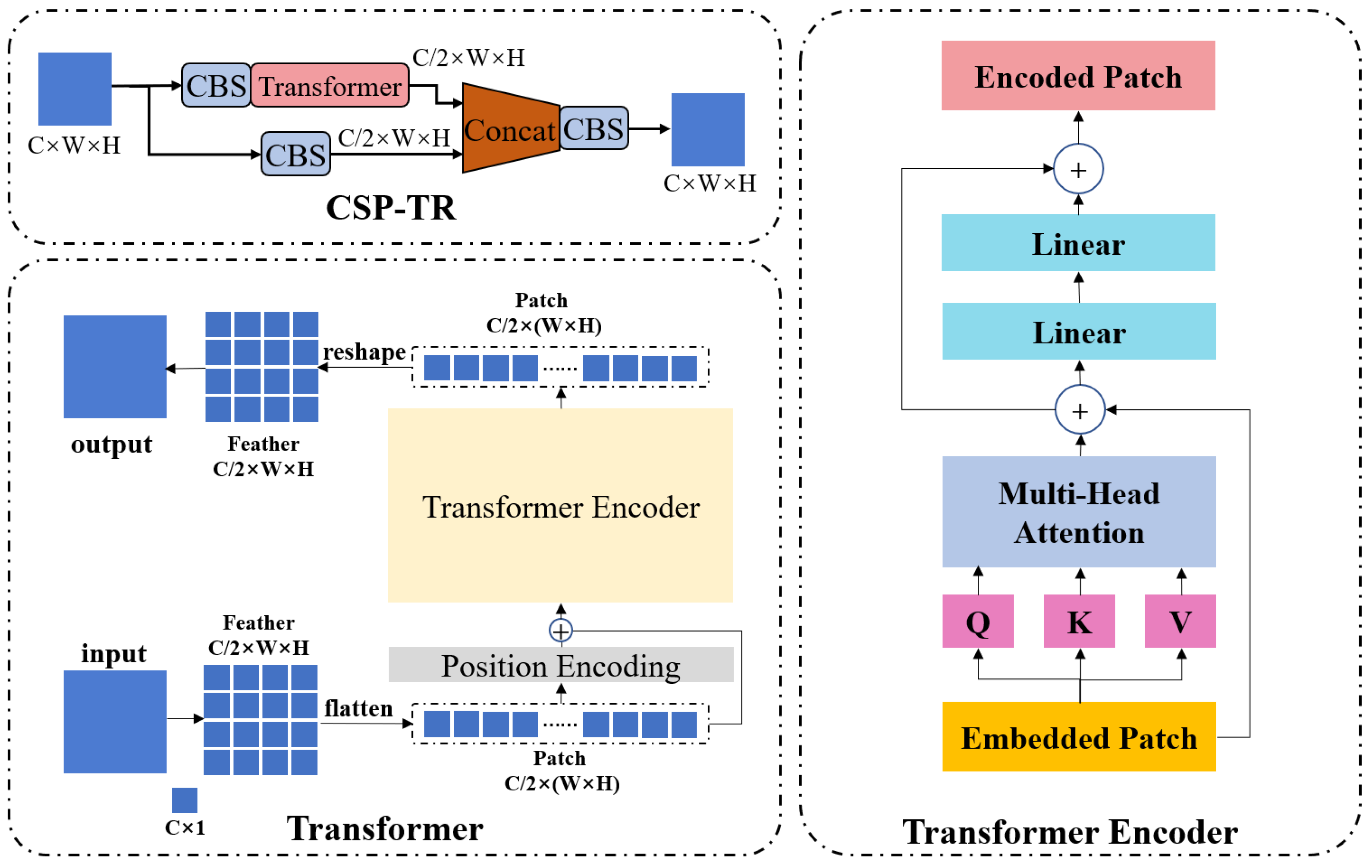

3.2.1. Transformer Module

3.2.2. Coordinate Attention

3.3. Optimal Transport Assignment

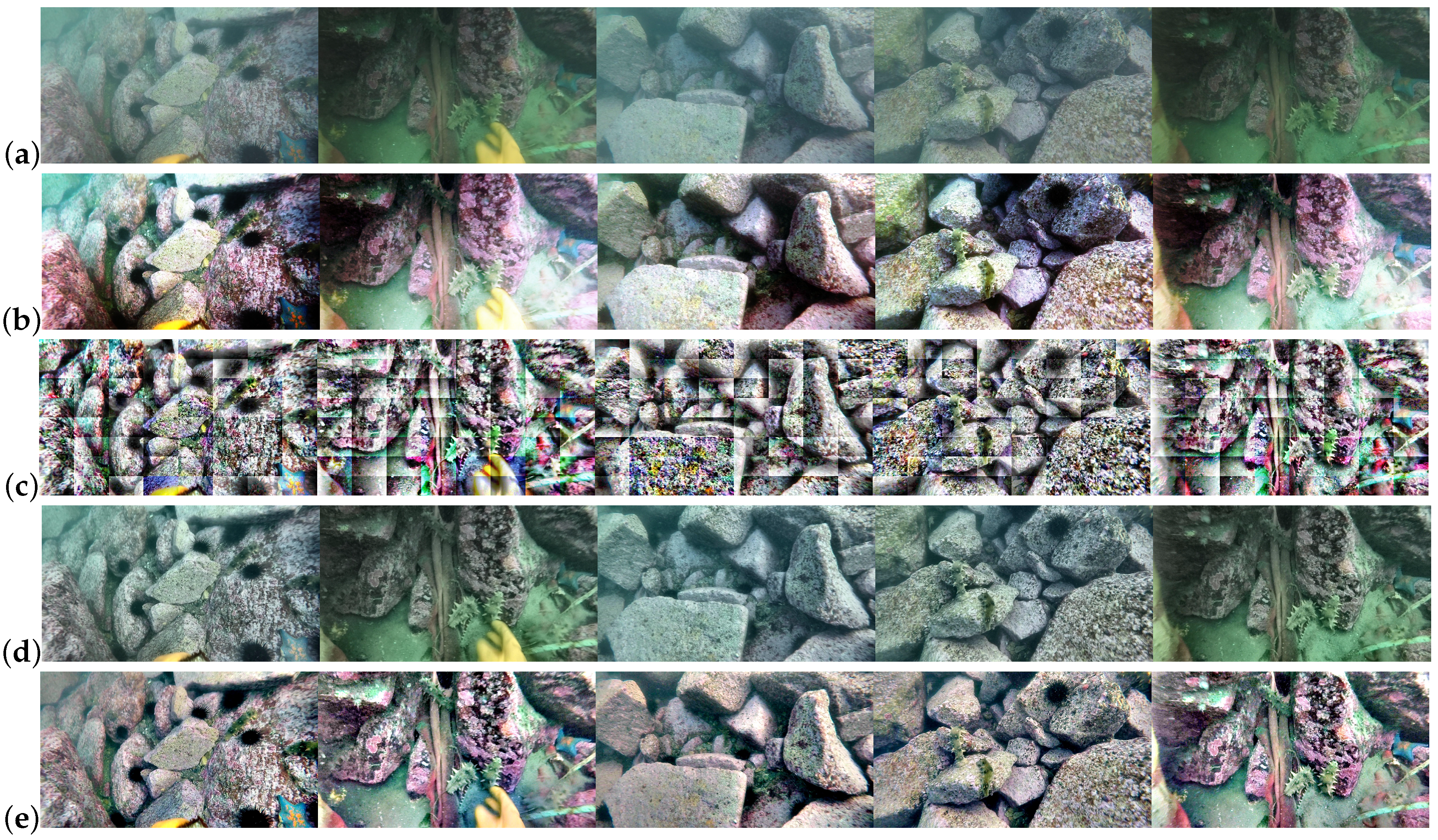

3.4. Underwater Image Enhancement

4. Experiments and Results

4.1. Evaluation Metrics

- Precision, defined as TP/(TP + FP), where TP and FP are the numbers of true-positive and false-positive detections, reflects the false-detection rate of a network (higher precision means fewer false detections);

- Recall, defined as TP/(TP + FN), where FN is the number of false negatives (missed objects), reflects the missed-detection rate of a network (higher recall means fewer missed detections);

- mAP (IoU = 0.5), defined as the mean average precision (mAP) evaluated over all object classes of the entire dataset, with IoU = 0.5 used as the threshold for evaluation;

- mAP (IoU = 0.5:0.95), defined as the mean of the mAP values evaluated at IoU thresholds ranging from 0.5 to 0.95 at intervals of 0.05.
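The definitions above can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the evaluation code used for the experiments: the function names are hypothetical, boxes are assumed to be given as `(x1, y1, x2, y2)` corner coordinates, and the per-class average-precision computation itself is left out.

```python
from typing import List, Tuple

# Assumed box format: (x1, y1, x2, y2) with x1 < x2 and y1 < y2.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision(tp: int, fp: int) -> float:
    """TP / (TP + FP); returns 0.0 when there are no detections."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """TP / (TP + FN); returns 0.0 when there are no ground-truth objects."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def iou_thresholds() -> List[float]:
    """The ten thresholds 0.50, 0.55, ..., 0.95 used for mAP (IoU = 0.5:0.95)."""
    return [round(0.5 + 0.05 * i, 2) for i in range(10)]


def mean_over_thresholds(map_per_threshold: List[float]) -> float:
    """Average the mAP values obtained at the individual IoU thresholds."""
    return sum(map_per_threshold) / len(map_per_threshold)
```

A detection counts as a true positive at a given threshold when its IoU with a ground-truth box of the same class reaches that threshold, so the same set of detections generally yields a lower mAP as the threshold rises from 0.5 to 0.95.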

4.2. Comparisons

4.3. Ablation Experiments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| YOLO | You Only Look Once |
| MSRCR | Multi-Scale Retinex with Color Restoration |
| GAN | Generative Adversarial Network |
| CNN | Convolutional Neural Network |
| CLAHE | Contrast-Limited Adaptive Histogram Equalization |
| RUIE | Real-World Underwater Image Enhancement |
| CSP | Cross-Stage Partial |
| IoU | Intersection over Union |
| GT | Ground Truth |
| CBAM | Convolutional Block Attention Module |
| CA | Coordinate Attention |
| HE | Histogram Equalization |
| AHE | Adaptive Histogram Equalization |
| OTA | Optimal Transport Assignment |
| mAP | Mean Average Precision |

References

1. Sun, K.; Cui, W.; Chen, C. Review of Underwater Sensing Technologies and Applications. Sensors 2021, 21, 7849.

2. Wang, Z.; Wang, B.; Guo, J.; Zhang, S. Sonar Objective Detection Based on Dilated Separable Densely Connected CNNs and Quantum-Behaved PSO Algorithm. Comput. Intell. Neurosci. 2021, 2021, 6235319.

3. Tao, Y.; Dong, L.; Xu, L.; Xu, W. Effective solution for underwater image enhancement. Opt. Express 2021, 29, 32412–32438.

4. Rahman, Z.; Jobson, D.J.; Woodell, G.A. Multi-scale retinex for color image enhancement. In Proceedings of the 3rd IEEE International Conference on Image Processing, Lausanne, Switzerland, 19 September 1996; pp. 1003–1006.

5. He, K.; Sun, J.; Tang, X. Single Image Haze Removal Using Dark Channel Prior. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 2341–2353.

6. Han, Y.; Huang, L.; Hong, Z.; Cao, S.; Zhang, Y.; Wang, J. Deep Supervised Residual Dense Network for Underwater Image Enhancement. Sensors 2021, 21, 3289.

7. Yeh, C.H.; Lin, C.H.; Kang, L.W.; Huang, C.H.; Lin, M.H.; Chang, C.Y.; Wang, C.C. Lightweight Deep Neural Network for Joint Learning of Underwater Object Detection and Color Conversion. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 6129–6143.

8. Song, S.; Zhu, J.; Li, X.; Huang, Q. Integrate MSRCR and Mask R-CNN to Recognize Underwater Creatures on Small Sample Datasets. IEEE Access 2020, 8, 172848–172858.

9. Katayama, T.; Song, T.; Shimamoto, T.; Jiang, X. GAN-based Color Correction for Underwater Object Detection. In Proceedings of the OCEANS 2019 MTS/IEEE SEATTLE, Seattle, WA, USA, 27–31 October 2019; pp. 1–4.

10. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

11. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

12. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

13. Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

14. Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976.

15. Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696.

16. Sung, M.; Yu, S.-C.; Girdhar, Y. Vision based real-time fish detection using convolutional neural network. In Proceedings of the OCEANS 2017—Aberdeen, Aberdeen, UK, 19–22 June 2017; pp. 1–6.

17. Pedersen, M.; Haurum, J.B.; Gade, R.; Moeslund, T. Detection of Marine Animals in a New Underwater Dataset with Varying Visibility. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–20 June 2019.

18. Wang, W.; Wang, Y. Underwater target detection system based on YOLO v4. Int. Conf. Artif. Intell. Inf. Syst. 2021, 107, 1–5.

19. Zhao, Z.; Liu, Y.; Sun, X.; Liu, J.; Yang, X.; Zhou, C. Composited FishNet: Fish Detection and Species Recognition From Low-Quality Underwater Videos. IEEE Trans. Image Process. 2021, 30, 4719–4734.

20. Wei, X.; Yu, L.; Tian, S.; Feng, P.; Ning, X. Underwater target detection with an attention mechanism and improved scale. Multimed. Tools Appl. 2021, 80, 33747–33761.

21. Wang, L.; Ye, X.; Xing, H.; Wang, Z.; Li, P. YOLO Nano Underwater: A Fast and Compact Object Detector for Embedded Device. In Proceedings of the Global Oceans 2020: Singapore—U.S. Gulf Coast, Biloxi, MS, USA, 5–30 October 2020; pp. 1–4.

22. Al Muksit, A.; Hasan, F.; Hasan Bhuiyan Emon, M.F.; Haque, M.R.; Anwary, A.R.; Shatabda, S. YOLO-Fish: A robust fish detection model to detect fish in realistic underwater environment. Ecol. Inform. 2022, 72, 101847.

23. Zhao, S.; Zheng, J.; Sun, S.; Zhang, L. An Improved YOLO Algorithm for Fast and Accurate Underwater Object Detection. Symmetry 2022, 14, 1669.

24. Hu, X.; Liu, Y.; Zhao, Z.; Liu, J.; Yang, X.; Sun, C.; Chen, S.; Li, B.; Zhou, C. Real-time detection of uneaten feed pellets in underwater images for aquaculture using an improved YOLO-V4 network. Comput. Electron. Agric. 2021, 185, 106135.

25. Wang, C.Y.; Liao, H.-Y.; Yeh, I.-H.; Wu, Y.-H.; Chen, P.-Y.; Hsieh, J.-W. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 390–391.

26. Wang, K.; Liew, J.; Zou, Y.; Zhou, D.; Feng, J. PANet: Few-shot image semantic segmentation with prototype alignment. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9197–9206.

27. Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

28. Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

29. Neubeck, A.; Van Gool, L. Efficient non-maximum suppression. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; pp. 850–855.

30. Sun, Y.; Wang, X.; Zheng, Y.; Yao, L.; Qi, S.; Tang, L.; Yi, H.; Dong, K. Underwater Object Detection with Swin Transformer. In Proceedings of the 2022 4th International Conference on Data Intelligence and Security (ICDIS), Shenzhen, China, 24–26 August 2022.

31. Li, J.; Zhu, Y.; Chen, M.; Wang, Y.; Zhou, Z. Research on Underwater Small Target Detection Algorithm Based on Improved YOLOv3. In Proceedings of the 2022 16th IEEE International Conference on Signal Processing (ICSP), Beijing, China, 21–24 October 2022.

32. Zhai, X.; Wei, H.; He, Y.; Shang, Y.; Liu, C. Underwater Sea Cucumber Identification Based on Improved YOLOv5. Appl. Sci. 2022, 12, 9105.

33. Jaderberg, M.; Simonyan, K.; Zisserman, A.; Kavukcuoglu, K. Spatial transformer networks. Adv. Neural Inf. Process. Syst. 2015, 28, 1–9.

34. Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2011–2023.

35. Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the Computer Vision—ECCV 2018: 15th European Conference, Munich, Germany, 8–14 September 2018.

36. Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 13713–13722.

37. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

38. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

39. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

40. Ge, Z.; Wang, J.; Huang, X.; Liu, S.; Yoshie, O. LLA: Loss-aware label assignment for dense pedestrian detection. Neurocomputing 2021, 462, 272–281.

41. Xu, C.; Wang, J.; Yang, W.; Yu, H.; Yu, L.; Xia, G.-S. RFLA: Gaussian receptive field based label assignment for tiny object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 526–543.

42. Ge, Z.; Liu, S.; Li, Z.; Yoshie, O.; Sun, J. OTA: Optimal transport assignment for object detection. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 303–312.

43. Li, T.; Rong, S.; Zhao, W.; Chen, L.; Liu, Y.; Zhou, H.; He, B. Underwater image enhancement using adaptive color restoration and dehazing. Opt. Express 2022, 30, 6216–6235.

44. Li, C.-Y.; Guo, J.-C.; Cong, R.-M.; Pang, Y.-W.; Wang, B. Underwater image enhancement by dehazing with minimum information loss and histogram distribution prior. IEEE Trans. Image Process. 2016, 25, 5664–5677.

45. Han, F.; Yao, J.; Zhu, H.; Wang, C. Underwater image processing and object detection based on deep CNN method. J. Sens. 2020, 2020, 6707328.

46. Sahu, P.; Gupta, N.; Sharma, N. A survey on underwater image enhancement techniques. Int. J. Comput. Appl. 2014, 87, 160–164.

47. Mustafa, W.A.; Kader, M.M.M.A. A review of histogram equalization techniques in image enhancement application. J. Phys. Conf. Ser. 2018, 1019, 012026.

48. Pizer, S.M.; Amburn, E.P.; Austin, J.D.; Cromartie, R.; Geselowitz, A.; Greer, T.; ter Haar Romeny, B.; Zimmerman, J.B.; Zuiderveld, K. Adaptive histogram equalization and its variations. Comput. Vis. Graph. Image Process. 1987, 39, 355–368.

49. Reza, A.M. Realization of the contrast limited adaptive histogram equalization (CLAHE) for real-time image enhancement. J. VLSI Signal Process. Syst. Signal Image Video Technol. 2004, 38, 35–44.

50. Rahman, Z.; Woodell, G.A.; Jobson, D.J. A Comparison of the Multiscale Retinex with Other Image Enhancement Techniques; NASA Technical Report 20040110657; NASA: Washington, DC, USA, 1997.

51. Liu, R.; Fan, X.; Zhu, M.; Hou, M.; Luo, Z. Real-World Underwater Enhancement: Challenges, Benchmarks, and Solutions Under Natural Light. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 4861–4875.

52. Robbins, H.; Monro, S. A stochastic approximation method. Ann. Math. Stat. 1951, 22, 400–407.

53. Goyal, P.; Dollár, P.; Girshick, R.; Noordhuis, P.; Wesolowski, L.; Kyrola, A.; Tulloch, A.; Jia, Y.; He, K. Accurate, large minibatch SGD: Training imagenet in 1 hour. arXiv 2017, arXiv:1706.02677.

54. Khasawneh, N.; Fraiwan, M.; Fraiwan, L. Detection of K-complexes in EEG signals using deep transfer learning and YOLOv3. Clust. Comput. 2022, 1–11.

55. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

56. Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO series in 2021. arXiv 2021, arXiv:2107.08430.

57. Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

58. Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the 36th International Conference on Machine Learning (ICML 2019), Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

59. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Proceedings of the NIPS’15: Proceedings of the 28th International Conference on Neural Information Processing Systems, Cambridge, MA, USA, 7–12 December 2015.

| No. | Algorithm | Precision | Recall | Processing Time |
|---|---|---|---|---|
| 1 | Original Image | 79.7% | 71.1% | – |
| 2 | HE | 77.1% | 70.8% | 3.2 ms |
| 3 | AHE | 75.5% | 68.4% | 3.5 ms |
| 4 | CLAHE | 78.4% | 73.5% | 3.3 ms |
| 5 | MSRCR | 80.2% | 74.3% | 1646 ms |

| No. | Model | Backbone | Precision | Recall | mAP (IoU = 0.5) | mAP (IoU = 0.5:0.95) | Floating-point Operations (G) | Number of Parameters (M) |
|---|---|---|---|---|---|---|---|---|
| 1 | YOLOv3 [11] | MobileNet [55] | 70.6% | 57.2% | 70.2% | 32.5% | 6.58 | 4.5 |
| 2 | YOLOX-tiny [56] | Darknet53 | 68.5% | 59.8% | 67.8% | 34.6% | 7.64 | 5.7 |
| 3 | RetinaNet [57] | EfficientNet [58] | 76.9% | 63.6% | 76.5% | 40.7% | 47.18 | 37.5 |
| 4 | Faster-RCNN [59] | ResNet18 | 75.6% | 65.1% | 74.6% | 41.9% | 72.54 | 47.6 |
| 5 | YOLOv5s (w/ OTA) | CSPDarknet53 | 79.7% | 71.1% | 76.5% | 38.5% | 16.00 | 7.0 |
| 6 | TC-YOLO (w/ OTA & CLAHE) | CSPDarknet53 | 82.9% | 76.8% | 83.1% | 45.6% | 18.60 | 7.7 |

| Case | CLAHE | Transformer | CA Block | Precision | Recall | mAP (IoU = 0.5) | mAP (IoU = 0.5:0.95) | Time |
|---|---|---|---|---|---|---|---|---|
| 1 | x | x | x | 79.7% | 71.1% | 76.5% | 38.5% | 16.2 ms |
| 2 | √ | x | x | 78.1% | 73.5% | 75.4% | 37.7% | 18.7 ms |
| 3 | x | √ | x | 80.5% | 71.8% | 78.2% | 40.3% | 17.3 ms |
| 4 | x | x | √ | 81.2% | 72.9% | 78.6% | 41.2% | 16.5 ms |
| 5 | √ | √ | x | 80.4% | 74.2% | 77.6% | 39.5% | 19.4 ms |
| 6 | √ | x | √ | 80.9% | 74.8% | 79.6% | 42.3% | 18.8 ms |
| 7 | x | √ | √ | 81.6% | 75.1% | 80.5% | 43.5% | 17.5 ms |
| 8 | √ | √ | √ | 82.9% | 76.8% | 83.1% | 45.6% | 19.7 ms |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, K.; Peng, L.; Tang, S. Underwater Object Detection Using TC-YOLO with Attention Mechanisms. Sensors 2023, 23, 2567. https://doi.org/10.3390/s23052567