Joint Optimization of Multi-User Partial Offloading Strategy and Resource Allocation Strategy in D2D-Enabled MEC

Abstract

1. Introduction

- We jointly optimize the transmit power allocation strategy and the subtask offloading strategy to minimize the average cost in a D2D-enabled MEC system, where the average cost is the weighted sum of the total completion delay and the average energy consumption of the users for completing all subtasks.

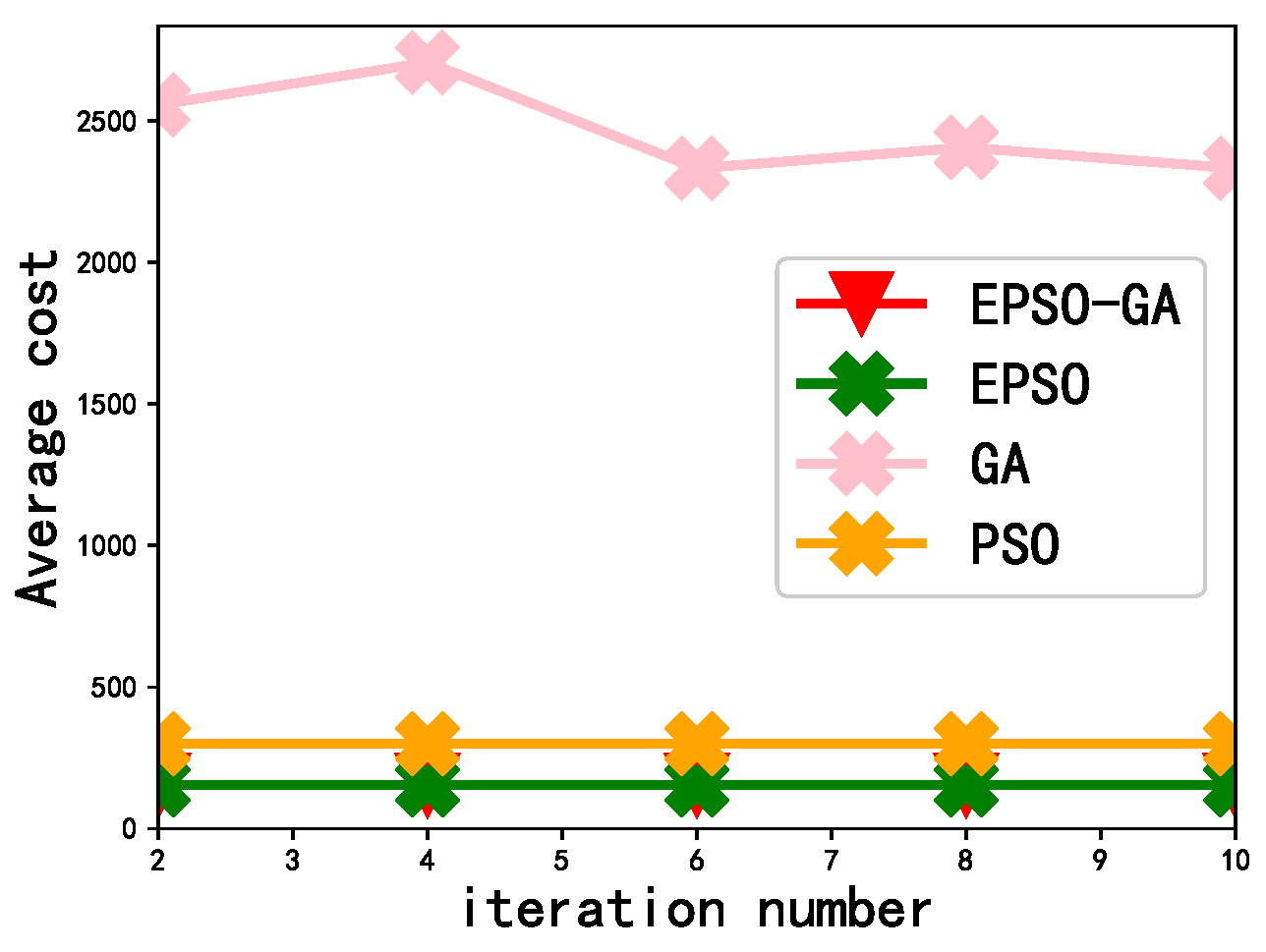

- We propose an enhanced particle swarm optimization algorithm (EPSO) to optimize the transmit power allocation strategy. Simulation results show that EPSO achieves a lower cost than the other algorithms compared.

- We propose EPSO-GA, an alternating optimization algorithm that combines EPSO with the genetic algorithm (GA) to jointly optimize the transmit power allocation strategy and the subtask offloading strategy. Simulation results show that EPSO-GA effectively reduces the average cost of the users.
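The average cost described above can be illustrated with a minimal sketch. It is not the paper's exact formula: it assumes (hypothetically) that the delay term is weighted by a delay weight coefficient `delay_weight` in [0, 1] and the energy term by `1 - delay_weight`, and it takes the total completion delay as an input rather than deriving it from the system model.

```python
from statistics import mean


def average_cost(total_delay_s: float, user_energy_j: list[float], delay_weight: float) -> float:
    """Weighted sum of total completion delay and average per-user energy.

    Assumption (hypothetical): the energy weight is 1 - delay_weight.
    """
    if not 0.0 <= delay_weight <= 1.0:
        raise ValueError("delay_weight must lie in [0, 1]")
    if not user_energy_j:
        raise ValueError("need at least one user")
    # Delay term plus complementary-weighted mean energy over all users.
    return delay_weight * total_delay_s + (1.0 - delay_weight) * mean(user_energy_j)


# Hypothetical numbers: 2 s total delay, two users spending 1 J and 3 J.
print(average_cost(2.0, [1.0, 3.0], 0.5))  # 2.0
```

Raising `delay_weight` shifts the optimum toward faster but more energy-hungry decisions, which is the trade-off examined when the delay weight coefficient is varied.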

2. System Model and Problem Formulation

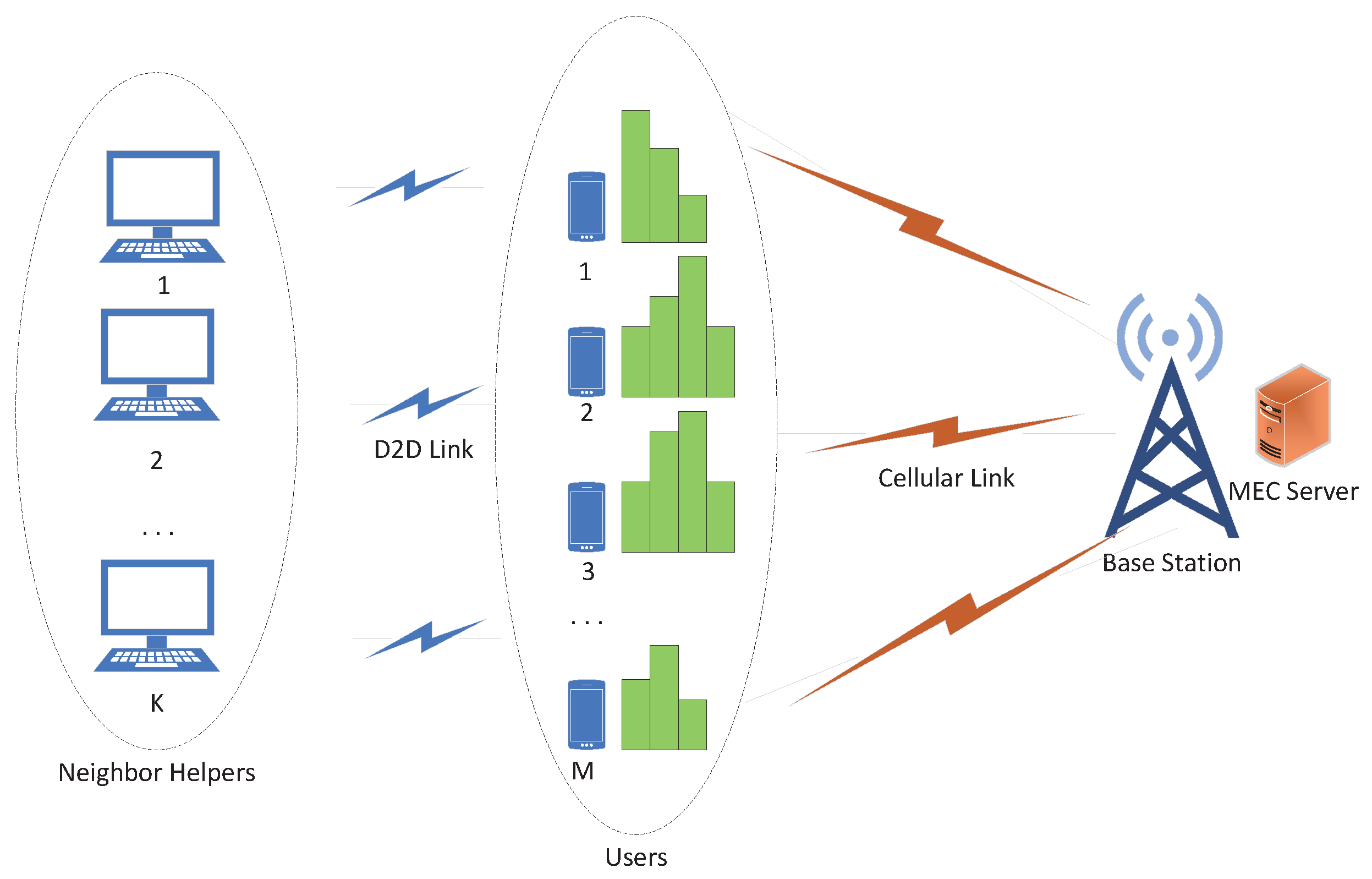

2.1. System Model

- The network is quasi-static, and the network status and user information can be obtained through plugins;

- All devices are single-core, and a device can only execute one task at a time;

- When multiple users offload tasks to a device at the same time, the tasks are executed on a first-come-first-served basis;

- All subtasks are of the same type, and processing 1 bit of data requires 1500 CPU cycles [21];

- Each channel has bandwidth B.
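Under the assumptions above, the basic per-subtask quantities can be sketched as follows. The 1500 cycles/bit figure and the channel bandwidth B come from the list; the Shannon-rate uplink model, the CPU frequency, transmit power, channel gain, and noise parameters, and all function names are illustrative assumptions rather than the paper's model. The FCFS helper reflects the single-core, first-come-first-served assumption.

```python
import math

CYCLES_PER_BIT = 1500  # CPU cycles per bit, from the system-model assumptions


def local_exec_time(data_bits: float, cpu_hz: float) -> float:
    """Seconds to process data_bits on a single-core device clocked at cpu_hz."""
    return data_bits * CYCLES_PER_BIT / cpu_hz


def uplink_rate(bandwidth_hz, tx_power_w, channel_gain, noise_w):
    """Assumed Shannon rate (bit/s) on a channel of bandwidth B."""
    return bandwidth_hz * math.log2(1.0 + tx_power_w * channel_gain / noise_w)


def offload_tx(data_bits, bandwidth_hz, tx_power_w, channel_gain, noise_w):
    """Return (transmission delay in s, transmit energy in J) for one subtask."""
    delay = data_bits / uplink_rate(bandwidth_hz, tx_power_w, channel_gain, noise_w)
    return delay, tx_power_w * delay


def fcfs_finish_times(arrivals, service_times):
    """Finish time of each task on one single-core device served first-come-first-served."""
    order = sorted(range(len(arrivals)), key=lambda i: arrivals[i])
    finish = [0.0] * len(arrivals)
    clock = 0.0
    for i in order:
        # Wait until the core is free (or the task arrives), then run it to completion.
        clock = max(clock, arrivals[i]) + service_times[i]
        finish[i] = clock
    return finish


# Hypothetical numbers: a 1 Mbit subtask on a 1.5 GHz device.
print(local_exec_time(1e6, 1.5e9))  # 1.0
print(fcfs_finish_times([0.0, 0.5], [1.0, 1.0]))  # [1.0, 2.0]
```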

2.2. Problem Formulation

2.2.1. Local Execution

2.2.2. Edge Execution

2.2.3. Neighbor Helper Execution

2.2.4. Objective Function Formation

3. Joint Optimization of Power Allocation Strategy and Offloading Strategy

3.1. Optimizing Power Allocation Strategy

| Algorithm 1 EPSO |
|---|
| Input: M, N, K, …, X, … |
| Output: P, … |
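The enhancements that distinguish EPSO are not reproduced here, so the following is only a plain global-best PSO over a box of feasible transmit powers, written as a hypothetical stand-in for the power-allocation step with the offloading strategy X held fixed. The inertia weight, acceleration coefficients, velocity clamp, and the toy cost `toy_power_cost` (upload delay versus transmit energy over an assumed Shannon-rate link) are all assumptions.

```python
import math
import random


def pso_minimize(cost, dim, lo, hi, n_particles=30, iters=200,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    """Global-best PSO over the box [lo, hi]^dim (plain PSO, not EPSO)."""
    rng = random.Random(seed)
    vmax = 0.2 * (hi - lo)  # velocity clamp keeps steps bounded
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]  # personal best positions
    pbest_val = [cost(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]  # swarm-wide best
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                v = (w * vel[i][d]
                     + c1 * r1 * (pbest[i][d] - pos[i][d])
                     + c2 * r2 * (gbest[d] - pos[i][d]))
                vel[i][d] = max(-vmax, min(vmax, v))
                # Clip to the feasible transmit-power range.
                pos[i][d] = max(lo, min(hi, pos[i][d] + vel[i][d]))
            val = cost(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


# Toy usage with hypothetical numbers: each user uploads data_bits over a channel
# of bandwidth B; per-user cost = w * delay + (1 - w) * transmit energy.
def toy_power_cost(powers, data_bits=1e6, bandwidth=1e6, gain=10.0, noise=1.0, w=0.5):
    total = 0.0
    for p in powers:
        delay = data_bits / (bandwidth * math.log2(1.0 + p * gain / noise))
        total += w * delay + (1.0 - w) * p * delay
    return total / len(powers)


best_p, best_cost = pso_minimize(toy_power_cost, dim=3, lo=0.01, hi=1.0)
print(best_p, best_cost)
```

In the toy cost, more power shortens the upload but raises transmit energy, so the optimum lies strictly inside the power range; clipping positions to `[lo, hi]` is one simple way to keep particles feasible.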

3.2. Optimizing Subtask Offloading Strategy

3.2.1. Population, Chromosome, Gene

3.2.2. Selection

3.2.3. Crossover

3.2.4. Mutation
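The GA components named in this section (chromosome encoding, selection, crossover, mutation) can be sketched together. The encoding is an assumption: one gene per subtask, whose value is the execution location (0 = local, 1 = edge server, 2 and above = a neighbor helper). Tournament selection, single-point crossover, random-reset mutation, and elitism are common GA choices used here for illustration; the paper's exact operators and parameters may differ, and the cost table in the usage example is made up.

```python
import random


def random_chromosome(n_subtasks, n_locations, rng):
    """One gene per subtask; gene value = execution location index (assumed encoding)."""
    return [rng.randrange(n_locations) for _ in range(n_subtasks)]


def tournament_select(pop, costs, rng, k=3):
    """Return the lowest-cost chromosome among k randomly drawn individuals."""
    idx = rng.sample(range(len(pop)), k)
    return pop[min(idx, key=lambda i: costs[i])]


def single_point_crossover(a, b, rng):
    """Swap the gene tails of two parents after a random cut point."""
    if len(a) < 2:
        return a[:], b[:]
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:], b[:cut] + a[cut:]


def mutate(chrom, n_locations, rate, rng):
    """Random-reset mutation: each gene is redrawn with probability `rate`."""
    return [rng.randrange(n_locations) if rng.random() < rate else g for g in chrom]


def run_ga(cost, n_subtasks, n_locations, pop_size=40, generations=100,
           pc=0.9, pm=0.05, seed=0):
    rng = random.Random(seed)
    pop = [random_chromosome(n_subtasks, n_locations, rng) for _ in range(pop_size)]
    for _ in range(generations):
        costs = [cost(c) for c in pop]
        elite = pop[min(range(pop_size), key=lambda i: costs[i])]
        nxt = [elite[:]]  # elitism: the best chromosome survives unchanged
        while len(nxt) < pop_size:
            p1 = tournament_select(pop, costs, rng)
            p2 = tournament_select(pop, costs, rng)
            if rng.random() < pc:
                c1, c2 = single_point_crossover(p1, p2, rng)
            else:
                c1, c2 = p1[:], p2[:]
            nxt.append(mutate(c1, n_locations, pm, rng))
            if len(nxt) < pop_size:
                nxt.append(mutate(c2, n_locations, pm, rng))
        pop = nxt
    costs = [cost(c) for c in pop]
    best = min(range(pop_size), key=lambda i: costs[i])
    return pop[best], costs[best]


# Toy usage (hypothetical): 8 subtasks, 3 neighbor helpers -> 5 locations,
# and a made-up per-subtask cost table cost_table[subtask][location].
n_helpers = 3
n_locations = n_helpers + 2
cost_table = [[(s * 7 + loc * 3) % 5 + 1 for loc in range(n_locations)] for s in range(8)]
plan, plan_cost = run_ga(lambda c: sum(cost_table[s][g] for s, g in enumerate(c)),
                         8, n_locations)
print(plan, plan_cost)
```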

3.3. Alternating Optimization

| Algorithm 2 EPSO-GA |
|---|
| Input: M, N, K, …, c, … |
| Output: P, X, … |
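The alternating structure of EPSO-GA can be summarized as a loop that fixes the offloading strategy X while optimizing the power allocation P, then fixes P while optimizing X, until the cost stops improving. In this sketch the two sub-solvers are passed in as callables (in EPSO-GA they would be EPSO and GA); the stopping tolerance, round limit, and toy problem are hypothetical.

```python
def alternate_optimize(cost, optimize_power, optimize_offloading, p0, x0,
                       max_rounds=50, tol=1e-6):
    """Alternate between the power step (X fixed) and the offloading step (P fixed)."""
    p, x = p0, x0
    history = [cost(p, x)]
    best = (p, x, history[0])
    for _ in range(max_rounds):
        p = optimize_power(x)        # e.g. a PSO-type solver with X fixed
        x = optimize_offloading(p)   # e.g. a GA with P fixed
        c = cost(p, x)
        history.append(c)
        if c < best[2]:
            best = (p, x, c)         # keep the best pair in case a heuristic step worsens it
        if history[-2] - c < tol:    # stop when a round no longer improves enough
            break
    return best[0], best[1], history


# Toy problem (hypothetical): continuous p, integer x in 0..5.
def toy_cost(p, x):
    return (p - x) ** 2 + (x - 3) ** 2


p_opt, x_opt, hist = alternate_optimize(
    toy_cost,
    optimize_power=lambda x: float(x),  # exact minimizer over p for fixed x
    optimize_offloading=lambda p: min(range(6), key=lambda x: toy_cost(p, x)),
    p0=0.0, x0=0)
print(p_opt, x_opt, hist)  # 2.0 2 [9.0, 5.0, 2.0, 1.0, 1.0]
```

In the toy run the loop stops at cost 1, although the global minimum is 0 at (3, 3): alternating optimization stops where neither step alone improves the cost, which is not necessarily the global optimum.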

4. Performance Evaluation

4.1. Parameter Settings

4.2. Experimental Comparison Scheme

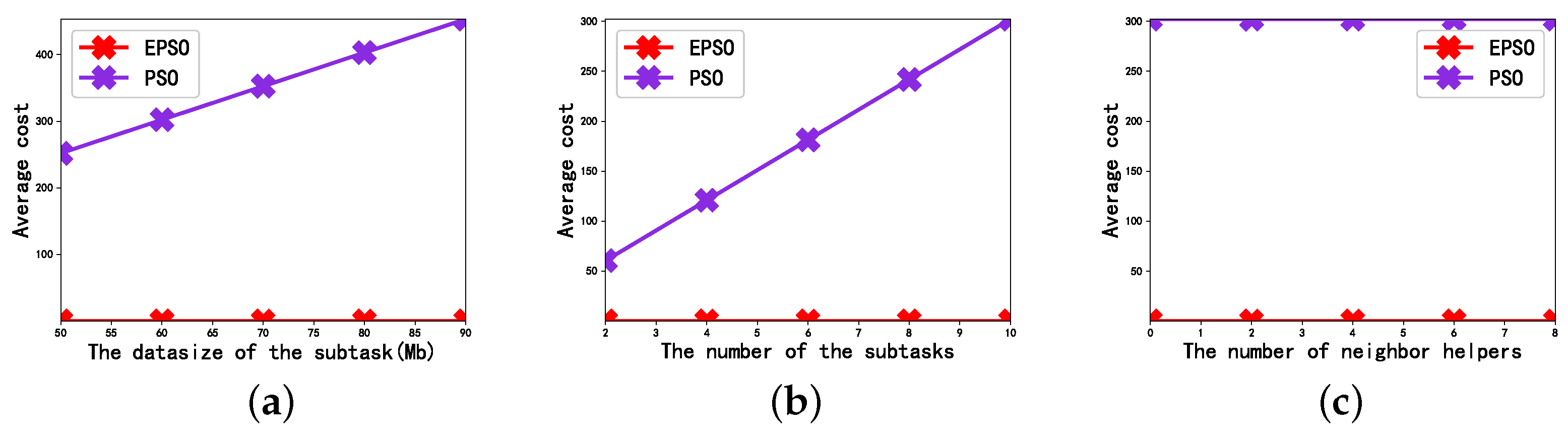

4.3. Performance Evaluation of EPSO Algorithm

4.4. Performance Evaluation of EPSO-GA Algorithm

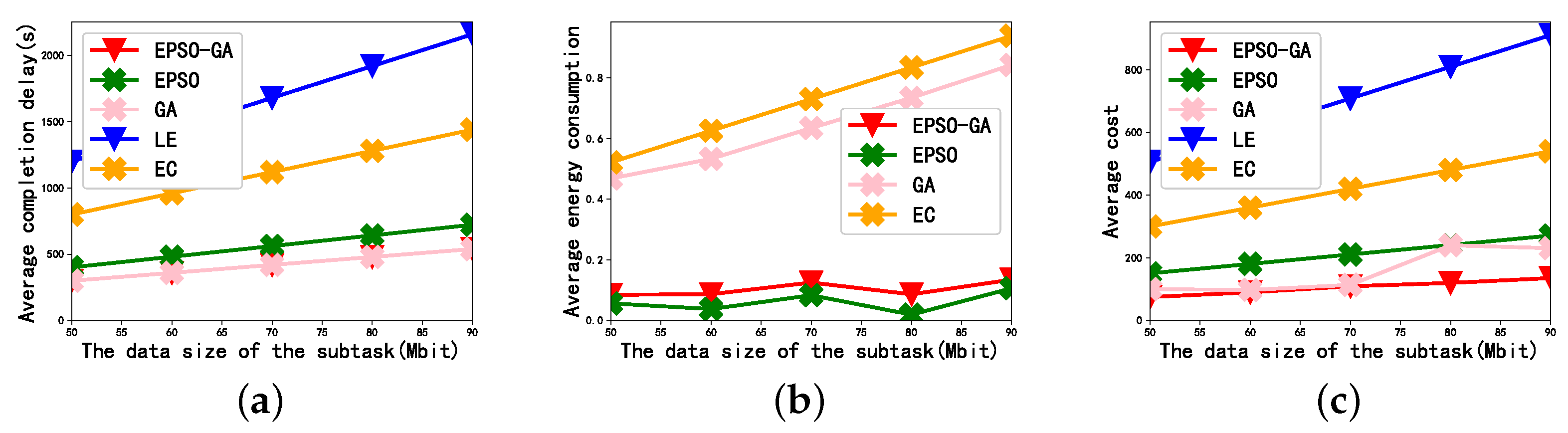

4.4.1. The Effect of Data Size

4.4.2. The Effect of the Number of Subtasks

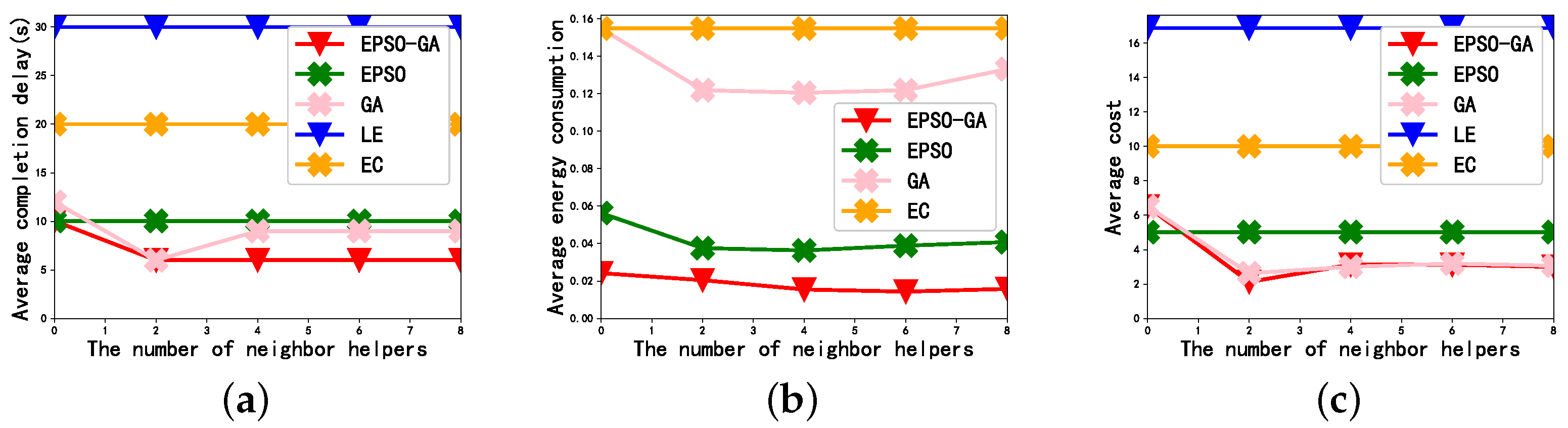

4.4.3. The Effect of the Number of Resource Helpers

4.4.4. The Effect of the Delay Weight Coefficient on the Average Cost

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Mao, Y.; Zhang, J.; Letaief, K.B. Dynamic computation offloading for mobile-edge computing with energy harvesting devices. IEEE J. Sel. Areas Commun. 2016, 34, 3590–3605.

2. Wang, Y.; Sheng, M.; Wang, X.; Wang, L.; Li, J. Mobile-edge computing: Partial computation offloading using dynamic voltage scaling. IEEE Trans. Commun. 2016, 64, 4268–4282.

3. Zhang, W.; Wen, Y.; Guan, K.; Kilper, D.; Luo, H.; Wu, D.O. Energy-optimal mobile cloud computing under stochastic wireless channel. IEEE Trans. Wirel. Commun. 2013, 12, 4569–4581.

4. You, C.; Huang, K.; Chae, H. Energy efficient mobile cloud computing powered by wireless energy transfer. IEEE J. Sel. Areas Commun. 2016, 34, 1757–1771.

5. Yoo, W.; Yang, W.; Chung, J.M. Energy consumption minimization of smart devices for delay-constrained task processing with edge computing. In Proceedings of the 2020 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 4–6 January 2020; pp. 1–3.

6. Bi, J.; Yuan, H.; Duanmu, S.; Zhou, M.; Abusorrah, A. Energy-optimized partial computation offloading in mobile-edge computing with genetic simulated-annealing-based particle swarm optimization. IEEE Internet Things J. 2020, 8, 3774–3785.

7. Ren, J.; Yu, G.; Cai, Y.; He, Y. Latency optimization for resource allocation in mobile-edge computation offloading. IEEE Trans. Wirel. Commun. 2018, 17, 5506–5519.

8. Yan, J.; Bi, S.; Zhang, Y.J.; Tao, M. Optimal task offloading and resource allocation in mobile-edge computing with inter-user task dependency. IEEE Trans. Wirel. Commun. 2019, 19, 235–250.

9. Ye, Y.; Shi, L.; Chu, X.; Hu, R.Q.; Lu, G. Resource allocation in backscatter-assisted wireless powered MEC networks with limited MEC computation capacity. IEEE Trans. Wirel. Commun. 2022, 21, 10678–10694.

10. Zhang, T.; Xu, Y.; Loo, J.; Yang, D.; Xiao, L. Joint computation and communication design for UAV-assisted mobile edge computing in IoT. IEEE Trans. Ind. Inform. 2019, 16, 5505–5516.

11. Cao, C.; Su, M.; Duan, S.; Dai, M.; Li, J.; Li, Y. QoS-Aware Joint Task Scheduling and Resource Allocation in Vehicular Edge Computing. Sensors 2022, 22, 9340.

12. Liao, Y.; Qiao, X.; Yu, Q.; Liu, Q. Intelligent dynamic service pricing strategy for multi-user vehicle-aided MEC networks. Future Gener. Comput. Syst. 2021, 114, 15–22.

13. Pu, L.; Chen, X.; Xu, J.; Fu, X. D2D fogging: An energy-efficient and incentive-aware task offloading framework via network-assisted D2D collaboration. IEEE J. Sel. Areas Commun. 2016, 34, 3887–3901.

14. Zhang, J.; Xia, W.; Yan, F.; Shen, L. Joint computation offloading and resource allocation optimization in heterogeneous networks with mobile edge computing. IEEE Access 2018, 6, 19324–19337.

15. Saleem, U.; Liu, Y.; Jangsher, S.; Li, Y.; Jiang, T. Mobility-aware joint task scheduling and resource allocation for cooperative mobile edge computing. IEEE Trans. Wirel. Commun. 2020, 20, 360–374.

16. Xing, H.; Liu, L.; Xu, J.; Nallanathan, A. Joint task assignment and resource allocation for D2D-enabled mobile-edge computing. IEEE Trans. Commun. 2019, 67, 4193–4207.

17. Wang, H.; Lin, Z.; Lv, T. Energy and delay minimization of partial computing offloading for D2D-assisted MEC systems. In Proceedings of the 2021 IEEE Wireless Communications and Networking Conference (WCNC), Nanjing, China, 29 March–1 April 2021; pp. 1–6.

18. Li, Y.; Xu, G.; Ge, J.; Liu, P.; Fu, X.; Jin, Z. Jointly optimizing helpers selection and resource allocation in D2D mobile edge computing. In Proceedings of the 2020 IEEE Wireless Communications and Networking Conference (WCNC), Seoul, Republic of Korea, 25–28 May 2020; pp. 1–6.

19. Qiu, S.; Zhao, J.; Lv, Y.; Dai, J.; Chen, F.; Wang, Y.; Li, A. Digital-Twin-Assisted Edge-Computing Resource Allocation Based on the Whale Optimization Algorithm. Sensors 2022, 22, 9546.

20. Lim, D.; Lee, W.; Kim, W.-T.; Joe, I. DRL-OS: A Deep Reinforcement Learning-Based Offloading Scheduler in Mobile Edge Computing. Sensors 2022, 22, 9212.

21. Zhao, S.; Yang, Y.; Yang, X.; Zhang, W.; Luo, X.; Qian, H. Online user association and computation offloading for fog-enabled D2D network. In Proceedings of the 2017 IEEE Fog World Congress (FWC), Santa Clara, CA, USA, 30 October–1 November 2017; pp. 1–6.

22. Hosseinalipour, S.; Nayak, A.; Dai, H. Power-aware allocation of graph jobs in geo-distributed cloud networks. IEEE Trans. Parallel Distrib. Syst. 2019, 31, 749–765.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yong, D.; Liu, R.; Jia, X.; Gu, Y. Joint Optimization of Multi-User Partial Offloading Strategy and Resource Allocation Strategy in D2D-Enabled MEC. Sensors 2023, 23, 2565. https://doi.org/10.3390/s23052565