Abstract

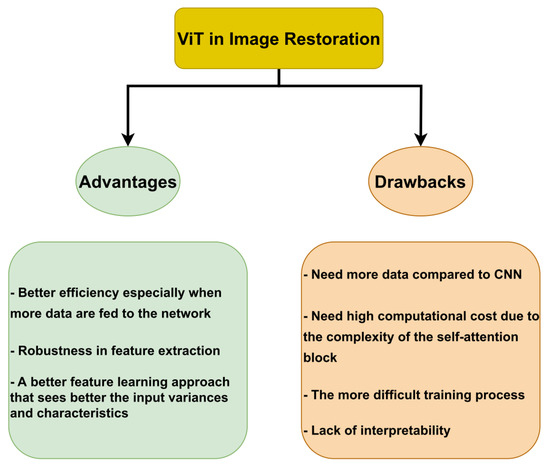

The Vision Transformer (ViT) architecture has been remarkably successful in image restoration. For a long time, Convolutional Neural Networks (CNN) predominated in most computer vision tasks. Now, both CNN and ViT are efficient approaches that demonstrate powerful capabilities to restore a better version of an image given in a low-quality format. In this study, the efficiency of ViT in image restoration is studied extensively. The ViT architectures are classified for every task of image restoration. Seven image restoration tasks are considered: Image Super-Resolution, Image Denoising, General Image Enhancement, JPEG Compression Artifact Reduction, Image Deblurring, Removing Adverse Weather Conditions, and Image Dehazing. The outcomes, advantages, limitations, and possible areas for future research are detailed. Overall, it is noted that incorporating ViT in new architectures for image restoration is becoming the rule. This is due to several advantages over CNN, such as better efficiency, especially when more data are fed to the network, robustness in feature extraction, and a feature learning approach that better captures the variances and characteristics of the input. Nevertheless, some drawbacks exist, such as the need for more data to show the benefits of ViT over CNN, the increased computational cost due to the complexity of the self-attention block, a more challenging training process, and the lack of interpretability. These drawbacks represent the future research directions that should be targeted to increase the efficiency of ViT in the image restoration domain.

1. Introduction

Image Restoration is an umbrella term incorporating many computer vision tasks. These tasks seek to generate a better version of an image that is retrieved in a lower-quality format. The seven leading tasks of image restoration are: Super-Resolution, Image Denoising, General Image Enhancement, JPEG Compression Artifact Reduction, Image Deblurring, Removing Adverse Weather Conditions, and Image Dehazing. All these tasks share many common characteristics. First, they are image generation tasks. This means that we generate new data that tries to mimic the authentic representation of the information captured inside the image. Second, they all try to remove a specific type of corruption applied to the image. This corruption may be noise, a poor-quality image sensor, a capturing imperfection, a weather condition that hides some information, etc. Third, all of them are increasingly based on deep learning techniques. This architectural change is due primarily to the effectiveness of deep learning compared to older techniques. Hence, any advancement in deep learning theory directly affects these tasks and brings new ideas for any image restoration researcher.

1.1. Techniques Used in Image Restoration

Since deep learning became popular in the computer vision field in 2012 [1], Convolutional Neural Networks (CNN) have been the default feature extractor used to learn patterns in the data. An overwhelming number of models and architectures have been designed and improved over the years to increase the learning capability of CNNs. Generic architectures, as well as task-specific architectures, were designed. Before 2020, it was the rule for image restoration researchers to customize a CNN-based model to improve on the state-of-the-art works for their task [2,3,4,5]. This approach is adopted in most computer vision tasks [6,7,8,9,10,11,12,13,14].

Since 2017, the Transformer architecture has made a significant breakthrough in the Natural Language Processing (NLP) domain. The self-attention mechanism and the new proposed architecture have proven to have a considerable ability to manage sequential data efficiently. Nevertheless, adoption in the computer vision domain was delayed until 2020, when the Vision Transformer (ViT) architecture was first introduced [15]. Up to now, ViT has proven efficient and competed very well with CNN. Here also, an overwhelming number of architectures inspired by ViT have been introduced with an attractive efficiency. Moreover, ViT has been demonstrated to solve many issues traditionally associated with CNN. Therefore, a debate between these two architectures’ advantages and drawbacks has emerged to decide where to go in future research directions.

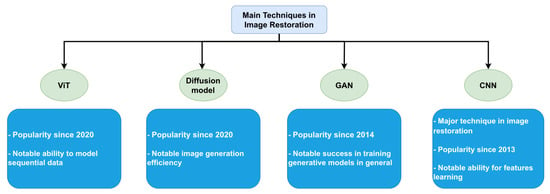

Many techniques have been developed for image restoration, including those based on CNNs and ViTs. However, CNNs have several limitations in learning a mapping from degraded images to their original counterparts, and the images they generate are often far from the originals. Other techniques address these challenges of CNN. They include Generative Adversarial Networks (GANs) [16] and the Diffusion model [17], as shown in Figure 1. GANs are a class of deep learning models in which two neural networks, a generator and a discriminator, are trained against each other to generate new, synthetic data similar to the real data. Despite the success of GANs in various tasks, their application in image restoration has often produced suboptimal results due to issues such as mode collapse, excessive smoothing, artifacts, and training instability. The Diffusion model, on the other hand, is a generative model that uses a diffusion process to generate new images by modeling the image formation process. However, while diffusion models have been used in image generation and denoising tasks, they may be less effective than other models at capturing accurate global features, and their high computational cost is a limitation that needs to be considered.

Figure 1.

The main techniques used in Image Restoration.

The current study aims to answer the question: what is the impact of ViT on the Image Restoration domain? The study is based on a review of around 70 papers. First, every research work is classified into one of the seven fields of image restoration. Then, a description of the state-of-the-art techniques in these seven fields is presented. Finally, the advantages, drawbacks, and future research directions are discussed.

1.2. Related Surveys

To our knowledge, no literature survey has addressed this problem. The existing surveys about ViT are more generic in scope and do not consider the image restoration domain’s peculiarities. For example, Khan et al. [18] explored the use of vision transformers in popular recognition tasks, generative modeling, multi-modal tasks, video processing, low-level vision, and three-dimensional analysis. Han et al. [19] undertook a generic review of ViT for different computer vision tasks and compared their advantages and drawbacks. Then, they classified the tasks into four classes: low-level vision, high/mid-level vision, backbone network, and video processing. They also discussed the application of vision transformers to real devices. Islam et al. [20] provided an overview of the best-performing modern vision Transformer methods and compared the strengths, weaknesses, and computational costs of vision Transformers and CNN methods on the most common benchmark datasets. Shamshad et al. [21] were more specific and focused on one domain. They presented a comprehensive review of the application of vision transformers in medical imaging, including medical image segmentation, classification, detection, clinical report generation, synthesis, registration, reconstruction, and other tasks. They also discussed unresolved problems in the architectural designs of vision transformers.

Among the surveys that focus only on image restoration tasks, none has studied ViT architectures. For example, Su et al. [22] tackled deep learning algorithms in general without focusing on ViT specifically. They presented a comparative study of deep learning techniques used in image restoration, including image super-resolution, dehazing, deblurring, and denoising. They also discussed the deep network structures involved in these tasks, including network architectures, skip or residual connections, and the receptive field in autoencoder mechanisms. They also presented an effective network to eliminate errors caused by multi-task training in the super-resolution task. In Table 1, the surveys related to our research are described based on two main characteristics. The first is the algorithm studied (CNN and/or ViT). The second is the area of the survey. We conclude that a specific survey that studies the effect of ViT in the Image Restoration domain is still lacking in the current state of the art.

Table 1.

Comparison between our survey and the related surveys in image restoration.

1.3. Main Contributions and Organization of the Survey

A gap is noted in these related surveys. A specific survey that analyses the impact of only ViT-based architectures on the image restoration domain is still needed, considering all of its peculiarities. The main contributions elaborated in this research are:

- The study lists the most important ViT-based architectures introduced in the image restoration domain, classified by each of the seven subtasks: Image Super-Resolution, Image Denoising, General Image Enhancement, JPEG Compression Artifact Reduction, Image Deblurring, Removing Adverse Weather Conditions, and Image Dehazing.

- It describes the impact of using ViT, its advantages, and drawbacks in relation to these image restoration tasks.

- It compares the efficiency metrics of the ViT-based architectures, such as the Peak Signal-to-Noise Ratio (PSNR) and the Structural Similarity Index Metric (SSIM), on the main benchmarks and datasets used in every task of image restoration.

- It discusses the most critical challenges facing ViT in image restoration and presents some solutions and future work.

This study is organized as follows. Section 2 introduces the ViT architecture and presents the keys to its success, including self-attention, sequential feature encoding, and feature positioning. Section 3 details the evaluation metrics used to assess the performance of image restoration algorithms. These evaluation metrics are used throughout the paper, so their definitions are worth describing from the beginning. Section 4 describes the state of the art of ViT-based architectures in each of the seven image restoration tasks. Section 5 presents a discussion of the advantages, drawbacks, and main challenges of ViT in image restoration. Finally, Section 6 concludes our work and describes the research problems to be addressed in future works.

2. Vision Transformer Model

In this section, we introduce ViT and the most important principles upon which it is built, including structure, self-attention, multi-headed self-attention, and the mathematical background behind ViT. The ViT was introduced by [15] in 2020. The ViT is a deep neural network architecture for image recognition tasks based on the Transformer architecture initially developed for natural language processing tasks. The main idea behind ViT is to treat an image as a sequence of tokens (typically, image patches). Then, the transformer architecture is used to process this sequence. The transformer architecture, on which ViT is based, has been adapted to many tasks and is effective in many of them, such as image restoration and object detection [23,24].

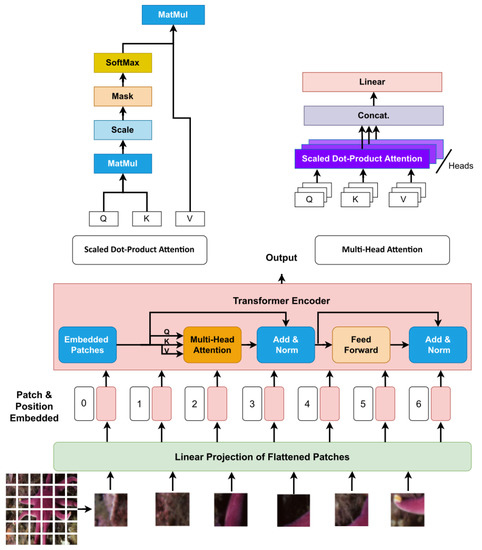

The ViT is based on the Transformer architecture. Figure 2 shows the main stages of the ViT architecture. First, the image is tokenized and embedded by dividing it into a grid of non-overlapping patches, flattening each patch, and mapping it to a high-dimensional space through a linear transformation followed by normalization. This process, called tokenization and embedding, allows the model to learn both global and local information from an image.

Figure 2.

The general form of the ViT architecture.
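The tokenization and embedding step can be illustrated with a minimal sketch in plain Python. The function names `patchify` and `linear_embed`, the nested-list image layout, and the toy weights are assumptions made for illustration; the normalization step is omitted, and real implementations use tensor libraries with learned weights.

```python
def patchify(image, patch_size):
    """Split an H x W x C image (nested lists) into flattened, non-overlapping patches."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image size must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            flat = []
            for row in range(top, top + patch_size):
                for col in range(left, left + patch_size):
                    flat.extend(image[row][col])  # append every channel of one pixel
            patches.append(flat)
    return patches


def linear_embed(patches, weight, bias):
    """Project each flattened patch to the embedding dimension: token = patch @ W + b."""
    return [
        [sum(p * weight[i][j] for i, p in enumerate(patch)) + bias[j]
         for j in range(len(bias))]
        for patch in patches
    ]


# Toy example: a 4 x 4 single-channel image split into four 2 x 2 patches.
image = [[[r * 4 + c] for c in range(4)] for r in range(4)]
patches = patchify(image, 2)
print(patches[0])  # [0, 1, 4, 5]

# Hypothetical 4 -> 2 projection; in a trained ViT these weights are learned.
toy_weight = [[1, 0], [0, 1], [1, 0], [0, 1]]
print(linear_embed(patches, toy_weight, [0, 0])[0])  # [4, 6]
```

Each patch becomes one token, so the sequence length equals the number of patches and shrinks quadratically as the patch size grows.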

The transformer architecture is designed to process any sequence, but it does not explicitly consider each token’s position in the sequence. To address this limitation, the ViT architecture uses pre-defined positional embeddings. These positional embeddings are additional vectors that encode the position of each token in the sequence, and they are added to the token embeddings before they are passed through the transformer layers. This mechanism allows the model to capture the relative position of the tokens and to extract spatial information from the image.
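As a minimal sketch of this mechanism, the snippet below builds a fixed positional table and adds it to the token embeddings. The sinusoidal table follows the scheme of the original Transformer and is only one possible choice, assumed here for illustration; many ViT implementations learn the table instead. The function names are hypothetical.

```python
import math


def sinusoidal_positions(num_tokens, dim):
    """Fixed positional table (assumed scheme): sine on even indices, cosine on odd ones."""
    table = []
    for pos in range(num_tokens):
        row = []
        for i in range(dim):
            angle = pos / (10000 ** (2 * (i // 2) / dim))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def add_positions(tokens, positions):
    """Element-wise sum of token embeddings and their positional embeddings."""
    return [[t + p for t, p in zip(tok, pos)] for tok, pos in zip(tokens, positions)]


# Two identical tokens become distinguishable once their positions are added.
tokens = [[1.0, 1.0, 1.0, 1.0], [1.0, 1.0, 1.0, 1.0]]
encoded = add_positions(tokens, sinusoidal_positions(2, 4))
print(encoded[0])  # [1.0, 2.0, 1.0, 2.0]
print(encoded[0] != encoded[1])  # True
```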

The core of the ViT architecture lies in the Multi-head Self-Attention (MSA). This mechanism allows the model to simultaneously attend to different parts of the image. It is composed of several different “heads”, each of which computes attention independently. These attention heads can attend to different regions of the image and produce different representations, which are then concatenated to form a final image representation. This allows the ViT to capture more complex relationships between the input elements. However, it also increases the complexity and computational cost of the model, as it requires more attention heads and more processing to combine the outputs from all the heads. MSA can be expressed as follows:

$$\mathrm{MSA}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\,W^{O} \tag{1}$$

where $\mathrm{head}_i = \mathrm{Attention}(QW_i^{Q}, KW_i^{K}, VW_i^{V})$, $h$ is the number of heads, and $W^{O}$ is the output projection matrix. MSA depends on the self-attention mechanism introduced by the authors of [25]. The basic idea of self-attention is to estimate how closely one element relates to the other elements in a sequence. For digital images, the image is divided into several patches, each patch is converted into a token, and self-attention estimates the relevance of each token to all the other tokens.

The self-attention mechanism is the backbone of transformers, which explicitly model the interactions and connections between all elements of a sequence in prediction tasks. The self-attention layer also collects global information and features from the entire input sequence, distinguishing it from CNN. It is for this reason that transformers have a larger model capacity. To understand more about the mechanism of self-attention, we may denote a sequence of $n$ elements $(x_1, x_2, \ldots, x_n)$ by $X \in \mathbb{R}^{n \times d}$, where $d$ is the embedding dimension used to represent each element. The purpose of the attention mechanism is to estimate the interactions and connections between all $n$ elements by encoding each element and then capturing global information and features. In order to implement the attention mechanism, three learnable weight matrices must first be defined: Queries $W^{Q} \in \mathbb{R}^{d \times d_q}$, Keys $W^{K} \in \mathbb{R}^{d \times d_k}$, and Values $W^{V} \in \mathbb{R}^{d \times d_v}$, where $d_q = d_k$. The input sequence $X$ is projected by these weight matrices to obtain $Q = XW^{Q}$, $K = XW^{K}$, and $V = XW^{V}$. The output $Z \in \mathbb{R}^{n \times d_v}$ of the attention layer is given by Equation (2):

$$Z = \mathrm{softmax}\left(\frac{QK^{T}}{\sqrt{d_q}}\right)V \tag{2}$$

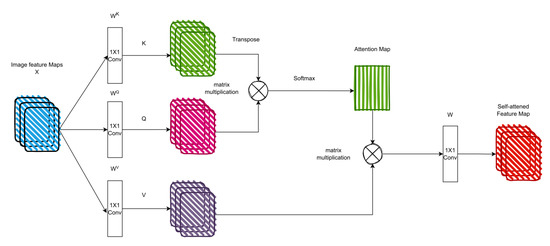

For a given element in the sequence, the attention mechanism computes the dot product of its Q (query) with all K (keys). The SoftMax operator then normalizes these scores into attention weights. Finally, the attention weights are multiplied by the V (value) matrix, so that each element in the sequence becomes a weighted sum of all elements in the entire sequence. Figure 3 shows an example of the self-attention model.

Figure 3.

The self-attention model used in ViT.
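The self-attention computation described in this section can be sketched in plain Python as follows. This is a single-head illustration under stated assumptions: the helper names are hypothetical, the scores are scaled by the square root of the key dimension as in the standard formulation, and the projection matrices are toy values rather than learned weights.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention; returns (output, attention weights)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]           # transpose of K
    scores = matmul(q, k_t)                        # query-key dot products
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights             # weighted sum of the values


# Three tokens of dimension 2 with identity projections (toy values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
output, weights = self_attention(tokens, identity, identity, identity)
print([round(sum(row), 6) for row in weights])  # [1.0, 1.0, 1.0]
```

Because every row of the weight matrix spans all tokens, each output token mixes information from the whole sequence. This is the global receptive field that distinguishes self-attention from a local convolution, at the price of a cost that grows quadratically with the number of tokens.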

The main advantage of the self-attention mechanism compared to the convolution mechanism is that the filter values are calculated dynamically from the input, whereas the filters of a convolution are static and remain the same for any input. In addition, self-attention differs from standard convolution in its invariance to permutations and to changes in the number of input points. As a result, it can process irregular inputs. Based on the literature, self-attention with positional encodings appears theoretically more flexible in extracting local features than convolutional models [26]. Cordonnier et al. [27] investigated the connections between self-attention and convolution operations. Their results show that self-attention with sufficient parameters is a very flexible and more general operation that can extract local and global features. Furthermore, based on previous studies and research findings on various computer vision tasks, self-attention can learn both local and global features. It also provides adaptive learning of kernel weights, as in research on deformable convolutions [28].

The ViT architecture requires a large amount of training data to achieve optimal performance. This is a common challenge encountered with transformer models. To address this limitation, a two-stage training approach is often employed. In the first stage, supervised or self-supervised [24,29] pre-training is performed on a large dataset or a combination of several datasets [30]. In the second stage, the pre-trained weights are used to fine-tune the model on small or medium-sized datasets for specific tasks such as object detection, classification, and image restoration. This approach has been demonstrated to be effective in previous research, which showed that the accuracy of the Vision Transformer model was 13% lower when it was trained only on the ImageNet dataset than when it was pre-trained on the JFT dataset containing 300 million images. This highlights the importance of pre-training transformer models for vision and language on large-scale datasets.

Obtaining labeled datasets for training AI models can be a significant challenge due to the high cost and difficulties associated with the process. To address this, self-supervised learning (SSL) has emerged as a promising alternative to traditional supervised learning. SSL enables training models with a very large number of parameters, up to a trillion, as demonstrated in [31]. The basic principle of SSL is to train a model on unlabeled datasets by applying various transformations to the images, such as slight adversarial changes or replacing one object with another in the same scene, without altering the semantics of the base class. This allows the model to learn from the data without needing manual labeling. For a comprehensive survey of SSL, readers can refer to [32,33].

3. Evaluation Metrics

This section introduces the most widely used image quality measurement methods in image restoration. In addition, we present the most recent methods for measuring image quality based on ViT. The quality of digital images can be defined based on the measurement method. Measurement methods depend on viewers’ perceptions and visual attributes. Image Quality Assessment (IQA) methods are classified into subjective and objective methods [34]. Subjective methods rely on human image quality evaluators, but they cost a lot of time, effort, and money on large datasets. Therefore, objective methods are more appropriate in image restoration tasks, according to [35]. Objective methods need the ground-truth image and the predicted image. PSNR is one of the most widely used image quality measures. It is the ratio between the maximum possible power of the signal and the power of the noise. In image restoration, the PSNR is computed from the ratio between the squared maximum pixel value and the Mean Square Error (MSE) between the ground-truth image and the predicted image. The PSNR is defined as:

$$\mathrm{PSNR} = 10 \log_{10}\left(\frac{MAX^{2}}{\mathrm{MSE}}\right) \tag{3}$$

where $MAX$ is the maximum pixel value, i.e., 255 for 8-bit color depth, and $\mathrm{MSE}$ is defined as:

$$\mathrm{MSE} = \frac{1}{N}\sum_{i=1}^{N}\left(x_i - y_i\right)^{2} \tag{4}$$

where $x$ is the ground truth image, $y$ is the predicted image, and $N$ is the number of pixels. The PSNR approach can be misleading because the predicted image may not be perceptually similar to a ground truth image [36,37]. However, PSNR is still reported in nearly all image restoration research, and research results are also compared using it. Human perception is the best measure of image quality for its ability to extract structural information [38]. However, ensuring human visual validation of the data remains very expensive, especially with large datasets. An image quality metric that measures the structural similarity between ground truth images and predicted images by comparing luminance, contrast, and structural information is called SSIM [39]:

$$\mathrm{SSIM}(x, y) = \frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^{2} + \mu_y^{2} + C_1)(\sigma_x^{2} + \sigma_y^{2} + C_2)} \tag{5}$$

where $\mu_x$ is the average value of the ground truth image, $\mu_y$ is the average value of the predicted image, $\sigma_x$ is the standard deviation of the ground truth image, $\sigma_y$ is the standard deviation of the predicted image, and $\sigma_{xy}$ is the covariance. $C_1$ and $C_2$ are two constants that avoid division by zero, with $C_1 = (k_1 L)^{2}$ and $C_2 = (k_2 L)^{2}$, where $L$ is the dynamic range of the pixel values and $k_1$, $k_2$ are small constants.
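The three metrics of this section can be sketched in plain Python for grayscale images stored as nested lists. The function names and the default constants `k1 = 0.01` and `k2 = 0.03` are assumptions taken from the common SSIM formulation. The SSIM shown here is computed once over the whole image, which is a simplification: practical implementations average the index over local windows.

```python
import math


def mse(reference, predicted):
    """Mean squared error between two equally sized grayscale images."""
    diffs = [(r - p) ** 2
             for row_r, row_p in zip(reference, predicted)
             for r, p in zip(row_r, row_p)]
    return sum(diffs) / len(diffs)


def psnr(reference, predicted, max_value=255.0):
    """Peak signal-to-noise ratio in dB; infinite when the images are identical."""
    error = mse(reference, predicted)
    if error == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / error)


def global_ssim(reference, predicted, max_value=255.0, k1=0.01, k2=0.03):
    """SSIM over the whole image as one window (simplified, no sliding window)."""
    x = [v for row in reference for v in row]
    y = [v for row in predicted for v in row]
    n = len(x)
    mu_x, mu_y = sum(x) / n, sum(y) / n
    var_x = sum((v - mu_x) ** 2 for v in x) / n
    var_y = sum((v - mu_y) ** 2 for v in y) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    c1, c2 = (k1 * max_value) ** 2, (k2 * max_value) ** 2
    return ((2 * mu_x * mu_y + c1) * (2 * cov + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2))


ground_truth = [[10.0, 20.0], [30.0, 40.0]]
prediction = [[11.0, 21.0], [31.0, 41.0]]  # every pixel is off by one gray level
print(round(psnr(ground_truth, prediction), 2))  # 48.13
```

A uniform error of one gray level gives an MSE of 1, so the PSNR reduces to $20\log_{10}(255) \approx 48.13$ dB.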

In image restoration research, two of the most widely used image quality metrics are SSIM and PSNR. SSIM, in particular, is based on the human perception of structural information in images and is commonly used for generic images, as discussed in [40]. However, when applied to medical images, where brightness and contrast may not be consistent, the SSIM metric can be unstable, as reported in [41]. To address this issue, the authors in [42] proposed a model for measuring the perceptual quality of images. They tested this model using the Restormer model [43] in various noise removal and adverse weather conditions. They then applied an object detection model to the same images before and after using the Restormer model. They found that while SSIM and PSNR results are often unstable, the results from the object detection model were more consistent. This led the authors to propose a Grad-CAM model to measure image quality, which bridges the gap between human perception and machine evaluation [44].

Recently, Cheon et al. [45] proposed a new Image Quality Transformer (IQT) which utilizes the transformer architecture. IQT extracts perceptual features from both ground-truth and predicted images using a CNN backbone. These features are then fed to the transformer encoder and decoder, which compares the ground-truth images and the predicted images. The transformer’s output is then passed to a prediction head, which predicts the quality of the images. In further research, Conde et al. [46] replaced the IQT encoder-decoder with the Conformer architecture, which utilizes convolution layers from the Inception-ResNet-v2 model [47] along with attention operations to extract both global and local features.

4. Image Restoration Tasks

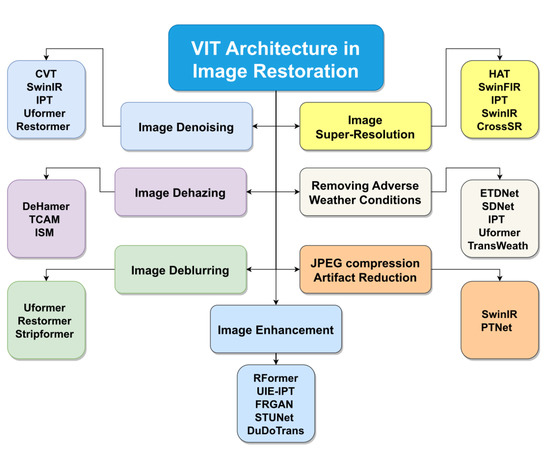

In this section, we introduce the transformer-based image restoration tasks and present a comparative study of the vision transformer models in each sub-task. Figure 4 presents a taxonomy of these tasks with the most common transformer models for each of them.

Figure 4.

The most popular image restoration techniques based on ViT.

4.1. Image Super-Resolution

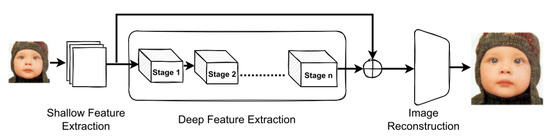

Super-resolution (SR) is a technique for reconstructing high-resolution images from low-resolution input images. SR has been widely applied in image restoration tasks due to its value in various applications and its ability to overcome the limitations imposed by imaging systems [48]. SR can be broadly classified into two categories: multi-image super-resolution (MISR) and single-image super-resolution (SISR). MISR generates a single high-resolution image from multiple low-resolution images, while SISR generates a high-resolution image from a single low-resolution input. ViT-based SR models, such as HAT [49], SwinIR [50], and SwinFIR [51], have gained widespread adoption in image restoration and are depicted in Figure 5 as a general block diagram.

Figure 5.

The block diagram of the best ViT-based super-resolution models.

In most applications, obtaining multiple images of the same scene is complex and costly. Therefore, using only a single image is a suitable and effective solution for many super-resolution applications. In most research papers, low-resolution images are obtained by applying a degradation model to high-resolution images, which involves blurring followed by downsampling. Traditional methods enhance low-resolution images through interpolation and deblurring and rely on iterative optimization frameworks. Yang et al. [52] conducted a comparison of ten super-resolution papers that utilize iterative optimization techniques and sparse representation, including [53,54,55].

Traditional methods for image restoration are often ineffective and time-consuming. In contrast, deep neural networks can learn from large amounts of training data rather than relying on complex statistical models. With the advancement of computing devices and GPUs, neural networks have become more prevalent. In recent years, the vision transformer has emerged as a strong contender in the field of computer vision, including in the area of image SR. According to the results of many research papers, vision transformers have shown high accuracy and efficiency in SR techniques. In this survey, we classify super-resolution transformer networks based on the input size, the type of images, and the type of task. A low-resolution image can be expressed as

$$I_{LR} = (I_{HR} \otimes k)\downarrow_{s} + n \tag{6}$$

where $I_{HR}$ is a high-resolution image, $k$ is the blur kernel, $\downarrow_{s}$ denotes downsampling by the scale factor $s$, and $n$ is random noise. SR models based on transformers can be divided based on the nature of the images. Training on generic images is the most common and comprehensive approach in most super-resolution applications. One of the most popular models is SwinIR [50]. SwinIR is an image restoration model that handles several tasks depending on how it is trained. It is inspired by the Swin Transformer (v1) [56] used in image classification. SwinIR starts with a convolutional layer, called shallow feature extraction. In the middle, the deep feature extraction part consists of 36 consecutive Swin blocks divided into six stages of 6 blocks each. Finally, the reconstruction layer takes a different form depending on the task; in super-resolution, it is an upsampling layer that enlarges the image dimensions. Because of the high efficiency of the Swin model in extracting global and local features, it has also been used in many super-resolution models such as SWCGAN [57], SwinFIR [51] and 3DT-Net [58].
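The degradation model used to synthesize low-resolution training images (blurring, then downsampling, then adding noise) can be sketched in plain Python as follows. The 3×3 box blur, the strided subsampling, and the Gaussian noise are assumptions chosen for illustration; the surveyed papers use their own kernels and noise models, and all function names are hypothetical.

```python
import random


def box_blur(image, radius=1):
    """Blur a 2D grayscale image with a (2r+1) x (2r+1) mean filter, clamping at borders."""
    height, width = len(image), len(image[0])
    blurred = []
    for r in range(height):
        row = []
        for c in range(width):
            window = [
                image[min(max(r + dr, 0), height - 1)][min(max(c + dc, 0), width - 1)]
                for dr in range(-radius, radius + 1)
                for dc in range(-radius, radius + 1)
            ]
            row.append(sum(window) / len(window))
        blurred.append(row)
    return blurred


def downsample(image, scale):
    """Keep every `scale`-th pixel along both axes."""
    return [row[::scale] for row in image[::scale]]


def degrade(high_res, scale=2, noise_std=0.0, seed=0):
    """Low-resolution image = downsample(blur(high_res)) + Gaussian noise."""
    rng = random.Random(seed)
    low_res = downsample(box_blur(high_res), scale)
    return [[v + rng.gauss(0.0, noise_std) for v in row] for row in low_res]


high_res = [[float(r * 8 + c) for c in range(8)] for r in range(8)]
low_res = degrade(high_res, scale=2, noise_std=1.0)
print(len(low_res), len(low_res[0]))  # 4 4
```

Training pairs for SISR are typically built this way: the original image serves as the target and its degraded copy as the network input.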

The HAT [49] model combines self-attention and channel attention, which gives it a high ability to aggregate features. HAT also proposes an overlapping cross-attention module that collects information across windows in a new and highly efficient way. Based on the results shown in Table 2, Table 3 and Table 4, HAT achieves the highest PSNR and SSIM scores across all scales. The SwinFIR model is a recent variation of the SwinIR model that utilizes Fast Fourier Convolution (FFC) components to extract global information suitable for the task of super-resolution. This is achieved by combining global and local features extracted by the FFC and residual modules.

Table 2.

Generic Image Super-Resolution-Based on ViT for scale ×2. Best records are emphasized in bold font.

Table 3.

Generic Image Super-Resolution-Based on ViT for scale ×3. Best records are emphasized in bold font.

Table 4.

Generic Image Super-Resolution-Based on ViT for scale ×4. Best records are emphasized in bold font.

Recently, authors in [65] proposed the first transformer-based light field super-resolution (LFSR) processing model, called SA-LSA. The model divides each light field into a set of image sequences and utilizes a combination of convolutional layers and a self-attention network to reinforce non-local spatial angular dependencies in each sequence. Table 5 compares SA-LSA with other super-resolution models on light field images.

Table 5.

Light Field Super-Resolution (LFSR). The best records are in bold font.

SWCGAN uses a Swin-inspired shifted-window self-attention mechanism within a GAN model to combine the advantages of Swin Transformers with those of convolutional layers. SWCGAN has been applied to remote-sensing images. Table 6 compares super-resolution models on remote sensing images.

Table 6.

Image Super-Resolution based on ViT in the Remote Sensing field.

Transformers have also been applied to the super-resolution of medical images, such as in the case of the ASFT model [68]. ASFT consists of three branches, two of which transmit similar features from MRI slices, and the third branch builds the super-resolution slice. The performance of ASFT is compared with other super-resolution models on MRI medical images in Table 7.

Table 7.

MRI Images Super-Resolution. The best records are in bold font.

The 3DT-Net architecture is a transformer-based approach that leverages the spatial information in hyperspectral images. It utilizes multiple layers of the Swin Transformer in place of 2D-CNN layers and employs 3D convolutional layers to take advantage of the spatial-spectral structure of the data. This architecture has been applied to hyperspectral image processing, which inherently possesses multi-dimensional characteristics, and it is effective in this task. The performance of 3DT-Net is compared against other state-of-the-art models on hyperspectral images in Table 8.

Table 8.

Hyperspectral Image Super-resolution ×4. Best records are in bold font.

Table 9 presents a collection of various super-resolution models that are not evaluated on a common standardized dataset. The authors in [73] propose a texture transformer network for super-resolution, called TTSR. This model uses reference images to create a super-resolution image from low-resolution images and reference images. The VGG model [74] extracts features from the reference images. Additionally, a self-attention-based transformer model is proposed for extracting and transferring texture features from reference and low-resolution images.

Table 9.

Different Models of Image Super-Resolution for Different Datasets.

A transformer-based super-resolution architecture, called CTCNet, was proposed by Gao et al. [75] for the task of face image super-resolution. CTCNet is composed of an encoder-decoder architecture and a novel global-local feature extraction module that can extract fine-grained facial details from low-resolution images. The proposed architecture demonstrated promising results and potential for the task of face image super-resolution. BN-CSNT [76] is a network that combines channel splitting and the Swin transformer to extract context and spatial information. Additionally, it includes a feature fusion module with an attention mechanism, and it has been applied to thermal images to address the super-resolution problem. One of the main advantages of transformers in super-resolution is their adaptability to different types of images, similar to CNNs.

4.2. Image Denoising

Noise reduction, or denoising, is crucial in image processing and restoration. Removing noise from images is often used as a preprocessing step for many computer vision tasks, and it is also used to evaluate optimization methods and image prior models from a Bayesian perspective [77]. There are various approaches to reducing noise, including traditional methods such as BM3D [78], which enhances image contrast by assembling blocks of similar images from 3D images. There are also machine learning-based methods, and in [79], there is a comprehensive study on the use of machine learning for noise reduction in images. The wide range of noise reduction models is due to their widespread use in signal processing. In recent years, deep learning methods have been dominant in terms of perceptual efficiency and quality for noise reduction. For an overview of deep learning models based on CNNs for noise reduction, see [80]. In this section, we will focus on vision transformer-based noise reduction algorithms. Noisy images production can be expressed mathematically as

$$y = x + n$$

where $y$ is a noisy image, $x$ is a clean image, and $n$ is the random noise. Noise removal algorithms based on deep learning are divided into two methods.
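The additive model above, in which a noisy image is the sum of a clean image and random noise, can be illustrated with a minimal sketch. It assumes zero-mean Gaussian noise and pixel values on a [0, 255] scale; the function names `add_gaussian_noise` and `psnr` and the random stand-in image are illustrative choices, not part of any surveyed method.

```python
import numpy as np

def add_gaussian_noise(clean, sigma, rng):
    """Return clean + n with n ~ N(0, sigma^2) (assumed noise distribution)."""
    noise = rng.normal(0.0, sigma, size=clean.shape)
    return clean + noise

def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB for images on a [0, peak] scale."""
    mse = np.mean((reference - estimate) ** 2)
    return float("inf") if mse == 0 else 10.0 * np.log10(peak ** 2 / mse)

rng = np.random.default_rng(0)
clean = rng.uniform(0.0, 255.0, size=(64, 64))  # random stand-in for a clean image
for sigma in (15, 25, 50):
    noisy = add_gaussian_noise(clean, sigma, rng)
    print(f"sigma={sigma}: PSNR of the noisy input = {psnr(clean, noisy):.2f} dB")
```

The PSNR of the unprocessed noisy input drops as the noise level grows, which is the baseline a denoiser has to improve on.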

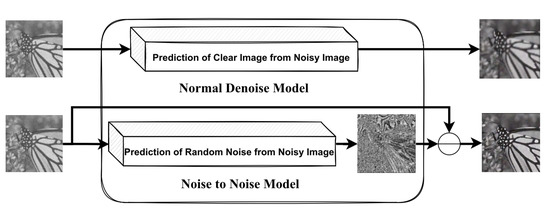

There are two main approaches for removing noise from images using ViT, as shown in Figure 6. The first approach, known as “noise-to-noise,” involves using deep learning networks to estimate the noise in an image and then subtracting the estimated noise from the noisy image to generate a noise-free image. The second approach involves using deep learning networks to estimate noise-free images from noisy images directly. For example, Prayuda et al. [81] proposed a convolutional vision transformer (CVT) for noise removal using the noise-to-noise approach, which employs residual learning to reduce noise from the noisy image by estimating the relationship between the noisy image and its noise map. Several previous research studies, including SwinIR, Uformer, Restormer, and IPT, have used a single encoder for all image restoration tasks but have utilized multiple decoders or reconstruction layers depending on the specific task.

Figure 6.

The main method for removing noise from images using transformer-based approaches. The top image shows a traditional technique that focuses on restoring clear image details from a noisy image. The bottom image depicts a more efficient method that estimates the random noise present in the noisy image and then subtracts this estimated noise from the image to produce a noise-free result.

Liu et al. [82] have proposed a network called DnT for unsupervised noise removal from images. DnT combines a CNN and a transformer to estimate clean images from noisy images, using a loss function computed on pairs of independent noisy images generated from the input images. This training strategy, known as R2R [83], effectively removes noise from images. The authors of [84] propose an efficient model called TECDNet, which uses a transformer for data encoding and a convolutional network for the decoder; the convolutional network also helps to reduce computational complexity. Another transformer-based model for noise removal is TC-Net, which was introduced by Xue et al. [85]. This network consists of extra skip-connections, window multi-head self-attention, a convolution-based feed-forward network, and a deep residual shrinkage network. The components of TC-Net work together to integrate features between layers, reduce computational complexity, and extract local features. The Swin transformer is also frequently used in various configurations for noise removal in image restoration tasks due to its ability to effectively extract important features from input images and its adaptability to different structures. Table 10 presents a comparison of various ViT models for the task of denoising in both generic and medical images. It can be observed that the performance of the models, as measured by the PSNR and SSIM metrics, decreases as the noise level increases.

Table 10.

Image denoising.

Fan et al. [87] used the Swin transformer as the basic block inside the layers of a U-Net model, called SUNet. Li et al. [88] collected a real dataset of noisy polarized color images, together with images of the same scenes taken with a conventional camera that serve as ground truth. They then proposed a hybrid transformer model based on the attention mechanism, called Pocoformer, to remove the noise from the polarized color images. The authors of [89] proposed a model to improve image quality and reduce noise in Low-Dose Computed Tomography (LDCT). Their model, called TransCT, decomposes distorted images into High-Frequency (HF) and Low-Frequency (LF) components. Several convolutional layers extract content and texture features from LF and texture features from HF. The extracted features are then fed into a modified transformer with three encoder and decoder blocks to obtain refined features. Finally, the refined features are combined to restore high-quality LDCT images. LDCT images are widely used in diagnosing diseases. However, LDCT images contain more noise than normal-dose CT images. Therefore, the authors of [90] proposed a convolution-free Token-to-Token (T2T) transformer model to remove noise from LDCT images. Luthra et al. [91] proposed an edge-enhancement transformer called Eformer, which uses a Sobel filter. In addition, self-attention over non-overlapping shifted windows is used to reduce computational costs, and residual learning is used to remove the noise in LDCT images.
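The Sobel filter mentioned above for edge enhancement can be sketched as follows. This is only the classical Sobel operator on a single-channel image, written with NumPy; it is not the Eformer architecture, and the helper names `filter3x3` and `sobel_magnitude` are illustrative.

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T

def filter3x3(image, kernel):
    """Valid-mode 3x3 cross-correlation built from shifted slices."""
    h, w = image.shape
    out = np.zeros((h - 2, w - 2))
    for i in range(3):
        for j in range(3):
            out += kernel[i, j] * image[i:i + h - 2, j:j + w - 2]
    return out

def sobel_magnitude(image):
    """Gradient magnitude from horizontal and vertical Sobel responses."""
    gx = filter3x3(image, SOBEL_X)
    gy = filter3x3(image, SOBEL_Y)
    return np.hypot(gx, gy)

image = np.zeros((8, 8))
image[:, 4:] = 1.0               # vertical step edge between columns 3 and 4
edges = sobel_magnitude(image)   # responds only in the columns next to the step
print(edges)
```

An edge map of this kind can be supplied to a network as an additional input or feature, so that fine structures are less likely to be smoothed away during denoising.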

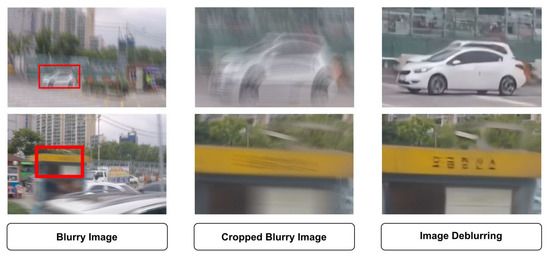

4.3. Image Deblurring

Blurry images can be caused by various factors, such as the random movement of objects, the movement of the camera itself, and physical limitations, and they are common in many image capture devices [92]. This makes the problem of blurry images challenging to describe and solve. It is not feasible to develop a single mathematical formula that accurately captures the blur in an image, as it depends on a range of variables. In the past, various approaches have been proposed for deblurring images, including traditional methods. Wang et al. [93] made a comprehensive review of the traditional methods used in common imaging and divided them into five frameworks according to their characteristics. Lay et al. [94] evaluated 13 single-image deblurring algorithms. Zhang et al. [95] presented a recent study on deep learning and CNN methods in terms of structure, loss function, and various applications. This paper focuses on transformer-based deep learning models for deblurring. Figure 7 presents some examples of blurry image processing. The leftmost image is a full, blurry image, the middle image is a cropped version of the blurry image, and the rightmost image is the reconstruction of the blurry image using the model.

Figure 7.

Some examples of blurry images processing.

Wang et al. [86] introduced a transformer-based network called Uformer, which has a hierarchical U-Net architecture. Uformer is based on two main designs. First, the locally enhanced window (LeWin) block applies non-overlapping window-based self-attention instead of global self-attention, which significantly reduces the computational cost on high-resolution feature maps. Second, a learnable multi-scale restoration modulator adjusts the spatial features at multiple layers. Zamir et al. [43] introduced the Restoration Transformer (Restormer). Transformers achieve high restoration accuracy but suffer from significant computational complexity. Restormer is a practical image restoration transformer that models global features and can be applied to large images. Its core component is the multi-Dconv head ‘transposed’ attention (MDTA) block. MDTA differs from vanilla multi-head self-attention [25] in that it computes the covariance between channels, rather than between spatial positions, to obtain the attention map. An advantage of MDTA is that it models global relationships between pixels while enriching the local context used for feature mapping. Table 11 lists various image deblurring models that are based on the ViT architecture. It is worth noting that all the datasets used in this comparison are real-world data and not synthetic.

Table 11.

Image deblurring.
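The channel-wise ("transposed") attention idea behind the MDTA block of Restormer, discussed above, can be illustrated with a simplified single-head sketch. It assumes plain point-wise linear projections and omits the depth-wise convolutions and multi-head split of the original design; the function name `transposed_attention` and all shapes are illustrative assumptions.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def transposed_attention(x, wq, wk, wv, temperature=1.0):
    """Single-head channel attention (simplified sketch, not the full MDTA block).

    x: (C, H, W) feature map; wq, wk, wv: (C, C) point-wise projections.
    The attention map is C x C, so its size does not grow with H * W.
    """
    c, h, w = x.shape
    tokens = x.reshape(c, h * w)                       # (C, HW)
    q, k, v = wq @ tokens, wk @ tokens, wv @ tokens
    # L2-normalise every channel along the spatial axis before the dot product
    q = q / (np.linalg.norm(q, axis=1, keepdims=True) + 1e-8)
    k = k / (np.linalg.norm(k, axis=1, keepdims=True) + 1e-8)
    attn = softmax((q @ k.T) * temperature, axis=-1)   # (C, C) channel-to-channel map
    out = attn @ v                                     # (C, HW)
    return out.reshape(c, h, w), attn

rng = np.random.default_rng(0)
C, H, W = 8, 32, 32
x = rng.standard_normal((C, H, W))
wq, wk, wv = (rng.standard_normal((C, C)) / np.sqrt(C) for _ in range(3))
out, attn = transposed_attention(x, wq, wk, wv)
print(out.shape, attn.shape)
```

Because the attention map relates channels rather than pixels, its cost scales linearly with the number of pixels, which is what makes this style of attention applicable to large images.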

In order to reduce the computational complexity of transformers, the authors of [96] proposed a deep network called Multi-scale Cubic-Mixer, which does not use any self-attention mechanism. The model applies the fast Fourier transform and operates on the real and imaginary components of the Fourier coefficients to obtain the deblurred image. In this way, the network extracts long-range and local features from blurred images in the frequency domain. The authors of [97] proposed a hybrid transformer called Stripformer, which relies on inter-strip and intra-strip attention [98] and estimates motion blur from a blurred image by projecting it onto the vertical and horizontal directions of the Cartesian coordinate system. In addition, the vertical and horizontal features extracted at each pixel are stored to provide more information for subsequent layers in order to deduce the blur pattern. This method enables the sequential extraction of multi-scale local features. Therefore, Stripformer can remove both long- and short-range blur artifacts.
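The frequency-domain processing described above for [96] can be sketched in its simplest form: an image is transformed with the FFT, its real and imaginary parts are exposed as two feature channels, and the image is reconstructed from them. The learned mixing layers are not shown (the features pass through unchanged), and the function names are illustrative assumptions.

```python
import numpy as np

def to_frequency_features(image):
    """Stack real and imaginary FFT parts as a (2, H, W // 2 + 1) array."""
    spectrum = np.fft.rfft2(image)
    return np.stack([spectrum.real, spectrum.imag])

def from_frequency_features(features, shape):
    """Rebuild the spatial image from the two real-valued feature channels."""
    spectrum = features[0] + 1j * features[1]
    return np.fft.irfft2(spectrum, s=shape)

rng = np.random.default_rng(0)
image = rng.random((16, 16))
features = to_frequency_features(image)       # a learned mixer would act here
restored = from_frequency_features(features, image.shape)
print(features.shape, np.allclose(restored, image))
```

Every Fourier coefficient depends on all pixels, so even a point-wise operation on these features has a global receptive field in the spatial domain.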

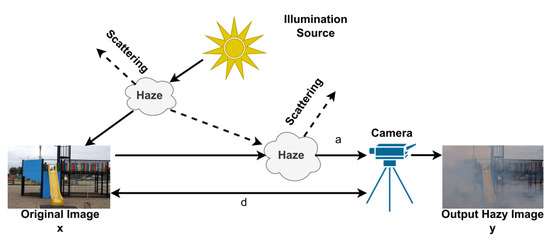

4.4. Image Dehazing

Image dehazing involves the removal of non-linear noise produced by a turbid medium, such as dust and fog, which impairs the visibility of images and objects. The presence of hazing in images adds complex and difficult-to-predict noise, making it a challenging problem in image restoration. In addition, hazing reduces the accuracy and efficiency of computer vision algorithms. The generation of hazy images can be described using the atmospheric scattering model:

$$I(x) = J(x)\,t(x) + A\,\bigl(1 - t(x)\bigr), \qquad t(x) = e^{-\beta d(x)}$$

where $I(x)$ is a hazy image, $J(x)$ is an image without hazing, $A$ is global atmospheric light, $\beta$ is the scattering coefficient, and $d(x)$ is the distance between the camera and the object. Many computer vision algorithms degrade in hazing scenes. Figure 8 describes an atmospheric dispersion model describing the hazing formation.

Figure 8.

The atmospheric dispersion model by which the haze formation process is described.
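The atmospheric scattering model can be made concrete with a short sketch. It uses the standard form of the model, hazy = clear * t + A * (1 - t) with transmission t = exp(-beta * d), and assumes that the transmission and the atmospheric light are known when inverting it; in practice both must be estimated, which is the hard part of dehazing. The function names, the synthetic depth map, and the parameter values are illustrative.

```python
import numpy as np

def add_haze(clear, depth, airlight, beta):
    """Apply hazy = clear * t + airlight * (1 - t), with t = exp(-beta * depth)."""
    t = np.exp(-beta * depth)[..., None]   # (H, W, 1), broadcast over colour channels
    return clear * t + airlight * (1.0 - t), t

def remove_haze(hazy, t, airlight, t_min=0.1):
    """Invert the model for a given (here: known) transmission and airlight."""
    t = np.maximum(t, t_min)               # guard against dividing by a tiny transmission
    return (hazy - airlight) / t + airlight

rng = np.random.default_rng(0)
clear = rng.random((4, 4, 3))                        # stand-in haze-free image in [0, 1]
depth = np.linspace(0.0, 2.0, 16).reshape(4, 4)      # synthetic scene depth
hazy, t = add_haze(clear, depth, airlight=0.9, beta=1.0)
recovered = remove_haze(hazy, t, airlight=0.9)
print(np.allclose(recovered, clear))
```

Pixels at zero depth are unchanged, while distant pixels are pulled toward the atmospheric light, which is why haze removes contrast mostly from far-away objects.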

The RESIDE dataset is commonly used for training and testing models for dehazing images. It includes 13,990 images in the Indoor Training Set (ITS), 500 images in the Synthetic Object Testing Set (SOTS), and 20 images in the Hybrid Subjective Testing Set (HSTS). To address the inconsistency between features from CNN and transformer models, the authors of [99] propose a feature modulation module that combines hierarchical global features from transformers with hierarchical local features from CNNs for image dehazing. Furthermore, Gao et al. [100] proposed a model that combines residual and parallel convolutional networks with a transformer-based channel attention module (TCAM) for detailed feature extraction. TCAM consists of a channel attention module and a spatial attention module, and the channel attention module improves features along the channel dimension.

Table 12 presents a comparison of different ViT models for the task of image dehazing. It can be observed that many of these models use the RESIDE dataset, which contains both indoor and outdoor images, for their evaluation. Li et al. [101] proposed a two-phase dehazing network. The first phase is based on the Swin transformer and extracts key features from hazy images. In addition, the Swin architecture is improved by adding an Inter-block Supervision Mechanism (ISM). In the second phase, convolutional layers are used to extract local features, which are then merged. The authors of [102] proposed a dual-stream network consisting of a CNN and transformers to extract global and local features. In addition, they proposed an atmospheric light estimation model based on atmospheric veils and partial derivatives. Song et al. [103] introduced the DehazeFormer model for removing haze from generic images. They use multi-head self-attention (MHSA) that is dynamically trainable to adapt to different kinds of images, and they suggest a soft reconstruction module based on SoftReLU. The contributions of Song et al. are a modified normalization layer, a spatial feature aggregation scheme, and a soft activation function. Zhao et al. [104] suggest an improved framework for complementary features that forces the model to learn weak complementary features rather than iteratively learning ineffective features. In addition, they proposed the Complementary Feature Selection Module (CFSM) to identify the most valuable features in learning.

Table 12.

Image Dehazing.

4.5. Image JPEG Compression Artifact Reduction

With the significant development in cameras, most devices have become highly dependent on images, including airplanes, cars, and smartphones. Images and videos require huge storage space and high internet speed. This leads to the need for image compression and storage systems in all applications. However, most compression methods introduce distortions such as compression artifacts [105]. JPEG is the most popular image compression technology on the Internet. JPEG compression consists of four stages: block division, discrete cosine transform (DCT), quantization, and entropy coding. JPEG suffers from discontinuities at block boundaries because it ignores the spatial connections between image blocks. Therefore, there are approaches based on filters [106,107,108] to improve the quality of images after compression, but they suffer from image blur. Deep learning techniques have emerged in the past few years to solve the JPEG compression artifact problem by mapping compressed images to ground-truth images [109,110,111]. In Table 13, a comparison of transformer-based models for removing JPEG compression artifacts is presented. The evaluation is done in terms of quality, the number of parameters, and the datasets used for a single image and for pairs of stereo images.

Table 13.

JPEG compression artifact reduction.
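The origin of JPEG artifacts can be illustrated with a simplified sketch of the lossy part of the pipeline: block division, DCT, and quantization, followed by the inverse DCT. Entropy coding is lossless and is therefore omitted. The sketch assumes a grayscale image whose sides are multiples of 8 and replaces the per-frequency quantization table of real JPEG with a single step size; the function names are illustrative.

```python
import numpy as np

N = 8  # JPEG block size

def dct_matrix(n=N):
    """Orthonormal DCT-II basis matrix of size n x n."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    d = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    d[0, :] = np.sqrt(1.0 / n)
    return d

def jpeg_like_roundtrip(image, step):
    """Block division, DCT, uniform quantization, and inverse DCT.

    Simplification: one step size for all coefficients instead of a JPEG table.
    """
    d = dct_matrix()
    out = np.empty_like(image, dtype=float)
    for r in range(0, image.shape[0], N):
        for c in range(0, image.shape[1], N):
            block = image[r:r + N, c:c + N] - 128.0     # level shift
            coeffs = d @ block @ d.T                    # 2D DCT of the block
            quantized = np.round(coeffs / step) * step  # the only lossy step
            out[r:r + N, c:c + N] = d.T @ quantized @ d + 128.0
    return out

rng = np.random.default_rng(0)
image = rng.uniform(0, 255, size=(32, 32))   # random stand-in for an image
for step in (1, 16, 64):
    mse = np.mean((image - jpeg_like_roundtrip(image, step)) ** 2)
    print(f"step={step}: MSE={mse:.2f}")
```

Each 8 x 8 block is quantized independently of its neighbours, so coarser quantization produces the discontinuities at block boundaries that artifact reduction models try to remove.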

Modern techniques for JPEG compression artifact reduction fall into two groups: the first uses a single image to solve the problem, and the second uses two or more images. Each approach has advantages and disadvantages. In this work, we present the latest JPEG compression artifact reduction techniques based on transformers. The best-known transformer-based model is SwinIR [50], which was mentioned before. Unlike CNNs, which mainly extract local features, transformers capture both global and local features. SwinIR addresses more than one problem in image restoration, and its last layers are changed to suit each problem. A single convolution layer is used in the last block of the SwinIR model to remove JPEG compression artifacts. In addition, the Charbonnier loss [113] was used in training the SwinIR model. The second type of JPEG artifact removal is the use of stereo image pairs.
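The Charbonnier loss mentioned above is a smooth approximation of the L1 loss. A minimal sketch of a common per-pixel formulation is given below; the default value of `eps` is an assumption made for illustration, and the function name is hypothetical.

```python
import numpy as np

def charbonnier_loss(prediction, target, eps=1e-3):
    """Mean of sqrt(diff^2 + eps^2); eps (assumed value) keeps the loss smooth at zero."""
    diff = prediction - target
    return float(np.mean(np.sqrt(diff * diff + eps * eps)))

target = np.zeros(4)
print(charbonnier_loss(target, target))       # approximately eps for a perfect prediction
print(charbonnier_loss(np.ones(4), target))   # close to the L1 loss for large errors
```

For errors much larger than `eps` the loss behaves like the absolute error, while near zero it is differentiable, which tends to make optimization more stable than with a plain L1 loss.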

4.6. Removing Adverse Weather Conditions

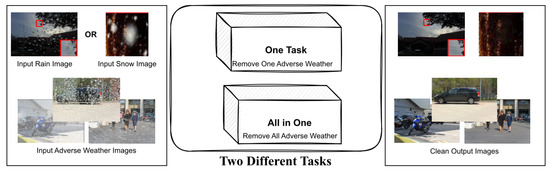

Many computer vision algorithms, including those used for detection, depth estimation, and segmentation [114,115,116], are sensitive to the surrounding conditions and can be affected by factors such as adverse weather. These algorithms have important applications in various systems that are integral to our daily lives, such as navigation and surveillance systems and Unmanned Aerial Vehicles (UAVs) [117,118,119]. As a result, it is necessary to address hostile weather conditions such as fog, rain, and snow that can negatively impact the reliability of vision systems. Traditional methods for removing adverse weather are often based on empirical observations [120,121,122]. However, these methods must be tailored to each weather condition, as an all-weather method is generally ineffective. Additionally, there are algorithms based on CNNs that have been developed for removing adverse weather, including deraining [123,124,125,126], desnowing [127,128,129], and raindrop removal [130,131]. In Figure 9, it can be observed that there are several transformer-based approaches for removing adverse weather conditions, which can be broadly categorized into two groups: methods that focus on removing a specific type of adverse weather and methods that are capable of removing a variety of adverse weather conditions.

Figure 9.

Illustration of the two common types of adverse weather removal algorithms. The top image shows a technique that removes a single type of adverse weather, such as rain or snow. The bottom image depicts a more efficient approach that is capable of removing multiple types of adverse weather at the same time.

Qin et al. [132] proposed ETDNet, which uses transformers to extract features from individual images to remove rain streaks. ETDNet employs dilated filters at different rates to estimate the appropriate kernel for rainy images, thereby allowing features to be extracted in a coarse-to-fine manner. The authors of [133] used a Swin transformer [56] for rain removal from single images, with a Swin block in three parallel branches referred to as Mswt. Deep and shallow features are extracted through four consecutive Mswt modules and skip-connections between them. The authors of [134] proposed a two-stage training and fine-tuning approach called Task-Agnostic Pre-Embedding (TAPE). TAPE is trained on natural images, and the learned knowledge is then used to help remove rain, snow, and noise from images in a single model. The authors of [135] proposed TransWeather, a model that removes several adverse weather conditions at once using a single encoder and a single decoder. In the decoder, weather-type queries are processed by a multi-head attention mechanism and matched with the keys and values taken from the features extracted by the transformer encoder. To reconstruct the image after identifying the type of degradation, hierarchical features from the encoder and features from the decoder are combined and then projected onto the image space by a convolutional block to generate a clean image. Transformers can extract important global features compared to CNNs. However, when patches are large, transformers fail to attend to small details.
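The query-based decoding described above for TransWeather, in which queries are matched against keys and values derived from encoder features, follows the general pattern of cross-attention. The sketch below is a generic single-head version of that pattern and not the published architecture; the function name, the dimensions, and the random weights standing in for learned parameters are all assumptions.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def query_cross_attention(queries, features, wq, wk, wv):
    """Queries attend to encoder features (generic single-head cross-attention)."""
    q = queries @ wq                      # (num_queries, dim)
    k = features @ wk                     # (num_tokens, dim)
    v = features @ wv                     # (num_tokens, dim)
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]), axis=-1)
    return weights @ v, weights           # (num_queries, dim), (num_queries, num_tokens)

rng = np.random.default_rng(0)
dim, num_queries, num_tokens = 16, 4, 64
queries = rng.standard_normal((num_queries, dim))   # would be learned in a real model
features = rng.standard_normal((num_tokens, dim))   # stand-in for encoder output
wq, wk, wv = (rng.standard_normal((dim, dim)) / np.sqrt(dim) for _ in range(3))
decoded, weights = query_cross_attention(queries, features, wq, wk, wv)
print(decoded.shape, weights.shape)
```

Each query produces one weighted summary of the encoder features, so a small set of queries can specialize to different degradation types while sharing one encoder.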

Table 14 presents a comparison of transformer-based models for removing adverse weather conditions. The models can be divided into two categories: those that remove a specific type of adverse weather and those that are capable of removing multiple types of adverse weather. It is important to note that models that can handle multiple types of adverse weather conditions are generally preferable in real-world applications. For example, Liu et al. [136] designed an image restoration model called SiamTrans, inspired by Siamese transformers. SiamTrans is first trained on an extensive dataset for a noise reduction task. Knowledge transfer is then used to train SiamTrans on many low-level image restoration tasks, including demoireing, deraining, and desnowing. SiamTrans consists of an encoder, a decoder, self-attention units, and temporal-attention units. Two other models, Uformer and Restormer, were introduced in detail in the image deblurring section.

Table 14.

Removing Adverse Weather Conditions.

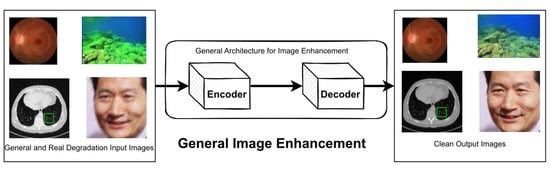

4.7. General Image Enhancement

Image restoration is a technique used to restore missing information in images and has been applied to various tasks such as super-resolution, denoising, deblurring, dehazing, JPEG compression artifact reduction, and the removal of adverse weather conditions. However, some research involving image datasets may not fit neatly into any specific image restoration task. These datasets may take the form of images captured at different qualities or images that have undergone significant degradation due to natural or artificial factors, as shown in Figure 10. The datasets that need improvement take two forms. The first consists of images taken twice, once with high quality and once with low quality. The second consists of images suffering from several degradations at once, including noise, downsampling, blur, haze, and adverse weather conditions.

Figure 10.

Illustration for general image enhancement, which does not fall under any specific sub-task of the image restoration.

Deng et al. [137] have created a clinical dataset (Real Fundus). This dataset consists of 120 real pairs of low-resolution and high-resolution images. They also proposed a transformer-based GAN called RFormer, a restoration model for real degradations in fundus images. RFormer consists of a generator and a discriminator. The generator is based on the U-Net architecture and is built mainly from Window-based Self-Attention Blocks (WSABs), in which the Multilayer Perceptron (MLP) is replaced by a Feed-Forward Network (FFN). The WSAB captures long-range dependencies and non-local self-similarities, which helps to generate more realistic images. The discriminator relies on a transformer to distinguish between real and fake images and thereby monitor the quality of the generator. It is based on PatchGAN [138], whose final layer has an output of size N × N × 1.

Boudiaf et al. [139] have employed an Image Processing Transformer (IPT) model [59] to address the problem of noise and distortion in underwater images. The IPT model was trained on a large number of images for tasks such as super-resolution and noise removal, and it has shown promising results in this context. In a separate study, the authors in [140] focused on restoring and improving degraded facial images, due to their significance in various applications. They proposed the FRGAN model, which can restore facial features and enhance accuracy. The FRGAN comprises three stages: a head position estimation network, a Postural Transformer Network, and a Face Generative Adversarial Network. Additionally, a new loss function called Swish-X was also proposed for use in the FRGAN model. Souibgui et al. [141] presented a flexible document image enhancement model called DocEnTr, based on transformers. DocEnTr is the first encoder-decoder-based image document restoration model that uses transformers without any CNNs. The encoder extracts the most salient features from the positional pixels and converts them into encoded patches, without the use of convolution layers, while the decoder reconstructs the images from the encoded patches. Table 15 presents the results of various ViT models in enhancing the quality of images. It is important to note the diversity of the datasets used in these evaluations, which include scenarios that do not require mathematical degradation models.

Table 15.

Image Restoration and Enhancement.

Puyang et al. [142] have created two datasets for blind face restoration, known as BFR128 and BFR512. They also present a comprehensive degradation model for facial images, which combines noise, down-sampling, blur, and JPEG compression artifacts in a complete degradation scenario. In addition, they propose a U-Net model in which the down-sampling and up-sampling layers are replaced with Swin transformer blocks. In [143], the authors propose the Sinogram Restoration Transformer (SRT) model, which is inspired by the Swin transformer and long-range dependency modeling. They also introduce the Residual Image Reconstruction Module (RIRM) for restoring X-ray sinograms and a differentiable DuDo Consistency Layer to preserve consistency in the sinograms, leading to high-quality Computed Tomography (CT) images. In [144], the authors propose the U2-Former transformer, based on a nested U-shaped architecture that facilitates feature transfer between different layers. The model uses two nested structures: an inner U-shape built on the self-attention block and an outer U-shape that creates a deep encoding and decoding space. This model is complex, but its strength lies in its large transformer depth, leading to strong results in image restoration tasks such as reflection removal, rain streak removal, and dehazing.

Yan et al. [145] propose a model for medical MRI called SMIR, which combines the advantages of residual connections with those of the transformer. SMIR consists of a feature extraction unit and a reconstruction unit, with the Swin transformer used as the feature extractor. The proposed model is applied to samples with radial filling trajectories at different ratios. The authors in [146] proposed a U-Net-based transformer to combine the advantages of convolutional layers and transformers. They used several convolution layers in the encoder, the encoder vector, the transformer encoder block, and the same structure in the decoder. In addition, they used self-learning and contrastive loss.

5. Discussion and Future Work

In this section, we present the advantages and limitations of ViT in image restoration as well as the new possible research directions arising from these limitations. The current study has analyzed the use of transformer models, specifically the ViT, in the field of image restoration. Our review of the literature found that ViT has been widely applied to various tasks within image restoration, including Super-Resolution, Denoising, General Image Enhancement, JPEG Compression Artifact Reduction, Image Deblurring, Removing Adverse Weather Conditions and Image Dehazing. It has generally achieved strong results compared to traditional methods and other deep learning models such as CNNs and GANs.

One of the key advantages of using ViT in image restoration is its ability to extract global features and its stability during training. This allows ViT to effectively model complex relationships within the data and generalize well to new examples. In addition, ViT has been shown to be flexible and to perform well on various types of data, including generic images, medical images, and remote sensing data. ViT also performs better than CNN models when trained on large datasets: in image classification, the performance of different ViT versions improves as the number of training images increases, compared to the CNN-based ResNet (BiT) model [15]. However, there are also some limitations to using ViT in image restoration. One major issue is the high computational cost of training ViT models, which can make them impractical for some applications. ViT suffers from slow training (long training time) because the self-attention process requires higher computational costs than CNN models [15]. In addition, ViT is less effective at extracting local features and may struggle to capture close-range dependencies within the data. This can be a drawback in tasks requiring detailed, fine-grained image analysis. Figure 11 summarizes the advantages and drawbacks of ViT in image restoration.

Figure 11.

The main advantages and drawbacks of ViT in Image Restoration.
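The computational cost of self-attention discussed above can be made tangible with simple arithmetic. The sketch counts the query-key pairs that must be scored when every pixel of a feature map is treated as a token, once for global attention and once for attention restricted to non-overlapping windows. The 8 x 8 window size and the function names are assumptions for illustration, and the count ignores the channel dimension and projection layers.

```python
def global_attention_pairs(height, width):
    """Query-key pairs when every token attends to every other token."""
    tokens = height * width
    return tokens * tokens

def window_attention_pairs(height, width, window):
    """Query-key pairs when attention is limited to non-overlapping windows."""
    windows = (height // window) * (width // window)
    return windows * (window * window) ** 2

for side in (64, 128, 256):
    g = global_attention_pairs(side, side)
    w = window_attention_pairs(side, side, window=8)
    print(f"{side}x{side}: global={g}, windowed={w}, ratio={g // w}")
```

Global attention grows quadratically with the number of pixels, whereas windowed attention grows linearly, which is why many of the surveyed restoration models adopt window-based or channel-based attention.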

Despite these limitations, our review suggests that ViT has strong potential for use in image restoration and has already demonstrated impressive results in a variety of tasks. However, further research is needed to address the challenges of using ViT in image restoration, such as reducing the computational cost and improving its ability to extract local features. This could involve developing more efficient self-attention mechanisms, adapting the model to the specific characteristics of image restoration tasks, or exploring hybrid approaches that combine both ViT and other deep learning models.

Several directions for future research could help to further advance the use of ViT in image restoration. One potential avenue is to explore ways to reduce the computational cost of training ViT models, such as using more efficient self-attention mechanisms or adapting the model to the specific characteristics of image restoration tasks. Another area for investigation is the development of more interpretable ViT models, which could help better understand and explain the model’s decisions during the restoration process. This could involve incorporating additional interpretability mechanisms into the model architecture or designing more transparent loss functions. In addition, further research could focus on developing hybrid approaches that combine the strengths of ViT with other deep learning models, such as CNNs. This could allow for the use of the best features of both models in a single image restoration pipeline, potentially resulting in improved performance.

6. Conclusions

In conclusion, ViT models have gained widespread attention in computer vision. They have demonstrated superior performance to traditional models such as CNNs in various tasks, including image classification, object detection, action recognition, segmentation, and image restoration. This survey has focused specifically on the use of ViT models in the field of image restoration. We have reviewed a range of approaches based on vision transformers, covering image super-resolution, denoising, enhancement, JPEG compression artifact reduction, deblurring, removal of adverse weather conditions, and dehazing. We have also presented a comparative study of the state-of-the-art vision transformer models in each of these tasks and have discussed the main strengths and drawbacks of their adoption in image restoration. Overall, our review suggests that vision transformers offer a promising approach to addressing the challenges of image restoration and have demonstrated strong performance in many tasks. However, there are also limitations to their use, including a high computational cost and difficulty in extracting local features. To further advance the use of vision transformers in image restoration, it will be important for future research to address these limitations and explore ways to improve the model’s performance and efficiency.

Author Contributions

Conceptualization, A.M.A., B.B. and A.K.; methodology, A.M.A., B.B. and A.K.; investigation, A.M.A. and B.B.; writing (original draft preparation), A.M.A. and B.B.; writing (review and editing), A.M.A., B.B., A.K., W.E.-S., Z.K. and W.B.; supervision, B.B., A.K. and W.E.-S.; project administration, B.B. and A.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Prince Sultan University.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to acknowledge the support of Prince Sultan University for paying the Article Processing Charges (APC) of this publication.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90.

- El-Shafai, W.; Ali, A.M.; El-Rabaie, E.S.M.; Soliman, N.F.; Algarni, A.D.; Abd El-Samie, F.E. Automated COVID-19 Detection Based on Single-Image Super-Resolution and CNN Models. Comput. Mater. Contin. 2021, 70, 1141–1157.

- El-Shafai, W.; El-Nabi, S.A.; El-Rabaie, E.S.M.; Ali, A.M.; Soliman, N.F.; Algarni, A.D.; Abd El-Samie, F.E. Efficient Deep-Learning-Based Autoencoder Denoising Approach for Medical Image Diagnosis. Comput. Mater. Contin. 2021, 70, 6107–6125.

- El-Shafai, W.; Mohamed, E.M.; Zeghid, M.; Ali, A.M.; Aly, M.H. Hybrid Single Image Super-Resolution Algorithm for Medical Images. Comput. Mater. Contin. 2022, 72, 4879–4896.

- Lu, D.; Weng, Q. A Survey of Image Classification Methods and Techniques for Improving Classification Performance. Int. J. Remote Sens. 2007, 28, 823–870.

- Noor, A.; Benjdira, B.; Ammar, A.; Koubaa, A. DriftNet: Aggressive Driving Behaviour Detection Using 3D Convolutional Neural Networks. In Proceedings of the 1st International Conference of Smart Systems and Emerging Technologies, SMART-TECH 2020, Riyadh, Saudi Arabia, 3–5 November 2020; pp. 214–219.

- Varone, G.; Boulila, W.; lo Giudice, M.; Benjdira, B.; Mammone, N.; Ieracitano, C.; Dashtipour, K.; Neri, S.; Gasparini, S.; Morabito, F.C.; et al. A Machine Learning Approach Involving Functional Connectivity Features to Classify Rest-EEG Psychogenic Non-Epileptic Seizures from Healthy Controls. Sensors 2022, 22, 129.

- Benjdira, B.; Koubaa, A.; Boulila, W.; Ammar, A. Parking Analytics Framework Using Deep Learning. In Proceedings of the 2022 2nd International Conference of Smart Systems and Emerging Technologies, SMARTTECH 2022, Riyadh, Saudi Arabia, 9–11 May 2022; pp. 200–205.

- Benjdira, B.; Koubaa, A.; Azar, A.T.; Khan, Z.; Ammar, A.; Boulila, W. TAU: A Framework for Video-Based Traffic Analytics Leveraging Artificial Intelligence and Unmanned Aerial Systems. Eng. Appl. Artif. Intell. 2022, 114, 105095.

- Benjdira, B.; Ouni, K.; al Rahhal, M.M.; Albakr, A.; Al-Habib, A.; Mahrous, E. Spinal Cord Segmentation in Ultrasound Medical Imagery. Appl. Sci. 2020, 10, 1370.

- Benjdira, B.; Ammar, A.; Koubaa, A.; Ouni, K. Data-Efficient Domain Adaptation for Semantic Segmentation of Aerial Imagery Using Generative Adversarial Networks. Appl. Sci. 2020, 10, 1092.

- Khan, A.R.; Saba, T.; Khan, M.Z.; Fati, S.M.; Khan, M.U.G. Classification of Human’s Activities from Gesture Recognition in Live Videos Using Deep Learning. Concurr. Comput. 2022, 34, e6825.

- Ubaid, M.T.; Saba, T.; Draz, H.U.; Rehman, A.; Ghani, M.U.; Kolivand, H. Intelligent Traffic Signal Automation Based on Computer Vision Techniques Using Deep Learning. IT Prof. 2022, 24, 27–33.

- Delia-Alexandrina, M.; Nedevschi, S.; Fati, S.M.; Senan, E.M.; Azar, A.T. Hybrid and Deep Learning Approach for Early Diagnosis of Lower Gastrointestinal Diseases. Sensors 2022, 22, 4079.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. Commun. ACM 2020, 63, 139–144.

- Ho, J.; Jain, A.; Abbeel, P. Denoising Diffusion Probabilistic Models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

- Khan, S.; Naseer, M.; Hayat, M.; Zamir, S.W.; Khan, F.S.; Shah, M. Transformers in Vision: A Survey. ACM Comput. Surv. 2022, 54, 200.

- Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tang, Y.; Xiao, A.; Xu, C.; Xu, Y.; et al. A Survey on Vision Transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 87–110.

- Islam, K. Recent Advances in Vision Transformer: A Survey and Outlook of Recent Work. arXiv 2022.

- Shamshad, F.; Khan, S.; Zamir, S.W.; Khan, M.H.; Hayat, M.; Khan, F.S.; Fu, H. Transformers in Medical Imaging: A Survey. arXiv 2022.

- Su, J.; Xu, B.; Yin, H. A Survey of Deep Learning Approaches to Image Restoration. Neurocomputing 2022, 487, 46–65.

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V.; et al. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the NAACL HLT 2019–2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; Volume 1, pp. 4171–4186.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Pérez, J.; Marinković, J.; Barceló, P. On the Turing Completeness of Modern Neural Network Architectures. In Proceedings of the 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, 6–9 May 2019.

- Cordonnier, J.-B.; Loukas, A.; Jaggi, M. On the Relationship between Self-Attention and Convolutional Layers. arXiv 2019.

- Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable Convolutional Networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 764–773.

- Li, X.; Yin, X.; Li, C.; Zhang, P.; Hu, X.; Zhang, L.; Wang, L.; Hu, H.; Dong, L.; Wei, F.; et al. Oscar: Object-Semantics Aligned Pre-Training for Vision-Language Tasks. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 121–137.

- Su, W.; Zhu, X.; Cao, Y.; Li, B.; Lu, L.; Wei, F.; Dai, J. VL-BERT: Pre-Training of Generic Visual-Linguistic Representations. arXiv 2019.

- Fedus, W.; Zoph, B.; Shazeer, N. Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity. J. Mach. Learn. Res. 2021, 23, 1–40.

- Jing, L.; Tian, Y. Self-Supervised Visual Feature Learning with Deep Neural Networks: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 4037–4058.

- Liu, X.; Zhang, F.; Hou, Z.; Mian, L.; Wang, Z.; Zhang, J.; Tang, J. Self-Supervised Learning: Generative or Contrastive. IEEE Trans. Knowl. Data Eng. 2021, 35, 857–876.

- Thung, K.H.; Raveendran, P. A Survey of Image Quality Measures. In Proceedings of the International Conference for Technical Postgraduates 2009, TECHPOS 2009, Kuala Lumpur, Malaysia, 14–15 December 2009.

- Saad, M.A.; Bovik, A.C.; Charrier, C. Blind Image Quality Assessment: A Natural Scene Statistics Approach in the DCT Domain. IEEE Trans. Image Process. 2012, 21, 3339–3352.

- Horé, A.; Ziou, D. Image Quality Metrics: PSNR vs SSIM. In Proceedings of the International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 2366–2369.

- Almohammad, A.; Ghinea, G. Stego Image Quality and the Reliability of PSNR. In Proceedings of the 2010 2nd International Conference on Image Processing Theory, Tools and Applications, IPTA 2010, Paris, France, 7–10 July 2010; pp. 215–220.

- Rouse, D.M.; Hemami, S.S. Analyzing the Role of Visual Structure in the Recognition of Natural Image Content with Multi-Scale SSIM. In Human Vision and Electronic Imaging XIII; SPIE: Bellingham, WA, USA, 2008; Volume 6806, pp. 410–423.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image Quality Assessment: From Error Visibility to Structural Similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Sara, U.; Akter, M.; Uddin, M.S. Image Quality Assessment through FSIM, SSIM, MSE and PSNR—A Comparative Study. J. Comput. Commun. 2019, 7, 8–18.

- Pambrun, J.F.; Noumeir, R. Limitations of the SSIM Quality Metric in the Context of Diagnostic Imaging. In Proceedings of the International Conference on Image Processing, ICIP 2015, Quebec City, QC, Canada, 27–30 September 2015; pp. 2960–2963.

- Marathe, A.; Jain, P.; Walambe, R.; Kotecha, K. RestoreX-AI: A Contrastive Approach Towards Guiding Image Restoration via Explainable AI Systems. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2022, New Orleans, LA, USA, 18–24 June 2022; pp. 3030–3039.

- Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.-H. Restormer: Efficient Transformer for High-Resolution Image Restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2022, New Orleans, LA, USA, 18–24 June 2022; pp. 5728–5739.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the IEEE International Conference on Computer Vision 2017, Venice, Italy, 22–29 October 2017; pp. 618–626.

- Cheon, M.; Yoon, S.-J.; Kang, B.; Lee, J. Perceptual Image Quality Assessment with Transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2021, Nashville, TN, USA, 20–25 June 2021; pp. 433–442.

- Conde, M.V.; Burchi, M.; Timofte, R. Conformer and Blind Noisy Students for Improved Image Quality Assessment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2022, New Orleans, LA, USA, 18–24 June 2022; pp. 940–950.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Available online: https://dl.acm.org/doi/10.5555/3298023.3298188 (accessed on 30 October 2022).

- Park, S.C.; Park, M.K.; Kang, M.G. Super-Resolution Image Reconstruction: A Technical Overview. IEEE Signal Process. Mag. 2003, 20, 21–36.

- Chen, X.; Wang, X.; Zhou, J.; Dong, C. Activating More Pixels in Image Super-Resolution Transformer. arXiv 2022.

- Liang, J.; Cao, J.; Sun, G.; Zhang, K.; van Gool, L.; Timofte, R. SwinIR: Image Restoration Using Swin Transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision 2021, Montreal, QC, Canada, 11–17 October 2021; pp. 1833–1844.

- Zhang, D.; Huang, F.; Liu, S.; Wang, X.; Jin, Z. SwinFIR: Revisiting the SwinIR with Fast Fourier Convolution and Improved Training for Image Super-Resolution. arXiv 2022.

- Yang, C.Y.; Ma, C.; Yang, M.H. Single-Image Super-Resolution: A Benchmark. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; pp. 372–386.

- Yang, C.-Y.; Yang, M.-H. Fast Direct Super-Resolution by Simple Functions. In Proceedings of the IEEE International Conference on Computer Vision 2013, Sydney, Australia, 2–8 December 2013; pp. 561–568.

- Shan, Q.; Li, Z.; Jia, J.; Tang, C.K. Fast Image/Video Upsampling. ACM Trans. Graph. 2008, 27, 153.

- Sun, J.; Sun, J.; Xu, Z.; Shum, H.Y. Image Super-Resolution Using Gradient Profile Prior. In Proceedings of the 26th IEEE Conference on Computer Vision and Pattern Recognition, CVPR, Anchorage, Alaska, 23–28 June 2008.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision 2021, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

- Tu, J.; Mei, G.; Ma, Z.; Piccialli, F. SWCGAN: Generative Adversarial Network Combining Swin Transformer and CNN for Remote Sensing Image Super-Resolution. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 5662–5673.

- Ma, Q.; Jiang, J.; Liu, X.; Ma, J. Learning A 3D-CNN and Transformer Prior for Hyperspectral Image Super-Resolution. arXiv 2021.

- Chen, H.; Wang, Y.; Guo, T.; Xu, C.; Deng, Y.; Liu, Z.; Ma, S.; Xu, C.; Xu, C.; Gao, W. Pre-Trained Image Processing Transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2021, Nashville, TN, USA, 20–25 June 2021; pp. 12299–12310.

- He, D.; Wu, S.; Liu, J.; Xiao, G. Cross Transformer Network for Scale-Arbitrary Image Super-Resolution. In Proceedings of the Knowledge Science, Engineering and Management: 15th International Conference, KSEM 2022, Singapore, 6–8 August 2022; Lecture Notes in Computer Science; pp. 633–644.

- Liu, H.; Shao, M.; Wang, C.; Cao, F. Image Super-Resolution Using a Simple Transformer Without Pretraining. Neural Process. Lett. 2022, 1–19.

- Zhang, X.; Zeng, H.; Guo, S.; Zhang, L. Efficient Long-Range Attention Network for Image Super-Resolution. arXiv 2022.

- Cai, Q.; Qian, Y.; Li, J.; Lv, J.; Yang, Y.-H.; Wu, F.; Zhang, D. HIPA: Hierarchical Patch Transformer for Single Image Super Resolution. arXiv 2022.

- Yoo, J.; Kim, T.; Lee, S.; Kim, S.H.; Lee, H.; Kim, T.H. Enriched CNN-Transformer Feature Aggregation Networks for Super-Resolution. arXiv 2022.

- Wang, S.; Zhou, T.; Lu, Y.; Di, H. Detail-Preserving Transformer for Light Field Image Super-Resolution. In Proceedings of the AAAI Conference on Artificial Intelligence 2022, Virtual, 22 February–1 March 2022; Volume 36, pp. 2522–2530.

- Liang, Z.; Wang, Y.; Wang, L.; Yang, J.; Zhou, S. Light Field Image Super-Resolution with Transformers. IEEE Signal Process. Lett. 2022, 29, 563–567.

- Lei, S.; Shi, Z.; Mo, W. Transformer-Based Multistage Enhancement for Remote Sensing Image Super-Resolution. IEEE Trans. Geosci. Remote Sens. 2022, 60, 3136190.

- Wang, L.; Zhu, H.; He, Z.; Jia, Y.; Du, J. Adjacent Slices Feature Transformer Network for Single Anisotropic 3D Brain MRI Image Super-Resolution. Biomed. Signal Process. Control. 2022, 72, 103339.

- Zhang, W.; Wang, L.; Chen, W.; Jia, Y.; He, Z.; Du, J. 3D Cross-Scale Feature Transformer Network for Brain MR Image Super-Resolution. In Proceedings of the ICASSP, IEEE International Conference on Acoustics, Speech and Signal Processing, Singapore, 23–27 May 2022; pp. 1356–1360.

- Fang, C.; Zhang, D.; Wang, L.; Zhang, Y.; Cheng, L.; Han, J. Cross-Modality High-Frequency Transformer for MR Image Super-Resolution. arXiv 2022.

- Hu, J.F.; Huang, T.Z.; Deng, L.J.; Dou, H.X.; Hong, D.; Vivone, G. Fusformer: A Transformer-Based Fusion Network for Hyperspectral Image Super-Resolution. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6012305.

- Liu, Y.; Hu, J.; Kang, X.; Luo, J.; Fan, S. Interactformer: Interactive Transformer and CNN for Hyperspectral Image Super-Resolution. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5531715.