SwimmerNET: Underwater 2D Swimmer Pose Estimation Exploiting Fully Convolutional Neural Networks

Abstract

1. Introduction

2. Material and Methods

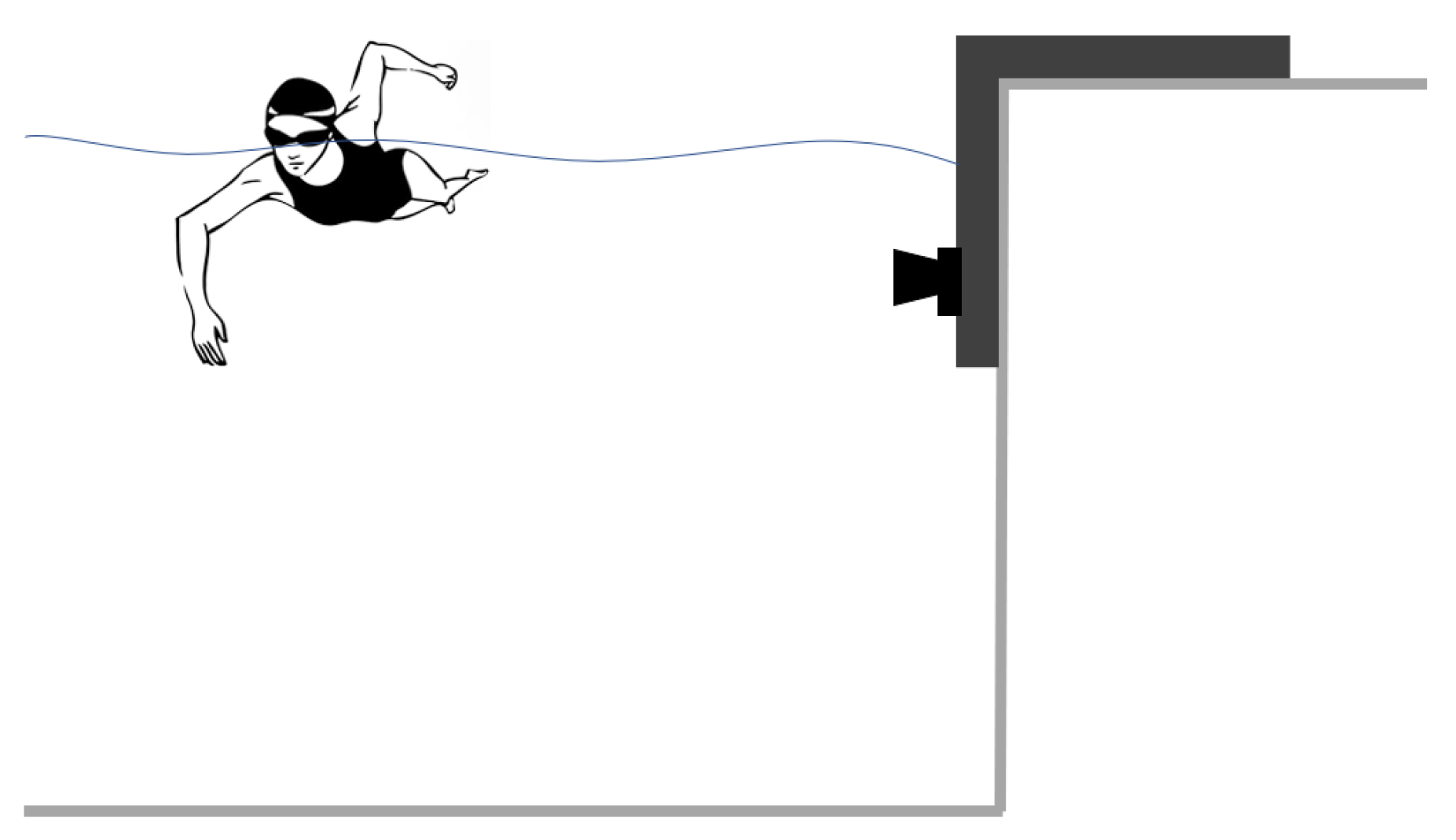

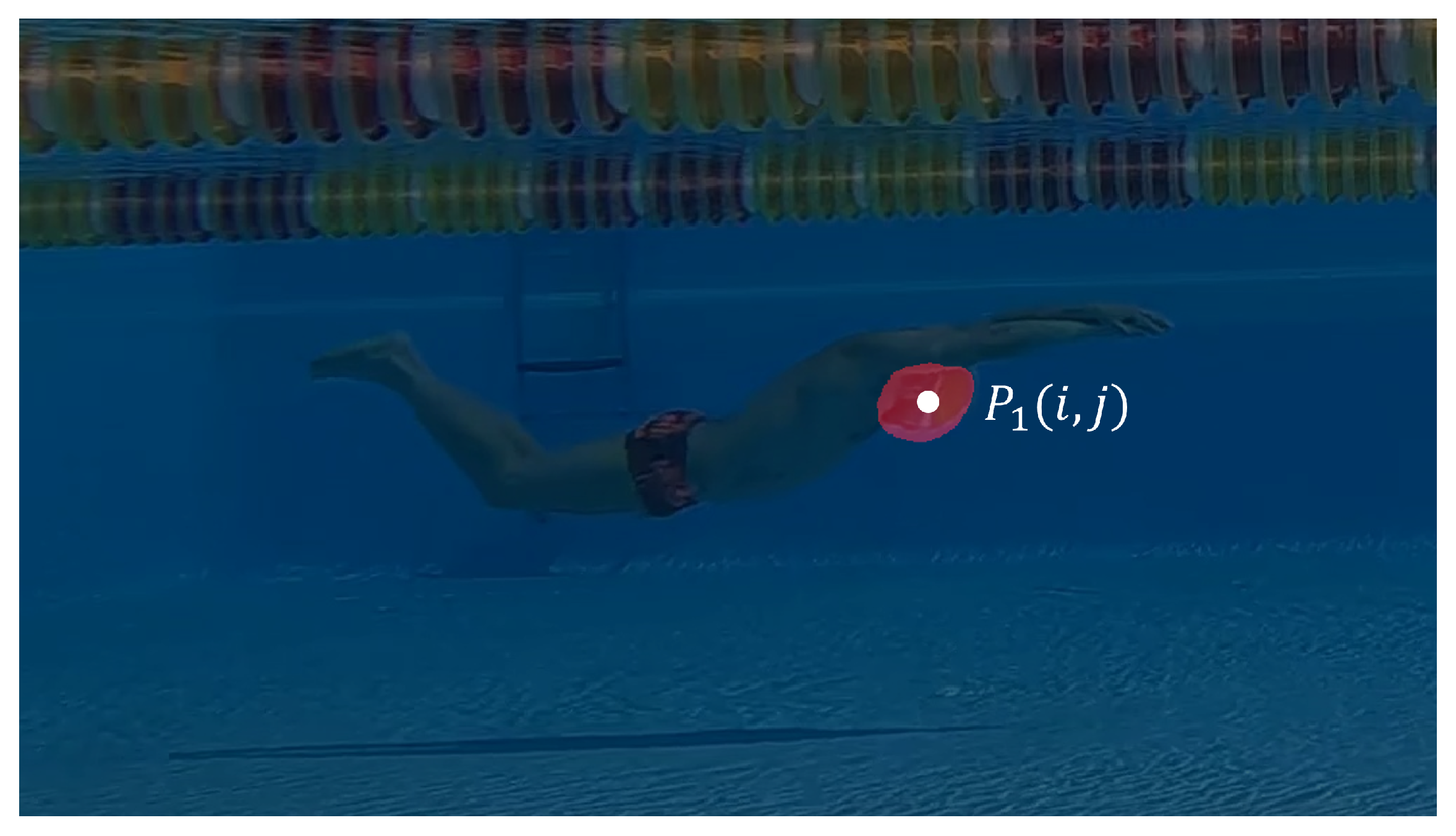

2.1. Image Acquisition System

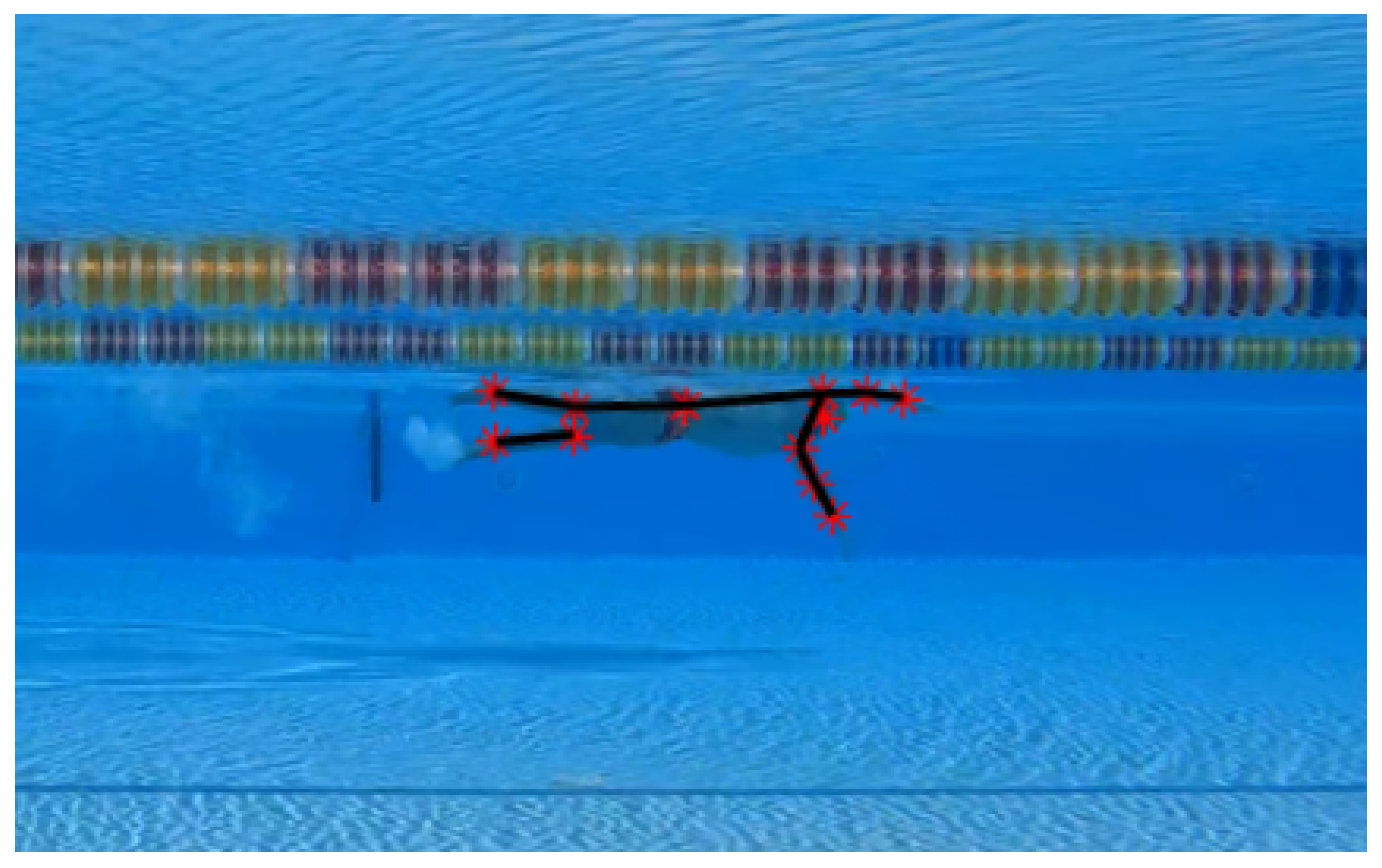

2.2. AI-Based Marker-Less Pose Estimation

2.2.1. Model Architecture

2.2.2. Model Training

- Architecture a, which defines the type of architecture used for binary semantic segmentation. These were selected from the most recent ones available in the literature: Unet, Unet++, Linknet, PSPNet, DeepLabV3 and DeepLabV3+ [17].

- Batch size , which defines the number of training-data sub-samples that will be propagated through the network. The batch size was varied between 2 and 16.

- Learning rate defines how severely the model will change in response to the estimated IoU score each time the model’s weights are updated by the optimizer. The learning rate was varied between and .

- Optimizer o, which defines the algorithm exploited to increase the IoU score by modifying attributes of the neural network, such as weights and learning rate. The optimizer can be selected among SGD, Adam, RMSprop, Adadelta and Adagrad [23].

- Backbone , which is the inner CNN architecture of the encoder path. The optimizer can be selected among efficientnet-b7, efficientnet-b6, efficientnet-b5, inceptionv4, vgg19, vgg16 and mobilenet.

2.2.3. Pose Optimization Algorithm

- the absence of targets during the over-water motion;

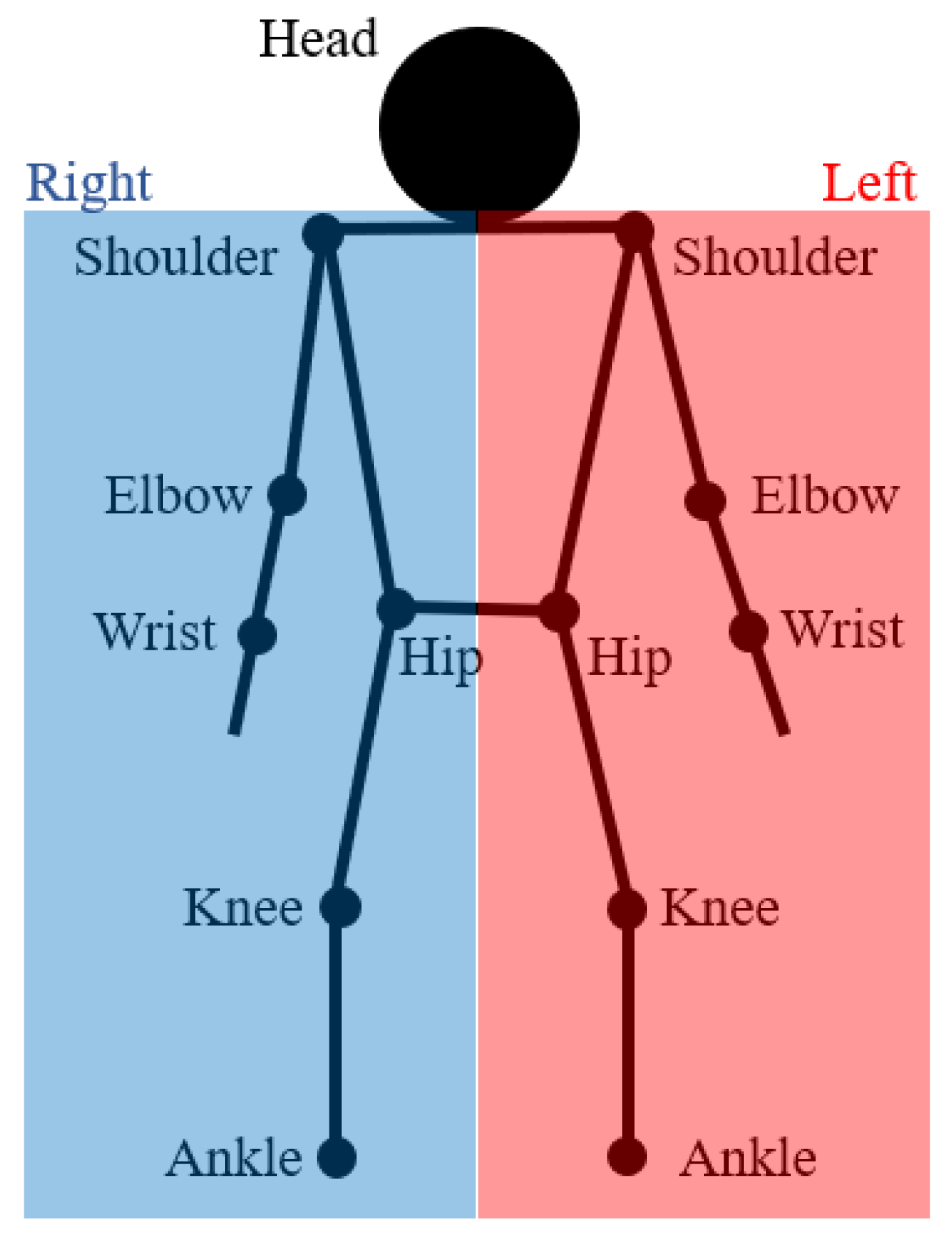

- the choice of a single viewpoint (side-view) of the camera, which prevents the identification of one side of the body;

- the swap between right and left targets, as the models developed do not distinguish between the two parts (e.g., with the exception of the head, all other body parts relate to left and right sides).

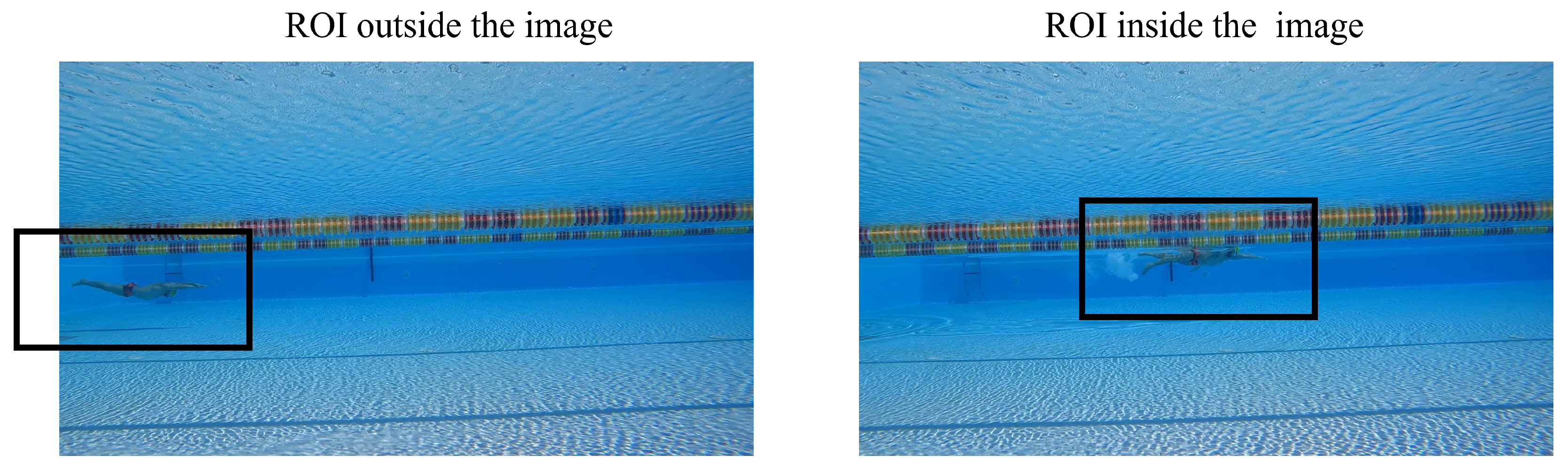

2.2.4. SwimmerNET Workflow

3. Results and Discussion

3.1. Swimmer’s Body Parts Semantic Segmentation Model Training

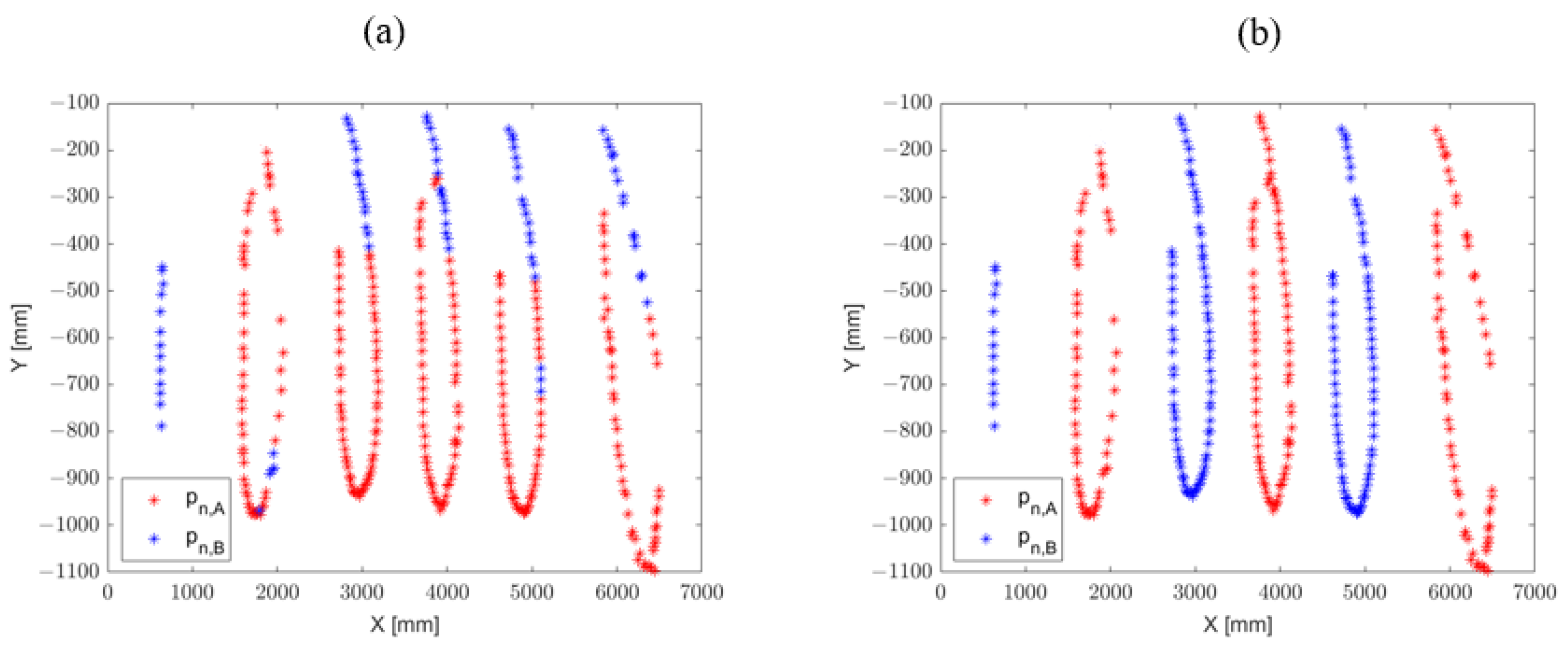

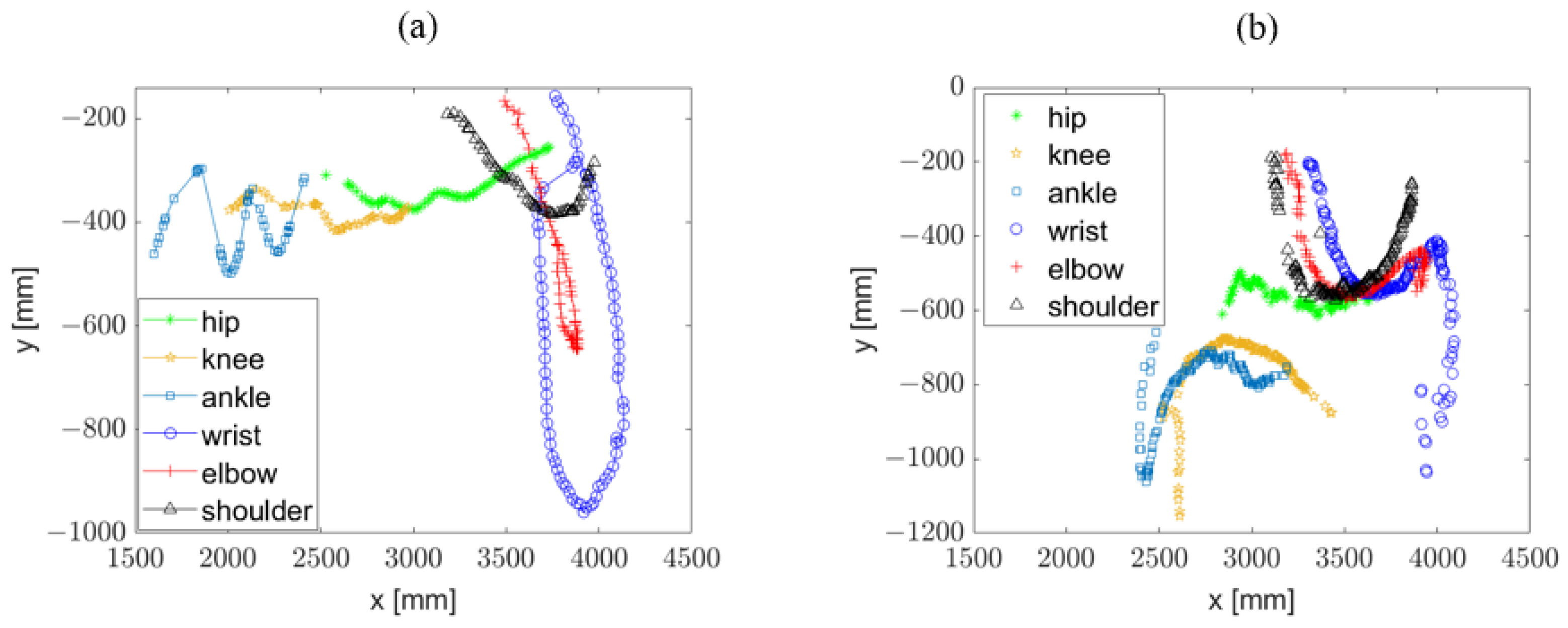

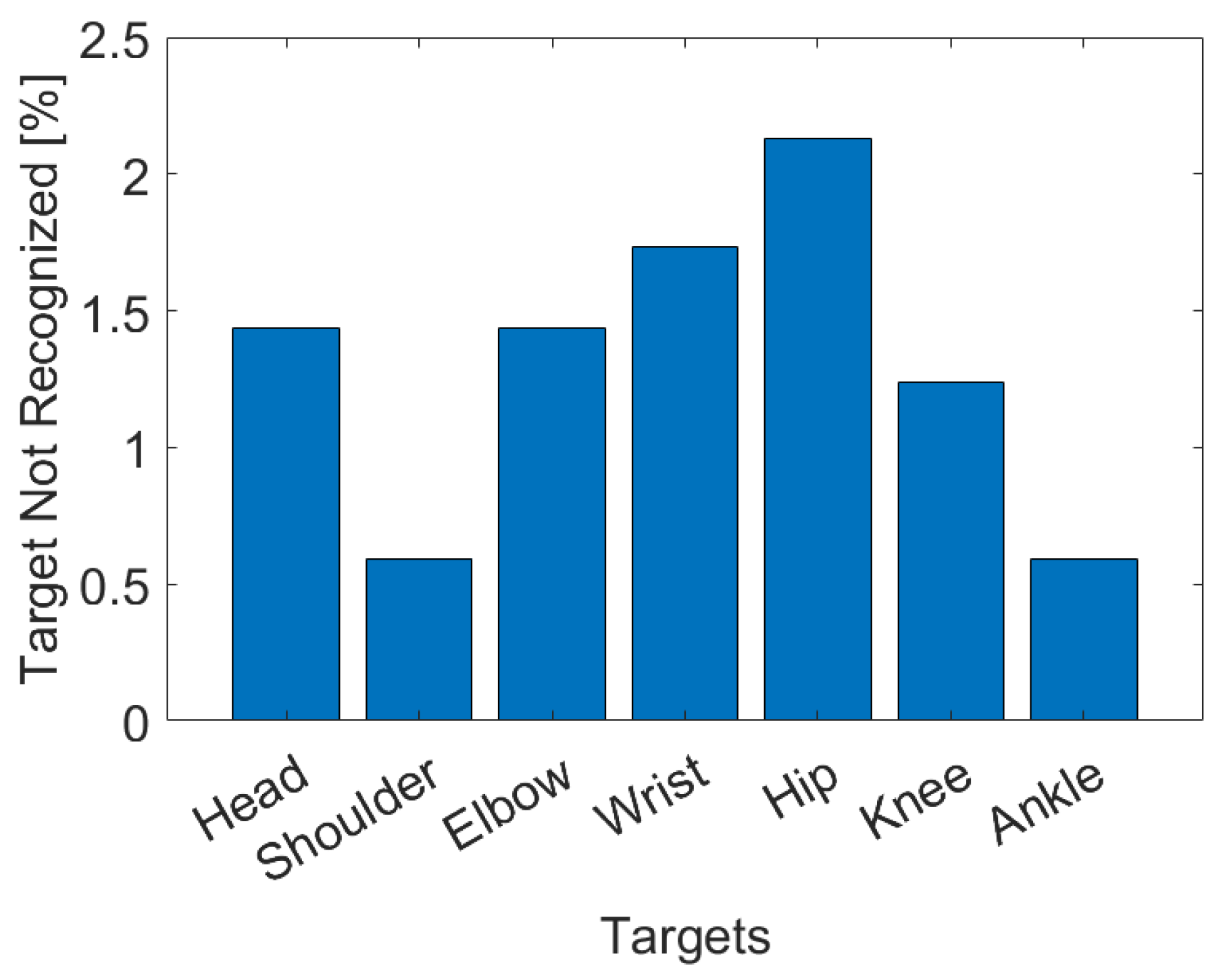

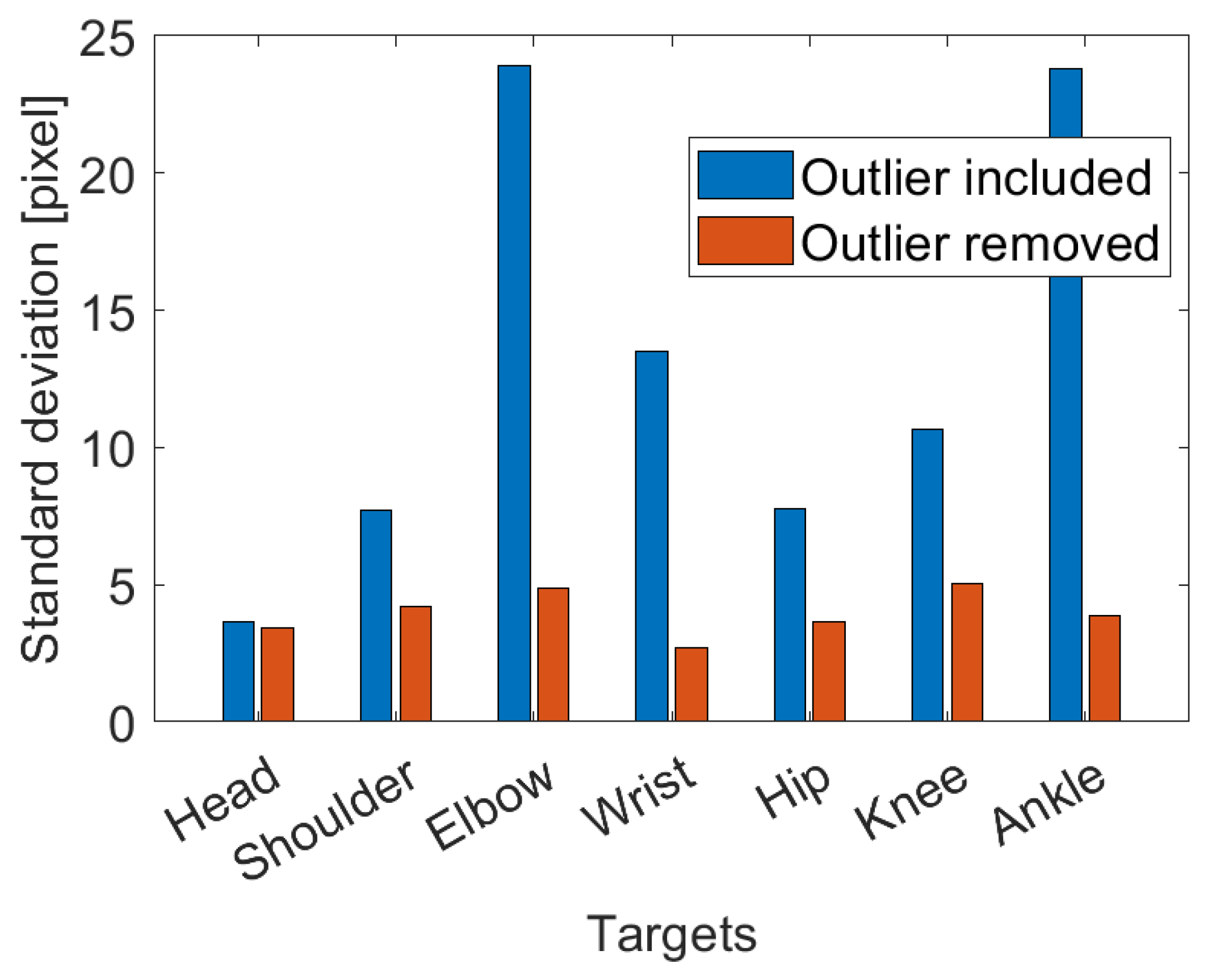

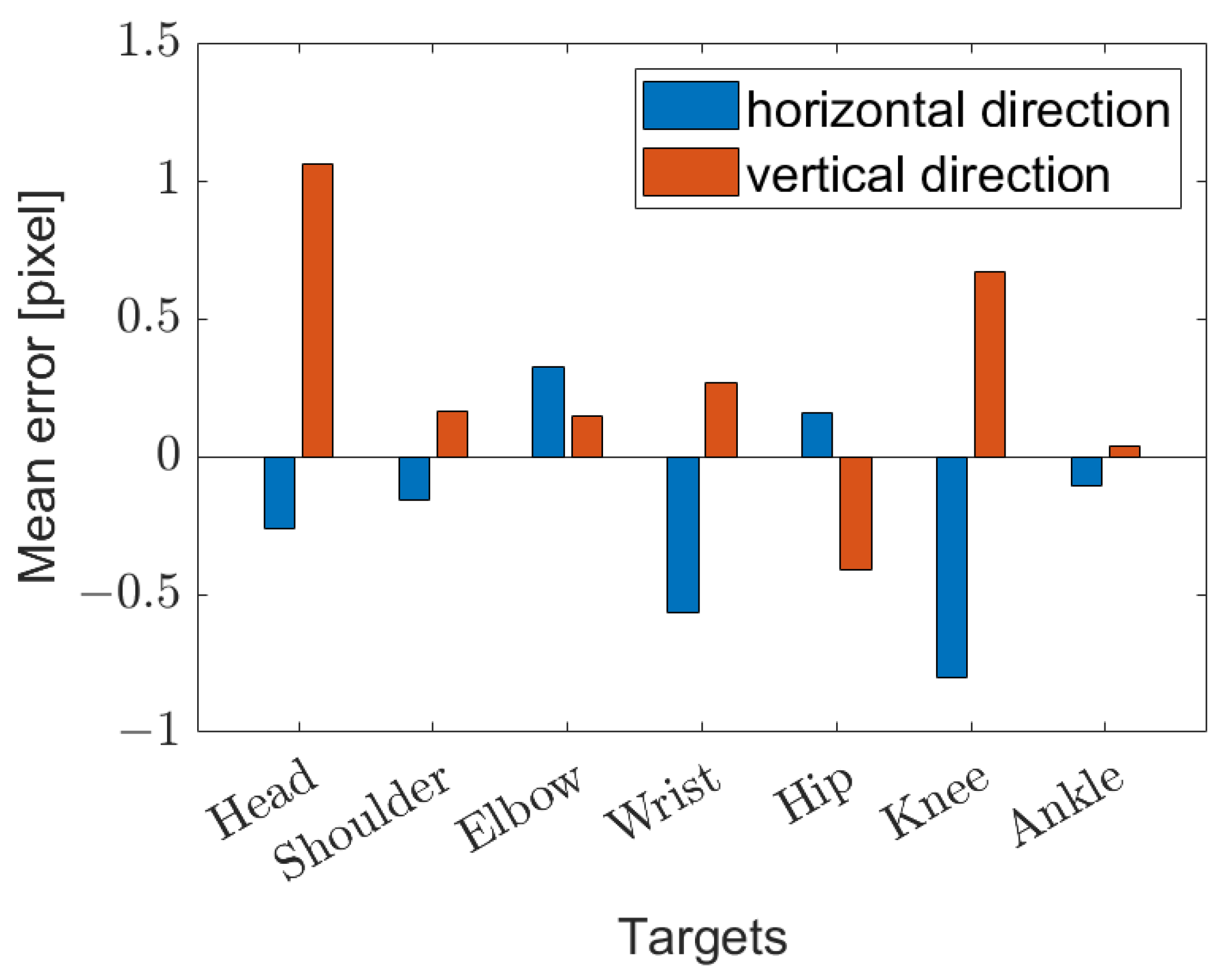

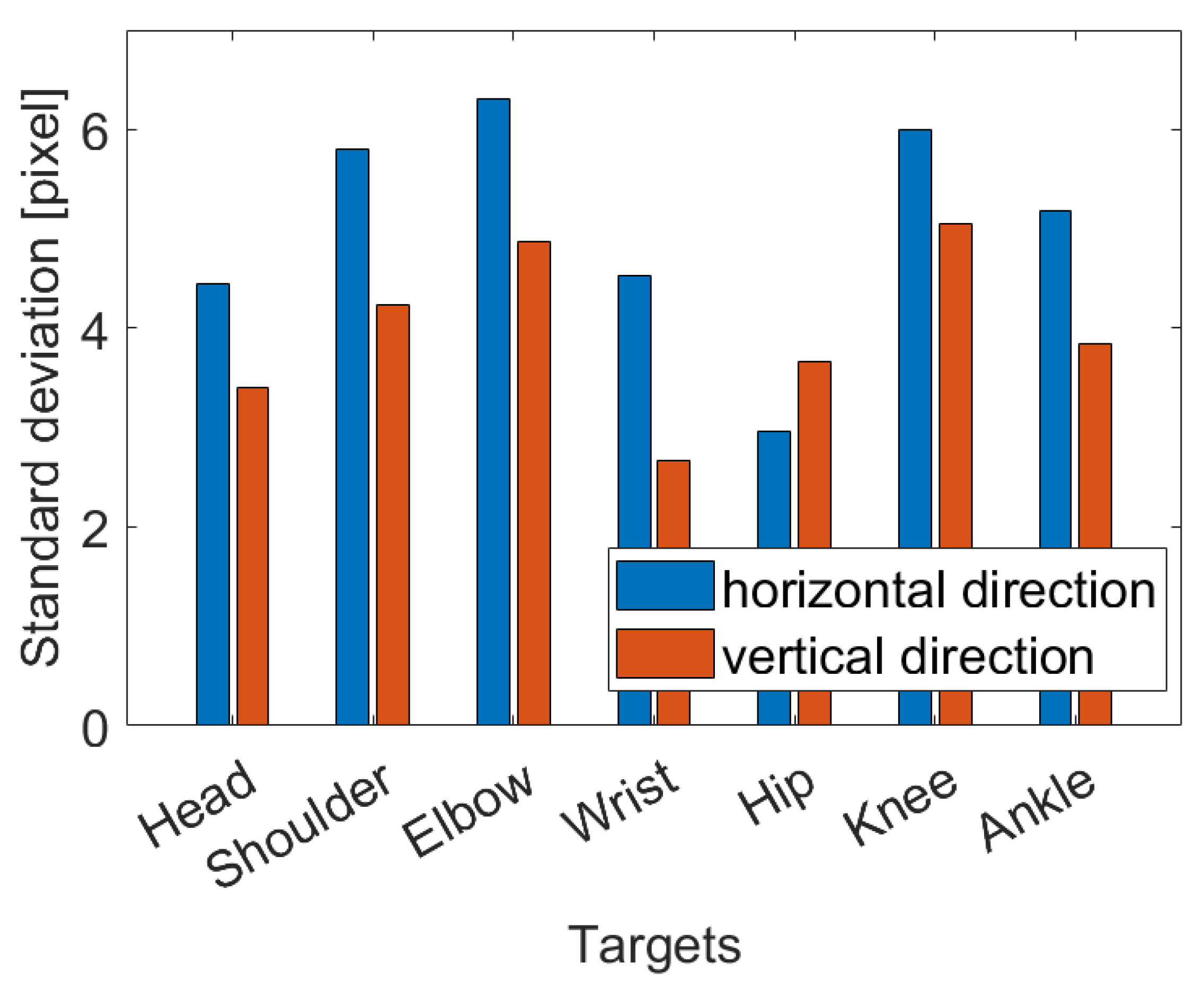

3.2. Method Performance Analysis on New Videos

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| ANN | Artificial Neural Network |

| FCN | Fully Convolutional Neural Networks |

| AFOV | Angular Field Of View |

| CNN | Convolutional Neural Networks |

| ROI | Region of Interest |

| IoU | Intersection over Union |

| NaN | Not a Number |

| SVW | Sport Video in the Wild |

References

- Seifert, L.; Boulesteix, L.; Chollet, D.; Vilas-Boas, J. Differences in spatial-temporal parameters and arm–leg coordination in butterfly stroke as a function of race pace, skill and gender. Hum. Mov. Sci. 2008, 27, 96–111. [Google Scholar] [CrossRef] [PubMed]

- Cosoli, G.; Antognoli, L.; Veroli, V.; Scalise, L. Accuracy and precision of wearable devices for real-time monitoring of swimming athletes. Sensors 2022, 22, 4726. [Google Scholar] [CrossRef] [PubMed]

- Gong, W.; Zhang, X.; Gonzàlez, J.; Sobral, A.; Bouwmans, T.; Tu, C.; Zahzah, E.h. Human pose estimation from monocular images: A comprehensive survey. Sensors 2016, 16, 1966. [Google Scholar] [CrossRef] [PubMed]

- Fani, H.; Mirlohi, A.; Hosseini, H.; Herperst, R. Swim stroke analytic: Front crawl pulling pose classification. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; IEEE: Piscataway, NJ, USA, 2018. [Google Scholar] [CrossRef]

- Zecha, D.; Greif, T.; Lienhart, R. Swimmer detection and pose estimation for continuous stroke-rate determination. In Proceedings of the Volume 8304, Multimedia on Mobile Devices 2012, Burlingame, CA, USA, 22–26 January 2012. [Google Scholar] [CrossRef]

- Zecha, D.; Einfalt, M.; Eggert, C.; Lienhart, R. Kinematic pose rectification for performance analysis and retrieval in sports. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018. [Google Scholar] [CrossRef]

- Wei, S.E.; Ramakrishna, V.; Kanade, T.; Sheikh, Y. Convolutional pose machines. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 July 2016; pp. 4724–4732. [Google Scholar]

- Lugaresi, C.; Tang, J.; Nash, H.; McClanahan, C.; Uboweja, E.; Hays, M.; Zhang, F.; Chang, C.L.; Yong, M.G.; Lee, J.; et al. Mediapipe: A framework for building perception pipelines. arXiv 2019, arXiv:1906.08172. [Google Scholar]

- Cao, Z.; Simon, T.; Wei, S.E.; Sheikh, Y. Realtime multi-person 2d pose estimation using part affinity fields. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7291–7299. [Google Scholar]

- Zhou, T.; Wang, W.; Liu, S.; Yang, Y.; Van Gool, L. Differentiable multi-granularity human representation learning for instance-aware human semantic parsing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 July 2021; pp. 1622–1631. [Google Scholar]

- Wang, W.; Zhou, T.; Qi, S.; Shen, J.; Zhu, S.C. Hierarchical human semantic parsing with comprehensive part-relation modeling. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 3508–3522. [Google Scholar] [CrossRef] [PubMed]

- Zhao, Y.; Li, J.; Tian, Y. Parsing Objects at a Finer Granularity: A Survey. arXiv 2022, arXiv:2212.13693. [Google Scholar]

- Cohen, R.C.Z.; Cleary, P.W.; Mason, B.R.; Pease, D.L. The role of the hand during freestyle swimming. J. Biomech. Eng. 2015, 137. [Google Scholar] [CrossRef] [PubMed]

- Greif, T.; Lienhart, R. An Annotated Data Set for pose Estimation of Swimmers; Technical Report; University of Augsburg: Augsburg, Germany, 2009. [Google Scholar]

- Einfalt, M.; Zecha, D.; Lienhart, R. Activity-conditioned continuous human pose estimation for performance analysis of athletes using the example of swimming. arXiv 2018, arXiv:1802.00634. [Google Scholar]

- Zecha, D.; Einfalt, M.; Lienhart, R. Refining joint locations for human pose tracking in sports videos. In Proceedings of the 2019 IEEE CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 16–20 June 2019; IEEE: Piscataway, NJ, USA, 2019. [Google Scholar] [CrossRef]

- Mo, Y.; Wu, Y.; Yang, X.; Liu, F.; Liao, Y. Review the state-of-the-art technologies of semantic segmentation based on deep learning. Neurocomputing 2022, 493, 626–646. [Google Scholar] [CrossRef]

- Berstad, T.J.D.; Riegler, M.; Espeland, H.; de Lange, T.; Smedsrud, P.H.; Pogorelov, K.; Stensland, H.K.; Halvorsen, P. Tradeoffs using binary and multiclass neural network classification for medical multidisease detection. In Proceedings of the 2018 IEEE International Symposium on Multimedia (ISM), Taichung, Taiwan, 10–12 December 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–8. [Google Scholar]

- Mikołajczyk, A.; Grochowski, M. Data augmentation for improving deep learning in image classification problem. In Proceedings of the 2018 International Interdisciplinary PhD Workshop (IIPhDW), Swinoujscie, Poland, 9–12 May 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 117–122. [Google Scholar]

- Giulietti, N.; Discepolo, S.; Castellini, P.; Martarelli, M. Correction of Substrate Spectral Distortion in Hyper-Spectral Imaging by Neural Network for Blood Stain Characterization. Sensors 2022, 22, 7311. [Google Scholar] [CrossRef] [PubMed]

- Turner, R.; Eriksson, D.; McCourt, M.; Kiili, J.; Laaksonen, E.; Xu, Z.; Guyon, I. Bayesian optimization is superior to random search for machine learning hyperparameter tuning: Analysis of the black-box optimization challenge 2020. In Proceedings of the NeurIPS 2020 Competition and Demonstration Track, Virtual, 6–12 December 2020; PMLR: New York, NY, USA, 2021; pp. 3–26. [Google Scholar]

- Agrawal, T. Bayesian Optimization. In Hyperparameter Optimization in Machine Learning; Springer: Berlin/Heidelberg, Germany, 2021; pp. 81–108. [Google Scholar]

- Sun, S.; Cao, Z.; Zhu, H.; Zhao, J. A survey of optimization methods from a machine learning perspective. IEEE Trans. Cybern. 2019, 50, 3668–3681. [Google Scholar] [CrossRef] [PubMed]

- Iakubovskii, P. Segmentation Models Pytorch. 2019. Available online: https://github.com/qubvel/segmentation_models.pytorch (accessed on 1 February 2023).

- Nogueira, F. Bayesian Optimization: Open Source Constrained Global Optimization Tool for Python. 2014. Available online: https://github.com/fmfn/BayesianOptimization (accessed on 1 February 2023).

- Refaeilzadeh, P.; Tang, L.; Liu, H. Cross-validation. Encycl. Database Syst. 2009, 5, 532–538. [Google Scholar]

- Safdarnejad, S.M.; Liu, X.; Udpa, L.; Andrus, B.; Wood, J.; Craven, D. Sports videos in the wild (svw): A video dataset for sports analysis. In Proceedings of the 2015 11th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Ljubljana, Slovenia, 4–8 May 2015; IEEE: Piscataway, NJ, USA, 2015; Volume 1, pp. 1–7. [Google Scholar]

| Target Name | bs | lr |

|---|---|---|

| Whole Body | 5 | 0.0031 |

| Head | 5 | 0.0001 |

| Shoulder | 8 | 0.0001 |

| Elbow | 8 | 0.0001 |

| Wrist | 8 | 0.0002 |

| Hip | 7 | 0.0002 |

| Knee | 8 | 0.0015 |

| Ankle | 8 | 0.0011 |

| Mean Error | Standard Deviation | |||

|---|---|---|---|---|

| [pixel] | [mm] | [pixel] | [mm] | |

| Horizontal direction | −0.2 | −0.86 | 2.6 | 10.8 |

| Vertical direction | 0.3 | 1.17 | 2.0 | 8.6 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Giulietti, N.; Caputo, A.; Chiariotti, P.; Castellini, P. SwimmerNET: Underwater 2D Swimmer Pose Estimation Exploiting Fully Convolutional Neural Networks. Sensors 2023, 23, 2364. https://doi.org/10.3390/s23042364

Giulietti N, Caputo A, Chiariotti P, Castellini P. SwimmerNET: Underwater 2D Swimmer Pose Estimation Exploiting Fully Convolutional Neural Networks. Sensors. 2023; 23(4):2364. https://doi.org/10.3390/s23042364

Chicago/Turabian StyleGiulietti, Nicola, Alessia Caputo, Paolo Chiariotti, and Paolo Castellini. 2023. "SwimmerNET: Underwater 2D Swimmer Pose Estimation Exploiting Fully Convolutional Neural Networks" Sensors 23, no. 4: 2364. https://doi.org/10.3390/s23042364

APA StyleGiulietti, N., Caputo, A., Chiariotti, P., & Castellini, P. (2023). SwimmerNET: Underwater 2D Swimmer Pose Estimation Exploiting Fully Convolutional Neural Networks. Sensors, 23(4), 2364. https://doi.org/10.3390/s23042364