Abstract

Nowadays, Artificial Intelligence systems have expanded their competence field from research to industry and daily life, so understanding how they make decisions is becoming fundamental to reducing the lack of trust between users and machines and increasing the transparency of the model. This paper aims to automate the generation of explanations for model-free Reinforcement Learning algorithms by answering “why” and “why not” questions. To this end, we use Bayesian Networks in combination with the NOTEARS algorithm for automatic structure learning. This approach complements an existing framework very well and demonstrates thus a step towards generating explanations with as little user input as possible. This approach is computationally evaluated in three benchmarks using different Reinforcement Learning methods to highlight that it is independent of the type of model used and the explanations are then rated through a human study. The results obtained are compared to other baseline explanation models to underline the satisfying performance of the framework presented in terms of increasing the understanding, transparency and trust in the action chosen by the agent.

1. Introduction

In recent years, the increase in the application of Machine Learning (ML) techniques, due to the availability of faster computers and the need to analyze a large amount of data, has renewed interest in such algorithms. However, ML algorithms sometimes lack explainability and transparency, which is fundamental for being trusted by users [1,2,3,4]. In particular, this is still an unsolved problem for Reinforcement Learning (RL), especially for model-free methods. This kind of techniques estimates the optimal policy without using or evaluating the dynamics of the environment. For this reason, they are difficult to explain, but, on the other hand, these RL techniques have applications in sensitive fields such as medicine [5,6] and finance [7], where choosing one action over another can lead to substantial differences in outcome [4]. Overall, the problem of generating explanations for model-free RL is very important and interesting.

This paper aims to introduce improvements to an existing method [8,9] for generating an explanation for model-free RL agents. The general idea is to predict the information at the next time steps and the most important aspects of a particular problem using a combination of Bayesian Networks (BNs) and Recurrent Neural Networks (RNNs). In detail, we focused on creating explanations for both the actual and counterfactual questions, i.e., respectively, “why” and “why not”. Consequently, this model is computationally evaluated in three RL benchmark environments (two continuous and one discrete) using different trained RL agents and the results are compared to the literature. Lastly, the explanations generated are comparatively rated in a human study together with baseline approaches to check their quality.

The paper is structured as follows: first, the theoretical background is introduced; then, the related works, and in particular Madumal et al.’s method [8,9], are presented. In the following section, our methodology is explained in detail and the experimental results are shown. In the end, a comparison of the results is provided and a discussion about the highlights of this research and future works is presented.

2. Background

In this section, the basic theoretical knowledge is discussed. First, RL will be briefly introduced. Then, BNs are described with the NOTEARS algorithm that allows us to obtain the directed acyclic graph.

2.1. Reinforcement Learning

RL is one of the three learning paradigms in ML next to Supervised and Unsupervised Learning. In RL, there is an agent, which is the learner and decision-maker: it has to understand how to use the information of the environment to choose the best action. Everything that interacts with the agent is called the environment. The agent can influence and change the environment through its actions while the environment gives rewards whose sum must be maximized over time.

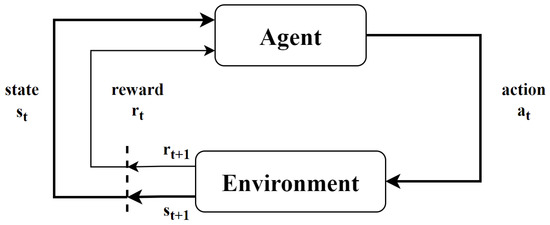

In more detail, at each discrete time step the agent receives the actual state from the environment and decides on an action , where and are the state and action spaces, respectively. As a consequence of the action, the agent will receive a numerical reward and the environment will update to a new state . This procedure is shown in Figure 1. The agent has to choose actions to maximize the sum of rewards , where is a discounting factor. An additional characterizing property is that the probability of each value for and depends only on the state and action and not on earlier states and actions. This property is called the Markov property and characterizes the Markov Decision Process (MDP) framework. An MDP is a tuple , where and are previously defined, is the probability of transitioning from state to state after taking action and is the reward function such that . Another option is to consider the reward function using the transition probability: . The MDP is the basis of the RL algorithms we will further explore in the following: the model-free methods. These algorithms do not need any knowledge of the transitional probability distribution of the environment, but they consider only the Q-function under the policy , which is defined simply as a distribution over actions given states: . In particular, the methods used in this work are Sarsa, Deep Q-Network (DQN) and Policy Gradient.

Figure 1.

Agent–environment interaction in RL [10].

2.2. Sarsa

The first RL algorithm we will study is Sarsa, a model-free and on-policy method: a policy has to be followed to apply this algorithm. The first step of the Sarsa method [10] is to learn the action value function . At the beginning of the learning process, a Q-table () is initialized as a matrix of zeros. The dimensions of this table are defined by the number of states and actions . The agent looks at this matrix in order to determine its next action, i.e., using an -greedy strategy, where is a fixed constant that represents the probability of choosing a random action. After taking an action, the Q-table is updated considering the following rule:

where is the learning rate and is the discounting factor. This update is carried out after each step until a terminal state is reached. As we can see from the updating Formula (1), at each iteration a quintuple of events is needed. This quintuple gives the name Sarsa to this algorithm.

2.3. DQN

Before introducing DQN, we need to give some basic notions on Q-learning. The Q-learning algorithm [11] utilizes the same basic ideas of the Sarsa algorithm. We start with a Q-table initialized at zero and, at each step, the Q-value [10] is updated using now the following rule:

where as always is the learning rate and the discounting factor. The DQN agent is a combination of the previous RL method and a class of Artificial Neural Networks (ANNs) called Deep Neural Networks (DNNs). The use of ANNs is justified by the fact that when a ReLU function is utilized as an activation function, the ANN is an universal function approximator [12]. Therefore such ANNs can well approximate any Lebesgue integrable function with respect to distance if the network’s depth is sufficient enough. In this particular case, the approximate value function is where are the parameters (weight of the arcs) of the Q-network at iteration i. We refer to the neural network function approximator as the Q-network. With this structure, the agent can achieve a high level of performance on different problems without using different specific feature sets. At each time step t, the agent’s experience is stored in a dataset . The Q-learning updates are applied during the learning on minibatches of experience , taken uniformly at random from the memory D. Then, the loss function used for the updating is defined as follows:

where is the target at iteration i. By differentiating the loss function with respect to the parameters, we obtain:

Instead of computing the full expectations of the gradient, it is often better to optimize the loss function by stochastic gradient descent. This approach has several advantages over standard Q-learning. The most important is that each step of the experience is used to update many different weights and that also increases the efficiency of the method. Another interesting aspect is that randomizing the samples allows for breaking the correlations between consecutive samples. However, there are also flaws. It is easy to get stuck in loops near a poor local minimum or even diverge. However, using experience replay, it is possible to avoid oscillations or divergence in the parameters.

2.4. Policy Gradient

All the methods previously presented are action-value methods: they learn the values of the action and they choose the one that maximizes the estimated action value. In this section, different kinds of processes are analyzed that learn a parametrized policy without directly using any value function. Let be the set of parameters that parametrize the policy (we will use for brevity). Thus

is the probability of taking action a at time t given the state s at time t and parameters . Then, a scalar performance measure has to be maximized with respect to the policy parameter. Using the gradient ascent method, we obtain:

where is the gradient of the performance measure with respect to the policy parameter . This is the general idea of the methods known as Policy Gradient methods [13]. As a performance function, the expected return following a parametrized policy can be utilized:

where is the total return following the trajectory . The challenge here is to calculate the gradient of an expectation. This calculation is the result of the Policy Gradient Theorem [13], which states:

An important aspect that has not been described yet is how we can parametrize our policy . The only condition that we have to assure is the differentiability with respect to the parameter . Considering every policy for which is finite and is a good choice.

2.5. Bayesian Network

A BN consists of a Directed Acyclic Graph (DAG) containing vertices V and edges E and a set of conditional probability distributions P. A DAG is a graph where all the edges point in a specific direction and there are no cycles (it is not possible to start from any node and return to it following the direction of the edges). Each vertex in corresponds to a feature and the edges represent a dependency between two features. This graph G is also called the structure of the BN. Another interesting domain is the one relative to Dynamic Bayesian Networks (DBNs). A DBN deals with variables in a BN that change with time. For the definition of a DBN, we need two structures: a prior network that represents the interactions between variables at time and a second transition network that can illustrate how each variable is conditioned by the one from the previous (or same) time slices [14].

2.6. NOTEARS Algorithm

In this subsection, the principal features of the NOTEARS algorithm are introduced; for major details, we refer to [15]. The task of learning DAGs from data is an extremely complex problem since it is an NP-hard problem [16] and NP-complete [17]. For this reason, it is helpful to describe this combinatorial problem using a particular smooth function as follows:

where

and

Here, W is the weighted adjacency matrix, which is a particular case of adjacency matrices where, instead of , there are the weights of the arcs between nodes. In addition, is a score function and is a smooth function whose level set at zero characterizes directed acyclic graphs. The algorithm proposed by [15] for solving (9) consists of three steps:

- Writing the constrained problem as a sequence of unconstrained subproblems;

- Solving the subproblems: first the primal one and then dual ascent is applied. This process is iterated until we obtain that the constraint is verified with a tolerance of ;

- Utilizing thresholding: eliminating all the edges that present a weight lower than a threshold parameter .

All these steps compose the NOTEARS algorithm, which is summarized in Algorithm 1. The computational complexity of this method is cubic in the number of nodes, although the constant is small for sparse matrices and it outperforms many of the existing algorithms when the in-degree is large. However, there are no studies on the worst-case iteration complexity since in practice not many () iterations are required [15]. In the end, although the method guarantees to find only stationary points, the scores attained by the solutions found are comparable to the global optimal scores [15].

| Algorithm 1 NOTEARS algorithm [15] |

| Require: Initial guess , progress rate , tolerance , threshold |

| 1: for do |

| 2: Solve primal problem: |

| 3: argmin with s.t. |

| 4: Dual ascent: |

| 5: |

| 6: if then |

| 7: |

| 8: break |

| 9: end if |

| 10: end for |

| 11: Thresholding: |

| 12: |

| 13: Return |

2.7. Explainable Reinforcement Learning Terminology

In recent years, with the explosion of Explainable Reinforcement Learning (XRL) usage in practice, the ambiguity in the vocabulary considered in different research in this field has increased [18,19]. This fact creates huge problems if comparing papers adopting contrasting terminologies. The most relevant example of ambiguity is in the definition of the central concept of an explainable method [20]. A recurrent circumstance is, indeed, when authors do not explicitly give the definition of what they consider explainable or interpretable [21], generating in this way more doubt. Although in different papers [18,22,23] explainability is considered as a synonym of interpretability, we recognize these two terms as distinct: a model is qualified as interpretable if we can intrinsically understand it without any further developments; while an approach is explainable when we apply an external algorithm that can generate a reasonable explanation. Therefore, interpretability can be regarded as a passive and explainability as active characteristic.

However, this is not the only equivocal case in the terminology. Other controversial examples are: transparency, comprehensibility, simulatability and decomposability [19,21,24,25]. In particular, for the purpose of this paper, we focus our attention on the definition of the following concepts:

- Transparency: the ability to give relevant information about a model, e.g., feature relationships;

- Trustworthy: when a user can reasonably be confident in the choices of the algorithm;

- Understandable: if a user can comprehend why the explanation was generated in that way;

- Satisfying: the feeling of having all the information well organized;

- Safe-reliable: being able to constantly behave in the same way;

- Complete: when all the fundamental components are presented in the explanation.

After this brief introduction to the vocabulary adopted in this paper, we can move to the literature review presented in the next section.

3. Related Work

In this section, past work on the explanation and interpretability of RL methods is discussed. In particular, we focus first on a general overview of the most important previous publications and later we give the details of the existing method from which we will develop our methodology.

3.1. General Overview

The literature that explores the explanation of policies and actions in an MDP context is extensive. In [26], a first attempt to generate explanations for MDP selects and uses relevant variables of states of factored MDPs, chosen by domain experts. Then, attention was brought to the generation of minimal explanations for MDPs using three domain-independent templates [27]. Moreover, policy explanations were created from summarized policies and provided contrastive explanations for counterfactual events [28]. Similarly to our approach, ref. [29] proposes to use DBNs for representing the transition function in factored MDPs, since learning DBNs in factored MDPs is a well known solved problem [30]. It is fundamental for the increased transparency of the machine to be able to create explanations for the counterfactual case, which simulates what could happen under different conditions. These answers will give a detailed explanation of the agent’s behaviour [22,31,32].

Another category of methods for XRL analyzed images of Atari games and constructed counterfactual explanations through a deep generative model, obtaining interesting results in the identification of flawed agents [33]. Other previous works that used images for understanding deep RL agents focused on saliency maps and t-SNE embeddings [34,35,36].

A different approach to XRL is given by reward decomposition into meaningful reward types, to directly compare the kind of action done for a sufficiently minimal explanation [37,38,39]. Similarly, it is possible to decouple the state feature extraction to reduce the search space, accelerate the training and give a better interpretable representation [40]. However, these methods do not use an underlying causal model, which is fundamental for our purposes. Causal models are usually defined by structural equations that can also model counterfactual explanations [41]. In the next subsection, we focus our attention on the Action-Influence graph approach which is the starting point for defining our methodology.

3.2. Action-Influence Model Approach

In this subsection, we introduce Madumal et al.’s [8,9] method for the generation of explanations in RL using Action-Influence graphs. In these papers, the authors apply different approaches (linear regression, decision tree, multi-layer perceptron, decision policy tree and decision policy tree with fixed depth) to learn the structural equations of the causal model and compare the results between them. The explanations are generated using an Action-Influence graph given by an expert in the domain. In the following, the formal definition of the Action-Influence model is provided.

Definition 1

([9]). An Action-Influence model is a tuple , where is a tuple in which is the set of the exogenous variables, the set of endogenous variables, is a function that denotes the range of values of every variable in and A is the set of actions and F is the set of structural equations.

Due to this graph, it is possible to understand how the agent’s actions interact with the environment and to explain the relationship between each feature. Before giving the definitions of the minimal explanation, we need to introduce the concept of instantiation [9] for both the actual and counterfactual scenarios.

Definition 2

([9]). The actual instantiation of a model M is defined as , where S is the vector of state variable values from an MDP and V gives the set of the endogenous variables of the action influence model.

Definition 3

([9]). The counterfactual instantiation of a model M for a counterfactual action B is defined as , where Z gives the instantiation of all the predecessor variables of action B with current state values and the instantiation of all successor nodes (of B) of the causal chain by forwarding simulation.

Now, it is possible to understand how minimally complete explanations are generated. For the answer to the “why” question, the authors from [8] provide the following definition that uses a decision tree as a predictor.

Definition 4

([8]). Given the set of decision nodes for the action a from a decision tree , we define a minimally complete explanation for a “why” question as a pair , in which is the vector of the reward variables reached by following the causal chain of the graph to sink nodes; is such that is the maximal set of variables in which , where is the set of intermediate nodes of the causal chain of action a, with and giving the values under the actual instantiation .

For the counterfactual question, they use a different concept based on minimally complete contrastive explanation.

Definition 5

([8]). Given the set of decision nodes for the action a from a decision tree , we define a minimally complete contrastive explanation for a “why not” question as a pair , in which is the vector of the reward variables reached by following the causal chain of the graph to sink nodes; is such that is the maximal set of variables in which , where is the set of intermediate nodes of the causal chain of the counterfactual action b, is the set of the counterfactual decision nodes (generated obtaining the counterfactual action and then traversing the tree) and values and are contrasted using the actual instantiation and the counterfactual instantiation .

Then, they also added the distal explanation: forecasting the most significant action in that episode through an RNN. For this reason, the explanations must be modified in order to consider the new information obtained.

Definition 6

([8]). Given a minimally complete contrastive explanation, current action a and a prediction model , a minimally complete distal explanation is a tuple , where and do not change from Definition 5, and gives the distal action predicted through such that , where A is the action set of the agent and gives the action set of the causal chain of current action a.

Hence, for the “why” questions, the explanation is created following the chain of the Action-Influence graph given by the action predicted using one of the previous techniques and the distal action. On the other hand, the counterfactual explanation is produced by comparing the chain given by the counterfactual action with the actual one.

The environment that is used for testing the results on the explanation in [8] is the Starcraft II scenario. Starcraft II is a science fiction real-time strategy video game where two or more players compete against each other. Each person has to develop his base and then defend it or attack and defeat the other players. Due to its high complexity, this game started to be an important benchmark for the creation of competitive AIs that could obtain better results than any other human player [42]. The state variables are W worker number, S supply depot number, B barracks number, E enemy location, ally unit number, ally unit health, ally unit location, destroyed units and destroyed buildings. The set of actions is composed of: build supply depot, build barracks, train offensive unit and attack. An example of counterfactual explanation for the question “why not build barracks ()?” produced by [8] is:

“Because ally unit number () is less than the optimal number 18, it is more desirable do the action train marine () to enable the action attack () as the goal is to have more Destroyed Units () and Destroyed buildings ()”.

In summary, this method begins with a technique for the prediction of the action performed by the agent; then, following the correct chain from the Action-Influence graph and using the distal action obtained from a RNN, the explanation is created. For more information and examples, we refer to [8,9]. This method is the starting point of our approach, but in our case, some changes will be introduced to obtain improvements in the accuracy of the prediction and increase the details of the explanations.

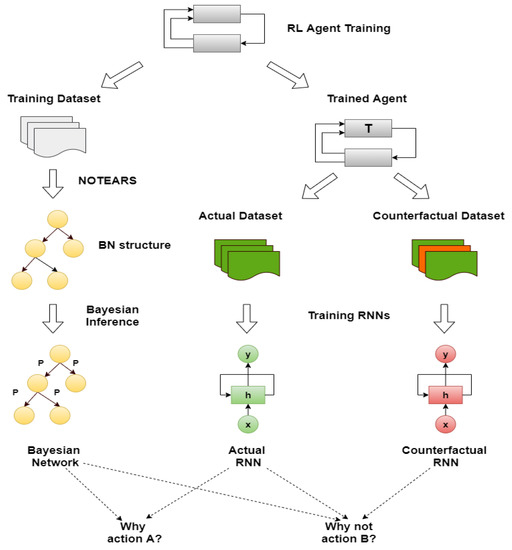

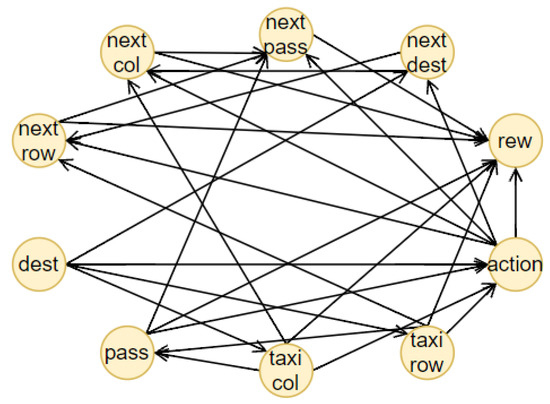

4. Our Method

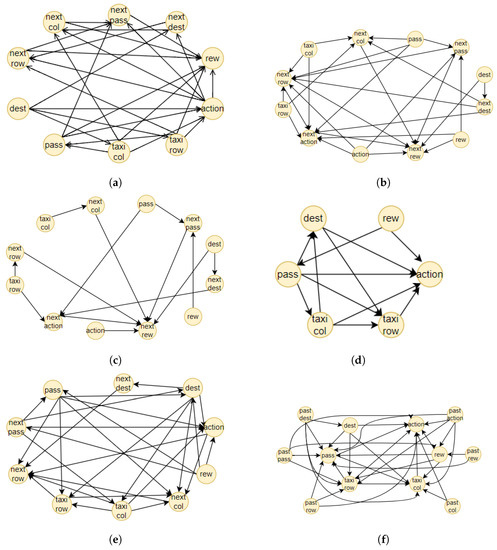

The proposed method consists of four components, illustrated together with the complete pipeline of the methodology in Figure 2. The first one is an RL agent, trained with a model-free method. During the training, the state-action pairs are saved in a dataset, the memory, to build the BN (the second component). The memory is composed of all the actual states, the action chosen by the agent, the next states and the reward obtained. From this dataset, we generated different causal graphs using different algorithms, represented in Figure 3. We decided to adopt our final methodology after a comparison of the different results obtained using approaches well known in the literature. In the first case, Figure 3a, we created a causal structure that is in between a BN and a DBN. In fact, for each of the memory’s characteristics, we consider a node in the BN. To create the structure of this BN, the NOTEARS algorithm, as discussed in Section 2.6, is utilized. The obtained graph is similar to a DBN but it does not present all the features in the next time step. In fact, we do not use any future information for the reward and action. Furthermore, during the last step of the NOTEARS algorithm (the thresholding), we include some assumptions to obtain a more realistic structure. The conditions are the following:

Figure 2.

Pipeline of the proposed methodology.

Figure 3.

Comparison of causal graphs generated with different algorithms and parameters for Taxi environment. (a) BN Graph created by the NOTEARS algorithm with threshold parameter . (b) DBN Graph created by the DYNOTEARS algorithm with threshold parameter . (c) DBN created by the DYNOTEARS algorithm with threshold parameter . (d) Causal graph generated from Direct LiNGAM using only the actual state values. (e) Causal graph generated from Direct LiNGAM using both the actual state values and the next ones. (f) Causal graph generated from VARLiNGAM with the threshold for the actual relation and for the next time step .

- Causality: all the state features influence their corresponding future values (so no future feature can influence current states or there will be a cycle that is not allowed);

- Stationarity of dependencies between variables: if there is a dependency between features at a one-time step, then it will be in every time step;

- Importance of the action: all the values of the actual state will be used to obtain the action and the action node is connected to all the future features.

These assumptions are justified by the observation of the other graphs. The first two hypotheses come from the DBN since they are fundamental conditions for generating this kind of graph. The third one is, instead, derived from the relations obtained using the Direct LiNGAM [43] and VARLiNGAM [44]. In the last graphs presented in Figure 3e,f, it is possible to see how all the state variables are connected to the action. This is also justified by the fact that the RL agent is trained to consider the state values and choose the best action in that particular instantiation. The resulting final graph is the closest to the ground truth compared to the others.

For the other tests, we generated two DBNs using the equivalent algorithm for DBNs to NOTEARS, i.e., DYNOTEARS [45]. As we can see from Figure 3b,c, the result is only satisfying when we use a small threshold parameter, i.e., , which means that the structural equations of the causal graphs considered are shallow and therefore subjected to higher fluctuation given different datasets (Figure 3b). If we increase this parameter to , many connections will be erased, resulting in a sparse graph (Figure 3c). For the remaining tests, we used Direct LiNGAM [43], which is a method applied for learning causal structures from data without any further assumption, relying only on the statistical method known as independent component analysis, and VARLiNGAM [44], which corresponds to the previous algorithm for studying time series. We first derived the structural equations by studying only the features at one-time step through the LiNGAM method. The resulting graph is presented in Figure 3d. As we can see, we have some contradictory results since it seems that the reward influences the passenger’s position and, most importantly, the action, while it is well known that the action causes the reward. This error is shown in the next graph in Figure 3e, where, in this case, all the features of the memory dataset were used as different characteristics. Lastly, we generate the causal graph using VARLiNGAM, with threshold parameters for the relations between actual state features fixed at and threshold for the next state features . The obtained structure is shown in Figure 3f. The causal graph obtained presents the same problem with the causality of the reward, but, on the other hand, it can understand the relationship between the state features. In fact, it is the only graph, together with the initial one, to recognize the destination as a root node. Moreover, if we compare the graph obtained with the algorithm NOTEARS considering also the next time step state features, in Figure 3a and the one generated through VARLiNGAM, in Figure 3f, we can notice that the relations discovered are principally the same. For these reasons, we consider the structure obtained using the NOTEARS algorithm correct and the best in this set of different models.

After the creation of the DAG for the BN, it is possible to learn the probabilities using inference. To this end, the dataset must be discrete. The discretization of the features depends on the particular environment that is studied. In our case, we will focus on three RL benchmarks (Taxi, Cartpole and Mountaincar) that will be explained in detail in the next section. In a discrete environment, e.g., Taxi, every feature is ready for inference, while in a continuous one, e.g., Cartpole and Mountaincar, we have to find the best clusters for each characteristic using the K-means algorithm. Usually, for the discretization of RL environments, tile coding is applied, which consists in dividing the continuous space into different tiles and then for each point evaluating if it is in a particular tile (1) or not (0). In this way, it is possible to obtain a vector of 0s and 1s as a discrete representation of each value. This process, the One-Hot-Encoding, is completely independent of the values that will be used during the simulation. To avoid this, we used the K-means clustering algorithm thus we have a better differentiation between each cluster considering the information gathered during the training. More information about the creation of the clusters for each environment is given in the following section. After the discretization, we applied the Bayesian estimators’ method assuming a Dirichlet distribution prior to learning the probabilities of the BN.

Consequently, it is possible to forecast the next state values using the freshly created BN. In fact, by giving to the network the state information and the actual (counterfactual) action, the prediction for the future time step will be the output of the BN. Following this concept, we enunciate the definition of the actual (counterfactual) prediction.

Definition 7

(Actual/Counterfactual Prediction).Given an initial vector of the state at a time t, and the actual (counterfactual) action , the actual (counterfactual) prediction of the Bayesian Network () is defined as the state values with the maximum probability, given and , i.e.:

This definition will be used later for the description of how an explanation is generated.

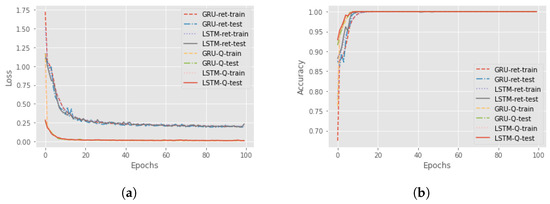

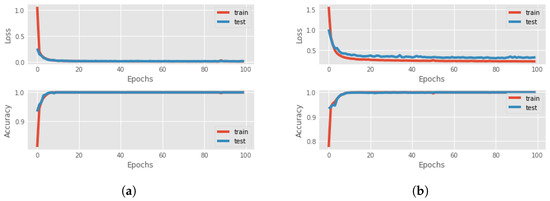

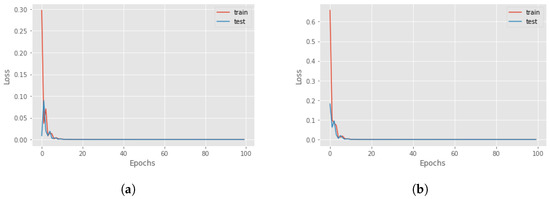

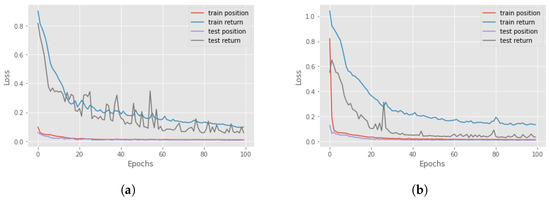

Now, we can focus on distal predictions. The idea of distal actions was first presented in [8], where a distal action is one of the most significant actions that is enabled if the agent realizes a particular sequence of actions. However, there is also different influential distal information which is valuable for a more detailed explanation. An example could be the final return of the episode. The third and fourth components of the proposed methodology are RNNs for the actual and counterfactual distal predictions. The third component aims to predict the distal return and action/state following the correct policy, and the fourth component to forecast the distal values if, at a particular time step, an action different from the correct one is executed. For these networks, we compared the performance of Long-short term memory (LSTM) cells and Gated Recurrent Units (GRUs) to find the better option. The features involved in the training of the network were the state characteristics, the actions executed and the actual accumulated returns or the Q-values. Four different RNNs were created to understand the best choice: using LSTM cells or GRU and if it is better to consider the actual return or if it is sufficient to only use the Q-value. In Figure 4, there is a comparison between all four different networks in terms of loss and accuracy during the training and test session over 100 epochs, for the Taxi environment. It can be seen that the best choice is to use the GRU network with Q-value, but in general, there are no significant differences with the GRU network that used the return. For this reason, in an environment where we have the Q-value, it is better to use it as a feature, while, if the Q-function is not known, it is possible to accomplish the same result using the actual accumulated returns.

Figure 4.

Comparison of losses and accuracy of RNNs with different cells and features for the Taxi problem. (a) Loss obtained during the training and test phase with different parameters. (b) Accuracy obtained during the training and test phase with different parameters.

The main difference between the RNNs for the actual prediction and the counterfactual one is how the memory for their training is composed. The actual RNN data are created following the optimal policy and saving all the information of each step. Meanwhile, for the counterfactual, the agent will always choose the optimal action following the trained RL agent until a “why not” question is asked. In that case, the counterfactual action is chosen and saved in the same way as indicated before. The final return and the distal state/action are given by following the correct actions after the counterfactual one. In both cases, it is fundamental to pre-process data: for the actions, One-Hot-Encoding is applied, while for the return it was beneficial to standardize the values to reduce the time of the training and the loss.

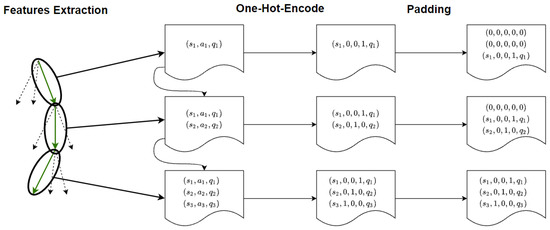

Another important problem is that each data point is a time series of potentially different lengths. At the start of the episode, the saved values v are only the one of the initial time step, so (where f represents the number of the features), while at the end, the saved data are composed of all the values of each previous step, i.e., if the episode length is l we have . However, each data point must have the same dimensions. To this end, it is possible to evaluate the maximum length of the episodes m. Then, all the elements that have a lower dimension than m will be filled with vectors of 0. This technique (Padding) is a well known method used in message [46] or image recognition [47]. Figure 5 represents the pipeline of the creation of the dataset for the RNN training. Now, we introduce a definition of distal prediction that will be useful for creating the final explanation.

Figure 5.

Creation of the dataset for the distal prediction.

Definition 8

(Distal Actual/Counterfactual Prediction).Given an initial vector of the state at a time t, , the one-hot-encoded actual (counterfactual) action () and the corresponding Q-value , the distal actual (counterfactual) prediction () is defined as the output of the actual (counterfactual) RNN given and as input.

All the information obtained from the previous components is fundamental for the explanation: the next state values prediction through the BN, the causal structure and the distal values obtained from the RNNs. However, the first step is to define what is the center of the explanation, i.e., the central variable.

Definition 9

(Actual central variable/node for “why” question).Given the state and , the vector of predicted values by the Bayesian Network at time step t, an actual central variable or node D in a Bayesian Network for the “why” question explanation is a node such that , where and are, respectively, the D components of the state vector and the predicted vector .

After this introductory part, it is possible to talk about the explanation for “why” questions. The following definition shows in detail how to use the information obtained previously to create an explanation.

Definition 10

(Explanation for “why” question).Given the state and an action , the explanation for the “why” question is a tuple:

where is the predicted values through the Bayesian Network, is the distal actual prediction through the actual RNN, is the parent set and the children set of the actual central node D and the reward node connected to D, which are the variables in the chain that are directly linked to the reward node.

Principally, we give the user all the information obtained through the BN and RNN predictions, connecting all of them and analyzing the structure of the DAG.

Similar to the previous definitions, there are almost identical definitions for the counterfactual case.

Definition 11

(Counterfactual central variable/node for “why not” question).Assume the state and let be the vector of the counterfactual predicted values by the Bayesian Network at a time step t. A central variable or node C in a Bayesian Network for a “why not” question explanation is the node such that , where and are, respectively, the C components of the actual vector and the counterfactual predicted vector .

Definition 12

(Explanation for “why not” question).Given the state , an actual action and a counterfactual action , the explanation for a “why not” question is a tuple:

where are, respectively, the vectors of predicted actual and counterfactual values through the Bayesian Network, are, respectively, the distal prediction through the actual and counterfactual RNNs, C is the counterfactual central variable/node, the parent set and the children set of the actual central node D and the reward node connected to D.

For the explanation of the “why not” question, the main idea is the same as the actual one: give to the user all the predicted information, linking them with each other through the central variables and the structure of the BN. In this way, it is also possible to directly compare the results of actual and counterfactual forecasting. Therefore, human awareness of the correctness of the agent will increase. An interesting event is when we have more than one central variable. In these scenarios, we will compute the chain for all the nodes that satisfy the properties described above.

In the next section, all these definitions are practically used to clearly explain what the results of the model will be.

5. Results

For the evaluation of the quality of the methodologies in XRL, different metrics are used to compare how well one approach behaves with respect to others [48]. In our case, we considered an application level and, then, a human level, as proposed in [49]. In the first case, we focused our attention on the accuracy of the prediction made by the model. In this way, it is possible to directly confront the results achieved in other papers [8,9], obtained always in the same environments and using the same agents. In fact, if the forecasts of the agent’s actions and outcomes are wrong, all the resulting explanation will be completely erroneous. In the second case, applying a survey [49], it is possible for human users to judge the proposed explanations and give grades on a Likert scale base. Moreover, we also implemented other inquiries from [27]; thus, we are able to rank the different statements considering multiple qualities and properties.

Therefore, in the following sections, we reported the experiments and results in two parts: one for the computational analysis and the other for the human study.

5.1. Computational Evaluation

The new method is now tested in three different benchmarks environments from OpenAI Gym [50] (Taxi, Cartpole and Mountaincar), with distinct RL agents (SARSA, Policy Gradient and DQN). The specific details of each problem and examples of generated explanations are described in the following subsections. For the parameter choice, we refer to Appendix A. In particular, the parameters presented in Table A1 were selected using optuna 2.9.1 [51] after 100 trials, while the parameters of the RNNs, illustrated in Table A2, were fixed at the beginning of the test.

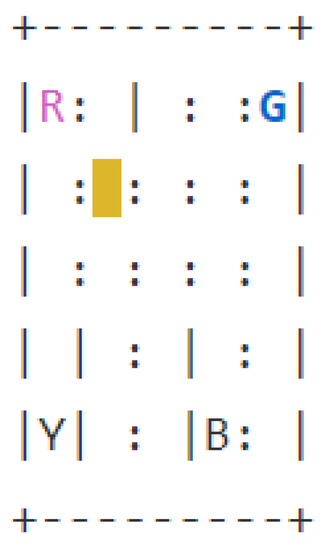

5.1.1. Taxi

The Taxi environment [52], represented in Figure 6, is a well known benchmark environment for developing RL algorithms. The problem can be considered as a simplified real-life problem: at the beginning of the episode, the taxi starts at a random square in a grid world and the passenger is at a random location. Then, the taxi driver has to drive to the passenger’s place, pick up the passenger, go to the passenger’s destination and drop off the traveller. The RL method used for the Taxi environment is Sarsa and Table A1 reports the parameters used for training. The structure of the BN, presented in Figure 7, is created using the NOTEARS algorithm (already implemented in the Python library causalnex [15]) with a thresholding parameter . For the analysis of the accuracy of the prediction, the agent completed 100 episodes and during each step the BN had to forecast the next action and next state values. The accuracy is reported in Table 1. After that, we calculated the AUROC to have a different measure of the quality of the prediction. In both cases, the results obtained are optimal.

Figure 6.

Rendering of the Taxi environment.

Figure 7.

Structure of the BN for Taxi environment where each node represents a feature: “dest” is the destination, “pass” is the passenger position, “taxi col” is the taxi column, “taxi row” is the taxi row, “action” is the action, “rew” is the reward, “next dest” is the next value for the destination, “next pass” is the next value for the passenger position, “next col” is the next value for the taxi column and “next row” is the next value for the taxi row.

Table 1.

AUROC and accuracy for the prediction of each future feature in the Taxi environment.

The distal values that have to be predicted are the final return of the episode and the distal action. In the Taxi environment, the most significant action during the episode is picking up the passenger and dropping him off at the correct destination. So, all the actions before the right “pick up” have a distal action “pick up”, while, after that, all the other steps have a distal action “drop off”. For this reason, the dataset for the training of the RNNs is created by applying the idea above: fixing the distal action to “pick up” for all the time steps until the passenger is in the taxi and then it will be identified as “drop off”. The parameters of the RNNs are presented in Table A2 while Figure 8 shows the results of the training and test of the RNNs.

Figure 8.

Results of the training and test of the RNNs for the distal prediction in the Taxi environment. (a) Loss and accuracy of the RNN for the distal actual prediction. (b) Loss and accuracy of the RNN for the distal counterfactual prediction.

To understand how the explanation is produced, it is helpful to describe two examples: one for the “why” question and the other for the “why not” question. First, we consider how to answer the “why” question. Consider the actual state given by , where the first element is the destination, the second one is the passenger position and then the taxi position represented, respectively, in column and row. Consider the actual action and the counterfactual . So the questions are: “why Go West?” and “why not Go South?”. The first step is evaluating the next state values through the BN. The results are the following: for the actual prediction, we obtain

and for the counterfactual prediction

Then, using the accumulated information during the episode, it is possible to predict the distal values through the trained RNNs. The actual value is

while the counterfactual value is

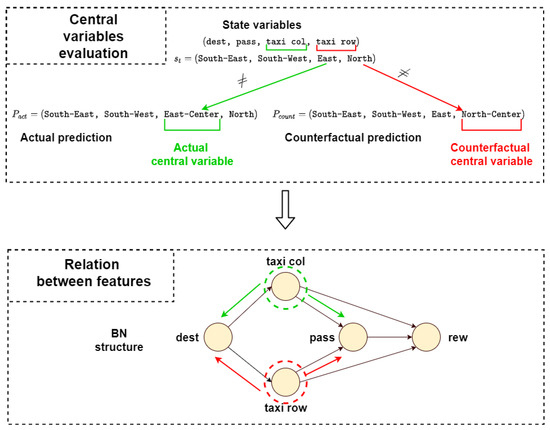

The last step before the creation of the explanation is finding the central variables in the structure of the BN, using Figure 7. The actual central variable is the taxi column (D) because and , while the counterfactual central variable is the taxi row (C), since and . Then, looking at the structure of the BN, it is possible to find automatically the actual and counterfactual chains composed of the parent and children set of the central variables and the nodes connected to the reward node in those chains. This process is summed up in Figure 9.

Figure 9.

Evaluation of the central variables and estimation of the actual (green) and counterfactual (red) chains for the determination of the feature relations used in the explanation.

Then, the answer to the “why” question is:“Since the Destination () is South-East (), it is desirable to do action Go West () in order to change the Taxi column (D) from East () to East-Center () to influence the Passenger position (), because Passenger position and Taxi column () are connected to the goal. Following this action, it will be possible to do Pick up the passenger and obtain a final return of ()”.

The answer to the “why not” question is instead:“Since the Destination () is South-East (), it is more desirable to do action Go West () in order to change the Taxi column (D) from East () to East-Center () instead of changing the Taxi row (C) from North () to North-Center () doing action Go South (), to influence the Passenger position (), because Passenger position and Taxi column () are connected to the goal. Following this action, it will be possible to do Pick up the passenger and obtain a final return of (, while following the counterfactual action will lead to Pick up the passenger and obtain a final return of ()”.

The structure of the explanation is created with the same idea for each environment: we start with the instantiation of the nodes in the parent set, then listing both actions (the actual and the counterfactual for the “why not” questions) and the predictions of the BN we introduce the central variable (comparing the actual instantiation with the predicted one) and finally, we describe the influences in the children and reward nodes. After this first sentence, there will be a distal explanation where the distal information will be reported.

5.1.2. Cartpole

The Cartpole environment, illustrated in Figure 10, is also a classical test problem used in RL. It was proposed and studied for the first time by Sutton and Barto [10]. A pole is attached by a joint to a cart, which moves along a one-dimensional track, without friction. The pole starts upright and the goal is to prevent it from falling over by increasing or reducing the cart’s velocity. Thus, the set of actions is composed of two possibilities: moving the cart to the left or the right. The state features are cart position, cart velocity, pole angle and velocity. The RL method used for this environment is Policy Gradient and Table A1 presents the parameters used.

Figure 10.

Rendering of the Cartpole environment.

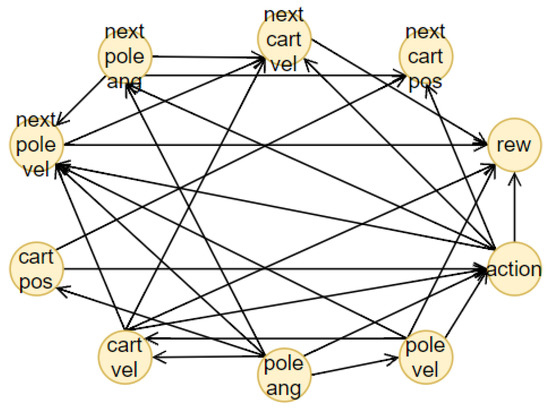

The structure of the BN, represented in Figure 11, is created using the NOTEARS algorithm with a thresholding parameter (chosen after a comparison of the structure obtained). In this case, the environment is continuous, so all the features have to be discretized. The limit values for each bin are obtained using the K-means clustering algorithm, fixing the number of clusters to , obtained from the elbow method. Table A3 presents the results.

Figure 11.

Structure of the BN for the Cartpole environment. The names of the nodes represent the following features: “cart pos” is the cart position, “cart vel” is the cart velocity, “pole ang” is the pole angle, “pole vel” is the pole velocity, “action” is the action, “rew” is the reward, “next cart pos” is the next value for the cart position, “next cart vel” is the next value for the cart velocity, “next pole ang” is the next pole angle, “next pole vel” is the next value for the pole velocity.

The prediction accuracy is tested on 100 episodes and the results are shown in Table 2. These results are not as convincing as they were in the discrete environment (Taxi test problem). To increase the accuracy, it is possible to augment the number of discrete values. However, this solution has a weakness: using a large amount of different discretized values will expand the computation time of the predictions. For this reason, the number of clusters was fixed at six.

Table 2.

AUROC and accuracy for the prediction of each future feature in the Cartpole environment.

In this case, the distal value that has to be predicted is only the final return, since there are no other important characteristics that can be used for a better explanation. Another fundamental aspect to keep in mind is the lack of evaluation of the Q-value. Then, for this environment, we choose as distal information the actual return. As seen in Section 4, the prediction accuracy using the actual return is sufficiently good for our task. However, this was not the only problem for this environment. Since the length of each episode is 500 (in the worst case), this will lead to the creation of a large time-series dataset, that slows down the prediction and needs an enormous amount of free memory to store all the information. For this reason, after 100 steps we end all the episodes. The other parameters of the RNNs are presented in Table A2. The forecasting of these two RNNs is optimal in both phases, as can be seen in Figure 12.

Figure 12.

Results of the training and test of the RNNs for the distal prediction in the Cartpole environment. (a) Loss of the RNN for the distal direct prediction. (b) Loss of the RNN for the distal counterfactual prediction.

Now, two examples of answers, in particular one for the “why” question and the other one for the “why not”, will be explained in detail. Consider the initial state values , where the first element is the cart position, then the cart velocity, the pole angle and lastly the pole velocity. The actual action and the counterfactual is . The questions are “why Go Left?” and “why not Go Right?”. The results of the BN prediction are the following: the direct prediction is

and the counterfactual is

Then, using the time series information accumulated during the episode, it is possible to forecast the distal return: the actual

and the counterfactual

The actual and counterfactual central variable is Pole Velocity () since , while the actual value is and the counterfactual . After evaluating the corresponding parents and children sets through the structure of the BN, it is possible to create the answers. The answer to the “why” question is:

“Since Pole Angle () is Center-Right (), it is desirable to do action Go Left () in order to change the Pole Velocity (D) from Low Positive () to Positive () to influence Cart Velocity (), because Pole Velocity and Cart Velocity () are connected to the goal. Following this action, it will be possible to obtain a final return of ()”.

While the answer to the “why not” question is:“Since Pole Angle () is Center-Right (), it is more desirable to do action Go Left () in order to change the Pole Velocity (D) from Low Positive () to Positive () instead of changing the Pole Velocity (C) from Low Positive () to Low Negative () doing action Go Right (), to influence Cart Velocity (), because Pole Velocity and Cart Velocity () are connected to the goal. Following this action, it will be possible to obtain a final return of (), while following the counterfactual action will lead to a final return of ()”.

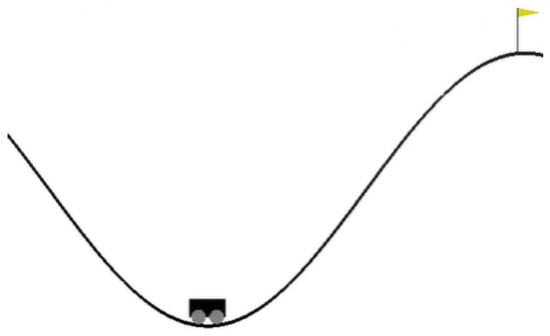

5.1.3. Mountaincar

The Mountaincar environment, shown in Figure 13, is one of the most famous benchmark environments for testing RL algorithms. In this environment, a car starts at the bottom of a valley. Then, the agent can choose to accelerate to the left, right, or stop any acceleration. The goal of the car is to reach the top of the mountain on the right. However, it is impossible to go directly there, by exclusively accelerating to the right, because the car does not have enough power. For this reason, it has to create momentum to reach a higher position. In this case, the RL method applied is Deep-Q-Network [53] with parameters presented in Table A1.

Figure 13.

Rendering of the Mountaincar environment.

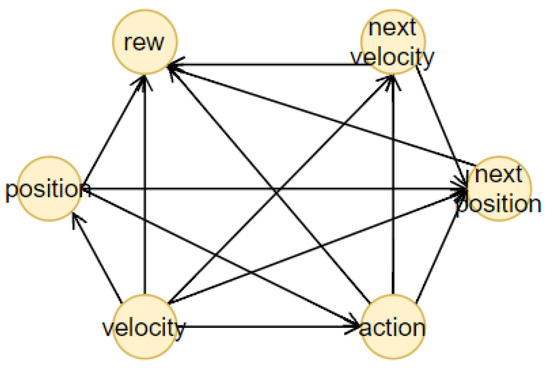

The structure of the BN—see Figure 14—is generated using the NOTEARS algorithm with the best thresholding parameter obtained after a comparison of different results. We applied the K-means clustering algorithm and using the elbow method we chose the optimal values for the position and for the velocity. Table A4 reports the discretization values obtained. The prediction accuracy is tested in 100 episodes and the results are presented in Table 3. The AUROC value and the accuracy are high for each feature and the action prediction.

Figure 14.

Structure of the BN for the Mountaincar environment. The names of the nodes represent the following features: “position” is the position of the car, “velocity” is the velocity of the car, “action” is the action, “next position” is the next position of the car, “next velocity” is the next velocity of the car and “rew” is the reward.

Table 3.

AUROC and accuracy for the prediction of each future feature in the Mountaincar environment.

The distal values for the Mountaincar problem are the final return and the highest position reached with that energy. In this way, it is possible to understand if the car can reach the top of the mountain or not. For the creation of the dataset, the highest position on the right is saved and all the past locations on that trajectory will have that as a distal position. The loss for both RNNs is represented in Figure 15 while the parameters are introduced in Table A2.

Figure 15.

Results of the training and test of the RNNs for the distal prediction in the Mountaincar environment. (a) Loss of the RNN for the distal direct prediction. (b) Loss of the RNN for the distal counterfactual prediction.

Also in this case, two examples will be used to clearly explain how to create the explanation. In the first example, the initial values , where the first element is the position and the second is the velocity; the actual action and the counterfactual is . The questions are “why Go Left?” and “why not Go Right?”. After that, one can predict the next state values through the BN. The results are the following: the direct prediction is

and the counterfactual

Then, using the time series information accumulated during the episode, it is possible to forecast the distal return and position: the actual

and the counterfactual

Then, the actual and counterfactual central variable is Velocity. Now, it is possible to create the answers through the structure of the BN. In this case, the answers will start differently because the parent set of the central variable is empty, so all the initial state values are considered. So the answer to the “why” question is:

“Since Position is Far-Left and the Velocity is Low Negative (), it is desirable to do action Go Left () in order to change the Velocity (D) from Low Negative () to Negative () to influence Position (), because Velocity and Position () are connected to the goal. Following this action, it will be possible to obtain a final return of and a distal position of ()”.

While the answer to the “why not” question is:“Since Position is Far-Left and the Velocity is Low Negative (), it is more desirable to do action Go Left () in order to change the Velocity (D) from Low Negative () to Negative () instead of changing the Velocity (C) from Low Negative () to Low Positive () doing action Go Right (), to influence the Velocity () because Velocity and Position () are connected to the goal. Following this action, it will be possible to obtain a final return of and a distal position of (), while following the counterfactual action will lead to a final return of and a distal position of ()”.

5.2. Human Evaluation Study

In order to evaluate the quality of the explanation generated using our methodology, we carried out a human study. In particular, we tested the hypothesis that our explanations lead to a better understanding of the RL agent strategies and an increase in trust in their choices.

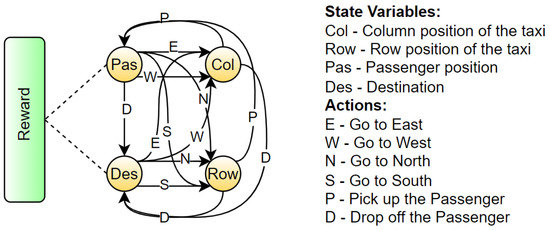

We decided to consider the Taxi environment, introduced in Section 5.1.1, in two different scenarios: we presented a “why” and a “why not” question with the corresponding explanations. For this study, we analyzed the distal explanations generated in [8,9] (C) for both the actual and counterfactual instance and the state relevant variables using template 1 of Khan et al. [27] (R) only for the “why” answer. Moreover, we also considered giving only the action chosen by the agent without any further explanation (N) to have a direct baseline. Therefore, our methodology (B) was compared with the three approaches in the actual scenario and two for the counterfactual. In detail, we present the following examples of the discussed explanations to increase clarity. For the sake of conciseness, we will consider only the situation where the taxi position is (North-Center, West), the passenger is in North-West and the destination is in South-West. For the relevant variables explanation (R), we used the original template with a small change: instead of counting the frequency of visiting a particular state, we considered the distance to the terminal state, since it was the relevant information in the Taxi environment. An example of (R) explanation is: “Why action Move North? Move North is the only action that is likely to take you to passenger=South-West, destination=South-West, taxi row=South, taxi column=West, in about 6 time steps, which is lower than any other action”. In the case of the causal explanations (C), first, we had to define an Action-Influence causal graph. Therefore, we considered the DAG obtained by applying our approach as a starting point and then, we developed the structure presented in Figure 16. For the choice of the optimal values, we manually set them knowing the task and how to reach the goal since the problem can be solved quickly by a human. Hence, considering the method described in Section 3.2, we obtain explanations like the following: “Why not action Move west? Because taxi row is not at the optimal number value North, it is more desirable do the action Move North to enable the action pick up as the goal is to have passenger and destination equal”. In the same way the explanations for the “why” questions were generated.

Figure 16.

Action-Influence graph for the Taxi environment.

Lastly, for the generation of our explanation (B), we considered the associated BN, presented in Figure 7, together with its predictions and used the distal information computed from the RNNs to create the following statement: “Why not action Move west? Since the destination is South-West, it is more desirable to do action Move North in order to change the taxi row from North-Center to North instead of changing no values doing action Move west, to influence passenger because passenger, taxi row are connected to the goal. Following this action, it will be possible to do pick up and obtain a final return of 10, while following the counterfactual action will lead to pick up and obtain a final return of 7”. In the proposed scenario, we rounded the return forecast to the next integer value. The procedure is analogous for the “why” questions case, as presented in Section 5.1.1.

After the detailed description of the considered explanations, we focus now on the format of the questionnaire. The survey consists of three components: there is, first, an introduction to the environment and the agent task and then two sections, one for each particular question (“why” and a “why not”). In the latter cases, after a brief explanation of the context in which the agent takes action, we present an explanation at a time. Then, the participants can evaluate the quality of the explanations by answering questions introduced in [49] using a Likert scale: giving an integer evaluation between 1 (very bad) and 5 (very good). At the end of each question section, preference questions and specific true and false statements are given to collect more information useful for future works. For more information, we refer to Appendix B.

The participants of this survey were 30 students, self-selected at the University of Bundeswehr Munich and Camerino with engineering and scientific (mathematics, computer science and physics) backgrounds and the data were gathered in both physical and digital form. We can consider them experts since the task was a real-life application of a simple daily problem. Moreover, they received detailed clarifications about the environment where the explanations were introduced. In this way, we simulated an audience of domain experts which is one of the possible targets of XRL, together with non-experts in data science and AI [18,54,55].

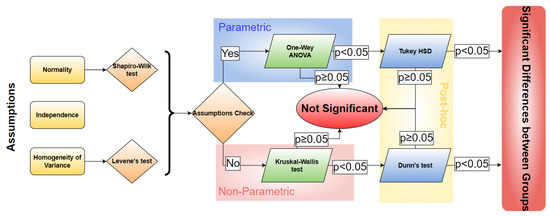

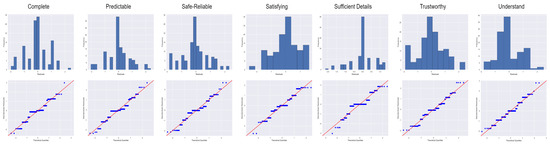

For the analysis of the quality, we conducted a statistical study considering the pipeline presented in Figure 17. In detail, since the assumptions for the One-way ANOVA approach (Parametric method) applied in the previous studies [8,9] were not verified (for more detail, we refer readers to Appendix C), we had to use a non-parametric approach, i.e., Kruskal–Wallis test [56]. Subsequently, if the test is significant (p-value ), i.e., there are significant differences between groups, we apply a post-hoc test to understand what the relations are between each kind of explanation. In this case, we considered Dunn’s test [57], which corresponds to the Tukey HSD test for the non-parametric situation. For the computation of the p-value for the latter test, we adopted the Bonferroni correction [58] to solve the multiple comparisons problem [59].

Figure 17.

Pipeline of the statistical analysis of the data gathered in the human study.

In the following, we analyze first the results for the “Why” explanations and then for the counterfactual question “Why not”.

5.2.1. Evaluation of the “Why” Explanation

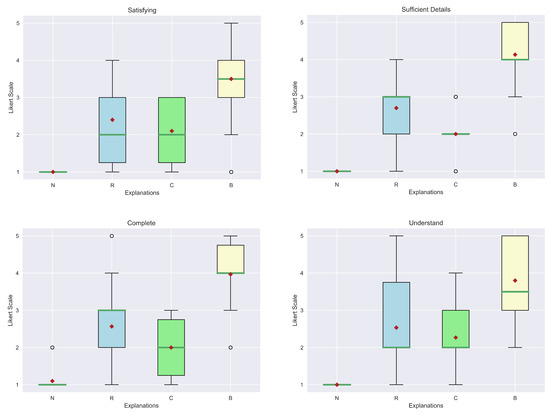

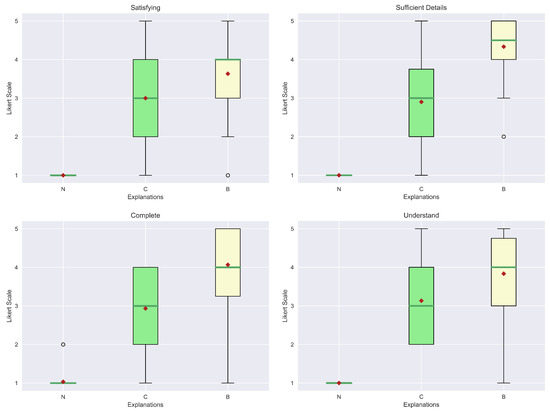

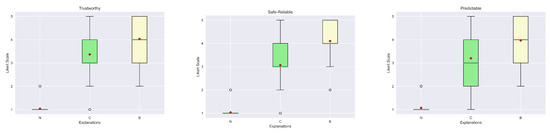

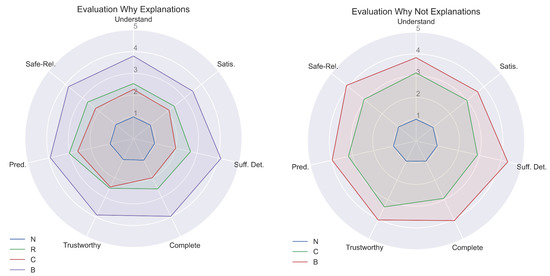

For the evaluation of the explanations, we considered seven qualities from [49]: Understand, Satisfying, Sufficient Details, Complete, Trustworthy, Predictable and Safe-Reliable. The first four belong to the class of general qualities, while the latter are relatives of the trust area. The first step is to visualize the results obtained from the human evaluation in box plots. In particular, Figure 18 and Figure 19 show, respectively, the general qualities and the trust qualities. At first glance, it is possible to see how the median (green line) and mean (red diamond) of our explanation (B) are greater than the others. Another interesting aspect is that explanations (R) and (C) obtained similar scores. In fact, this kind of outcome was also reported in the study presented in [9]. In contrast, in our case, all the explanations are far better considered than the “No explanation” (N) approach, which can also be justified by the fact that in some contexts explanations may be not so relevant.

Figure 18.

Box plot of the general qualities of the explanations for the “Why” question.

Figure 19.

Box plot of the trust qualities of the explanations for the “Why” question.

However, to have a clear view of the results obtained, it is fundamental to statistically analyze these data considering the methodology previously described. As shown in Table 4, the Kruskal–Wallis test reported a low p-value for each quality, confirming that there are differences between diverse explanation groups. Not only are the p-values below the usual threshold (), but also the F-values are high (>2.69 critical value), i.e., the between-group variance is great or the within variance group is small or both of these conditions are verified. Therefore, a post-hoc test to individuate which groups differ from each other is necessary.

Table 4.

Table of the F-values and p-values obtained in the Kruskal–Wallis test for the actual explanations.

The results of Dunn’s test are shown in Table 5. As can be seen from the calculated p-values, all the explanations achieve better scores than “No explanation” (N). In particular, statistically, there are no differences in the relevant state variable explanations (R) and the Action-Influence one (C). Moreover, our answer is considered statistically different from both the previous explanations for all the qualities. The only value that is not significant is relative to the Predictable aspect when compared to (R).

Table 5.

Table of the p-values obtained from Dunn’s test for the comparison of the actual explanations.

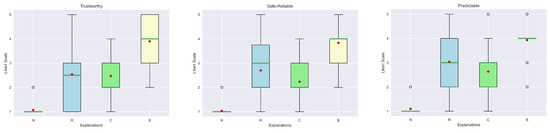

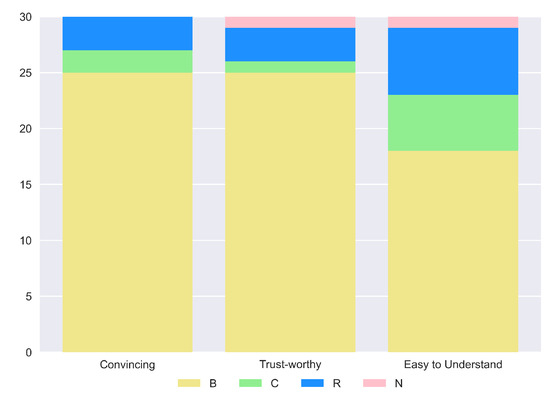

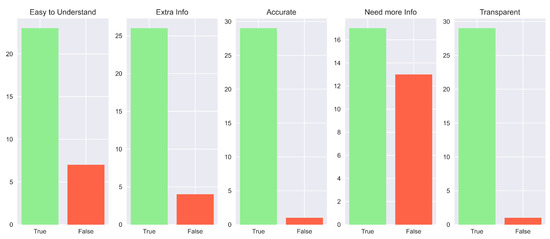

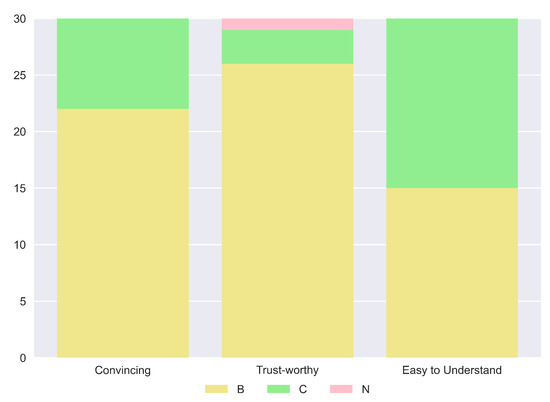

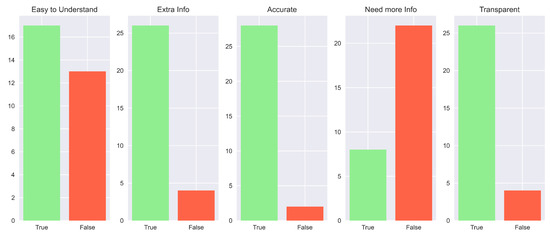

In general, these results are also supported by the overall consideration of the participants of the human study. In fact, in their preference questions, more than the majority of the study population answered that our explanation is the best in terms of “Convincing” (), “Trustworthy” () and “Easy to Understand” (). Complete results are shown in Figure 20. Impressive results are found in the answers to the true and false statements, illustrated in Figure 21. In detail, a high number of participants agree that this kind of explanation is accurate (), transparent () and provides more information (). An interesting result consists in the number of people who reported our explanation as “Easy to Understand” (). If we compare this number with the one obtained from the analysis of the preferences, we can notice that also people preferring other explanations as “the easiest to understand” consider ours good. The last remark is related to the question of the need for more information. In this case, there is a balanced result: reported how our explanation should need more information; therefore, this request should be taken into account for possible future works.

Figure 20.

Bar chart of the preferences for actual explanations chosen by the participants for three different aspects.

Figure 21.

Bar charts representing the results of the True and False statements for different aspects of the actual explanations.

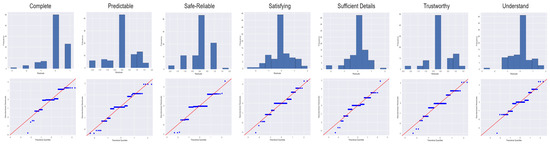

5.2.2. Evaluation of the “Why Not” Explanation

As described in the previous section, also during the analysis of the data gathered from the human study for the counterfactual explanations, we first visualized them in box plots, to understand the possible relations. The general qualities (Understand, Satisfying, Sufficient Details, Complete) and the ones related to trust (Trustworthy, Predictable and Safe-Reliable) are presented in Figure 22 and Figure 23, respectively.Already it is possible to see some small differences between the two explanations in terms of medians and means for each aspect. However, in this case, the representations of the 25th and 75th percentile testify that a complete statistical analysis has to be performed since these depictions could not be significantly used to deduce a major difference between groups.

Figure 22.

Box plot of the general qualities of the explanations for the “Why Not” question.

Figure 23.

Box plot of the trust qualities of the explanations for the “Why not” question.

Therefore, the Kruskal–Wallis test was executed obtaining the results presented in Table 6. Also in this case, a significant difference between groups is found since we obtain small p-values and great F-values for each quality. A remark can be made: if we compare these results with the ones obtained after the same test for the actual explanations, we can observe how the previously reported values are more significant, i.e., smaller for the p-values and greater for F-values, having then a slight difference in the comparisons of the groups. Thus, we expected to have less significant differences between our explanation and the one generated through the Action-Influence graphs.

Table 6.

Table of the p-values obtained from Kruskal-Wallis’s test for the comparison of the counterfactual explanations.

This hypothesis is verified by using the multiple comparisons of Dunn’s test. As previously specified, all the p-values reported in Table 7 are corrected using the Bonferroni adjustment. The results presented highlight how, also in this situation, both the explanations overwhelm the no explanation case. However, interesting observations can be deduced from the comparison between our proposed answer and the one generated by the Action-Influence graph. As expected from the consideration of the previously performed Kruskal–Wallis test, significant differences are spotted only in three cases; in particular for Sufficient Details (), Complete () and Safe-Reliable (). In all the other aspects, a p-value lower than the significant threshold of was not achieved. Therefore, we cannot deduce the presence of major differences in the compared explanations for these traits. Moreover, it is interesting to focus on Sufficient Details, since this is the quality that attains the lowest p-value. Substantially, this means that our explanation presents a higher number of details which can be useful for human users to better understand the choice of the agent.

Table 7.

Table of the p-values obtained from Dunn’s test for the comparison of the counterfactual explanations.

However, increasing the amount of information given can lead to a trade-off in comprehensibility. If we look at the results in terms of preferences for the easiest explanation to understand (Figure 24), it is possible to see how half of the participants chose (B) and the other half (C). Moreover, from the true and false statement data shown in Figure 25, we can see how the of the human study population considers our explanation not easy to understand. Hence, it is very important to balance the amount of information and the clarity of the final answer produced. On the other hand, also in this case, a high percentage of the participants voted the outcome of our method as the best in terms of being Convincing () and Trustworthy (). Consequently, despite not having a clear significant difference in some qualities of the explanations, we can recognize our description as better than the one proposed in [8].

Figure 24.

Bar chart of the preferences for counterfactual explanations chosen by the participants for three different aspects.

Figure 25.

Bar charts representing the results of the True and False statements for different aspects of the counterfactual explanations.

The last remarks concerning Figure 25 are related to the answers given for the necessity of more information. In fact, while for the other aspects we obtain results that are comparable to the “Why” scenario, e.g., gives extra information (), is accurate () and transparent (), in the “Need more Info” statement there is an opposite result: of participants agreed that our explanation does not need other details. This means that the counterfactual answer gives enough data to increase the comprehensibility of the agent’s actions.

6. Discussion

We tested the prediction accuracy of each model on 100 episodes after the training and the results can be seen in Table 8. The table shows the dimensions of each environment, where the first number represents the amount of the state features and the second one the possible actions. The results for the Mountaincar and Taxi environment are much better than those for the Cartpole experiment. This low accuracy is due to the difficulty of identifying the correct velocity of the pole and the cart. The number of clusters used for the discretization needs to be increased at the expense of a higher computational time to obtain better feature predictions. In general, our method seems to perform well in a discrete environment while losing some accuracy in the continuous case due to discretization.

Table 8.

Comparison of the accuracy (%) in prediction obtained with different methods: LR is the Linear Regression, DT is the Decision Tree, MLP is the Multi-Layer Perceptron, is the Decision Policy Tree, is the Decision Policy Tree with a fixed depth and BN is the Bayesian Network.

Focusing now on the explanation created, it is possible to understand the improvements obtained. Statistically relevant differences are found during the human study for both the actual and counterfactual explanations. In particular, in the first case, our approach scored way higher grades compared to the baseline explanations for all the aspects. Alternatively, for the counterfactual scenario, the number of statistically significant increments in quality is lower. However clear differences can be seen by analyzing both the explanations in detail. In fact, the answer to the counterfactual question in the Starcraft II environment of [8], presented in Section 3, can be compared to the ones obtained in our experiments, which are described in Section 5.1.1. From this comparison, we can see that our explanations have more detailed information because of the predicted future state values obtained from the BN. Using all the predicted subsequent state values provides more transparency to what will happen and will be trusted more by the user, as verified by the answers in the human study. Another interesting observation can be made by analyzing the use of distal information in both the explanation in counterfactual cases. In Madumal et al.’s example [8,9], it is used to directly understand the goal of the previous actions, while in our case, we use them to confront the outcome of the actual action to the counterfactual one. In fact, the possibility to compare the predictions in the case of counterfactual actions can reassure one that the actual action was the correct one. For this reason, using two different RNNs trained for specific distal prediction for direct and counterfactual cases is fundamental for a convincing explanation. Overall, we can state that humans value our answers in a superior way compared to the other baselines, as confirmed in the radar plot of Figure 26 showing the means of the scores achieved for both the actual and counterfactual cases. Therefore, with our approach, we can generate higher quality explanations and also increase the trust in the agent’s choices.

Figure 26.

Radar Plot with the means of the scores achieved for each quality in both the “Why” and “Why not” explanations.

Lastly, we recall that the final task of the explainable approaches is to be adopted in real-world situations. This means that these methodologies have to consider complicated problems and very complex models, which usually present millions of parameters. For this reason, it is fundamental to highlight approaches such as the one proposed here, which can be applied in different environments and general agents. Moreover, introducing this explainable component does not bring any changes or requests in the design of the RL algorithm.

7. Conclusions and Future Work

This paper introduces improvements in the existing explanation method of Madumal et al. [8,9] for model-free RL agents that can create answers to “why” and “why not” questions. This model can predict the future state values and the distal information, in both cases (actual and counterfactual). These predictions are obtained by using a BN created from the data saved during the training phase of the RL agent. Specifically, the structure is the output of the NOTEARS algorithm and the probabilities are learnt using the Bayesian Estimator method. The distal values are the outcome of two different Recurrent Neural Networks composed of GRUs. The explanation is finally generated using the predicted information and the BN structure for the causal relations of the features.

This approach is then computationally evaluated using three RL benchmark environments. The results showcase the quality of the method for the discrete environment, although it has some weaknesses in continuous scenarios. In fact, in these cases, a discretization has to be performed and this leads to a decrease in the prediction accuracy. After that, a human study is proposed to obtain a clear view of how users can perceive our explanation compared to the other baseline. The analysis of the participants’ answers proves the quality of our answers and emphasizes a statistically significant difference between the different approaches for the actual scenario. Moreover, although we did not find many significant results for the counterfactual questions, we can conclude that our description is better perceived since it achieved higher means and medians.

The highlights of the proposed model are the ability to evaluate the future state values with high accuracy and the possibility to compare the distal prediction for the actual and counterfactual actions. These solutions allow the user to better understand why the machine is doing that particular action and give the people more trust.

Future work will involve the solution of continuous scenario problems and an application to a larger environment to test the reliability of this model in complex cases. For example, it could be opportune to prove this approach in the Starcraft II scenario. Then, it will be possible to compare the results obtained by the improved method and the past works of Madumal et al. [8,9]. Moreover, as depicted from the human study, it could be useful to increase the amount of information given in the explanation, introducing other fundamental relationships, e.g., connections between features in different time steps. In this case, it is important to recall how also this could compromise the readiness of the explanation. Therefore, studying an answer that provides a large number of details can help us to understand what is the limit that has to not be surpassed. Another interesting future study could be related to the choice of distal information. In our case, an expert in the domain chose the distal information. Thus, to make this model less human-dependent, an analysis of the data has to be performed to automatically find these data.

Author Contributions

Conceptualization, R.M. and M.M.; methodology, software, validation, formal analysis, investigation, R.M.; resources, R.M. and M.M.; data curation, R.M.; writing—original draft preparation, R.M.; writing—review and editing, R.M. and M.M.; supervision, R.D.L. and S.P. All authors have read and agreed to the published version of the manuscript.

Funding

Rudy Milani is funded by dtec.bw—Digitalization and Technology Research Center of the Bundeswehr project RISK.twin. dtec.bw is funded by the European Union—NextGenerationEU.

Institutional Review Board Statement

Ethical review and approval were waived for this study due to the standard format and questions used. The survey considered takes all the questions from a well-known questionnaire used in the field. Moreover, all the participants were voluntarily selected and, before the survey, fully informed of the assured anonymity, the aim of the research, how their data will be used and if there are any risks associated.

Informed Consent Statement