Abstract

Climate change and the COVID-19 pandemic have disrupted the food supply chain across the globe and adversely affected food security. Early estimation of staple crops can assist relevant government agencies to take timely actions for ensuring food security. Reliable crop type maps can play an essential role in monitoring crops, estimating yields, and maintaining smooth food supplies. However, these maps are not available for developing countries until crops have matured and are about to be harvested. The use of remote sensing for accurate crop-type mapping in the first few weeks of sowing remains challenging. Smallholder farming systems and diverse crop types further complicate the challenge. For this study, a ground-based survey is carried out to map fields by recording the coordinates and planted crops in respective fields. The time-series images of the mapped fields are acquired from the Sentinel-2 satellite. A deep learning-based long short-term memory network is used for the accurate mapping of crops at an early growth stage. Results show that staple crops, including rice, wheat, and sugarcane, are classified with 93.77% accuracy as early as the first four weeks of sowing. The proposed method can be applied on a large scale to effectively map crop types for smallholder farms at an early stage, allowing the authorities to plan a seamless availability of food.

1. Introduction

According to United Nations estimates, the world population is expected to reach 8.5 billion by 2030, thus escalating pressure on food security (https://population.un.org/wpp/publications/files/key_findings_wpp_2015.pdf, accessed on 20 December 2022). Shrinking arable land, climate change, and natural disasters are also hampering the agricultural sector and threatening food security at the global level [1,2,3,4]. The widening gap between food production and demand is adversely affecting the food supply chain. However, timely and accurate information about cultivated crops and their estimated yields can be incorporated into decision-making to ensure food security. To maintain a seamless food supply chain, remote sensing (RS) data and deep learning (DL) algorithms are crucial in deriving high-quality information on crop types, crop health, and yields [5,6]. RS is widely used in precision agriculture (PA) applications as it is considered a reliable source for extracting phenological information about crops [7,8,9,10]. The availability of RS data with high spectral, spatial, and temporal resolution, including multi-spectral [11,12], hyperspectral [13], and synthetic aperture radar (SAR) [6,14,15,16,17] data, has opened new possibilities in crop-type mapping [3,18,19,20,21], crop health assessment [22], and yield estimation [4,23,24,25]. In the early 21st century, Landsat and moderate resolution imaging spectroradiometer (MODIS) multi-spectral data were relied on for crop-type mapping [26]. However, their capability was limited by lower spatial and temporal resolution, especially for small fragmented crop fields [27,28]. Nowadays, high-resolution satellite data from the European Union’s Earth Observation Programme and commercially available platforms have unlocked numerous opportunities for crop analysis and monitoring. Table 1 presents some satellite platforms along with their specifications and applications in the agricultural sector.
Intelligent algorithms in conjunction with high-quality remotely sensed data can accurately identify and map crops and estimate yields. Recently, DL algorithms have proved their significance in remote sensing applications, as they learn from multi-spectral and multi-temporal crop data and have outperformed classical machine learning algorithms [29].

Table 1.

Commonly used satellite platforms in agricultural applications along with their specifications.

Crop identification and mapping using RS-based approaches are more accurate than traditional ground-based methods, which are resource-intensive, because the temporal nature of RS data allows crop information to be incorporated at multiple growth stages [40,41]. Optical and microwave remote sensing have both been employed as data sources for PA. Optical RS is based on vegetation indices that are extracted from multi-spectral images. Some of the most often used indices are the normalized difference vegetation index, green normalized difference vegetation index, enhanced vegetation index, and land surface water index [18]. The direct use of time-series vegetation indicators is advantageous for crops with certain temporal characteristics [42]. On the other hand, there has been a considerable rise in the use of DL algorithms in agricultural applications [43]. The use of deep CNNs with multi-spectral and temporal satellite images was pioneered by [44] for rice-crop mapping. Later, many studies used variations of CNNs for PA tasks [45,46,47,48,49,50,51,52]. In these patch-based studies, a large area of land is extracted as a patch, which requires further processing to extract the relevant crop map. Additionally, actual field boundaries, which may have irregular shapes, are not considered, so a patch may include neighboring fields with different crop types. In Asia, particularly South Asia and China, a smallholder agricultural landscape is prevalent [27,53], where fields are smaller than 5 ha [54] and crops are diverse. The heterogeneous landscape of small but diverse crops makes it challenging to accurately classify the crops [55,56,57] at an early growth stage. Existing studies on crop mapping and identification mainly focus on large-scale plantation fields (10 hectares on average) and require an entire plant cycle for crop identification [2,9,11].
This study proposes an approach that serves two purposes. First, it focuses on smallholder farms (1 to 4 acres on average); second, it detects crops at an early stage by overcoming the challenge of overlapping readings caused by the plantation of diverse crops in a small region. To the best of our knowledge, no existing study focuses on smallholder farms for early crop classification. The objectives of this study are to (1) map fields using GPS-based devices to draw field boundaries and acquire crop data, (2) acquire multi-spectral time-series RS data of the mapped fields from Sentinel-2 mission satellites at regular intervals, (3) extract and evaluate the basic Sentinel-2 spectral bands and generate pixel-level vegetation indices from combinations of bands to find an optimal combination, and (4) apply a deep learning model to accurately identify staple crops (rice, sugarcane, and wheat) within the first 4 weeks of sowing in smallholder farming, which will allow government agencies to manage food security effectively.

2. Materials and Methods

2.1. Study Area

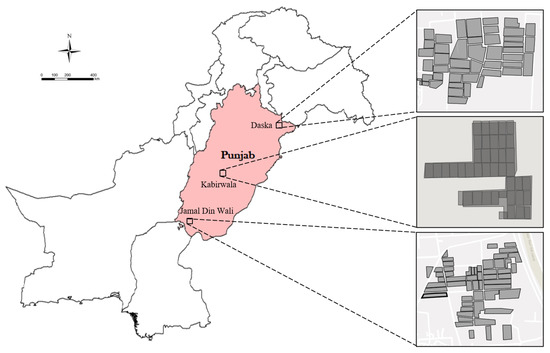

The research was conducted in different districts of the province of Punjab, Pakistan, a map of which is shown in Figure 1. As per the 2017 census, the total population of Punjab is estimated to be 110 million [58], and the province has an area of 20.63 million hectares [59]. Punjab consists mostly of flat land and has four seasons, which provide suitable conditions for growing staple foods throughout the province. Smallholder agriculture is predominant in the region and provides a livelihood to the residents. Eighty percent of Punjab’s total area is cropped, and the province thus contributes the largest share of the country’s agricultural production. The major crops are wheat, cotton, rice, fodder, maize, and sugarcane.

Figure 1.

Map of the province of Punjab, Pakistan. The images of the fields of interest are acquired at a resolution ranging from 10 m to 60 m depending on the bands of the Sentinel-2 satellites.

A ground-based survey was carried out to determine the GPS coordinates of target fields and other important attributes such as crop history, yields, and sowing dates. During the survey, the coordinates of the field boundaries were recorded using GPS devices, giving us the actual field boundaries for acquiring satellite data. Fields of varying sizes were mapped at three different sites in the province. The objective of mapping land parcels at three separate sites was to make the dataset diverse and generalizable. In total, around 2600 fields were mapped with an average size of 1.2 acres per field. The total mapped area was roughly 4160 acres. To make the dataset relevant and accurate, only fields that had been planted with rice, wheat, or sugarcane repeatedly for the past three years were mapped. The geometry of some of the mapped fields is shown in Figure 1, highlighting the diversity in sizes and shapes of the fields.

The field locations were selected from Punjab province because it is the agricultural hub of Pakistan. The province is further divided into three regions and, owing to its large size, shows diversity in water patterns, rainfall, and climate. The staple crops are cultivated in all three regions, so our dataset represents the entire province.

2.2. Sentinel-2 Data Acquisition

The Sentinel-2 mission is a constellation of two satellites named Sentinel-2A and Sentinel-2B [60]. The two satellites are phased at 180 degrees to one another in the same sun-synchronous orbit around the Earth [61]. In a sun-synchronous orbit, a satellite passes over any given location at the same local solar time, so images of a site are acquired under consistent illumination. The multi-spectral sensor on the Sentinel-2 satellites is passive, which means it does not emit its own signal but records sunlight reflected from the Earth’s surface. This is why the satellites are placed in a sun-synchronous orbit, taking advantage of sunlight to capture images of the Earth. A single Sentinel-2 satellite takes ten days to revisit the same location. However, with the constellation of two satellites phased at 180 degrees, the same area is revisited every five days, thus providing higher temporal resolution [62,63,64].

The Sentinel-2 images are captured with a multi-spectral instrument (MSI) in 13 spectral bands. The multi-spectral instrument takes rows of images as it moves along its orbital path. The light that reaches the multi-spectral sensor of Sentinel-2 is collected by two focal plane assemblies which in turn sample 13 spectral bands. These bands are sampled in visible, near-infrared, and shortwave infrared regions of the electromagnetic spectrum. The 13 bands vary in spatial resolution as four bands are at a 10 meter resolution per pixel, six bands are at 20 meters, and three bands are at a 60 meter spatial resolution.

The Sentinel-2 tiles can be acquired as Level-1C or Level-2A imagery [65]. Level-1C imagery provides “Top of Atmosphere (TOA)” reflectance, which results in a hazy image because of atmospheric effects. Level-2A images are atmospherically corrected and provide “Bottom of Atmosphere (BOA)” reflectance. In Level-2A images, the atmospheric contribution to the reflectance is removed with the help of a band contained in the multi-spectral image. Subsequently, for each of the three sites considered in this study, the area of interest (AOI) covering the respective fields was extracted from Level-2A Sentinel-2 tiles over rice, wheat, and sugarcane crops throughout the season.

The period of the acquisition of Sentinel-2 tiles was determined depending on the rice, wheat, and sugarcane crop seasons. In Punjab, the rice crop season begins in June and lasts until October, whereas the wheat crop season begins in December and is harvested in April. However, the sugarcane crop season spans the entire year, with its plantation starting in March. The duration and number of tiles acquired for each crop are detailed in Table 2.

Table 2.

Testbed Sentinel-2 data.

For each mapped field, 30 multi-spectral images were captured at five-day intervals for all three crops. Consequently, the dataset has 30 images of each field covering the entire rice and wheat seasons. For the sugarcane crop, however, data over five months were acquired to match the temporal length of the other two crops, which proved sufficient for sugarcane field classification. During the crop growth cycle, weather conditions have a significant impact on the pattern of crop growth and its yield. To account for the effect of weather conditions and make the proposed strategy more general, the data were acquired for the years 2019, 2020, and 2021. As discussed in Section 2.1, only those land parcels were mapped where the target crops had been planted repeatedly for the past three years. This allowed us to incorporate the impact of soil, weather, and atmospheric conditions in the dataset.

2.3. Fields Stripping and Preprocessing

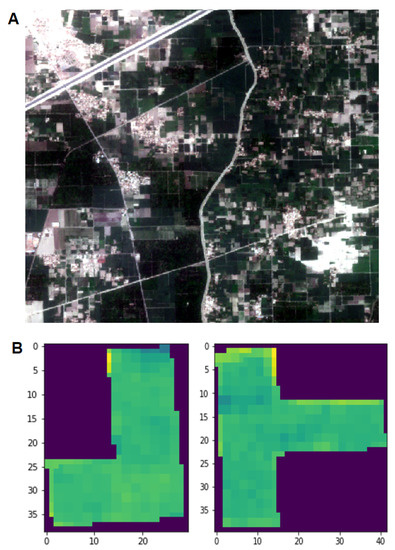

During the crop cycle, we acquired Sentinel-2 tiles for the three mapped areas at regular intervals, where each tile contained all mapped fields at the corresponding site. As a single tile covers a large area of the earth’s surface, areas outside the mapped fields were discarded by filtering out the pixels of the tile that lie outside the boundaries of the mapped fields. This was achieved by creating a mask over the mapped fields from the field coordinates and iteratively applying it to the tile. A true color tile of a site and the stripped fields are shown in Figure 2.

Figure 2.

(A) A Sentinel-2 tile specimen of sugarcane fields illustrated in RGB bands. (B) Depiction of mapped fields obtained by stripping the large tile and visualizing in NDVI values.
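The stripping step described above can be sketched as follows. This is a minimal illustration, assuming the field boundaries have already been converted from GPS coordinates to pixel (column, row) coordinates of the tile. The helper names `polygon_mask` and `strip_fields` are hypothetical, and the even-odd ray-casting test is a stand-in chosen for self-containment rather than the method used in the study.

```python
import numpy as np

def polygon_mask(height, width, polygon):
    """Return a boolean (height, width) mask, True for pixel centres inside `polygon`.

    `polygon` is a list of (col, row) vertices in pixel coordinates (an assumption).
    Uses the even-odd rule: a horizontal ray from each pixel centre is tested
    against every polygon edge, and each crossing toggles the inside flag.
    """
    rows, cols = np.mgrid[0:height, 0:width]
    x = cols + 0.5  # x coordinate of each pixel centre
    y = rows + 0.5  # y coordinate of each pixel centre
    inside = np.zeros((height, width), dtype=bool)
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if y1 == y2:
            continue  # a horizontal edge cannot cross a horizontal ray
        crosses = (y1 > y) != (y2 > y)
        x_at_y = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
        inside ^= crosses & (x < x_at_y)
    return inside

def strip_fields(tile, polygons):
    """Keep pixels inside any field polygon and set all other pixels to NaN.

    `tile` has shape (bands, height, width).
    Returns the stripped tile and the combined field mask.
    """
    _, height, width = tile.shape
    keep = np.zeros((height, width), dtype=bool)
    for polygon in polygons:
        keep |= polygon_mask(height, width, polygon)
    return np.where(keep, tile, np.nan), keep
```

Applying one mask per field, rather than one combined mask, would give the per-field pixel sets needed later for field-level averaging.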

Cloudy weather causes the multi-spectral instrument of Sentinel-2 to capture the reflectance of clouds. As a result, the true reflectance of the land’s surface is not captured, and the tile is characterized by foggy pixels [66]. Foggy pixels have very high reflectance relative to the land’s surface reflectance. This alters the true reflectance of the surface and causes noise in the data. The reflectivity of the land’s surface is also affected by the shadows of the clouds on the ground. To cope with this problem, we used spectral band 10, which contains information about cloudy pixels. Using band 10, a cloud mask was applied to all tiles to filter out cloudy pixels, which were replaced with the average of the adjacent pixels. Further, to make all the Sentinel-2 bands spatially uniform, they were resampled to 10 m spatial resolution using the “Geospatial Data Abstraction Library (GDAL)” [67].
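The cloud-filling and resampling steps can be illustrated with a short NumPy sketch. Several things here are assumptions: the cloud mask is taken as a given boolean array (how it is derived from band 10 is not specified in the text), "adjacent pixels" is interpreted as the eight surrounding pixels, and nearest-neighbour upsampling stands in for the GDAL resampling used in the study. The function names are hypothetical.

```python
import numpy as np

def fill_cloudy_pixels(band, cloud_mask):
    """Replace cloudy pixels with the mean of their clear 8-neighbours.

    `band` is a 2-D float array and `cloud_mask` a boolean array of the same
    shape (True = cloudy). A cloudy pixel with no clear neighbour becomes NaN.
    """
    height, width = band.shape
    clear = ~cloud_mask
    # Zero-pad so that border pixels can be handled with the same slicing.
    values = np.pad(np.where(clear, band, 0.0), 1)
    weights = np.pad(clear.astype(float), 1)
    total = np.zeros((height, width))
    count = np.zeros((height, width))
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if dr == 0 and dc == 0:
                continue  # skip the pixel itself
            total += values[1 + dr:1 + dr + height, 1 + dc:1 + dc + width]
            count += weights[1 + dr:1 + dr + height, 1 + dc:1 + dc + width]
    neighbour_mean = np.divide(
        total, count, out=np.full((height, width), np.nan), where=count > 0
    )
    return np.where(cloud_mask, neighbour_mean, band)

def upsample_nearest(band, factor):
    """Nearest-neighbour upsampling, e.g. factor=2 maps a 20 m grid to 10 m."""
    return np.repeat(np.repeat(band, factor, axis=0), factor, axis=1)
```

Only clear neighbours contribute to the average, so a cluster of cloudy pixels does not propagate cloud reflectance into the filled values.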

2.4. Selection of Spectral Bands and Vegetation Indices

2.4.1. Spectral Bands

Based on the literature and considering the relevance of the Sentinel-2 bands for agricultural applications, ten bands were chosen for the crop identification task [68,69]. Band 1, band 9 and band 10 were ignored as they do not capture the reflectance of the land surface. Instead, they are used for atmospheric correction, water vapour measure, and cloud masking, respectively [70]. The bands selected for the study are listed in Table 3 along with their bandwidths and central wavelengths.

Table 3.

Description of targeted spectral bands of Sentinel-2 used in experimental assessment.

2.4.2. Vegetation Indices

Vegetation indices are calculated by combining the surface reflectance of two or more spectral bands to obtain a single value [71,72]. These indices serve as indicators of the many properties of the vegetation under consideration [73]. Some indices are beneficial in identifying the vegetated areas, while others are good at determining the health of the plants. There are many vegetation indices proposed in the literature, however, for this study, “Normalized Difference Vegetation Index”, “Green Normalized Difference Vegetation Index”, “Modified Chlorophyll Absorption Reflectance Index”, “Enhanced Vegetation Index” and “Land Surface Water Index” are selected based on their importance in crop identification.

Normalized Difference Vegetation Index (NDVI)

NDVI is employed to assess the extent of vegetation [74,75] and its value ranges from −1 to 1. Densely vegetated areas have NDVI values close to 1, whereas sparsely vegetated or bare areas have values near 0, and negative values typically correspond to water. The value is determined from band 4 (red) and band 8 (near-infrared). The index operates on the premise that plants require sunlight for photosynthesis. Plant leaves contain a pigment known as chlorophyll that absorbs red light, which is required for photosynthesis, while the leaves reflect near-infrared light. A healthy plant or a densely vegetated region will absorb more red light and reflect more near-infrared light. In contrast, unhealthy plants reflect more red and less near-infrared light.

The NDVI index was chosen because it is a trait that can be used to distinguish between crops. For example, when compared to wheat, the rice crop turns thickly green after only a few weeks of growth after planting. The trend of the NDVI values during crop growing seasons can be quite useful for crop identification. Equation (1) is used to calculate NDVI.

Green Normalized Difference Vegetation Index (GNDVI)

The GNDVI is identical to the NDVI except that it uses green light instead of red light [76]. Although the normalized difference vegetation index is typically employed to quantify the area of vegetation, the green-normalized difference vegetation index (GNDVI) is an indicator of photosynthetic activity and is, therefore, more useful in yield forecasting [77]. Equation (2) is used to calculate GNDVI.

Enhanced Vegetation Index (EVI)

The EVI [72] index is also used to quantify the amount of vegetation on a given piece of land [78]. Nevertheless, it differs from the NDVI and the GNDVI as it corrects certain atmospheric reflectance that is not considered by the NDVI and GNDVI. It can also identify and eliminate noise in dense plant canopies, allowing for a more accurate estimation of the greenness as a result of the reduced reflectance from noise [79]. Equation (3) is used to calculate EVI.

Modified Chlorophyll Absorption Reflectance Index (MCARI)

This index measures the concentration of chlorophyll in plants [80]. Low chlorophyll level indicates the weak health of the vegetation. At different crop growth stages, the pattern of the values of MCARI helps to classify the crops [81]. Equation (4) is used to calculate MCARI.

Land Surface Water Index (LSWI)

LSWI [82] can distinguish if there is stagnant water on land by observing the reflectance of the near-infrared and shortwave infrared bands [83]. For the plantation of rice crops, the water remains stagnant in the fields for an extended period. By analyzing the time-series data of rice fields, the model can learn the pattern for rice crops and distinguish it from the wheat and sugarcane crop. Equation (5) calculates LSWI.
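As a reference for the five indices described above, the following sketch writes out their commonly published forms. These may differ in detail from Equations (1) to (5). Only the NDVI band pairing (band 4 and band 8) is stated in the text; the other Sentinel-2 band assignments noted in the comments (B3 green, B2 blue, B5 red edge, B11 shortwave infrared) are assumptions. The functions use plain arithmetic, so they accept either single reflectance values or NumPy arrays.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index: (NIR - Red) / (NIR + Red).
    Stated in the text as band 8 (NIR) and band 4 (red)."""
    return (nir - red) / (nir + red)

def gndvi(nir, green):
    """Green NDVI: (NIR - Green) / (NIR + Green). Assumes B8 and B3."""
    return (nir - green) / (nir + green)

def evi(nir, red, blue):
    """Enhanced Vegetation Index with the usual coefficients
    G = 2.5, C1 = 6, C2 = 7.5, L = 1. Assumes B8, B4 and B2."""
    return 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0)

def mcari(red_edge, red, green):
    """Modified Chlorophyll Absorption in Reflectance Index:
    ((R700 - R670) - 0.2 * (R700 - R550)) * (R700 / R670).
    Assumes B5 (about 705 nm) for R700, B4 for R670 and B3 for R550."""
    return ((red_edge - red) - 0.2 * (red_edge - green)) * (red_edge / red)

def lswi(nir, swir):
    """Land Surface Water Index: (NIR - SWIR) / (NIR + SWIR). Assumes B8 and B11."""
    return (nir - swir) / (nir + swir)
```

All inputs are surface reflectances on a common scale (for example 0 to 1); the constant term in EVI makes that index sensitive to the scaling, so digital numbers would need to be converted first.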

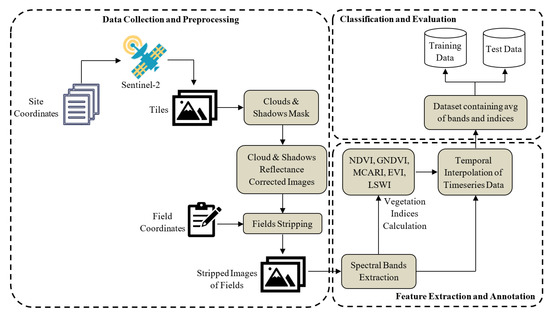

2.5. Data Shape

For each pixel of the mapped fields, the values of the spectral bands and vegetation indices, listed in Section 2.4.1 and Section 2.4.2, respectively, were computed. In the next step, the values of the spectral bands and indices were averaged over all the pixels that belong to a particular field. This process was repeated for every timestamp of every field separately. This resulted in one numeric value for each band and vegetation index, for a given field at each timestamp. Consequently, each field has 15 features per timestamp (10 values for spectral bands and 5 values for indices). Hence, with 30 timestamps, the data matrix for a single field has dimensions 30 × 15. The complete flow of data acquisition and preprocessing is shown in Figure 3.
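The field-level averaging can be sketched in a few lines. This is a minimal illustration, assuming the per-pixel features of one site are stored as an array of shape (timestamps, features, height, width) and each field is described by a boolean pixel mask; the array layout and the function name `field_feature_matrix` are hypothetical.

```python
import numpy as np

def field_feature_matrix(stack, field_mask):
    """Average every feature over the pixels of one field at each timestamp.

    stack:      (T, F, H, W) array of per-pixel bands and indices.
    field_mask: (H, W) boolean array, True for pixels belonging to the field.
    Returns a (T, F) matrix, e.g. 30 x 15 in the setting described above.
    """
    pixels = stack[:, :, field_mask]      # shape (T, F, n_field_pixels)
    return np.nanmean(pixels, axis=2)     # NaNs (e.g. masked pixels) are ignored
```

Stacking the matrices of all fields then yields an array of shape (number of fields, 30, 15), which is the layout a sequence model expects.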

Figure 3.

Pipeline for preparation of training and testing datasets used in experimental evaluation.

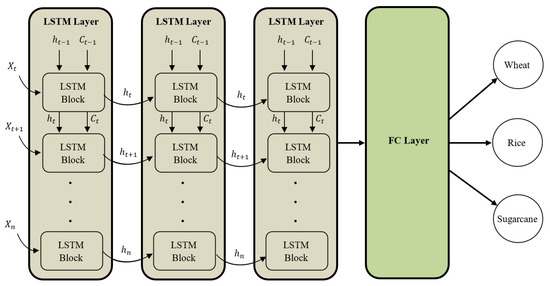

2.6. DL Network

LSTM is a deep learning network that is considered one of the best choices for time-series data and data with long-range dependencies [84]. Feedback connections distinguish LSTM from feedforward networks. These connections help the model understand the context and long-range dependencies within the data, and enable it to process the whole sequence instead of considering each data point individually. In this way, information from previous data points is retained and used together with new data points to learn the pattern of the data. These characteristics make LSTM a preferred choice over other networks for sequential and time-series data. LSTM networks use gates that control the incoming information, the information that needs to be retained for the future, and the information that leaves the network. The input gate, forget gate, and output gate are used to control this flow of information [85,86]. Figure 4 shows the architecture of the LSTM DL network, where $x_t$ represents the input vector, and $h_{t-1}$ and $c_{t-1}$ are the output and memory from the previous LSTM block, respectively. $c_t$ is the memory from the current block that is passed to the next block and $h_t$ is the output from the current block. The non-linearities within a single LSTM block are added through sigmoid ($\sigma$) and hyperbolic tangent (tanh) functions.
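To make the role of the gates concrete, the sketch below implements one step of a standard LSTM block in NumPy. It follows the textbook formulation rather than any implementation detail of the study. The names are illustrative: `x_t` is the input vector, `h_prev` and `c_prev` are the output and memory from the previous block, and the stacked weight layout (input, forget, candidate, output) is an assumption.

```python
import numpy as np

def sigmoid(z):
    """Logistic function, squashing values into (0, 1) so they act as gates."""
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One forward step of a standard LSTM block.

    x_t: (D,) input; h_prev, c_prev: (H,) previous output and memory.
    W: (4H, D), U: (4H, H), b: (4H,), stacked in the order
    input gate, forget gate, candidate memory, output gate.
    Returns the new output h_t and memory c_t.
    """
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:H])            # input gate: how much new information enters
    f = sigmoid(z[H:2 * H])        # forget gate: how much old memory is kept
    g = np.tanh(z[2 * H:3 * H])    # candidate memory content
    o = sigmoid(z[3 * H:4 * H])    # output gate: how much memory is exposed
    c_t = f * c_prev + i * g
    h_t = o * np.tanh(c_t)
    return h_t, c_t
```

Calling `lstm_step` once per timestamp, feeding each returned `h_t` and `c_t` into the next call, processes a whole field time series; stacking layers means using the `h_t` sequence of one layer as the input sequence of the next.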

Figure 4.

Architecture of the stacked LSTM network.

Three stacked LSTM layers, each with 256 blocks and a recurrent dropout of 0.3, were used. The LSTM layers were followed by a linear SoftMax layer with three units, equal to the number of classes to be predicted. All experiments were performed using a learning rate of 0.001 and a batch size of 128. The model was trained using cross-entropy loss and the Adam optimizer for up to 100 epochs, with early stopping and a patience of 10 epochs. Of the dataset, 80% was used for training while the remaining 20% was used for testing.
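A configuration along these lines can be sketched in PyTorch. This is an approximation under stated assumptions, not the authors' code: the class name `CropLSTM` and helper `train_step` are hypothetical, the last time step is assumed to feed the classifier, and the `dropout` argument of `nn.LSTM` applies dropout between stacked layers, which is used here as a stand-in for the recurrent dropout mentioned above. The model returns raw logits because `nn.CrossEntropyLoss` applies the softmax internally.

```python
import torch
from torch import nn

class CropLSTM(nn.Module):
    """Three stacked LSTM layers followed by a three-way linear classifier."""

    def __init__(self, n_features=15, hidden_size=256, n_layers=3,
                 n_classes=3, dropout=0.3):
        super().__init__()
        self.lstm = nn.LSTM(
            input_size=n_features,
            hidden_size=hidden_size,
            num_layers=n_layers,
            batch_first=True,   # inputs are (batch, time, features)
            dropout=dropout,    # between-layer dropout (assumption, see lead-in)
        )
        self.head = nn.Linear(hidden_size, n_classes)

    def forward(self, x):
        out, _ = self.lstm(x)            # (batch, time, hidden)
        return self.head(out[:, -1, :])  # logits from the last time step

def train_step(model, optimizer, loss_fn, x, y):
    """Run one optimisation step on a batch and return the loss value."""
    model.train()
    optimizer.zero_grad()
    loss = loss_fn(model(x), y)
    loss.backward()
    optimizer.step()
    return loss.item()

if __name__ == "__main__":
    model = CropLSTM()
    optimizer = torch.optim.Adam(model.parameters(), lr=0.001)
    loss_fn = nn.CrossEntropyLoss()
    x = torch.randn(128, 30, 15)         # one batch of random stand-in data
    y = torch.randint(0, 3, (128,))
    print(train_step(model, optimizer, loss_fn, x, y))
```

Early stopping with a patience of 10 would wrap `train_step` in an epoch loop that tracks the best validation loss and stops after ten epochs without improvement.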

2.7. Experimental Design

Initial studies were conducted as a set of three experiments on the entire plant cycle of wheat and rice, and the first 5 months of sugarcane cultivation, to identify the best combination of features for crop classification. Experiment 1 used only the visible spectrum (RGB bands), experiment 2 added near-infrared and shortwave infrared bands to the visible spectrum, and experiment 3 included vegetation indices in addition to the features used in experiment 2. Finally, experiment 4 used the features of experiment 3 but was limited to 1 month of data from sowing, instead of the entire plant life cycle, for early crop detection.
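The four experiments differ only in which feature columns and how many time steps are passed to the model, which can be expressed as a small configuration. The sketch below makes several assumptions that are not stated in the text: the column order of the feature matrix, that experiment 2 uses all ten selected bands, and that one month corresponds to the first six acquisitions at five-day spacing. The names `EXPERIMENTS` and `select_inputs` are hypothetical.

```python
import numpy as np

# Ten Sentinel-2 bands remaining after excluding bands 1, 9 and 10.
BANDS = ["B2", "B3", "B4", "B5", "B6", "B7", "B8", "B8A", "B11", "B12"]
INDICES = ["NDVI", "GNDVI", "EVI", "MCARI", "LSWI"]
FEATURES = BANDS + INDICES  # assumed column order of the 30 x 15 matrix

EXPERIMENTS = {
    1: {"features": ["B2", "B3", "B4"], "steps": 30},  # visible spectrum only
    2: {"features": BANDS, "steps": 30},               # assumed: all ten bands
    3: {"features": FEATURES, "steps": 30},            # bands plus indices
    4: {"features": FEATURES, "steps": 6},             # assumed: about one month
}

def select_inputs(data, experiment):
    """Slice a (fields, 30, 15) array down to the inputs of one experiment."""
    config = EXPERIMENTS[experiment]
    columns = [FEATURES.index(name) for name in config["features"]]
    return data[:, :config["steps"], columns]
```

Keeping the selection in one table makes it straightforward to rerun the same model code on each feature combination.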

3. Experimental Results

The LSTM model was used to investigate temporal (timestamped) Sentinel-2 data to identify staple crops in small fields at the early stages of the plant life cycle. Optimizing the structure and hyperparameters of the LSTM allowed staple crops to be detected within 1 month of sowing with high accuracy.

3.1. Model Training

The LSTM architecture was trained and optimized using the PyTorch framework, which is commonly used in academic research for training machine learning and deep learning models, with temporal lengths of data ranging from 1 month to the entire plant life cycle. The Colab Pro server with 32 GB of RAM was used for training. The LSTM model was trained on our custom dataset in two phases. In the first phase, temporal information for the entire plant cycle, at equal intervals, was fed into the network to evaluate the accuracy and significance of different spectral bands. In the second phase, after identifying the important spectral band combinations, the model was further tuned on those selected bands to enable the classification of staple crops within a month of sowing.

3.2. Results

The primary objective of the study was to use Sentinel-2 data to identify staple crops in small fields at the early stages of the plant life cycle. To this end, various experiments were designed to assess the significance of spectral bands, vegetation indices, and the temporal length of the input data for classifying agricultural fields. The significance of different combinations of Sentinel-2 spectral bands was investigated to identify the best combination of bands for crop classification in small fields. In these experiments, timestamped data covering five months from plantation were used as input. After determining the best combination of bands from the five-month data, an additional experiment was performed with one month of data from plantation, for the early identification of crop type. Table 4 lists the experiments that were performed along with the classification accuracy of the LSTM model in each.

Table 4.

Experiments with different combinations of spectral bands and vegetation indices and their classification accuracy.

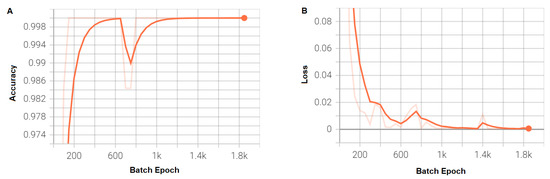

The training accuracy and loss of the optimized model are shown in Figure 5 and their performance on the entire temporal length of a plant cycle and within 1 month of sowing is shown in Table 4.

Figure 5.

(A) Training accuracy. (B) Training loss.

The accuracy for experiment 1 was 87.55%, the lowest among the three experiments. This was due to the absence of the near-infrared bands and vegetation indices used in the other experiments, as the near-infrared bands are crucial for determining the health of the plant and other important features. The reflectance in the near-infrared band varies greatly between crops. For example, in contrast to the wheat crop, rice crops become dense and dark green a few weeks after planting and strongly reflect near-infrared light, and this reflectance pattern helps to determine the crop. The result suggests that the visible spectrum (red, green, and blue bands) alone is not sufficient for crop type prediction.

In experiment 2, a classification accuracy of 98.08% was achieved. The significant increase in accuracy is attributable to the bands in the infrared region, which play a vital role because reflectance patterns differ across stages of the plant cycle. In addition to the visible spectrum, the vegetation indices NDVI, GNDVI, EVI, MCARI, and LSWI were generated from the near-infrared spectrum. As the harvesting weeks approach, the rice crop stays relatively green compared to wheat and sugarcane. This results in maximum variability in the reflectance of the infrared and other bands, which allowed the model to generalize and improve accuracy.

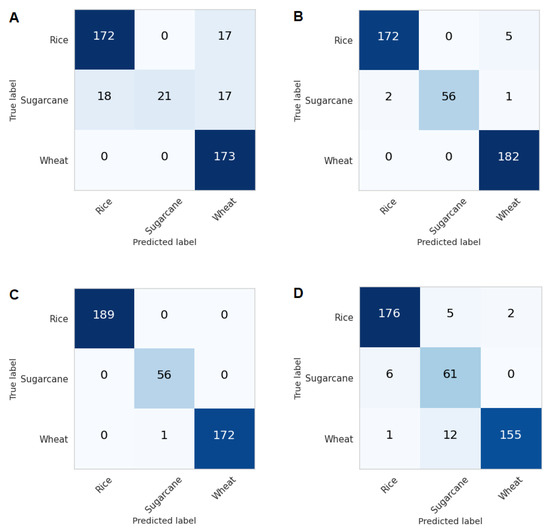

In experiment 3, all ten bands of Sentinel-2 along with the five vegetation indices were utilized. The accuracy of this experiment was 99.76%, the highest of all experiments conducted. Although some bands play a more crucial role than others, each band captures different information, so the model learned distinguishing features from all bands, which resulted in better performance than all other combinations. The confusion matrices for the three experiments are shown in Figure 6A–C.

Figure 6.

Confusion matrices of (A) Experiment 1, (B) Experiment 2, (C) Experiment 3, and (D) Experiment 4.

For early identification of the crops, the same parameters as in experiment 3 were used in experiment 4, except that only the first month of data was used, to check the efficiency of our model for early crop-type classification. Because the Punjab province is very large (20.63 million hectares), sowing dates and patterns can vary by up to two weeks. Figure 6D shows the confusion matrix of this experiment, with a crop type classification accuracy of 93.77%. The results indicate that crops with similar spectral properties were distinguishable with high accuracy using timestamped multi-spectral features for the entire crop cycle. These features were also useful for detection at an early stage of the crop cycle with reasonable accuracy.
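For readers who want to reproduce this kind of evaluation, the sketch below shows how a confusion matrix and the overall accuracy are derived from true and predicted labels. The label encoding (0 = rice, 1 = wheat, 2 = sugarcane) and the toy labels in the usage note are invented for illustration and are not results from the study; the function names are hypothetical.

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """Count predictions: rows are true classes, columns are predicted classes."""
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for true_label, predicted_label in zip(y_true, y_pred):
        matrix[true_label][predicted_label] += 1
    return matrix

def overall_accuracy(matrix):
    """Fraction of samples on the diagonal (correctly classified)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total

def per_class_recall(matrix):
    """Share of each true class that was predicted correctly."""
    return [
        row[i] / sum(row) if sum(row) else float("nan")
        for i, row in enumerate(matrix)
    ]
```

With the made-up labels `y_true = [0, 0, 1, 1, 2, 2]` and `y_pred = [0, 1, 1, 1, 2, 0]`, four of the six samples lie on the diagonal, so `overall_accuracy` returns 4/6. Per-class recall is worth inspecting alongside overall accuracy because a single well-represented crop can mask weaker performance on the others.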

The outcome of the study is a robust model that can classify crops in small fields and which is tolerant to crop diversity in a small region, and is able to predict crops at early stages. The model may fail in some circumstances due to the unavailability of satellite imagery because of bad weather, which may result in fewer readings in each month. Another challenge is the sowing time variation which can hinder accuracy if the sowing time is delayed for more than three weeks from the standard sowing date, for a given region.

4. Discussion

The research demonstrates the effectiveness of remote sensing imagery, using different spectral bands of Sentinel-2 and vegetation indices, for crop classification in small-sized farm systems in Asian countries at the early stages of the crop. Existing studies focus primarily on large-scale farms for crop classification, whereas in smallholder farming systems, multiple crops are sown side by side in the same area, making crop classification more challenging. Utilizing the multi-spectral sensor to capture distinguishable features from the crops, Sentinel-2 enables observation of fields at regular intervals using 20 m spatial resolution imagery. Our proposed model was able to use distinguishable features from spectral bands and vegetation indices to detect specific crops as early as the first month, with acceptable accuracy. Furthermore, the study has revealed that the freely available Sentinel-2 data can be utilized in South Asian countries for early crop type mapping for smallholder farms.

In developed countries with large areas, a single crop is cultivated in large fields covering many hectares, and advanced agricultural practices are adopted to monitor crop growth and health. In addition, a specific region is allocated to a given crop, which is cultivated across the entire region, thus reducing variance in crop types within that region. As a result, precision agriculture tasks such as crop mapping, identification, and monitoring are much easier. For this study, however, considerably smaller fields were mapped in the province of Punjab, Pakistan, as the smallholder farming system is prevalent in the region. Further, multiple crops are cultivated within a small region in adjacent fields, and traditional agricultural practices are used. Conventional farming techniques, the unavailability of data at early stages, and high variance in crop types make it harder to automatically identify crops for yield forecasting and strategic planning.

Similar studies have focused on large-scale farming systems and have employed hyperspectral imaging, thermal imaging, soil moisture, rainfall estimates, etc. [3,4,6,13,16,17,21,25,87]. These studies need to fuse data from multiple satellite sources to identify similar crops in a given region. Their aim is to find a large contiguous area of similar crop cover and to use additional satellite data for calibration. In contrast, we utilize multi-spectral data from Sentinel-2 alone, using only 10 bands and additional features (NDVI, GNDVI, EVI, MCARI, and LSWI), which enables us to classify crops with high accuracy in small farm systems.

The approach used in this study yielded strong results for crop identification at an early stage of crop growth despite the small size of the fields. However, some false positives resulted from the reflectance of adjacent fields containing crops that were outside the scope of the study. Limited by the availability of ground truth data, only three years of crop type data were assessed in this study. We expect that future long-term field surveys and advanced agricultural practices will enable many other precision agriculture applications for smallholder farming systems to improve crop health and increase yields to ensure food security.

5. Conclusions

Existing studies mostly focus on large-scale farming systems comprising individual fields with an average size of 5 to 10 hectares, which yield satellite images large enough for CNN-based feature extraction, leading to higher crop-classification accuracy. However, these methods cannot be applied to small-scale fields, as the average spectral band resolution is 20 m per pixel. Additionally, in a large farm system, regions are dedicated to a single crop, and crop diversity does not affect the spectral band readings for a given field because the same crop is grown in the neighboring fields. In small farm systems, crop diversity is very common, and due to the small size of fields, the effect of neighboring crops is significant. In this study, we propose a robust LSTM-based model that counters the classification challenges of small field size and high crop diversity, and can classify staple crops at early stages with high accuracy. We verify the effectiveness of our proposed model and demonstrate that it performs satisfactorily on smallholder farms. In the future, we plan to reduce the detection time further and to incorporate additional satellite data, including soil moisture, rainfall estimates, and hyperspectral data, to extend this model to more crops.

Author Contributions

Conceptualization, M.C. and Z.G.; methodology, H.R.K. and A.A.; software, H.R.K.; validation, M.H.J., Z.G. and M.C.; formal analysis, H.R.K.; investigation, M.T.C. and M.H.J.; resources, Z.G., H.C. and M.C.; data curation, H.R.K.; writing—original draft preparation, H.R.K.; writing—review and editing, M.C., Y.H. and H.C.; visualization, Z.G.; supervision, Y.H., M.C. and Z.G.; project administration, M.H.J.; funding acquisition, M.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by Jiangsu Collaborative Innovation Center for Modern Crop Production and Collaborative Innovation Center for Modern Crop Production cosponsored by province and ministry; the National Natural Sciences Foundation of China [32070677].

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Freely available data from Sentinel-2 was used. However, coordinates of mapped fields and processed data will be shared upon request.

Conflicts of Interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

References

- Khan, M.A.; Tahir, A.; Khurshid, N.; Husnain, M.I.u.; Ahmed, M.; Boughanmi, H. Economic effects of climate change-induced loss of agricultural production by 2050: A case study of Pakistan. Sustainability 2020, 12, 1216.

- Shi, W.; Wang, M.; Liu, Y. Crop yield and production responses to climate disasters in China. Sci. Total Environ. 2021, 750, 141147.

- Carranza, C.; Benninga, H.J.; van der Velde, R.; van der Ploeg, M. Monitoring agricultural field trafficability using Sentinel-1. Agric. Water Manag. 2019, 224, 105698.

- Morel, J.; Bégué, A.; Todoroff, P.; Martiné, J.F.; Lebourgeois, V.; Petit, M. Coupling a sugarcane crop model with the remotely sensed time series of fIPAR to optimise the yield estimation. Eur. J. Agron. 2014, 61, 60–68.

- Amani, M.; Kakooei, M.; Moghimi, A.; Ghorbanian, A.; Ranjgar, B.; Mahdavi, S.; Davidson, A.; Fisette, T.; Rollin, P.; Brisco, B.; et al. Application of Google Earth Engine cloud computing platform, Sentinel imagery, and neural networks for crop mapping in Canada. Remote Sens. 2020, 12, 3561.

- Álvarez-Mozos, J.; Casalí, J.; González-Audícana, M.; Verhoest, N.E. Assessment of the operational applicability of RADARSAT-1 data for surface soil moisture estimation. IEEE Trans. Geosci. Remote Sens. 2006, 44, 913–924.

- Bégué, A.; Arvor, D.; Bellon, B.; Betbeder, J.; de Abelleyra, D.; Ferraz, R.P.D.; Lebourgeois, V.; Lelong, C.; Simões, M.; R. Verón, S. Remote sensing and cropping practices: A review. Remote Sens. 2018, 10, 99.

- Karthikeyan, L.; Chawla, I.; Mishra, A.K. A review of remote sensing applications in agriculture for food security: Crop growth and yield, irrigation, and crop losses. J. Hydrol. (Amst.) 2020, 586, 124905.

- Orynbaikyzy, A.; Gessner, U.; Conrad, C. Crop type classification using a combination of optical and radar remote sensing data: A review. Int. J. Remote Sens. 2019, 40, 6553–6595.

- Weiss, M.; Jacob, F.; Duveiller, G. Remote sensing for agricultural applications: A meta-review. Remote Sens. Environ. 2020, 236, 111402.

- Son, N.T.; Chen, C.F.; Chen, C.R.; Guo, H.Y. Classification of multitemporal Sentinel-2 data for field-level monitoring of rice cropping practices in Taiwan. Adv. Space Res. 2020, 65, 1910–1921.

- Zhang, H.; Kang, J.; Xu, X.; Zhang, L. Accessing the temporal and spectral features in crop type mapping using multi-temporal Sentinel-2 imagery: A case study of Yi’an County, Heilongjiang province, China. Comput. Electron. Agric. 2020, 176, 105618.

- Alimohammadi, F.; Rasekh, M.; Sayyah, A.H.A.; Abbaspour-Gilandeh, Y.; Karami, H.; Sharabiani, V.R.; Fioravanti, A.; Gancarz, M.; Findura, P.; Kwaśniewski, D. Hyperspectral imaging coupled with multivariate analysis and artificial intelligence to the classification of maize kernels. Int. Agrophys. 2022, 36, 83–91.

- Dey, S.; Mandal, D.; Robertson, L.D.; Banerjee, B.; Kumar, V.; McNairn, H.; Bhattacharya, A.; Rao, Y.S. In-season crop classification using elements of the Kennaugh matrix derived from polarimetric RADARSAT-2 SAR data. ITC J. 2020, 88, 102059.

- Planque, C.; Lucas, R.; Punalekar, S.; Chognard, S.; Hurford, C.; Owers, C.; Horton, C.; Guest, P.; King, S.; Williams, S.; et al. National crop mapping using Sentinel-1 time series: A knowledge-based descriptive algorithm. Remote Sens. 2021, 13, 846.

- Usowicz, B.; Lipiec, J.; Łukowski, M.; Słomiński, J. Improvement of spatial interpolation of precipitation distribution using cokriging incorporating rain-gauge and satellite (SMOS) soil moisture data. Remote Sens. 2021, 13, 1039.

- Usowicz, B.; Lukowski, M.; Lipiec, J. The SMOS-Derived Soil Water EXtent and equivalent layer thickness facilitate determination of soil water resources. Sci. Rep. 2020, 10, 18330.

- Cai, Y.; Guan, K.; Peng, J.; Wang, S.; Seifert, C.; Wardlow, B.; Li, Z. A high-performance and in-season classification system of field-level crop types using time-series Landsat data and a machine learning approach. Remote Sens. Environ. 2018, 210, 35–47.

- Johnson, D.M.; Mueller, R. Pre- and within-season crop type classification trained with archival land cover information. Remote Sens. Environ. 2021, 264, 112576.

- Kenduiywo, B.K.; Bargiel, D.; Soergel, U. Crop-type mapping from a sequence of Sentinel 1 images. Int. J. Remote Sens. 2018, 39, 6383–6404.

- Wu, H.; Adler, R.F.; Tian, Y.; Huffman, G.J.; Li, H.; Wang, J. Real-time global flood estimation using satellite-based precipitation and a coupled land surface and routing model. Water Resour. Res. 2014, 50, 2693–2717.

- Mutanga, O.; Dube, T.; Galal, O. Remote sensing of crop health for food security in Africa: Potentials and constraints. Remote Sens. Appl. Soc. Environ. 2017, 8, 231–239.

- Donohue, R.J.; Lawes, R.A.; Mata, G.; Gobbett, D.; Ouzman, J. Towards a national, remote-sensing-based model for predicting field-scale crop yield. Field Crops Res. 2018, 227, 79–90.

- Kern, A.; Barcza, Z.; Marjanović, H.; Árendás, T.; Fodor, N.; Bónis, P.; Bognár, P.; Lichtenberger, J. Statistical modelling of crop yield in Central Europe using climate data and remote sensing vegetation indices. Agric. For. Meteorol. 2018, 260–261, 300–320.

- Usowicz, B.; Lipiec, J. Spatial variability of thermal properties in relation to the application of selected soil-improving cropping systems (SICS) on sandy soil. Int. Agrophys. 2022, 36, 269–284.

- Wardlow, B.D.; Egbert, S.L. Large-area crop mapping using time-series MODIS 250 m NDVI data: An assessment for the U.S. Central Great Plains. Remote Sens. Environ. 2008, 112, 1096–1116.

- Fritz, S.; See, L.; McCallum, I.; You, L.; Bun, A.; Moltchanova, E.; Duerauer, M.; Albrecht, F.; Schill, C.; Perger, C.; et al. Mapping global cropland and field size. Glob. Chang. Biol. 2015, 21, 1980–1992.

- Lobell, D.B.; Asner, G.P. Cropland distributions from temporal unmixing of MODIS data. Remote Sens. Environ. 2004, 93, 412–422.

- Mohanty, S.P.; Hughes, D.P.; Salathé, M. Using deep learning for image-based plant disease detection. Front. Plant Sci. 2016, 7, 1419.

- Leslie, C.R.; Serbina, L.O.; Miller, H.M. Landsat and Agriculture—Case Studies on the Uses and Benefits of Landsat Imagery in Agricultural Monitoring and Production; Open-File Report; US Geological Survey: Menlo Park, CA, USA, 2017.

- Venancio, L.P.; Mantovani, E.C.; do Amaral, C.H.; Usher Neale, C.M.; Gonçalves, I.Z.; Filgueiras, R.; Campos, I. Forecasting corn yield at the farm level in Brazil based on the FAO-66 approach and soil-adjusted vegetation index (SAVI). Agric. Water Manag. 2019, 225, 105779.

- Dong, T.; Liu, J.; Qian, B.; Zhao, T.; Jing, Q.; Geng, X.; Wang, J.; Huffman, T.; Shang, J. Estimating winter wheat biomass by assimilating leaf area index derived from fusion of Landsat-8 and MODIS data. ITC J. 2016, 49, 63–74.

- Filippi, P.; Jones, E.J.; Wimalathunge, N.S.; Somarathna, P.D.S.N.; Pozza, L.E.; Ugbaje, S.U.; Jephcott, T.G.; Paterson, S.E.; Whelan, B.M.; Bishop, T.F.A. An approach to forecast grain crop yield using multi-layered, multi-farm data sets and machine learning. Precis. Agric. 2019, 20, 1015–1029.

- Houborg, R.; McCabe, M. High-resolution NDVI from planet’s constellation of earth observing nano-satellites: A new data source for precision agriculture. Remote Sens. 2016, 8, 768.

- Siegfried, J.; Longchamps, L.; Khosla, R. Multispectral satellite imagery to quantify in-field soil moisture variability. J. Soil Water Conserv. 2019, 74, 33–40.

- de Lara, A.; Longchamps, L.; Khosla, R. Soil water content and high-resolution imagery for precision irrigation: Maize yield. Agronomy 2019, 9, 174.

- Shang, J.; Liu, J.; Ma, B.; Zhao, T.; Jiao, X.; Geng, X.; Huffman, T.; Kovacs, J.M.; Walters, D. Mapping spatial variability of crop growth conditions using RapidEye data in Northern Ontario, Canada. Remote Sens. Environ. 2015, 168, 113–125.

- Khabbazan, S.; Vermunt, P.; Steele-Dunne, S.; Ratering Arntz, L.; Marinetti, C.; van der Valk, D.; Iannini, L.; Molijn, R.; Westerdijk, K.; van der Sande, C. Crop monitoring using Sentinel-1 data: A case study from The Netherlands. Remote Sens. 2019, 11, 1887.

- Segarra, J.; Buchaillot, M.L.; Araus, J.L.; Kefauver, S.C. Remote sensing for precision agriculture: Sentinel-2 improved features and applications. Agronomy 2020, 10, 641.

- Dong, J.; Xiao, X.; Kou, W.; Qin, Y.; Zhang, G.; Li, L.; Jin, C.; Zhou, Y.; Wang, J.; Biradar, C.; et al. Tracking the dynamics of paddy rice planting area in 1986–2010 through time series Landsat images and phenology-based algorithms. Remote Sens. Environ. 2015, 160, 99–113.

- Sakamoto, T.; Yokozawa, M.; Toritani, H.; Shibayama, M.; Ishitsuka, N.; Ohno, H. A crop phenology detection method using time-series MODIS data. Remote Sens. Environ. 2005, 96, 366–374.

- Zhao, Q.; Lenz-Wiedemann, V.; Yuan, F.; Jiang, R.; Miao, Y.; Zhang, F.; Bareth, G. Investigating within-field variability of rice from high resolution satellite imagery in qixing farm county, northeast China. ISPRS Int. J. Geoinf. 2015, 4, 236–261.

- Kamilaris, A.; Prenafeta-Boldú, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90.

- Kussul, N.; Lavreniuk, M.; Skakun, S.; Shelestov, A. Deep learning classification of land cover and crop types using remote sensing data. IEEE Geosci. Remote Sens. Lett. 2017, 14, 778–782.

- Zhang, M.; Lin, H.; Wang, G.; Sun, H.; Fu, J. Mapping paddy rice using a convolutional neural network (CNN) with Landsat 8 datasets in the Dongting lake area, China. Remote Sens. 2018, 10, 1840.

- Zhong, L.; Hu, L.; Zhou, H. Deep learning based multi-temporal crop classification. Remote Sens. Environ. 2019, 221, 430–443.

- Karakizi, C.; Karantzalos, K.; Vakalopoulou, M.; Antoniou, G. Detailed Land Cover mapping from multitemporal Landsat-8 data of different cloud cover. Remote Sens. 2018, 10, 1214.

- Shrestha, S.; Vanneschi, L. Improved fully convolutional network with conditional random fields for building extraction. Remote Sens. 2018, 10, 1135.

- Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Convolutional neural networks for large-scale remote-sensing image classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 645–657.

- Seydi, S.T.; Amani, M.; Ghorbanian, A. A Dual Attention Convolutional Neural Network for Crop Classification Using Time-Series Sentinel-2 Imagery. Remote Sens. 2022, 14, 498.

- Tuvdendorj, B.; Zeng, H.; Wu, B.; Elnashar, A.; Zhang, M.; Tian, F.; Nabil, M.; Nanzad, L.; Bulkhbai, A.; Natsagdorj, N. Performance and the Optimal Integration of Sentinel-1/2 Time-Series Features for Crop Classification in Northern Mongolia. Remote Sens. 2022, 14, 1830.

- Li, H.; Lu, J.; Tian, G.; Yang, H.; Zhao, J.; Li, N. Crop classification based on GDSSM-CNN using multi-temporal RADARSAT-2 SAR with limited labeled data. Remote Sens. 2022, 14, 3889.

- Zhang, W.; Cao, G.; Li, X.; Zhang, H.; Wang, C.; Liu, Q.; Chen, X.; Cui, Z.; Shen, J.; Jiang, R.; et al. Closing yield gaps in China by empowering smallholder farmers. Nature 2016, 537, 671–674.

- Samberg, L.H.; Gerber, J.S.; Ramankutty, N.; Herrero, M.; West, P.C. Subnational distribution of average farm size and smallholder contributions to global food production. Environ. Res. Lett. 2016, 11, 124010.

- Yu, L.; Wang, J.; Clinton, N.; Xin, Q.; Zhong, L.; Chen, Y.; Gong, P. FROM-GC: 30 m global cropland extent derived through multisource data integration. Int. J. Digit. Earth 2013, 6, 521–533.

- Yu, L.; Wang, J.; Li, X.; Li, C.; Zhao, Y.; Gong, P. A multi-resolution global land cover dataset through multisource data aggregation. Sci. China Earth Sci. 2014, 57, 2317–2329.

- Xiong, J.; Thenkabail, P.; Tilton, J.; Gumma, M.; Teluguntla, P.; Oliphant, A.; Congalton, R.; Yadav, K.; Gorelick, N. Nominal 30-m cropland extent map of continental Africa by integrating pixel-based and object-based algorithms using sentinel-2 and Landsat-8 data on Google earth engine. Remote Sens. 2017, 9, 1065.

- Population Profile Punjab. Available online: https://pwd.punjab.gov.pk/population_profile (accessed on 24 April 2022).

- Agriculture Statistics of Punjab. Available online: http://www.pbit.gop.pk/agriculture (accessed on 24 April 2022).

- Caballero, I.; Ruiz, J.; Navarro, G. Sentinel-2 satellites provide near-real time evaluation of catastrophic floods in the West Mediterranean. Water 2019, 11, 2499.

- Spoto, F.; Sy, O.; Laberinti, P.; Martimort, P.; Fernandez, V.; Colin, O.; Hoersch, B.; Meygret, A. Overview of sentinel-2. In Proceedings of the 2012 IEEE International Geoscience and Remote Sensing Symposium, Munich, Germany, 22–27 July 2012.

- Drusch, M.; Del Bello, U.; Carlier, S.; Colin, O.; Fernandez, V.; Gascon, F.; Hoersch, B.; Isola, C.; Laberinti, P.; Martimort, P.; et al. Sentinel-2: ESA’s optical high-resolution mission for GMES operational services. Remote Sens. Environ. 2012, 120, 25–36.

- Gascon, F. Sentinel-2 for Agricultural Monitoring. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018.

- van der Meer, F.D.; van der Werff, H.M.A.; van Ruitenbeek, F.J.A. Potential of ESA’s Sentinel-2 for geological applications. Remote Sens. Environ. 2014, 148, 124–133.

- Sola, I.; García-Martín, A.; Sandonís-Pozo, L.; Álvarez-Mozos, J.; Pérez-Cabello, F.; González-Audícana, M.; Montorio Llovería, R. Assessment of atmospheric correction methods for Sentinel-2 images in Mediterranean landscapes. ITC J. 2018, 73, 63–76.

- Magno, R.; Rocchi, L.; Dainelli, R.; Matese, A.; Di Gennaro, S.F.; Chen, C.F.; Son, N.T.; Toscano, P. AgroShadow: A new Sentinel-2 cloud shadow detection tool for precision agriculture. Remote Sens. 2021, 13, 1219.

- Qin, C.Z.; Zhan, L.J.; Zhu, A.X. How to apply the geospatial data abstraction library (GDAL) properly to parallel geospatial raster I/O?: Applying GDAL properly to parallel geospatial raster I/O. Trans. GIS 2014, 18, 950–957.

- Zhang, T.; Su, J.; Liu, C.; Chen, W.H.; Liu, H.; Liu, G. Band selection in sentinel-2 satellite for agriculture applications. In Proceedings of the 2017 23rd International Conference on Automation and Computing (ICAC), Huddersfield, UK, 7–8 September 2017.

- Clevers, J.G.P.W.; Gitelson, A.A. Remote estimation of crop and grass chlorophyll and nitrogen content using red-edge bands on Sentinel-2 and -3. Int. J. Appl. Earth Obs. Geoinf. 2013, 23, 344–351.

- Main-Knorn, M.; Pflug, B.; Louis, J.; Debaecker, V.; Müller-Wilm, U.; Gascon, F. Sen2Cor for Sentinel-2. In Proceedings of the Image and Signal Processing for Remote Sensing XXIII; Bruzzone, L., Bovolo, F., Benediktsson, J.A., Eds.; SPIE: Bellingham, WA, USA, 2017.

- Sharifi, A. Remotely sensed vegetation indices for crop nutrition mapping. J. Sci. Food Agric. 2020, 100, 5191–5196.

- Farid Muhsoni, F. Comparison of different vegetation indices for assessing mangrove density using sentinel-2 imagery. Int. J. Geomate 2018, 14, 42–51.

- Somvanshi, S.S.; Kumari, M. Comparative analysis of different vegetation indices with respect to atmospheric particulate pollution using sentinel data. Appl. Comput. Geosci. 2020, 7, 100032.

- Spadoni, G.L.; Cavalli, A.; Congedo, L.; Munafò, M. Analysis of Normalized Difference Vegetation Index (NDVI) multi-temporal series for the production of forest cartography. Remote Sens. Appl. Soc. Environ. 2020, 20, 100419.

- Zaitunah, A.; Samsuri; Ahmad, A.G.; Safitri, R.A. Normalized difference vegetation index (ndvi) analysis for land cover types using landsat 8 oli in besitang watershed, Indonesia. IOP Conf. Ser. Earth Environ. Sci. 2018, 126, 012112.

- Reyadh, A.; Venkataraman, L. Comparison of normalized difference vegetation index derived from Landsat, MODIS, and AVHRR for the Mesopotamian marshes between 2002 and 2018. Remote Sens. 2019, 11, 1245.

- Candiago, S.; Remondino, F.; De Giglio, M.; Dubbini, M.; Gattelli, M. Evaluating multispectral images and vegetation indices for precision farming applications from UAV images. Remote Sens. 2015, 7, 4026–4047.

- Wang, C.; Li, J.; Liu, Q.; Zhong, B.; Wu, S.; Xia, C. Analysis of differences in phenology extracted from the enhanced vegetation index and the leaf area index. Sensors 2017, 17, 1982.

- de Azevedo Silva, P.A.; de Carvalho Alves, M.; Sáfadi, T.; Pozza, E.A. Time series analysis of the enhanced vegetation index to detect coffee crop development under different irrigation systems. J. Appl. Remote Sens. 2021, 15, 014511.

- Shrestha, S.; Brueck, H.; Asch, F. Chlorophyll index, photochemical reflectance index and chlorophyll fluorescence measurements of rice leaves supplied with different N levels. J. Photochem. Photobiol. B 2012, 113, 7–13.

- Yang, P.; van der Tol, C.; Campbell, P.K.E.; Middleton, E.M. Fluorescence Correction Vegetation Index (FCVI): A physically based reflectance index to separate physiological and non-physiological information in far-red sun-induced chlorophyll fluorescence. Remote Sens. Environ. 2020, 240, 111676.

- Guha, S.; Govil, H.; Gill, N.; Dey, A. Analytical study on the relationship between land surface temperature and land use/land cover indices. Ann. GIS 2020, 26, 201–216.

- Hu, X.; Ren, H.; Tansey, K.; Zheng, Y.; Ghent, D.; Liu, X.; Yan, L. Agricultural drought monitoring using European Space Agency Sentinel 3A land surface temperature and normalized difference vegetation index imageries. Agric. For. Meteorol. 2019, 279, 107707.

- Yu, Y.; Si, X.; Hu, C.; Zhang, J. A review of recurrent neural networks: LSTM cells and network architectures. Neural Comput. 2019, 31, 1235–1270.

- Smagulova, K.; James, A.P. A survey on LSTM memristive neural network architectures and applications. Eur. Phys. J. Spec. Top. 2019, 228, 2313–2324.

- Gers, F.A.; Eck, D.; Schmidhuber, J. Applying LSTM to time series predictable through time-window approaches. In Perspectives in Neural Computing; Springer: London, UK, 2002; pp. 193–200.

- Bousbih, S.; Zribi, M.; Lili-Chabaane, Z.; Baghdadi, N.; El Hajj, M.; Gao, Q.; Mougenot, B. Potential of Sentinel-1 radar data for the assessment of soil and cereal cover parameters. Sensors 2017, 17, 2617.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).