1. Introduction

Vehicles are a major source of pollution, accounting for a total of 25% of annual CO

2 emissions worldwide [

1]. In the last decade, the adoption of hybrid vehicles (HV) on a global scale has proven the reduced levels of CO

2 emissions generated by HVs in comparison with conventional vehicles. This has sparked interest in industry and academia in finding ways to optimise and reduce vehicle emissions. When it comes to measuring CO

2 concentrations, a portable emissions monitoring system (PEMS) is the preferred choice, since they are an accurate and reasonably portable. Nevertheless, the usage of this equipment incurs high costs, and therefore, it is mainly used by car manufacturers and environmental regulation entities. It us almost impossible to use for researchers and investigators.

Recent developments in machine learning (ML) mechanisms have led to the creation of pollution predictors. However, these have primarily been focused on conventional internal combustion engine (ICE) vehicles. The complexity and constantly changing nature of power-train sources, coupled with several factors involved in determining the CO

2 emissions from HVs, make the prediction a challenging task for traditional ML algorithms. A comparative analysis has been summarised in

Table 1 to contrast the published results related to CO

2 emission prediction in automotive applications.

In [

2], a real-time cloud-based in-vehicle air quality monitoring system was developed to predict the current and future cabin air quality. Three predictive ML algorithms—linear regression (LR), support vector regression (SVR), and multilayer perceptron (MLP)—were applied for prediction. SVR had the best performance in terms of R

2 score in their studies when compared with the LR and MLP models. However, our study is focused on the prediction of CO

2 exhaust concentrations emitted by HVs.

In [

3], CO

2 concentrations were predicted using a tuned gradient boosting regression (GBR) model, which achieved a higher (92%) accuracy compared to other ML algorithms, such as MLP and xtreme gradient boosting regression (XGBR). Focusing on fuel consumption will be their future work for developing a more robust model. In [

4], the GBR model was used to predict the emissions of nitrogen oxide (NOx) and carbon dioxide (CO

2) and the fuel consumption in urban, suburban and highway areas in a conventional diesel-fuelled vehicle. Data collection was performed using a PEMS. Two routes were used to perform the experiments. The model was trained in the first route data and was used to predict the emissions of the second route. The best CO

2 model had R

2 scores of 0.98, 0.99 and 0.99 for the various driving patterns. Predictions for the second route were R

2 scores of 0.79, 0.82 and 0.83, respectively.

In [

5], the exhaust emissions and fuel consumption of a HV were tested using a GBR model. However, in contrast to our study, the predictive model only considered the data corresponding to when the ICE was turned on, and therefore, the authors did not contemplate the power train state transitions in HVs. In [

12], air pollutant concentrations were modelled using an ANN model for three different traffic volume predictors. Moderate results could be achieved for all CO

2 concentration levels. All three input variable options (sound, traffic and time) are suitable for modelling various air pollutant concentrations.

In [

6], a comparative analysis of LSTM, DNN and CNN was conducted regarding predicting CO

2 emissions from two light-duty diesel-fuelled vehicles. The dataset was captured from the OBD2 port. The LSTM model outperformed the other models, achieving an RMSE score of 9.30. Plus, the authors analysed the effect of noisy data in the input data, showing that its presence can negatively affect a model’s accuracy by up to 30%.

In [

7], several ML techniques used to estimate the CO

2 emission rate in hybrid vehicles in comparison to traditional micro and macro estimation models. The Gaussian process regression (GPR) model outperformed the rest, achieving a maximum level of accuracy of 69%. As the authors conclude, due to the variability of CO

2 emission levels from a hybrid vehicle, there is a problem with the existing emission models, as they do not provide reliable results. Our research here provides a contribution to address this phenomenon.

In [

9], the authors propose a parallel attention-based LSTM (PA-LSTM) for building an emission prediction model for several vehicle pollutants using PEMS. In this model, the two-layer attention spatial encoding mechanism accelerated the convergence speed of the model to reduce the training time. The results show that the PA-LSTM performed better than any other ML mode, reaching 94.6% accuracy.

In [

10], the CO

2 emissions of a diesel-fuelled vehicle were estimated by means of different ML models. The authors examined the a novel relationship between the movement of construction equipment chassis and the emission rates generated from them. Results show that the best algorithm was RF, obtaining an accuracy level of 94%.

In [

11], four different ANNs were studied for predicting the mass-based pollution rates of diesel-fuelled passenger vehicles. Among the analysed models, the ANN that showed the best results was the one trained with the engine speed, engine torque, vehicle speed, coolant temperature and air–fuel ratio.

To sum up, almost all the current literature focuses on prediction of CO

2 emissions using ICE passenger vehicles. Only two works used a HV during the experiments, of which only the work done in [

7] studied the prediction during the vehicle’s power-train switch-overs, which is the biggest challenge in HVs. The results reflected in this study are about 27% less accurate than the average score obtained by the rest of the studies present in the literature. Hence, it is clear that the complex behaviour of HV lowers the accuracy of CO

2 emission prediction to a large extent when using current ML-based mechanisms.

To tackle this problem, we designed, implemented and evaluated a long short-term memory-based (LSTM) model named UWS-LSTM for predicting CO

2 emission concentrations from HVs. Although several studies have been performed using various ML techniques to predict several pollutants in conventional vehicles, to the best of our knowledge, this is the first study focusing on HV CO

2 emission concentrations considering the entire set of conditions and challenges derived from the complete use of a HV (i.e., without excluding any driving scenario). By accurately predicting CO

2 emission concentrations, this model could help in democratising the access to CO

2 concentration information in HVs and in the development of strategies to reduce vehicle emission to combat air pollution. In addition, the proposed UWS-LSTM model has been embedded and deployed in a real-world HV to properly demonstrate its practical application in a low-powered Internet of Things (IoT) device, providing an efficient, cost-effective solution without the need for using PEMS. As seen from previous studies, IoT devices are increasingly improving their capabilities, which, coupled with the optimisation of ML techniques, can drastically provoke a change in this paradigm from highly-capable workstations to low-powered devices [

13]. The outcomes of this study have the potential to assist policy makers and vehicle manufacturers regarding CO

2 emission in HVs. As a result, our contributions are summarised as follows:

Collection of a comprehensive dataset containing operational and emission-related information of a HV in real driving conditions and scenarios.

Study of the most influential features presented in the dataset for prediction of CO2 emission concentrations.

Design, implementation, training and validation of an accurate lightweight LSTM-based model (UWS-LSTM) able to perform CO2-concentration prediction for HVs.

Comparison of traditional and advanced ML models in terms of accuracy, speed and model size with the proposed UWS-LSTM model.

Deployment of the designed model in a low-powered IoT device installed within the vehicle to perform real-time on-road CO2-concentration prediction.

The rest of the paper is organised as follows.

Section 2 elaborates the data collection and cleaning process, and addresses the input feature selection of the models evaluated. Moreover, it describes the definition of the proposed LSTM model and presents the execution environment. Subsequently,

Section 3 explains the results found during the evaluation of each of the models evaluated.

Section 4 concludes the paper.

2. Materials and Methods

This sections explains the main stages addressed to achieve the contributions highlighted in the introduction.

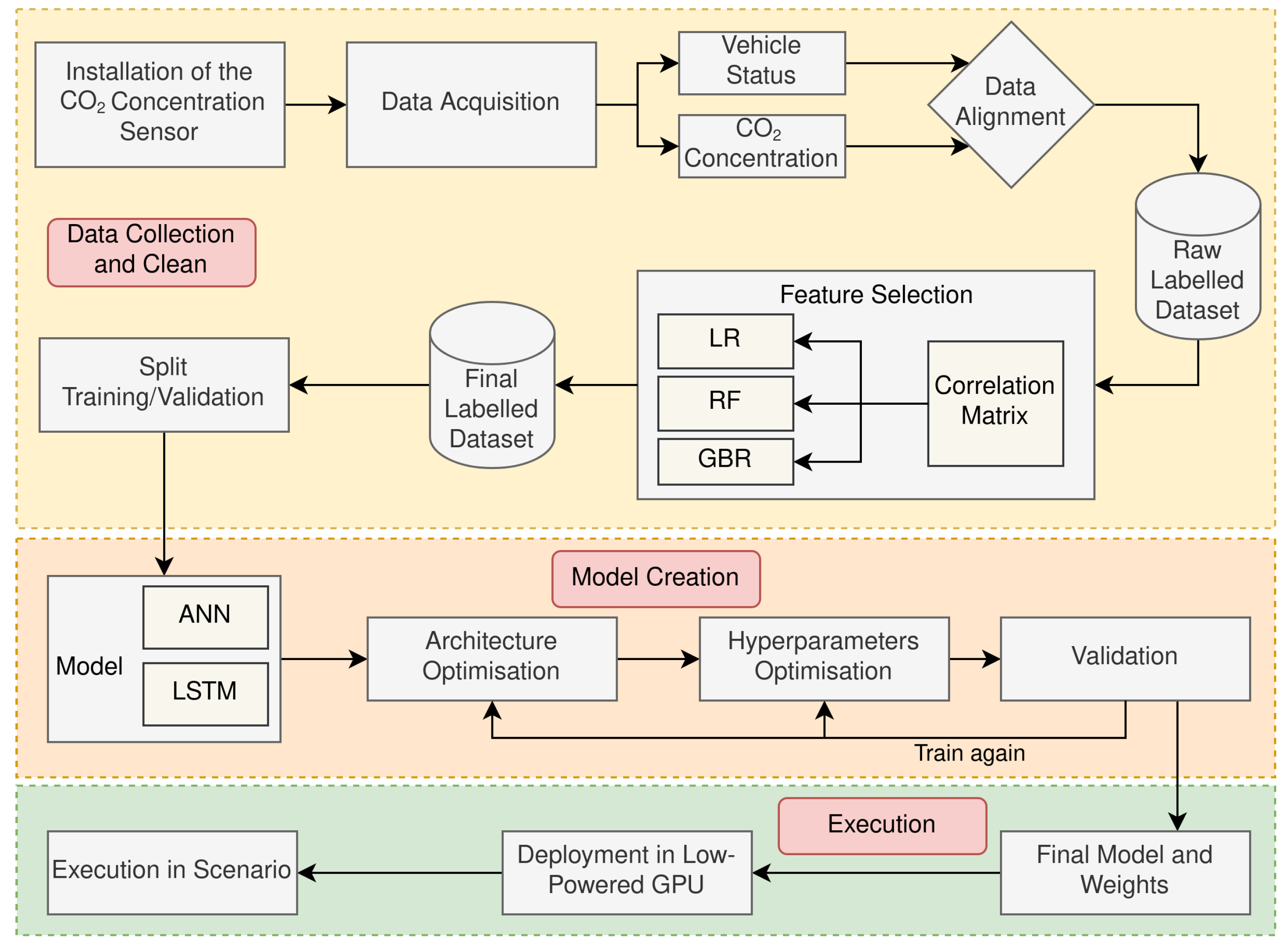

Figure 1 depicts the process followed to perform these stages. First, a data collection and cleaning process was carried out to gather the dataset that was used to train and evaluate the ML models. During this process, a study was conducted to investigate the correlation and effect of different input parameters sets on the assessed ML models. As a result, a subset of parameters present in the original dataset was identified as the final dataset and used in subsequent stages of the study. Later, a model creation stage was conducted with the objective of defining, training and validating the ANN-based ML models. Lastly, during the execution stage, an evaluation of each ML model was carried out with various performance indicators when these models were executed in a IoT device installed in a real vehicle. Further descriptions of the tasks involved in the defined stages are elaborated below.

2.1. Machine Learning Algorithms Evaluated

ML is a frequently used method to be applied for classification and regression in myriad of applications. In our study, 5 different ML algorithms were developed and tested to assess their performances when predicting the CO2 concentrations in HV exhausts:

2.2. CO2 Concentration Collection

For the entire process of dataset collection, a SprintIR-R 20 (Gas Sensing Solutions Ltd., Cumbernauld, UK) sensor was used for measuring and recording the vehicle’s exhaust CO2 concentration, expressed in parts per million ppm. More specifically, the Sprint-IR sensor was found to be ideal for this use case, since it is able to report CO2 concentration values of up to 20% with an accuracy of ±70 ppm and a resolution of 10 ppm at a maximum sampling frequency of 50 Hz via non-dispersive infra red (NDIR) technology.

During the dataset collection process, the sensor was installed in the rear end of a vehicle and connected to the vehicle’s exhaust pipe. Furthermore, a set of tools were used to separate impurities and water vapour from the CO

2 analyte, which included a hydrophobic filter, a water trap and a particulate filter to avoid any incorrect or inaccurate measurements. The CO

2 collection system also featured an electric pump that helped the system to have a constant 0.5 l/min sample flow rate through the sensor’s measurement chamber. Moreover, the CO

2 sensor was directly connected to an ESP32 micro-controller (Espressif Systems, Shanghai, China) via serial connection that performed measurements requests at a rate of 5 Hz. Before each drive, the sensor was calibrated manually using the external air as a reference, assuming a CO

2 concentration value of 400 ppm. More information about the CO

2 sensor can be found in the

Supplementary information section of this manuscript.

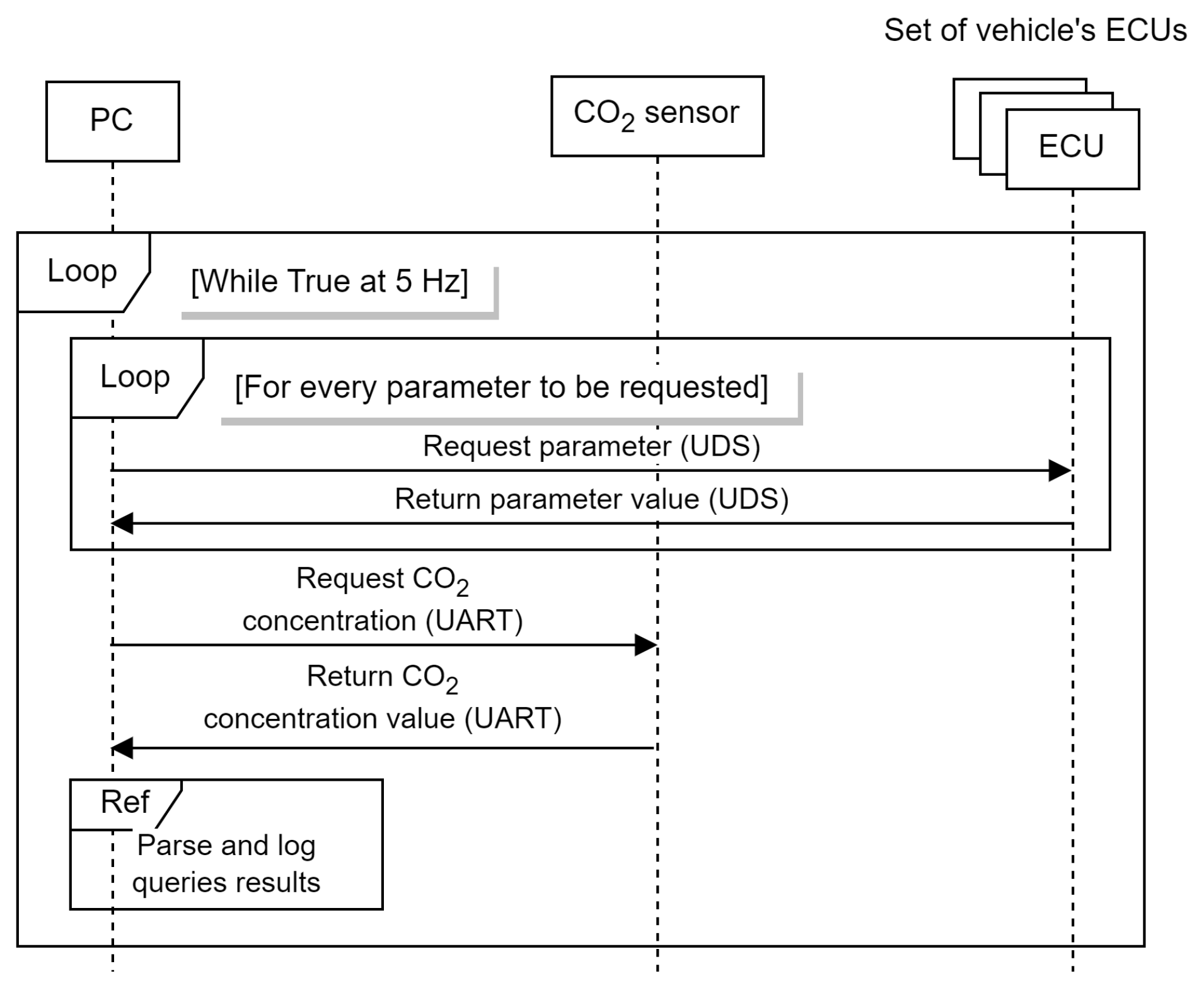

2.3. Vehicle Status Data Collection

The collection of the onboard vehicle status parameters was carried out with custom software executed on a laptop that was connected to the vehicle’s CAN bus via its OBD2 port. The software was written in Python and performs UDS-like (Unified Diagnostic Services) [

19] queries to periodically report a list of given parameters obtained from the vehicle’s electronic control units (ECU) at a fixed rate. The collection of parameters was done at 5 Hz; we stored the information recorded on separate files and drives and then merged it together.

Figure 2 illustrates the sequence diagram of the data collection process.

The set of parameters acquired from the vehicle’s CAN bus comprises the following 21 parameters: accelerator position (%), vehicle speed (MPH), external atmospheric pressure (psi), engine load (%), coolant temperature (°C), acceleration (m/s2), distance travelled since vehicle startup (miles), drive mode, engine exhaust flow rate (kg/h), engine mode, engine speed (RPM), EV mode status, engine power delivered (kW), hybrid battery SOC (%), mass air flow (g/min), electric motor power delivered (kW), electric motor revolution (RPM), electric motor torque (Nm), GPS latitude, GPS longitude and altitude (m). Among the listed variables, there are 3 categorical parameters:

Drive mode: indicates whether the power train source is hybrid or electric.

EV mode status: in case the drive mode is electric, it indicates whether the electric mode is normal or “city”, which limits the power capacity up to 53 kW.

Engine mode: indicates the status of the engine, such as (1) stopped, (2) stopping, (3) starting or (4) started.

2.4. Selected Input Parameters

Specific input features selection was conducted to identify a subset of input features that are most pertinent to the output variable and also to eliminate unnecessary information in the prediction process. To perform the input selection, the significance of the input data and the correlation amongst features should be estimated. Hence, to identify the most relevant parameters for this use-case, the correlation coefficient technique and some traditional ML algorithms were used for different parameters of the dataset.

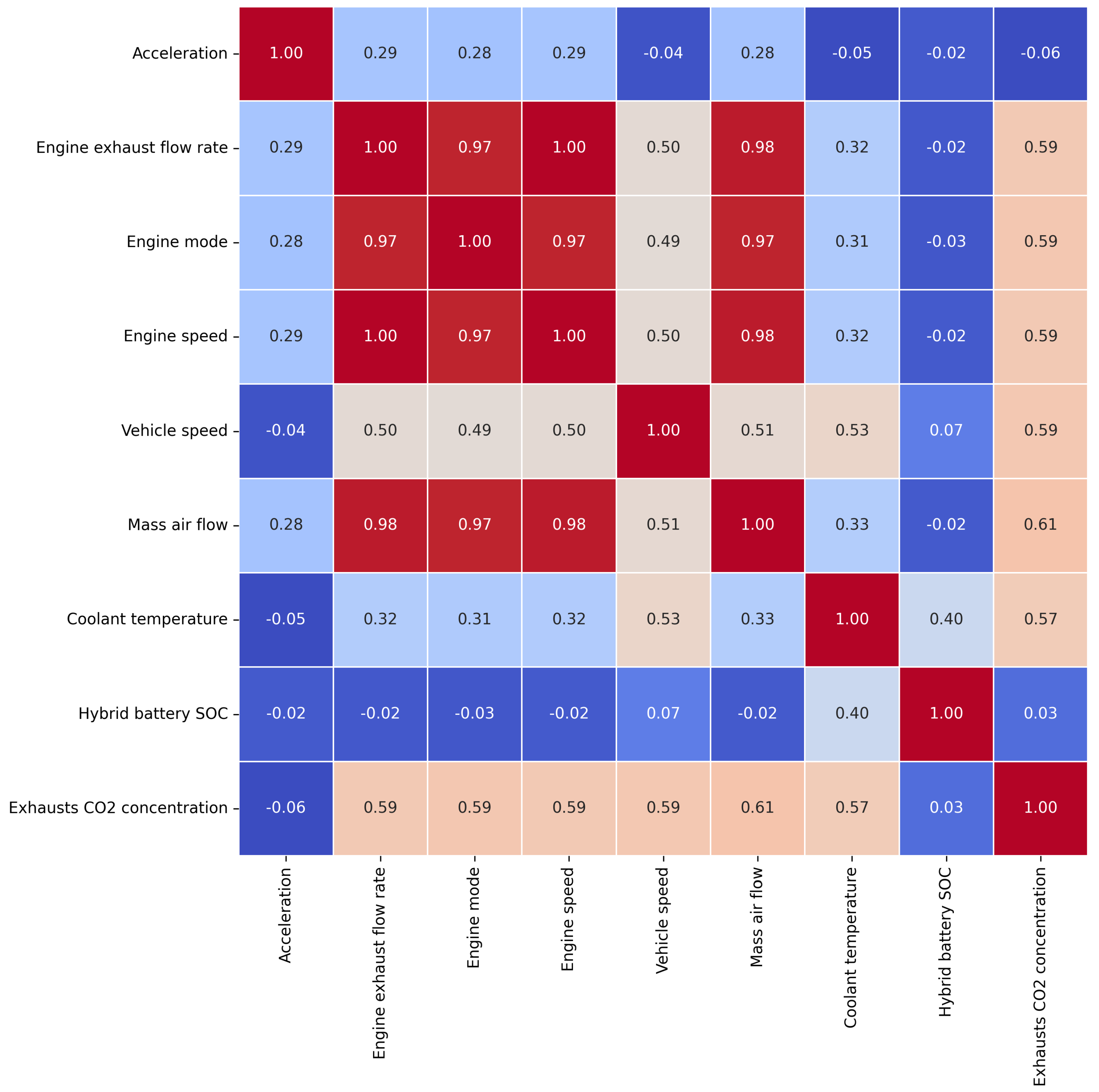

2.4.1. Correlation Matrix

The first attempt to identify the most useful parameters was to calculate their correlation coefficients. Firstly, a correlation matrix was created with the entire set of parameters described in

Section 2.3. The technique implemented was the Spearman-based correlation matrix, which provides a correlation coefficient based on the statistical dependence of ranking between two variables and covers non-linear relationships. The parameters with a high correlation score among them were eliminated, and the ones with a high correlation with the CO

2 concentration were kept. Based on the observations seen in the correlation matrix, the parameters accelerator position, external atmospheric pressure, engine load, distance travelled since vehicle startup, drive mode, EV mode status, engine power delivered, electric motor power delivered, electric motor revolution, electric motor torque and altitude were removed, since they were considered less influential for the prediction. In addition, GPS coordinates of the car, i.e., latitude and longitude, were also discarded from the dataset, since the location of the car should not be a decisive factor in the prediction. As a result,

Figure 3 presents the correlation matrix featuring the eight final selected input parameters and the output parameter to be predicted. Moreover, the resulting input parameters are presented and listed in

Table 2.

2.4.2. ML-Based Feature Selection

To further evaluate the impacts of the remaining input parameters, 255 different combinations of input values (the combination for 8 input values) were used to train and test each ML model to assess the adjusted R2 accuracy level achieved. Three distinct traditional ML algorithms were selected: linear regression (LR), random forest (RF) and gradient boosting regression (GBR).

Table 3 illustrates the top 4 combinations of input parameters that showed the best result for each ML algorithm tested. As seen, for both RF and GBR, the set of parameters that achieved the highest scores were: acceleration, hybrid battery SOC, vehicle speed, engine mode, coolant temperature and engine speed, leaving behind mass air flow and engine exhaust flow rate. However, LR showed that using all the values yielded the best score. In this case, as the RF and GBR obtained substantially higher accuracy than LR, the combinations of input parameters indicated by the best GBR and RF scores were chosen as candidates for the set of most influential parameters. As a result, two different set of input parameters were defined and used in the following stages of this research:

Input set 1. Features 8 input parameters: acceleration, hybrid battery SOC, vehicle speed, engine mode, coolant temperature, engine speed, mass air flow and engine exhaust flow rate.

Input set 2. Features 6 input parameters: acceleration, hybrid battery SOC, vehicle speed, engine mode, coolant temperature and engine speed.

2.5. Final Dataset

A total of 70,683 samples were collected from 235 min of driving. Three heterogeneous road scenarios are included in this dataset: motorway roads, urban roads and intercity roads; all of them were recorded at different times of the day. The classification of the samples in each road was done manually based on the GPS coordinates of the vehicle at each moment. As is common, 80% of the samples were employed for the purpose of training (56,546 samples in total), and the remaining 20% (14,137 samples in total) were used for validation and testing (10% and 10% respectively).

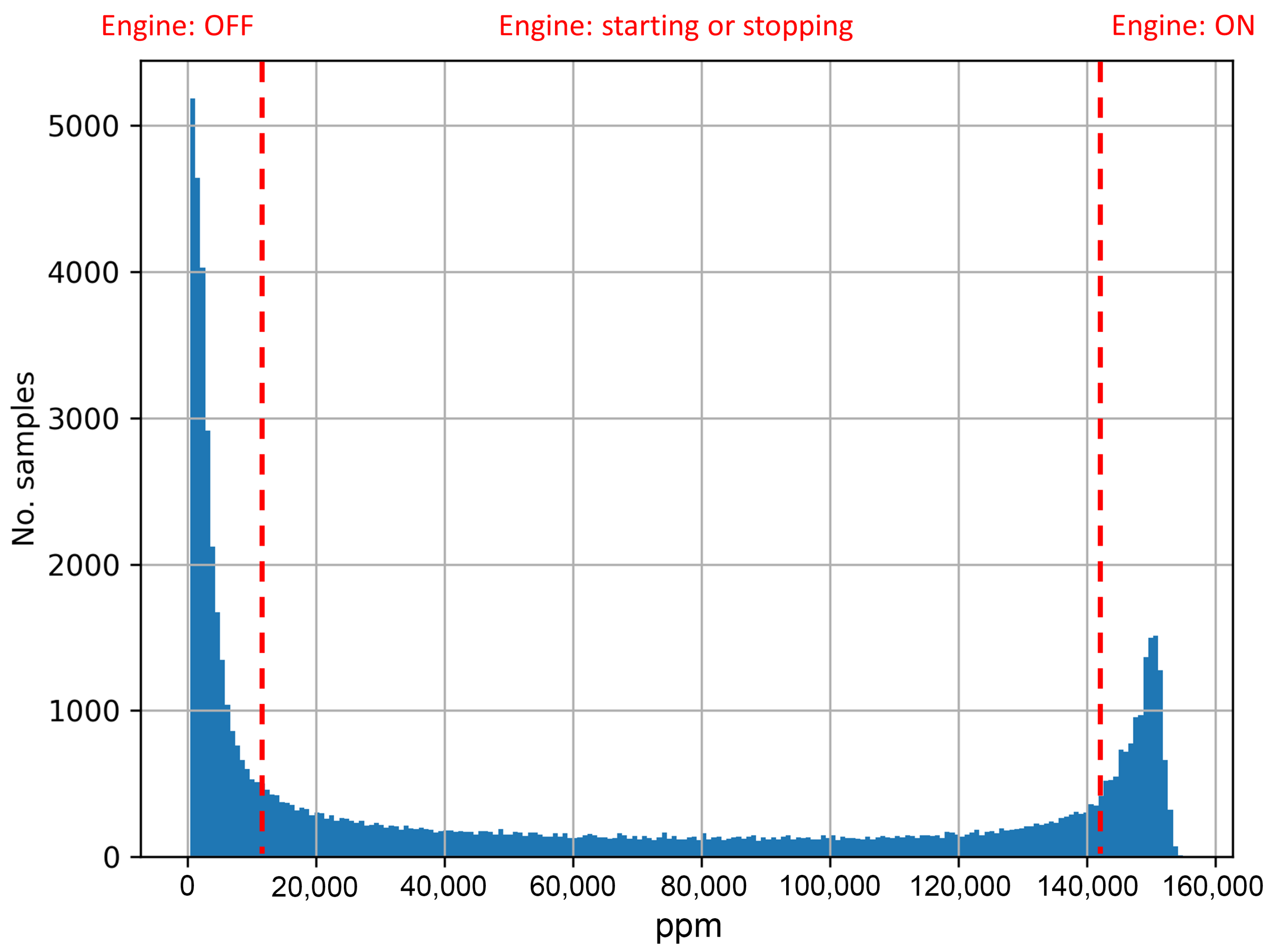

The distribution of CO

2 concentration in ppm in the dataset is represented in

Figure 4. As is apparent, most of the samples in the dataset lie between the minimum and the maximum concentration values ppm. To the best of our knowledge, no other studies in the literature used the CO

2 concentration emitted by HVs in combination with the vehicle’s operational performance indicators. In other datasets mentioned in the literature, the ppm concentration remains either constant when using a petrol engine or fluctuates within a limited range (usually between 80,000 and 120,000 ppm) when using a diesel engine [

20,

21]. With a hybrid engine, this is distributed along the entire range of possible values from 400 to approximately 155,000 ppm, generating the U-shaped distribution presented in

Figure 4. This graph shows two peaks being captured over the data collection. First, a large portion of low-ppm measurements are captured when the engine is turned off (53.73%). Secondly, a high portion of high-ppm measurements are captured when the engine is on (38.41%). The remaining values correspond to switch-overs from the electric mode to the hybrid mode and vice versa. Therefore, these data are minimal, since it only takes a few seconds to make the transition (1.52% and 6.32% of the total dataset when the engine is starting and stopping, respectively).

The high frequency of switch-overs recorded during the drives carried out, which is very usual and normal behaviour in HV, and a non-constant CO2 concentration data distribution between both ends of the data, indicate the complexity and uniqueness of the dataset. This rapid change in concentration values during the switch-overs can be challenging for traditional ML algorithms. For this reason, more complex prediction mechanisms techniques were developed and used in this research, this being one of the main contributions of this manuscript.

Dataset Preprocessing

For training and validation, the complete dataset was standardised to prevent inputs with higher values from dominating those with the smaller values. To this end, formulae described by Equation (

1) were applied to the dataset, where

describes the standardised value of a sample

i,

describes the original value of a sample

i,

represents the mean value of the this parameter in the dataset, and

is the standard deviation of this parameter in the dataset.

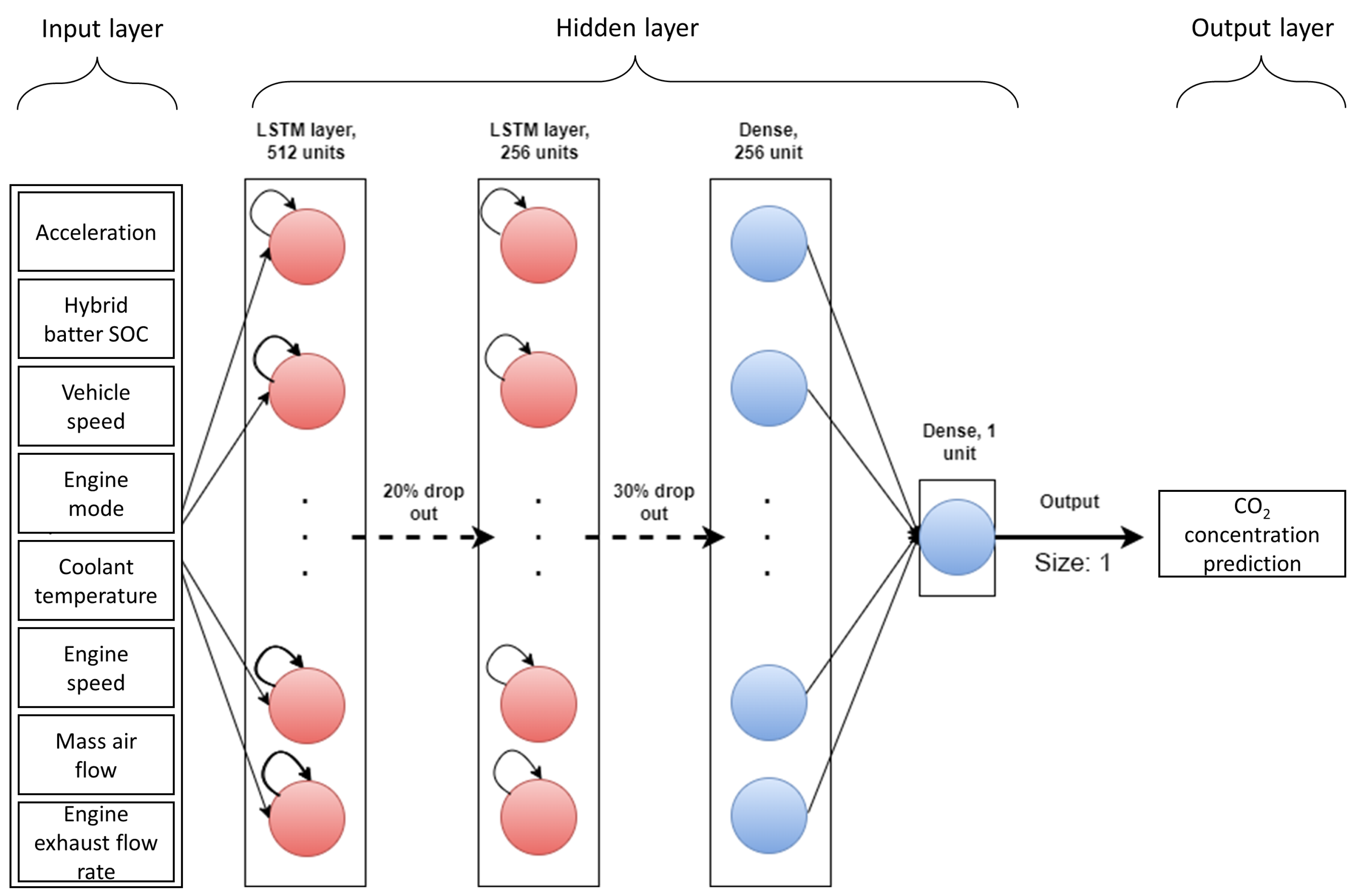

2.6. Proposed LSTM

The proposed UWS-LSTM comprises three primary components: the first component is the input layer, which includes the most effective input parameters mentioned in

Section 2.4.2. The second component is the hidden layers comprising two LSTM layers with 512 and 256 neurons in each LSTM cell. In this regard, a hierarchical structure of stacked LSTM layers has been shown to outperform single-layer LSTM models [

22]. A drop out layer is also used after each LSTM layer with the probabilities of

p = 0.2 and

p = 0.3, respectively, to control the overfitting [

23]. The second component also comprises two dense layers with 256 and 1 neurons, respectively. The third components is the output layer which outputs the CO

2-concentration prediction as a single value.

Figure 5 illustrates the architecture of the proposed algorithm, which outperforms the other algorithms mentioned in this article.

One of the advantages of using an LSTM model in comparison with the ANN model is that the former can be parameterized in terms of the amount of information that is taken into consideration from previous states. This term is often referred to as the lookback window. This parameter determines how many previous timesteps are considered to perform the current prediction. In order to figure out the influence of this parameter in the accuracy of the LSTM model, a process of model training and validation was conducted with a set of different lookback windows to compare the obtained scores. Further results can be observed in

Section 3.1.1.

2.7. Hyperparameters

This subsection gathers the information regarding the configurations for the ML models studied in this work.

Table 4 reflects the hyperparameter configurations. It is worth mentioning that for optimising the hyperparameters defined in the ANN model, a series of Bayesian optimisation iterations were conducted using keras-tuner.

2.8. Execution Environment

The complete prediction system was deployed in a real operating vehicle and tested on public roads to validate its performance under realistic conditions. The vehicle used was a Toyota Prius Plug-in Hybrid (2019). This vehicle features a 4-stroke, 4-cylinder 1798 cc gasoline engine that can output 90 kW of power. The maximum torque achieved is 142 Nm at 3600 RPM. As per the emissions, the vehicle is categorised under the Euro-class scheme as a Euro 6 DG type; according to official manufacturers information, the vehicle emits 28 g/km of CO2 (WLTP test).

The computing platform chosen was a Nvidia Jetson Xavier. This board is an off-the-shelf, low-consumption Linux-based system with a 512-core Volta GPU with Tensor Cores which runs at more than 21 tera operations per second (TOPS) and has 32 GB of memory. Different power consumption modes are supported: 10 W, 15 W and 30 W. Its portability and its power capability made it suitable for use cases to deploy our CO2-concentration predictor.

All the ANN-based algorithms were implemented and executed on TensorFlow 2. TensorFlow [

24], which is a state-of-the-art ML platform that is fully compatible with Nvidia compute unified device architecture (CUDA); therefore, it was the selected platform for implementation and execution of the algorithms in Jetson Xavier. On the other hand, the python package Scikit-learn was also used to perform the predictions with the traditional ML algorithms due to it is simplicity and efficiency.

3. Results and Discussion

In this section, we present and analyse the results obtained from the evaluations of the ML models outlined in previous sections of the manuscript. The results are presented in two forms: quantitative and qualitative. The quantitative results consist of a set of numerical measurements, and the qualitative results pertain to the implementation and performance of the CO2 predictor within an actual vehicle environment.

3.1. Quantitative Results

The quantitative accuracy of the predictions was measured by calculating the errors given by adjusted R

2, mean absolute error (MAE), mean square error (MSE) and root mean squared error (RMSE), described by Equations (

2)–(

5), respectively. In these equations,

refers to the ordinary R-squared formula,

n is the number of data points,

p is the number of predictor variables in the model,

is the true value of the

ith observation and

is the predicted value of the

ith observation.

3.1.1. LSTM Lookback Window

As previously mentioned in

Section 2.6, a key hyperparameter to be defined for LSTM-based models is the lookback window.

Table 5 presents the different results in terms of accuracy after training and validating the UWS-LSTM model with different lookback window sizes. In addition, we presented in

Section 2.4.2 that two particular input parameters yielded different scores depending on the ML model used. For this reason, these input parameters were part of the analysis to determine the best LSTM configuration.

As observed in the results, configurations with smaller lookback window sizes, such as 1, 2 or 4, performed inferiorly. However, there was a significant increase in accuracy as the window size increased: an improvement of approximately 25% when using both input parameter sets, when comparing a window size of 8 to 16. Notably, the highest accuracy was achieved with a window size of 32 and input parameter set 1: an adjusted R2 score of 0.975, surpassing the same window size with input parameter set 2, which had a score of 0.9702. This illustrates that a sufficiently large window size can substantially enhance the accuracy of the model. Moreover, the selected input parameters for the training have effect on the results. Thus, input parameter of set 1 scored better results for every lookback window size configuration.

Nonetheless, it is also known that higher lookback windows demand higher computational resources. Since the aim of this research was the deployment of the developed model in a low-powered device, no bigger window sizes were considered for this study.

3.1.2. Accuracy

Table 6 shows the accuracy obtained for each ML model. It is very important to clarify that, due to the fact that LSTM-based models are naturally designed to be trained (and executed) following a time-based order, for the sake of a fair comparison among all the models, the other 4 ML algorithms were trained following the same procedure, i.e., without shuffling the testing dataset.

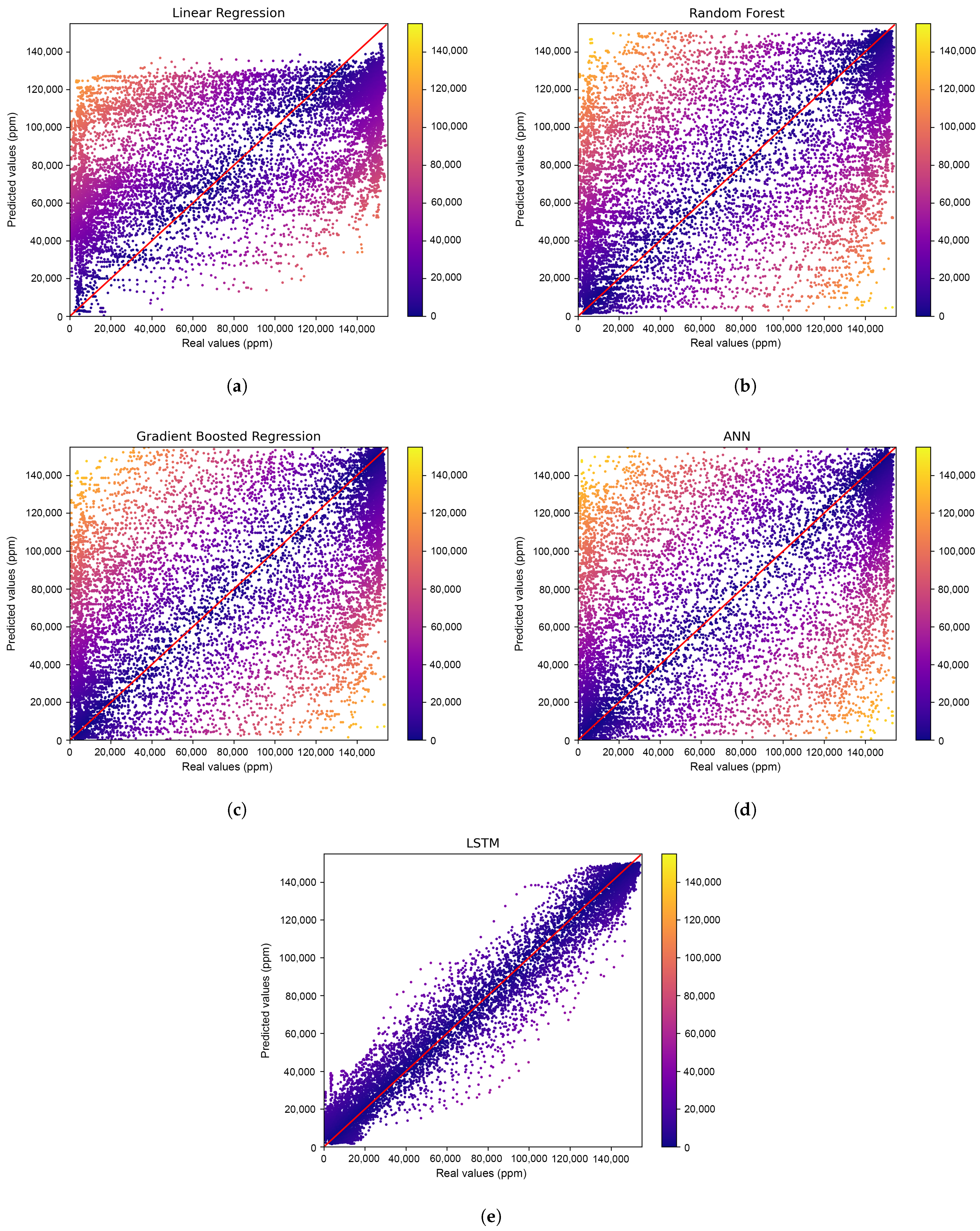

Figure 6 shows the prediction results of the models. The figures show the real values against the predicted values in ppm. In addition, the results are colour-coded based on the differences between these two values.

As evident in

Table 6, the performance of the LR model was the lowest among all the ML models evaluated, with scores of 0.4214, 0.6566, 0.6021 and 0.7759 for adjusted R

2, MAE, MSE and RMSE, respectively. This is also reflected in

Figure 6a; a high degree of dispersion can be observed throughout the plot. In contrast, RF, GBR and ANN models exhibited similar accuracy levels. Furthermore, the distribution of the results, as seen in

Figure 6b–d, illustrates a more accurate prediction on the edges of the dataset’s distribution, which correspond to moments when the engine was either started or stopped. However, a more dispersed result can be observed in all three models when predicting values associated with power-train switch-overs. This serves as clear evidence of how the presence of power-train switch-overs hinders the ability of these three models to predict the CO

2 concentration in hybrid vehicles (HVs). Specifically, for RF, the scores obtained were 0.4645, 0.5507, 0.5572 and 0.7465 for adjusted R

2, MAE, MSE and RMSE, respectively. For GBR, the scores were 0.4597, 0.5555, 0.5622 and 0.7498 for adjusted R

2, MAE, MSE and RMSE, respectively. For ANN, the scores obtained were 0.4639, 0.5346, 0.5577 and 0.7468 for adjusted R

2, MAE, MSE and RMSE, respectively.

Ultimately, the proposed UWS-LSTM algorithm demonstrated superior performance in comparison to the other evaluated methods, as evidenced by its adjusted R

2 value of 0.975 and its MAE, MSE, and RMSE values of 0.1135, 0.0261, and 0.1616, respectively. Additionally, as demonstrated in

Figure 6e, the LSTM algorithm shows exceptional accuracy not only at the edges of the data distribution, but also in the middle values corresponding to engine switch-overs, as depicted in

Figure 4. This highlights the potential of LSTM-based models in predicting more complex non-linear relationships by utilising previous states.

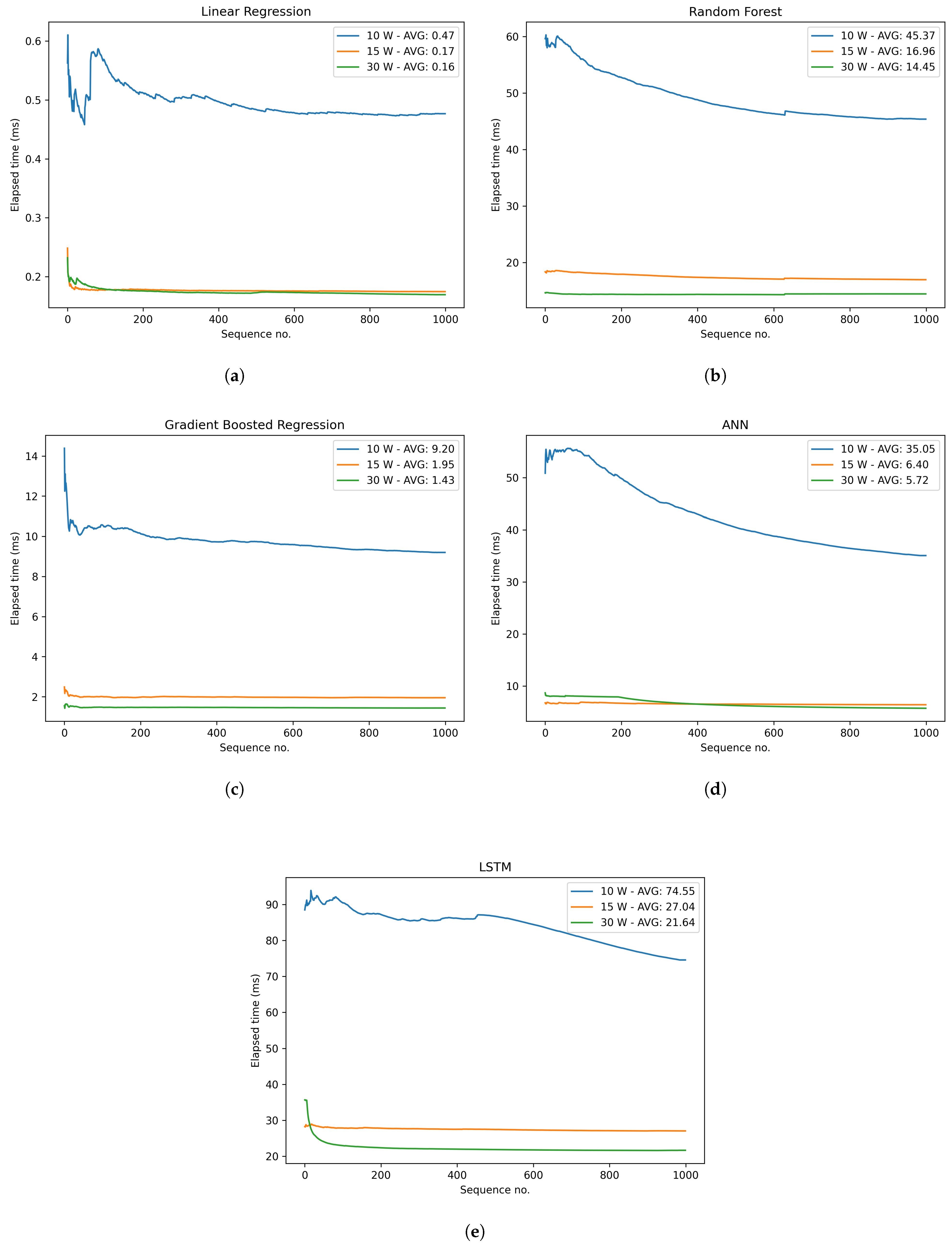

3.1.3. Latency

This subsection focuses on the experiments carried out after deploying and applying our predictive model in TensorFlow in the Nvidia Jetson board described in

Section 2.8. In addition to the proposed UWS-LSTM model, LR, RF, GBR and ANN were studied to compare the different latency levels and assess the trade-offs between speed and accuracy. All these results were obtained by setting the Nvidia Jetson Xavier on different power modes: 10 W, 15 W and 30 W.

The results in

Figure 7 are presented in milliseconds, which shows the cumulative average inference time for the first 1000 iterations. The cumulative average inference time was calculated following Equation (

6), where

x is the inference time at a time step

i and

t is the current time step. The inference time was calculated, since the input was fed to the algorithm until the results were provided in the output. Thus, 15 experiments were carried out to evaluate five algorithms in three different power modes. As LR, RF and GBR models were implemented with the Scikit learn package and not with TensorFlow, their execution was carried out by the CPUs available on the device. On the other hand, the TensorFlow framework was used to implement neural network-based algorithms, so they were compatible with the Jetson’s GPU.

First the performance levels of traditional ML algorithms, including LR, GBR and RF, were analysed and compared. The results, as depicted in

Figure 7a–c, indicate that the LR algorithm performed with the lowest average cumulative latency of 0.16 ms per iteration. GBR exhibited a slightly slower performance, with an average execution time of 1.43 ms per iteration under a 30 W power consumption. On the other hand, RF algorithm demonstrated the highest average latency of 14.45 ms among the three traditional ML algorithms. Furthermore, ANN and LSTM models, as presented in

Figure 7d,e, respectively, both exhibited slower average performance when operating at 30 W. Specifically, the ANN-based model recorded an average execution time of 5.72 ms, whereas the LSTM-based model took 21.64 ms on average. These results suggest that traditional ML algorithms generally exhibit faster performance compared to ANN and LSTM models. However, as seen in previous sections, there is a trade-off between speed and accuracy, which is especially notable with the UWS-LSTM model.

Additionally, as observed in every graph, there was a significant improvement in terms of speed when the Nvidia Jetson board was configured to operate at 15 W in comparison to 10 W. Oppositely, changing from 15 to 30 W did not reveal a remarkable enhancement in terms of latency.

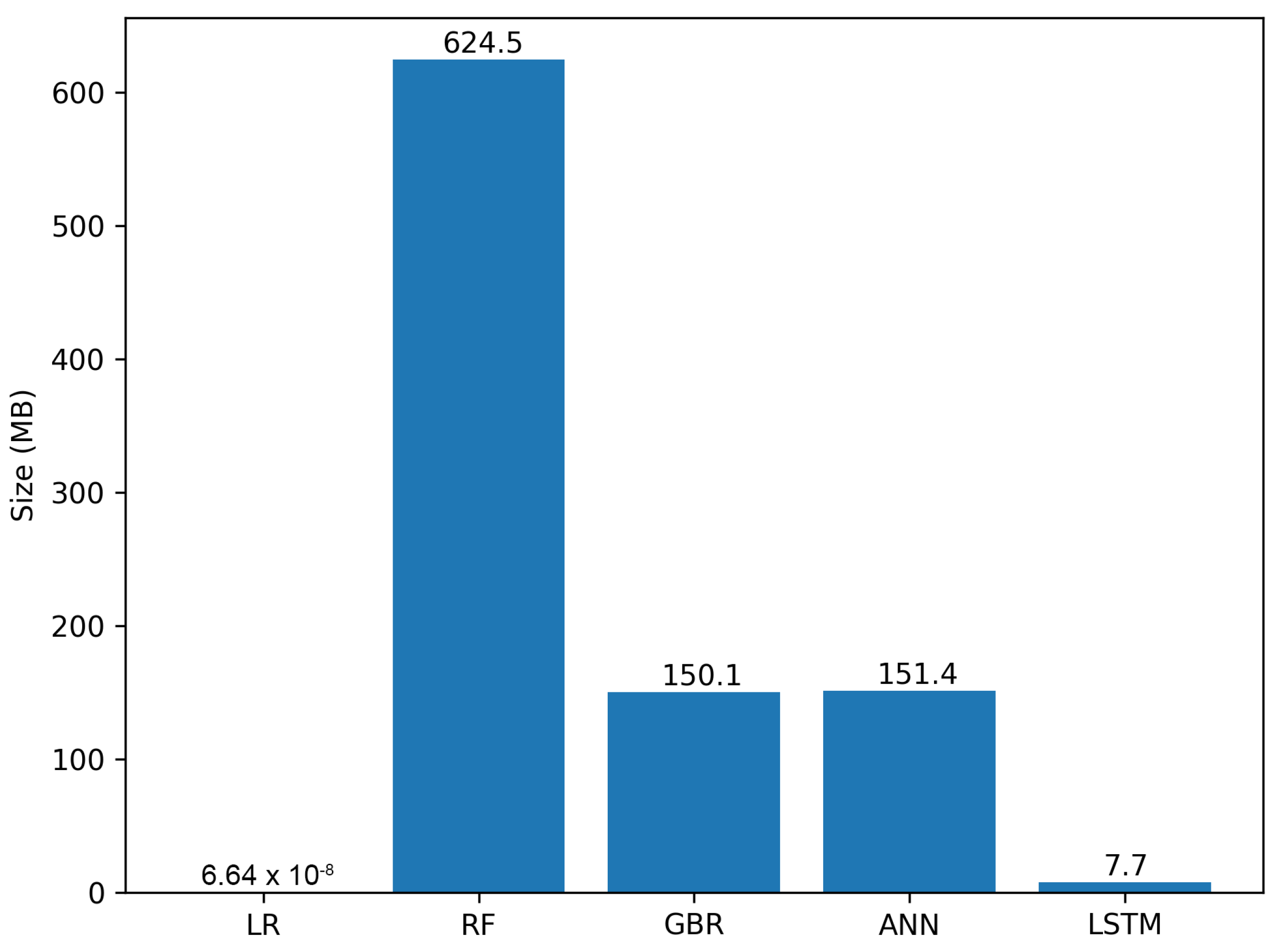

3.1.4. Model Size

In an IoT setting, the size of a machine learning model is a crucial consideration. Due to the limited storage and computational resources of IoT devices, models with smaller sizes are typically more favourable, as they can be more easily exported and deployed on these devices. In this context, the UWS-LSTM model, with a size of 7.7 MB, can be considered relatively lightweight and well-suited for IoT applications. As depicted in

Figure 8, among the five models presented, only the LR model had a smaller size than the UWS-LSTM model. However, as previously established through the analysis of other performance metrics, the LR model dud not present an appropriate trade-off between model size and accuracy. Thus, the UWS-LSTM model emerged as the most promising candidate for deployment on IoT devices.

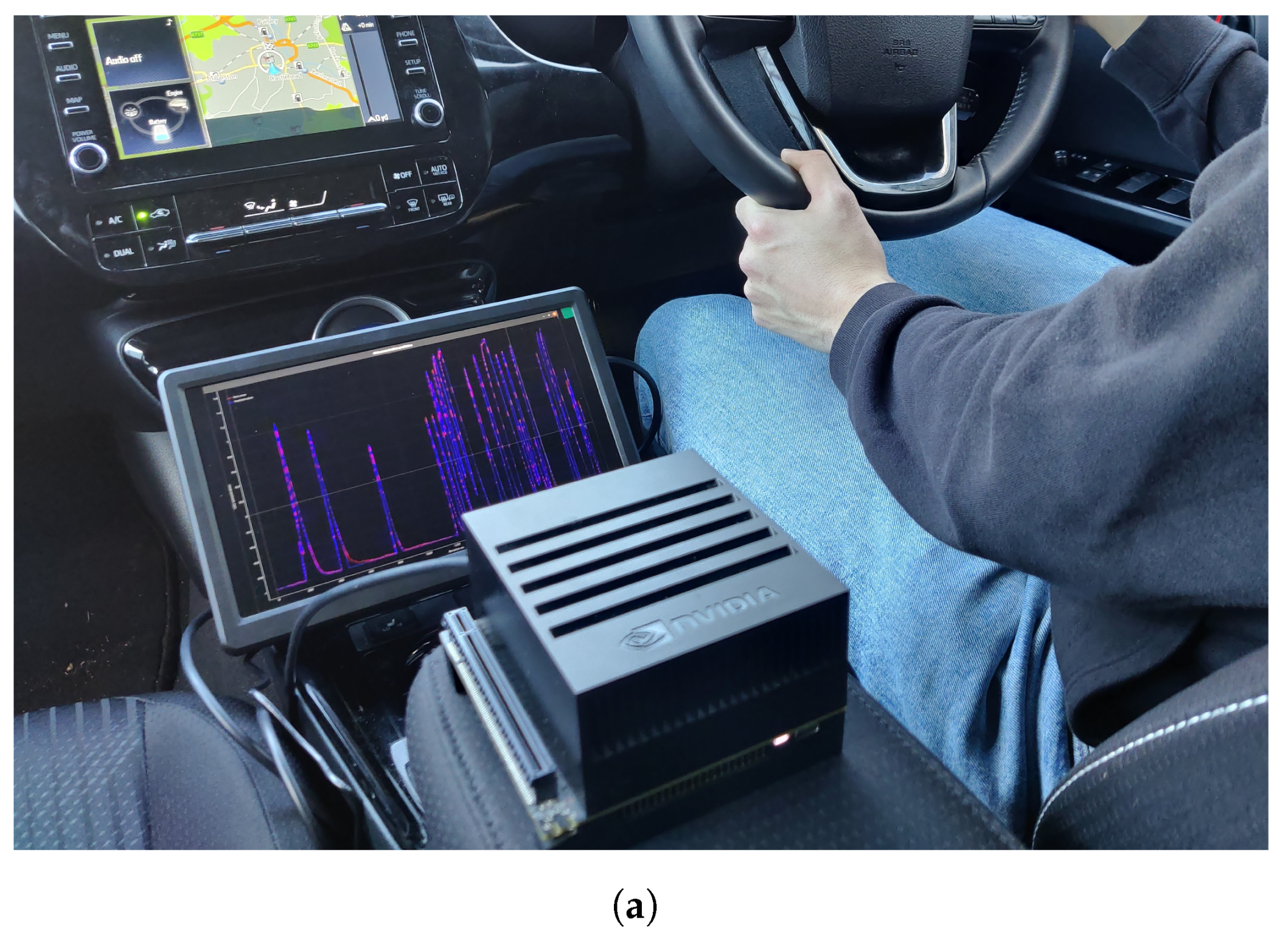

3.2. Qualitative Results

The UWS-LSTM model was instantiated and installed within a real scenario to prove the potential of its use under realistic scenarios. The Nvidia Jetson was set up in the Toyota Prius car described in

Section 2.8. The installation can be seen in

Figure 9a, where the display shows the real-time execution and CO

2 measurement during the evaluation.

Figure 9b presents the predicted concentration against the real CO

2 values captured by the sensors during the installation and evaluation process. As can be seen, the prediction results fit to a great extent the real values, including when the switch-overs were performed.

During the on-board evaluation, the real-time predictions were shown on a display. However, IoT devices represent an ideal platform for remote monitoring, thanks to their easy integration with communication protocols over wireless networks, leveraging an incipient solution for remote ML-based emissions monitoring.

3.3. Discussion

In this paper, we have presented the design, implementation, training and validation of a LSTM-based ML model for prediction of CO2 concentration for HVs. Initially, a data gathering process was conducted with the aim of collecting features of a real-world HV and its CO2 emission concentrations. This dataset is the only of its kind, to the best of our knowledge, since it contains over 70,000 of more than 20 operational parameters and CO2 emission concentrations of a HV during 235 min of driving in different scenarios, regardless of the power-train source (i.e., data were collected when the engine was started and stopped).

To determine the relevance of the gathered data, we performed an input feature selection by calculating the correlation score among the dataset parameters and evaluating 765 different combinations of training with different input features in three traditional ML algorithms. This resulted in two sets of parameters, one with six parameters and another with eight parameters, which was better for each different algorithm, showcasing that no specific input parameter set can be foreseen as optimal for every ML model. This input feature selection procedure allowed identifying the most relevant parameters for predicting emissions and eliminating irrelevant or redundant data, which proved to improve the performance in the assessed models.

Moreover, we have designed and evaluated the proposed ML model, UWS-LSTM, for predicting CO

2 emission concentrations in a HV. The results showed that this model outperformed other ML mechanisms (achieving 97.5% accuracy level), especially with high complexity of the labelling distribution observed during power-train switch-overs carried out by the HV (see

Figure 4). This makes the UWS-LSTM model a valuable tool for researchers studying emissions in HVs, where the use of the engine is not constant, and therefore, the CO

2 concentrations fluctuate greatly over time. In terms of the computational cost derived from the training and validation processes, the proposed UWS-LSTM was found as a relatively inexpensive and quick solution with 7.7 MB in size and 21.64 ms of inference time. The proposed model took an average of 30 min for training when utilising a dedicated GPU. It is hard to make a comparison with other approaches seen in the literature about the computational costs implied in the process, since this information is usually not disclosed by the authors.

Finally, we have implemented the UWS-LSTM model in a low-powered IoT device installed in the same vehicle where we gathered the data (see

Figure 9). An evaluation of its performance with three different power settings was conducted as recorded to measure the speed. As observed, the UWS-LSTM reached a minimum average execution time of 21.64 ms, which was considerably more than the second best ML algorithm (GBR with 1.43 ms). Nonetheless, as for the model sizes, the proposed UWS-LSTM was the second most lightweight model, being 7.7 MB; the other three most accurate models were 150.1, 151.4 and 624.5 MB, respectively. Overall, the proposed UWS-LSTM seems to better fit the performance and weight requirements for being implemented in low-resource environments.

In conclusion, the work presented in this study would make an important contribution to the field of HV emission concentration prediction. The large collected dataset, the effective input feature selection and the performance of the UWS-LSTM model make it a valuable resource for researchers in the area of ML-based pollution prediction, which can greatly help the development of new cost-effective emission-aware driving strategies. Moreover, thanks to the use of IoT devices, this solution can potentially facilitate the transmission of CO2 emission concentrations over wireless networks.

Despite the provided results proving the capacity of the model to accurately predict the concentrations with a decent extent of success, we are aware of the shortcomings in execution time. Therefore, further research will be conducted towards reducing the execution time for the proposed model.