Abstract

The undeniable computational power of artificial neural networks has granted the scientific community the ability to exploit the available data in ways previously inconceivable. However, deep neural networks require an overwhelming quantity of data in order to interpret the underlying connections between them and, therefore, to complete the specific task they have been assigned. Feeding a deep neural network with vast amounts of data usually ensures efficiency but may harm the network’s ability to generalize. To tackle this, numerous regularization techniques have been proposed, with dropout being one of the most dominant. This paper proposes a selective gradient dropout method which, instead of dropping random weights, learns to freeze the training process of specific connections, thereby increasing the overall network’s sparsity in an adaptive manner by driving it to utilize more salient weights. The experimental results show that the produced sparse network outperforms the baseline on numerous image classification datasets and that these results were obtained after significantly fewer training epochs.

1. Introduction

In recent years, artificial neural networks have demonstrated undeniable efficiency in carrying out tasks such as image detection, action recognition, and compression, rendering their implementations almost exclusive candidates for problem solving in such domains. In conjunction with the technological advancements in computational power and data handling, faster and more accurate networks are constantly designed, based on dense, recursive architectures that are able to analyze more complex data. The efficiency of deeper networks lies in the fact that these networks feature significantly more trainable parameters than shallow networks, making them extremely flexible in interpreting diverse input data. Although this is a major advantage, the excessive adaptation of a network’s neurons and synapses to the available data establishes a risk of overfitting, and thus can render the model unable to generalize.

In order to exploit the performance of very complex networks and concurrently ameliorate this inherent adversity, several regularization techniques have been proposed, such as cross validation [1,2], boosting [3], bagging [4], data augmentation [5,6,7], early stopping [8], and weight decay [9]. One of the most efficient, effective, and therefore popular regularization techniques is dropout [10], which tackles overfitting by randomly removing nodes during training, with no additional computational overhead. Dropout benefits the network by randomly adding noise to its hidden units, forcing the loss descent path to change frequently and avoid settling in a local minimum. Dropout essentially prevents the co-adaptation of activations, so that a network’s hidden units detect features independently of each other. Although this method usually ensures better model performance, it comes at the cost of a far longer convergence time. Randomly changing the network’s loss descent path can multiply the necessary training time, as the model seeks a general representation that fits the given input, using varying parts of its components at each iteration. More specifically, for a network with t parameters, if all of them are considered eligible for dropout, the number of possible loss descent paths would be $2^t$.
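The exponential growth in candidate sub-networks can be checked by brute force for small t. The sketch below assumes that each of the t parameters is independently either kept or dropped at a given step, so that every binary keep/drop pattern is a distinct candidate; `count_dropout_masks` is a hypothetical helper name used only for illustration.

```python
from itertools import product


def count_dropout_masks(t: int) -> int:
    """Count binary keep/drop masks over t droppable parameters by enumeration."""
    return sum(1 for _ in product((0, 1), repeat=t))


# Enumeration agrees with the closed form 2**t for small t.
for t in (1, 2, 3, 10):
    assert count_dropout_masks(t) == 2 ** t

print(count_dropout_masks(10))  # 1024
```

Even at t = 10 there are already 1024 patterns, which illustrates why random exploration of this space can lengthen training.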

This paper proposes a learnable adaptive training method that aims at producing efficient models that are able to generalize accurately and fast. Extending the standard random dropout technique, the proposed method freezes a part of the network’s parameters based on the input information on every training step by zeroing targeted gradients. The selection of gradients to be switched off is carried out by an ensemble of networks with trainable parameters. The proposed method was evaluated by conducting experiments on popular public image classification datasets, showing that a simple vanilla network with dropout is outperformed by its modified learned sparse version.

The incentive behind the presented work is to enhance the standard behavior of the dropout mechanism. The proposed method achieves this by selecting which weights and gradients are frozen in each training step, instead of indiscriminately dropping potentially important components of the network, which is the essence of the vanilla dropout technique.

The remainder of this paper is organized in four sections. Section 2 presents prior publications in related scientific domains. Section 3 details the idea and implementation of the presented method. Section 4 is an ablation study of the performed experiments and analyzes the results. Finally, Section 5 concludes the paper.

2. Related Work

Recently, numerous works have focused on tackling the overfitting problem that occurs when complex artificial networks are implemented. Variations and extensions of the dropout mechanism have been proposed, such as DropConnect [11], which induces network sparsity by randomly dropping the weights of a fully connected layer instead of its activations’ output vectors. In [12], the authors propose a regularizing method that, when applied to very complex residual networks, randomly drops fractions of layers. This approach aims at sparsifying the network during training while retaining its complexity at test time, thus boosting generalization without sacrificing performance. DropBlock [13] is a generalization of Cutout [14], a data augmentation method where random square regions of an image are masked out to improve performance and robustness on object occlusion examples. In DropBlock, the authors apply this method to the feature maps of convolution filters, aiming at better generalization of such layers with spatial structure.

Other papers aim to improve the generalization ability of multi-branch networks by blocking possible co-adaptation between parallel branches or paths, either by randomly dropping network fractions, as in [15,16], or by modifying activation functions, as in [17,18]. In other works, the dropout mechanism is extended, such as in Maxout [19], which introduces a new layer that essentially generalizes the rectified linear unit (ReLU) and leaky ReLU functions and exploits the averaging properties of dropout. In [20], instead of masking out weights, a random gradient regularization mechanism is introduced, injecting noise into the gradients in order to improve model generalization.

All mentioned dropout variations are based on randomly injecting noise into either weights, activations, or gradients. During training, the weight, activity, or gradient of a hidden unit is set to zero with a fixed probability using samples from a Bernoulli distribution. In contrast, approaches such as [21] are closely related to the presented work, as the probability of a unit being dropped is not random but strongly related to the candidate unit’s inputs and activation. A similar intuition was followed in [22], where units and weights were ranked using an approximate rank of importance. The ambition of that paper was to reduce the dependency between the important and unimportant features of a network, i.e., to maximize the mutual information between units in the same layer, so that the impact of dropping a unit is minimal. In [23], the authors proposed a learning-rate dropout mechanism similar to the original dropout approach; upon each step, a random unit’s learning rate drops to zero, thereby temporarily disabling its training. Although the main concept of that work is closely related to the present paper’s idea, the two differ in one important aspect: that work freezes the network’s nodes at random, whereas the present method selectively masks the unimportant units.
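The Bernoulli masking shared by these random variants can be sketched in a few lines. The example below is a minimal illustration, not the implementation of any cited method: it assumes the commonly used "inverted" formulation, in which surviving values are rescaled by the keep probability, and `bernoulli_dropout` is a hypothetical helper name.

```python
import random


def bernoulli_dropout(values, drop_prob, rng):
    """Zero each entry with probability drop_prob and rescale the survivors.

    The rescaling by 1 / keep_prob (inverted dropout) is an assumption made
    here so that the expected value of each entry is unchanged.
    """
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    keep_prob = 1.0 - drop_prob
    out = []
    for v in values:
        keep = rng.random() < keep_prob  # one Bernoulli(keep_prob) draw per unit
        out.append(v / keep_prob if keep else 0.0)
    return out


rng = random.Random(0)  # fixed seed for reproducibility
activations = [1.0] * 10_000
dropped = bernoulli_dropout(activations, drop_prob=0.5, rng=rng)
zero_fraction = dropped.count(0.0) / len(dropped)
print(f"fraction of zeroed units: {zero_fraction:.3f}")
```

Because every unit is treated identically, the mask carries no information about which units matter, which is the property the selective approaches discussed above try to improve on.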

3. Proposed Method

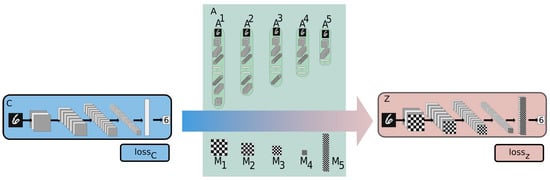

The proposed approach is an end-to-end trainable architecture, as can be seen in Figure 1, established on a continuous three-way communication channel between its components: a core network C with its set of parameters (weights and biases) denoted as $\theta_C$, a modified network Z with parameters $\theta_Z$, and a set of auxiliary networks, $A$, comprising networks $a_l$, one for each layer $l$ of C.

Figure 1.

Example of the proposed method. The auxiliary network ensemble A, comprising networks $a_l$, is responsible for providing each layer of network C with a binary mask $M_l$, which controls which parts of the layer will be trained. The applied masks’ performance is evaluated on the next forward pass, and, if the proposals are accepted, the training procedure continues with the modified version of C, Z.

We index the layers of a network U as $l = 1, \dots, L_U$, with $L_U$ representing the total number of layers in a network U. Although each network is independently trained, backpropagated, and optimized for a specific objective, the information exchanged between them is crucial for the maximal exploitation of the salient weights of network C.

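On each training step, C represents a deep neural network that aims to minimize the cost function:

$$J(\theta_C) = \frac{1}{T} \sum_{i=1}^{T} \mathcal{L}\left(y_i, \hat{y}_i\right) \tag{1}$$

where i indexes the input, T is the cardinality of the training dataset, $y_i$ is the ground truth value, and $\hat{y}_i$ the model’s estimation.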

The ensemble of auxiliary networks, $A$, is responsible for providing C (Figure 2) with a set of binary masks, $M_l$. In order to bolster the usage of salient features for each layer of C, the proposed method implements a convolutional variational autoencoder network for each auxiliary subnetwork, $a_l$. More information on the architecture of each network can be found in Section 4. First, the weights of layer $l$ are encoded to a lower dimension by the respective encoder of $a_l$, and then the decoder side attempts to reproduce them while trying to ignore less important weights. The generated representations are the blueprint from which the desired binary masks are extracted. The binary value, $m_n^l$, of a neuron n contained in layer $l$ is decided by taking into account the neuron’s absolute gradient value, $|g_n^l|$, after back propagation, compared to the strongest absolute gradient value per layer, so that

$$m_n^l = \begin{cases} 1, & \text{if } |g_n^l| \geq p_l \cdot \max |G_l| \\ 0, & \text{otherwise} \end{cases} \tag{2}$$

where $p_l$ represents the masking threshold for each layer $l$ of C, and $G_l$ represents the gradient matrix of layer $l$. A higher masking threshold implies that the masking mechanism will be more aggressive towards that specific layer. The effects of the threshold can be seen in Figure 3.
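The thresholding rule described above can be illustrated with a small sketch. It assumes that a unit is kept (mask value 1) when its absolute gradient reaches the fraction p of the layer's strongest absolute gradient, and that p lies in [0, 1]; the function name `threshold_mask`, the 2D list layout, and the sample values are all hypothetical.

```python
def threshold_mask(grads, p):
    """Binary mask for one layer: 1 where |g| >= p * max|g|, else 0.

    grads: 2D list of gradient values for the layer (hypothetical layout).
    p: masking threshold, assumed to lie in [0, 1]; a larger p freezes more units.
    """
    strongest = max(abs(g) for row in grads for g in row)
    cutoff = p * strongest
    return [[1 if abs(g) >= cutoff else 0 for g in row] for row in grads]


layer_grads = [[0.50, -0.02, 0.10],
               [-0.25, 0.01, -0.40]]

mask_low = threshold_mask(layer_grads, p=0.1)   # cutoff 0.05
mask_high = threshold_mask(layer_grads, p=0.6)  # cutoff 0.30

print(mask_low)   # [[1, 0, 1], [1, 0, 1]]
print(mask_high)  # [[1, 0, 0], [0, 0, 1]]
```

Raising the threshold from 0.1 to 0.6 leaves only the two strongest gradients trainable, which matches the statement that a higher threshold masks a layer more aggressively.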

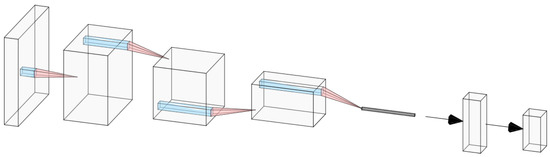

Figure 2.

Architecture of the C network consisting of the input image, four convolutional layers, and two fully connected layers. The proposed method is beneficial to the network even in such minimal setups.

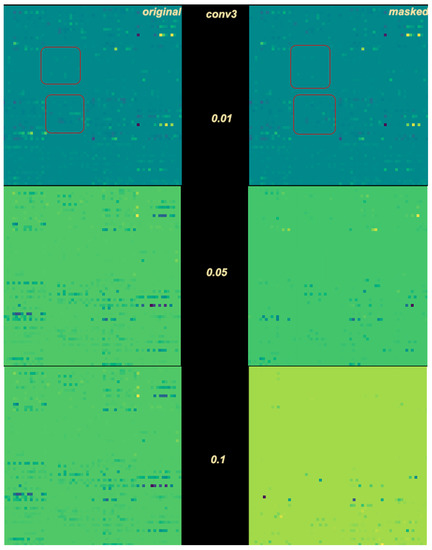

Figure 3.

Threshold effect on the third convolution filter, for , , and . Red squares in the first dense example indicate same pixel neighborhoods for easier comprehension.

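Apart from acquiring masks from a layer’s gradients, and in a similar manner, the method takes into consideration the absolute weight value of each neuron, $|w_n^l|$, after some training intervals, so that:

$$m_n^l = \begin{cases} 1, & \text{if } |w_n^l| \geq p_l \cdot \max |W_l| \\ 0, & \text{otherwise} \end{cases} \tag{3}$$

where $W_l$ represents the weight matrix of layer $l$ and $p_l$ is the masking threshold of that layer.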

Filtering parts of a layer by the respective weight values provides more robust mask proposals and acts as a review of the freezing procedure thus far. After some training epochs, the network has built an effective generalization mechanism with more stable weights than before, the values of which quantify their respective participation levels in minimizing the loss function (1).

The assigned binary values of all neurons in layer $l$ constitute the binary mask $M_l$. Each produced binary mask corresponds to one layer $l$ of C and controls how different parts of that layer are trained, by computing the Hadamard product between the individual values of the mask and the respective layer’s gradient values, $G_l$, after the latter have been obtained through back propagation. The Hadamard product is calculated as:

$$\tilde{G}_l = M_l \odot G_l, \qquad M_l, G_l \in \mathbb{R}^{d_1 \times \cdots \times d_k} \tag{4}$$

where ⊙ denotes the Hadamard product of two matrices, and $d_1, \dots, d_k$ the size of each dimension of a layer.
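The effect of the masking step on a weight update can be sketched as follows. The example assumes a plain stochastic gradient descent update and uses 2D lists in place of tensors; `hadamard`, `sgd_step`, and all numeric values are hypothetical and only illustrate that weights under a zero mask entry stay frozen.

```python
def hadamard(a, b):
    """Element-wise (Hadamard) product of two equally shaped 2D lists."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("shapes must match")
    return [[x * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def sgd_step(weights, grads, lr):
    """Plain SGD update: w <- w - lr * g (assumed optimizer for this sketch)."""
    return [[w - lr * g for w, g in zip(rw, rg)] for rw, rg in zip(weights, grads)]


weights = [[1.0, 2.0], [3.0, 4.0]]
grads = [[0.5, 0.01], [-0.02, -0.4]]
mask = [[1, 0], [0, 1]]  # 0 freezes the corresponding weight for this step

masked_grads = hadamard(mask, grads)
new_weights = sgd_step(weights, masked_grads, lr=0.1)
print(new_weights)  # masked positions keep their original values 2.0 and 3.0
```

Only the gradient is zeroed, so a frozen weight keeps its current value and still takes part in the forward pass, unlike standard dropout, which removes the unit's contribution.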

The gradients computed on every training step express the magnitudes of adjustments that network C needs to apply at each neuron so that Function (1) is minimized. The concept of freezing neurons that only need minor adjustments during training aims at a faster convergence time, as the network is targeted towards modifying the weights of neurons that need these adjustments the most.

The effectiveness of the applied masks is evaluated on the next forward pass of C. More specifically, on each training step, the input data are propagated through C and through its modified counterpart, Z, which contains the updated weights, based on the masked gradients, $\tilde{G}_l$, of the previous step. Depending on the calculated loss for each network, $\mathcal{L}_C$ and $\mathcal{L}_Z$, the algorithm either accepts the proposed parameters after masking, $\theta_Z$, consisting of all modified gradients $\tilde{G}_l$ and weights $\tilde{W}_l$, or rejects them and advances with the original parameters $\theta_C$, i.e., the unmasked gradients $G_l$ and weights $W_l$, as updated by C’s backward pass. Finally, either $\theta_Z$ or $\theta_C$ is passed to the auxiliary networks, $A$.
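The accept-or-reject step can be sketched on a toy problem. The text does not state the exact acceptance criterion, so the sketch assumes that the masked parameters are accepted when their loss on the next batch is no larger than that of the unmasked parameters. The squared-error loss, the function names, and the two-parameter "network" are all hypothetical stand-ins for the classification setup.

```python
def loss(params, target):
    """Toy squared-error loss standing in for the classification loss."""
    return sum((p - t) ** 2 for p, t in zip(params, target))


def grad(params, target):
    """Analytic gradient of the toy loss."""
    return [2.0 * (p - t) for p, t in zip(params, target)]


def training_step(params, batch, next_batch, p, lr):
    """Build unmasked (C) and masked (Z) updates, then keep the better one.

    Gradients come from `batch`; both candidates are scored on `next_batch`,
    mimicking evaluation on the next forward pass.
    """
    g = grad(params, batch)
    cutoff = p * max(abs(x) for x in g)
    mask = [1 if abs(x) >= cutoff else 0 for x in g]
    theta_c = [w - lr * x for w, x in zip(params, g)]
    theta_z = [w - lr * m * x for w, m, x in zip(params, mask, g)]
    accepted = loss(theta_z, next_batch) <= loss(theta_c, next_batch)  # assumed rule
    return (theta_z if accepted else theta_c), accepted


start = [0.0, 0.0]
# The small second gradient component is batch noise: masking it helps on the next batch.
params_a, accepted_a = training_step(start, [1.0, 0.05], [1.0, -0.05], p=0.1, lr=0.5)
# The small component is genuine signal: the unmasked update wins and the mask is rejected.
params_b, accepted_b = training_step(start, [1.0, 0.05], [1.0, 0.05], p=0.1, lr=0.5)
print(accepted_a, params_a)  # True [1.0, 0.0]
print(accepted_b, params_b)  # False [1.0, 0.05]
```

The two calls differ only in the next batch, which shows why the comparison is made on fresh input rather than on the batch that produced the gradients.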

Although each auxiliary network is an unsupervised generative model, its weights are updated by inheriting the loss value of a supervised classification neural network, $\mathcal{L}_C$ or $\mathcal{L}_Z$. This behavior indicates that the method is agnostic towards the individual networks’ architectures, and shows that the intertwined behavior of the components ensures uninterrupted, end-to-end integration.

4. Experiments and Results

The proposed method consists of an ensemble of networks, and its implementation can become cumbersome when deeper architectures are employed. Testing the method on upscaled networks is out of the scope of this paper, as all state-of-the-art dropout methods are tested against their respective vanilla dropout versions. The experimental results show that a typical convolutional neural network benefits from the proposed adaptive dropout method when compared to a standard dropout integration.

4.1. Datasets

- CIFAR-10 [24]: The CIFAR-10 dataset features 60,000 32 × 32 color images, divided into 10 classes of 6000 images each. The training set consists of 50,000 images, whereas the test set contains 10,000 images, randomly selected from each class.

- USPS Handwritten Digits (USPS) [25]: USPS is a dataset of handwritten digits featuring 7291 training and 2007 testing examples of 8 × 8 images, coming from 10 classes.

- Fashion-MNIST [26]: Fashion-MNIST is modeled on MNIST [27], a handwritten digit dataset that is considered an almost solved problem, and is designed as a more challenging alternative; it consists of 28 × 28 grayscale clothing images from 10 classes, divided into a training set of 60,000 samples and a test set of 10,000 samples.

- SVHN [28]: SVHN is an image dataset of house numbers, obtained from Google Street View images. The dataset’s structure is similar to that of the MNIST dataset; each of the 10 classes consists of images of one digit. The dataset contains over 600,000 digit images, split into 73,257 digits for training, 26,032 digits for testing, and 531,131 additional training examples.

- STL-10 [29]: The STL-10 dataset is an image recognition dataset inspired by the CIFAR-10 dataset. The dataset shares the same structure as the CIFAR-10 dataset, with 10 classes, each containing 500 training images and 800 test images of 96 × 96 pixels. However, the dataset also contains 100,000 unlabeled images for unsupervised training, with content extracted from similar, but not the same, categories as the original classes, acquired from ImageNet [30]. Although this dataset was designed for developing scalable unsupervised methods, in this study, it was used as a standard supervised classification dataset.

4.2. Implementation Details

The core network C consists of four convolutional layers, each activated by a leaky ReLU function and normalized by a batch normalization layer. The resulting feature map is then flattened and fed to a fully connected layer, also activated by a leaky ReLU function and followed by a batch normalization layer. The vanilla version of C randomly drops some elements of the network by applying standard dropout with probability . The output is finally passed to a second fully connected layer and then activated by a multi-class softmax function.

For every layer $l$ of C, an auxiliary network $a_l$ is built and trained in order to provide the required binary mask to that specific layer. The auxiliary networks for the proposed approach are based on variational autoencoders [31] with a fixed encoding section of four convolutional filters and a modified decoding part of transposed convolutions, their number depending on the size of the output mask to be applied on the gradients or weights. All filters are activated using leaky ReLU activation functions, as they are slightly faster than normal ReLU functions and alleviate the “dying ReLU” problem [32].

Variational autoencoders map their inputs to a distribution instead of a fixed feature map; then, using the mean vector $\mu$ and the standard deviation vector $\sigma$, a sample of the distribution can be fed to the decoding part of the network. Using the reparameterization trick, the output of the decoder is backpropagated through the network, training the $\mu$ and $\sigma$ vectors, and is also used for generating the essential binary masks, as described in the previous section.
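The sampling step of the reparameterization trick can be sketched as below. This is the standard formulation from the variational autoencoder literature, z = μ + σ · ε with ε drawn from a standard normal distribution, and not the authors' code; the vectors and the helper name `reparameterize` are hypothetical.

```python
import random


def reparameterize(mu, sigma, rng):
    """Sample z = mu + sigma * eps element-wise, with eps ~ N(0, 1)."""
    return [m + s * rng.gauss(0.0, 1.0) for m, s in zip(mu, sigma)]


rng = random.Random(0)  # fixed seed for reproducibility
mu = [0.0, 2.0]
sigma = [1.0, 0.0]  # zero spread makes the second coordinate deterministic

samples = [reparameterize(mu, sigma, rng) for _ in range(20_000)]
mean_first = sum(z[0] for z in samples) / len(samples)
print(f"empirical mean of first coordinate: {mean_first:.3f}")
```

Because the randomness is isolated in ε, the sample is a deterministic, differentiable function of μ and σ, which is what allows the decoder's error signal to train both vectors.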

For each experiment, the network was initialized by training in its vanilla version until it reached a minimum validation accuracy of about 30%, which was typically achieved in the first epoch. The model parameters were optimized using stochastic gradient descent, whereas the learning rate was initialized at and decayed every 5 epochs by .

4.3. Results

Tables 1–4 and Figures 4–8 demonstrate how different setup parameters of the proposed method lead to either better accuracy or faster convergence when compared to standard vanilla dropout. The first two columns of each table hold the accuracy score for each setup and the epoch at which it was attained, respectively. Columns 3 to 7 are the thresholds p used by the network ensemble to acquire the binary masks, which were finally applied to every layer. Setting p dynamically, depending on the nature of each filter and the magnitude of the layer’s units, is justified: convolution filters are more sensitive to dropout than fully connected layers, as they directly interact with the input. Additionally, considering the spatial correlation of their units, applying dropout to the first convolution layers is expected to result in performance loss, as these layers are responsible for extracting the fundamental features of the input. Column 8 expresses the intervals of gradient masking; on some occasions, the binary masks were extracted after accumulating gradients for a number of epochs, so that the dropout mechanism has broader knowledge of the impact of each unit on the training procedure. Finally, the 9th column holds the intervals of weight masking. Although the proposed method applies temporary dropout to the gradients of the hidden units, the binary masks can be derived from the intensity of the gradients alone or from a combination of the layer’s gradients and weight values.

Table 1.

Accuracy scores, parameter tuning, and convergence times for different experiments on the CIFAR10 dataset. The first row holds the best accuracy score for the vanilla dropout version and the epoch at which it was attained.

Table 2.

Accuracy scores, parameter tuning, and convergence times for different experiments on the USPS dataset. The first row holds the best accuracy score for the vanilla dropout version and the epoch at which it was attained.

Table 3.

Accuracy scores, parameter tuning, and convergence times for different experiments on the fashion-MNIST dataset. The first row holds the best accuracy score for the vanilla dropout version and the epoch at which it was attained.

Table 4.

Accuracy scores, parameter tuning, and convergence times for different experiments on the STL-10 dataset. The first row holds the best accuracy score for the vanilla dropout version and the epoch at which it was attained.

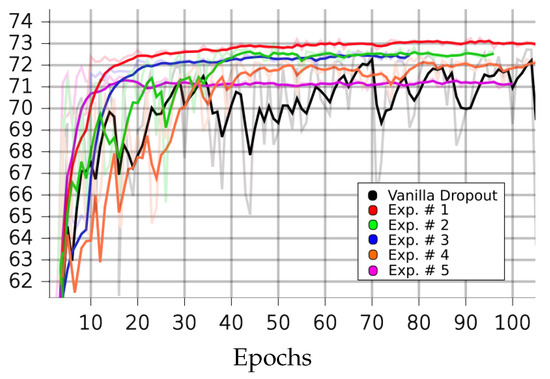

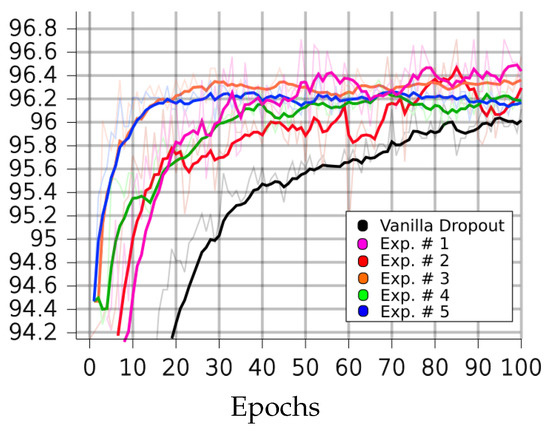

Figure 4.

Performances of LIM and vanilla dropout methods on the CIFAR10 dataset, trained and tested for 100 epochs. Graphs were smoothed for better comprehension; original graphs can be seen in the background. Curves correspond to Table 1 scores. Black: vanilla. Red: 1. Green: 2. Blue: 3. Orange: 4. Purple: 5.

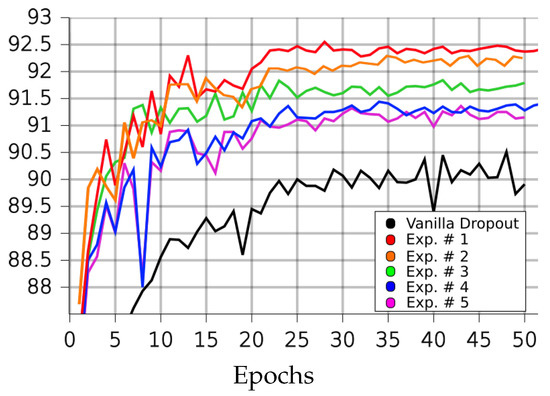

Figure 5.

Performance of LIM and vanilla dropout methods on the USPS dataset, trained and tested for 100 epochs. Graphs were smoothed for better comprehension; original graphs can be seen in the background. Curves correspond to Table 2 scores. Black: vanilla, purple: 1, red: 2, orange: 3, green: 4, blue: 5.

Figure 6.

Performances of LIM and vanilla dropout methods on the fashion-MNIST dataset, trained and tested for 50 epochs. Curves correspond to Table 3 scores; black: vanilla, red: 1, orange: 2, green: 3, blue: 4, purple: 5.

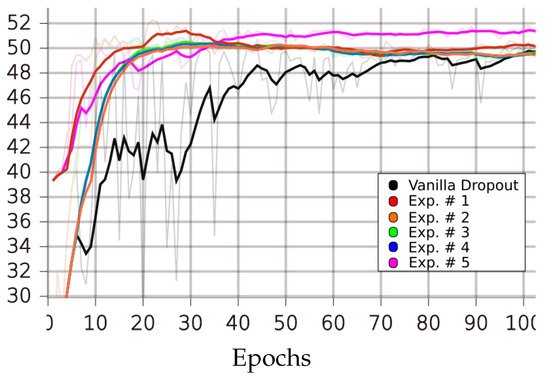

Figure 7.

Performances of LIM and vanilla dropout methods on the STL-10 dataset, trained and tested for 100 epochs. Graphs were smoothed for better comprehension; original graphs can be seen in the background. Curves correspond to Table 4 scores; black: vanilla, red: 1, purple: 2, green: 3, orange: 4, blue: 5.

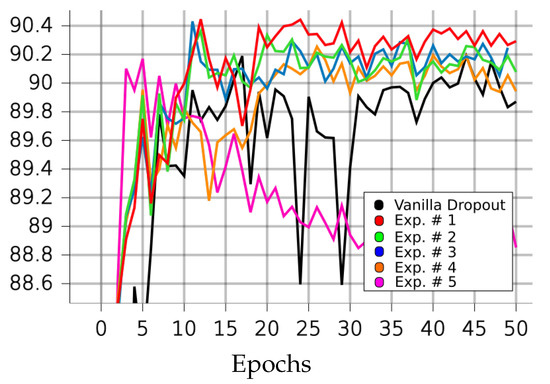

Figure 8.

Performances of LIM and vanilla dropout methods on the SVHN dataset, trained and tested for 50 epochs. Graphs were smoothed for better comprehension; original graphs can be seen in the background. Curves correspond to Table 5 scores; black: vanilla, red: 1, green: 2, blue: 3, orange: 4, purple: 5.

On the CIFAR10 dataset, the proposed method outperformed the standard dropout architecture in most circumstances. Additionally, as seen in Table 1, the models that utilize the proposed dropout mechanism only need a few training epochs to score close to their best performances. In experiment 5, for a small performance trade-off (less than 1%), the model converged in just six epochs, a 91.4% reduction compared to the 70 epochs needed when training with standard dropout (equivalently, a 168.4% symmetric percentage difference between the two epoch counts). As already discussed, intense selective dropout was only applied on the first fully connected layer, to the extreme of , in experiments 3 and 4.
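The convergence figures for experiment 5 (6 epochs versus 70) can be checked with a short calculation. The sketch below computes two common measures: the reduction relative to the baseline, which cannot exceed 100%, and the symmetric percentage difference relative to the mean of the two values, which reproduces the 168.4% figure. Both function names are hypothetical.

```python
def percent_reduction(baseline, new):
    """Relative reduction with respect to the baseline, in percent."""
    return 100.0 * (baseline - new) / baseline


def symmetric_percent_difference(a, b):
    """Absolute difference relative to the mean of the two values, in percent."""
    return 100.0 * abs(a - b) / ((a + b) / 2.0)


vanilla_epochs, proposed_epochs = 70, 6
reduction = round(percent_reduction(vanilla_epochs, proposed_epochs), 1)
difference = round(symmetric_percent_difference(vanilla_epochs, proposed_epochs), 1)
print(reduction)   # 91.4
print(difference)  # 168.4
```

Stating which of the two measures is used avoids reading a value above 100% as a reduction.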

On the USPS dataset, our method outperformed the vanilla dropout architecture in all experiments, as seen in Table 2. Experiments 1 and 2 improved the network’s performance by ; in experiment 1, it performed its best in fewer epochs than the vanilla version needed. In experiment 3, it needed fewer training steps to outperform the vanilla version by , as its best performance reduced the required steps by .

On Fashion-MNIST, the proposed method surpassed the standard dropout version by (experiment 1) in fewer epochs. In the third experimental setup, our method outperformed the baseline by the fastest, in 23 epochs, or fewer steps. All reported training procedures on the Fashion-MNIST dataset used weight masking in every epoch, as this setup was found to perform the best.

On STL-10, the best performing experimental setup achieved a 2.18% increase in performance compared to the vanilla version and only needed 22 epochs, a 127.2% difference in convergence time. Setup 4 achieved a performance improvement of 0.71% in just 12 epochs, a 156.7% difference in epochs compared to the original dropout version. Weight masking was applied every epoch, and all setups accumulated gradients before freezing the dropout candidate parts.

Finally, on the SVHN dataset, the standard dropout version performed its best much quicker than on the previous datasets; however, the proposed method still outperformed it in terms of both accuracy and epochs needed. In our best experiment, 1, our algorithm surpassed the vanilla one’s performance by in fewer epochs. The fastest experiment for our method, 5, resulted in optimal performance almost identical to that of the vanilla version, being inferior by only , but also faster by .

5. Conclusions

In this paper, we proposed a novel algorithm for selectively disabling weight updating on parts of the network, based on both gradient and weight values of the respective network units. The proposed idea was tested on five well-known image classification datasets, yielding favorable performance results. Although limited processing power restricted the architecture to an essential convolution network, the extended experiments have shown that this alternating scheme is able to match or surpass standard dropout performance in considerably fewer training steps. Convergence time is extremely important in practical machine learning applications; shorter network training times enable researchers to acquire knowledge quickly, and therefore conduct extended and more meaningful experiments. More importantly, faster production of models translates into additional ideas and methods for circumventing potential obstacles in research and integration.

Author Contributions

Conceptualization, C.A. and N.V.; methodology, N.V.; software, C.A.; validation, C.A. and N.V.; formal analysis, C.A.; investigation, C.A.; resources, N.V.; data curation, C.A.; writing—original draft preparation, C.A.; writing—review and editing, N.V. and P.D.; visualization, C.A.; supervision, N.V. and P.D.; project administration, N.V.; funding acquisition, P.D. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the European Commission (INTREPID, Intelligent Toolkit for Reconnaissance and assessmEnt in Perilous Incidents) under Grant 883345.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Allen, D.M. The Relationship between Variable Selection and Data Agumentation and a Method for Prediction. Technometrics 1974, 16, 125–127.

- Kohavi, R. A Study of Cross-Validation and Bootstrap for Accuracy Estimation and Model Selection. In 14th International Joint Conference on Artificial Intelligence—Volume 2; IJCAI’95; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 1995; pp. 1137–1143.

- Freund, Y. Boosting a Weak Learning Algorithm by Majority. Inf. Comput. 1995, 121, 256–285.

- Breiman, L. Bagging predictors. Mach. Learn. 1996, 24, 123–140.

- Perez, L.; Wang, J. The Effectiveness of Data Augmentation in Image Classification using Deep Learning. arXiv 2017, arXiv:1712.04621.

- Cubuk, E.D.; Zoph, B.; Mané, D.; Vasudevan, V.; Le, Q.V. AutoAugment: Learning Augmentation Policies from Data. arXiv 2018, arXiv:1805.09501.

- Ohashi, H.; Al-Naser, M.; Ahmed, S.; Akiyama, T.; Sato, T.; Nguyen, P.; Nakamura, K.; Dengel, A. Augmenting Wearable Sensor Data with Physical Constraint for DNN-Based Human-Action Recognition. In Proceedings of the ICML 2017 Times Series Workshop, PMLR, Sydney, Australia, 6–11 August 2017.

- Prechelt, L. Early Stopping-But When? In Neural Networks: Tricks of the Trade; This Book Is an Outgrowth of a 1996 NIPS Workshop; Springer: Berlin/Heidelberg, Germany, 1998; pp. 55–69.

- Krogh, A.; Hertz, J.A. A Simple Weight Decay Can Improve Generalization. In Proceedings of the 4th International Conference on Neural Information Processing Systems; NIPS’91. Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 1991; pp. 950–957.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Wan, L.; Zeiler, M.D.; Zhang, S.; LeCun, Y.; Fergus, R. Regularization of Neural Networks using DropConnect. In Proceedings of the International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013.

- Huang, G.; Sun, Y.; Liu, Z.; Sedra, D.; Weinberger, K. Deep Networks with Stochastic Depth. arXiv 2016, arXiv:1603.09382.

- Ghiasi, G.; Lin, T.Y.; Le, Q.V. DropBlock: A regularization method for convolutional networks. arXiv 2018, arXiv:1810.12890.

- DeVries, T.; Taylor, G.W. Improved Regularization of Convolutional Neural Networks with Cutout. arXiv 2017, arXiv:1708.04552.

- Larsson, G.; Maire, M.; Shakhnarovich, G. FractalNet: Ultra-Deep Neural Networks without Residuals. arXiv 2017, arXiv:1605.07648.

- Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning Transferable Architectures for Scalable Image Recognition. arXiv 2018, arXiv:1707.07012.

- Gastaldi, X. Shake-Shake regularization. arXiv 2017, arXiv:1705.07485.

- Yamada, Y.; Iwamura, M.; Akiba, T.; Kise, K. Shakedrop Regularization for Deep Residual Learning. IEEE Access 2019, 7, 186126–186136.

- Goodfellow, I.; Warde-Farley, D.; Mirza, M.; Courville, A.; Bengio, Y. Maxout Networks. In Proceedings of the 30th International Conference on Machine Learning; Dasgupta, S., McAllester, D., Eds.; PMLR: Atlanta, GA, USA, 2013; Volume 28, pp. 1319–1327.

- Tseng, H.Y.; Chen, Y.W.; Tsai, Y.H.; Liu, S.; Lin, Y.Y.; Yang, M.H. Regularizing Meta-Learning via Gradient Dropout. arXiv 2020, arXiv:2004.05859.

- Ba, J.; Frey, B. Adaptive dropout for training deep neural networks. In Proceedings of the Advances in Neural Information Processing Systems; Burges, C.J.C., Bottou, L., Welling, M., Ghahramani, Z., Weinberger, K.Q., Eds.; Curran Associates, Inc.: Lake Tahoe, NV, USA, 2013; Volume 26.

- Gomez, A.N.; Zhang, I.; Kamalakara, S.R.; Madaan, D.; Swersky, K.; Gal, Y.; Hinton, G.E. Learning Sparse Networks Using Targeted Dropout. arXiv 2019, arXiv:1905.13678.

- Lin, H.; Zeng, W.; Ding, X.; Huang, Y.; Huang, C.; Paisley, J. Learning Rate Dropout. arXiv 2019, arXiv:1912.00144.

- Krizhevsky, A.; Nair, V.; Hinton, G. Learning Multiple Layers of Features from Tiny Images; University of Toronto: Toronto, ON, Canada, 2019.

- Seewald, A.K. Digits—A Dataset for Handwritten Digit Recognition; Institute for Artificial Intelligence: Vienna, Austria, 2005.

- Xiao, H.; Rasul, K.; Vollgraf, R. Fashion-MNIST: A Novel Image Dataset for Benchmarking Machine Learning Algorithms. arXiv 2017, arXiv:1708.07747.

- Deng, L. The Mnist Database of Handwritten Digit Images for Machine Learning Research. IEEE Signal Process. Mag. 2012, 29, 141–142.

- Netzer, Y.; Wang, T.; Coates, A.; Bissacco, A.; Wu, B.; Ng, A.Y. Reading Digits in Natural Images with Unsupervised Feature Learning. In NIPS Workshop on Deep Learning and Unsupervised Feature Learning; Springer: Granada, Spain, 2011.

- Coates, A.; Ng, A.; Lee, H. An Analysis of Single Layer Networks in Unsupervised Feature Learning. In Proceedings of the Artificial Intelligence and Statistics AISTATS, Ft. Lauderdale, FL, USA, 2011; Available online: https://cs.stanford.edu/~acoates/papers/coatesleeng_aistats_2011.pdf (accessed on 11 December 2022).

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Kingma, D.P.; Welling, M. Auto-Encoding Variational Bayes. arXiv 2014, arXiv:1312.6114.

- Lu, L. Dying ReLU and Initialization: Theory and Numerical Examples. Commun. Comput. Phys. 2020, 28, 1671–1706.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).