Subjective Assessment of Objective Image Quality Metrics Range Guaranteeing Visually Lossless Compression

(This article belongs to the Section Intelligent Sensors)

Abstract

1. Introduction

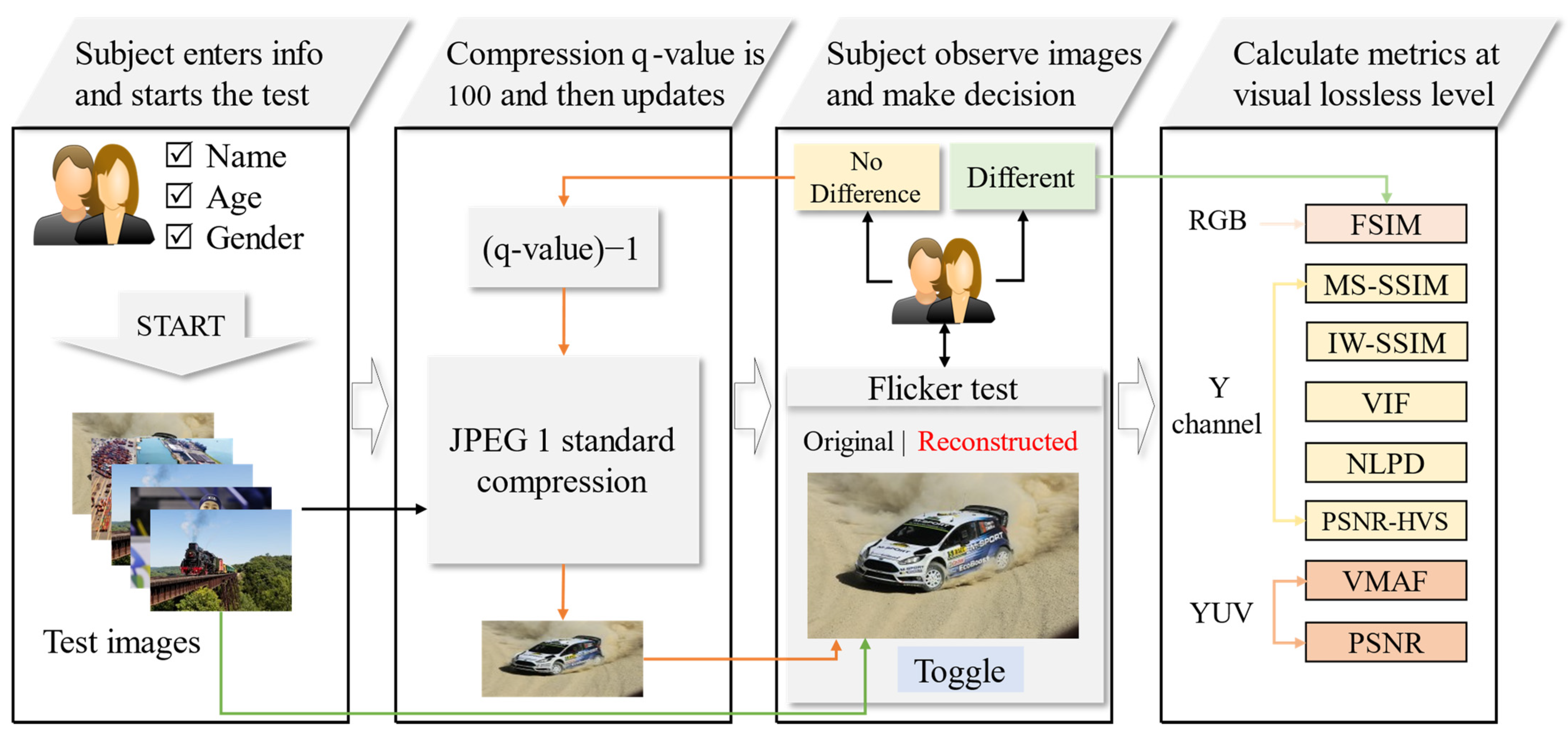

- This study performs a subjective quality assessment of JPEG-1 standard compressed images and evaluates the range of objective IQM values that guarantees visually or near-visually lossless compression of the images.

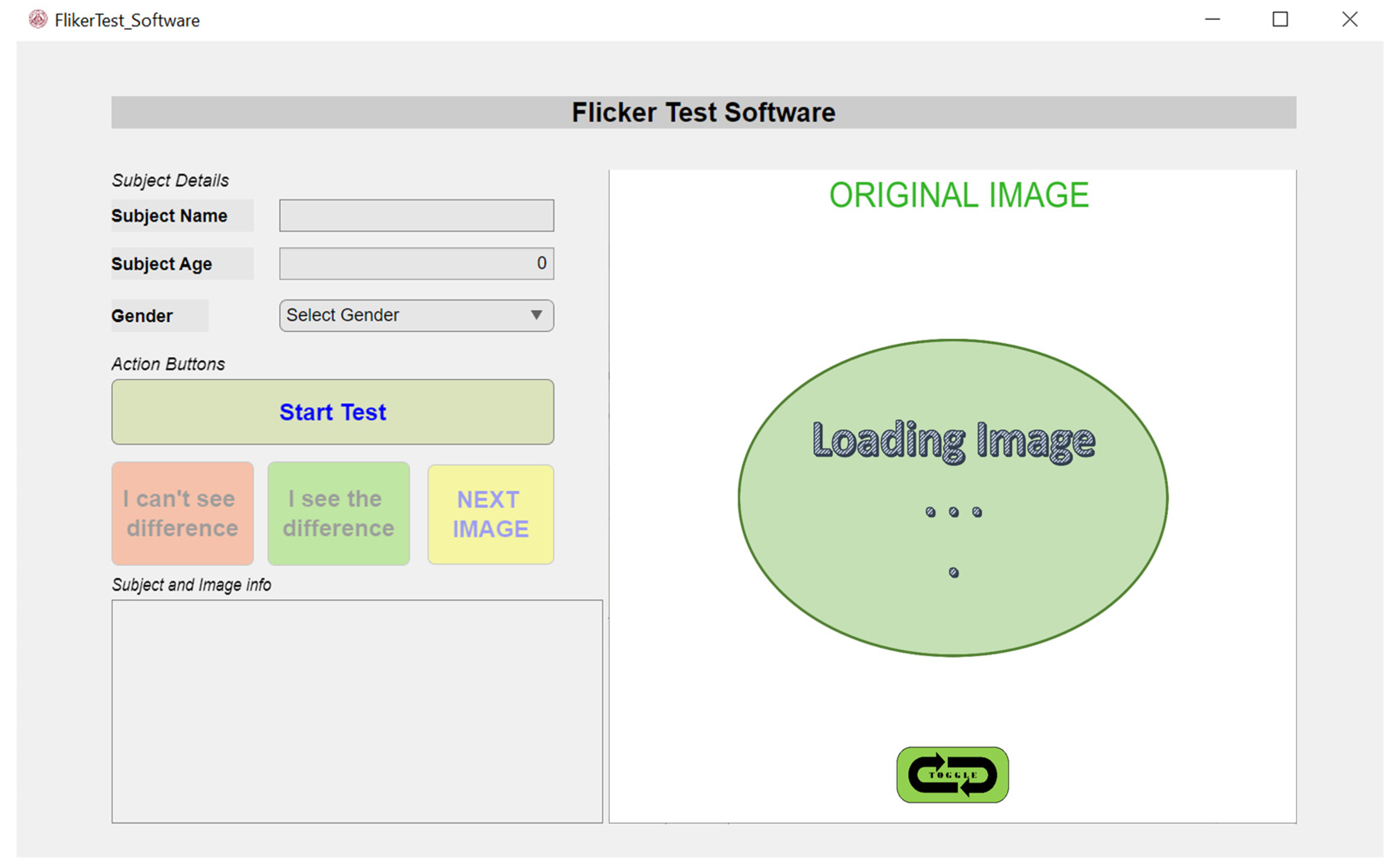

- A dedicated platform, “Flicker Test Software”, is designed to compress images with the widely used JPEG-1 standard at different compression levels, to perform a flicker test for the subjective assessment of visually or near-visually lossless compressed images, and to evaluate the objective IQMs.

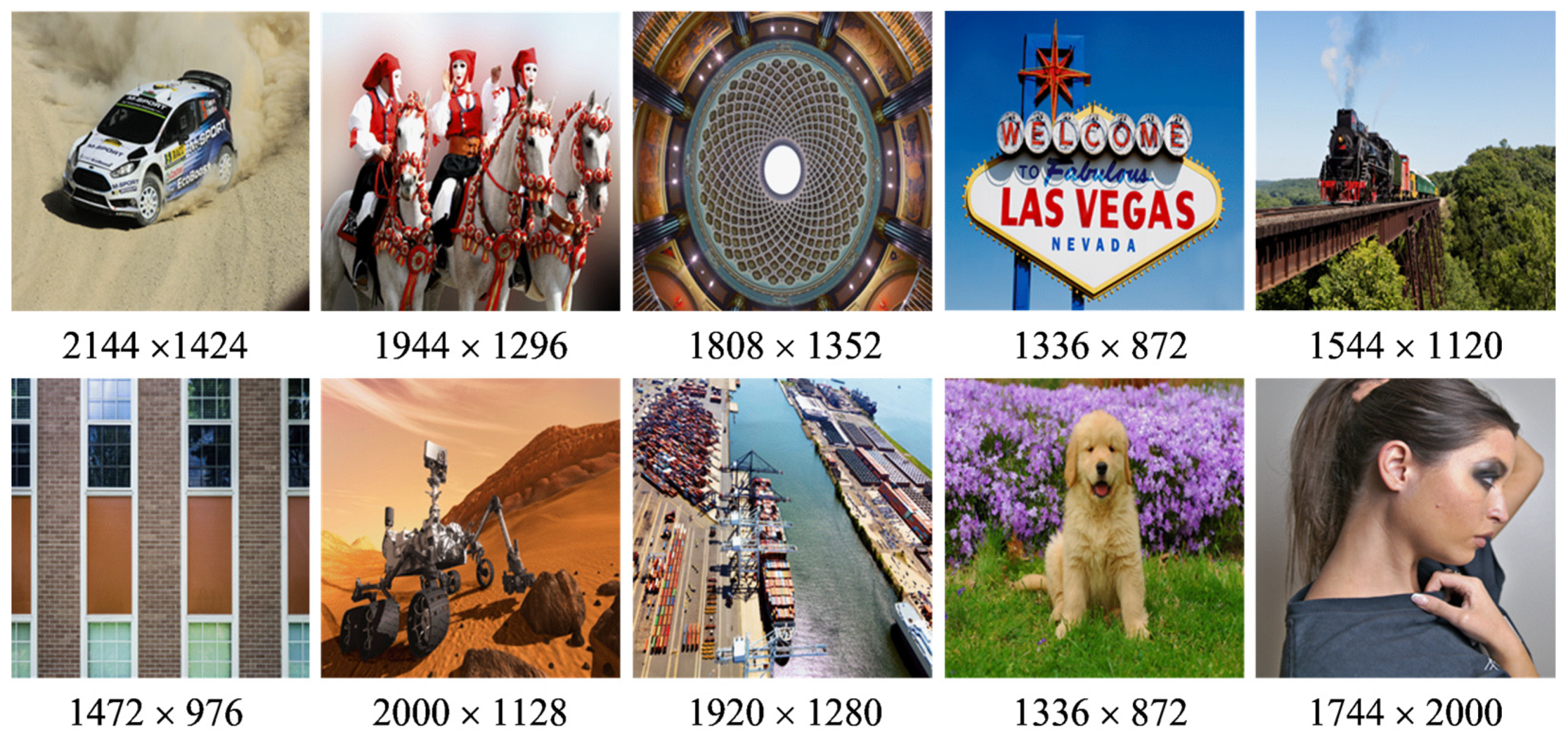

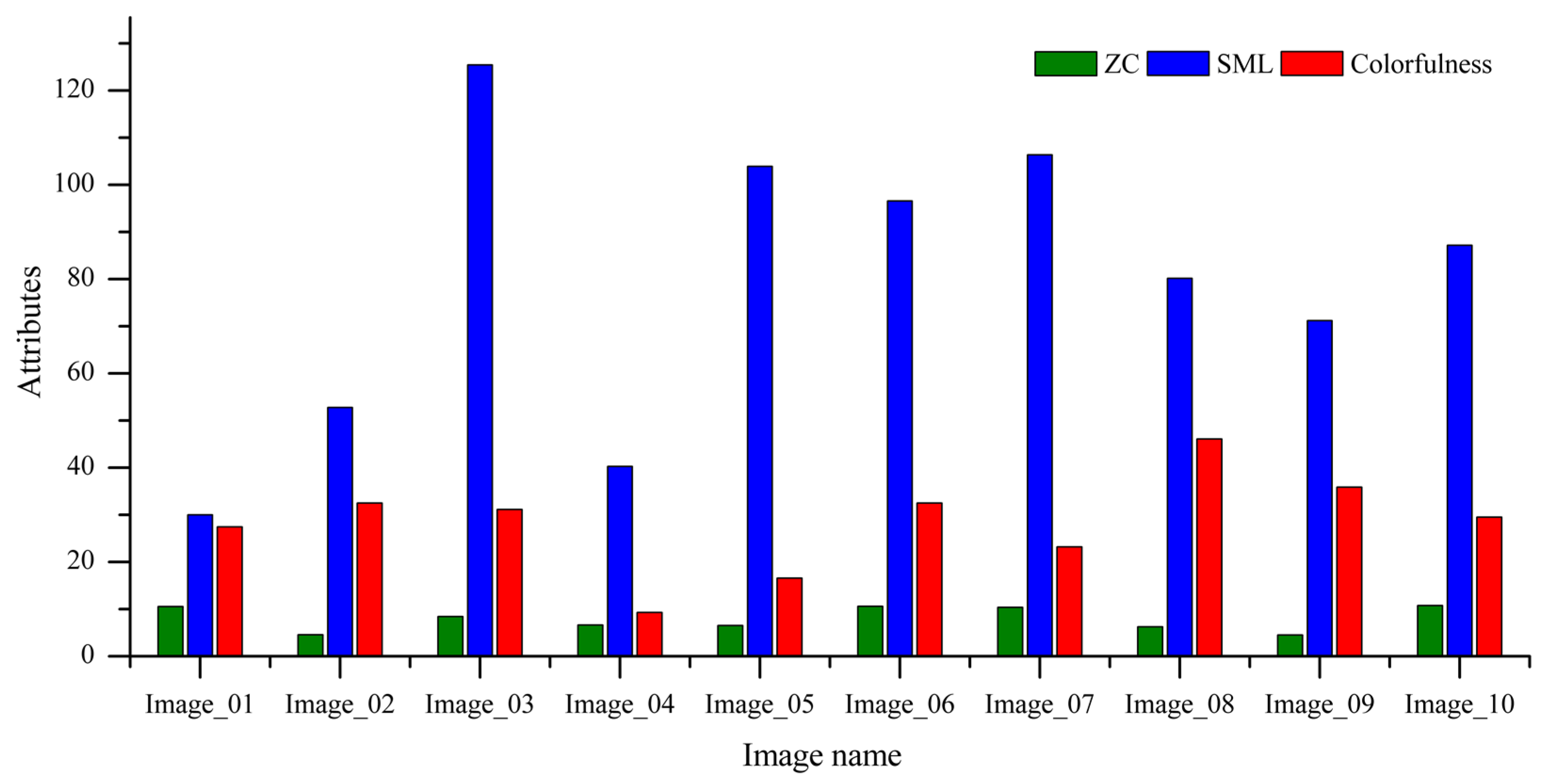

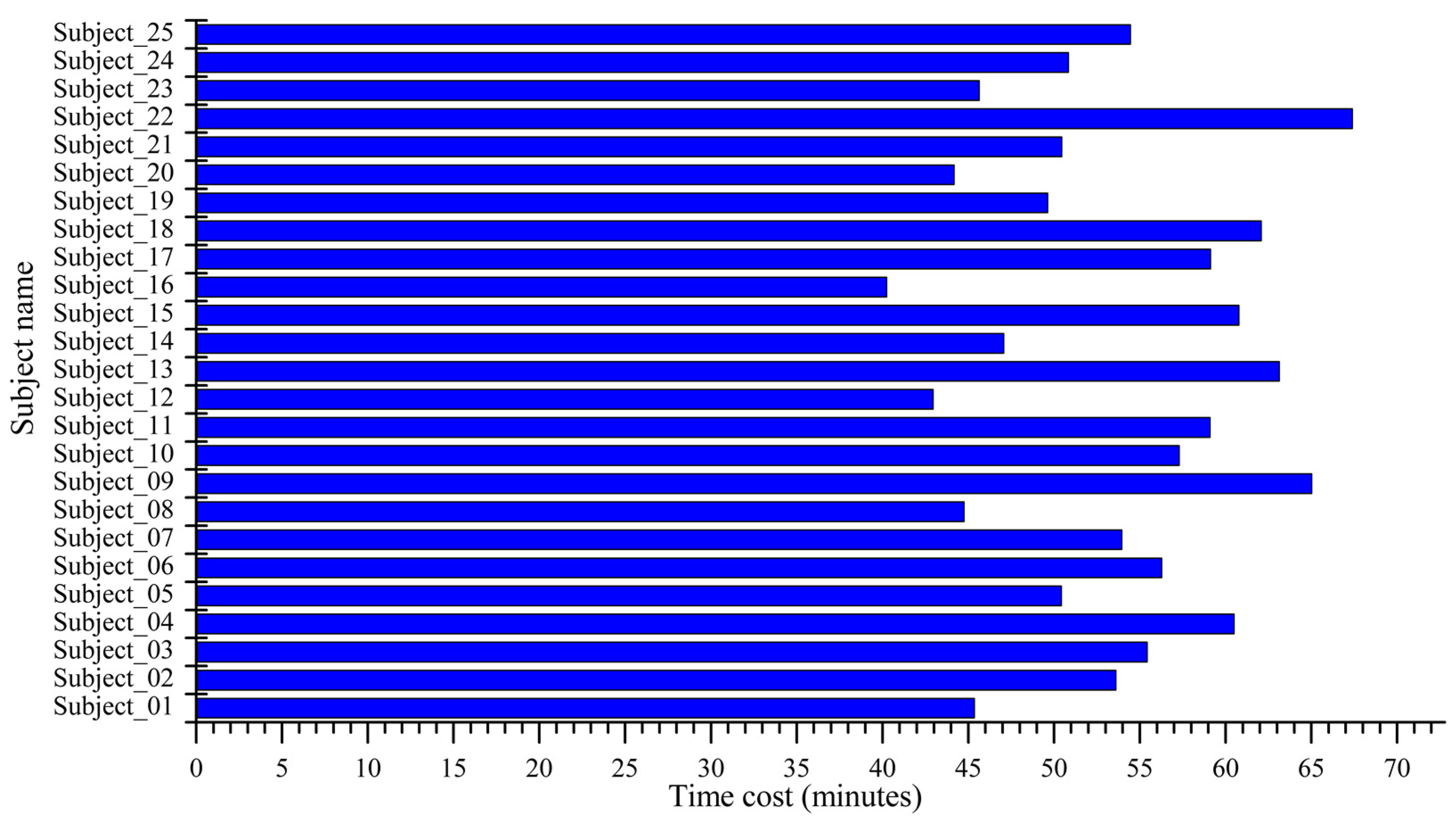

- A subjective flicker test is conducted with 25 participants, each individually assessing ten raw images at different compression quality levels. For every subject, the objective metrics of each test image are then computed at the compression level that the subject judged to be visually or near-visually lossless.

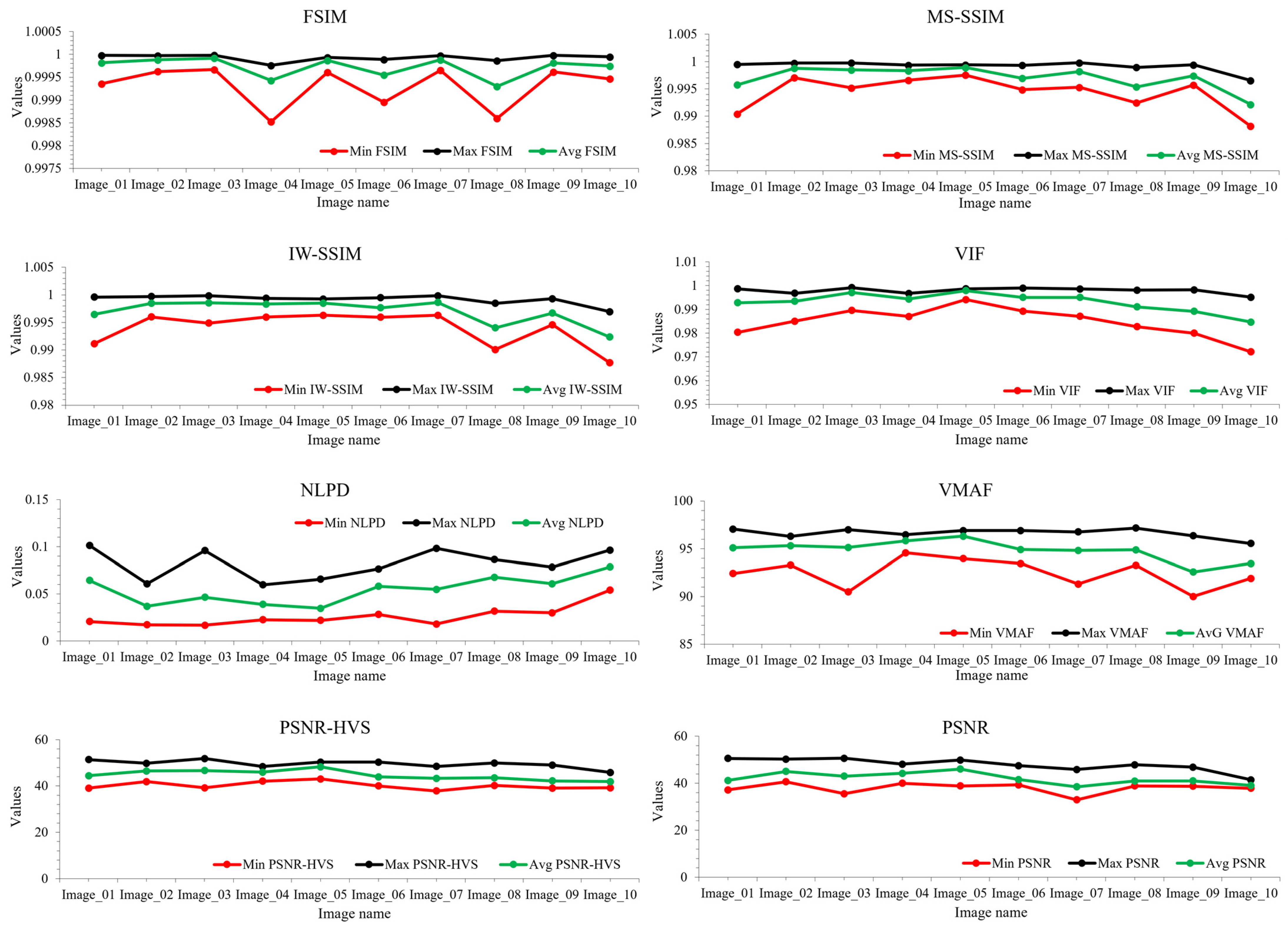

- Of the evaluated objective IQMs, FSIM, MS-SSIM, and IW-SSIM show the smallest standard deviations and the narrowest ranges of values, and therefore best guarantee visually or near-visually lossless compression of the images.
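The flicker test described in the contributions can be pictured as a descending search over JPEG quality factors that stops once a subject notices flicker. This is a minimal sketch, not the Flicker Test Software itself: the callback `subject_sees_flicker(q)` is hypothetical and stands in for a participant's response when the original and the image compressed at quality `q` are alternated on screen, and the descending 100-to-1 order is an assumption.

```python
from typing import Callable, Iterable, Optional


def visually_lossless_threshold(
    subject_sees_flicker: Callable[[int], bool],
    qualities: Iterable[int] = range(100, 0, -1),
) -> Optional[int]:
    """Return the lowest quality factor the subject still judged visually lossless.

    Qualities are visited from highest to lowest (assumed order). The search
    stops at the first quality where the subject reports flicker and returns
    the last quality without flicker. None means flicker was reported even at
    the first (highest) quality tested.
    """
    last_lossless: Optional[int] = None
    for q in qualities:
        if subject_sees_flicker(q):
            break  # artifacts became visible; stop descending
        last_lossless = q
    return last_lossless


# Hypothetical subject who notices flicker at any quality below 87.
print(visually_lossless_threshold(lambda q: q < 87))  # → 87
```

Stopping at the first detected flicker keeps each session short; a real protocol may instead use repeated or randomized presentations to reduce guessing.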

2. Related Work

2.1. Objective Image Quality Assessment

2.1.1. No-Reference Image Quality Metrics

2.1.2. Reduced-Reference Image Quality Metrics

2.1.3. Full-Reference Image Quality Metrics

2.2. Subjective Image Quality Assessment

2.2.1. Single Stimulus-Based Methods

2.2.2. Double Stimulus-Based Methods

2.3. Subjective Assessment of Visually Lossless Compressed Images

3. Proposed Methodology

Flicker Test Software

4. Experimentation and Results

4.1. Experimental Setup and Display Configuration

4.1.1. Test Subjects

4.1.2. Test Images

4.1.3. Objective Image Quality Metrics

4.2. Results and Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Objective IQMs evaluated in this study and the color space in which each is computed.

| S. No. | Objective IQM | Color Space |
|---|---|---|
| 1 | Feature similarity index measure (FSIM) | RGB |
| 2 | Multiscale structural similarity index measure (MS-SSIM) | Y |
| 3 | Information content weighted SSIM (IW-SSIM) | Y |
| 4 | Visual information fidelity (VIF) | Y |
| 5 | Normalized Laplacian pyramid distance (NLPD) | Y |
| 6 | Peak signal-to-noise ratio based on the human visual system (PSNR-HVS) | Y |
| 7 | Video multimethod assessment fusion (VMAF) | YUV |
| 8 | Peak signal-to-noise ratio (PSNR) | YUV |
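Most of the metrics above are computed on the luma (Y) channel rather than on RGB. As a hedged sketch, the helper below derives a luma plane from 8-bit RGB using the ITU-R BT.601 weights; the study does not state which RGB-to-Y matrix it used, so BT.601 and the name `rgb_to_luma` are assumptions for illustration.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]


def rgb_to_luma(image: Sequence[Sequence[Pixel]]) -> List[List[float]]:
    """Convert an 8-bit RGB image (rows of (R, G, B) tuples) to a luma (Y) plane.

    Uses the ITU-R BT.601 weights Y = 0.299 R + 0.587 G + 0.114 B.
    Using BT.601 (rather than, e.g., BT.709) is an assumption here.
    """
    return [
        [0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
        for row in image
    ]


# Tiny 2x2 test image: white, black, pure red, pure blue.
img = [[(255, 255, 255), (0, 0, 0)], [(255, 0, 0), (0, 0, 255)]]
print([[round(v, 3) for v in row] for row in rgb_to_luma(img)])
```

Because green carries the largest weight, pure green appears much brighter in Y than pure red or blue, which is why luma-based metrics emphasize errors in green-dominated regions.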

JPEG quality factor (q-value) and bit rate in bits per pixel (bpp) at the visually or near-visually lossless point, summarized over the 25 subjects for each test image.

| Image | Min q-Value | Max q-Value | Avg q-Value | Min bpp | Max bpp | Avg bpp |
|---|---|---|---|---|---|---|
| Image_01 | 69 | 99 | 87 | 1.14 | 6.42 | 2.46 |
| Image_02 | 79 | 100 | 92 | 0.94 | 4.29 | 2.09 |
| Image_03 | 71 | 100 | 91 | 1.74 | 6.39 | 3.37 |
| Image_04 | 78 | 100 | 92 | 0.35 | 1.99 | 0.86 |
| Image_05 | 82 | 100 | 95 | 0.87 | 3.43 | 2.23 |
| Image_06 | 68 | 97 | 83 | 0.53 | 1.90 | 0.86 |
| Image_07 | 73 | 100 | 89 | 2.16 | 8.36 | 3.87 |
| Image_08 | 66 | 97 | 80 | 0.58 | 2.40 | 0.96 |
| Image_09 | 65 | 95 | 78 | 0.88 | 2.55 | 1.32 |
| Image_10 | 67 | 93 | 80 | 0.85 | 2.74 | 1.47 |
| Overall | 65 | 100 | 86.61 | 0.3525 | 8.3588 | 1.9502 |
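The bpp columns express the bit rate of each compressed image. A minimal sketch of the usual definition, assuming bpp is the compressed file size in bits divided by the pixel count (whether file headers are included in the study's figures is not stated); `bits_per_pixel` is a hypothetical helper.

```python
def bits_per_pixel(compressed_size_bytes: int, width: int, height: int) -> float:
    """Bit rate of a compressed image: total bits divided by pixel count."""
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    return compressed_size_bytes * 8 / (width * height)


# Hypothetical example: a 1,000,000-byte JPEG of a 1920x1080 image.
print(round(bits_per_pixel(1_000_000, 1920, 1080), 2))  # → 3.86
```

Assuming 8-bit RGB sources (24 bpp uncompressed), the overall average of about 1.95 bpp corresponds to a compression ratio of roughly 24 / 1.95 ≈ 12:1.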

Average objective IQM values per test image at the visually or near-visually lossless point.

| Image | Avg FSIM | Avg MS-SSIM | Avg IW-SSIM | Avg VIF | Avg NLPD | Avg PSNR-HVS (dB) | Avg VMAF | Avg PSNR (dB) |
|---|---|---|---|---|---|---|---|---|
| Image_01 | 0.9998 | 0.9958 | 0.9964 | 0.9928 | 0.0644 | 44.45 | 95.10 | 41.21 |
| Image_02 | 0.9999 | 0.9988 | 0.9985 | 0.9934 | 0.0369 | 46.46 | 95.31 | 45.06 |
| Image_03 | 0.9999 | 0.9985 | 0.9985 | 0.9971 | 0.0466 | 46.63 | 95.12 | 43.02 |
| Image_04 | 0.9994 | 0.9983 | 0.9983 | 0.9943 | 0.0391 | 45.92 | 95.82 | 44.23 |
| Image_05 | 0.9999 | 0.9989 | 0.9985 | 0.9979 | 0.0348 | 48.24 | 96.30 | 46.05 |
| Image_06 | 0.9995 | 0.9970 | 0.9977 | 0.9950 | 0.0581 | 43.91 | 94.90 | 41.58 |
| Image_07 | 0.9999 | 0.9982 | 0.9986 | 0.9950 | 0.0548 | 43.30 | 94.82 | 38.51 |
| Image_08 | 0.9993 | 0.9954 | 0.9940 | 0.9911 | 0.0677 | 43.54 | 94.88 | 40.98 |
| Image_09 | 0.9998 | 0.9974 | 0.9967 | 0.9892 | 0.0609 | 42.20 | 92.57 | 41.03 |
| Image_10 | 0.9997 | 0.9922 | 0.9923 | 0.9846 | 0.0786 | 41.90 | 93.45 | 39.10 |
| Overall | 0.9997 | 0.9970 | 0.9970 | 0.9930 | 0.0542 | 44.65 | 94.83 | 42.08 |
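PSNR-HVS and PSNR are reported in decibels. The sketch below computes plain PSNR between two flattened 8-bit sample sequences, such as Y-channel pixels. How the study combines the Y, U, and V channels is not stated, and PSNR-HVS, which additionally weights errors in the DCT domain by a contrast-sensitivity model, is not reproduced; `psnr` is a hypothetical helper.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], distorted: Sequence[float], max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE).

    Returns infinity when the two sequences are identical (MSE = 0).
    """
    if len(reference) != len(distorted) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((a - b) ** 2 for a, b in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf
    return 10 * math.log10(max_value ** 2 / mse)


# Every sample is off by exactly 1 grey level, so MSE = 1.
print(round(psnr([100, 100, 100, 100], [101, 99, 101, 99]), 2))  # → 48.13
```

Since PSNR depends only on MSE, it ignores where errors occur; this is one reason perceptually motivated metrics such as FSIM or MS-SSIM can track visibility thresholds more tightly.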

Range and spread of each objective IQM over all visually or near-visually lossless observations.

| IQM | Min Value | Max Value | Avg Value | Std |
|---|---|---|---|---|
| FSIM | 0.9985 | 1.0000 | 0.9997 | 0.0003 |
| MS-SSIM | 0.9882 | 0.9998 | 0.9970 | 0.0025 |
| IW-SSIM | 0.9877 | 0.9998 | 0.9970 | 0.0026 |
| VIF | 0.9722 | 0.9992 | 0.9930 | 0.0054 |
| NLPD | 0.0169 | 0.1014 | 0.0542 | 0.0209 |
| PSNR-HVS (dB) | 37.8483 | 51.8247 | 44.6545 | 3.2799 |
| VMAF | 90.0126 | 97.1580 | 94.8265 | 1.5799 |
| PSNR (dB) | 32.9527 | 50.6389 | 42.0773 | 3.7473 |
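Each row of the statistics table summarizes one metric over all observations. A hedged sketch of that summary: using the sample (n - 1) standard deviation is an assumption, and the coefficient of variation (std / mean) is an added scale-free measure, not one reported in the study. `summarize` is a hypothetical helper.

```python
import statistics
from typing import Dict, Sequence


def summarize(values: Sequence[float]) -> Dict[str, float]:
    """Min, max, mean, standard deviation and coefficient of variation.

    `values` holds one metric's scores across observations (e.g. one per
    subject and image); statistics.stdev needs at least two values.
    """
    mean = statistics.mean(values)
    std = statistics.stdev(values)  # sample (n - 1) form: assumed, not confirmed
    return {
        "min": min(values),
        "max": max(values),
        "avg": mean,
        "std": std,
        "cv": std / mean,  # scale-free spread for comparing metrics
    }


# Hypothetical FSIM scores, not data from the study.
print(summarize([0.9990, 0.9995, 0.9998, 1.0000]))
```

Because the metrics span very different scales (FSIM near 1, PSNR in tens of dB), raw standard deviations are not directly comparable. Dividing the table's Std column by its Avg column gives about 0.03% for FSIM, 0.25% for MS-SSIM, and 0.26% for IW-SSIM, versus 0.54% for VIF, 1.67% for VMAF, 7.35% for PSNR-HVS, 8.91% for PSNR, and 38.6% for NLPD, so the same three metrics remain the most consistent under this normalization.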

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Afnan; Ullah, F.; Yaseen; Lee, J.; Jamil, S.; Kwon, O.-J. Subjective Assessment of Objective Image Quality Metrics Range Guaranteeing Visually Lossless Compression. Sensors 2023, 23, 1297. https://doi.org/10.3390/s23031297
