Fashion-Oriented Image Captioning with External Knowledge Retrieval and Fully Attentive Gates

Abstract

1. Introduction

2. Related Work

2.1. Image Captioning

2.2. Fashion-Oriented Solutions for Vision and Language

3. Proposed Method

3.1. Preliminaries

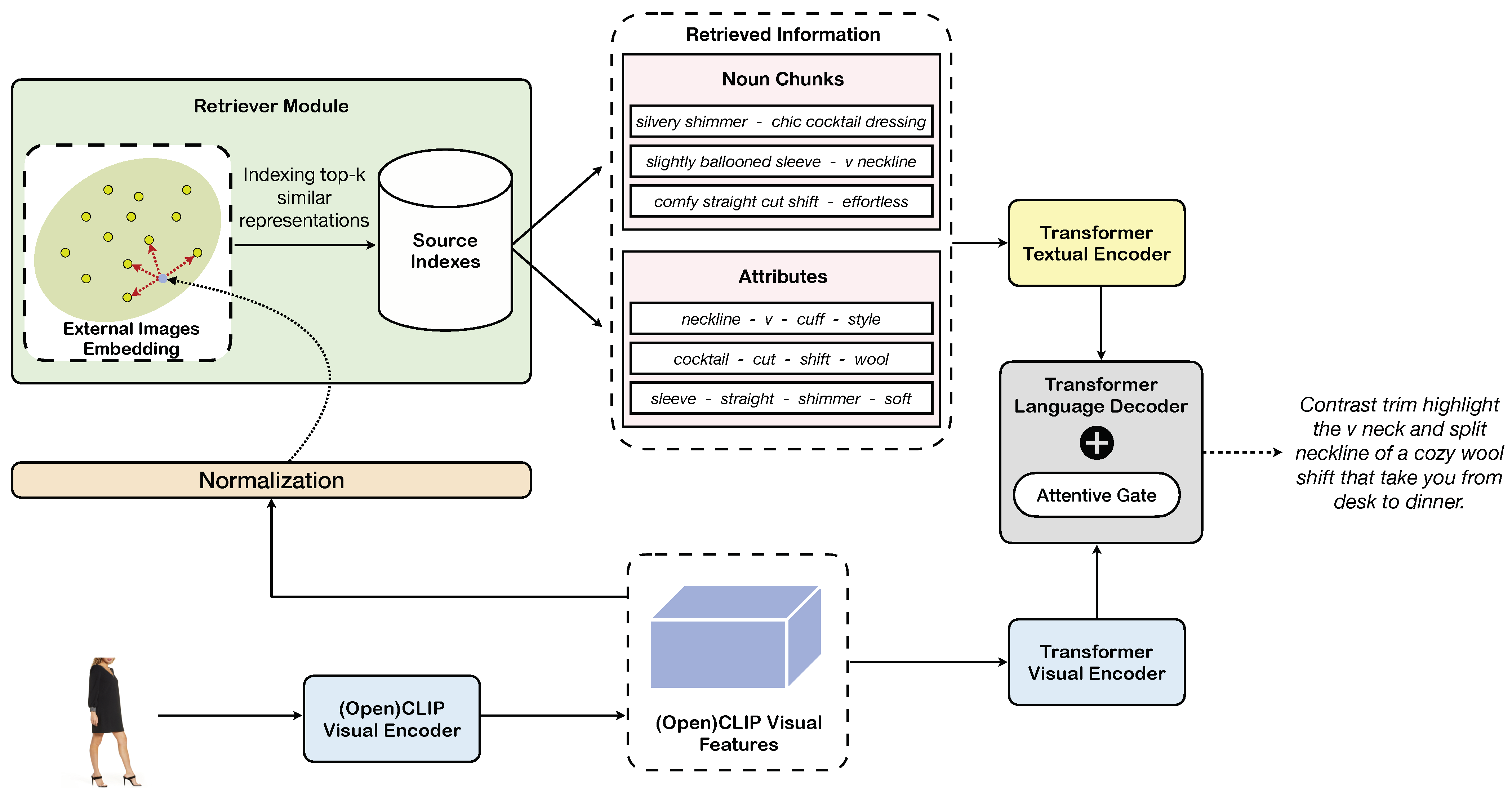

3.2. Overview of Our Approach

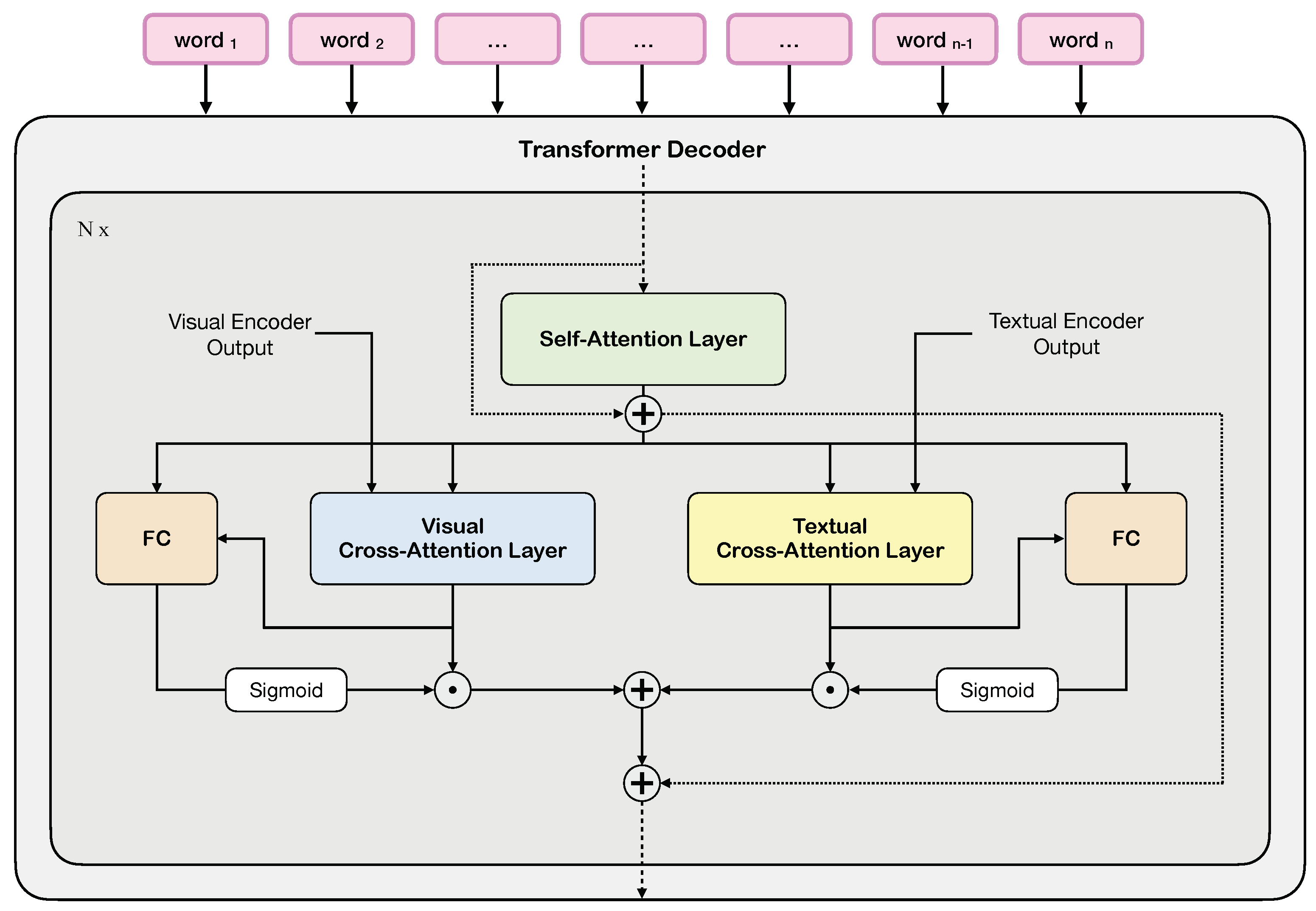

3.3. Knowledge Retrieval Architecture with Fully Attentive Gates

4. Experimental Evaluation

4.1. Dataset

4.2. Metrics

4.3. Implementation Details

4.4. Model Ablation and Analysis

4.5. Comparison to the State-of-the-Art

4.6. Computational Analysis

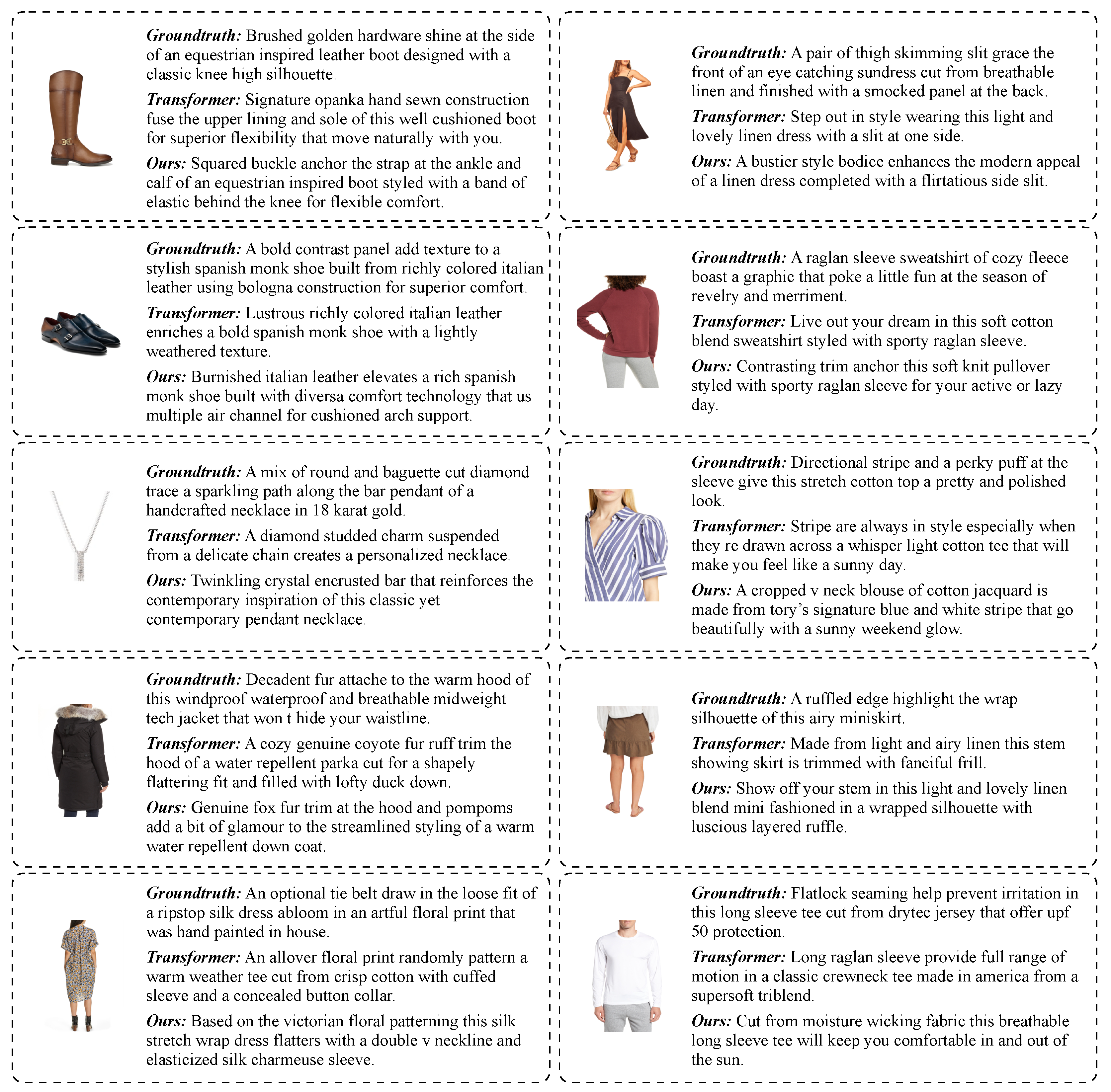

4.7. Qualitative Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ho, J.; Jain, A.; Abbeel, P. Denoising Diffusion Probabilistic Models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851. [Google Scholar]

- Zhen, L.; Hu, P.; Wang, X.; Peng, D. Deep supervised cross-modal retrieval. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 10394–10403. [Google Scholar]

- Messina, N.; Amato, G.; Falchi, F.; Gennaro, C.; Marchand-Maillet, S. Towards efficient cross-modal visual textual retrieval using transformer-encoder deep features. In Proceedings of the International Conference on Content-based Multimedia Indexing, Virtual, 28–30 June 2021; pp. 1–6. [Google Scholar]

- Han, X.; Wu, Z.; Wu, Z.; Yu, R.; Davis, L.S. VITON: An Image-based Virtual Try-On Network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7543–7552. [Google Scholar]

- Wang, B.; Zheng, H.; Liang, X.; Chen, Y.; Lin, L.; Yang, M. Toward characteristic-preserving image-based virtual try-on network. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 589–604. [Google Scholar]

- Fincato, M.; Landi, F.; Cornia, M.; Cesari, F.; Cucchiara, R. VITON-GT: An image-based virtual try-on model with geometric transformations. In Proceedings of the International Conference on Pattern Recognition, Virtual, 10–15 January 2021; pp. 7669–7676. [Google Scholar]

- Morelli, D.; Fincato, M.; Cornia, M.; Landi, F.; Cesari, F.; Cucchiara, R. Dress Code: High-Resolution Multi-Category Virtual Try-On. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 345–362. [Google Scholar]

- Zhao, B.; Feng, J.; Wu, X.; Yan, S. Memory-Augmented Attribute Manipulation Networks for Interactive Fashion Search. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1520–1528. [Google Scholar]

- Wu, H.; Gao, Y.; Guo, X.; Al-Halah, Z.; Rennie, S.; Grauman, K.; Feris, R. Fashion IQ: A New Dataset Towards Retrieving Images by Natural Language Feedback. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 11307–11317. [Google Scholar]

- Stefanini, M.; Cornia, M.; Baraldi, L.; Cascianelli, S.; Fiameni, G.; Cucchiara, R. From Show to Tell: A Survey on Deep Learning-based Image Captioning. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 539–559. [Google Scholar] [CrossRef] [PubMed]

- Vinyals, O.; Toshev, A.; Bengio, S.; Erhan, D. Show and tell: A neural image caption generator. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3156–3164. [Google Scholar]

- Xu, K.; Ba, J.; Kiros, R.; Cho, K.; Courville, A.; Salakhutdinov, R.; Zemel, R.S.; Bengio, Y. Show, attend and tell: Neural image caption generation with visual attention. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 2048–2057. [Google Scholar]

- Anderson, P.; He, X.; Buehler, C.; Teney, D.; Johnson, M.; Gould, S.; Zhang, L. Bottom-up and top-down attention for image captioning and visual question answering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6077–6086. [Google Scholar]

- Yang, X.; Zhang, H.; Jin, D.; Liu, Y.; Wu, C.H.; Tan, J.; Xie, D.; Wang, J.; Wang, X. Fashion Captioning: Towards Generating Accurate Descriptions with Semantic Rewards. arXiv 2020, arXiv:2008.02693v2. [Google Scholar]

- Zhu, S.; Urtasun, R.; Fidler, S.; Lin, D.; Change Loy, C. Be Your Own Prada: Fashion Synthesis With Structural Coherence. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1680–1688. [Google Scholar]

- Jiang, Y.; Yang, S.; Qju, H.; Wu, W.; Loy, C.C.; Liu, Z. Text2Human: Text-Driven Controllable Human Image Generation. ACM Trans. Graph. 2022, 41, 1–11. [Google Scholar] [CrossRef]

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 5–12 September 2014; pp. 740–755. [Google Scholar]

- Sharma, P.; Ding, N.; Goodman, S.; Soricut, R. Conceptual Captions: A Cleaned, Hypernymed, Image Alt-text Dataset For Automatic Image Captioning. In Proceedings of the Annual Meeting on Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; pp. 2556–2565. [Google Scholar]

- Socher, R.; Fei-Fei, L. Connecting modalities: Semi-supervised segmentation and annotation of images using unaligned text corpora. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 966–973. [Google Scholar]

- Yao, B.Z.; Yang, X.; Lin, L.; Lee, M.W.; Zhu, S.C. I2T: Image parsing to text description. IEEE 2010, 98, 1485–1508. [Google Scholar] [CrossRef]

- Rennie, S.J.; Marcheret, E.; Mroueh, Y.; Ross, J.; Goel, V. Self-Critical Sequence Training for Image Captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7008–7024. [Google Scholar]

- Qin, Y.; Du, J.; Zhang, Y.; Lu, H. Look Back and Predict Forward in Image Captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 8367–8375. [Google Scholar]

- Yao, T.; Pan, Y.; Li, Y.; Mei, T. Exploring Visual Relationship for Image Captioning. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 684–699. [Google Scholar]

- Aneja, J.; Deshpande, A.; Schwing, A.G. Convolutional image captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5561–5570. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 6000–6010. [Google Scholar]

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the Annual Conference of the North American Chapter of the Association for Computational Linguistics, New Orleans, LA, USA, 1–6 June 2018; pp. 4171–4186. [Google Scholar]

- Huang, L.; Wang, W.; Chen, J.; Wei, X.Y. Attention on Attention for Image Captioning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4634–4643. [Google Scholar]

- Pan, Y.; Yao, T.; Li, Y.; Mei, T. X-Linear Attention Networks for Image Captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 10971–10980. [Google Scholar]

- Herdade, S.; Kappeler, A.; Boakye, K.; Soares, J. Image Captioning: Transforming Objects into Words. Adv. Neural Inf. Process. Syst. 2019, 32, 11137–11147. [Google Scholar]

- Cornia, M.; Stefanini, M.; Baraldi, L.; Cucchiara, R. Meshed-Memory Transformer for Image Captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 10578–10587. [Google Scholar]

- Cornia, M.; Baraldi, L.; Cucchiara, R. SMArT: Training Shallow Memory-aware Transformers for Robotic Explainability. In Proceedings of the IEEE International Conference on Robotics and Automation, Virtual, 31 May–31 August 2020; pp. 1128–1134. [Google Scholar]

- Luo, Y.; Ji, J.; Sun, X.; Cao, L.; Wu, Y.; Huang, F.; Lin, C.W.; Ji, R. Dual-Level Collaborative Transformer for Image Captioning. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; pp. 2286–2293. [Google Scholar]

- Barraco, M.; Stefanini, M.; Cornia, M.; Cascianelli, S.; Baraldi, L.; Cucchiara, R. CaMEL: Mean Teacher Learning for Image Captioning. In Proceedings of the International Conference on Pattern Recognition, Montreal, QC, Canada, 21–25 August 2022; pp. 4087–4094. [Google Scholar]

- Nguyen, V.Q.; Suganuma, M.; Okatani, T. GRIT: Faster and Better Image captioning Transformer Using Dual Visual Features. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 167–184. [Google Scholar]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations, Virtual, 3–7 May 2021. [Google Scholar]

- Liu, W.; Chen, S.; Guo, L.; Zhu, X.; Liu, J. CPTR: Full Transformer Network for Image Captioning. arXiv 2021, arXiv:2101.10804. [Google Scholar]

- Cornia, M.; Baraldi, L.; Cucchiara, R. Explaining Transformer-based Image Captioning Models: An Empirical Analysis. AI Commun. 2021, 35, 111–129. [Google Scholar] [CrossRef]

- Li, X.; Yin, X.; Li, C.; Zhang, P.; Hu, X.; Zhang, L.; Wang, L.; Hu, H.; Dong, L.; Wei, F.; et al. Oscar: Object-Semantics Aligned Pre-training for Vision-Language Tasks. In Proceedings of the European Conference on Computer Vision, Virtual, 23–28 August 2020; pp. 121–137. [Google Scholar]

- Zhang, P.; Li, X.; Hu, X.; Yang, J.; Zhang, L.; Wang, L.; Choi, Y.; Gao, J. VinVL: Revisiting visual representations in vision-language models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 5579–5588. [Google Scholar]

- Li, J.; Li, D.; Xiong, C.; Hoi, S. BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation. In Proceedings of the International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022. [Google Scholar]

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models From Natural Language Supervision. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 8748–8763. [Google Scholar]

- Shen, S.; Li, L.H.; Tan, H.; Bansal, M.; Rohrbach, A.; Chang, K.W.; Yao, Z.; Keutzer, K. How Much Can CLIP Benefit Vision-and-Language Tasks? In Proceedings of the International Conference on Learning Representations, Virtual, 25–29 April 2022. [Google Scholar]

- Cornia, M.; Baraldi, L.; Fiameni, G.; Cucchiara, R. Universal Captioner: Inducing Content-Style Separation in Vision-and-Language Model Training. arXiv 2022, arXiv:2111.12727. [Google Scholar]

- Sarto, S.; Cornia, M.; Baraldi, L.; Cucchiara, R. Retrieval-Augmented Transformer for Image Captioning. In Proceedings of the International Conference on Content-based Multimedia Indexing, Graz, Austria, 14–16 September 2022; pp. 1–7. [Google Scholar]

- Li, Y.; Pan, Y.; Yao, T.; Mei, T. Comprehending and Ordering Semantics for Image Captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 17990–17999. [Google Scholar]

- Hadi Kiapour, M.; Han, X.; Lazebnik, S.; Berg, A.C.; Berg, T.L. Where to buy it: Matching street clothing photos in online shops. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3343–3351. [Google Scholar]

- Liu, Z.; Luo, P.; Qiu, S.; Wang, X.; Tang, X. DeepFashion: Powering robust clothes recognition and retrieval with rich annotations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1096–1104. [Google Scholar]

- Hsiao, W.L.; Grauman, K. Creating capsule wardrobes from fashion images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7161–7170. [Google Scholar]

- Cucurull, G.; Taslakian, P.; Vazquez, D. Context-aware visual compatibility prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 12617–12626. [Google Scholar]

- De Divitiis, L.; Becattini, F.; Baecchi, C.; Del Bimbo, A. Garment Recommendation with Memory Augmented Neural Networks. In Proceedings of the International Conference on Pattern Recognition, Virtual, 10–15 January 2021; pp. 282–295. [Google Scholar]

- De Divitiis, L.; Becattini, F.; Baecchi, C.; Bimbo, A.D. Disentangling Features for Fashion Recommendation. ACM Trans. Multimed. Comput. Commun. Appl. 2022. [Google Scholar] [CrossRef]

- Zhuge, M.; Gao, D.; Fan, D.P.; Jin, L.; Chen, B.; Zhou, H.; Qiu, M.; Shao, L. Kaleido-BERT: Vision-Language Pre-Training on Fashion Domain. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 12647–12657. [Google Scholar]

- Han, X.; Yu, L.; Zhu, X.; Zhang, L.; Song, Y.Z.; Xiang, T. FashionViL: Fashion-Focused Vision-and-Language Representation Learning. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 634–651. [Google Scholar]

- Mirchandani, S.; Yu, L.; Wang, M.; Sinha, A.; Jiang, W.; Xiang, T.; Zhang, N. FaD-VLP: Fashion Vision-and-Language Pre-training towards Unified Retrieval and Captioning. arXiv 2022, arXiv:2210.15028. [Google Scholar]

- Chia, P.J.; Attanasio, G.; Bianchi, F.; Terragni, S.; Magalhães, A.R.; Goncalves, D.; Greco, C.; Tagliabue, J. FashionCLIP: Connecting Language and Images for Product Representations. arXiv 2022, arXiv:2204.03972. [Google Scholar]

- Cai, C.; Yap, K.H.; Wang, S. Attribute Conditioned Fashion Image Captioning. In Proceedings of the International Conference on Image Processing, Bordeaux, France, 16–19 October 2022; pp. 1921–1925. [Google Scholar]

- Sennrich, R.; Haddow, B.; Birch, A. Neural Machine Translation of Rare Words with Subword Units. In Proceedings of the Annual Meeting on Association for Computational Linguistics, Berlin, Germany, 7–12 August 2016; pp. 1715–1725. [Google Scholar]

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the Annual Meeting on Association for Computational Linguistics, Philadelphia, PA, USA, 6–12 July 2002; pp. 311–318. [Google Scholar]

- Banerjee, S.; Lavie, A. METEOR: An automatic metric for MT evaluation with improved correlation with human judgments. In Proceedings of the Annual Meeting on Association for Computational Linguistics Workshops, Ann Arbor, MI, USA, 25–30 June 2005; pp. 65–72. [Google Scholar]

- Lin, C.Y. Rouge: A package for automatic evaluation of summaries. In Proceedings of the Annual Meeting on Association for Computational Linguistics Workshops, Barcelona, Spain, 21–26 July 2004; pp. 74–81. [Google Scholar]

- Vedantam, R.; Lawrence Zitnick, C.; Parikh, D. CIDEr: Consensus-based Image Description Evaluation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4566–4575. [Google Scholar]

- Wortsman, M.; Ilharco, G.; Kim, J.W.; Li, M.; Kornblith, S.; Roelofs, R.; Lopes, R.G.; Hajishirzi, H.; Farhadi, A.; Namkoong, H.; et al. Robust fine-tuning of zero-shot models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 7959–7971. [Google Scholar]

- Schuhmann, C.; Beaumont, R.; Vencu, R.; Gordon, C.; Wightman, R.; Cherti, M.; Coombes, T.; Katta, A.; Mullis, C.; Wortsman, M.; et al. LAION-5B: An open large-scale dataset for training next generation image–text models. arXiv 2022, arXiv:2210.08402. [Google Scholar]

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015. [Google Scholar]

- Johnson, J.; Douze, M.; Jégou, H. Billion-Scale Similarity Search with GPUs. IEEE Trans. Big Data 2019, 7, 535–547. [Google Scholar] [CrossRef]

| Validation Set | Test Set | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Retrieval | B-1 | B-4 | M | R | C | B-1 | B-4 | M | R | C | |

| CLIP ViT-L/14 | attributes | 26.2 | 10.0 | 10.9 | 21.4 | 75.6 | 24.8 | 8.1 | 10.2 | 20.2 | 63.1 |

| chunks | 26.1 | 10.4 | 10.8 | 21.3 | 78.9 | 25.0 | 8.3 | 10.2 | 20.3 | 67.0 | |

| OpenCLIP ViT-L/14 | attributes | 28.6 | 12.1 | 12.3 | 23.4 | 93.1 | 27.4 | 10.2 | 11.6 | 22.4 | 80.9 |

| chunks | 28.9 | 13.0 | 12.4 | 23.7 | 100.9 | 27.3 | 10.6 | 11.5 | 22.3 | 84.5 | |

| Validation Set | Test Set | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Retrieval | B-1 | B-4 | M | R | C | B-1 | B-4 | M | R | C | |

| CLIP ViT-B/32 | ✗ | 22.6 | 5.5 | 8.9 | 17.6 | 39.7 | 21.6 | 4.1 | 8.4 | 16.9 | 32.4 |

| ✓ | 24.5 | 9.2 | 9.9 | 19.9 | 69.3 | 23.5 | 7.5 | 9.3 | 18.9 | 57.3 | |

| (+1.9) | (+3.7) | (+1.0) | (+2.3) | (+29.6) | (+1.9) | (+3.4) | (+0.9) | (+2.0) | (+24.9) | ||

| OpenCLIP ViT-B/32 | ✓ | 24.5 | 7.4 | 10.0 | 19.9 | 55.2 | 23.2 | 5.2 | 9.3 | 18.2 | 40.8 |

| ✓ | 27.1 | 11.2 | 11.4 | 22.0 | 85.8 | 25.8 | 9.3 | 10.7 | 21.0 | 73.1 | |

| (+2.6) | (+3.8) | (+1.4) | (+2.1) | (+30.6) | (+2.6) | (+4.1) | (+1.4) | (+2.8) | (+32.3) | ||

| CLIP ViT-L/14 | ✗ | 24.1 | 7.1 | 9.8 | 19.2 | 52.3 | 22.7 | 5.0 | 9.0 | 18.0 | 39.3 |

| ✓ | 26.1 | 10.4 | 10.8 | 21.3 | 78.9 | 25.0 | 8.3 | 10.2 | 20.3 | 67.0 | |

| (+2.0) | (+3.3) | (+1.0) | (+2.1) | (+26.6) | (+2.3) | (+3.3) | (+1.2) | (+2.3) | (+27.7) | ||

| OpenCLIP ViT-L/14 | ✗ | 25.9 | 8.9 | 10.8 | 20.9 | 67.4 | 24.5 | 6.8 | 10.1 | 19.7 | 53.0 |

| ✓ | 28.9 | 13.0 | 12.4 | 23.7 | 100.9 | 27.3 | 10.6 | 11.5 | 22.3 | 84.5 | |

| (+3.0) | (+4.1) | (+1.6) | (+2.8) | (+33.5) | (+2.8) | (+3.8) | (+1.4) | (+2.6) | (+31.5) | ||

| B-1 | B-4 | M | R | C | mAP | |

|---|---|---|---|---|---|---|

| Show, Attend, and Tell [12] | - | 4.3 | 9.5 | 19.1 | 35.2 | 0.056 |

| Up–Down [13] | - | 4.4 | 9.7 | 19.6 | 36.9 | 0.058 |

| LBPF [22] | - | 4.5 | 9.5 | 19.1 | 36.4 | 0.055 |

| ORT [29] | - | 4.2 | 10.2 | 19.9 | 36.7 | 0.061 |

| SRFC [14] | - | 4.4 | 9.8 | 20.2 | 35.6 | 0.058 |

| SCST [21] | - | 5.6 | 11.8 | 22.0 | 39.7 | 0.080 |

| SRFC (RL-fine-tuned) [14] | - | 6.8 | 13.2 | 24.2 | 42.1 | 0.095 |

| Transformer | 24.5 | 6.8 | 10.1 | 19.7 | 53.0 | 0.238 |

| Transformer [30] | 24.7 | 6.8 | 10.4 | 19.9 | 53.3 | 0.237 |

| CaMEL [33] | 25.0 | 7.0 | 10.7 | 20.4 | 55.0 | 0.241 |

| Ours | 27.3 | 10.6 | 11.5 | 22.3 | 84.5 | 0.248 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Moratelli, N.; Barraco, M.; Morelli, D.; Cornia, M.; Baraldi, L.; Cucchiara, R. Fashion-Oriented Image Captioning with External Knowledge Retrieval and Fully Attentive Gates. Sensors 2023, 23, 1286. https://doi.org/10.3390/s23031286

Moratelli N, Barraco M, Morelli D, Cornia M, Baraldi L, Cucchiara R. Fashion-Oriented Image Captioning with External Knowledge Retrieval and Fully Attentive Gates. Sensors. 2023; 23(3):1286. https://doi.org/10.3390/s23031286

Chicago/Turabian StyleMoratelli, Nicholas, Manuele Barraco, Davide Morelli, Marcella Cornia, Lorenzo Baraldi, and Rita Cucchiara. 2023. "Fashion-Oriented Image Captioning with External Knowledge Retrieval and Fully Attentive Gates" Sensors 23, no. 3: 1286. https://doi.org/10.3390/s23031286

APA StyleMoratelli, N., Barraco, M., Morelli, D., Cornia, M., Baraldi, L., & Cucchiara, R. (2023). Fashion-Oriented Image Captioning with External Knowledge Retrieval and Fully Attentive Gates. Sensors, 23(3), 1286. https://doi.org/10.3390/s23031286