Edge-Cloud Collaborative Defense against Backdoor Attacks in Federated Learning

Abstract

1. Introduction

- At the edge, we remove the correlation between backdoor samples and the attack target, then repair the model accuracy through self-distillation, so that the edge intelligent service resists backdoor attacks.

- Our framework is better suited to edge collaborative computing scenarios for intelligent services.

- We propose a layered backdoor defense so that the collaboratively trained model is obtained more safely.
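
The edge-side idea in the first contribution can be illustrated with a loss-thresholded objective in the style of anti-backdoor learning [37]. The sketch below is an assumption about the mechanism, not the authors' implementation: the function names, the threshold `gamma`, the isolation `ratio`, and the toy loss values are all hypothetical. Samples whose loss falls below the threshold receive gradient ascent, which discourages the model from fitting easily learned (possibly poisoned) samples, and the lowest-loss fraction is set aside as a suspected backdoor set.

```python
import numpy as np


def lga_loss(per_sample_loss, gamma):
    """Loss-thresholded objective: mean of sign(loss - gamma) * loss.

    Samples with loss below gamma contribute with a negative sign,
    so minimising this objective performs gradient ascent on them.
    """
    per_sample_loss = np.asarray(per_sample_loss, dtype=float)
    return float(np.mean(np.sign(per_sample_loss - gamma) * per_sample_loss))


def isolate_lowest_loss(per_sample_loss, ratio):
    """Return sorted indices of the `ratio` fraction of samples with the smallest loss."""
    per_sample_loss = np.asarray(per_sample_loss, dtype=float)
    k = int(round(ratio * per_sample_loss.size))
    lowest = np.argsort(per_sample_loss, kind="stable")[:k]
    return np.sort(lowest)


# Hypothetical per-sample losses after a few local epochs.
losses = np.array([0.05, 1.2, 0.9, 0.02, 1.5, 0.03])
suspected = isolate_lowest_loss(losses, ratio=0.5)
objective = lga_loss(losses, gamma=0.5)
```

In this toy run the three samples with near-zero loss are the ones isolated; in practice the threshold and ratio would need tuning per dataset.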

2. Related Work

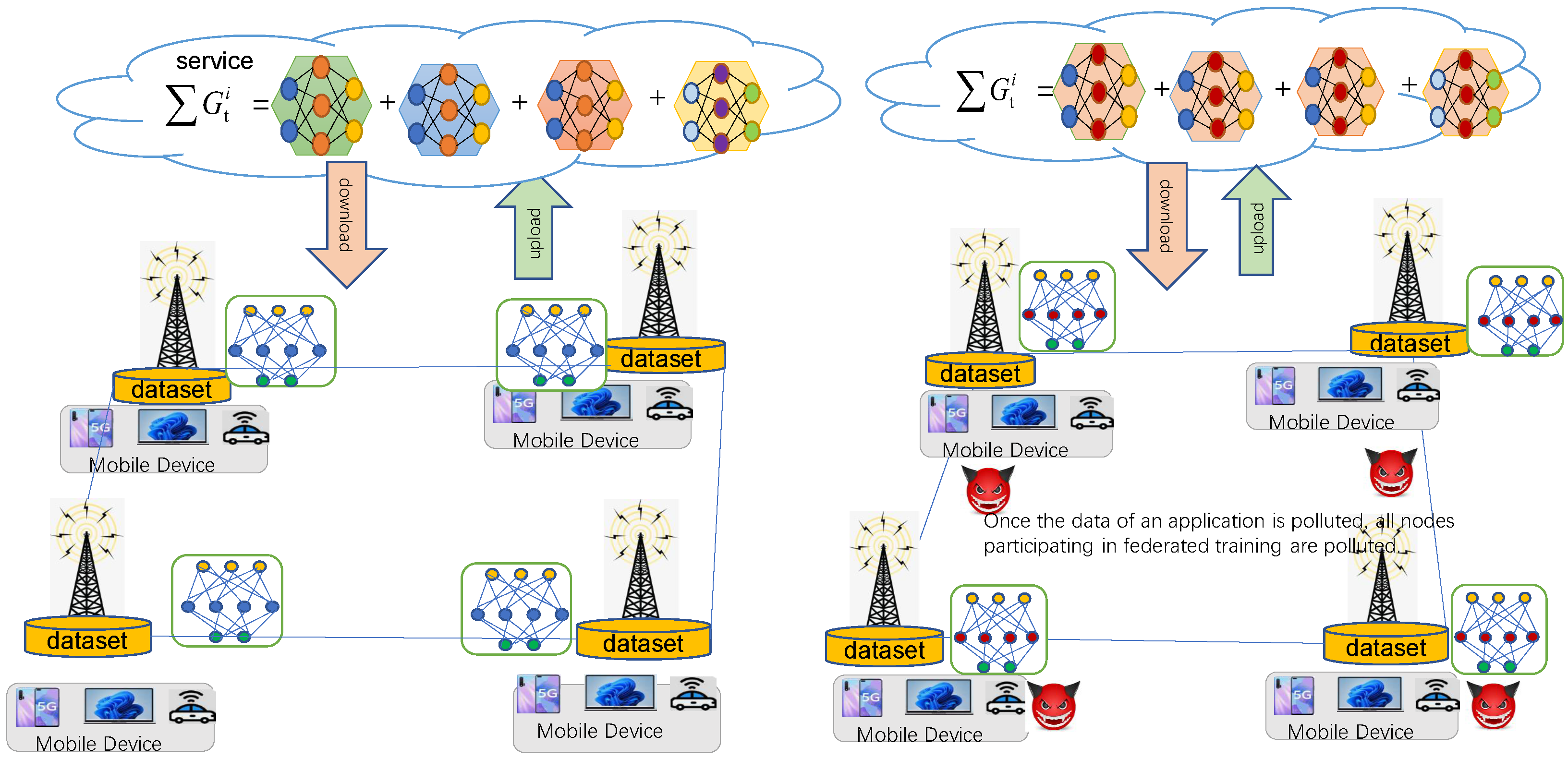

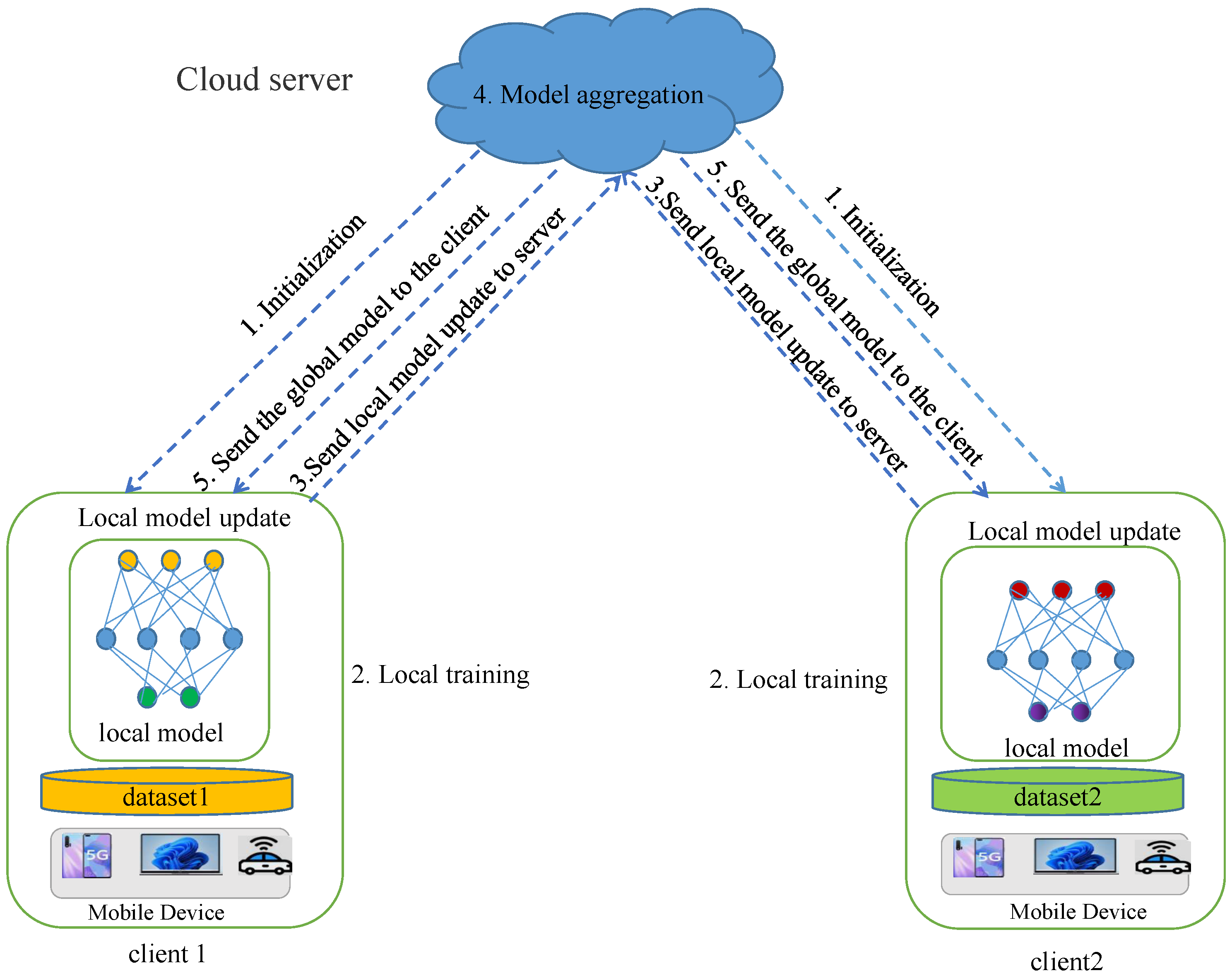

2.1. Federated Learning

2.2. FL Backdoor Attack

2.3. FL Backdoor Defense

3. Problem Setting and Objectives

3.1. Problem Definition

3.2. Threat Model

4. Proposed Method

4.1. Overview

4.2. Backdoor Defense Based on Edge Computing Services

4.3. Cloud Service Defense

5. Experimental Evaluation

5.1. Environment Settings

5.1.1. Datasets and Models

5.1.2. Attack Details

5.1.3. Evaluation Metrics

5.1.4. Attack Scenario

5.1.5. Defense Methods

5.2. Experimental Results

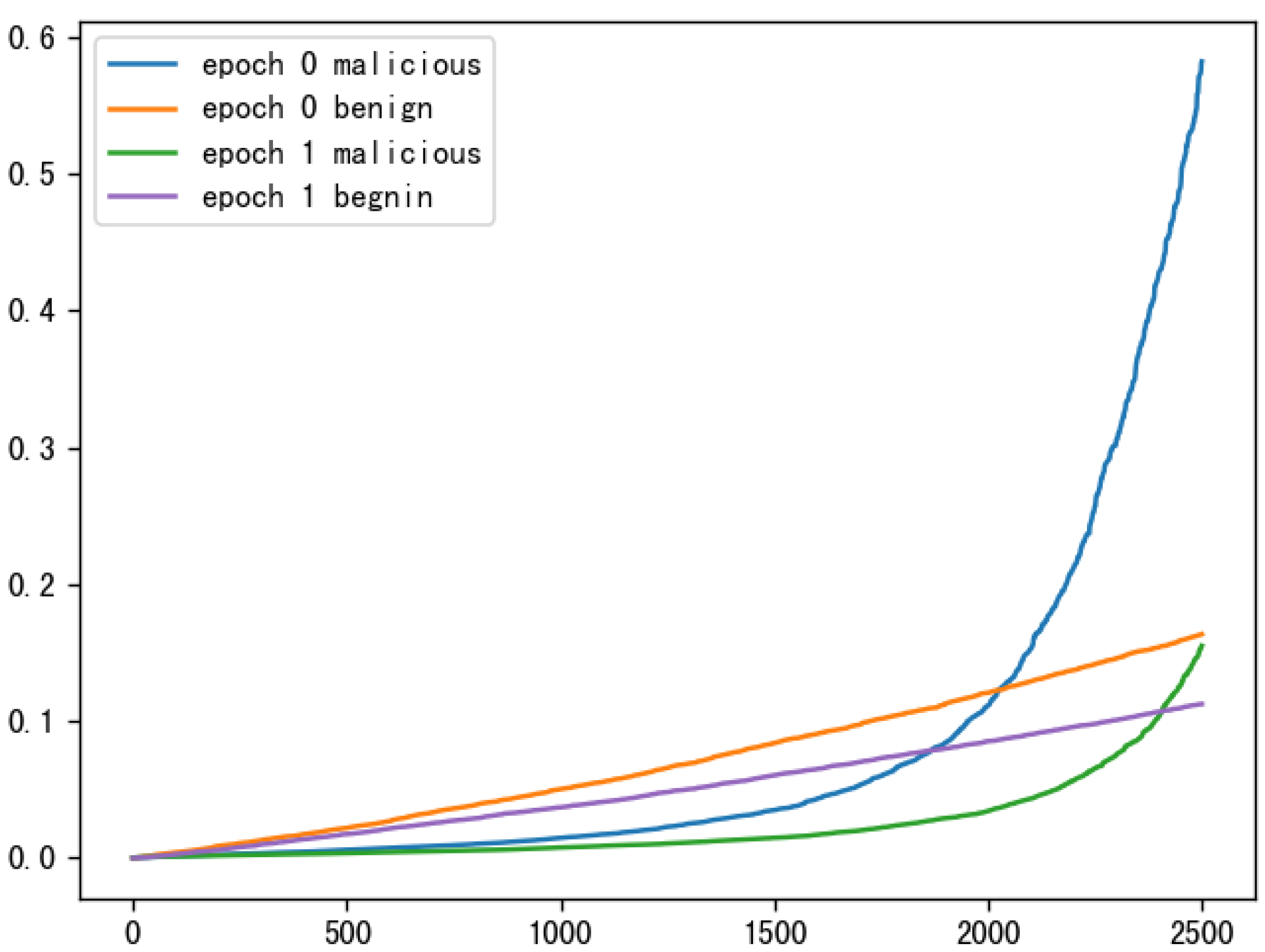

5.2.1. Effectiveness of Layered Defense

5.2.2. The Necessity of Edge Model Self-Distillation

5.2.3. The Necessity of Cloud Server Defense

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Notation | Meaning |
|---|---|
| N | Number of participating IIoT applications |
| D | Total number of training samples |
| | Training data set Di of each edge node |
| | Data set size of edge node |
| L | Total training samples |
| | Initial global model parameters |
| t | t-th round of the federated training process |
| m | Number of applications selected in one round |
| | t-th round global model |
| | The edge node trains the local data on the model of round t to obtain the model update of round |
| | th round global model |
| a | Learning rate of the global model |
| | Incorrect prediction chosen by the adversary |
| x | Input |
| G | Clean model |
| | Poisoned model |
| | Trigger set |
| | Correct prediction f |
| | Incorrect predictions f |
| | Accuracy on both the main task and the backdoor task |
| | Evades anomaly detection |
| | Trade-off hyperparameter |
| | Adversarial loss |
| | Target label |
| | Normal training loss |
| y | Real label |
| | Difference between clean and backdoor samples |
| | Backdoor learning process that minimizes the loss of clean samples and maximizes the loss of backdoor samples |
| t | Current training epoch |
| | LGA's loss threshold |
| T | Total number of training iterations |
| | Isolated backdoor set |
| | Local edge model |
| | Poisoned local edge model |
| | Activation output of the m-th layer of the network |
| | Channel, height, and width |
| g | Mapping function |
| | Minimize distillation losses |
| | Construction of attention map |
| | L2 norm loss |
| s | True classification |
| b | Existence of the category predicted by the neural network |
| | Attention distillation loss |
| | Overall training loss |
| | Binomial cross entropy loss function |
| | Adjusts and balances the three losses |
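
The attention-related entries above (activation output of a layer, the mapping function g, the attention map, and the attention distillation loss) can be made concrete with a small NumPy sketch. It assumes the commonly used attention-transfer formulation, in which g sums the p-th power of absolute activations over the channel axis and the loss is the L2 distance between L2-normalised maps; the exact form used in this paper is not recoverable from the table, so the exponent `p`, the stabiliser `eps`, and all function names are hypothetical.

```python
import numpy as np


def attention_map(activation, p=2):
    """Map a (C, H, W) activation to an (H, W) attention map.

    Assumed mapping: sum over channels of |A|^p.
    """
    activation = np.asarray(activation, dtype=float)
    return np.sum(np.abs(activation) ** p, axis=0)


def attention_distillation_loss(teacher_act, student_act, p=2, eps=1e-12):
    """L2 distance between the L2-normalised, flattened attention maps."""
    t = attention_map(teacher_act, p).ravel()
    s = attention_map(student_act, p).ravel()
    t = t / (np.linalg.norm(t) + eps)
    s = s / (np.linalg.norm(s) + eps)
    return float(np.linalg.norm(t - s))


# Toy activations with C=4, H=3, W=3 (random values, for illustration only).
rng = np.random.default_rng(0)
teacher = rng.normal(size=(4, 3, 3))
student = rng.normal(size=(4, 3, 3))

same = attention_distillation_loss(teacher, teacher)
scaled = attention_distillation_loss(teacher, 2.0 * teacher)
other = attention_distillation_loss(teacher, student)
```

Because the maps are normalised before comparison, the loss ignores a uniform rescaling of the activations and penalises only differences in where the two networks attend.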

References

1. Reus-Muns, G.; Jaisinghani, D.; Sankhe, K.; Chowdhury, K.R. Trust in 5G open RANs through machine learning: RF fingerprinting on the POWDER PAWR platform. In Proceedings of the GLOBECOM 2020-2020 IEEE Global Communications Conference, Taipei, Taiwan, 7–11 December 2020; Volume 8, pp. 1–6.

2. Gul, O.M.; Kulhandjian, M.; Kantarci, B.; Touazi, A.; Ellement, C.; D’Amours, C. Fine-grained Augmentation for RF Fingerprinting under Impaired Channels. In Proceedings of the 2022 IEEE 27th International Workshop on Computer Aided Modeling and Design of Communication Links and Networks (CAMAD), Paris, France, 2–3 November 2022; Volume 8, pp. 115–120.

3. Comert, C.; Kulhandjian, M.; Gul, O.M.; Touazi, A.; Ellement, C.; Kantarci, B.; D’Amours, C. Analysis of Augmentation Methods for RF Fingerprinting under Impaired Channels. In Proceedings of the 2022 ACM Workshop on Wireless Security and Machine Learning (WiseML ’22), San Antonio, TX, USA, 19 May 2022; Volume 8, pp. 3–8.

4. Alwarafy, A.; Al-Thelaya, K.A.; Abdallah, M.; Schneider, J.; Hamdi, M. A Survey on Security and Privacy Issues in Edge-Computing-Assisted Internet of Things. IEEE Access 2021, 8, 4004–4022.

5. Sun, H.; Tan, Y.-a.; Zhu, L.; Zhang, Q.; Li, Y.; Wu, S. A fine-grained and traceable multidomain secure data-sharing model for intelligent terminals in edge-cloud collaboration scenarios. Int. J. Intell. Syst. 2022, 37, 2543–2566.

6. Maamar, Z.; Baker, T.; Faci, N.; Ugljanin, E.; Khafajiy, M.A.; Burégio, V. Towards a seamless coordination of cloud and fog: Illustration through the internet-of-things. In Proceedings of the 34th ACM/SIGAPP Symposium on Applied Computing, Limassol, Cyprus, 8–12 April 2019; pp. 2008–2015.

7. Yao, J.; Zhang, S.; Yao, Y.; Wang, F.; Ma, J.; Zhang, J.; Chu, Y.; Ji, L.; Jia, K.; Shen, T.; et al. Edge-Cloud Polarization and Collaboration: A Comprehensive Survey for AI. IEEE Trans. Knowl. Data Eng. 2022, 3, 5.

8. Wang, J.; He, D.; Castiglione, A.; Gupta, B.B.; Karuppiah, M.; Wu, L. PCNNCEC: Efficient and Privacy-Preserving Convolutional Neural Network Inference Based on Cloud-Edge-Client Collaboration. IEEE Trans. Netw. Sci. Eng. 2022.

9. Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated machine learning: Concept and applications. ACM Trans. Intell. Syst. Technol. 2019, 10, 1–19.

10. Sun, H.; Li, S.; Yu, F.R.; Qi, Q.; Wang, J.; Liao, J. Toward communication-efficient federated learning in the Internet of Things with edge computing. IEEE Internet Things J. 2020, 7, 11053–11067.

11. Ye, Y.; Li, S.; Liu, F.; Tang, Y.; Hu, W. EdgeFed: Optimized federated learning based on edge computing. IEEE Access 2020, 8, 209191–209198.

12. Wang, Y.; Tan, Y.-a.; Baker, T.; Kumar, N.; Zhang, Q. Deep Fusion: Crafting Transferable Adversarial Examples and Improving Robustness of Industrial Artificial Intelligence of Things. IEEE Trans. Ind. Inform. 2022, 37, 1.

13. Yang, J.; Zheng, J.; Baker, T.; Tang, S.; Tan, Y.-a.; Zhang, Q. Clean-label poisoning attacks on federated learning for IoT. Expert Syst. 2022, 37, 9290–9308.

14. Bagdasaryan, E.; Veit, A.; Hua, Y.; Estrin, D.; Shmatikov, V. How to backdoor federated learning. Int. Conf. Artif. Intell. Stat. 2020, 2938–2948.

15. Suresh, A.T.; McMahan, B.; Kairouz, P.; Sun, Z. Can you really backdoor federated learning? arXiv 2019, arXiv:1911.07963.

16. Zeng, Y.; Pan, M.; Just, H.A.; Lyu, L.; Qiu, M.; Jia, R. NARCISSUS: A Practical Clean-Label Backdoor Attack with Limited Information. arXiv 2022, arXiv:2204.05255.

17. Zhang, Q.; Ma, W.; Wang, Y.; Zhang, Y.; Shi, Z.; Li, Y. Backdoor Attacks on Image Classification Models in Deep Neural Networks. Chin. J. Electron. 2022, 31, 199–212.

18. Andreina, S.; Marson, G.A.; Möllering, H.; Karame, G. Baffle: Backdoor detection via feedback-based federated learning. In Proceedings of the 2021 IEEE 41st International Conference on Distributed Computing Systems (ICDCS), Washington, DC, USA, 7–10 July 2021; Volume 1, pp. 163–169.

19. Zhao, Y.; Xu, K.; Wang, H.; Li, B.; Jia, R. Stability-based analysis and defense against backdoor attacks on edge computing services. IEEE Netw. 2021, 1, 163–169.

20. Nguyen, T.D.; Rieger, P.; Chen, H.; Yalame, H.; Möllering, H.; Fereidooni, H.; Marchal, S.; Miettinen, M.; Mirhoseini, A.; Zeitouni, S.; et al. FLAME: Taming Backdoors in Federated Learning. In Proceedings of the 31st USENIX Security Symposium (USENIX Security 22), Boston, MA, USA, 10–12 August 2022; pp. 1415–1432.

21. Jebreel, N.; Domingo-Ferrer, J. FL-Defender: Combating Targeted Attacks in Federated Learning. arXiv 2022, arXiv:2207.00872.

22. Rieger, P.; Nguyen, T.D.; Miettinen, M.; Sadeghi, A.-R. Deepsight: Mitigating backdoor attacks in federated learning through deep model inspection. arXiv 2022, arXiv:2201.00763.

23. Wang, Y.; Shi, H.; Min, R.; Wu, R.; Liang, S.; Wu, Y.; Liang, D.; Liu, A. Adaptive Perturbation Generation for Multiple Backdoors Detection. arXiv 2022, arXiv:2209.05244.

24. McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. Artif. Intell. Stat. 2017, 1, 1273–1282.

25. Zhang, Z.; Yang, R.; Zhang, X.; Li, C.; Huang, Y.; Yang, L. Backdoor Federated Learning-Based mmWave Beam Selection. IEEE Trans. Commun. 2022, 70, 6563–6578.

26. Li, Y.; Jiang, Y.; Li, Z.; Xia, S.-T. Backdoor learning: A survey. IEEE Trans. Neural Netw. Learn. Syst. 2022, 1, 1–18.

27. Xie, C.; Huang, K.; Chen, P.-Y.; Li, B. Dba: Distributed backdoor attacks against federated learning. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

28. Fung, C.; Yoon, C.J.M.; Beschastnikh, I. Mitigating sybils in federated learning poisoning. arXiv 2018, arXiv:1808.04866.

29. Goldblum, M.; Tsipras, D.; Xie, C.; Chen, X.; Schwarzschild, A.; Song, D.; Madry, A.; Li, B.; Goldstein, T. Dataset security for machine learning: Data poisoning, backdoor attacks, and defenses. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 1, 1.

30. Muñoz-González, L.; Co, K.T.; Lupu, E.C. Byzantine-robust federated machine learning through adaptive model averaging. arXiv 2019, arXiv:1909.05125.

31. Hou, B.; Gao, J.; Guo, X.; Baker, T.; Zhang, Y.; Wen, Y.; Liu, Z. Mitigating the backdoor attack by federated filters for industrial IoT applications. IEEE Trans. Ind. Inform. 2021, 18, 3562–3571.

32. Nguyen, T.D.; Rieger, P.; Yalame, H.; Möllering, H.; Fereidooni, H.; Marchal, S.; Miettinen, M.; Mirhoseini, A.; Sadeghi, A.-R.; Schneider, T.; et al. Flguard: Secure and private federated learning. arXiv 2021, arXiv:2101.02281.

33. Gao, J.; Zhang, B.; Guo, X.; Baker, T.; Li, M.; Liu, Z. Secure Partial Aggregation Making Federated Learning More Robust for Industry 4 Applications. IEEE Trans. Ind. Inform. 2022, 18, 6340–6348.

34. Mi, Y.; Guan, J.; Zhou, S. ARIBA: Towards Accurate and Robust Identification of Backdoor Attacks in Federated Learning. arXiv 2022, arXiv:2202.04311.

35. Wu, C.; Zhu, S.; Mitra, P. Federated Unlearning with Knowledge Distillation. arXiv 2022, arXiv:2201.09441.

36. Zhao, C.; Wen, Y.; Li, S.; Liu, F.; Meng, D. Federatedreverse: A detection and defense method against backdoor attacks in federated learning. In Proceedings of the 2021 ACM Workshop on Information Hiding and Multimedia Security, Virtual, 22–25 June 2021; pp. 51–62.

37. Li, Y.; Lyu, X.; Koren, N.; Lyu, L.; Li, B.; Ma, X. Anti-backdoor learning: Training clean models on poisoned data. Adv. Neural Inf. Process. Syst. 2021, 34, 14900–14912.

38. An, S.; Liao, Q.; Lu, Z.; Xue, J.-H. Efficient Semantic Segmentation via Self-Attention and Self-Distillation. IEEE Trans. Intell. Transp. Syst. 2022, 23, 15256–15266.

39. Jebreel, N.M.; Domingo-Ferrer, J.; Sánchez, D.; Blanco-Justicia, A. Defending against the label-flipping attack in federated learning. arXiv 2022, arXiv:2207.01982.

40. Fung, C.; Yoon, C.J.M.; Beschastnikh, I. Evil vs. evil: Using adversarial examples to against backdoor attack in federated learning. Multimed. Syst. 2022, 1, 1–16.

41. Lu, S.; Li, R.; Liu, W.; Chen, X. Defense against backdoor attack in federated learning. Comput. Secur. 2022, 121, 102819.

| Dataset | Number of Labels | Training Samples | Testing Samples | Model Structure |
|---|---|---|---|---|
| CIFAR-10 | 10 | 50,000 | 10,000 | ResNet-18 |

| Dataset | Attack Type | No Defense ASR | No Defense CA | FL-Defender [21] ASR | FL-Defender [21] CA | FLPA-SM [41] ASR | FLPA-SM [41] CA | Layered Defense (Ours) ASR | Layered Defense (Ours) CA |
|---|---|---|---|---|---|---|---|---|---|
| CIFAR-10 | No attack | 0% | 82.16% | 0% | 80.0% | 0% | 80.01% | 0% | 82.16% |
| CIFAR-10 | Label flip attack [39] | 100% | 74.35% | 6.72% | 76.1% | 6.32% | 52.31% | 4.98% | 80.01% |
| CIFAR-10 | Clean label attack [13] | 81.67% | 88.43% | 68.22% | 64.71% | 82.06% | 63.55% | 8.22% | 75.98% |
| CIFAR-10 | Constrain-and-scale [14] | 99.56% | 81.85% | 63.45% | 72.34% | 81.56% | 52.37% | 3.44% | 80.26% |

| Dataset | Attack Type | No Defense ASR | No Defense CA | Edge Defense ASR | Edge Defense CA | Edge Distillation ASR | Edge Distillation CA |
|---|---|---|---|---|---|---|---|
| CIFAR-10 | No attack | 0% | 82.16% | 0% | 78.31% | 0% | 82.01% |
| CIFAR-10 | Label flip attack [39] | 99.3% | 74.35% | 6.31% | 58.90% | 6.33% | 80.22% |
| CIFAR-10 | Clean label attack [13] | 81.67% | 88.43% | 8.77% | 55.33% | 10.96% | 79.89% |
| CIFAR-10 | Constrain-and-scale [14] | 99.56% | 81.85% | 2.71% | 50.32% | 5.44% | 81.33% |

| Dataset | Attack Type | Server Defense ASR | Server Defense CA | Server Defense Distillation ASR | Server Defense Distillation CA |
|---|---|---|---|---|---|
| CIFAR-10 | No attack | 0% | 82.16% | - | - |
| CIFAR-10 | Constrain-and-scale [14] | 2.64% | 79.37% | 3.49% | 81.68% |
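
The ASR and CA columns in the tables above can be read with the following sketch. It assumes the usual definitions, which the extracted text does not spell out: CA (clean accuracy) is the fraction of clean test samples classified correctly, and ASR (attack success rate) is the fraction of trigger-carrying samples, excluding those whose true label already equals the target, that are classified as the adversary's target label. The function names and the toy predictions are hypothetical.

```python
import numpy as np


def clean_accuracy(pred_clean, true_labels):
    """Fraction of clean samples whose prediction matches the true label."""
    pred_clean = np.asarray(pred_clean)
    true_labels = np.asarray(true_labels)
    return float(np.mean(pred_clean == true_labels))


def attack_success_rate(pred_triggered, true_labels, target_label):
    """Fraction of triggered samples (true label != target) predicted as the target."""
    pred_triggered = np.asarray(pred_triggered)
    true_labels = np.asarray(true_labels)
    eligible = true_labels != target_label
    if not eligible.any():
        return 0.0
    return float(np.mean(pred_triggered[eligible] == target_label))


# Toy example: six test samples, adversary's target label is 0.
true_labels = np.array([0, 1, 2, 3, 1, 2])
pred_clean = np.array([0, 1, 2, 3, 1, 0])       # predictions on clean inputs
pred_triggered = np.array([0, 0, 2, 0, 1, 0])   # predictions on inputs with the trigger

ca = clean_accuracy(pred_clean, true_labels)
asr = attack_success_rate(pred_triggered, true_labels, target_label=0)
```

Under these definitions a good defense keeps CA close to the no-attack baseline while driving ASR toward zero.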

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, J.; Zheng, J.; Wang, H.; Li, J.; Sun, H.; Han, W.; Jiang, N.; Tan, Y.-A. Edge-Cloud Collaborative Defense against Backdoor Attacks in Federated Learning. Sensors 2023, 23, 1052. https://doi.org/10.3390/s23031052
