Communication between Autonomous Vehicles and Pedestrians: An Experimental Study Using Virtual Reality

Abstract

1. Introduction

2. Literature Review

3. Method

- Set up a 360° space using an existing urban designated pedestrian crossing.

- Set up the VR environment and create the four scenarios.

- Give instructions to the subjects participating in the experiment.

- Show the scenarios to the subjects one by one.

- Administer the questionnaire survey.

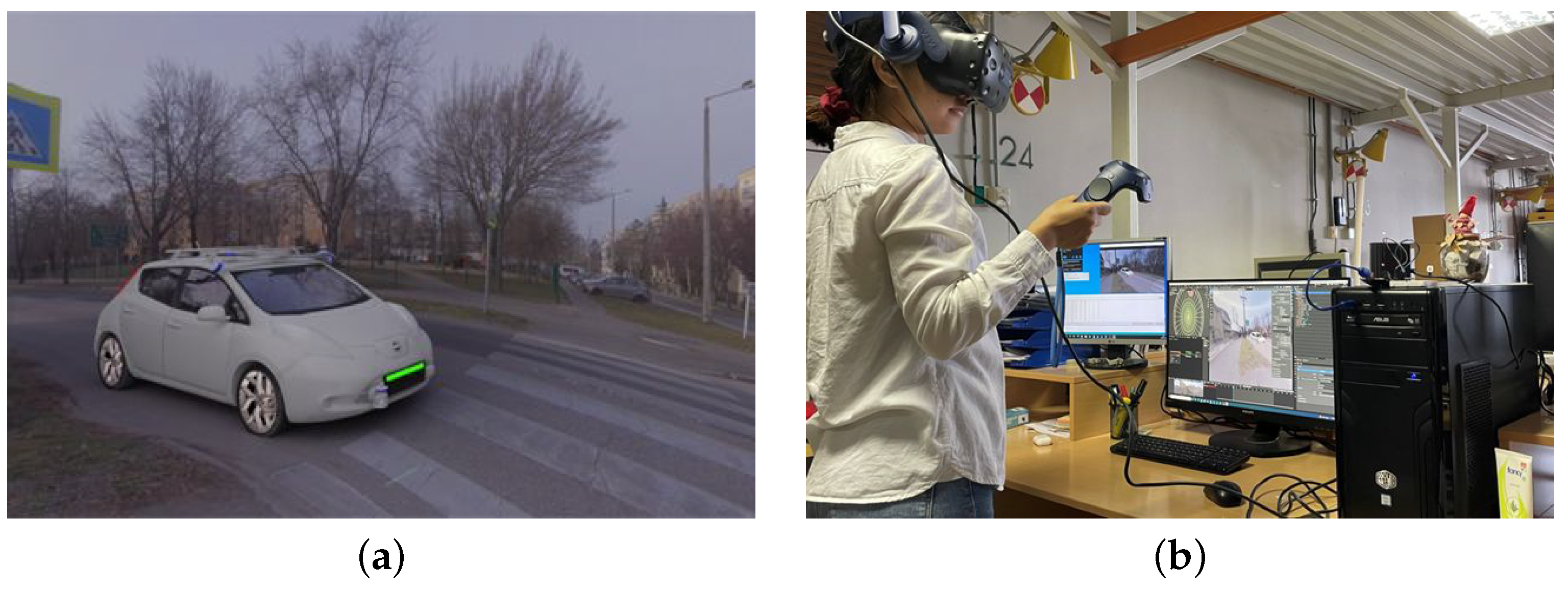

3.1. VR Space

3.2. Scenarios

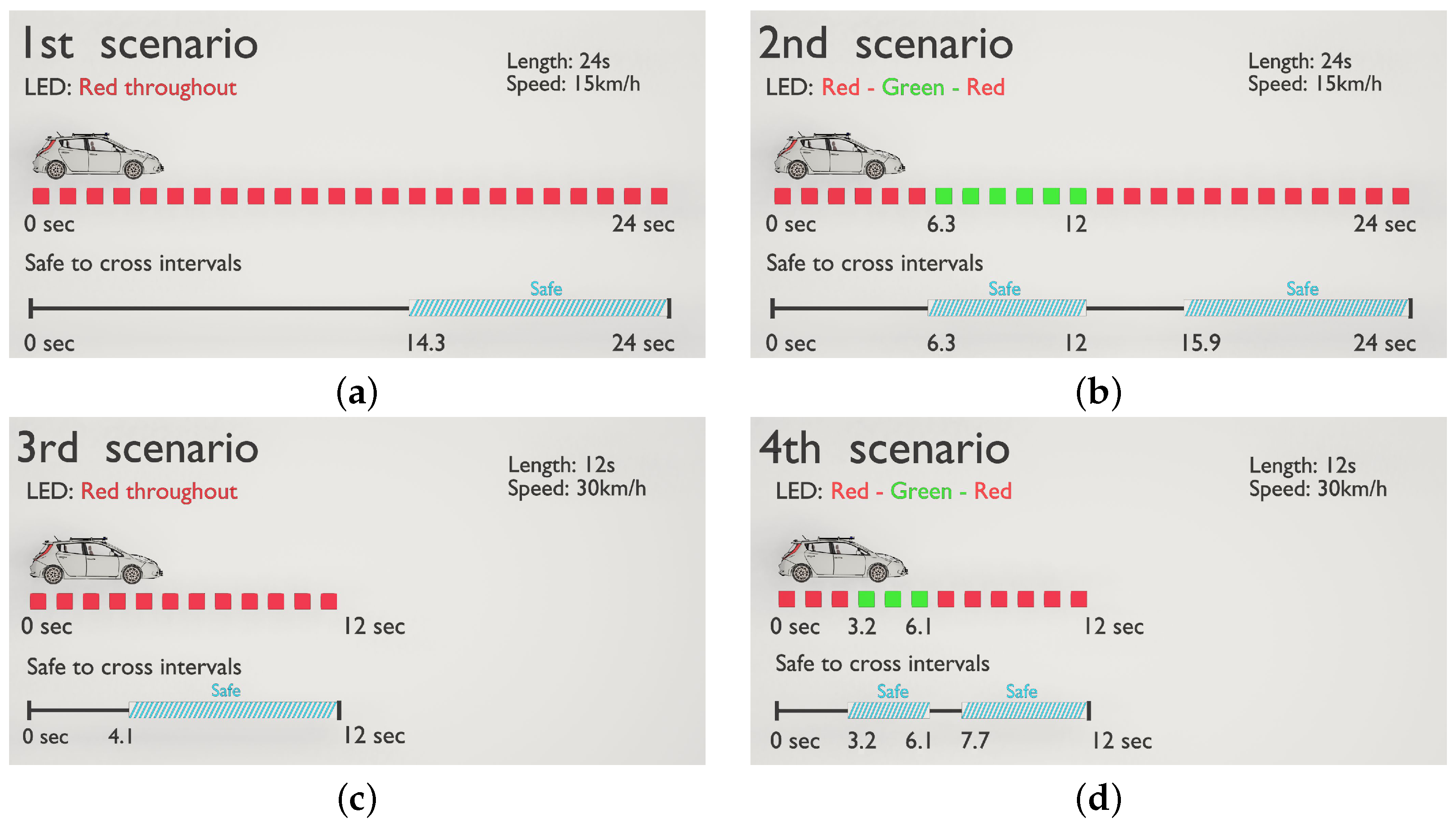

- Scenario 1: This scenario was 24 s long. The AV approached the pedestrian crossing at 15 km/h, and the LED signal was red throughout. The AV did not stop to yield; it was safe to cross only after the vehicle had left the crossing, at 14.3 s.

- Scenario 2: This scenario was 24 s long. The AV approached the pedestrian crossing at 15 km/h with a red LED signal. At 6.3 s, the vehicle stopped at the pedestrian crossing and the LED signal turned green. At 12 s, the LED turned red and the AV started to move again. It was safe to cross between 6.3 and 12 s, and technically also after the back of the vehicle had left the pedestrian crossing, at 15.9 s.

- Scenario 3: This scenario is similar to Scenario 1, except that it was 12 s long and the vehicle's speed was 30 km/h; the LED signal was red throughout. It was safe to cross after 4.1 s, when the vehicle had left the area of the pedestrian crossing.

- Scenario 4: This scenario is similar to Scenario 2, except that it was 12 s long and the AV's speed was 30 km/h. At 3.2 s, the vehicle stopped at the pedestrian crossing and the LED signal turned green. At 6.1 s, the LED turned red and the AV started to move again. It was safe to cross between these two instants, and also after the back of the vehicle had left the crossing, at 7.7 s.
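The timings above partition each scenario into phases (approach, stopped with a green LED, driving over the crossing, cleared). The sketch below encodes those phases so that a crossing time can be labelled and checked against the stated safe windows. It is an illustration only: the `Scenario` class, the phase names, and the half-open interval boundaries are assumptions of this sketch, not part of the authors' setup.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Scenario:
    """Timing of one VR scenario in seconds, transcribed from the descriptions above."""
    duration: float           # total length of the scenario
    stop: Optional[float]     # AV stops and LED turns green (None: AV never yields)
    restart: Optional[float]  # LED turns red and AV moves off again
    clear: float              # back of the AV has left the crossing


SCENARIOS = {
    1: Scenario(duration=24.0, stop=None, restart=None, clear=14.3),
    2: Scenario(duration=24.0, stop=6.3, restart=12.0, clear=15.9),
    3: Scenario(duration=12.0, stop=None, restart=None, clear=4.1),
    4: Scenario(duration=12.0, stop=3.2, restart=6.1, clear=7.7),
}


def phase(s: Scenario, t: float) -> str:
    """Return the (hypothetical) phase label at time t, using half-open intervals [a, b)."""
    if not 0.0 <= t <= s.duration:
        raise ValueError(f"t={t} s is outside the {s.duration} s scenario")
    if s.stop is None or s.restart is None:
        # Non-yielding scenarios: the AV approaches and passes, then the road is clear.
        return "approach" if t < s.clear else "cleared"
    if t < s.stop:
        return "approach"
    if t < s.restart:
        return "stopped"
    if t < s.clear:
        return "driving_over"
    return "cleared"


def is_safe(s: Scenario, t: float) -> bool:
    """Safe per the descriptions: while the AV waits with a green LED, or once it has cleared."""
    return phase(s, t) in {"stopped", "cleared"}
```

For example, `phase(SCENARIOS[4], 5.0)` returns `"stopped"`, while `phase(SCENARIOS[2], 5.0)` returns `"approach"` because the slower AV has not yet reached the crossing.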

3.3. Survey

- I cannot imagine relying on this kind of equipment; only if the car has stopped am I willing to cross (limited trust in LED light display).

- After some time getting familiar with the LED, I think I would rely on it and cross the road when seeing the green light (moderate trust in LED light display).

- The message was clear, I would cross the road without hesitating (trust in LED light display).

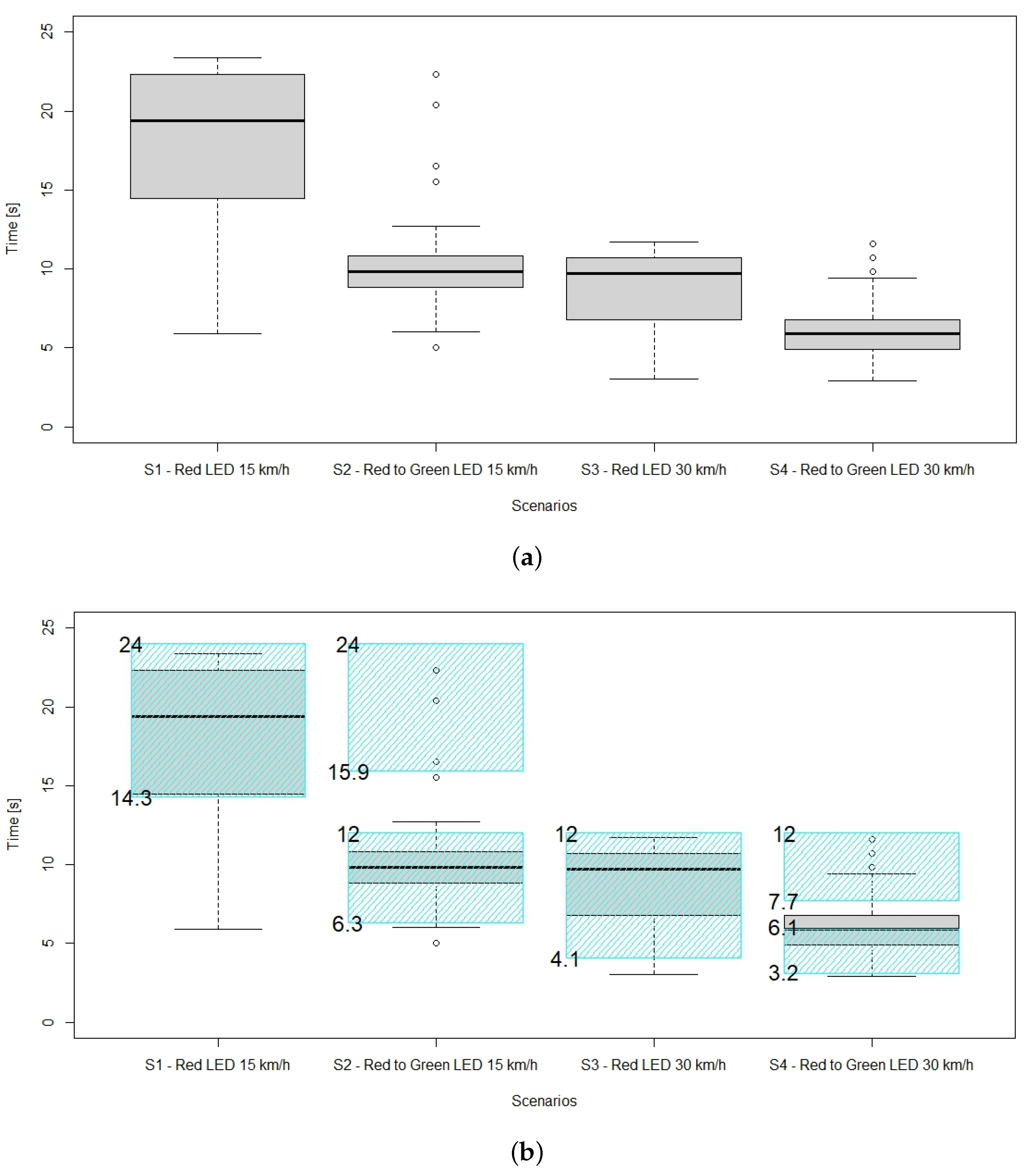

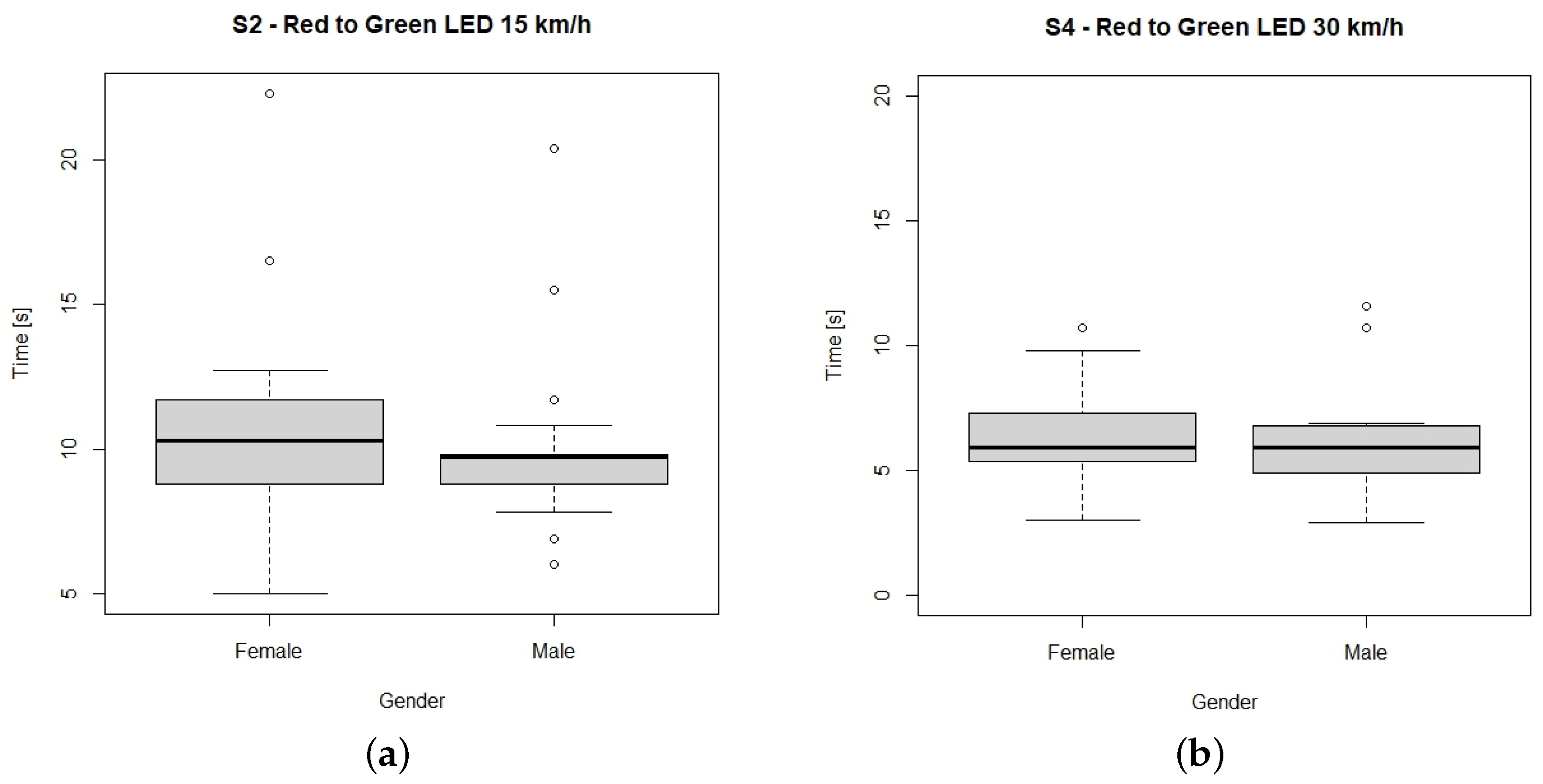

4. Results

5. Discussion and Conclusions

Author Contributions

Funding

Conflicts of Interest


| Phase | Scenario 1 | Scenario 2 | Scenario 3 | Scenario 4 |
|---|---|---|---|---|
| Vehicle approaches pedestrian crossing, LED red | 13/51 (t < 14.3 s) | 3/51 (t < 6.3 s) | 6/51 (t < 4.1 s) | 2/51 (t < 3.2 s) |
| Vehicle stops, LED green | - | 41/51 (6.3 s < t < 12 s) | - | 30/51 (3.2 s < t < 6.1 s) |
| Vehicle drives over pedestrian crossing, LED red | - | 4/51 (12 s < t < 15.9 s) | - | 11/51 (6.1 s < t < 7.7 s) |
| Vehicle leaves pedestrian crossing, LED red | 38/51 (t > 14.3 s) | 3/51 (t > 15.9 s) | 45/51 (t > 4.1 s) | 8/51 (7.7 s < t < 12 s) |
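Each scenario column in the table above sums to 51. The sketch below reproduces that check and computes, per scenario, the share of participants falling in phases that were not safe to cross according to the scenario descriptions (the approach phase, plus the phase in which the AV drives over the crossing). It assumes that each cell counts the participants whose crossing decision fell within that phase; the phase keys and the grouping into "unsafe" phases are a reading of the table for illustration, not a statistic the authors report.

```python
from typing import Dict

# Participants per phase (out of 51), transcribed from the results table.
# Phase keys are hypothetical labels for the table's four rows.
COUNTS: Dict[int, Dict[str, int]] = {
    1: {"approach": 13, "cleared": 38},
    2: {"approach": 3, "stopped": 41, "driving_over": 4, "cleared": 3},
    3: {"approach": 6, "cleared": 45},
    4: {"approach": 2, "stopped": 30, "driving_over": 11, "cleared": 8},
}
N_PARTICIPANTS = 51

# Phases outside the safe windows given in the scenario descriptions.
UNSAFE_PHASES = {"approach", "driving_over"}


def unsafe_share(counts: Dict[str, int]) -> float:
    """Fraction of participants counted in a phase that was not safe to cross."""
    total = sum(counts.values())
    if total != N_PARTICIPANTS:
        raise ValueError(f"column total {total} != {N_PARTICIPANTS}")
    unsafe = sum(n for p, n in counts.items() if p in UNSAFE_PHASES)
    return unsafe / total


for sid, counts in COUNTS.items():
    print(f"Scenario {sid}: {unsafe_share(counts):.1%} in unsafe phases")
```

Under these assumptions the shares are 13/51, 7/51, 6/51 and 13/51 for Scenarios 1 to 4 (printed as 25.5%, 13.7%, 11.8% and 25.5%).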

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhanguzhinova, S.; Makó, E.; Borsos, A.; Sándor, Á.P.; Koren, C. Communication between Autonomous Vehicles and Pedestrians: An Experimental Study Using Virtual Reality. Sensors 2023, 23, 1049. https://doi.org/10.3390/s23031049
