Monitoring of Single-Track Melting States Based on Photodiode Signal during Laser Powder Bed Fusion

Abstract

1. Introduction

2. Experiment Equipment and Datasets

2.1. LPBF Machine and Powder Materials

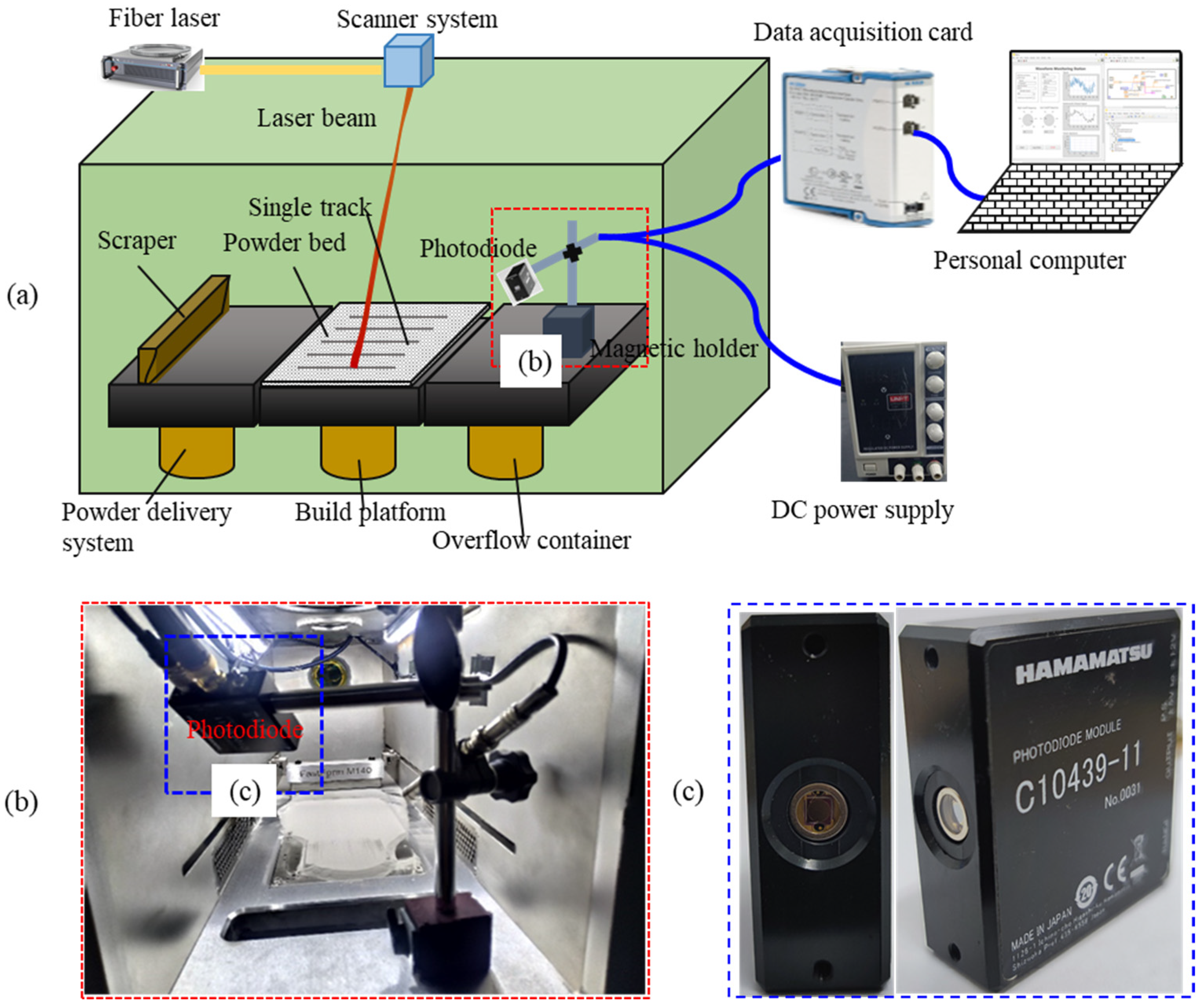

2.2. Photodiode-Based Signals Acquisition System

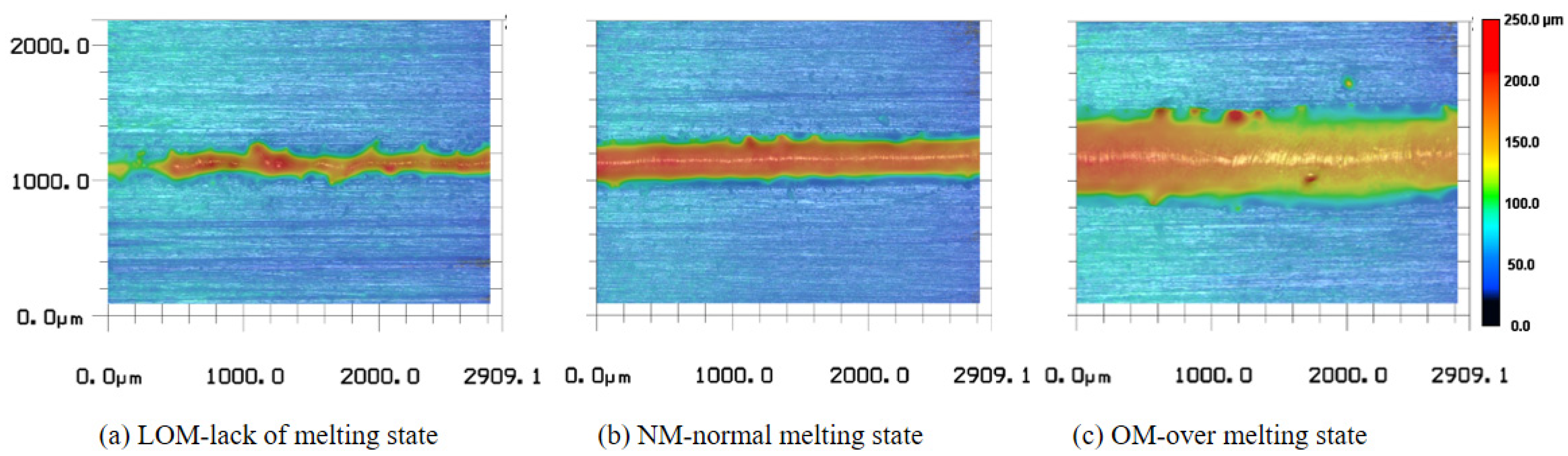

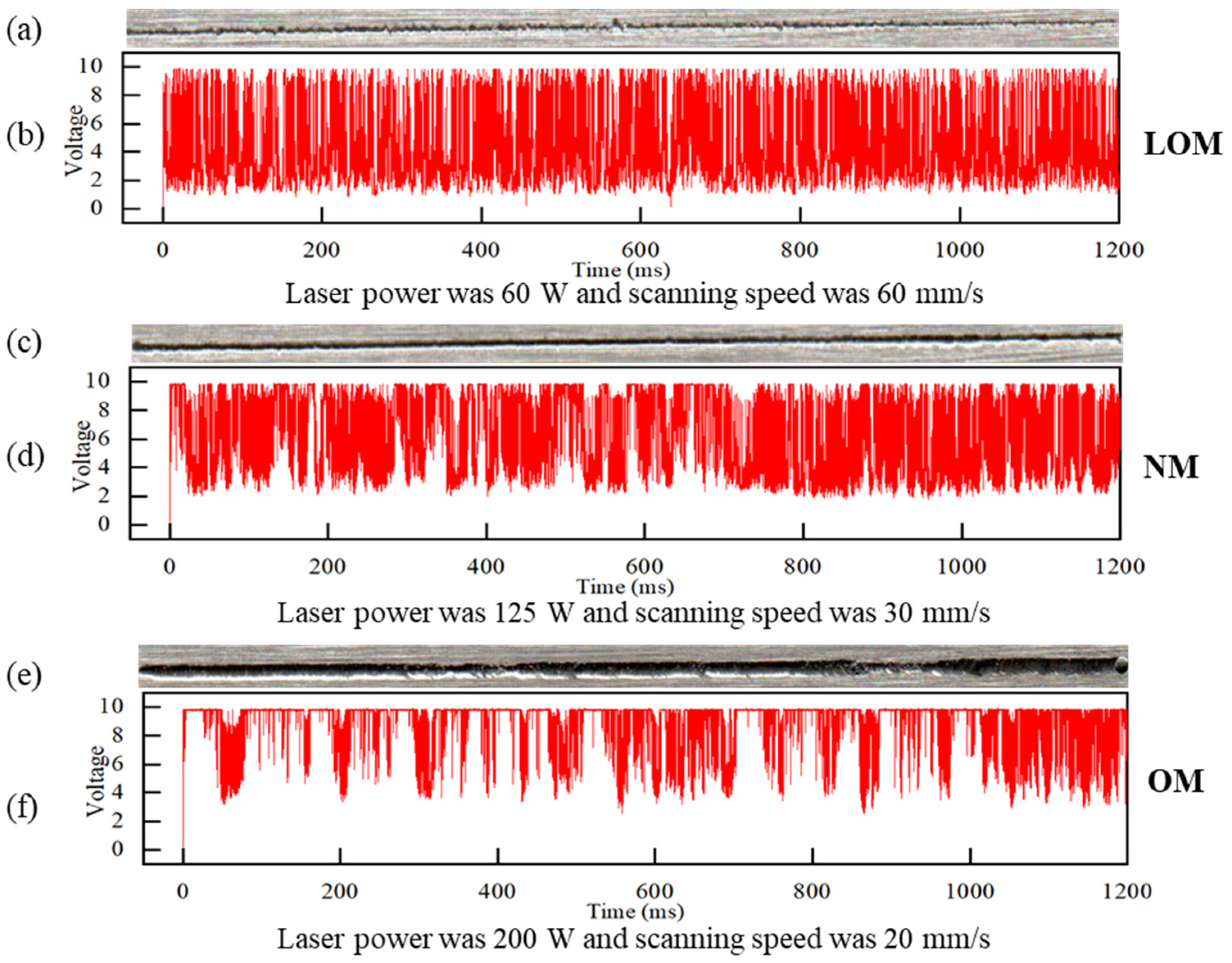

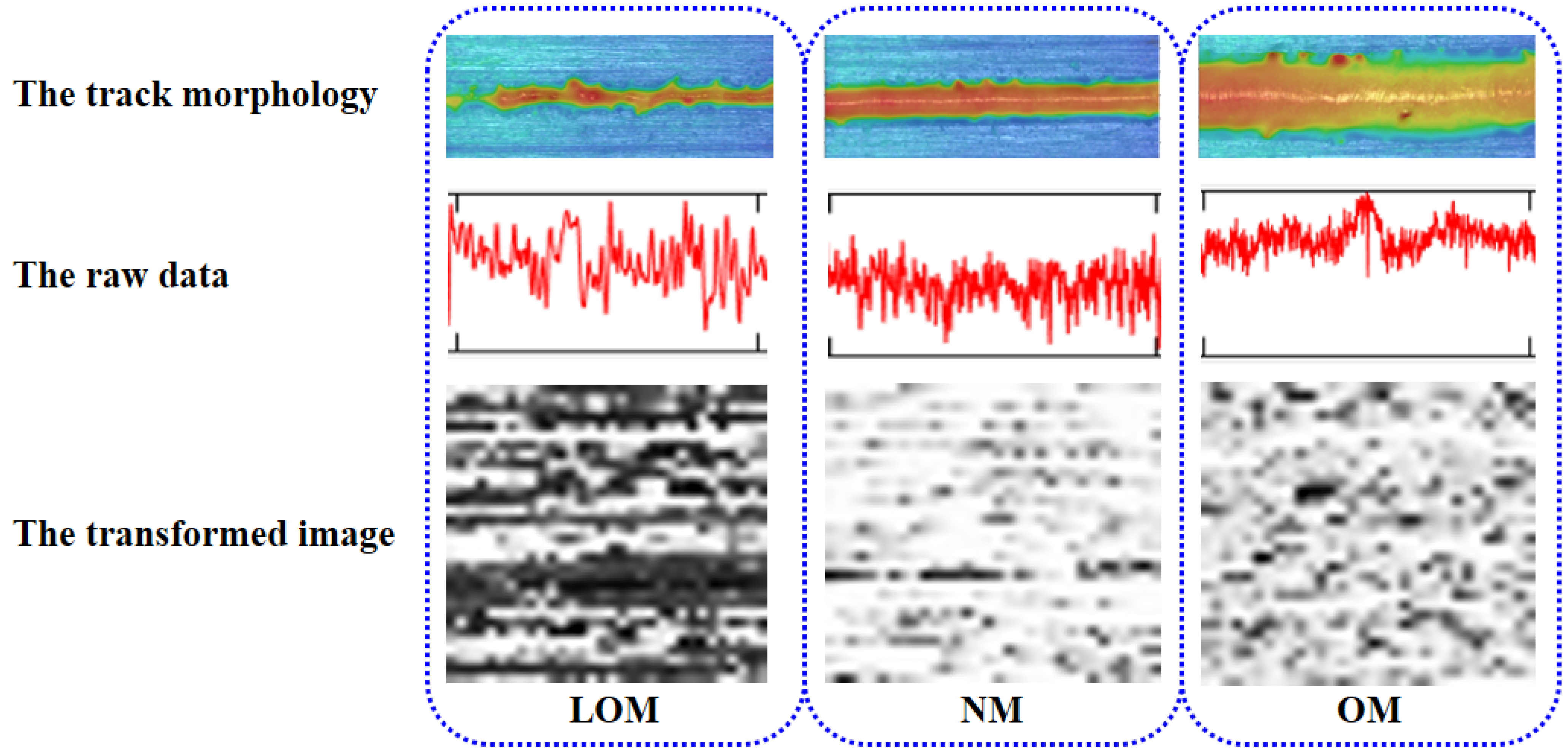

2.3. Data Collection and Track Analysis

3. Data Processing and CNN Model

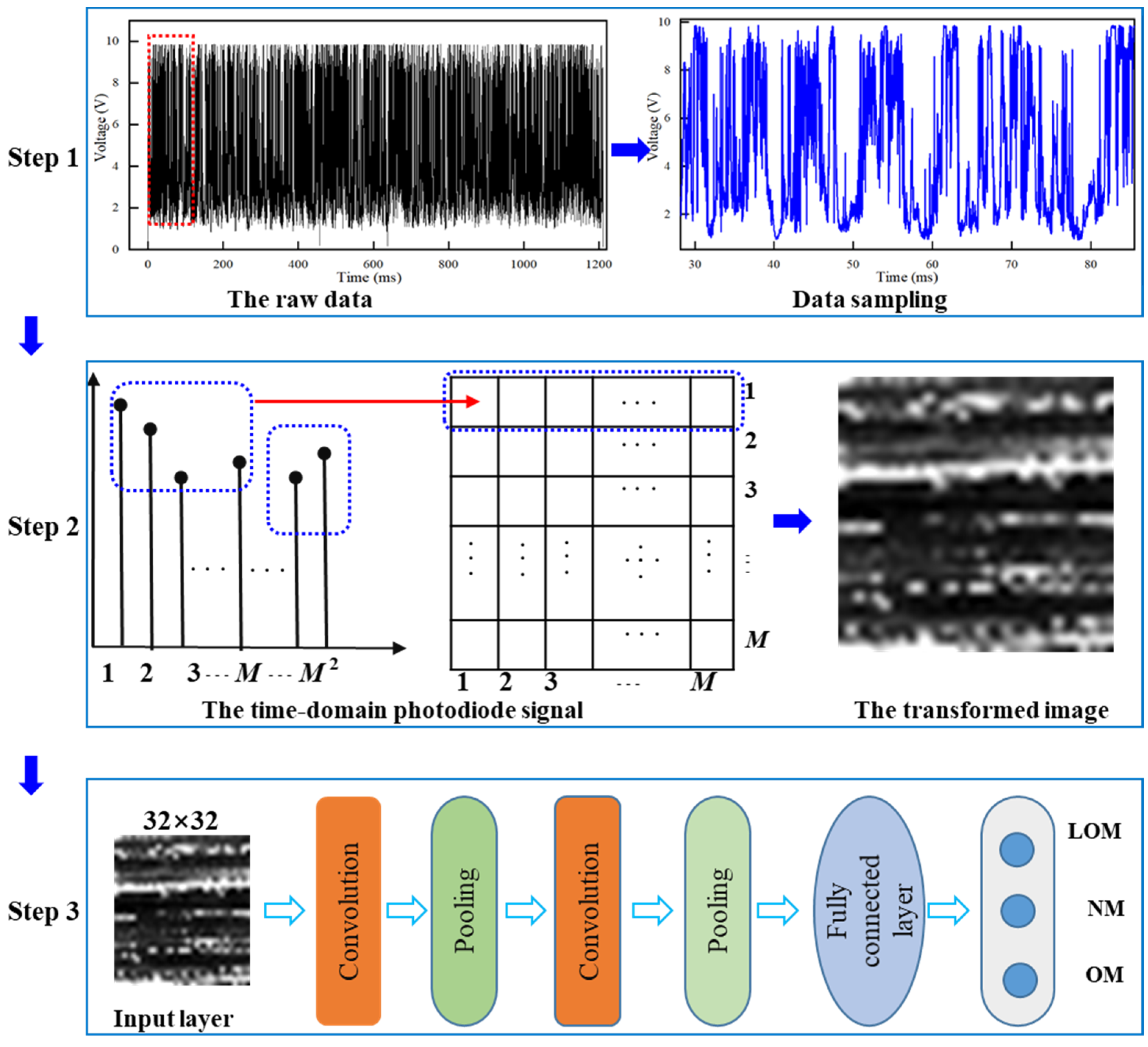

3.1. Data Processing

3.2. Signal-to-Image Transformation Methodology

3.3. General CNN Model

3.4. Construction of the Proposed CNN

4. Results and Discussion

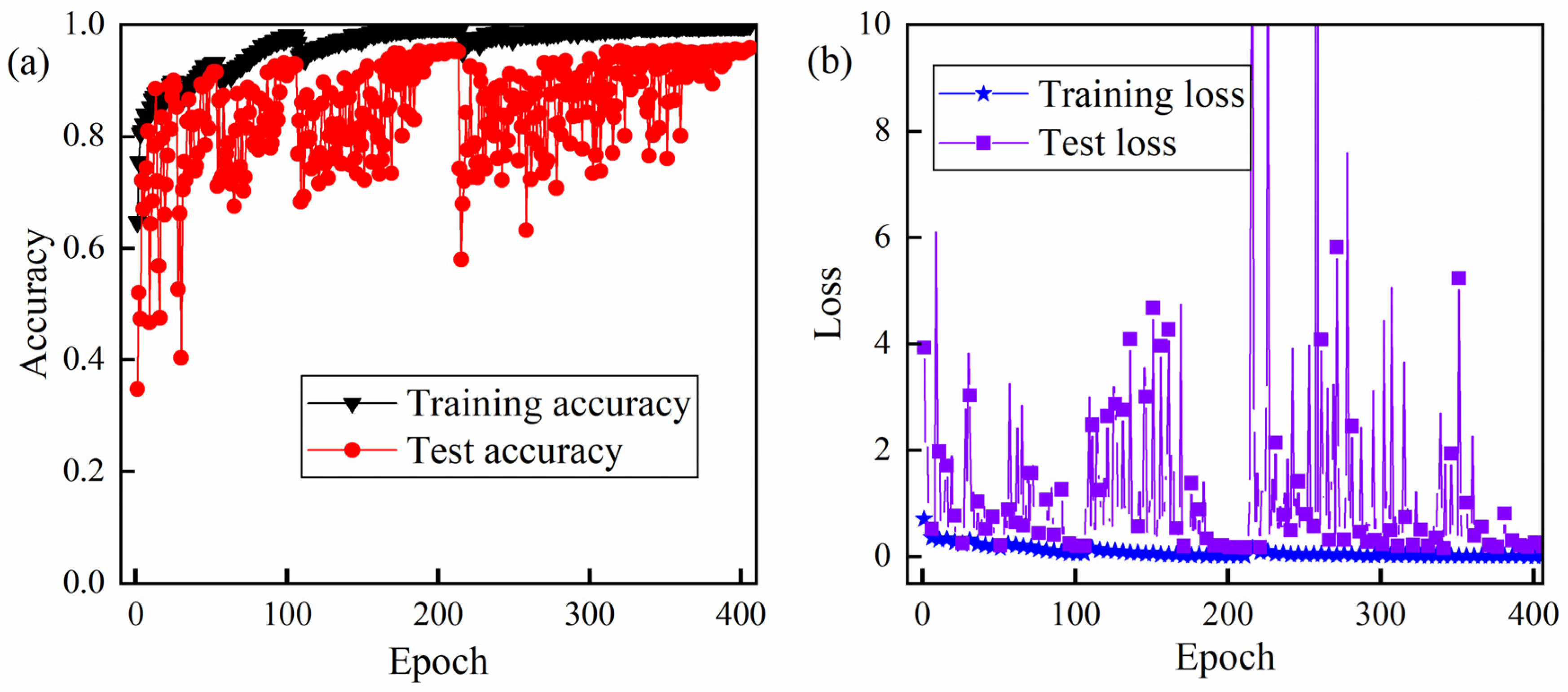

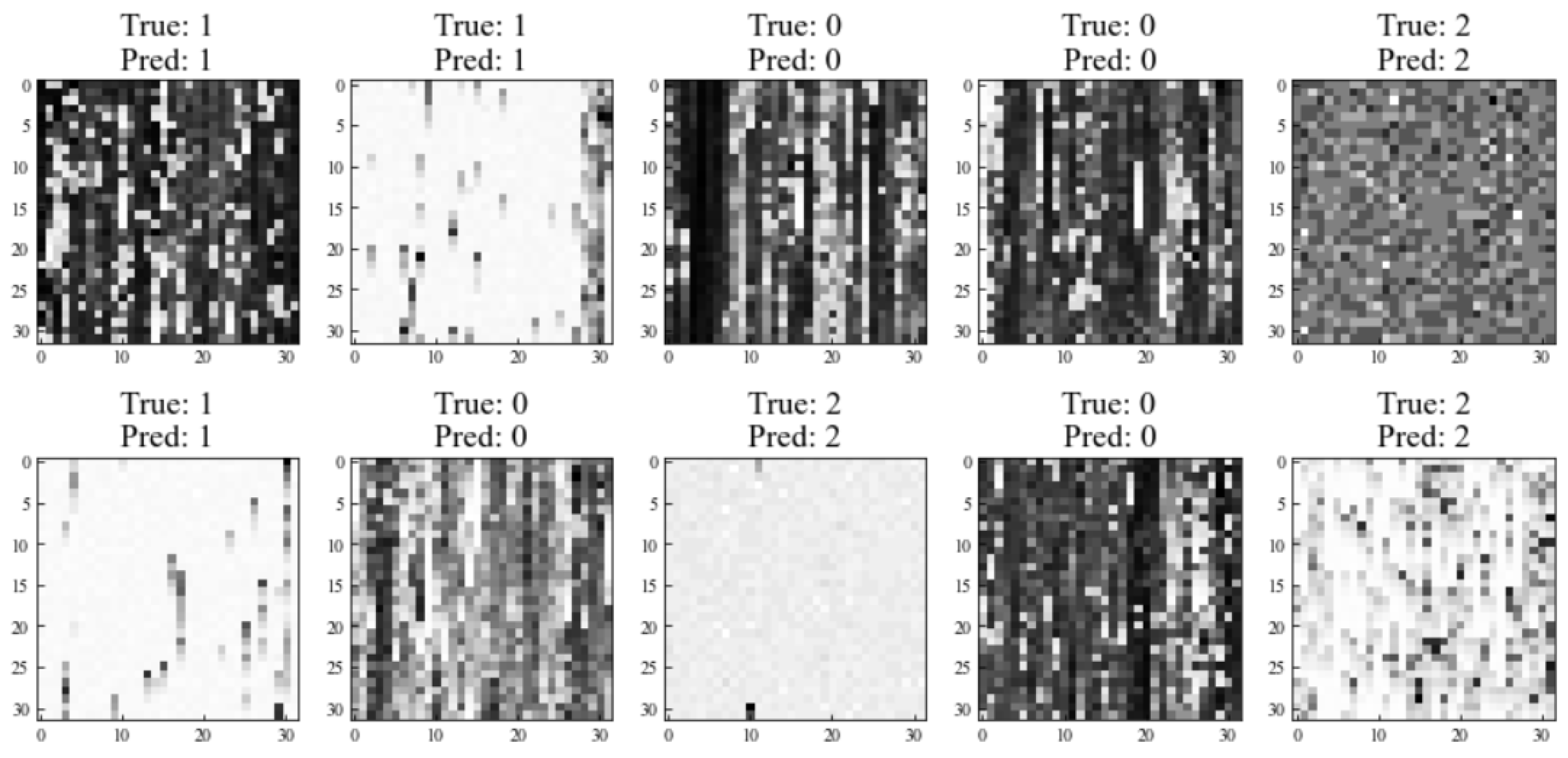

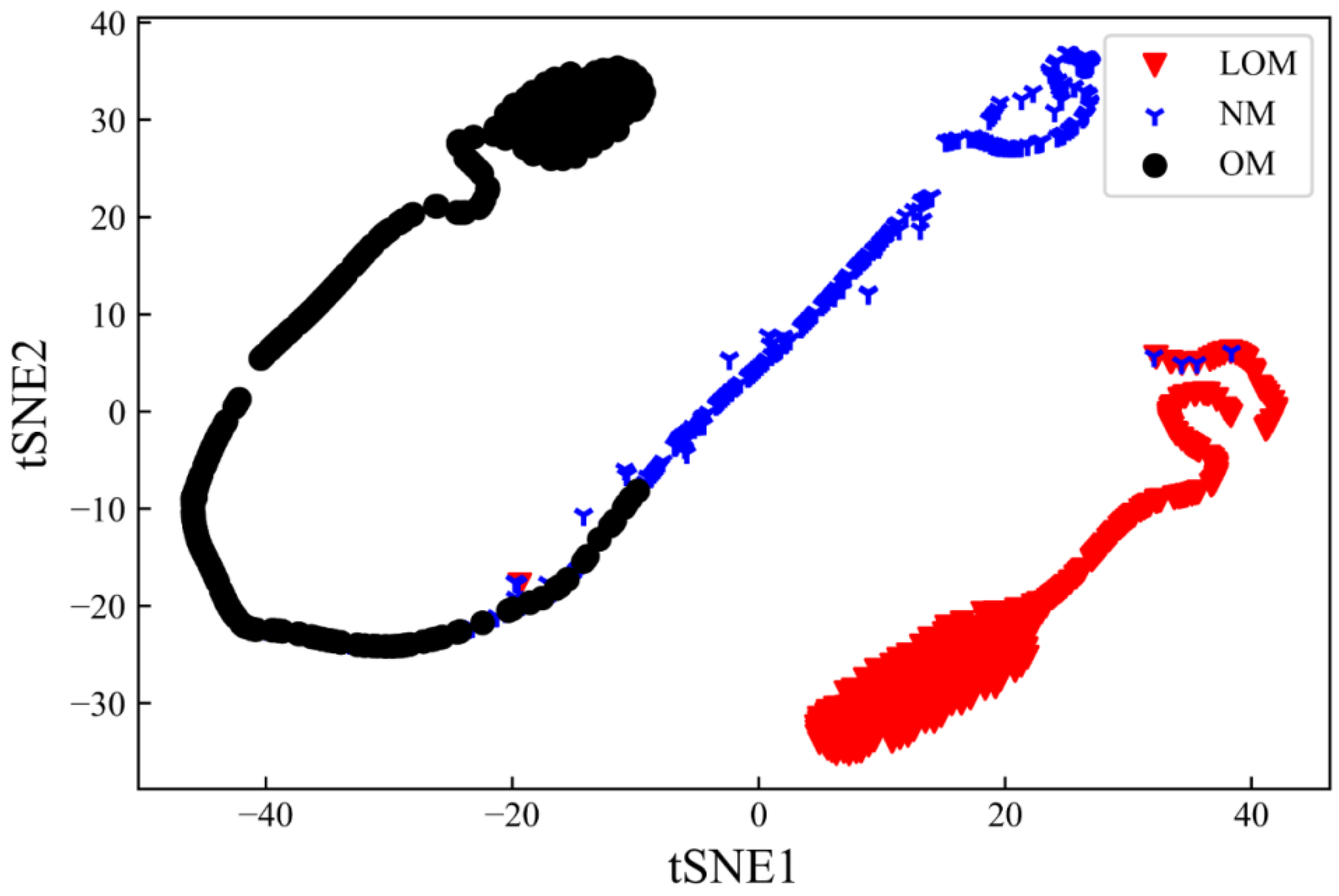

4.1. The Results of the Proposed CNN Model

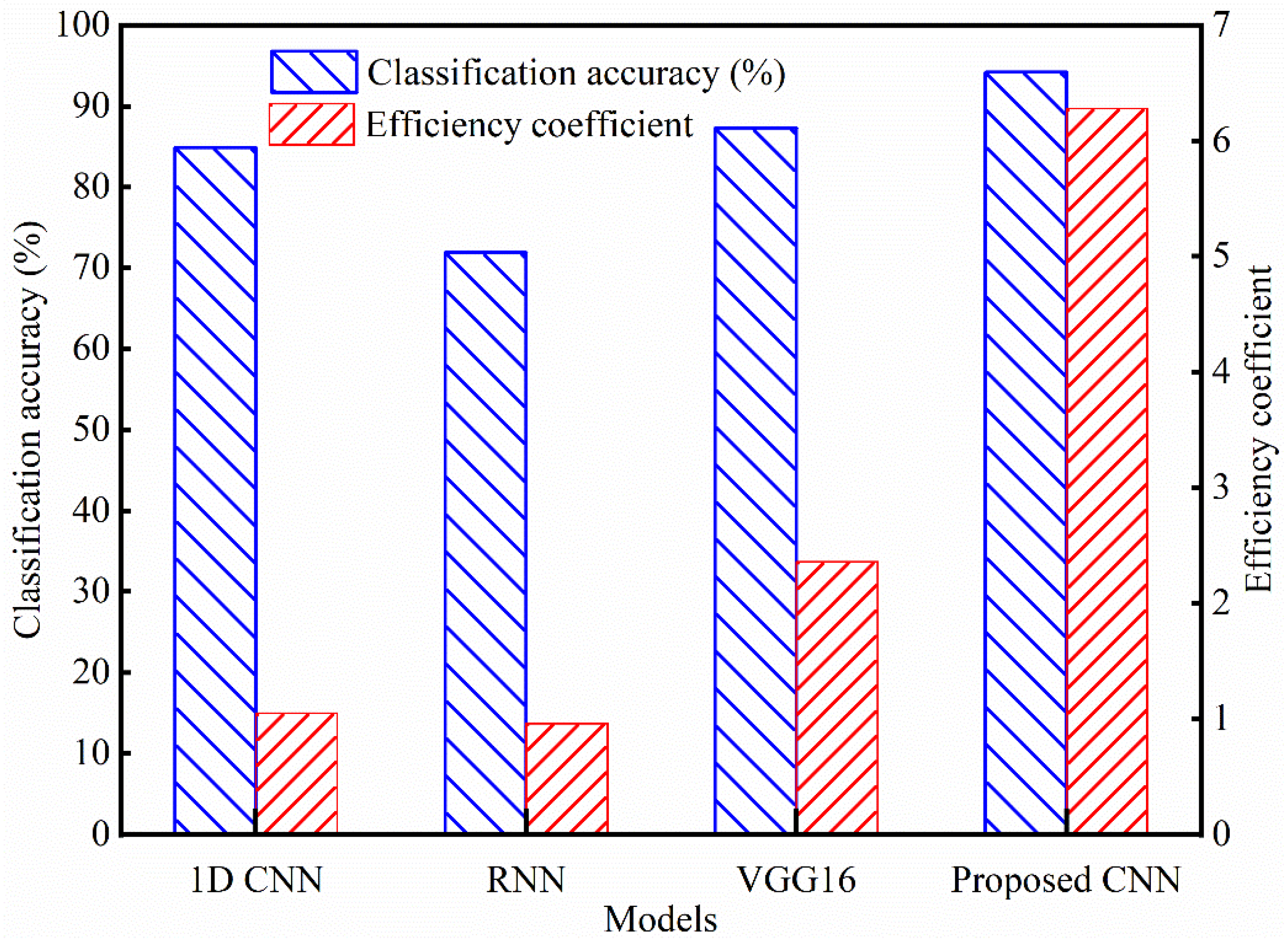

4.2. Comparison of Classic Deep Learning Models

5. Conclusions and Future Work

- (1) An off-axis photodiode-based monitoring system was established to acquire the light signal emitted while the single tracks were melted, and a signal-to-image transformation was used to convert the photodiode signal into grayscale images;

- (2) A CNN model was proposed to classify the melting state and was evaluated with tenfold cross-validation. The classification accuracy of the proposed CNN model can reach 95.81%, with a computation time of 15 ms per sample;

- (3) The proposed model was compared with three classic deep learning models (1D CNN, RNN, and VGG16). Of the four, it achieved both the highest classification accuracy and the shortest computation time;

- (4) The results indicate that monitoring the LPBF process with a simple, low-cost photodiode combined with a CNN model is feasible and reliable. This work can support progress in LPBF process monitoring and help improve the reliability of component quality and the repeatability of manufacturing.
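
Conclusion (1) mentions converting the photodiode signal into grayscale images without restating the procedure. One common way to do this, sketched below, is to take a fixed-length segment of the 1D signal, min-max normalize it to the range 0–255, and reshape it row by row into a square image. The 32 × 32 size matches the CNN input listed in the architecture table; the function name `signal_to_image`, the normalization, and the row-major filling order are assumptions made for illustration and may differ from the procedure actually used in the paper.

```python
from typing import List, Sequence


def signal_to_image(segment: Sequence[float], size: int = 32) -> List[List[int]]:
    """Map a 1D segment of size*size samples to a size x size grayscale image.

    Assumed scheme: min-max normalization to 0..255, filled row by row.
    """
    if len(segment) != size * size:
        raise ValueError(f"expected {size * size} samples, got {len(segment)}")
    lo, hi = min(segment), max(segment)
    span = hi - lo
    if span == 0:
        # A constant segment carries no contrast; map it to an all-black image.
        pixels = [0] * len(segment)
    else:
        pixels = [round((x - lo) / span * 255) for x in segment]
    # Consecutive samples fill one image row before moving to the next row.
    return [pixels[r * size:(r + 1) * size] for r in range(size)]


# Synthetic ramp standing in for a photodiode segment (not measured data).
demo_segment = [float(i) for i in range(32 * 32)]
image = signal_to_image(demo_segment)
print(len(image), len(image[0]), image[0][0], image[-1][-1])
```

Each image produced this way would be one 32 × 32 × 1 input sample for the classifier.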

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Items | Values |
|---|---|
| Maximum print size | 120 mm × 120 mm × 120 mm |
| Laser type | Fiber laser (RFL-C300L) |
| Heating bed temperature | 473.15 K |
| Rated power | 5 kW |
| Inert gas flow rate | 0.5–1.5 L/min |
| Powder spreading method | One-way scraper |

| Element | C | Ni | Mn | S | P | Cr | Cu | Mo | Fe |
|---|---|---|---|---|---|---|---|---|---|
| Percent | <0.03 | 12.5–13 | <2.00 | <0.01 | <0.02 | 17.5–18 | <0.50 | 2.25–2.5 | Balance |

| No. | Laser Power (W) | Scanning Speed (mm/s) | Spot Radius (µm) | Volumetric Energy Density (J/mm³) | Melting States |
|---|---|---|---|---|---|
| 1 | 50 | 50 | 80 | 49.8 | LOM |
| 2 | 60 | 60 | 80 | 49.8 | LOM |
| 3 | 70 | 70 | 80 | 49.8 | LOM |
| 4 | 120 | 28.8 | 80 | 207.3 | NM |
| 5 | 125 | 30 | 80 | 207.3 | NM |
| 6 | 130 | 31.2 | 80 | 207.3 | NM |
| 7 | 180 | 18 | 80 | 497.6 | OM |
| 8 | 200 | 20 | 80 | 497.6 | OM |
| 9 | 220 | 22 | 80 | 497.6 | OM |
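
The tabulated energy densities are consistent with E = P / (π r² v), that is, laser power divided by the product of spot area and scanning speed. This relation is inferred from the table values rather than quoted from the text, so it should be treated as an assumption. The sketch below recomputes one value per melting state; the small differences from the table (for example 49.7 versus 49.8 J/mm³) would be explained by rounding π to 3.14.

```python
import math


def energy_density(power_w: float, speed_mm_s: float, spot_radius_um: float) -> float:
    """Energy density in J/mm^3, assuming E = P / (pi * r^2 * v)."""
    radius_mm = spot_radius_um / 1000.0
    return power_w / (math.pi * radius_mm ** 2 * speed_mm_s)


# (laser power in W, scanning speed in mm/s) for parameter sets 1, 4 and 7.
groups = {"LOM": (50, 50), "NM": (120, 28.8), "OM": (180, 18)}
densities = {state: energy_density(p, v, 80) for state, (p, v) in groups.items()}

for state, value in densities.items():
    print(f"{state}: {value:.1f} J/mm^3")
```

Because power and speed are scaled together within each group of three parameter sets, the ratio P / v, and therefore the energy density, stays constant within a melting state.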

| Layer | Type | Output | Number of Parameters |
|---|---|---|---|
| Input | Image data | 32 × 32 × 1 | 0 |
| Conv1 | Convolution | 32 × 32 × 32 | 320 |
| Pool1 | Max pooling | 16 × 16 × 32 | 0 |
| Conv2 | Convolution | 16 × 16 × 64 | 18,496 |
| Pool2 | Max pooling | 8 × 8 × 64 | 0 |
| Conv3 | Convolution | 8 × 8 × 128 | 73,856 |
| Pool3 | Max pooling | 4 × 4 × 128 | 0 |
| FC1 | Fully Connected | 64 | 131,136 |
| FC2 | Fully Connected | 512 | 33,280 |
| Output | Fully Connected | 3 | 1,539 |
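
The parameter counts in the table can be reproduced by assuming 3 × 3 convolution kernels with one bias per filter and "same" padding, each followed by 2 × 2 max pooling. The kernel size is inferred from the counts and is not stated here, so it is an assumption. Under it, the 320 parameters of Conv1 correspond to 32 filters (3·3·1·32 + 32), which also agrees with the 32 channels reported for Pool1.

```python
def conv_params(in_channels: int, out_channels: int, kernel: int = 3) -> int:
    """Weights plus one bias per filter for a square-kernel 2D convolution."""
    return kernel * kernel * in_channels * out_channels + out_channels


def dense_params(n_inputs: int, n_outputs: int) -> int:
    """Weights plus one bias per output unit for a fully connected layer."""
    return n_inputs * n_outputs + n_outputs


counts = {}
channels, side = 1, 32  # 32 x 32 x 1 grayscale input
for name, filters in [("Conv1", 32), ("Conv2", 64), ("Conv3", 128)]:
    counts[name] = conv_params(channels, filters)
    # "Same" padding keeps the side length; 2 x 2 max pooling then halves it.
    channels, side = filters, side // 2

features = channels * side * side  # flattened Pool3 output: 128 * 4 * 4
for name, units in [("FC1", 64), ("FC2", 512), ("Output", 3)]:
    counts[name] = dense_params(features, units)
    features = units

total_params = sum(counts.values())
print(counts)
print("total:", total_params)
```

Most of the parameters sit in FC1, which connects the 2,048 flattened features to 64 units.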

| Model | Classification Accuracy (%) | Computation Time (ms) | Efficiency Coefficient |
|---|---|---|---|
| 1D CNN | 84.92 | 81 | 1.05 |
| RNN | 71.92 | 75 | 0.96 |
| VGG16 | 87.30 | 37 | 2.36 |
| Proposed CNN | 95.81 | 15 | 6.39 |
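
The efficiency coefficient column matches classification accuracy (%) divided by computation time (ms). This definition is inferred from the tabulated numbers and is not quoted from the text, so it should be read as an assumption. A minimal sketch that recomputes the column:

```python
# (classification accuracy in %, computation time in ms) copied from the table.
results = {
    "1D CNN": (84.92, 81),
    "RNN": (71.92, 75),
    "VGG16": (87.30, 37),
    "Proposed CNN": (95.81, 15),
}


def efficiency_coefficient(accuracy_pct: float, time_ms: float) -> float:
    """Assumed definition: accuracy (%) per millisecond of computation."""
    return accuracy_pct / time_ms


coefficients = {
    model: round(efficiency_coefficient(acc, t), 2)
    for model, (acc, t) in results.items()
}
best_model = max(coefficients, key=coefficients.get)

for model, value in coefficients.items():
    print(f"{model}: {value:.2f}")
print("highest coefficient:", best_model)
```

Under this reading, a model improves its coefficient either by classifying more accurately or by running faster, and the proposed CNN does both relative to the three baselines.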

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cao, L.; Hu, W.; Zhou, T.; Yu, L.; Huang, X. Monitoring of Single-Track Melting States Based on Photodiode Signal during Laser Powder Bed Fusion. Sensors 2023, 23, 9793. https://doi.org/10.3390/s23249793
