Abstract

Structured light illumination is widely applied for surface defect detection due to its advantages in terms of speed, precision, and non-contact capabilities. However, the high reflectivity of metal surfaces often results in the loss of point clouds, thus reducing the measurement accuracy. In this paper, we propose a novel quaternary categorization strategy to address the high-reflectivity issue. Firstly, we classify the pixels into four types according to the phase map characteristics. Secondly, we apply tailored optimization and reconstruction strategies to each type of pixel. Finally, we fuse point clouds from multi-type pixels to accomplish precise measurements of high-reflectivity surfaces. Experimental results show that our strategy effectively reduces the high-reflectivity error when measuring metal surfaces and exhibits stronger robustness against noise compared to the conventional method.

1. Introduction

Three-dimensional measurement technology is widely applied in important domains [1], such as aviation, aerospace, and nuclear energy, primarily for tasks such as defect detection and dimensional measurement [2,3,4,5]. This technology encompasses stereovision [6], line laser scanning [7], and structured light illumination (SLI). Among these methods, SLI stands out for its higher applicability and growth potential owing to its high speed, high precision, and cost-effectiveness. However, in industrial measurement, conventional SLI techniques are primarily suited to diffusely reflective surfaces [8]. On metal surfaces, images risk oversaturation in highly reflective regions, where the reflected intensity exceeds the camera's intensity response range, and this oversaturation hinders effective 3D reconstruction [9]. One approach to this problem is to apply a diffuse reflective coating to the high-reflectivity surface. However, the thickness of the coating can decrease the measurement accuracy [10], and the coating may corrode the measured surface. Consequently, this approach is unsuitable for precision 3D measurement. Furthermore, camera images acquired in complex industrial scenarios contain increased noise, and preprocessing [11,12] of these images can mitigate errors in point cloud reconstruction.

To address the 3D measurement challenges posed by high-reflectivity surfaces, researchers have explored various approaches to enhance conventional SLI techniques [13]. The first approach is to adjust the projection strategy, which can be divided into two types. The first type captures multiple sets of fringe images with different exposure times and merges them into a single set, overcoming the saturation and contrast problems caused by varying reflectivity [14,15]. The second type obtains surface reflectivity information through pre-projection and then adjusts the overall projection intensity accordingly [16], or dynamically modifies the projection intensity [17] for areas of different reflectivity. These strategies effectively reduce the number of saturated pixels and are beneficial for measuring high-reflectivity surfaces. However, they require capturing multiple images and adjusting the camera exposure settings, which can complicate the scanning process and limit robustness. The second approach exploits polarization, since specular reflection is more strongly polarized than diffuse reflection. By placing polarizers on both the camera and the projector [18,19], the impact of specular reflection in saturated areas can be reduced [20]. Nonetheless, this approach also decreases the intensity in non-saturated areas, potentially reducing the measurement accuracy. The third approach exploits the properties of specular reflection: the location of saturated regions is closely linked to the incident angle of the projected light. Therefore, employing multiple camera viewpoints [8,21] or projection directions [22] ensures that at least one of them captures valid images of the areas that are saturated in the other viewpoints.
This approach does not decrease the accuracy in measuring non-saturated areas and results in high-quality overall measurements. In the multi-camera system mentioned above, the camera can be interchanged with the projector to form a multi-projector system [23,24]. This configuration not only enables measurements of high-reflectivity surfaces but also mitigates shadow issues [25,26,27].

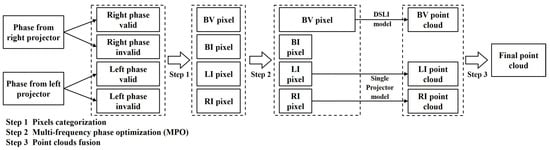

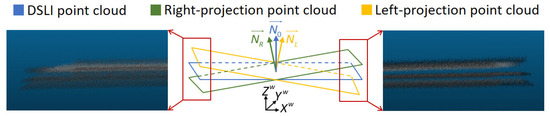

In this paper, we propose a quaternary categorization measurement strategy for high-reflectivity surfaces that relies on two projectors and one camera. To enhance the measurement accuracy, we align the projected fringe direction perpendicular to the system baseline [28]. The core concept of the proposed method is to obtain two sets of phase information through the two projectors. Based on the left and right phase maps, phase validity detection classifies the camera pixels into four categories: both valid (BV), both invalid (BI), right invalid (RI), and left invalid (LI). For BI, RI, and LI pixels, we introduce a multi-frequency phase optimization (MPO) method that converts a portion of these pixels into BV pixels, thereby enhancing the measurement accuracy. For BV pixels, we propose the dual-projector structured light illumination (DSLI) model to compute 3D coordinates; the DSLI model surpasses the conventional single-projector model in reconstruction accuracy, as shown in Equation (7) of Ref. [29]. For RI and LI pixels, we compute the 3D coordinates with the single-projector model. The very few remaining BI pixels are eliminated. Finally, these three categories of point clouds are combined to generate the final point cloud of the high-reflectivity surface. The flow of the strategy is illustrated in Figure 1.

Figure 1.

Flow chart of quaternary categorization strategy.

The remainder of the paper is organized as follows. The DSLI model is introduced in Section 2.1, the pixel categorization strategy is described in Section 2.2, the MPO method is presented in Section 2.3, and the point cloud fusion strategy is shown in Section 2.4. Experimental designs and results are discussed in Section 3. Conclusions and future work are summarized in Section 4.

2. Methods

2.1. DSLI Model for 3D Reconstruction

When fringe patterns are projected onto a highly reflective surface, specularly reflected rays reaching the camera can oversaturate the image. This oversaturation destroys the encoded information and introduces outliers into the point cloud. We therefore propose employing two projectors that conduct 3D scanning successively from different directions. Because the scattering angle of specular reflection is small, the patterns from the two projectors often do not saturate at the same measured point.

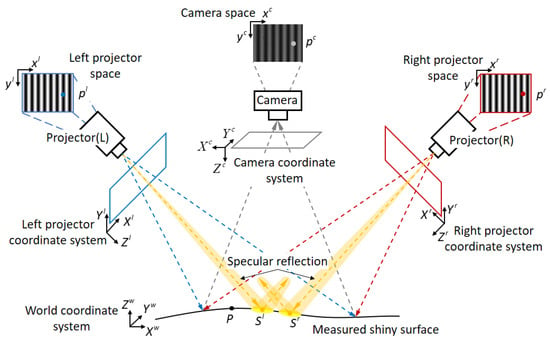

In the DSLI model depicted in Figure 2, two saturated areas result from the two different projections. A pinhole camera is employed, where $P^w = [X^w, Y^w, Z^w]^{\mathsf T}$ denotes the coordinate of point P in the world coordinate system, $P^c = [X^c, Y^c, Z^c]^{\mathsf T}$ represents the coordinate of the same point P in the camera coordinate system, and $(x^c, y^c)$ signifies the corresponding image coordinate of $P^c$ within the camera space. $P^w$ and $(x^c, y^c)$ can be related as follows:

$$
s^c \begin{bmatrix} x^c \\ y^c \\ 1 \end{bmatrix} = M^c \begin{bmatrix} X^w \\ Y^w \\ Z^w \\ 1 \end{bmatrix}, \tag{1}
$$

where $s^c$ is an arbitrary non-zero scalar, and $M^c$ is the 3 × 4 calibration matrix of the camera. Equation (1) represents the perspective transformation of the 3D coordinate $P^w$ into the 2D coordinate $(x^c, y^c)$. The two projectors can be regarded as inverse cameras, so Equation (1) can be extended as [23]

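$$
s^r \begin{bmatrix} x^r \\ y^r \\ 1 \end{bmatrix} = M^r \begin{bmatrix} X^w \\ Y^w \\ Z^w \\ 1 \end{bmatrix}, \qquad
s^l \begin{bmatrix} x^l \\ y^l \\ 1 \end{bmatrix} = M^l \begin{bmatrix} X^w \\ Y^w \\ Z^w \\ 1 \end{bmatrix}, \tag{2}
$$

where $s^r$ and $s^l$ are non-zero scalars, $(x^r, y^r)$ and $(x^l, y^l)$ are the coordinates of P in the right and left projector spaces, and the 3 × 4 matrices $M^c$ (of Equation (1)), $M^r$, and $M^l$ are obtained by system calibration. During the calibration process, we divide the DSLI model into two single-projector models and calibrate each of them separately [30]. Eliminating the scalars and keeping the camera coordinates $x^c$, $y^c$ and the projector coordinates $y^r$, $y^l$, we reorganize Equations (1) and (2) as

$$
\mathbf{A}\,P^w = \mathbf{b}, \tag{3}
$$

with

$$
\mathbf{A} =
\begin{bmatrix}
m^c_{11} - x^c m^c_{31} & m^c_{12} - x^c m^c_{32} & m^c_{13} - x^c m^c_{33} \\
m^c_{21} - y^c m^c_{31} & m^c_{22} - y^c m^c_{32} & m^c_{23} - y^c m^c_{33} \\
m^r_{21} - y^r m^r_{31} & m^r_{22} - y^r m^r_{32} & m^r_{23} - y^r m^r_{33} \\
m^l_{21} - y^l m^l_{31} & m^l_{22} - y^l m^l_{32} & m^l_{23} - y^l m^l_{33}
\end{bmatrix} \tag{4}
$$

and

$$
\mathbf{b} =
\begin{bmatrix}
x^c m^c_{34} - m^c_{14} \\
y^c m^c_{34} - m^c_{24} \\
y^r m^r_{34} - m^r_{24} \\
y^l m^l_{34} - m^l_{24}
\end{bmatrix}, \tag{5}
$$

where $m^c_{ij}$, $m^r_{ij}$, and $m^l_{ij}$ denote the elements of $M^c$, $M^r$, and $M^l$. The single-projector model uses only the two camera rows and the row of the valid projector, giving a 3 × 3 system. Finally, our dual-projector model computes 3D coordinates as the least-squares solution

$$
P^w = \left(\mathbf{A}^{\mathsf T}\mathbf{A}\right)^{-1}\mathbf{A}^{\mathsf T}\mathbf{b}. \tag{6}
$$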

Figure 2.

DSLI model and explanation of each symbol.
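A minimal Python/NumPy sketch of the least-squares reconstruction is shown below, assuming each device follows the 3 × 4 pinhole form of Equation (1): eliminating the scale factor turns each observed coordinate into one linear equation in $(X^w, Y^w, Z^w)$, so the camera contributes two equations and each projector contributes one (from its phase-derived row coordinate). The function names (`dsli_triangulate`, `single_projector_triangulate`) and the use of `numpy.linalg.lstsq` are illustrative choices, not the authors' implementation.

```python
import numpy as np

def _linear_row(M, coord, row):
    """One linear equation from s * [.., coord, .., 1]^T = M @ [X, Y, Z, 1]^T.

    Eliminating s gives (M[row, :3] - coord * M[2, :3]) . P = coord * M[2, 3] - M[row, 3].
    """
    M = np.asarray(M, dtype=float)
    return M[row, :3] - coord * M[2, :3], coord * M[2, 3] - M[row, 3]

def _stack(rows):
    A = np.array([coeffs for coeffs, _ in rows])
    b = np.array([rhs for _, rhs in rows])
    return A, b

def dsli_triangulate(Mc, Mr, Ml, xc, yc, yr, yl):
    """BV pixel: 4 equations (camera x, camera y, right row, left row), 3 unknowns."""
    A, b = _stack([
        _linear_row(Mc, xc, 0),
        _linear_row(Mc, yc, 1),
        _linear_row(Mr, yr, 1),
        _linear_row(Ml, yl, 1),
    ])
    P, *_ = np.linalg.lstsq(A, b, rcond=None)  # least-squares solution of A P = b
    return P

def single_projector_triangulate(Mc, Mp, xc, yc, yp):
    """LI/RI pixel: camera x, camera y and one projector row give a 3x3 system."""
    A, b = _stack([_linear_row(Mc, xc, 0), _linear_row(Mc, yc, 1), _linear_row(Mp, yp, 1)])
    return np.linalg.solve(A, b)
```

With noise-free synthetic data both functions return the same point; with noisy phases, the extra projector equation lets the least-squares solution average out part of the error, which is the motivation for the DSLI model.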

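We conduct 3D scanning with sinusoidal phase-shifting fringe patterns. The images of the deformed patterns captured by the camera can be expressed as

$$
\begin{aligned}
I^r_n(x^c, y^c) &= A^r(x^c, y^c) + B^r(x^c, y^c)\cos\left[\phi^r(x^c, y^c) - \frac{2\pi n}{N}\right],\\
I^l_n(x^c, y^c) &= A^l(x^c, y^c) + B^l(x^c, y^c)\cos\left[\phi^l(x^c, y^c) - \frac{2\pi n}{N}\right],
\end{aligned} \tag{7}
$$

where, for each camera coordinate $(x^c, y^c)$, $I^r_n$ and $I^l_n$ are the intensities from the right and left projectors, $A^r$ and $A^l$ are the background values, $B^r$ and $B^l$ are the modulation values, $\phi^r$ and $\phi^l$ are the phases, $n = 0, 1, \ldots, N-1$ is the phase-shift index, and $N$ is the total number of phase shifts. The parameters $y^r$ and $y^l$, needed in Equation (6) for point cloud computing, are obtained by computing the phases

$$
\phi^r = \arctan\frac{\sum_{n=0}^{N-1} I^r_n \sin(2\pi n/N)}{\sum_{n=0}^{N-1} I^r_n \cos(2\pi n/N)}, \qquad
\phi^l = \arctan\frac{\sum_{n=0}^{N-1} I^l_n \sin(2\pi n/N)}{\sum_{n=0}^{N-1} I^l_n \cos(2\pi n/N)}, \tag{8}
$$

where $\phi^r$ and $\phi^l$ are the wrapped phases, which can be unwrapped as $\Phi^r$ and $\Phi^l$. $y^r$ and $y^l$ can then be expressed as [30]

$$
y^r = \frac{H\,\Phi^r}{2\pi f}, \qquad y^l = \frac{H\,\Phi^l}{2\pi f}, \tag{9}
$$

where $H$ is the vertical resolution of the projectors and $f$ is the number of fringe periods spanned by the unwrapped phase. By introducing two sets of phase information, our DSLI model constructs an overdetermined system of four equations in three unknowns. In contrast to the single-projector model, our method incorporates an additional constraint from the second projector, enhancing the robustness and accuracy of the computed 3D coordinates [31]. This improvement is demonstrated in the experiments.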
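The phase retrieval step can be sketched as follows, assuming the common convention $I_n = A + B\cos(\phi - 2\pi n/N)$ with $n = 0, \ldots, N-1$ and $N \ge 3$, and assuming that an unwrapped phase $\Phi$ spanning $f$ fringe periods over the $H$ projector rows maps to the row coordinate $H\Phi/(2\pi f)$. The helper names are hypothetical.

```python
import numpy as np

def wrapped_phase(images):
    """Wrapped phase and modulation from an (N, rows, cols) stack of N-step images.

    Assumes I_n = A + B*cos(phi - 2*pi*n/N), n = 0..N-1, N >= 3.
    """
    images = np.asarray(images, dtype=float)
    n_steps = images.shape[0]
    delta = 2 * np.pi * np.arange(n_steps) / n_steps
    s = np.tensordot(np.sin(delta), images, axes=1)  # (N/2) * B * sin(phi)
    c = np.tensordot(np.cos(delta), images, axes=1)  # (N/2) * B * cos(phi)
    phi = np.arctan2(s, c)                           # four-quadrant, wrapped to (-pi, pi]
    modulation = 2.0 / n_steps * np.hypot(s, c)      # recovers B
    return phi, modulation

def phase_to_projector_row(Phi, H, f):
    """Map an unwrapped phase spanning f periods over H projector rows to a row coordinate."""
    return H * np.asarray(Phi, dtype=float) / (2 * np.pi * f)
```

Using `arctan2` rather than a plain arctangent of the ratio keeps the correct quadrant, so the wrapped phase covers the full $(-\pi, \pi]$ interval.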

2.2. Pixel Categorization Based on Phase Validity Detection

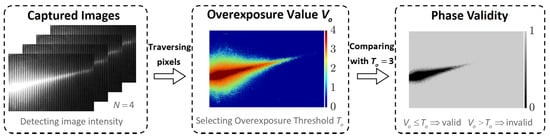

For high-reflectivity surfaces, camera image oversaturation leads to invalid phase values, which can be detected as illustrated in Figure 3. According to Equation (8), oversaturated image intensities $I_n$ produce an inaccurate phase $\phi$. Consequently, we detect the phase validity by processing the image intensity. For a set of N-step phase-shift images, we traverse each pixel and count the number of images in which it is saturated; this count is the overexposure value $O$, which can be expressed as

$$
O(x^c, y^c) = \sum_{n=0}^{N-1} S_n(x^c, y^c), \qquad
S_n(x^c, y^c) = \begin{cases} 1, & I_n(x^c, y^c) \ge I_{\max} \\ 0, & \text{otherwise} \end{cases} \tag{10}
$$

where $I_{\max}$ is the saturation intensity of the camera. Subsequently, we establish an overexposure threshold $T$ ($0 < T < N$) and compare the overexposure value $O$ with $T$ to assess the validity of the phase for each pixel; $T$ is typically set based on experimental experience. The detection result $K$ can be expressed as

$$
K(x^c, y^c) = \begin{cases} 1, & O(x^c, y^c) < T \\ 0, & O(x^c, y^c) \ge T \end{cases} \tag{11}
$$

Figure 3.

Detecting the phase validity from captured images.

Taking the four-step phase shift shown in Figure 3 as an example, the overexposure value for each pixel ranges from 0 to 4. By setting the overexposure threshold to 3, we can then derive the phase validity detection for each pixel.
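The overexposure count and the validity test can be sketched per pixel as below. It assumes 8-bit images (saturation level 255) and treats a pixel as valid when its overexposure value is strictly below the threshold; both choices, and the helper name `phase_validity`, are assumptions for illustration.

```python
import numpy as np

def phase_validity(images, threshold, saturation_level=255):
    """Return (O, K) for an (N, rows, cols) stack of phase-shifted images.

    O counts, per pixel, how many of the N images are saturated;
    K is 1 where O < threshold (phase treated as valid) and 0 otherwise.
    """
    images = np.asarray(images)
    n_steps = images.shape[0]
    if not 0 < threshold < n_steps:
        raise ValueError("threshold must satisfy 0 < T < N")
    overexposure = np.sum(images >= saturation_level, axis=0)
    validity = (overexposure < threshold).astype(np.uint8)
    return overexposure, validity
```

Under these assumptions, with the four-step example of Figure 3 and a threshold of 3, a pixel saturated in three or four of the images is flagged as invalid.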

For each pixel, there are two phases originating from the right projector and the left projector. Employing Equation (11), we derive the detection results $K^r$ and $K^l$, respectively. These results are then summed to obtain the detection result for the dual-projector system, $K = K^r + K^l$. Based on $K^r$, $K^l$, and $K$, we classify all pixels into four distinct categories.

- (1) Pixels with $K = 2$ are classified as BV pixels, and their 3D coordinates are calculated using the DSLI model.

- (2) Pixels with $K^r = 1$ and $K^l = 0$ are classified as LI pixels, and their 3D coordinates are calculated using the single-projector model with the right projector.

- (3) Pixels with $K^r = 0$ and $K^l = 1$ are classified as RI pixels, and their 3D coordinates are calculated using the single-projector model with the left projector.

- (4) Pixels with $K = 0$ are classified as BI pixels, and their 3D coordinates cannot be calculated.
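Given the two binary detection results, the four categories follow directly from element-wise logic. A minimal NumPy sketch, assuming 1 marks a valid phase; the function name and string labels are illustrative:

```python
import numpy as np

def categorize(Kr, Kl):
    """Label each pixel BV, LI, RI or BI from the right/left validity results (1 = valid)."""
    Kr = np.asarray(Kr, dtype=int)
    Kl = np.asarray(Kl, dtype=int)
    K = Kr + Kl  # dual-projector detection result
    return np.select(
        [K == 2, (Kr == 1) & (Kl == 0), (Kr == 0) & (Kl == 1)],
        ["BV", "LI", "RI"],
        default="BI",  # K == 0: no valid phase from either projector
    )
```

Calling `np.unique(labels, return_counts=True)` on the result gives per-category pixel counts of the kind compared before and after phase optimization.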

2.3. MPO Based on Phase Validity Detection

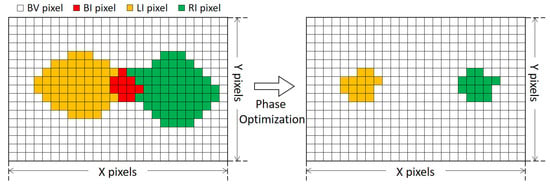

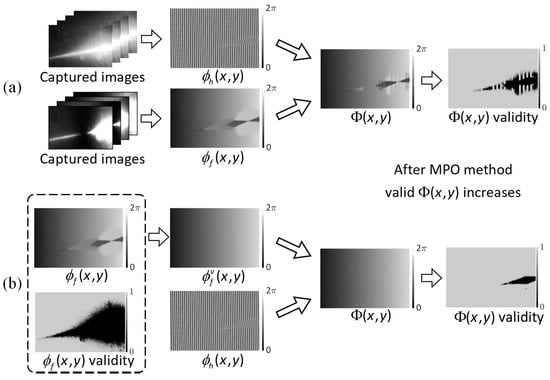

Because BV pixels are reconstructed with the DSLI model, they exhibit better point cloud quality than LI and RI pixels, which rely on the single-projector model. BI pixels, in turn, lack valid phase information, which leaves gaps in the reconstructed point cloud. To enhance the reconstruction quality, these invalid phases must be optimized so as to increase the number of BV pixels and decrease the numbers of BI, LI, and RI pixels, as illustrated in Figure 4.

Figure 4.

The change in pixel categorization before and after phase optimization.

In multi-frequency phase-shifting structured light, multiple sets of projection patterns with distinct frequencies are employed, and the number of BV pixels differs among these sets. This variation is mainly attributable to two factors. (1) The frequencies of the high-frequency and fundamental-frequency patterns differ substantially. As the fringe frequency decreases, its high-intensity regions widen, producing larger saturated areas and fewer BV pixels. (2) To enhance the phase accuracy, high-frequency patterns typically employ more phase-shift steps [32]. More phase-shift steps reduce the influence of saturated images on the phase, increasing the number of BV pixels.

In multi-frequency phase unwrapping, the fundamental-frequency phase is used to unwrap the high-frequency wrapped phase, yielding the absolute phase $\Phi_h$. This process can be represented as

$$
\Phi_h = \phi_h + 2\pi \cdot \operatorname{round}\left(\frac{f\,\phi_b - \phi_h}{2\pi}\right), \tag{12}
$$

where $\phi_h$ and $\phi_b$ are the high-frequency and fundamental-frequency wrapped phases, $f$ is the frequency of the high-frequency phase, and $\operatorname{round}(\cdot)$ denotes rounding to the nearest integer. In Equation (12), a pixel of the absolute phase is in the BV state only when it is in the BV state in both the high-frequency and the fundamental-frequency phases. Therefore, we propose the MPO method, illustrated in Figure 5, which optimizes the fundamental-frequency phase so that the number of BV pixels in the absolute phase is maximized.
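The rounding-based unwrapping of Equation (12), and its repetition over several frequencies, can be sketched as below. The sketch assumes noise-free phases, a fundamental frequency of one fringe period (so its wrapped phase is already absolute), and the form $\Phi_h = \phi_h + 2\pi\,\mathrm{round}((f\phi_b - \phi_h)/2\pi)$; the function names are hypothetical.

```python
import numpy as np

def unwrap_with_reference(phi_high, Phi_ref, ratio):
    """Unwrap phi_high using an absolute reference phase at 1/ratio of its frequency."""
    k = np.round((ratio * Phi_ref - phi_high) / (2 * np.pi))  # fringe order
    return phi_high + 2 * np.pi * k

def unwrap_hierarchical(wrapped, freqs):
    """wrapped[0] is the unit-frequency phase; each result becomes the next reference."""
    Phi = np.asarray(wrapped[0], dtype=float)
    for k in range(1, len(freqs)):
        ratio = freqs[k] / freqs[k - 1]
        Phi = unwrap_with_reference(np.asarray(wrapped[k], dtype=float), Phi, ratio)
    return Phi
```

Because the fringe order is obtained by rounding, an error in the reference phase at a pixel propagates into the absolute phase there, which is why an invalid fundamental-frequency phase invalidates the result.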

Figure 5.

The process of phase unwrapping: (a) without MPO method, (b) with MPO method.

The fundamental-frequency images are processed with the phase validity detection method of Equation (11), yielding a binary image D in which pixels with valid phases are set to 1 and pixels with invalid phases are set to 0. To identify the boundaries of the phase-invalid connected regions, we apply image erosion to D, defined as

$$
F = D \ominus E, \qquad P = D - F, \tag{13}
$$

where E represents the erosion kernel, F represents the binary image after erosion, and P represents the difference image between the original image and the eroded image. Pixels with $P = 1$ represent the outer boundaries of the phase-invalid connected regions. The phases of these outer boundary pixels are used for surface fitting, and the fitted surface serves as an interpolation for the phase-invalid regions. This process allows us to derive a valid fundamental-frequency phase, denoted as $\hat{\phi}_b$.

Here, a through e denote the parameters of the fitted surface, typically estimated using the least squares method [33,34], which minimizes the loss function

$$
L = \sum_{i=1}^{N_P} \left[\hat{\phi}_b(x_i, y_i) - \phi_b(x_i, y_i)\right]^2, \tag{15}
$$

where $N_P$ represents the total number of pixels with $P = 1$, and $(x_i, y_i)$ denotes the image coordinates of the i-th such pixel. Next, we substitute the original fundamental-frequency phase $\phi_b$ in Equation (12) with $\hat{\phi}_b$, obtaining

$$
\hat{\Phi}_h = \phi_h + 2\pi \cdot \operatorname{round}\left(\frac{f\,\hat{\phi}_b - \phi_h}{2\pi}\right). \tag{16}
$$

If only two sets of phase-shifting frequencies are used, $\hat{\Phi}_h$ is the final absolute phase. When three or more sets are involved, $\hat{\Phi}_h$ serves as the new fundamental-frequency phase, and the process is repeated until the absolute phase of the highest frequency is calculated.
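A compact sketch of the MPO interpolation step for a single phase-invalid region follows. The 3 × 3 erosion kernel, the five-term basis $[x^2, y^2, x, y, 1]$, and the restriction to one connected region are assumptions made for illustration: the source does not state the surface form, and a full implementation would label the connected regions and fit each one separately.

```python
import numpy as np

def erode3x3(mask):
    """Binary erosion with a 3x3 square kernel; image borders replicate edge pixels."""
    padded = np.pad(np.asarray(mask, dtype=bool), 1, mode="edge")
    rows, cols = padded.shape[0] - 2, padded.shape[1] - 2
    out = np.ones((rows, cols), dtype=bool)
    for dr in range(3):
        for dc in range(3):
            out &= padded[dr:dr + rows, dc:dc + cols]
    return out

def outer_boundary(D):
    """P = D - F: valid pixels removed by erosion, i.e. pixels bordering invalid ones."""
    D = np.asarray(D, dtype=bool)
    return D & ~erode3x3(D)

def _quadratic_basis(x, y):
    # Assumed five-parameter surface: a*x^2 + b*y^2 + c*x + d*y + e.
    return np.column_stack([x ** 2, y ** 2, x, y, np.ones_like(x)])

def fill_invalid_phase(phi_b, D):
    """Replace phase-invalid pixels (D == 0) with a surface fitted to the boundary ring."""
    D = np.asarray(D, dtype=bool)
    P = outer_boundary(D)
    by, bx = np.nonzero(P)
    coeffs, *_ = np.linalg.lstsq(
        _quadratic_basis(bx.astype(float), by.astype(float)),
        np.asarray(phi_b, dtype=float)[P],
        rcond=None,
    )  # minimizes the sum of squared residuals over the boundary pixels
    filled = np.asarray(phi_b, dtype=float).copy()
    iy, ix = np.nonzero(~D)
    filled[iy, ix] = _quadratic_basis(ix.astype(float), iy.astype(float)) @ coeffs
    return filled
```

The filled array then stands in for the fundamental-frequency phase in the unwrapping step.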

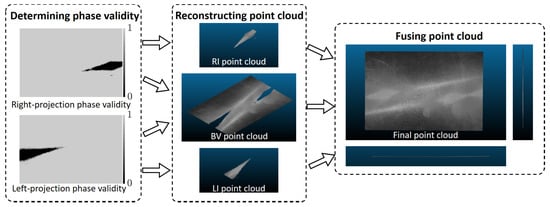

2.4. Point Cloud Fusion Based on Pixel Categorization

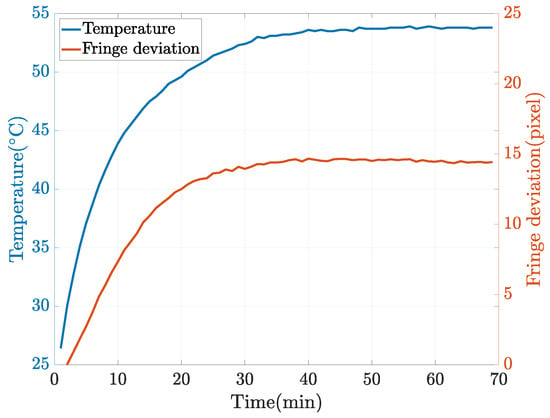

Following the pixel categorization method outlined in Section 2.2, we acquire three distinct sets of point cloud data for the high-reflectivity surface under measurement. These sets are denoted as the BV point cloud, RI point cloud, and LI point cloud, respectively. As depicted in Figure 6, we employ the RI and LI point clouds to address gaps in the BV point cloud. Ideally, with the same calibration information, these three sets of point clouds should align seamlessly and be directly fused. However, due to the thermal noise and lens distortion present in the projector, deviations occur in the reconstructed point clouds across different projection states, as illustrated in Figure 7. Thermal noise [35], primarily attributed to temperature fluctuations within the DLP projection system, directly causes an overall shift in fringe patterns. The relationship between temperature and fringe deviation is illustrated in Figure 8. Hence, we stabilize the operating temperature of the projectors to avoid the influence of thermal noise. Lens distortion can cause deformation in the reconstructed point clouds of planes, such as warping [36]. The varying distortion among different lenses results in varying deformation in the point clouds.

Figure 6.

The process of obtaining the final point cloud.

Figure 7.

Deviation between different point clouds.

Figure 8.

The curve graph of fringe deviation and temperature over time (the projector model is the LightCrafter 4500).

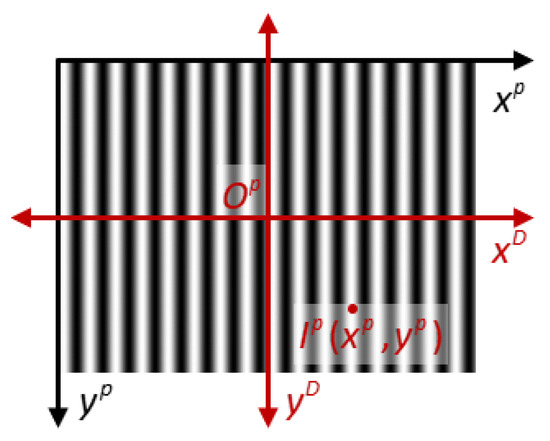

The projector lens introduces distortion into the projected fringe pattern, and analyzing how this distortion affects the pattern suggests a suitable correction. As shown in Figure 9, the distortion is centered on the optical origin of the projector and can be decomposed into two components, each parallel to one pixel axis of the projection pattern. The fringe pattern varies sinusoidally along one axis and does not vary along the other. Distortion along the sinusoidal direction makes the intensity of the actual pattern differ from that of the ideal pattern; this biases the solved phase and thus changes the correspondence between the phase and the 3D coordinates. Along the other direction, the actual and ideal patterns differ only minimally, so this component has no effect on the 3D coordinates. Consequently, as depicted in Figure 7, the different distortion parameters of the two projectors cause the reconstructed coordinates to exhibit relative tilting and sinking. To simplify the calculation, we approximate the deviation between the point clouds as a rigid transformation, which is corrected using a calibration matrix:

$$
P' = R\,P + T, \tag{17}
$$

where $P$ represents the original 3D coordinate, $P'$ represents the corrected 3D coordinate, and the calibration matrix is composed of a rotation matrix $R$ and a translation vector $T$. To determine $R$, we employ a checkerboard as the calibration target, reconstruct three distinct point clouds of it, and derive their normal vectors $\mathbf{n}_1$, $\mathbf{n}_2$, and $\mathbf{n}_3$ (as depicted in Figure 7). Using the Rodrigues rotation formula, we calculate $R$ from the normal vector of the DSLI point cloud and that of the point cloud being corrected. After the rotation, we extract the corner coordinates of the calibration target and calculate the average deviation between the corner coordinates in the DSLI point cloud and those in the other point cloud; this average deviation gives $T$.
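The rigid correction can be sketched as follows, assuming unit normals estimated elsewhere (for example by plane fitting) and corresponding checkerboard corners already extracted from both point clouds. The rotation uses the Rodrigues formula $R = I + \sin\theta\,[k]_\times + (1-\cos\theta)[k]_\times^2$ with unit axis $k$ along $n_{\text{other}} \times n_{\text{DSLI}}$; the function names are hypothetical.

```python
import numpy as np

def _skew(k):
    """Cross-product matrix [k]_x such that [k]_x @ v == np.cross(k, v)."""
    return np.array([[0.0, -k[2], k[1]],
                     [k[2], 0.0, -k[0]],
                     [-k[1], k[0], 0.0]])

def rotation_aligning(n_from, n_to):
    """Rodrigues rotation taking direction n_from onto direction n_to."""
    a = np.asarray(n_from, dtype=float) / np.linalg.norm(n_from)
    b = np.asarray(n_to, dtype=float) / np.linalg.norm(n_to)
    axis = np.cross(a, b)
    sin_t = np.linalg.norm(axis)
    cos_t = float(np.dot(a, b))
    if sin_t < 1e-12:
        if cos_t > 0:
            return np.eye(3)  # normals already aligned
        raise ValueError("antiparallel normals: rotation axis is undefined")
    K = _skew(axis / sin_t)
    return np.eye(3) + sin_t * K + (1.0 - cos_t) * (K @ K)

def translation_from_corners(corners_dsli, corners_rotated):
    """T = mean deviation between matching corners (DSLI minus rotated cloud)."""
    return np.mean(np.asarray(corners_dsli, float) - np.asarray(corners_rotated, float), axis=0)

def correct(points, R, T):
    """Apply P' = R P + T to an (n, 3) array of points."""
    return np.asarray(points, dtype=float) @ R.T + T
```

In use, one would compute `R = rotation_aligning(n_other, n_dsli)`, rotate the other cloud's corners with `correct(corners_other, R, np.zeros(3))`, obtain `T` from `translation_from_corners`, and finally apply `correct(cloud_other, R, T)`.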

Figure 9.

Decomposition of the projected fringe pattern distortion.

Following the correction of the point clouds, we minimize the discrepancies between them, enabling the fusion of the different point clouds. As illustrated in Figure 6, we fuse the three sets of point clouds to obtain the final point cloud.
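The fusion step amounts to selecting, per pixel, the point computed by the model that matches the pixel's category. The sketch below assumes per-pixel point maps of shape (rows, cols, 3) that have already been corrected into a common frame; the function name is hypothetical. LI pixels (left invalid) take the right-projector point, RI pixels take the left-projector point, and BI pixels are dropped.

```python
import numpy as np

def fuse_point_maps(Kr, Kl, P_dsli, P_right, P_left):
    """Fuse per-pixel point maps of shape (rows, cols, 3) into an (n, 3) cloud.

    BV pixels -> DSLI point; LI pixels (left invalid) -> right-projector point;
    RI pixels (right invalid) -> left-projector point; BI pixels are discarded.
    """
    Kr = np.asarray(Kr, dtype=bool)
    Kl = np.asarray(Kl, dtype=bool)
    bv = Kr & Kl
    li = Kr & ~Kl
    ri = ~Kr & Kl
    return np.concatenate([P_dsli[bv], P_right[li], P_left[ri]], axis=0)
```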

3. Experiments

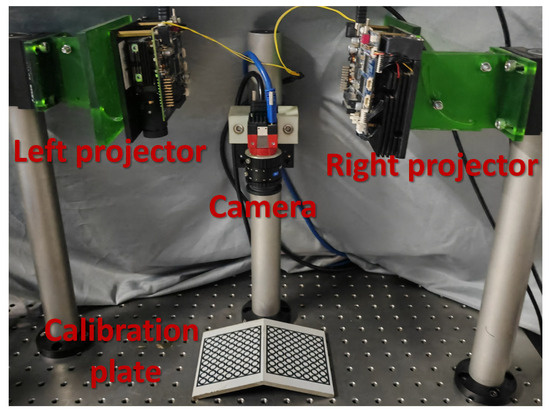

Our experimental system consists of two Texas Instruments (Dallas, TX, USA) LightCrafter 4500 DLP projectors with a resolution of 912 × 1140 and an Allied Vision (Stadtroda, Germany) Alvium 1800U-2050m camera with a resolution of 5496 × 3672, as shown in Figure 10. We align the fringe direction perpendicular to the system baseline to ensure system accuracy [28]. In the subsequent experiments, we use three distinct frequencies to obtain the wrapped phases and then apply the conventional temporal phase unwrapping method to obtain the unwrapped phase. The fringe pattern parameters are specified as follows: the spatial frequencies of the patterns are denoted as $f = \{f_1, f_2, f_3\}$, where $f_1$ is the fundamental frequency, $f_2$ the intermediate frequency, and $f_3$ the high frequency; the phase-shift numbers of the patterns are denoted as $N = \{N_1, N_2, N_3\}$, where $N_1$, $N_2$, and $N_3$ are the total numbers of phase shifts for the respective frequencies.

Figure 10.

Experimental system setup.

3.1. Measuring Standard Plane

To evaluate the proposed DSLI model, we conducted a comparative analysis with the single-projection model. Both methods were employed to scan a standardized plane measuring 50 mm × 70 mm, with a flatness specification better than 1 μm. In this experimental setup, we set all frequency parameters as . However, for the phase shift parameters, we employed two different parameters, which were and . In the process of solving the phase, it is well established that increasing the number of phase shifts can effectively suppress noise [32]. Hence, we consider the 3D results obtained with as the reference values, while those acquired with are regarded as the measured values. Due to the projection limit of 128 patterns imposed by the LightCrafter 4500, the option of could only be set as .

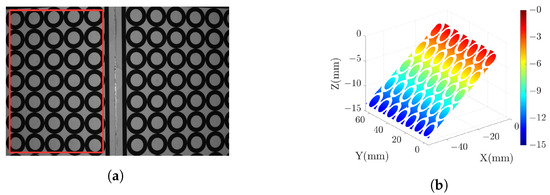

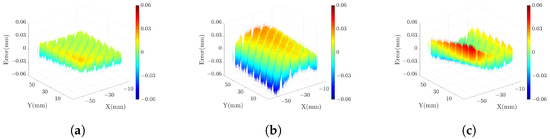

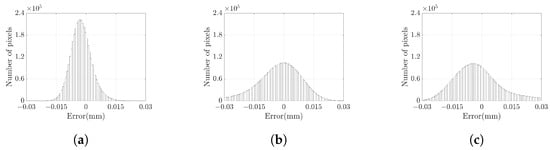

The grayscale image of the standard plane is displayed in Figure 11 (a), with the pixels inside the red box identified as the measured area. High-gray-value pixels within the grayscale image are excluded. The selected 3D point cloud is visualized in Figure 11 (b). We measure the standard plane with the DSLI model, the single-right-projector (SRP) model, and the single-left-projector (SLP) model, each using the two sets of parameters, which yields three sets of reference values and three sets of measured values. To quantify the measurement accuracy, we define the error as the Z-axis distance between the measured value and the reference value, and we calculate this error for each point under each of the three models. Table 1 lists the mean absolute error (MAE), root mean squared error (RMSE), and peak-to-valley (PV) value of the errors for each model. The results demonstrate that the DSLI model reduces the RMSE by more than 50%. Figure 12 illustrates the errors of individual points for the different models, and Figure 13 depicts the corresponding error distributions. These figures reveal that the errors of the DSLI model are mostly confined to the range of 0–0.015 mm, whereas some errors of the SRP and SLP models significantly exceed 0.015 mm.
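The statistics in Table 1 can be computed as below, assuming per-point signed errors $e = Z_{\text{meas}} - Z_{\text{ref}}$ and taking PV as the spread $\max e - \min e$ of those errors; this PV definition and the function name are assumptions.

```python
import numpy as np

def error_statistics(z_measured, z_reference):
    """MAE, RMSE and PV of per-point Z errors (measured minus reference)."""
    e = np.asarray(z_measured, dtype=float) - np.asarray(z_reference, dtype=float)
    mae = float(np.mean(np.abs(e)))
    rmse = float(np.sqrt(np.mean(e ** 2)))
    pv = float(e.max() - e.min())
    return mae, rmse, pv
```

A relative RMSE reduction such as the one reported above would then be $1 - \mathrm{RMSE}_{\mathrm{DSLI}} / \mathrm{RMSE}_{\mathrm{single}}$.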

Figure 11.

Measured result of the standard plane with : (a) grayscale image and the selected measured area, (b) point cloud map of the selected area (the points with high gray values are eliminated).

Table 1.

The errors of standard plane point clouds in different models.

Figure 12.

The scatter plots of errors for each point in the standard plane from (a) DSLI model, (b) SRP model, and (c) SLP model.

Figure 13.

The histograms of error distribution in the standard plane from (a) DSLI model, (b) SRP model, and (c) SLP model.

According to the standard-plane results, the DSLI model exhibits superior phase sensitivity, which effectively enhances the accuracy of the 3D coordinates. Because the MPO method in Section 2.3 converts part of the BI, LI, and RI pixels into BV pixels that are reconstructed with the DSLI model, it can in turn effectively improve the point cloud accuracy.

3.2. Measuring Precision Microgrooves

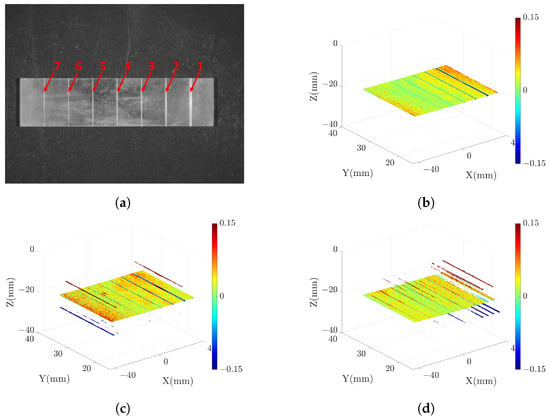

To verify the measurement capability of the proposed strategy, we measure a high-reflectivity copper block with seven precision microgrooves. The microgrooves are machined to an accuracy better than 1 μm, and the surface of the block is prone to specular reflection. The seven microgrooves, of varying sizes, are arranged from right to left on the copper block. We employ patterns with , to measure these microgrooves. The results of these measurements are illustrated in Figure 14. In Figure 14 (b)–(d), we fit all the points to a datum surface and use distinct colors to represent the distances between the points and the datum surface. Compared with the single-projector model, the proposed strategy clearly mitigates the excessive outliers caused by specular reflection.

Figure 14.

Measured results of microgrooves: (a) grayscale image, (b) point cloud in proposed strategy, (c) point cloud in SRP model, (d) point cloud in SLP model. In (b–d), the color represents the distance from the point to the datum surface.

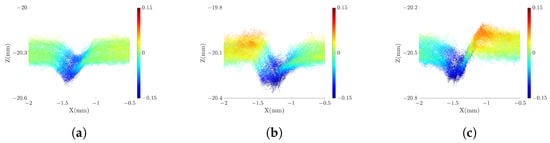

When calculating the depth of a microgroove, we fit a plane to the points on both sides of the microgroove. The average distance between the microgroove points and the fitted plane is taken as the depth measured value (DMV). The horizontal accuracy of the point cloud is influenced by both the z-direction accuracy and the camera parameters [29], whereas the z-direction accuracy can be assessed directly through the DMV. We define the difference between the DMV and the ground-truth depth as the measurement error, which serves as an indicator of the system's measurement capability. Figure 15 presents the side-view point cloud of microgroove number 4, clearly demonstrating the superior quality of the point cloud obtained with the proposed strategy compared to the SRP and SLP models. Table 2 lists the DMV and the measurement error for the different models. It shows that the single-projector models cannot measure every microgroove: as illustrated in Figure 14 (c)–(d), the point clouds in some microgroove areas are distorted and unsuitable for measurement. In contrast, the proposed strategy measures all the microgrooves with a relatively stable error. However, for microgroove numbers 5–7, the reduced width and increased depth-to-width ratio (D/W) reduce the measurement capability because of limitations inherent in the optical triangulation principle, which requires both the projected light and the camera view to reach the groove bottom.
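The depth evaluation can be sketched as a least-squares plane fit followed by averaging point-to-plane distances. The sketch assumes the side and groove points have already been segmented into (n, 3) arrays and parameterizes the plane as $z = ax + by + c$; the function names are hypothetical.

```python
import numpy as np

def fit_plane(points):
    """Least-squares plane z = a*x + b*y + c through an (n, 3) array of points."""
    pts = np.asarray(points, dtype=float)
    A = np.column_stack([pts[:, 0], pts[:, 1], np.ones(len(pts))])
    (a, b, c), *_ = np.linalg.lstsq(A, pts[:, 2], rcond=None)
    return a, b, c

def groove_depth(side_points, groove_points):
    """DMV: mean perpendicular distance from groove points to the plane of the sides."""
    a, b, c = fit_plane(side_points)
    g = np.asarray(groove_points, dtype=float)
    dist = np.abs(a * g[:, 0] + b * g[:, 1] - g[:, 2] + c) / np.sqrt(a ** 2 + b ** 2 + 1)
    return float(dist.mean())
```

The measurement error is then `groove_depth(side, groove) - ground_truth_depth`.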

Figure 15.

Measured results of microgroove number 4: (a) point cloud in proposed strategy, (b) point cloud in SRP model, and (c) point cloud in SLP model. The color represents the distance from the point to the datum surface.

Table 2.

The errors of microgroove point clouds in different models.

Based on the results of this experiment, we conclude that the proposed strategy can effectively measure defects on high-reflectivity surfaces. It achieves an accuracy of approximately 15 μm for small defects and better than 10 μm for relatively larger defects. As evident from both the MAE and the PV, the proposed strategy exhibits higher measurement accuracy and robustness than the single-projector model.

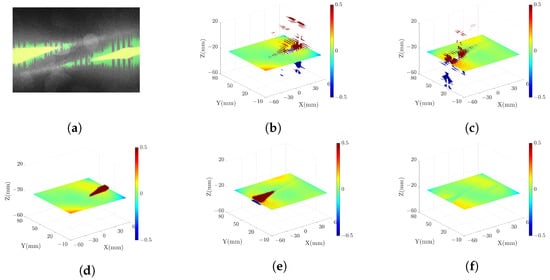

3.3. Measuring High-Reflectivity Metal Plate

To verify the optimization of the point cloud for high-reflectivity surfaces, we conducted scans of a metal plate with the pattern parameters and . The scanning results are presented in Figure 16, with Figure 16 (a) displaying the grayscale image of the metal plate and Figure 16 (b)–(f) showing the point clouds under different conditions. In the grayscale image, the yellow and green pixels represent pixels in the initial point cloud identified as outliers. The outliers associated with green pixels are eliminated using the MPO method described in Section 2.3, while the outliers linked to yellow pixels are removed during the point cloud fusion process outlined in Section 2.4. Figure 16 (f) displays the point cloud without outliers.

Figure 16.

Scanning results of high-reflectivity metal plate: (a) grayscale image, (b) initial point cloud in SRP model, (c) initial point cloud in SLP model, (d) optimized point cloud in SRP model, (e) optimized point cloud in SLP model, (f) final fused point cloud. In (b–f), the color represents the distance from the point to the datum surface.

To quantify the quality of the point cloud under different conditions, Table 3 lists the count of outliers deviating from the datum in different point clouds. The findings demonstrate a substantial reduction in outliers through the application of the MPO and point cloud fusion methods.

Table 3.

The number of outliers in different point clouds.

According to this experiment, our strategy is capable of measuring large-area high-reflectivity surfaces and generating accurate point clouds.

4. Conclusions

In this paper, we propose a novel quaternary categorization strategy for the scanning of high-reflectivity surfaces utilizing a setup consisting of two projectors and one camera. Firstly, we obtain two groups of phase information from two projectors along different directions, from which the pixels are classified into four types according to the phase characteristics. Secondly, we employ the MPO method to reduce the area of the invalid phase region. Based on the pixel type, we adaptively utilize either the DSLI model or the single-projector model for 3D reconstruction. Finally, we integrate the three categories of point clouds into one to obtain a high-accuracy result. The experimental results show that, in terms of point cloud accuracy, the DSLI model exhibits an enhancement of over 50% compared to the single-projector model; regarding micro-defect measurements, the quaternary categorization strategy achieves accuracy of approximately 15 μm, representing an improvement of over 40% compared to the single-projector model. Concerning the measurements of broad high-reflectivity surfaces, the quaternary categorization strategy effectively conducts measurements and mitigates outliers. In summary, our strategy significantly improves the measurement accuracy and robustness of SLI systems. In future work, we will further optimize our strategy to mitigate the impact of projector distortion on the accuracy of point cloud reconstruction.

Author Contributions

Conceptualization, B.X. and K.L.; methodology, S.Q. and G.Z.; software, S.Q.; validation, B.X., S.Q. and J.L.; formal analysis, B.X. and S.Q.; resources, Z.D. and H.L.; data curation, B.Z.; writing—original draft preparation, S.Q.; writing—review and editing, B.X., B.Z. and G.Z.; project administration, Z.D. and H.L.; funding acquisition, B.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Innovation Center of Nuclear Power Technology for National Defense Industry (HDLCXZX-2022-ZH-014).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data are not publicly available due to personal privacy.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Fang, F. On the Three Paradigms of Manufacturing Advancement. Nanomanuf. Metrol. 2023, 6, 1–3.

- Ito, S.; Kameoka, D.; Matsumoto, K.; Kamiya, K. Design and development of oblique-incident interferometer for form measurement of hand-scraped surfaces. Nanomanuf. Metrol. 2021, 4, 69–76.

- Shkurmanov, A.; Krekeler, T.; Ritter, M. Slice thickness optimization for the focused ion beam-scanning electron microscopy 3D tomography of hierarchical nanoporous gold. Nanomanuf. Metrol. 2022, 5, 112–118.

- Bai, J.; Wang, Y.; Wang, X.; Zhou, Q.; Ni, K.; Li, X. Three-probe error separation with chromatic confocal sensors for roundness measurement. Nanomanuf. Metrol. 2021, 4, 247–255.

- Han, M.; Lei, F.; Shi, W.; Lu, S.; Li, X. Uniaxial MEMS-based 3D reconstruction using pixel refinement. Opt. Express 2023, 31, 536–554.

- Liu, Z.; Yin, Y.; Wu, Q.; Li, X.; Zhang, G. On-site calibration method for outdoor binocular stereo vision sensors. Opt. Lasers Eng. 2016, 86, 75–82.

- Sun, Q.; Chen, J.; Li, C. A robust method to extract a laser stripe centre based on grey level moment. Opt. Lasers Eng. 2015, 67, 122–127.

- Liu, G.; Liu, X.; Feng, Q. 3D shape measurement of objects with high dynamic range of surface reflectivity. Appl. Opt. 2011, 50, 4557–4565.

- Qi, Z.; Wang, Z.; Huang, J.; Xing, C.; Gao, J. Error of image saturation in the structured-light method. Appl. Opt. 2018, 57, A181–A188.

- Palousek, D.; Omasta, M.; Koutny, D.; Bednar, J.; Koutecky, T.; Dokoupil, F. Effect of matte coating on 3D optical measurement accuracy. Opt. Mater. 2015, 40, 1–9.

- Jahid, T.; Karmouni, H.; Hmimid, A.; Sayyouri, M.; Qjidaa, H. Image moments and reconstruction by Krawtchouk via Clenshaw’s reccurence formula. In Proceedings of the 2017 International Conference on Electrical and Information Technologies (ICEIT), IEEE, Rabat, Morocco, 15–18 November 2017; pp. 1–7.

- Karmouni, H.; Jahid, T.; Hmimid, A.; Sayyouri, M.; Qjidaa, H. Fast computation of inverse Meixner moments transform using Clenshaw’s formula. Multimed. Tools Appl. 2019, 78, 31245–31265.

- Feng, S.; Zhang, L.; Zuo, C.; Tao, T.; Chen, Q.; Gu, G. High dynamic range 3D measurements with fringe projection profilometry: A review. Meas. Sci. Technol. 2018, 29, 122001.

- Zhang, S.; Yau, S. High dynamic range scanning technique. Opt. Eng. 2009, 48, 033604.

- Qi, Z.; Wang, Z.; Huang, J.; Xue, Q.; Gao, J. Improving the quality of stripes in structured-light three-dimensional profile measurement. Opt. Eng. 2016, 56, 031208.

- Ekstrand, L.; Zhang, S. Autoexposure for three-dimensional shape measurement using a digital-light-processing projector. Opt. Eng. 2011, 50, 123603.

- Sun, J.; Zhang, Q. A 3D shape measurement method for high-reflective surface based on accurate adaptive fringe projection. Opt. Lasers Eng. 2022, 153, 106994.

- Zhu, Z.; Li, M.; Zhou, F.; You, D. Stable 3D measurement method for high dynamic range surfaces based on fringe projection profilometry. Opt. Lasers Eng. 2023, 166, 107542.

- Zhu, Z.; You, D.; Zhou, F.; Wang, S.; Xie, Y. Rapid 3D reconstruction method based on the polarization-enhanced fringe pattern of an HDR object. Opt. Express 2021, 29, 2162–2171.

- Salahieh, B.; Chen, Z.; Rodriguez, J.J.; Liang, R. Multi-polarization fringe projection imaging for high dynamic range objects. Opt. Express 2014, 22, 10064–10071.

- Feng, S.; Chen, Q.; Zuo, C.; Asundi, A. Fast three-dimensional measurements for dynamic scenes with shiny surfaces. Opt. Commun. 2017, 382, 18–27.

- Kowarschik, R.M.; Kuehmstedt, P.; Gerber, J.; Schreiber, W.; Notni, G. Adaptive optical 3-D-measurement with structured light. Opt. Eng. 2000, 39, 150–158.

- Zhang, S.; Huang, P.S. Novel method for structured light system calibration. Opt. Eng. 2006, 45, 083601.

- Jia, Z.; Sun, H.; Liu, W.; Yu, X.; Yu, J.; Rodríguez-Andina, J.J.; Gao, H. 3-D Reconstruction Method for a Multiview System Based on Global Consistency. IEEE Trans. Instrum. Meas. 2023, 72, 1–11.

- Yu, Y.; Lau, D.L.; Ruffner, M.P.; Liu, K. Dual-projector structured light 3D shape measurement. Appl. Opt. 2020, 59, 964–974.

- Jiang, C.; Lim, B.; Zhang, S. Three-dimensional shape measurement using a structured light system with dual projectors. Appl. Opt. 2018, 57, 3983–3990.

- Zhang, Y.; Qu, X.; Li, Y.; Zhang, F. A Separation Method of Superimposed Gratings in Double-Projector Fringe Projection Profilometry Using a Color Camera. Appl. Sci. 2021, 11, 890.

- Zhang, R.; Guo, H.; Asundi, A.K. Geometric analysis of influence of fringe directions on phase sensitivities in fringe projection profilometry. Appl. Opt. 2016, 55, 7675–7687.

- Liu, K.; Wang, Y.; Lau, D.L.; Hao, Q.; Hassebrook, L.G. Dual-frequency pattern scheme for high-speed 3-D shape measurement. Opt. Express 2010, 18, 5229–5244.

- Yalla, V.G.; Hassebrook, L.G. Very high resolution 3D surface scanning using multi-frequency phase measuring profilometry. In Proceedings of the Spaceborne Sensors II, International Society for Optics and Photonics, SPIE, Orlando, FL, USA, 28 March–1 April 2005; Tchoryk, P., Jr., Holz, B., Eds.; Volume 5798, pp. 44–53.

- Lv, S.; Tang, D.; Zhang, X.; Yang, D.; Deng, W.; Kemao, Q. Fringe projection profilometry method with high efficiency, precision, and convenience: Theoretical analysis and development. Opt. Express 2022, 30, 33515–33537.

- Zuo, C.; Huang, L.; Zhang, M.; Chen, Q.; Asundi, A. Temporal phase unwrapping algorithms for fringe projection profilometry: A comparative review. Opt. Lasers Eng. 2016, 85, 84–103.

- Han, M.; Kan, J.; Yang, G.; Li, X. Robust Ellipsoid Fitting Using Combination of Axial and Sampson Distances. IEEE Trans. Instrum. Meas. 2023, 72, 2526714.

- Du, H. DMPFIT: A Tool for Atomic-Scale Metrology via Nonlinear Least-Squares Fitting of Peaks in Atomic-Resolution TEM Images. Nanomanuf. Metrol. 2022, 5, 101–111.

- Overmann, S.P. Thermal Design Considerations for Portable DLP™ Projectors. In Proceedings of the High-Density Interconnect and Systems Packaging, Santa Clara, CA, USA, 17–20 April 2001; Volume 4428, p. 125.

- Zhang, G.; Xu, B.; Lau, D.L.; Zhu, C.; Liu, K. Correcting projector lens distortion in real time with a scale-offset model for structured light illumination. Opt. Express 2022, 30, 24507–24522.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).