Why You Cannot Rank First: Modifications for Benchmarking Six-Degree-of-Freedom Visual Localization Algorithms

Abstract

1. Introduction

- Through experiments, we identify two limitations of the existing public benchmark for long-term visual localization evaluation: the evaluation method cannot eliminate the effect of human intervention, and the ranking method has no basis for ordering submitted methods whose ranking results are tied. Both limitations can prevent the benchmark from accurately measuring the localization capability of the submitted methods.

- In light of these limitations, we propose a unified preprocessing step for the current evaluation of localization methods on multi-camera datasets. It preserves the practical significance of the autonomous vehicle localization problem while adding another dimension to the dataset evaluation, which improves the fairness and reliability of the existing benchmark.

- We refine the current ranking method so that the benchmark can resolve the deadlocks that arise when candidates tie during the ranking calculation.

2. Related Works

2.1. Localization Benchmarks

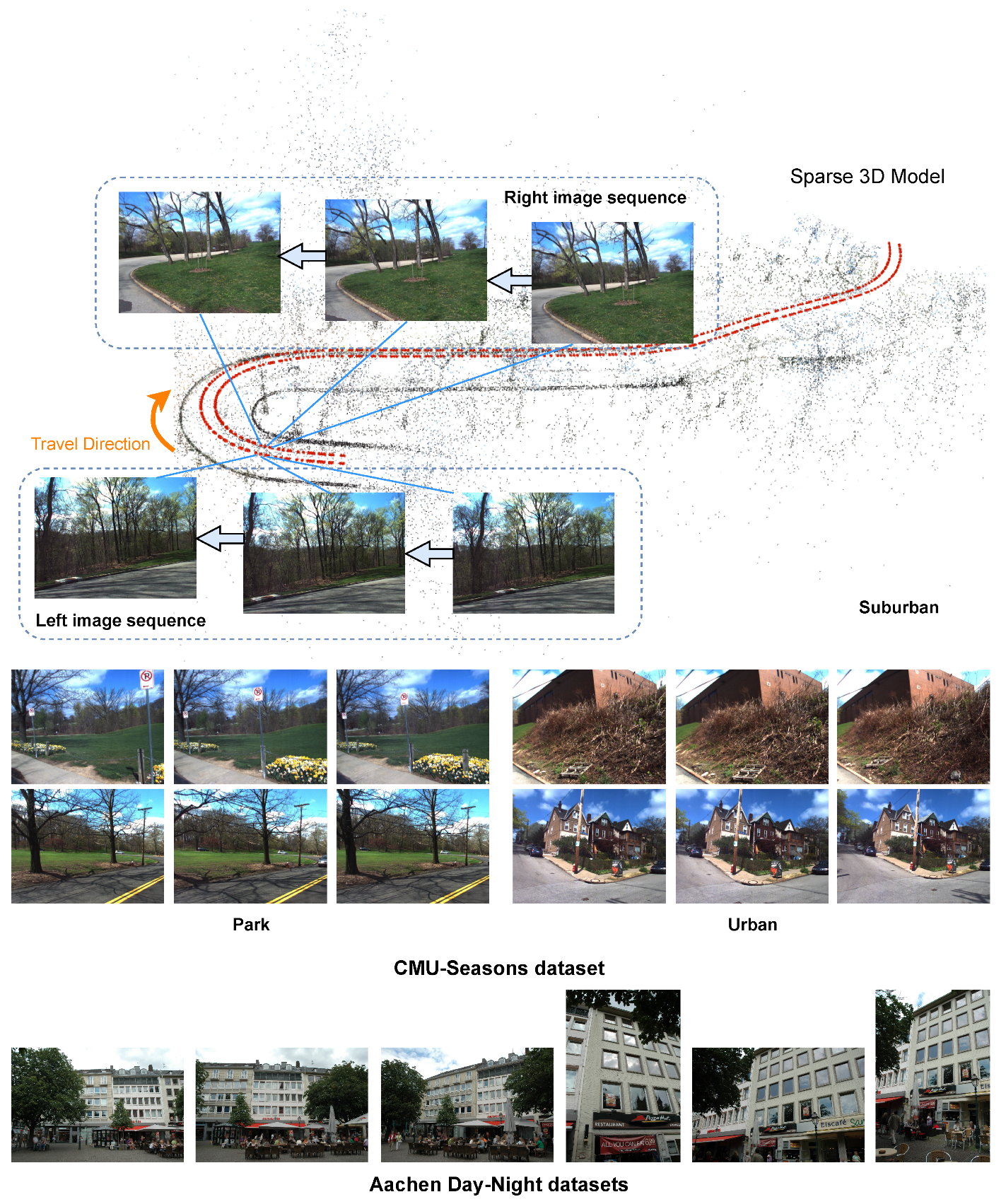

2.2. Benchmark Datasets

2.3. Ranking Methods

3. Proposed Modifications

3.1. Evaluation Modification

3.2. Ranking Modification

4. Experiments

4.1. Simulation Experiment for Evaluation

- Each camera is mounted at the same height, 1.5 m above the ground.

- All acquisition points are evenly spaced with respect to the left camera, which matches the image acquisition scheme of most datasets. As a result, every two connected blue points in the left half of Figure 2 are 0.5 m apart, and every two connected blue points in the right half of Figure 2 are separated by one-fiftieth of three-quarters of the central angle.

- The tested method successfully localizes 70% of the simulated query images.

- The orientation error of every simulated query image is negligible, so the accuracy interval of a localization result depends solely on its position error.

- If both cameras of the rig are successfully localized, the pose of the right camera is compensated to the left camera, and the average of the two poses is taken as the vehicle's pose.

- If only the left camera is successfully localized, its pose is taken as the vehicle's pose.

- If only the right camera is successfully localized, its pose, after compensation to the left camera, is taken as the vehicle's pose.

- If neither camera is successfully localized, the localization of the vehicle is regarded as failed.
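The four rig rules above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation: only positions are fused (the orientation error is disregarded in the simulation), and the function name `fuse_rig_position` and the constant `right_to_left` offset are hypothetical stand-ins for the rig's calibrated relative pose.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def fuse_rig_position(
    left: Optional[Vec3],
    right: Optional[Vec3],
    right_to_left: Vec3,
) -> Optional[Vec3]:
    """Return the vehicle position from per-camera results, or None on failure.

    `left` / `right` are estimated camera positions (None = not localized).
    `right_to_left` is a hypothetical constant offset that moves the right
    camera's position onto the left camera; a real rig would apply the full
    calibrated relative pose instead of a plain translation.
    """
    compensated = None
    if right is not None:
        # Compensate the right camera's result to the left camera.
        compensated = tuple(r + o for r, o in zip(right, right_to_left))

    if left is not None and compensated is not None:
        # Both cameras localized: average the two positions.
        return tuple((a + b) / 2.0 for a, b in zip(left, compensated))
    if left is not None:
        # Only the left camera localized.
        return left
    if compensated is not None:
        # Only the right camera localized: use its compensated position.
        return compensated
    # Neither camera localized: the vehicle is not localized.
    return None
```

If one additionally assumes that the two cameras succeed independently, each with the 70% success rate stated above, at least one of them succeeds with probability 1 − 0.3² = 0.91, so the rig rules alone can raise the share of localized vehicle poses well above the single-camera rate.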

4.2. Actual Experiment for Evaluation

4.3. Experiment for Ranking

5. Results

5.1. Simulation Experiment for Evaluation

5.2. Actual Experiment for Evaluation

5.3. Experiment for Ranking

6. Discussion

6.1. Necessity

6.2. Influence

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 6-DOF | 6-Degree-of-Freedom |
| SLAM | Simultaneous Localization and Mapping |
| CB | Condition Balance |
| ICB | Intra-condition Balance |
| CCB | Cross-condition Balance |

References

- Castle, R.O.; Klein, G.; Murray, D.W. Video-Rate Localization in Multiple Maps for Wearable Augmented Reality. In Proceedings of the 12th IEEE International Symposium on Wearable Computers (ISWC 2008), Pittsburgh, PA, USA, 28 September–1 October 2008; IEEE Computer Society: Washington, DC, USA, 2008; pp. 15–22.

- Mur-Artal, R.; Montiel, J.M.M.; Tardós, J.D. ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Trans. Robot. 2015, 31, 1147–1163.

- Arandjelovic, R.; Gronat, P.; Torii, A.; Pajdla, T.; Sivic, J. NetVLAD: CNN Architecture for Weakly Supervised Place Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 1437–1451.

- Torii, A.; Arandjelović, R.; Sivic, J.; Okutomi, M.; Pajdla, T. 24/7 Place Recognition by View Synthesis. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 257–271.

- Cummins, M.; Newman, P. FAB-MAP: Probabilistic Localization and Mapping in the Space of Appearance. Int. J. Robot. Res. 2008, 27, 647–665.

- Torii, A.; Taira, H.; Sivic, J.; Pollefeys, M.; Okutomi, M.; Pajdla, T.; Sattler, T. Are Large-Scale 3D Models Really Necessary for Accurate Visual Localization? IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 814–829.

- Svärm, L.; Enqvist, O.; Kahl, F.; Oskarsson, M. City-Scale Localization for Cameras with Known Vertical Direction. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1455–1461.

- Liu, L.; Li, H.; Dai, Y. Efficient Global 2D-3D Matching for Camera Localization in a Large-Scale 3D Map. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2391–2400.

- Sattler, T.; Leibe, B.; Kobbelt, L. Efficient & Effective Prioritized Matching for Large-Scale Image-Based Localization. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1744–1756.

- Kendall, A.; Grimes, M.; Cipolla, R. PoseNet: A Convolutional Network for Real-Time 6-DOF Camera Relocalization. In Proceedings of the 2015 IEEE International Conference on Computer Vision, ICCV 2015, Santiago, Chile, 7–13 December 2015; IEEE Computer Society: Washington, DC, USA, 2015; pp. 2938–2946.

- Cao, S.; Snavely, N. Graph-Based Discriminative Learning for Location Recognition. Int. J. Comput. Vis. 2015, 112, 239–254.

- Chen, Z.; Jacobson, A.; Sünderhauf, N.; Upcroft, B.; Liu, L.; Shen, C.; Reid, I.; Milford, M. Deep Learning Features at Scale for Visual Place Recognition. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3223–3230.

- DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperPoint: Self-Supervised Interest Point Detection and Description. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 337–33712.

- Dusmanu, M.; Rocco, I.; Pajdla, T.; Pollefeys, M.; Sivic, J.; Torii, A.; Sattler, T. D2-Net: A Trainable CNN for Joint Description and Detection of Local Features. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 8084–8093.

- Revaud, J.; de Souza, C.R.; Humenberger, M.; Weinzaepfel, P. R2D2: Reliable and Repeatable Detector and Descriptor. In Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, Vancouver, BC, Canada, 8–14 December 2019; Wallach, H.M., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E.B., Garnett, R., Eds.; IEEE: Piscataway, NJ, USA, 2019; pp. 12405–12415.

- Schönberger, J.L.; Pollefeys, M.; Geiger, A.; Sattler, T. Semantic Visual Localization. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6896–6906.

- Toft, C.; Olsson, C.; Kahl, F. Long-Term 3D Localization and Pose from Semantic Labellings. In Proceedings of the 2017 IEEE International Conference on Computer Vision Workshops, ICCV Workshops 2017, Venice, Italy, 22–29 October 2017; IEEE Computer Society: Washington, DC, USA, 2017; pp. 650–659.

- Toft, C.; Stenborg, E.; Hammarstrand, L.; Brynte, L.; Pollefeys, M.; Sattler, T.; Kahl, F. Semantic Match Consistency for Long-Term Visual Localization. In Proceedings of the 15th European Conference on Computer Vision—ECCV 2018, Munich, Germany, 8–14 September 2018; pp. 391–408.

- Anoosheh, A.; Sattler, T.; Timofte, R.; Pollefeys, M.; Gool, L.V. Night-to-Day Image Translation for Retrieval-based Localization. In Proceedings of the International Conference on Robotics and Automation, ICRA 2019, Montreal, QC, Canada, 20–24 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 5958–5964.

- Han, S.; Gao, W.; Wan, Y.; Wu, Y. Scene-Unified Image Translation For Visual Localization. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 25–28 October 2020; pp. 2266–2270.

- Sattler, T.; Maddern, W.; Toft, C.; Torii, A.; Hammarstrand, L.; Stenborg, E.; Safari, D.; Okutomi, M.; Pollefeys, M.; Sivic, J.; et al. Benchmarking 6DOF Outdoor Visual Localization in Changing Conditions. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8601–8610.

- Schulze, M. A New Monotonic, Clone-Independent, Reversal Symmetric, and Condorcet-Consistent Single-Winner Election Method. Soc. Choice Welf. 2011, 36, 267–303.

- Milford, M.J.; Wyeth, G.F. SeqSLAM: Visual Route-Based Navigation for Sunny Summer Days and Stormy Winter Nights. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, St. Paul, MN, USA, 14–18 May 2012; pp. 1643–1649.

- Torii, A.; Sivic, J.; Okutomi, M.; Pajdla, T. Visual Place Recognition with Repetitive Structures. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 2346–2359.

- Irschara, A.; Zach, C.; Frahm, J.M.; Bischof, H. From Structure-from-Motion Point Clouds to Fast Location Recognition. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 2599–2606.

- Chen, D.M.; Baatz, G.; Koser, K.; Tsai, S.S.; Vedantham, R.; Pylvanainen, T.; Roimela, K.; Chen, X.; Bach, J.; Pollefeys, M.; et al. City-Scale Landmark Identification on Mobile Devices. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 737–744.

- Hutchison, D.; Kanade, T.; Kittler, J.; Kleinberg, J.M.; Mattern, F.; Mitchell, J.C.; Naor, M.; Nierstrasz, O.; Pandu Rangan, C.; Steffen, B.; et al. Location Recognition Using Prioritized Feature Matching. In Proceedings of the 11th European Conference on Computer Vision—ECCV 2010, Crete, Greece, 5–11 September 2010; Volume 6312, pp. 791–804.

- Li, Y.; Snavely, N.; Huttenlocher, D.P.; Fua, P. Worldwide Pose Estimation Using 3D Point Clouds. In Large-Scale Visual Geo-Localization; Zamir, A.R., Hakeem, A., Van Gool, L., Shah, M., Szeliski, R., Eds.; Springer: Cham, Switzerland, 2016; pp. 147–163.

- Sattler, T.; Weyand, T.; Leibe, B.; Kobbelt, L. Image Retrieval for Image-Based Localization Revisited. In Proceedings of the British Machine Vision Conference, Surrey, BC, Canada, 3–7 September 2012; pp. 76.1–76.12.

- Shotton, J.; Glocker, B.; Zach, C.; Izadi, S.; Criminisi, A.; Fitzgibbon, A. Scene Coordinate Regression Forests for Camera Relocalization in RGB-D Images. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2930–2937.

- Sun, X.; Xie, Y.; Luo, P.; Wang, L. A Dataset for Benchmarking Image-Based Localization. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5641–5649.

- Badino, H.; Huber, D.; Kanade, T. The CMU Visual Localization Data Set. 2011. Available online: http://3dvis.ri.cmu.edu/data-sets/localization (accessed on 1 January 2020).

- Maddern, W.; Pascoe, G.; Linegar, C.; Newman, P. 1 Year, 1000 Km: The Oxford RobotCar Dataset. Int. J. Robot. Res. 2017, 36, 3–15.

- Taira, H.; Okutomi, M.; Sattler, T.; Cimpoi, M.; Pollefeys, M.; Sivic, J.; Pajdla, T.; Torii, A. InLoc: Indoor Visual Localization with Dense Matching and View Synthesis. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 1293–1307.

- Wijmans, E.; Furukawa, Y. Exploiting 2D Floorplan for Building-Scale Panorama RGBD Alignment. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1427–1435.

- Badino, H.; Huber, D.; Kanade, T. Visual Topometric Localization. In Proceedings of the 2011 IEEE Intelligent Vehicles Symposium (IV), Baden-Baden, Germany, 5–9 June 2011; pp. 794–799.

- Humenberger, M.; Cabon, Y.; Guerin, N.; Morat, J.; Revaud, J.; Rerole, P.; Pion, N.; de Souza, C.; Leroy, V.; Csurka, G. Robust Image Retrieval-based Visual Localization Using Kapture. arXiv 2020, arXiv:2007.13867.

- Schulze, M. The Schulze Method of Voting. arXiv 2021, arXiv:1804.02973.

- Zhang, Z.; Sattler, T.; Scaramuzza, D. Reference Pose Generation for Long-term Visual Localization via Learned Features and View Synthesis. Int. J. Comput. Vis. 2021, 129, 821–844.

| Route | Method | Current Method | Improved Method |
|---|---|---|---|
| | distance [m] | 0.25/0.50/5.0 | 0.25/0.50/5.0 |
| | orient. [deg] | 2/5/10 | 2/5/10 |
| Straight Route | Basic | 20.5/62.5/70.0 | 39.0/86.0/93.0 |
| | Basic_rig | 27.5/82.0/93.0 | 39.0/86.0/93.0 |
| | Basic_rig_seq | 31.5/88.0/100.0 | 42.0/92.0/100.0 |
| Curved Route | Basic | 24.0/65.5/70.0 | 49.0/86.0/91.0 |
| | Basic_rig | 32.5/84.0/91.0 | 49.0/86.0/91.0 |
| | Basic_rig_seq | 38.0/92.5/100.0 | 55.0/95.0/100.0 |
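Each cell in the table above lists three percentages, one per threshold pair given in the `distance [m]` and `orient. [deg]` rows. The following sketch shows one way such a cell could be computed from per-query errors. The function names, the inclusive comparison, and the choice to count failed localizations as misses at every threshold are assumptions of this illustration, not details confirmed by the source.

```python
from typing import List, Optional, Tuple

# (position threshold [m], orientation threshold [deg]), taken from the table header rows.
THRESHOLDS = [(0.25, 2.0), (0.50, 5.0), (5.0, 10.0)]

# One entry per query: (position error [m], orientation error [deg]),
# or None if the query could not be localized at all.
Errors = List[Optional[Tuple[float, float]]]


def recall_at_thresholds(errors: Errors) -> List[float]:
    """Percentage of queries whose errors fall within each threshold pair."""
    if not errors:
        raise ValueError("at least one query is required")
    recalls = []
    for max_pos, max_rot in THRESHOLDS:
        # A query counts only if BOTH errors are within the thresholds
        # (inclusive comparison is an assumption); failures never count.
        hits = sum(
            1
            for e in errors
            if e is not None and e[0] <= max_pos and e[1] <= max_rot
        )
        recalls.append(100.0 * hits / len(errors))
    return recalls


def format_cell(recalls: List[float]) -> str:
    """Format the three percentages in the table's a/b/c style."""
    return "/".join(f"{r:.1f}" for r in recalls)
```

For example, `format_cell(recall_at_thresholds([(0.1, 0.0), (0.4, 0.0), (3.0, 0.0), None]))` yields `25.0/50.0/75.0`: one of four queries is within 0.25 m, two within 0.50 m, three within 5.0 m, and the failed query counts against all three.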

| CMU-Seasons | Urban | Suburban | Park | Overcast | Sunny |
|---|---|---|---|---|---|
| distance [m] | 0.25/0.50/5.0 | 0.25/0.50/5.0 | 0.25/0.50/5.0 | 0.25/0.50/5.0 | 0.25/0.50/5.0 |
| orient. [deg] | 2/5/10 | 2/5/10 | 2/5/10 | 2/5/10 | 2/5/10 |
| UniGAN(RGBS) + NV + SP [20] | 92.4/95.0/98.0 | 75.9/82.1/91.0 | 56.8/65.1/81.7 | 78.6/84.0/92.4 | 69.0/74.5/87.2 |
| UniGAN(RGBS) + NV + SP_rig | 93.3/95.8/98.0 | 81.4/88.6/94.8 | 65.3/74.3/82.8 | 80.9/86.2/90.6 | 76.9/83.3/89.5 |
| UniGAN(RGBS) + NV + SP_rig_seq | 93.4/96.1/98.4 | 81.6/89.2/96.1 | 66.5/77.4/89.7 | 81.1/86.7/92.3 | 78.2/86.5/96.2 |

| CMU-Seasons | foliage | mixed foliage | no foliage | low sun | cloudy | snow |
|---|---|---|---|---|---|---|
| distance [m] | 0.25/0.50/5.0 | 0.25/0.50/5.0 | 0.25/0.50/5.0 | 0.25/0.50/5.0 | 0.25/0.50/5.0 | 0.25/0.50/5.0 |
| orient. [deg] | 2/5/10 | 2/5/10 | 2/5/10 | 2/5/10 | 2/5/10 | 2/5/10 |
| UniGAN(RGBS) + NV + SP [20] | 71.7/76.9/88.4 | 75.2/81.5/90.8 | 82.9/87.9/93.4 | 75.4/81.8/90.4 | 80.9/85.2/92.6 | 74.8/81.8/89.3 |
| UniGAN(RGBS) + NV + SP_rig | 78.9/84.8/90.2 | 78.1/84.6/90.5 | 85.5/90.9/95.7 | 79.2/86.0/91.9 | 84.9/89.5/93.6 | 80.0/87.8/94.1 |
| UniGAN(RGBS) + NV + SP_rig_seq | 79.9/87.3/95.6 | 78.3/85.2/92.1 | 85.7/91.3/96.5 | 79.4/86.6/93.7 | 85.2/90.4/95.1 | 80.4/88.6/95.8 |

| Method | Day | Night |
|---|---|---|
| distance [m] | 0.25/0.50/5.0 | 0.25/0.50/5.0 |
| orient. [deg] | 2/5/10 | 2/5/10 |
| Method 1 | 90.9/96.7/99.5 | 78.5/91.1/98.4 |
| Method 2 | 89.8/96.1/99.4 | 77.0/90.6/100.0 |
| Method 3 | 90.0/96.2/99.5 | 72.3/86.4/97.9 |
| Method 4 | 88.8/95.4/99.0 | 74.3/90.6/98.4 |
| Method 5 | 86.0/94.8/98.8 | 72.3/88.5/99.0 |
| Method 6 | 87.1/94.7/98.3 | 74.3/86.9/97.4 |
| Method 7 | 0.0/0.0/0.0 | 73.3/88.0/98.4 |
| Method 8 | 0.0/0.0/0.0 | 69.1/87.4/98.4 |
| Method 9 | 0.0/0.0/0.0 | 73.3/86.9/97.9 |
| Method 10 | 0.0/0.0/0.0 | 71.2/86.9/97.9 |
| Method 11 | 0.0/0.0/0.0 | 71.2/86.9/97.9 |
| Method 12 | 0.0/0.0/0.0 | 72.3/86.4/97.4 |
| Method 13 | 0.0/0.0/0.0 | 72.3/85.3/97.9 |
| Method 14 | 0.0/0.0/0.0 | 67.5/85.9/97.4 |
| Method 15 | 76.5/85.9/94.1 | 34.6/41.4/49.7 |

| Rank | Method |
|---|---|
| 1 | Method 1 |
| 2 | Method 2 |
| 3 | Method 3, Method 4 |
| 5 | Method 5 |
| 6 | Method 6 |
| 7 | Method 7 |
| 8 | Method 8 |
| 9 | Method 9 |
| 10 | Method 10, Method 11 |
| 12 | Method 12, Method 13 |
| 14 | Method 14, Method 15 |

| Rank | Method |
|---|---|
| 1 | Method 1 |
| 2 | Method 2 |
| 3 | Method 4 |
| 4 | Method 3 |
| 5 | Method 5 |
| 6 | Method 6 |
| 7 | Method 7 |
| 8 | Method 8 |
| 9 | Method 9 |
| 10 | Method 10, Method 11 |
| 12 | Method 12 |
| 13 | Method 13 |
| 14 | Method 15 |
| 15 | Method 14 |
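The reference list cites the Schulze method, and the ranking tables above show candidates sharing a rank. To illustrate how such ties can arise, here is a minimal sketch of a generic Schulze-style ranking. It is not the benchmark's implementation and not the modification proposed in this paper. The function name `schulze_ranking` and the idea of treating every score column (one condition at one threshold) as a voter that prefers the higher percentage are assumptions made for this example.

```python
from typing import Dict, List


def schulze_ranking(scores: Dict[str, List[float]]) -> List[List[str]]:
    """Rank candidates with the Schulze method; each inner list is one tied group.

    `scores[name][k]` is candidate `name`'s score in column k. Treating each
    column as one voter that prefers higher scores is an assumption of this sketch.
    """
    names = list(scores)
    n_cols = len(next(iter(scores.values())))

    # d[a][b]: number of columns in which a strictly beats b.
    d = {a: {b: 0 for b in names} for a in names}
    for a in names:
        for b in names:
            if a != b:
                d[a][b] = sum(scores[a][k] > scores[b][k] for k in range(n_cols))

    # p[a][b]: strength of the strongest path from a to b,
    # computed with a Floyd-Warshall style widest-path update.
    p = {a: {b: (d[a][b] if d[a][b] > d[b][a] else 0) for b in names} for a in names}
    for i in names:
        for j in names:
            if i == j:
                continue
            for k in names:
                if k != i and k != j:
                    p[j][k] = max(p[j][k], min(p[j][i], p[i][k]))

    # Order candidates by how many others they strictly beat;
    # candidates that beat the same number of others form a tied group.
    wins = {a: sum(p[a][b] > p[b][a] for b in names if b != a) for a in names}
    groups: Dict[int, List[str]] = {}
    for a in names:
        groups.setdefault(wins[a], []).append(a)
    return [groups[w] for w in sorted(groups, reverse=True)]
```

Using the Day and Night scores of Method 3 and Method 4 from the table above as six columns, each method wins exactly three columns, so this pairwise comparison cannot separate them and the sketch returns them as one tied group. A tie-breaking rule has to come from additional information, which is the kind of gap the ranking modification targets.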


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Han, S.; Gao, W.; Hu, Z. Why You Cannot Rank First: Modifications for Benchmarking Six-Degree-of-Freedom Visual Localization Algorithms. Sensors 2023, 23, 9580. https://doi.org/10.3390/s23239580
