An Adaptive Two-Dimensional Voxel Terrain Mapping Method for Structured Environment

Abstract

1. Introduction

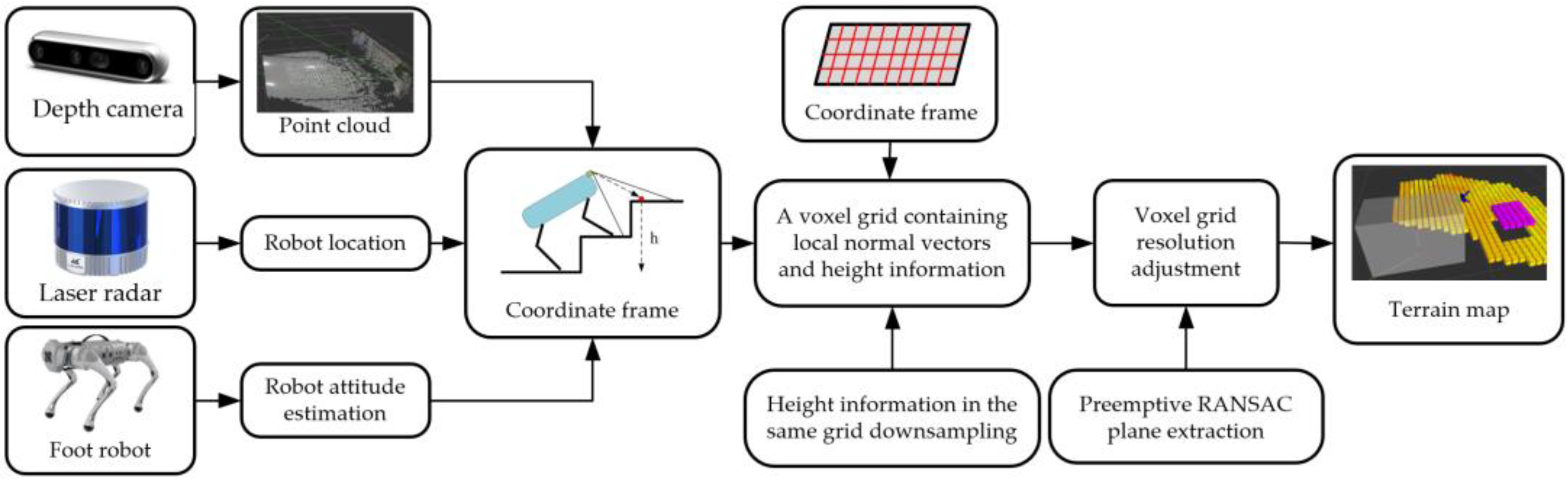

- (1) Within the terrain mapping framework, the environmental point cloud is transformed into height information and mapped onto a two-dimensional voxel grid. The height information is then downsampled to simplify the data structure and reduce redundancy.

- (2) The preemptive RANSAC algorithm extracts planes from the terrain height information in the voxel grid, which enables the estimation of parameters such as height and depth in structured environments.

- (3) To accommodate the characteristics of different planes, an adaptive resolution strategy adjusts and merges the resolution of the voxel grid within each extracted plane. The voxel grids of the different planes are then integrated, and a specialized data structure stores the final terrain mapping information.

- (4) An environment awareness system is built on the Unitree Go1 platform and deployed on an NUC10 embedded computer. Terrain mapping tests and evaluations are conducted in both simulation and real-world scenarios.

2. Related Work

3. Methods

3.1. Development of a Terrain Mapping System for Legged Robots

3.1.1. Coordinate Framework for Terrain Mapping

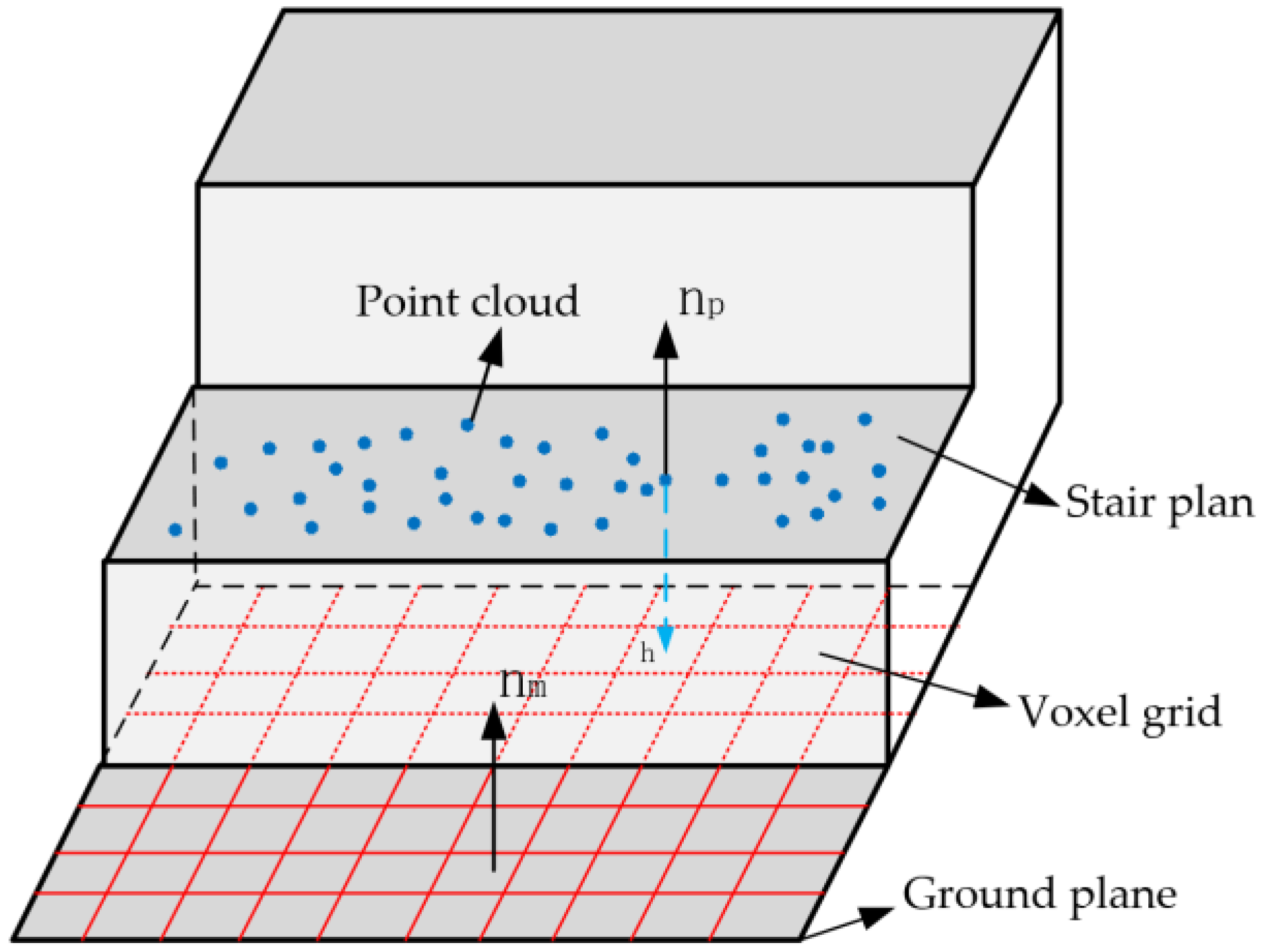

3.1.2. Construction of Two-Dimensional Voxel Grid for Topography Mapping

- (1) The height and position of each point are obtained from the point cloud, and a side length is set for the voxel grid. The two-dimensional voxel grid is then subdivided into child voxel grids.

- (2) The two-dimensional voxel grid is encoded so that the spatial index of each voxel grid cell can be determined.

- (3) The terrain height values obtained from the point cloud transformation are traversed in each grid cell. The maximum height value in each cell is kept, and the other height values are removed.
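The three steps above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the names `cell_index` and `build_height_grid`, the floor-based index encoding, and the dictionary used as storage are all assumptions made for illustration, since the encoding formula is not reproduced here.

```python
import math
from typing import Dict, Iterable, Tuple

Point = Tuple[float, float, float]  # (x, y, z) of one point, z is the height
Cell = Tuple[int, int]              # integer index of one 2D voxel grid cell


def cell_index(x: float, y: float, side: float) -> Cell:
    """Index of the cell containing (x, y).

    Assumption: cells are axis-aligned squares of side length `side`
    and the index is obtained by flooring the scaled coordinates.
    """
    return (math.floor(x / side), math.floor(y / side))


def build_height_grid(points: Iterable[Point], side: float) -> Dict[Cell, float]:
    """Map points onto the 2D grid, keeping only the maximum height per cell."""
    if side <= 0:
        raise ValueError("side must be positive")
    grid: Dict[Cell, float] = {}
    for x, y, z in points:
        idx = cell_index(x, y, side)
        # Keep the largest height seen so far; lower values are discarded.
        if idx not in grid or z > grid[idx]:
            grid[idx] = z
    return grid


if __name__ == "__main__":
    cloud = [(0.01, 0.01, 0.1), (0.02, 0.03, 0.3), (0.06, 0.01, 0.2)]
    print(build_height_grid(cloud, side=0.05))
```

Storing one height per occupied cell is what makes the grid a downsampled form of the point cloud: the number of stored values is bounded by the number of cells, not by the number of points.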

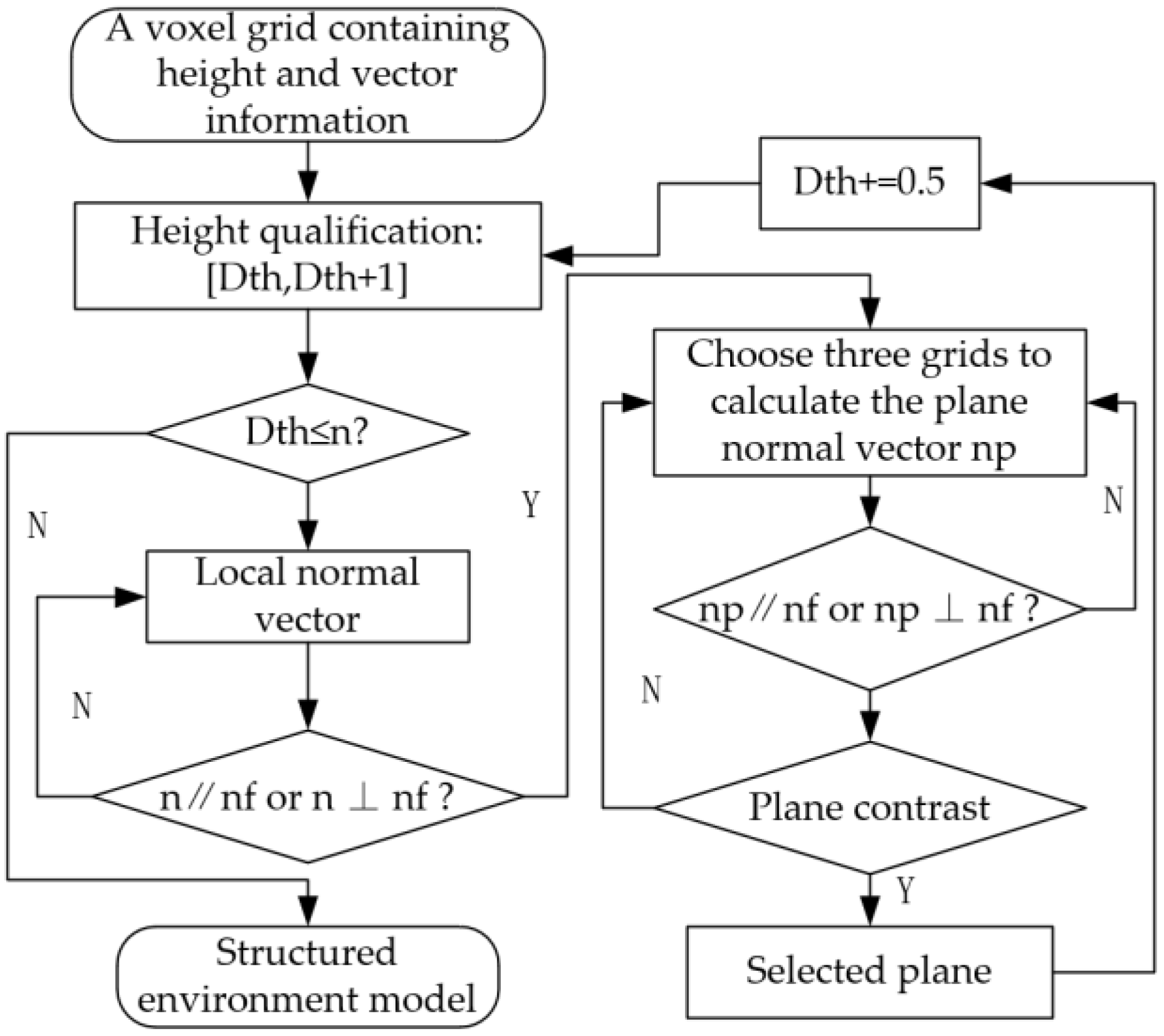

3.2. Plane Segmentation from Height Values

- (1) Three voxel grid cells are randomly selected from the voxel grid together with their height values. If the three cells are not collinear, the plane through them is calculated.

- (2) The difference between the height in each remaining grid cell and the height of the plane at that cell is calculated.

- (3) An appropriate height threshold d is selected. If the height difference is within d, the cell is classified as an in-plane voxel grid cell; otherwise, it is an out-of-plane cell. The number N of in-plane cells is counted.

- (4) The above three steps are repeated for K iterations. The plane with the largest N is identified as the best plane.
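The four steps above follow the usual RANSAC loop, which can be sketched as follows. This is a plain RANSAC illustration under stated assumptions, not the preemptive variant and not the authors' code: the function names, the plane form z = a·x + b·y + c expressed in cell-index coordinates, the vertical height difference as the error measure, and the `<=` comparison against d are all choices made for this example.

```python
import random
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]
Sample = Tuple[int, int, float]     # (i, j, height) of one grid cell
Plane = Tuple[float, float, float]  # (a, b, c) with z = a*i + b*j + c


def fit_plane(p1: Sample, p2: Sample, p3: Sample) -> Optional[Plane]:
    """Plane through three cells, or None if they are collinear in the grid."""
    (x1, y1, z1), (x2, y2, z2), (x3, y3, z3) = p1, p2, p3
    det = (x2 - x1) * (y3 - y1) - (x3 - x1) * (y2 - y1)
    if det == 0:
        return None  # collinear cells do not define a height plane
    a = ((z2 - z1) * (y3 - y1) - (z3 - z1) * (y2 - y1)) / det
    b = ((x2 - x1) * (z3 - z1) - (x3 - x1) * (z2 - z1)) / det
    c = z1 - a * x1 - b * y1
    return (a, b, c)


def ransac_plane(
    grid: Dict[Cell, float], d: float, k: int, seed: int = 0
) -> Tuple[Optional[Plane], List[Cell]]:
    """Return the plane with the most in-plane cells after k iterations."""
    cells: List[Sample] = [(i, j, h) for (i, j), h in grid.items()]
    if len(cells) < 3:
        raise ValueError("need at least three cells")
    rng = random.Random(seed)  # seeded only to make the sketch repeatable
    best_plane: Optional[Plane] = None
    best_inliers: List[Cell] = []
    for _ in range(k):
        plane = fit_plane(*rng.sample(cells, 3))  # step (1)
        if plane is None:
            continue
        a, b, c = plane
        # Steps (2) and (3): height difference against threshold d.
        inliers = [(i, j) for i, j, h in cells
                   if abs(h - (a * i + b * j + c)) <= d]
        if len(inliers) > len(best_inliers):      # step (4): keep largest N
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers


if __name__ == "__main__":
    floor = {(i, j): 0.0 for i in range(5) for j in range(5)}
    step = {(5, j): 0.2 for j in range(3)}
    plane, inliers = ransac_plane({**floor, **step}, d=0.01, k=200)
    print(plane, len(inliers))
```

To extract several planes, such as the treads of a staircase, one common approach is to remove the in-plane cells of the best plane from the grid and run the loop again on the remainder.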

3.3. Adaptive Voxel Grid Resolution

4. Experimental Validation

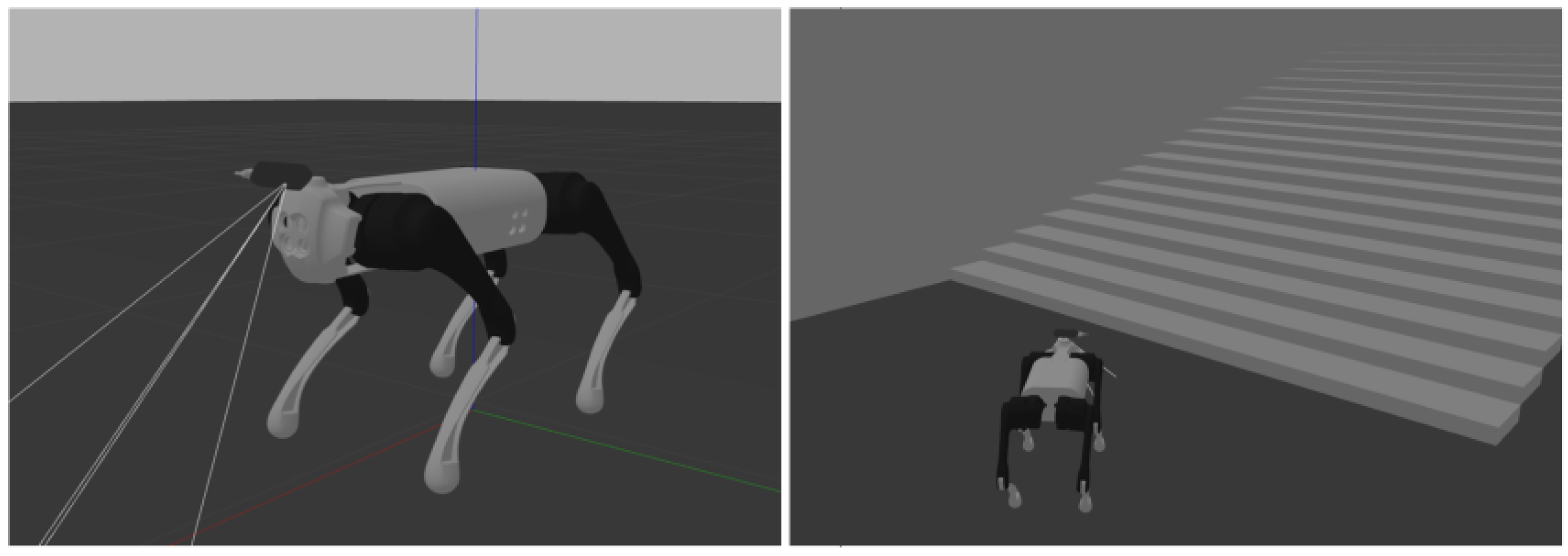

4.1. Simulation Experiment

4.1.1. Construction of the Experimental Environment

4.1.2. Simulated Experiment

4.2. Comparative Verification

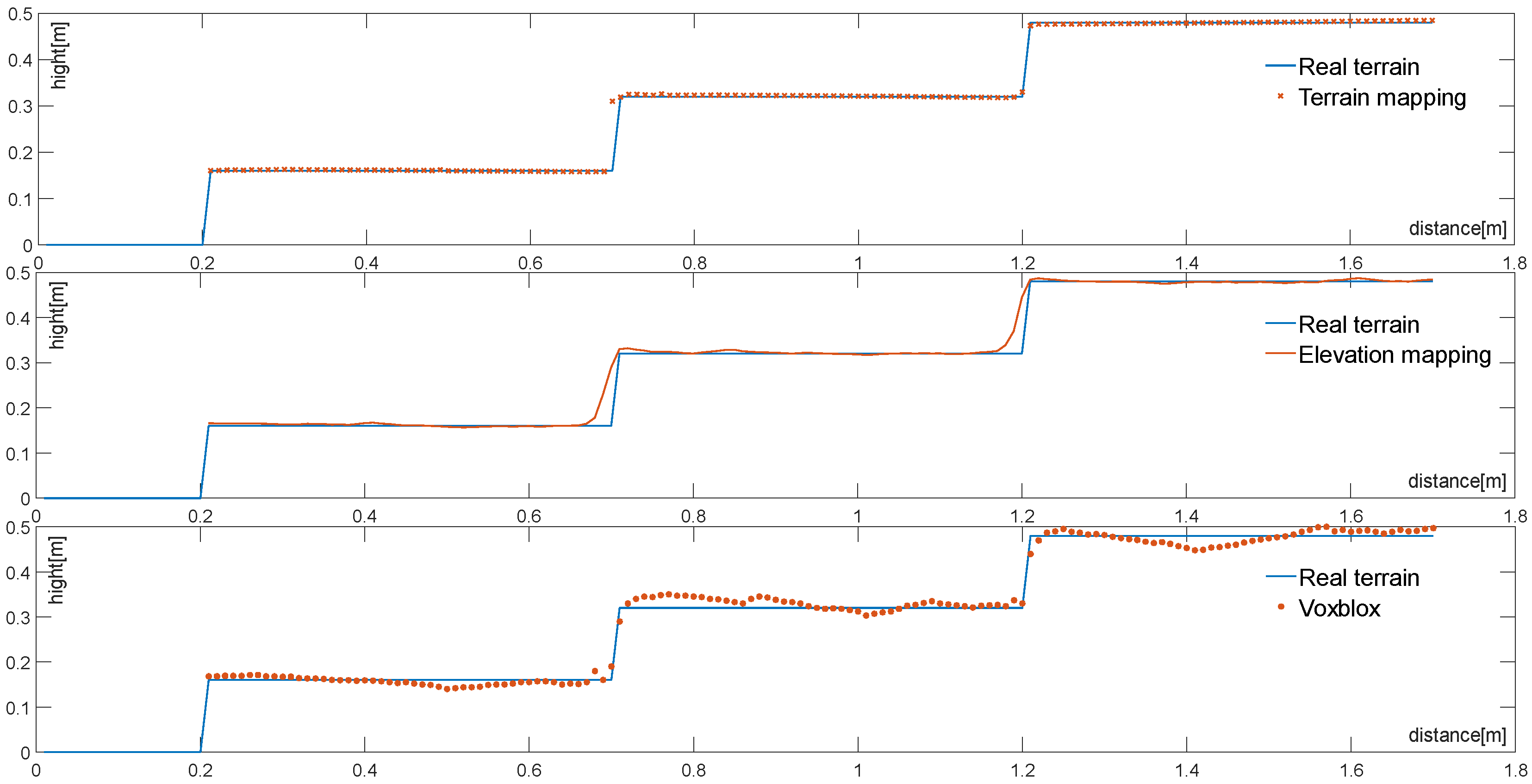

4.2.1. Experiment for Comparing Terrain Fitting Effects

4.2.2. Comparison of Terrain Mapping Errors

4.2.3. Comparison of Terrain Mapping Speed

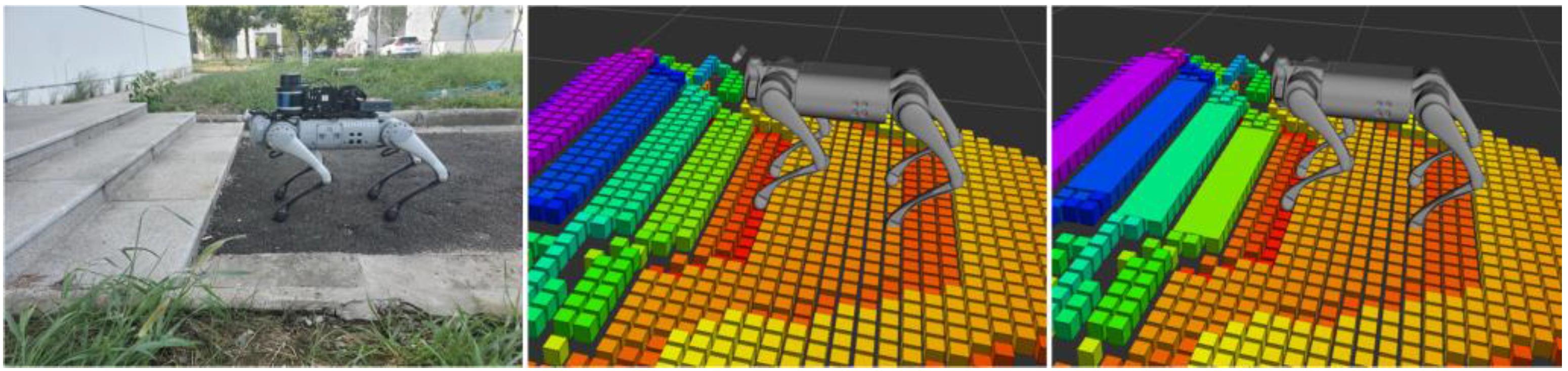

4.3. Real Terrain Testing

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Map Resolution (m) | Voxblox (s) | Elevation Mapping (s) | Ours (s) |
|---|---|---|---|
| 0.005 | 27.84 | 26.71 | 24.69 |
| 0.01 | 22.27 | 21.36 | 19.17 |
| 0.02 | 14.79 | 14.16 | 11.44 |
| 0.05 | 10.27 | 9.82 | 6.73 |
| 0.1 | 4.92 | 4.76 | 2.49 |
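The relative time saving implied by the table can be worked out directly from its entries. The short Python sketch below does this arithmetic, assuming the values are mapping times in seconds; the names `TIMES` and `reduction_percent` are introduced here for illustration only. At a resolution of 0.1 m, for example, the reduction relative to Voxblox is (4.92 − 2.49) / 4.92, or about 49%.

```python
from typing import Dict, Tuple

# Times in seconds copied from the table: (Voxblox, Elevation Mapping, Ours),
# keyed by map resolution in metres.
TIMES: Dict[float, Tuple[float, float, float]] = {
    0.005: (27.84, 26.71, 24.69),
    0.01: (22.27, 21.36, 19.17),
    0.02: (14.79, 14.16, 11.44),
    0.05: (10.27, 9.82, 6.73),
    0.1: (4.92, 4.76, 2.49),
}


def reduction_percent(baseline: float, ours: float) -> float:
    """Percentage by which `ours` is shorter than `baseline`."""
    return 100.0 * (baseline - ours) / baseline


if __name__ == "__main__":
    for res, (voxblox, elevation, ours) in TIMES.items():
        print(
            f"{res} m: "
            f"{reduction_percent(voxblox, ours):.1f}% vs Voxblox, "
            f"{reduction_percent(elevation, ours):.1f}% vs Elevation Mapping"
        )
```

In these figures the relative saving grows as the resolution becomes coarser, from roughly 11% against Voxblox at 0.005 m to roughly 49% at 0.1 m.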


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhou, H.; Ping, P.; Shi, Q.; Chen, H. An Adaptive Two-Dimensional Voxel Terrain Mapping Method for Structured Environment. Sensors 2023, 23, 9523. https://doi.org/10.3390/s23239523
