Visible Light Communications-Based Assistance System for the Blind and Visually Impaired: Design, Implementation, and Intensive Experimental Evaluation in a Real-Life Situation

Abstract

1. Introduction

2. State-of-the-Art Systems and Solutions for Visually Impaired Persons’ Assistance and Visible Light Communications Potential

2.1. Existing Solutions and Approaches in Visually Impaired Persons’ Assistance

2.2. Commercial Software Applications for Blind and Severely Visually Impaired Persons’ Assistance

2.3. Visible Light Communications and Their Potential in Blind and Severely Visually Impaired Persons’ Assistance

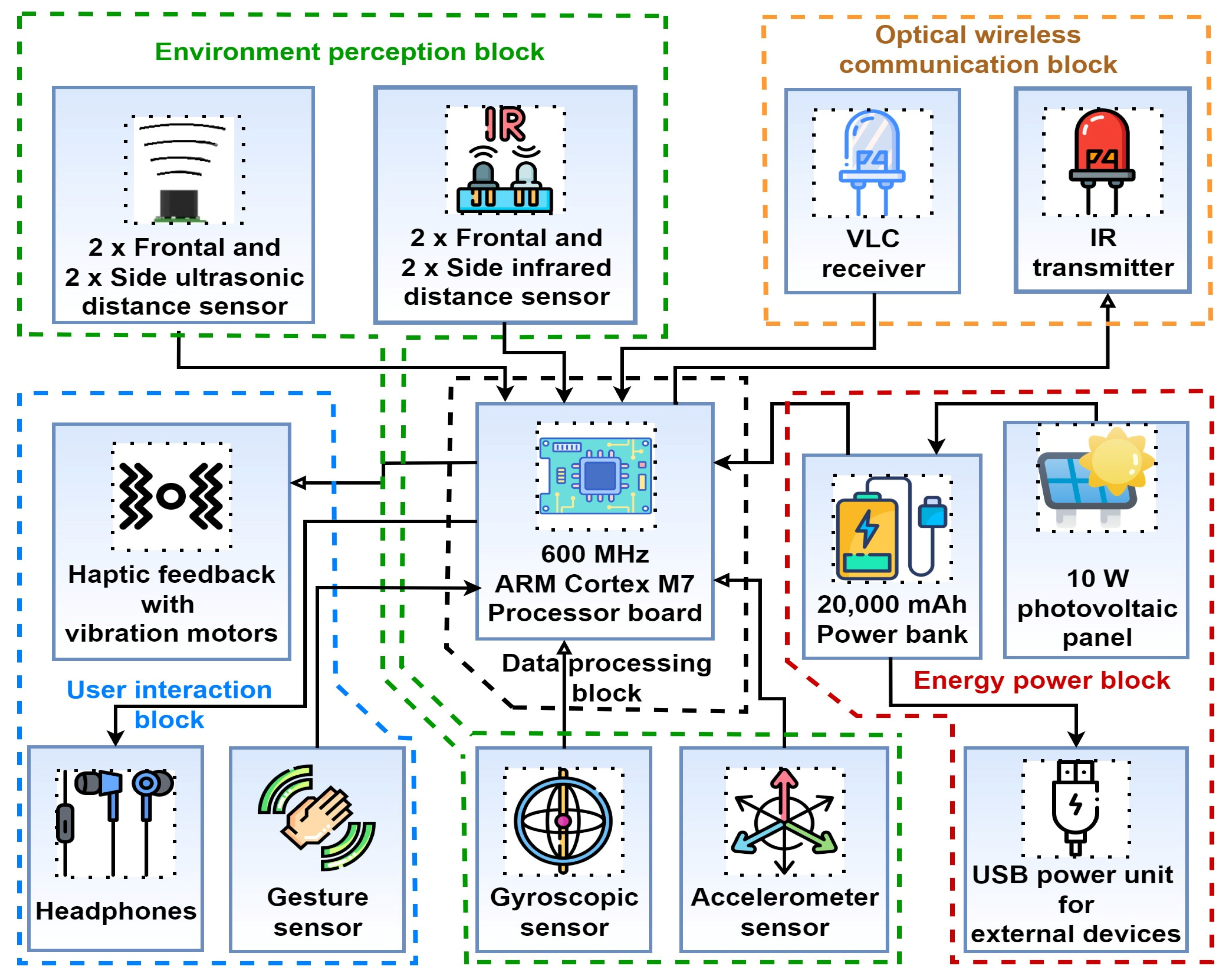

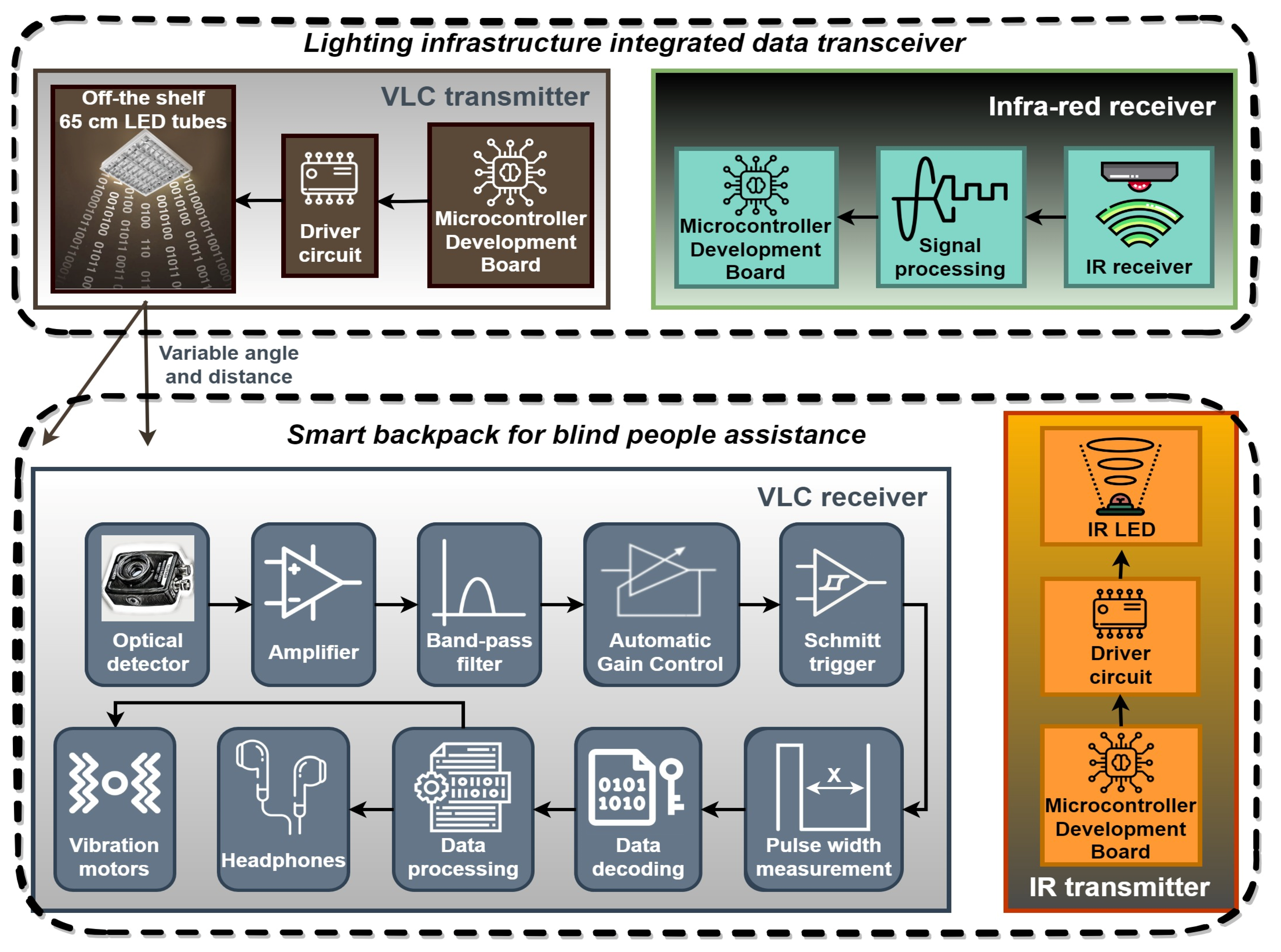

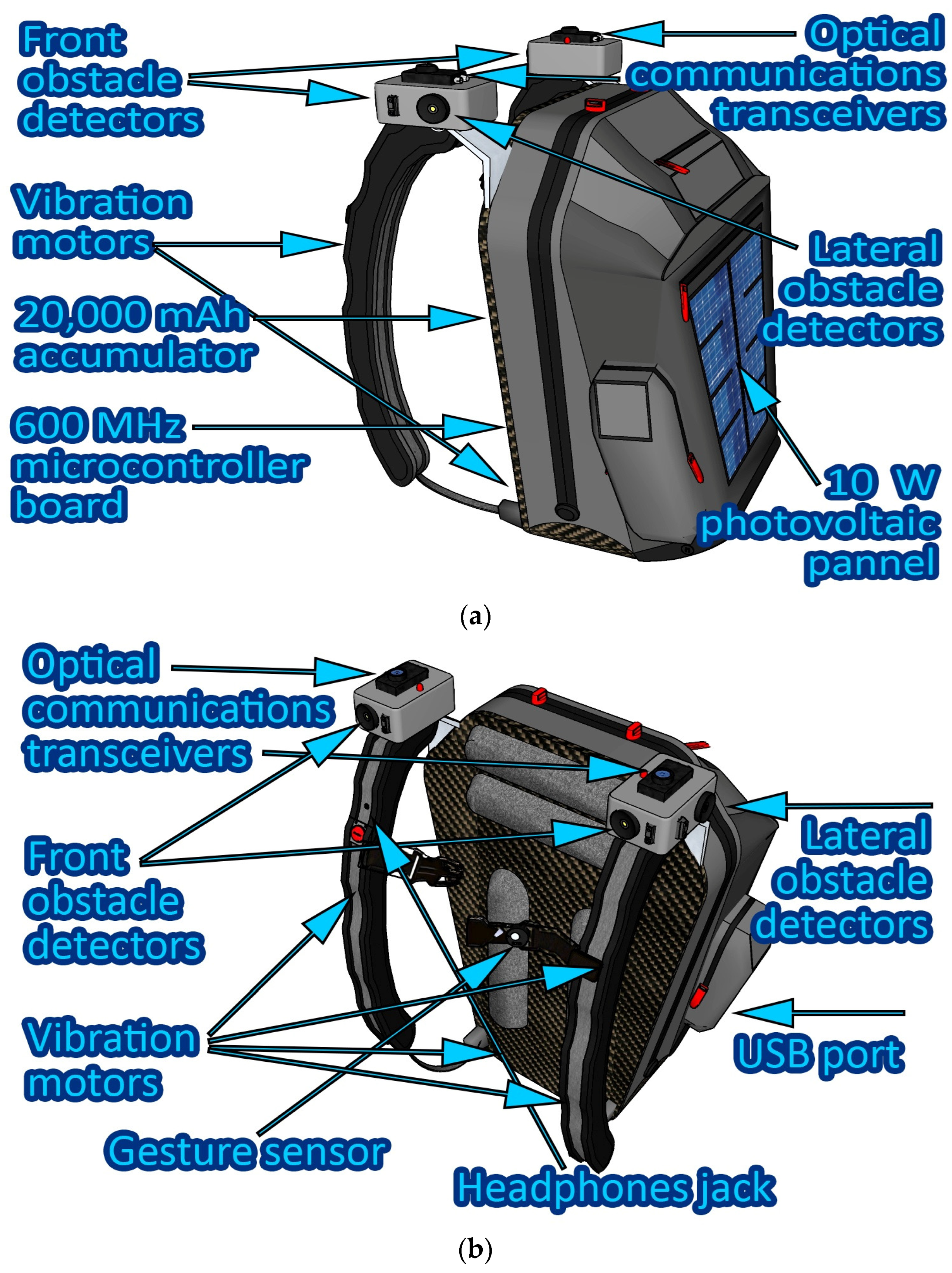

3. Conceptualization and Implementation of the Visible Light Communications-Based Smart Backpack for Blind and Severely Visually Impaired Persons’ Assistance

3.1. Prototype Conceptualization

3.1.1. Purpose Statement

3.1.2. Visible Light Communications-Based Smart Backpack for Visually Impaired Persons’ Assistance: Requirements and Guidelines

- VLC should not impact the existing lighting infrastructure from a hardware point of view, or should have only a minimal impact on it;

- The VLC function should not affect lighting from a regular user's visibility point of view, meaning that the same lighting intensity should be provided; thus, the light intensity should not be increased in order to improve the Signal-to-Noise Ratio (SNR), nor should it be decreased unnecessarily;

- VLC should not generate visible or perceivable flickering;

- When light dimming is necessary, data transmission should remain available.
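The flicker and dimming requirements above are usually addressed with line codes whose average optical power does not depend on the transmitted data. The Python sketch below contrasts Manchester coding, which always sits at a 50% average level, with variable pulse position modulation (VPPM), the dimming-compatible technique listed in the experimental results, whose average level equals the chosen duty cycle. It is a simplified chip-level model, not the prototype's firmware: the function names, the `slots` resolution, the Manchester polarity (IEEE 802.3 convention) and the bit-to-pulse-position mapping are all assumptions.

```python
"""Chip-level sketch: Manchester vs. VPPM average light level (hypothetical model)."""


def manchester_encode(bits):
    """Manchester coding, assuming the IEEE 802.3 convention:
    1 -> low-to-high (0, 1), 0 -> high-to-low (1, 0)."""
    chips = []
    for b in bits:
        chips += [0, 1] if b else [1, 0]
    return chips


def vppm_encode(bits, duty, slots=10):
    """VPPM sketch: each symbol holds one pulse of width round(duty * slots).
    Assumed convention: bit 0 -> pulse at the start, bit 1 -> pulse at the end."""
    if slots % 2:
        raise ValueError("slots must be even")
    width = round(duty * slots)
    if not 0 < width < slots:
        raise ValueError("duty cycle not representable with this many slots")
    off = slots - width
    chips = []
    for b in bits:
        chips += ([0] * off + [1] * width) if b else ([1] * width + [0] * off)
    return chips


def vppm_decode(chips, slots=10):
    """Decide each bit by comparing the light energy in the two symbol halves."""
    half = slots // 2
    bits = []
    for i in range(0, len(chips), slots):
        symbol = chips[i:i + slots]
        bits.append(1 if sum(symbol[half:]) > sum(symbol[:half]) else 0)
    return bits


def average_level(chips):
    """Mean optical power relative to the LED being fully on."""
    return sum(chips) / len(chips)


if __name__ == "__main__":
    data = [1, 0, 1, 1, 0, 0, 1, 0]
    print("Manchester:", average_level(manchester_encode(data)))
    for duty in (0.1, 0.5, 0.9):
        chips = vppm_encode(data, duty)
        assert vppm_decode(chips) == data
        print(f"VPPM duty {duty}:", average_level(chips))
```

Every VPPM symbol carries the same amount of light whatever its bit value, so brightness follows the duty cycle rather than the data. In this model the dimming step is 1/`slots`, so levels such as the 1–9% range reported for VPPM at 10 kb/s would need at least `slots=100`.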

3.2. Visible Light Communications-Based Smart Backpack for Visually Impaired Persons: Implementation Process

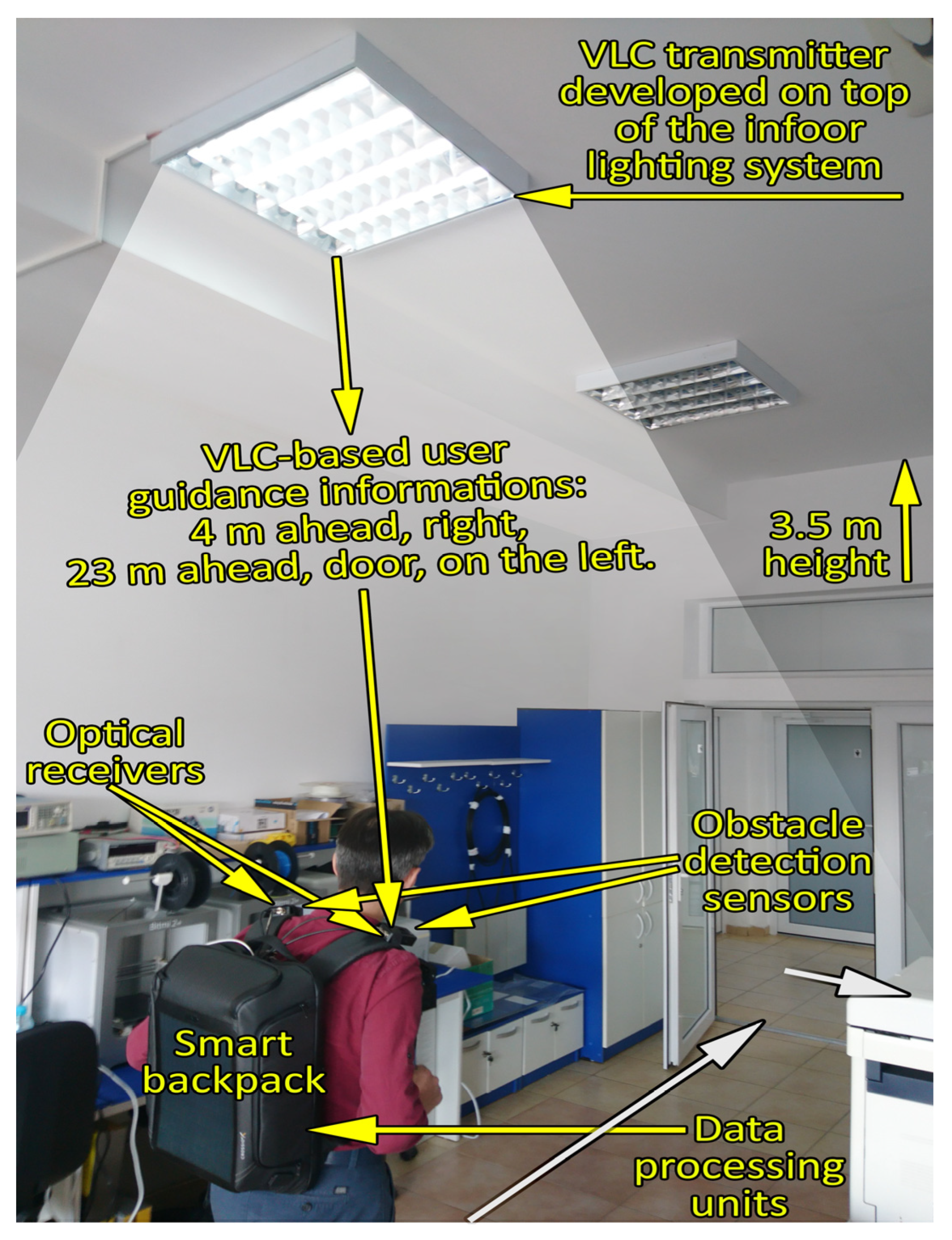

4. Experimental Testing Procedure, Experimental Results and Discussions concerning the Importance of This Work

4.1. Experimental Testing Procedure

4.2. Experimental Results

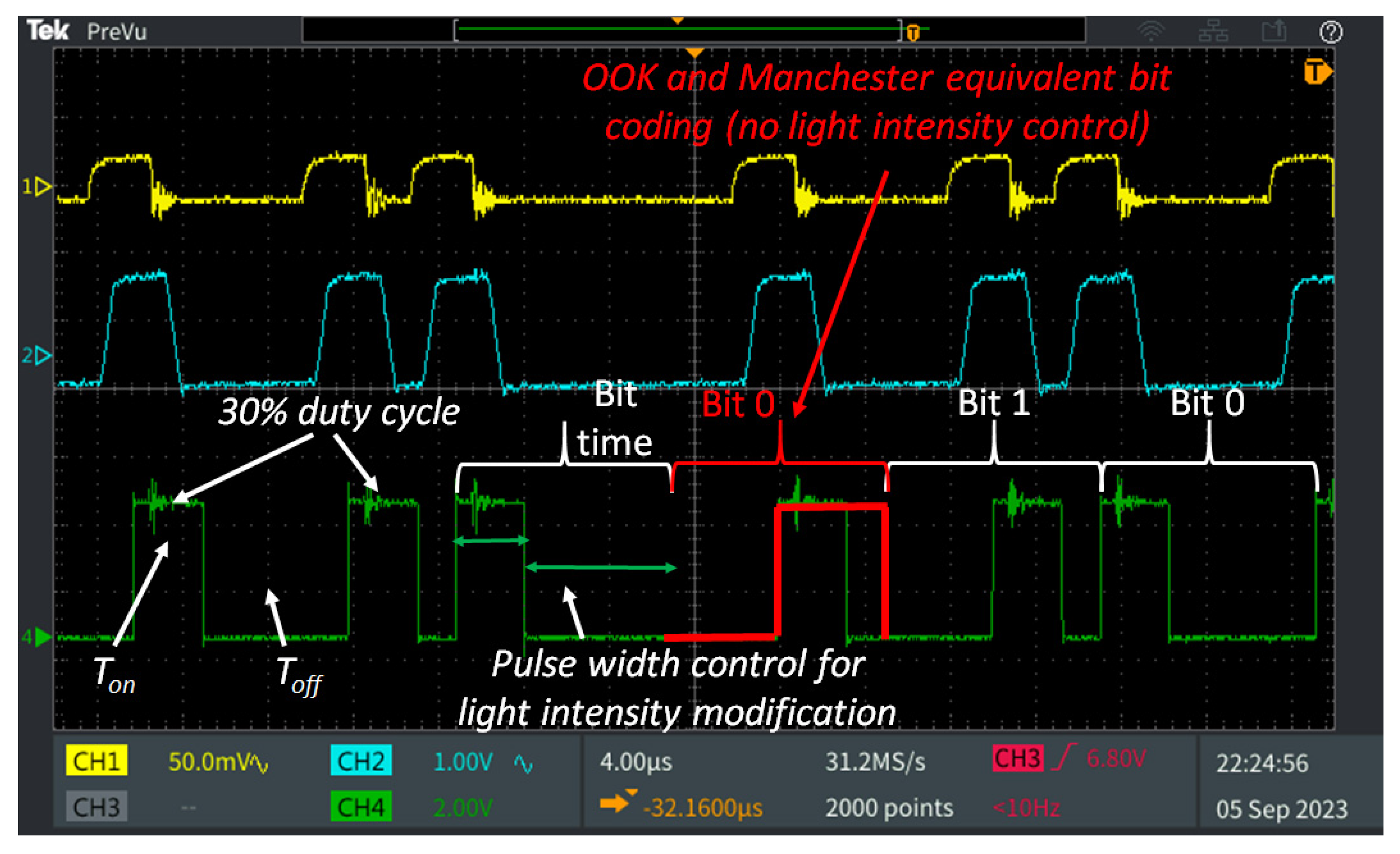

4.2.1. Experimental Results for the Visible Light Communications Component Evaluation

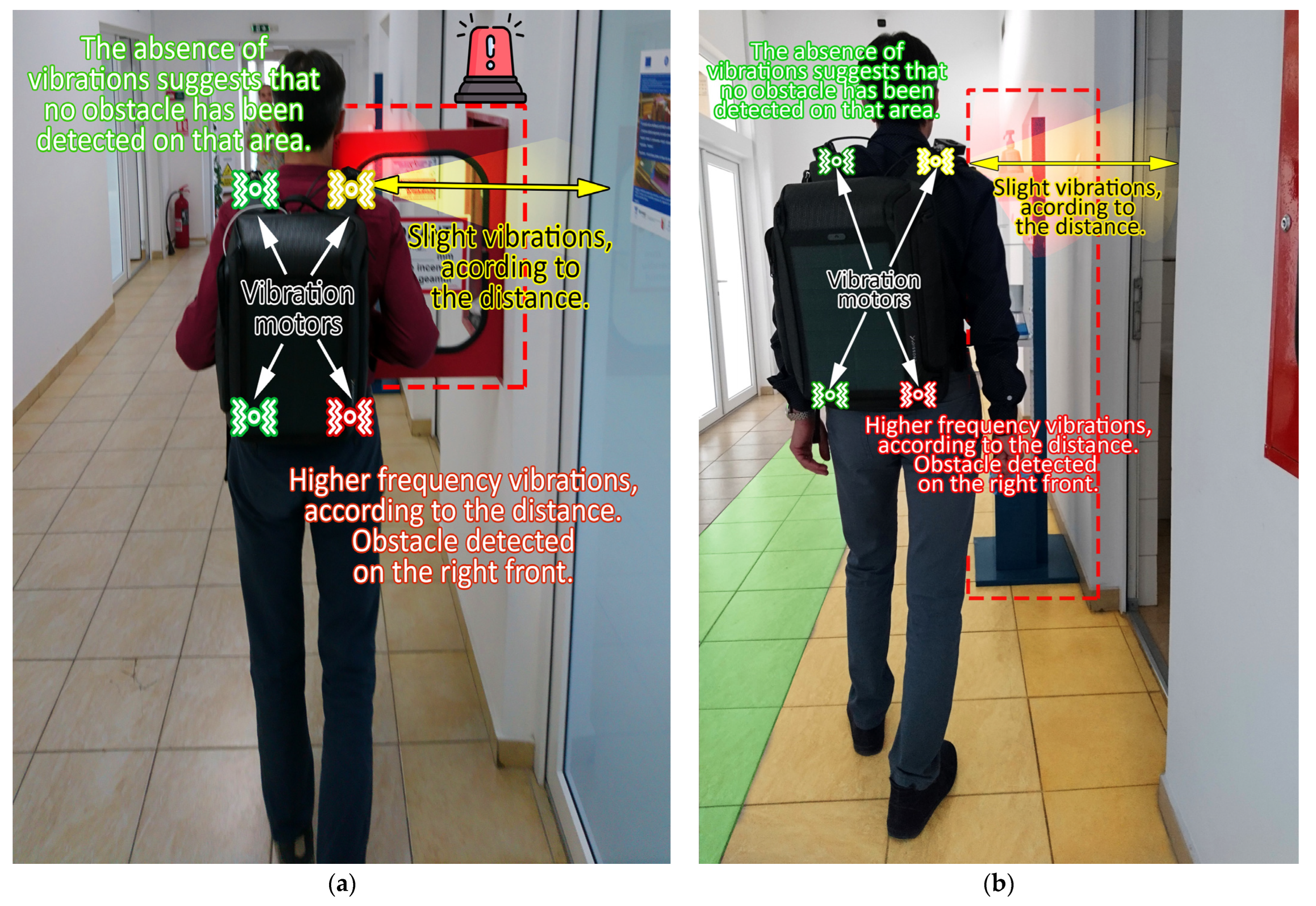

4.2.2. Experimental Results for the Obstacle Detection Component Evaluation

5. Discussion and Future Perspectives Regarding the Use of the Visible Light Communications Technology in Visually Impaired Persons’ Assistance

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameter Group |
|---|
| Lighting infrastructure transmitter parameters |
| Communication parameters |
| VLC receiver parameters |

| Modulation/Coding Technique | Data Rate [kb/s] | VLC Transmitter–VLC Receiver Lateral Distance [cm] | VLC Transmitter–VLC Receiver Incidence Angle [degrees] | Compatible with Light Dimming | BER at a 95% Confidence Level |
|---|---|---|---|---|---|
| Manchester | 250 | 0–340 | 0–53 | Not in the current setup | <10⁻⁷ * |
| VPPM | 100 | 0–340 | 0–53 | 10–90% | <10⁻⁷ * |
| VPPM | 10 | 0–340 | 0–53 | 1–9%; 91–99% | <10⁻⁶ ** |
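The last column of the table above gives BER values as bounds at a 95% confidence level rather than as measured error ratios. A common way to obtain such a bound, assumed here because the procedure is not described in this text, is to receive N bits with zero errors and solve the binomial relation (1 - p)^N = 1 - CL for p; for large N this reduces to the familiar approximation N ≈ 3/BER at CL = 95%. The Python sketch below (function names are hypothetical) computes the required error-free bit count and the corresponding test duration at each tested data rate.

```python
import math


def ber_upper_bound(n_bits, confidence=0.95):
    """Exact binomial upper bound on BER after n_bits received with zero errors."""
    # Solve (1 - p) ** n_bits = 1 - confidence for p; expm1 keeps precision for tiny p.
    return -math.expm1(math.log(1.0 - confidence) / n_bits)


def bits_required(ber_target, confidence=0.95):
    """Smallest error-free bit count whose bound does not exceed ber_target."""
    # (1 - ber) ** n <= 1 - confidence  <=>  n >= ln(1 - confidence) / ln(1 - ber)
    return math.ceil(math.log(1.0 - confidence) / math.log1p(-ber_target))


if __name__ == "__main__":
    # Data rates and BER targets taken from the results table.
    cases = [("Manchester", 250e3, 1e-7), ("VPPM", 100e3, 1e-7), ("VPPM", 10e3, 1e-6)]
    for name, rate_bps, target in cases:
        n = bits_required(target)
        print(f"{name} at {rate_bps / 1e3:.0f} kb/s, BER < {target:g}: "
              f"{n} error-free bits, about {n / rate_bps:.0f} s")
```

Under this model, supporting the <10⁻⁷ figure at 250 kb/s takes roughly 3 × 10⁷ error-free bits, i.e. about two minutes of continuous transmission.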

| Test Objective | Number of Trials | Successful Detections |
|---|---|---|
| Human in the pathway detection | 100 | 100 |
| Chest and head obstacle detection ¹ | 100 | 100 |
| Dispenser in the pathway detection ² | 100 | 100 |
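Each obstacle-detection test above succeeded in 100 of 100 trials. Under the simplifying assumption, not stated in the source, that trials are independent with a constant detection probability p, such a record supports a lower bound on p rather than p = 100%. The Python sketch below (hypothetical function name) computes the one-sided exact Clopper–Pearson lower bound for the all-success case, p = (1 - CL)^(1/n).

```python
import math


def success_rate_lower_bound(successes, trials, confidence=0.95):
    """One-sided exact (Clopper-Pearson) lower bound for the all-success case."""
    if successes != trials:
        raise ValueError("this closed form only covers successes == trials")
    # P(all trials succeed | p) = p ** trials; solve p ** trials = 1 - confidence.
    return math.exp(math.log(1.0 - confidence) / trials)


if __name__ == "__main__":
    tests = ["Human in the pathway", "Chest and head obstacle", "Dispenser in the pathway"]
    for name in tests:
        bound = success_rate_lower_bound(100, 100)
        print(f"{name}: detection probability >= {bound:.3f} (95% one-sided)")
```

For n = 100 and CL = 95%, the bound is about 0.970, so each scenario supports a detection probability of at least roughly 97% under these assumptions.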

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Căilean, A.-M.; Avătămăniței, S.-A.; Beguni, C.; Zadobrischi, E.; Dimian, M.; Popa, V. Visible Light Communications-Based Assistance System for the Blind and Visually Impaired: Design, Implementation, and Intensive Experimental Evaluation in a Real-Life Situation. Sensors 2023, 23, 9406. https://doi.org/10.3390/s23239406
