Reduced CPU Workload for Human Pose Detection with the Aid of a Low-Resolution Infrared Array Sensor on Embedded Systems

Abstract

1. Introduction

1.1. Related Work

2. Materials and Methods

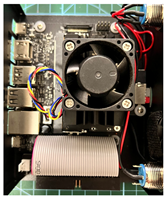

2.1. Test Platforms

2.1.1. Single-Board Computers

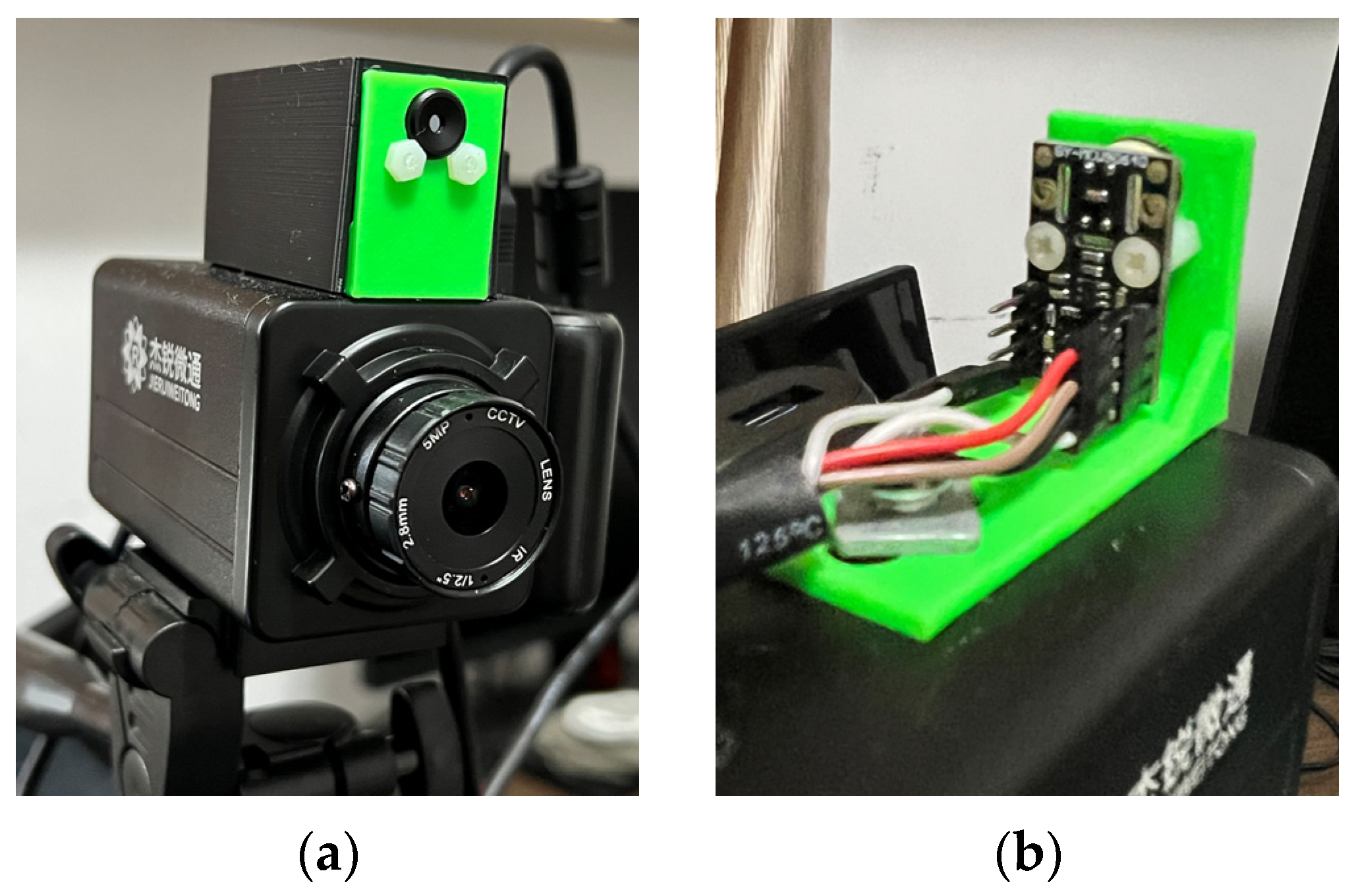

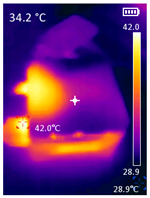

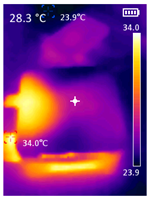

2.1.2. IR Array Sensor

2.1.3. Main Camera IMX219 with a 120-Degree Field-of-View

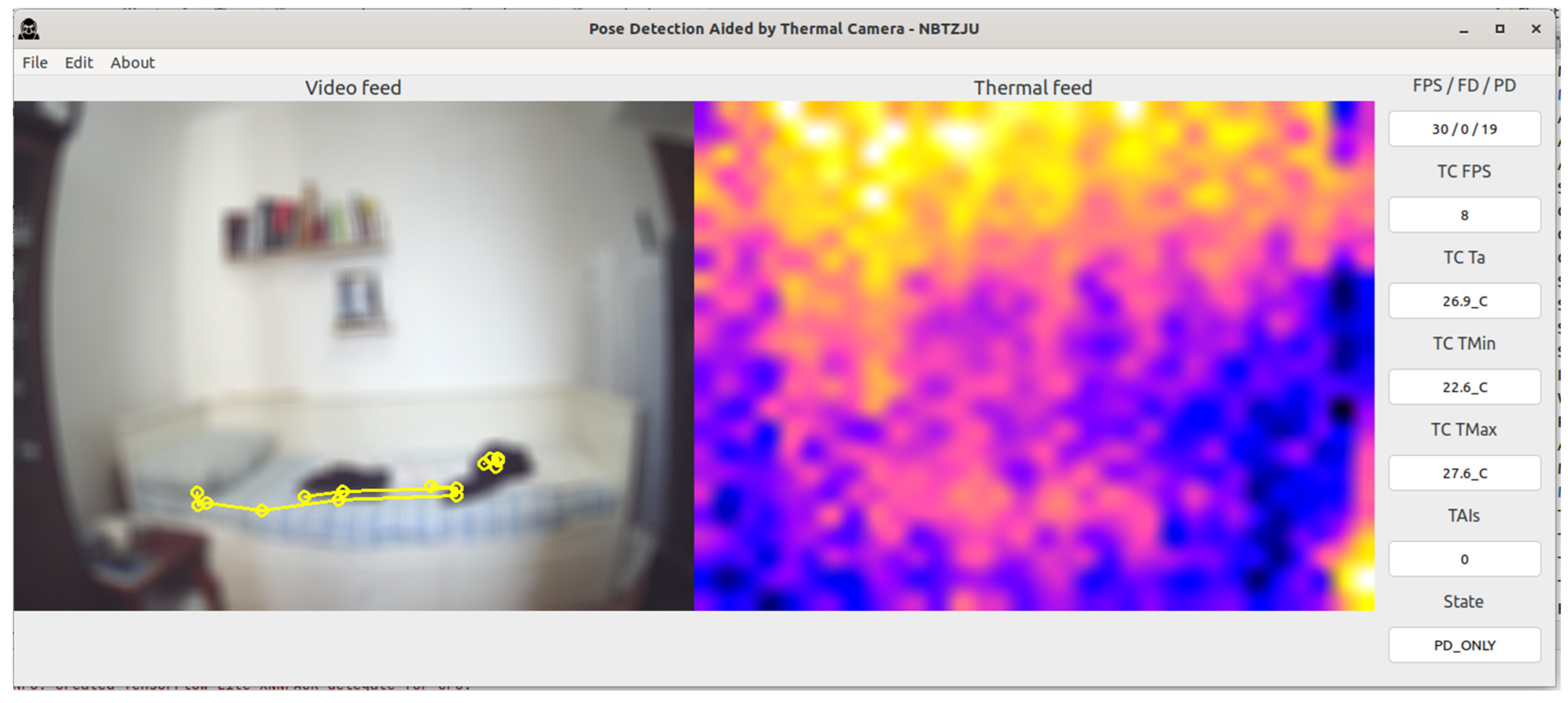

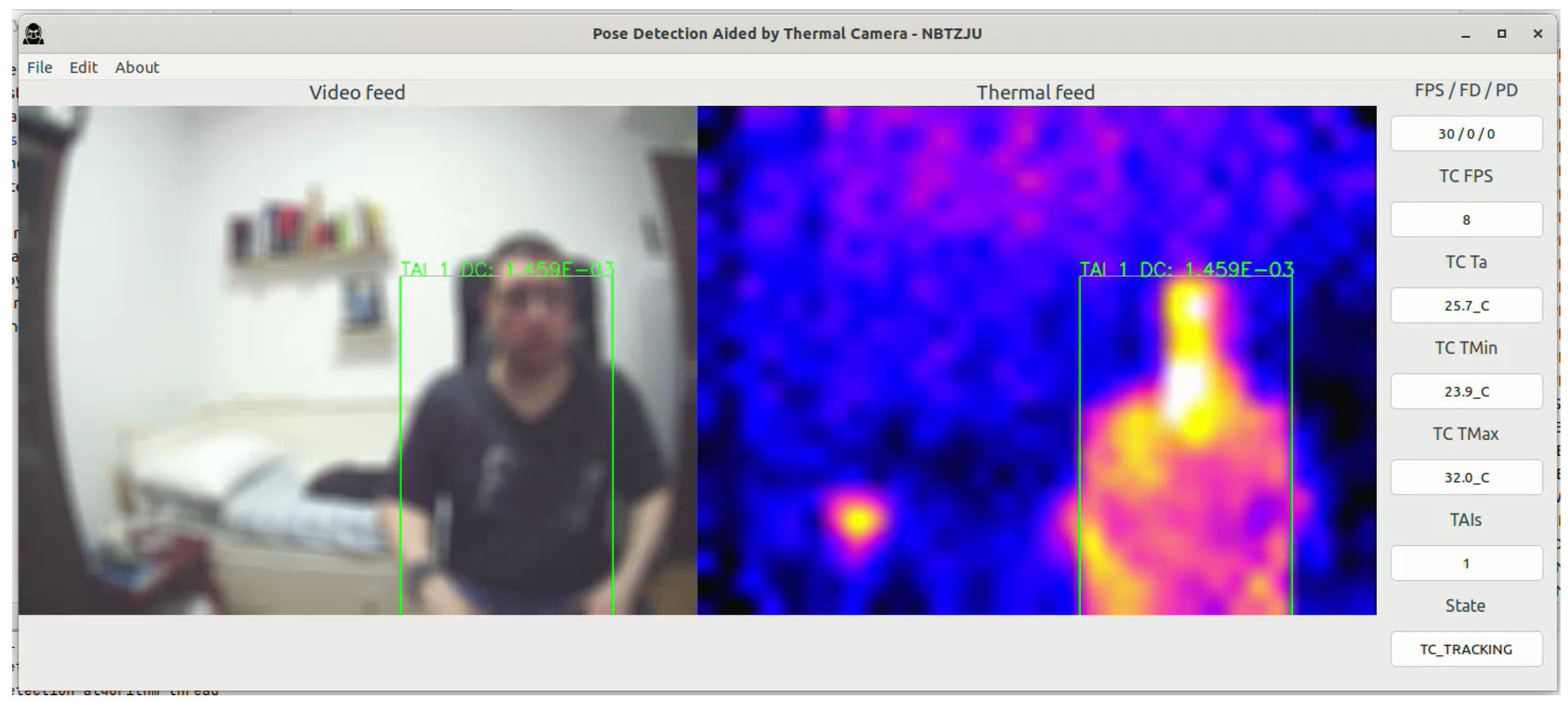

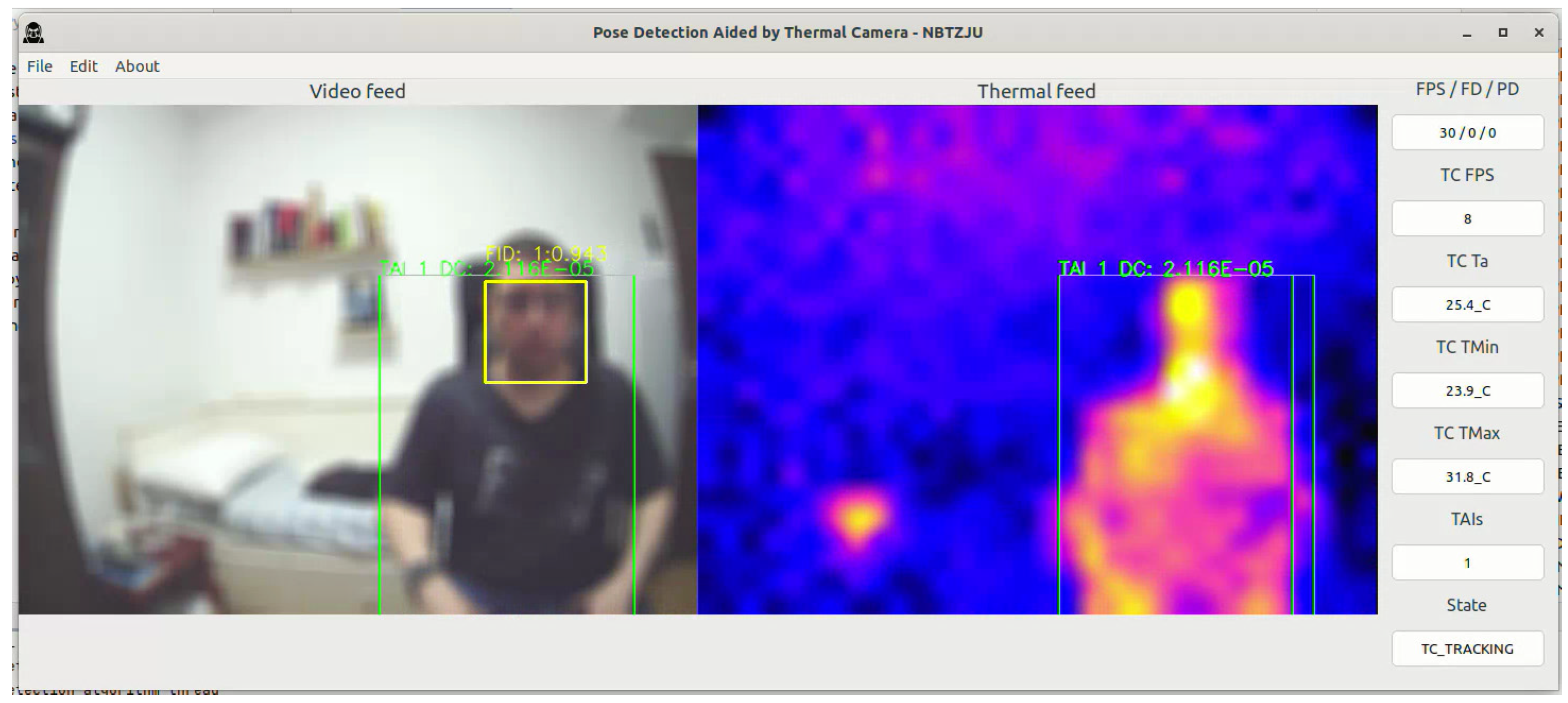

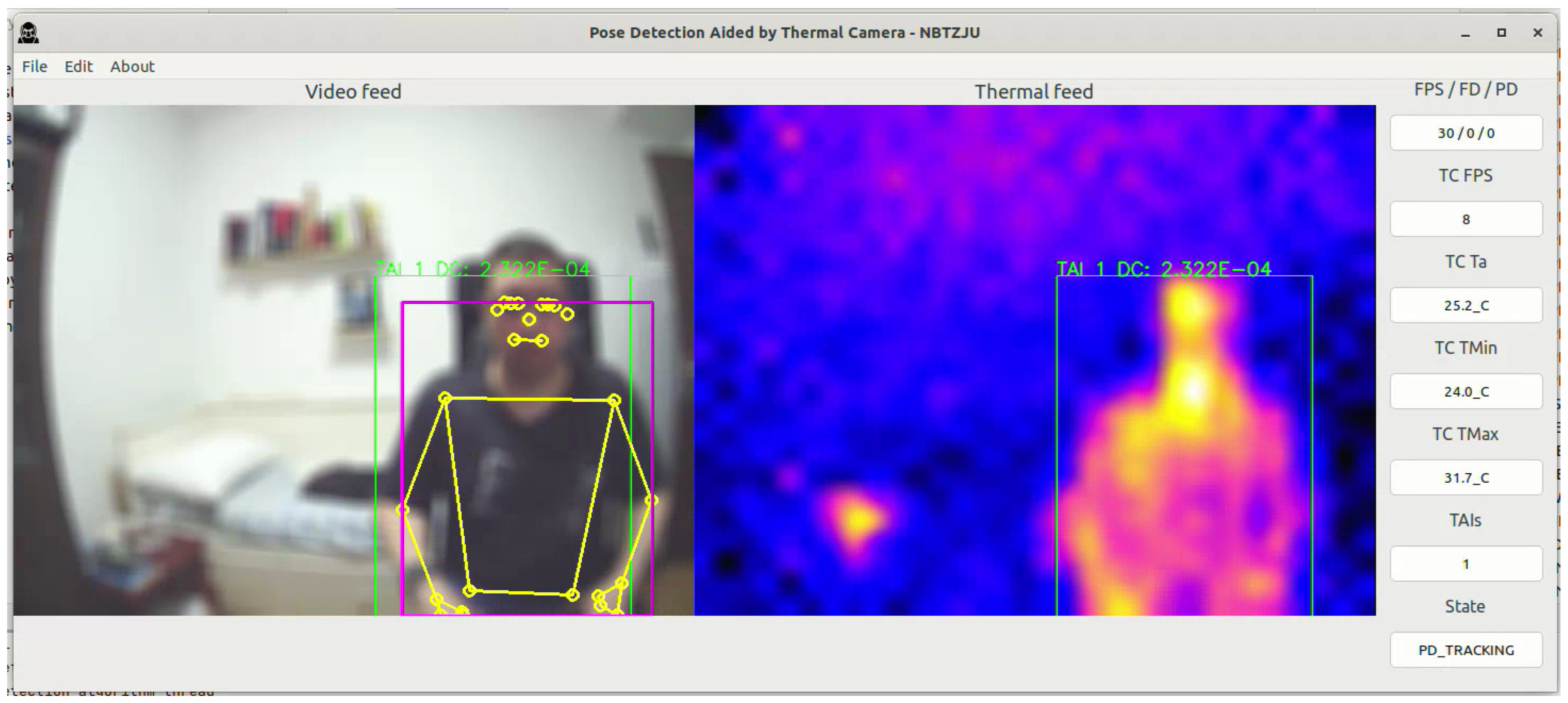

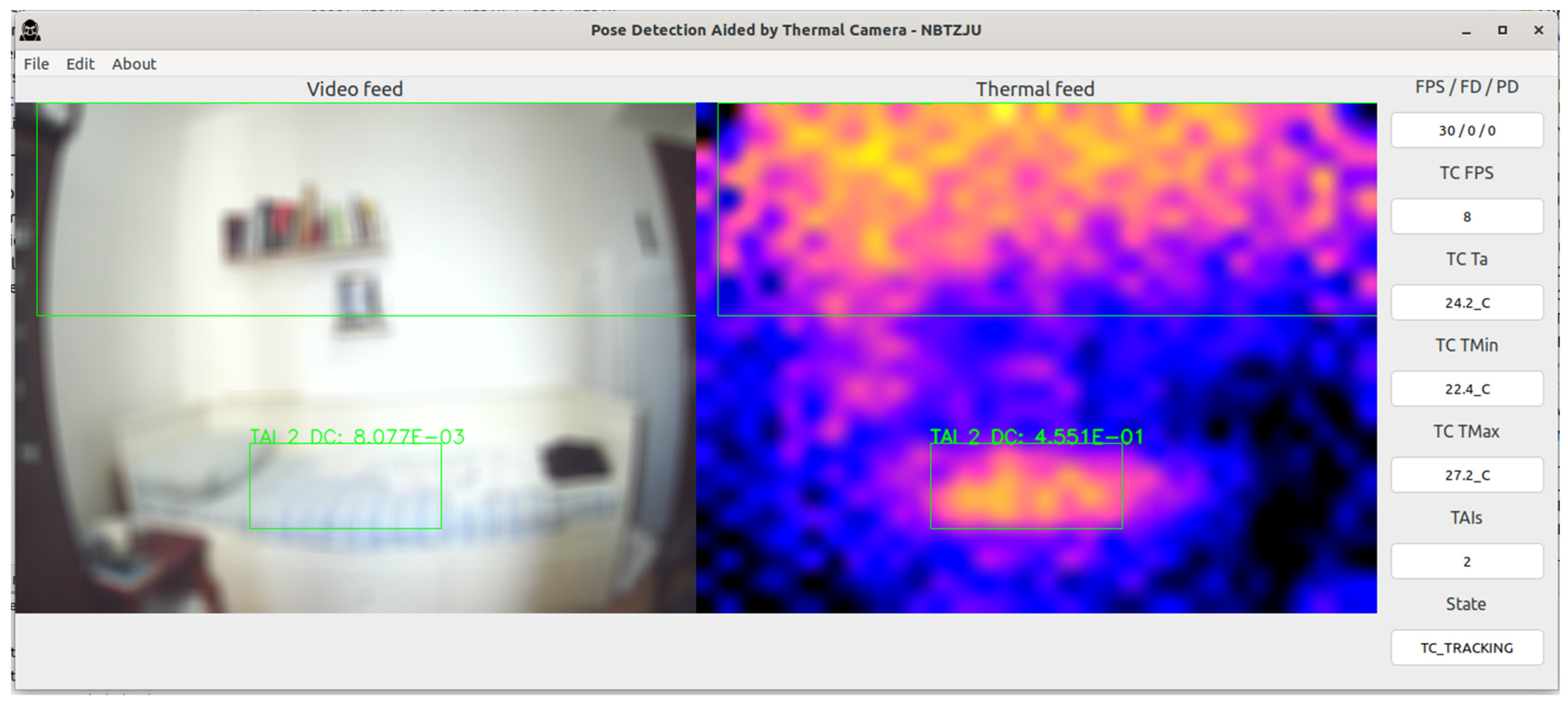

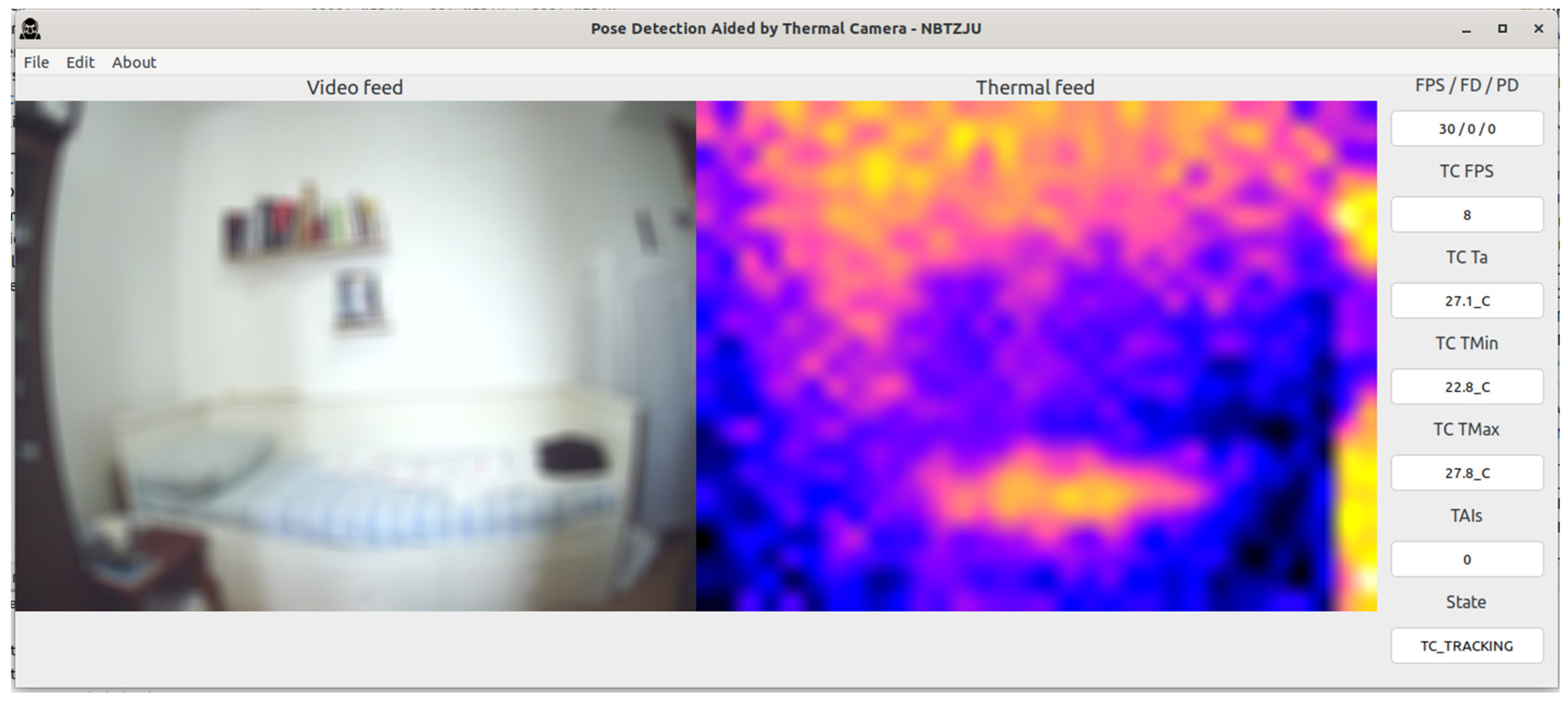

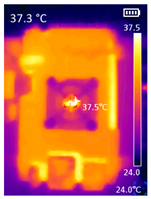

2.2. Pose Detection Aided by the Thermal Camera—PDATC

2.2.1. Thermal Detection Algorithm—TDA

2.2.2. Face Detection Algorithm—FDA

2.2.3. Pose Detection Algorithm—PDA

3. Results

3.1. PDATC Software Detection Results

3.2. SBC Performance Regarding the Algorithms’ FPS

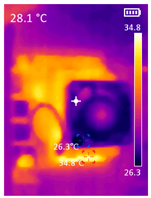

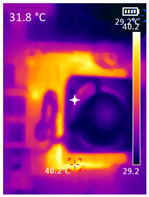

3.3. CPU Utilization and Temperature

3.4. Power Consumption

4. Discussion

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| SBC | CPU | GPU | RAM | Storage | OS |
|---|---|---|---|---|---|
| Orange Pi 5 | Rockchip RK3588S octa-core (Quad-Core Cortex-A76 @ 2.4 GHz and Quad-Core Cortex-A55 @ 1.8 GHz) | Arm Mali-G610 MP4 “Odin” | 8 GB | NVMe 256 GB | Armbian OS (Ubuntu 22.04) |
| Orange Pi 4 LTS | Rockchip RK3399 (Dual-Core Cortex-A72 @ 1.8 GHz and Quad-Core Cortex-A53 @ 1.8 GHz) | Arm Mali-T860 | 4 GB | eMMC 16 GB | Armbian OS (Ubuntu 22.04) |
| Raspberry Pi 4B | Broadcom BCM2711 Quad-Core Cortex-A72 (ARMv8) 64-bit SoC @ 1.8 GHz | Broadcom VideoCore VI | 8 GB | microSD card Class 10 64 GB | Armbian OS (Ubuntu 22.04) |
| Nvidia Jetson Nano 2G | Quad-Core ARM Cortex-A57 @ 1.43 GHz | 128-core NVIDIA Maxwell™ architecture-based GPU (accessible to user applications) | 2 GB | microSD card Class 10 64 GB | JetPack 4.6.3 (Ubuntu-based distribution) |
| Nvidia Jetson Nano 4G | Quad-Core ARM Cortex-A57 @ 1.43 GHz | 128-core NVIDIA Maxwell™ architecture-based GPU (accessible to user applications) | 4 GB | USB 3.0 SSD 256 GB | JetPack 4.6.3 (Ubuntu-based distribution) |
| MiniPC Morefine N5105 | Intel® Celeron® N5105 (Mobile Series) Quad-Core @ 2.0 GHz | Intel® UHD Graphics | 8 GB | NVMe 256 GB | Ubuntu 22.04 |
| MiniPC Morefine N5105 | Intel® Celeron® N5105 (Mobile Series) Quad-Core @ 2.0 GHz | Intel® UHD Graphics | 8 GB | NVMe 256 GB | Windows 11 |

| SBC | Python | MediaPipe | wxPython | SciPy | OpenCV | NumPy |
|---|---|---|---|---|---|---|
| Orange Pi 5 | Python 3.8 | 0.10.1 | 4.2.0 | 1.10.1 | 4.7.0 | 1.24.2 |
| Orange Pi 4 LTS | Python 3.8 | 0.10.1 | 4.2.0 | 1.10.1 | 4.7.0 | 1.24.2 |
| Raspberry Pi 4B | Python 3.8 | 0.10.1 | 4.2.0 | 1.10.1 | 4.6.0 | 1.24.2 |
| Jetson Nano 2G | Python 3.6 | 0.8.5-cuda102 | 4.1.0 | 1.5.4 | 4.6.0-cuda102 | 1.19.4 |
| Jetson Nano 4G | Python 3.6 | 0.8.5-cuda102 | 4.1.0 | 1.5.4 | 4.7.0-cuda102 | 1.19.4 |
| Morefine N5105 Ubuntu | Python 3.8 | 0.10.1 | 4.2.0 | 1.10.1 | 4.7.0 | 1.24.2 |
| Morefine N5105 Windows | Python 3.8 | 0.10.1 | 4.2.0 | 1.10.1 | 4.7.0 | 1.24.2 |

| Byte offset | 0–1 | 2–3 | 4–1539 | 1540–1541 | 1542–1543 |
|---|---|---|---|---|---|
| Field | Frame header | Data length | Temperature data | Sensor/ambient temperature | Checksum16 |
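The byte layout above implies a fixed 1544-byte frame. That frame holds 1536 bytes (768 16-bit values) of temperature data. The Python sketch below splits such a frame into its fields using only the standard library.

Several details are assumptions for illustration and are not given in the table:
- little-endian byte order;
- a 24 × 32 row-major pixel grid;
- a 0.01 °C scale factor;
- a Checksum16 computed as the sum of 16-bit words modulo 2^16.

These should be checked against the sensor's datasheet. `parse_frame` and `checksum16` are hypothetical helpers, not the authors' code.

```python
import struct
from typing import Dict, List

# Frame layout taken from the byte table (offset ranges in the table are inclusive).
FRAME_LEN = 1544                               # bytes 0-1543
TEMP_OFFSET, TEMP_END = 4, 1539                # temperature data block
N_PIXELS = (TEMP_END - TEMP_OFFSET + 1) // 2   # 1536 bytes -> 768 16-bit values
SENSOR_OFFSET = 1540                           # sensor/ambient temperature
CHECKSUM_OFFSET = 1542                         # Checksum16

# ASSUMPTIONS (not stated in the table): little-endian words, a 24 x 32 pixel
# grid in row-major order, and temperatures encoded in units of 0.01 °C.
ROWS, COLS = 24, 32
SCALE_C = 0.01
assert ROWS * COLS == N_PIXELS


def checksum16(data: bytes) -> int:
    """Hypothetical Checksum16: sum of little-endian 16-bit words, modulo 2**16."""
    words = struct.unpack("<%dH" % (len(data) // 2), data)
    return sum(words) & 0xFFFF


def parse_frame(frame: bytes) -> Dict[str, object]:
    """Split one raw frame into its fields and convert temperatures to °C."""
    if len(frame) != FRAME_LEN:
        raise ValueError("expected %d bytes, got %d" % (FRAME_LEN, len(frame)))
    header, data_len = struct.unpack_from("<HH", frame, 0)
    raw = struct.unpack_from("<%dH" % N_PIXELS, frame, TEMP_OFFSET)
    (sensor_raw,) = struct.unpack_from("<H", frame, SENSOR_OFFSET)
    (checksum,) = struct.unpack_from("<H", frame, CHECKSUM_OFFSET)
    # Validate everything before the checksum field against the stored value.
    if checksum16(frame[:CHECKSUM_OFFSET]) != checksum:
        raise ValueError("checksum mismatch")
    # Reshape the flat pixel list into a ROWS x COLS grid of temperatures.
    temps: List[List[float]] = [
        [raw[r * COLS + c] * SCALE_C for c in range(COLS)] for r in range(ROWS)
    ]
    return {
        "header": header,
        "data_length": data_len,
        "temperatures_c": temps,
        "sensor_temp_c": sensor_raw * SCALE_C,
    }
```

In a live system, the frame would be read from the sensor's serial link 1544 bytes at a time. Resynchronizing on the frame header after a dropped byte is left out of this sketch.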

| SBC | Normal Camera (FPS) | Thermal Camera (FPS) | Face Detection (FPS) | Pose Detection without Subject (FPS) | Pose Detection with Subject (FPS) |
|---|---|---|---|---|---|
| Orange Pi 5 | 30 | 8 | 15 | 23 | 19 |
| Orange Pi 4 LTS | 30 | 8 | 13 | 9 | 7 |
| Raspberry Pi 4B | 15 | 8 | 9 | 7 | 5 |
| Jetson Nano 2G | 30 | 8 | 15 | 14 | 13 |
| Jetson Nano 4G | 30 | 8 | 15 | 15 | 13 |
| N5105 Ubuntu | 30 | 8 | 15 | 13 | 11 |
| N5105 Windows | 30 | 8 | 15 | 13 | 10 |

| SBC | Average CPU Usage with Only Pose Detection (%) | Average CPU Usage with PDATC (%) | CPU Temperature with Only Pose Detection (°C) | CPU Temperature with PDATC (°C) |
|---|---|---|---|---|
| Orange Pi 5 | 32.0 | 18.2 | 59.2 | 42.5 |
| Orange Pi 4 LTS | 45.3 | 22.4 | 83.9 | 58.9 |
| Raspberry Pi 4B | 63.8 | 32.2 | 59.2 | 51.6 |
| Jetson Nano 2G | 42.3 | 36.5 | 40.0 | 36.5 |
| Jetson Nano 4G | 51.4 | 43.9 | 43.5 | 41.5 |
| N5105 Ubuntu | 35.1 | 21.7 | 58.0 | 42.0 |
| N5105 Windows | 45.4 | 20.2 | 60.6 | 43.3 |

| # | SBC | With Only Pose Detection | With PDATC |
|---|---|---|---|
| 1 | (a) Orange Pi 5 | (b) SBC body average: 41.1 °C | (c) SBC body average: 36.3 °C |
| 2 | (a) Orange Pi 4 LTS | (b) SBC body average: 47.0 °C | (c) SBC body average: 36.1 °C |
| 3 | (a) Raspberry Pi 4B | (b) SBC body average: 39.6 °C | (c) SBC body average: 36.6 °C |
| 4 | (a) Jetson Nano 2G | (b) SBC body average: 32.6 °C | (c) SBC body average: 30.6 °C |
| 5 | (a) Jetson Nano 4G | (b) SBC body average: 33.7 °C | (c) SBC body average: 30.9 °C |
| 6 | (a) N5105 Ubuntu | (b) SBC body average: 34.6 °C | (c) SBC body average: 31.2 °C |
| 7 | (a) N5105 Windows 11 | (b) SBC body average: 33.2 °C | (c) SBC body average: 28.5 °C |

| SBC | Idle (W) | Only MediaPipe Pose Detection (W) | With PDATC (W) | Reduction with PDATC (W) | Relative Power with PDATC (%) |
|---|---|---|---|---|---|
| Orange Pi 5 | 3.72 | 6.99 | 4.96 | 2.04 | −29.12 |
| Orange Pi 4 LTS | 3.43 | 8.21 | 5.70 | 2.51 | −30.54 |
| Raspberry Pi 4B | 3.75 | 6.27 | 5.65 | 0.62 | −9.96 |
| Jetson Nano 2G | 3.38 | 7.21 | 5.42 | 1.79 | −24.81 |
| Jetson Nano 4G | 4.89 | 8.86 | 7.11 | 1.75 | −19.79 |
| N5105 Ubuntu | 6.38 | 15.02 | 10.63 | 4.39 | −29.23 |
| N5105 Windows | 5.62 | 14.34 | 11.79 | 2.55 | −17.76 |
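The last two columns of the power table appear to be derived from the measured values:
- the reduction is the pose-only power minus the PDATC power;
- the relative change is that difference divided by the pose-only power, expressed as a percentage.

The minimal Python check below follows that reading of the columns. The formulas are inferred from the table rather than stated in it, and `power_change` is a hypothetical helper.

```python
from typing import Dict, Tuple

# (only MediaPipe pose detection, with PDATC) power in watts, copied from the table.
POWER_W: Dict[str, Tuple[float, float]] = {
    "Orange Pi 5": (6.99, 4.96),
    "Orange Pi 4 LTS": (8.21, 5.70),
    "Raspberry Pi 4B": (6.27, 5.65),
    "Jetson Nano 2G": (7.21, 5.42),
    "Jetson Nano 4G": (8.86, 7.11),
    "N5105 Ubuntu": (15.02, 10.63),
    "N5105 Windows": (14.34, 11.79),
}


def power_change(only_pose_w: float, with_pdatc_w: float) -> Tuple[float, float]:
    """Return (reduction in W, relative change in %), both rounded to 2 decimals."""
    reduction_w = only_pose_w - with_pdatc_w
    # Negative value = PDATC draws less power than pose detection alone.
    relative_pct = (with_pdatc_w - only_pose_w) / only_pose_w * 100.0
    return round(reduction_w, 2), round(relative_pct, 2)


if __name__ == "__main__":
    for sbc, (only_w, pdatc_w) in POWER_W.items():
        red, rel = power_change(only_w, pdatc_w)
        print(f"{sbc:<16} reduction {red:5.2f} W, relative {rel:7.2f} %")
```

For most platforms, recomputing from the rounded table values reproduces the reductions to within 0.01 W. The relative changes match to within 0.1 percentage points. The small gaps likely arise because the published figures were derived from unrounded measurements. For the N5105 under Ubuntu, 15.02 W − 10.63 W = 4.39 W, which is consistent with the listed −29.23%.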

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alves, M.G.; Chen, G.-L.; Kang, X.; Song, G.-H. Reduced CPU Workload for Human Pose Detection with the Aid of a Low-Resolution Infrared Array Sensor on Embedded Systems. Sensors 2023, 23, 9403. https://doi.org/10.3390/s23239403
