Explainable Depression Detection Based on Facial Expression Using LSTM on Attentional Intermediate Feature Fusion with Label Smoothing

Abstract

:1. Introduction

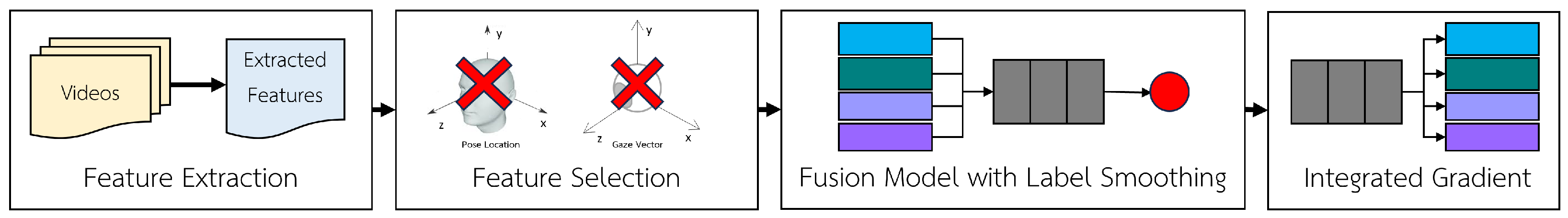

- In order to choose the model that will perform the best, we evaluated three types of fusion modeling techniques that use the transformer model, window block LSTM, and Bi-LSTM. In addition, we used feature selection techniques and label smoothing techniques to improve model accuracy.

- Data used in this study are extracted from clinical interview videos using Openface tools in time-series format. Data are classified as depressed or non-depressed.

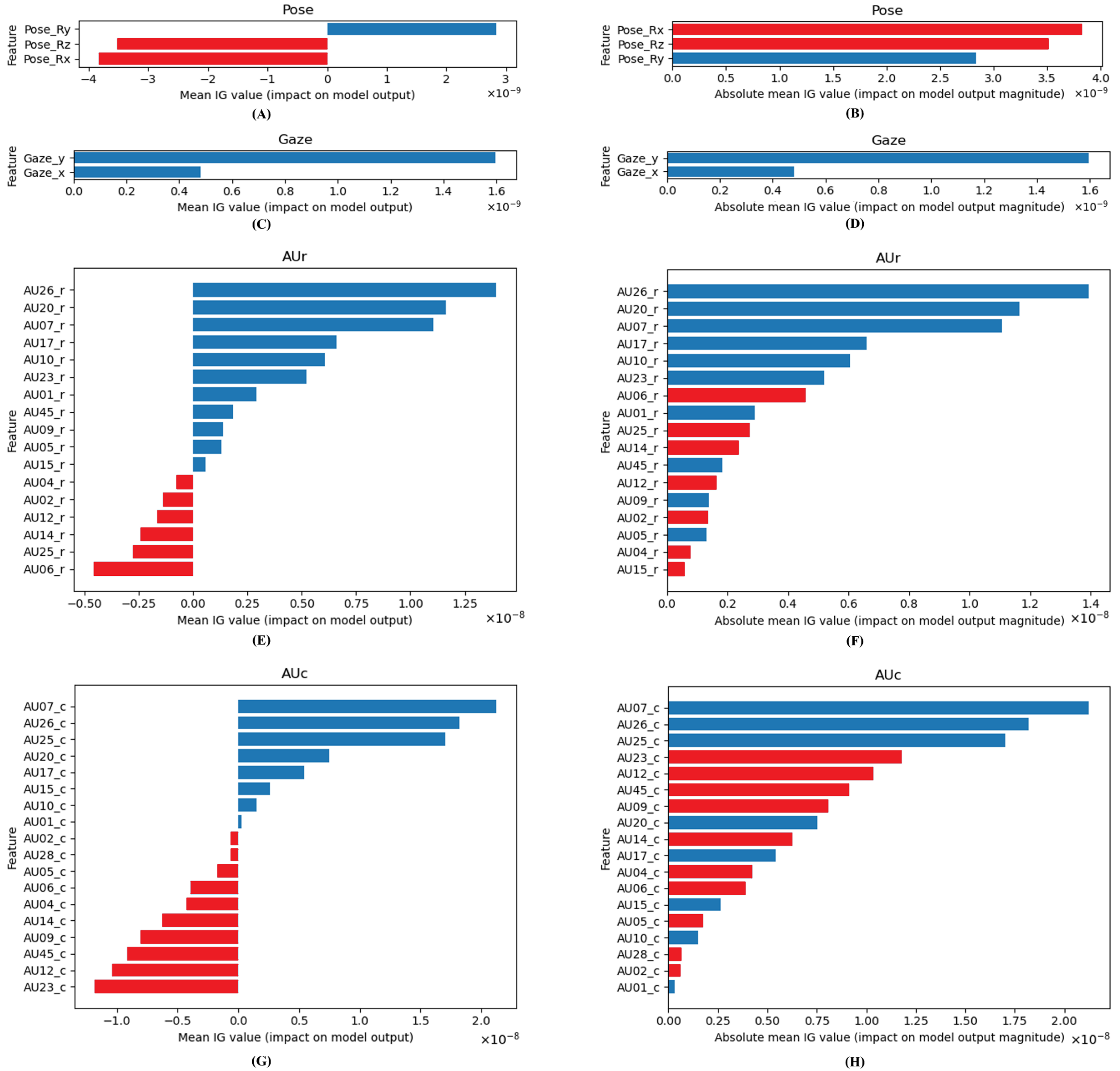

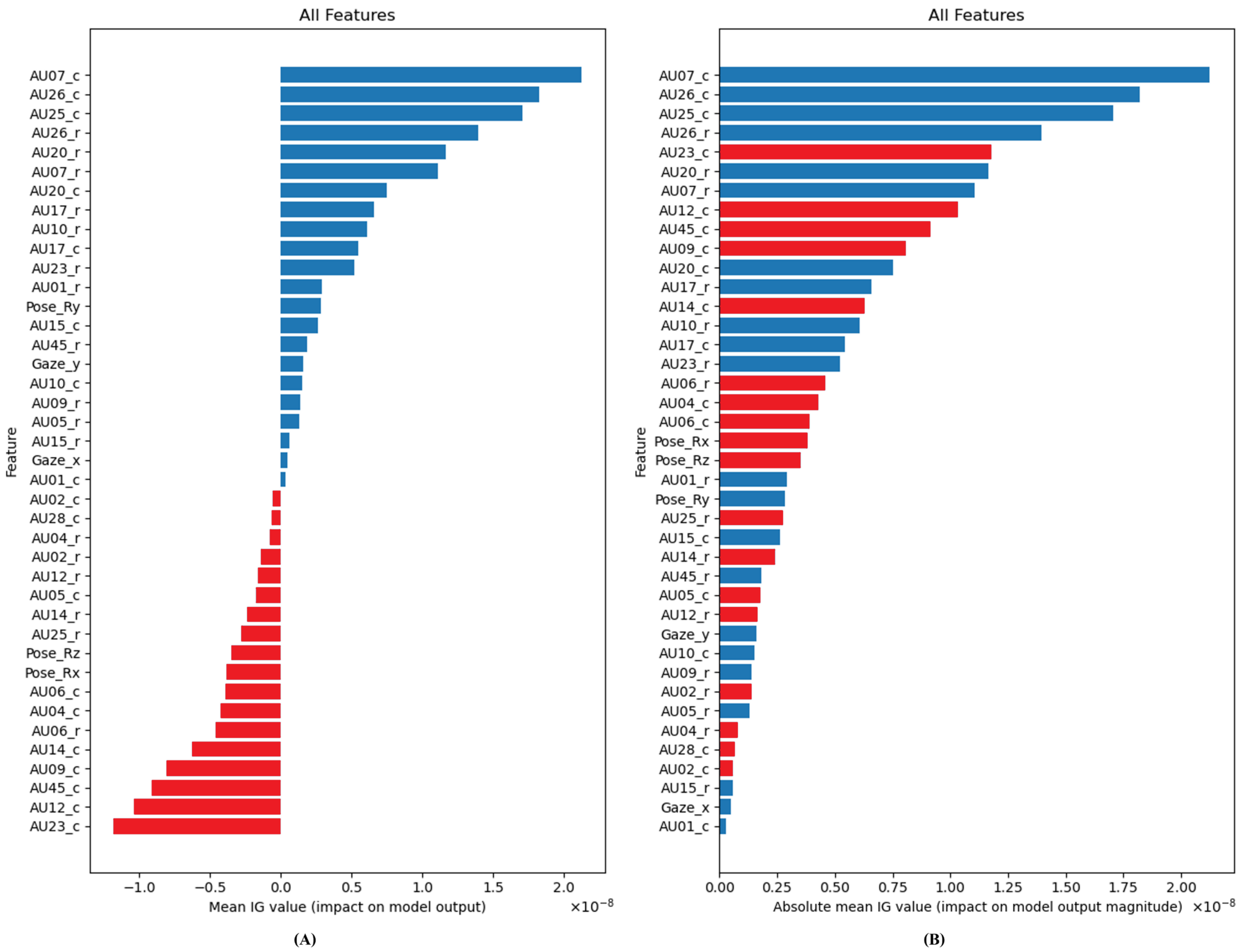

- We apply an integrated gradient [9] over the model and sort the significant features in order to describe the main characteristics of facial expression that can detect depression.

2. Related Work

3. Materials and Methods

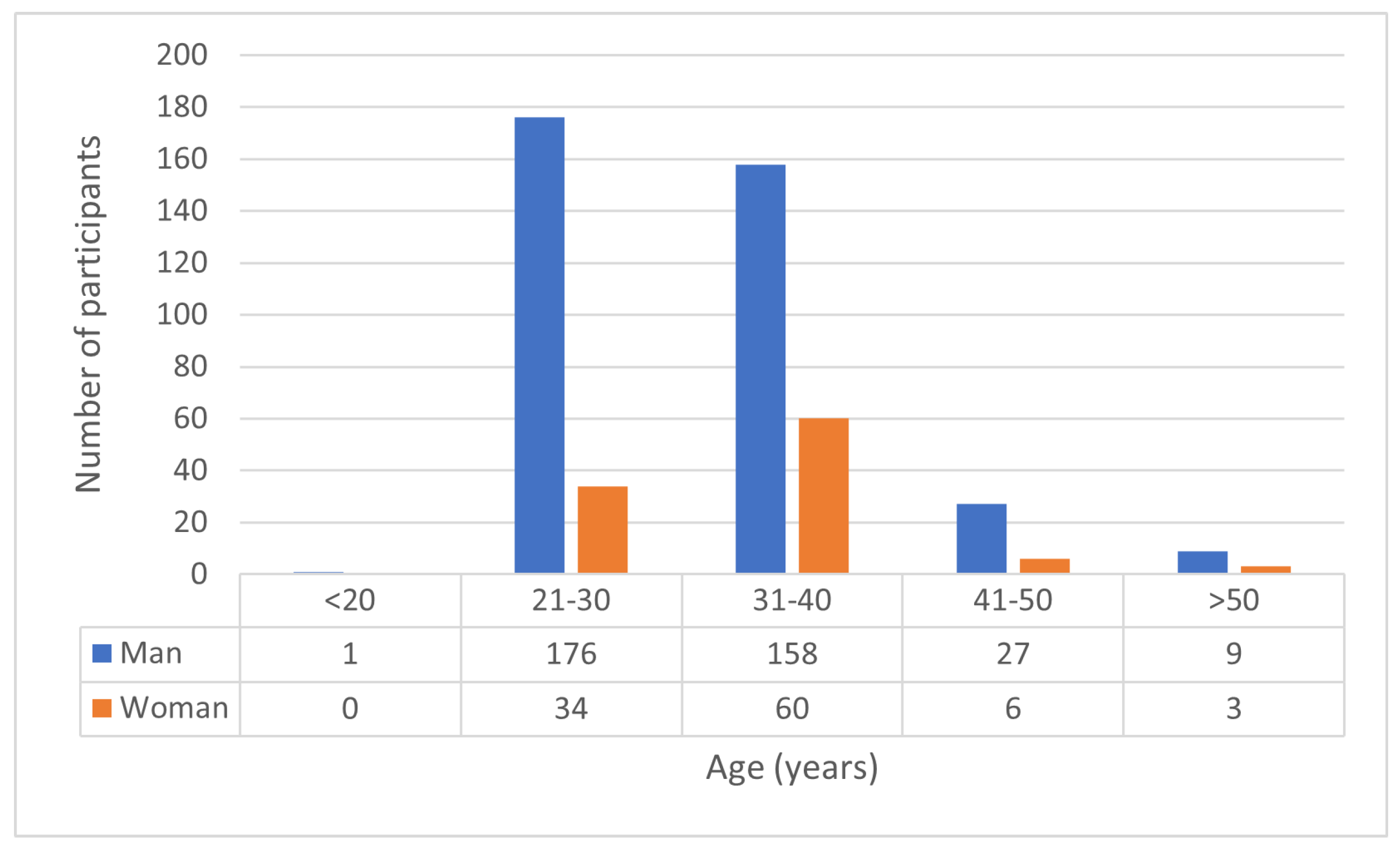

3.1. Input and Target Preparation

3.2. Features Extraction

3.3. Data Preprocessing

3.3.1. Features Selection

3.3.2. Label Smoothing

3.4. Model Architecture

3.4.1. Early Fusion Model Architecture

3.4.2. Intermediate Fusion Model Architecture

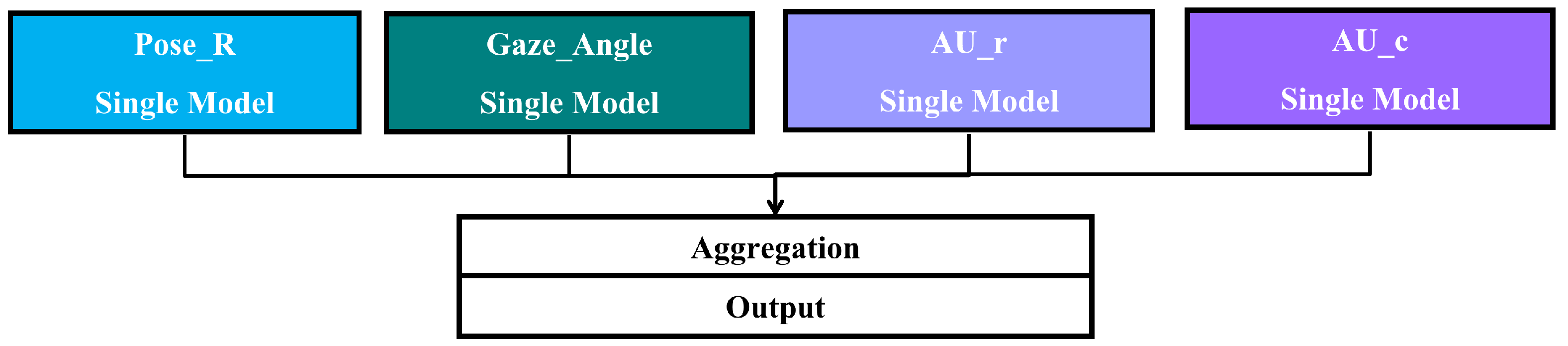

3.4.3. Late Fusion Model Architecture

3.5. Integrated Gradient Explanation

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Results from an Additional Data Set

| Model | TP | FN | TN | FP | Acc (%) | Macro (%) | Micro (%) | ||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Precision | Recall | F1 | Precision | Recall | F1 | ||||||

| Bi-LSTM Baseline | 0 | 20 | 30 | 0 | 60.00 | 30.00 | 50.00 | 37.50 | 36.00 | 60.00 | 45.00 |

| Transformer Baseline | 0 | 20 | 30 | 0 | 60.00 | 30.00 | 50.00 | 37.50 | 36.00 | 60.00 | 45.00 |

| Our Bi-LSTM | 9 | 11 | 28 | 2 | 74.00 | 76.81 | 69.17 | 69.61 | 75.80 | 74.00 | 71.92 |

| Our Window Block LSTM | 12 | 8 | 28 | 2 | 80.00 | 81.75 | 76.67 | 77.72 | 80.95 | 80.00 | 79.14 |

References

- Santomauro, D.F.; Herrera, A.M.M.; Shadid, J.; Zheng, P.; Ashbaugh, C.; Pigott, D.M.; Abbafati, C.; Adolph, C.; Amlag, J.O.; Aravkin, A.Y.; et al. Global prevalence and burden of depressive and anxiety disorders in 204 countries and territories in 2020 due to the COVID-19 pandemic. Lancet 2021, 398, 1700–1712. [Google Scholar] [CrossRef]

- Hawes, M.T.; Szenczy, A.K.; Klein, D.N.; Hajcak, G.; Nelson, B.D. Increases in depression and anxiety symptoms in adolescents and young adults during the COVID-19 pandemic. Psychol. Med. 2022, 52, 3222–3230. [Google Scholar] [CrossRef]

- Tabur, A.; Elkefi, S.; Emhan, A.; Mengenci, C.; Bez, Y.; Asan, O. Anxiety, burnout and depression, psychological well-being as predictor of healthcare professionals’ turnover during the COVID-19 pandemic: Study in a pandemic hospital. Healthcare 2022, 10, 525. [Google Scholar] [CrossRef]

- Calegaro, V.C.; Ramos-Lima, L.F.; Hoffmann, M.S.; Zoratto, G.; Kerber, N.; Costa, F.C.D.; Picinin, V.D.; Köchler, J.; Rodrigues, L.; Maciel, L.; et al. Closed doors: Predictors of stress, anxiety, depression, and PTSD during the onset of COVID-19 pandemic in Brazil. J. Affect. Disord. 2022, 310, 441–451. [Google Scholar] [CrossRef]

- Cheng, X.; Wang, Q.; Wang, R.; Wang, Y.; Chen, X.; Mi, G.; Chen, X.; Wang, L.; Wang, C.; Hu, L.; et al. Prevalence of depressive disorders and associated demographic characteristics in Shandong: An epidemiological investigation. J. Affect. Disord. 2022, 311, 198–204. [Google Scholar] [CrossRef]

- Smith, K.M.; Renshaw, P.F.; Bilello, J. The diagnosis of depression: Current and emerging methods. Compr. Psychiatry 2013, 54, 1–6. [Google Scholar] [CrossRef]

- Ekman, P.; Friesen, W.V. Facial action coding system. In Environmental Psychology & Nonverbal Behavior; American Psychological Association: Washington, DC, USA, 1978. [Google Scholar]

- Baltrušaitis, T.; Robinson, P.; Morency, L.P. Openface: An open source facial behavior analysis toolkit. In Proceedings of the 2016 IEEE winter conference on applications of computer vision (WACV), Lake Placid, NY, USA, 7–10 March 2016; pp. 1–10. [Google Scholar]

- Sundararajan, M.; Taly, A.; Yan, Q. Axiomatic attribution for deep networks. In Proceedings of the International Conference on Machine Learning, PMLR, Sydney, Australia, 6–11 August 2017; pp. 3319–3328. [Google Scholar]

- Watanabe, A.; Yamazaki, T. Representation of the brain network by electroencephalograms during facial expressions. J. Neurosci. Methods 2021, 357, 109158. [Google Scholar] [CrossRef]

- Fu, C.H.; Williams, S.C.; Brammer, M.J.; Suckling, J.; Kim, J.; Cleare, A.J.; Walsh, N.D.; Mitterschiffthaler, M.T.; Andrew, C.M.; Pich, E.M.; et al. Neural responses to happy facial expressions in major depression following antidepressant treatment. Am. J. Psychiatry 2007, 164, 599–607. [Google Scholar] [CrossRef]

- Nakamura, A.; Yomogida, Y.; Ota, M.; Matsuo, J.; Ishida, I.; Hidese, S.; Kunugi, H. The cerebellum as a moderator of negative bias of facial expression processing in depressive patients. J. Affect. Disord. Rep. 2022, 7, 100295. [Google Scholar] [CrossRef]

- Schirmer, A.; Adolphs, R. Emotion perception from face, voice, and touch: Comparisons and convergence. Trends Cogn. Sci. 2017, 21, 216–228. [Google Scholar] [CrossRef]

- Scott, N.J.; Kramer, R.S.S.; Jones, A.L.; Ward, R. Facial cues to depressive symptoms and their associated personality attributions. Psychiatry Res. 2013, 208, 47–53. [Google Scholar] [CrossRef]

- Stolicyn, A.; Steele, J.D.; Seriès, P. Prediction of depression symptoms in individual subjects with face and eye movement tracking. Psychol. Med. 2022, 52, 1784–1792. [Google Scholar] [CrossRef]

- Liu, G.D.; Li, Y.C.; Zhang, W.; Zhang, L. A brief review of artificial intelligence applications and algorithms for psychiatric disorders. Engineering 2020, 6, 462–467. [Google Scholar] [CrossRef]

- Ringeval, F.; Schuller, B.; Valstar, M.; Cummins, N.; Cowie, R.; Tavabi, L.; Schmitt, M.; Alisamir, S.; Amiriparian, S.; Messner, E.M.; et al. AVEC 2019 workshop and challenge: State-of-mind, detecting depression with AI, and cross-cultural affect recognition. In Proceedings of the 9th International on Audio/Visual Emotion Challenge and Workshop, Nice, France, 21–25 October 2019; pp. 3–12. [Google Scholar]

- Ray, A.; Kumar, S.; Reddy, R.; Mukherjee, P.; Garg, R. Multi-level attention network using text, audio and video for depression prediction. In Proceedings of the 9th International on Audio/Visual Emotion Challenge and Workshop, Nice, France, 21–25 October 2019; pp. 81–88. [Google Scholar]

- Sun, H.; Liu, J.; Chai, S.; Qiu, Z.; Lin, L.; Huang, X.; Chen, Y. Multi-modal adaptive fusion transformer network for the estimation of depression level. Sensors 2021, 21, 4764. [Google Scholar] [CrossRef]

- Akbar, H.; Dewi, S.; Rozali, Y.A.; Lunanta, L.P.; Anwar, N.; Anwar, D. Exploiting facial action unit in video for recognizing depression using metaheuristic and neural networks. In Proceedings of the 2021 1st International Conference on Computer Science and Artificial Intelligence (ICCSAI), Jakarta, Indonesia, 28 October 2021; Volume 1, pp. 438–443. [Google Scholar]

- Rathi, S.; Kaur, B.; Agrawal, R. Selection of relevant visual feature sets for enhanced depression detection using incremental linear discriminant analysis. Multimed. Tools Appl. 2022, 81, 17703–17727. [Google Scholar] [CrossRef]

- Schultebraucks, K.; Yadav, V.; Shalev, A.Y.; Bonanno, G.A.; Galatzer-Levy, I.R. Deep learning-based classification of posttraumatic stress disorder and depression following trauma utilizing visual and auditory markers of arousal and mood. Psychol. Med. 2022, 52, 957–967. [Google Scholar] [CrossRef]

- Serengil, S.I.; Ozpinar, A. HyperExtended LightFace: A Facial Attribute Analysis Framework. In Proceedings of the 2021 International Conference on Engineering and Emerging Technologies (ICEET), Istanbul, Turkey, 27–28 October 2021; pp. 1–4. [Google Scholar] [CrossRef]

- Hamilton, M. A rating scale for depression. J. Neurol. Neurosurg. Psychiatry 1960, 23, 56. [Google Scholar] [CrossRef]

- Müller, R.; Kornblith, S.; Hinton, G.E. When does label smoothing help? Adv. Neural Inf. Process. Syst. 2019, 32. [Google Scholar]

- Fossi, L.; Faravelli, C.; Paoli, M. The ethological approach to the assessment of depressive disorders. J. Nerv. Ment. Dis. 1984, 172, 332–341. [Google Scholar] [CrossRef]

- Schelde, J.T.M. Major depression: Behavioral markers of depression and recovery. J. Nerv. Ment. Dis. 1998, 186, 133–140. [Google Scholar] [CrossRef]

- Fiquer, J.T.; Boggio, P.S.; Gorenstein, C. Talking bodies: Nonverbal behavior in the assessment of depression severity. J. Affect. Disord. 2013, 150, 1114–1119. [Google Scholar] [CrossRef]

- Gahalawat, M.; Fernandez Rojas, R.; Guha, T.; Subramanian, R.; Goecke, R. Explainable Depression Detection via Head Motion Patterns. In Proceedings of the International Conference on Multimodal Interaction, Paris, France, 9–13 October 2023; pp. 261–270. [Google Scholar]

- Doss, A.S.A.; Lingampally, P.K.; Nguyen, G.M.T.; Schilberg, D. A comprehensive review of wearable assistive robotic devices used for head and neck rehabilitation. Results Eng. 2023, 19, 101306. [Google Scholar] [CrossRef]

| Year | Techniques | Data Sets | Questionnaires | Accuracy | Precision | Recall | F1 Score |

|---|---|---|---|---|---|---|---|

| 2019 [18] | Multi-Model, Bi-LSTM | E-DAIC | PHQ-8 | CCC * 0.670 | - | - | - |

| 2021 [19] | Multi-Modal Transformer | E-DAIC | PHQ-8 | CCC * 0.733 | - | - | - |

| 2021 [20] | PSO, FFNN | DAIC WOZ | PHQ-8 | 0.978 | - | - | - |

| 2022 [21] | FDR, ILDA | DAIC WOZ | PHQ-8 | - | - | - | 0.805 |

| 2022 [22] | FFNN | Their own | - | 0.860 | 0.830 | 0.820 | 0.820 |

| Data Set | Non-Depression | Depression | Normal | Mild | Moderate | Severe |

|---|---|---|---|---|---|---|

| Train Data Set | 270 | 108 × 2 = 216 | 84 | 186 | 90 × 2 = 180 | 18 × 2 = 36 |

| Validation Data Set | 35 | 13 | 11 | 24 | 11 | 2 |

| Test Data Set | 35 | 13 | 11 | 24 | 11 | 2 |

| Model | TP | FN | TN | FP | Acc (%) | Macro (%) | Micro (%) | |||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Precision | Recall | F1 | Precision | Recall | F1 | |||||||

| Bi-LSTM | PoseR | 6 | 7 | 32 | 3 | 79.17 | 74.36 | 68.79 | 70.52 | 77.88 | 79.17 | 77.84 |

| PoseL | 5 | 8 | 33 | 2 | 79.17 | 75.96 | 66.37 | 68.42 | 78.03 | 79.17 | 76.86 | |

| GazeR | 8 | 5 | 31 | 4 | 81.25 | 76.39 | 75.05 | 75.66 | 80.84 | 81.25 | 81.01 | |

| GazeV | 7 | 6 | 32 | 3 | 81.25 | 77.11 | 72.64 | 74.27 | 80.36 | 81.25 | 80.41 | |

| AUr | 7 | 6 | 33 | 2 | 83.33 | 81.20 | 74.07 | 76.41 | 82.76 | 83.33 | 82.27 | |

| AUc | 8 | 5 | 33 | 2 | 85.42 | 83.42 | 77.91 | 79.99 | 84.99 | 85.42 | 84.77 | |

| Model | TP | FN | TN | FP | Acc (%) | Macro (%) | Micro (%) | |||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Precision | Recall | F1 | Precision | Recall | F1 | |||||||

| Bi-LSTM | Early Fusion | 6 | 7 | 32 | 3 | 79.17 | 74.36 | 68.79 | 70.52 | 77.88 | 79.17 | 77.84 |

| Intermediate Fusion | 6 | 7 | 35 | 0 | 85.42 | 91.67 | 73.08 | 77.03 | 87.85 | 85.42 | 83.39 | |

| Late Fusion | 7 | 6 | 33 | 2 | 83.33 | 81.20 | 74.07 | 76.41 | 82.76 | 83.33 | 82.27 | |

| Window Block LSTM | Early Fusion | 8 | 5 | 31 | 4 | 81.25 | 76.39 | 75.05 | 75.66 | 80.84 | 81.25 | 81.01 |

| Intermediate Fusion | 10 | 3 | 33 | 2 | 89.58 | 87.50 | 85.60 | 86.48 | 89.41 | 89.58 | 89.45 | |

| Late Fusion | 7 | 6 | 33 | 2 | 83.33 | 81.20 | 74.07 | 76.41 | 82.76 | 83.33 | 82.27 | |

| Transformer | Early Fusion | 5 | 8 | 32 | 3 | 77.08 | 71.25 | 64.95 | 66.48 | 75.26 | 77.08 | 75.12 |

| Intermediate Fusion | 7 | 6 | 33 | 2 | 83.33 | 81.20 | 74.07 | 76.41 | 82.76 | 83.33 | 82.27 | |

| Late Fusion | 7 | 6 | 32 | 3 | 81.25 | 77.11 | 72.64 | 74.27 | 80.36 | 81.25 | 80.41 | |

| Model | TP | FN | TN | FP | Acc (%) | Macro (%) | Micro (%) | |||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Precision | Recall | F1 | Precision | Recall | F1 | |||||||

| Bi-LSTM | (0, 1) | 6 | 7 | 35 | 0 | 85.42 | 91.67 | 73.08 | 77.03 | 87.85 | 85.42 | 83.39 |

| (0.05, 0.95) | 5 | 8 | 33 | 2 | 79.17 | 75.96 | 66.37 | 68.42 | 78.03 | 79.17 | 76.86 | |

| (0.1, 0.9) | 6 | 7 | 33 | 2 | 81.25 | 78.75 | 70.22 | 72.57 | 80.47 | 81.25 | 79.64 | |

| (0.15, 0.85) | 7 | 6 | 33 | 2 | 83.33 | 81.20 | 74.07 | 76.41 | 82.76 | 83.33 | 82.27 | |

| (0.2, 0.8) | 6 | 7 | 32 | 3 | 79.17 | 74.36 | 68.79 | 70.52 | 77.88 | 79.17 | 77.84 | |

| (0.25, 0.75) | 5 | 8 | 34 | 1 | 81.25 | 82.14 | 67.80 | 70.47 | 81.60 | 81.25 | 78.65 | |

| (0.3, 0.7) | 9 | 4 | 35 | 0 | 91.67 | 94.87 | 84.62 | 88.21 | 92.52 | 91.67 | 91.13 | |

| (0.35, 0.65) | 5 | 8 | 34 | 1 | 81.25 | 82.14 | 67.80 | 70.47 | 81.60 | 81.25 | 78.65 | |

| (0.4, 0.6) | 5 | 8 | 34 | 1 | 81.25 | 82.14 | 67.80 | 70.47 | 81.60 | 81.25 | 78.65 | |

| (0.45, 0.55) | 6 | 7 | 34 | 1 | 83.33 | 84.32 | 71.65 | 74.74 | 83.68 | 83.33 | 81.49 | |

| Window Block LSTM | (0, 1) | 10 | 3 | 33 | 2 | 89.58 | 87.50 | 85.60 | 86.48 | 89.41 | 89.58 | 89.45 |

| (0.05, 0.95) | 10 | 3 | 34 | 1 | 91.67 | 91.40 | 87.03 | 88.89 | 91.63 | 91.67 | 91.44 | |

| (0.1, 0.9) | 7 | 6 | 32 | 3 | 81.25 | 77.11 | 72.64 | 74.27 | 80.36 | 81.25 | 80.41 | |

| (0.15, 0.85) | 6 | 7 | 33 | 2 | 81.25 | 78.75 | 70.22 | 72.57 | 80.47 | 81.25 | 79.64 | |

| (0.2, 0.8) | 4 | 9 | 34 | 1 | 79.17 | 79.53 | 63.96 | 65.81 | 79.32 | 79.17 | 75.61 | |

| (0.25, 0.75) | 6 | 7 | 32 | 3 | 79.17 | 74.36 | 68.79 | 70.52 | 77.88 | 79.17 | 77.84 | |

| (0.3, 0.7) | 6 | 7 | 34 | 1 | 83.33 | 84.32 | 71.65 | 74.74 | 83.68 | 83.33 | 81.49 | |

| (0.35, 0.65) | 6 | 7 | 33 | 2 | 81.25 | 78.75 | 70.22 | 72.57 | 80.47 | 81.25 | 79.64 | |

| (0.4, 0.6) | 7 | 6 | 32 | 3 | 81.25 | 77.11 | 72.64 | 74.27 | 80.36 | 81.25 | 80.41 | |

| (0.45, 0.55) | 6 | 7 | 34 | 1 | 85.42 | 86.25 | 75.49 | 78.67 | 85.68 | 85.42 | 84.17 | |

| Model | TP | FN | TN | FP | Acc (%) | Macro (%) | Micro (%) | ||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Precision | Recall | F1 | Precision | Recall | F1 | ||||||

| Bi-LSTM Baseline | 0 | 13 | 33 | 2 | 68.75 | 35.87 | 47.14 | 40.74 | 52.31 | 68.75 | 59.41 |

| Transformer Baseline | 5 | 8 | 28 | 7 | 68.75 | 59.72 | 59.23 | 59.44 | 68.00 | 68.75 | 68.35 |

| Our Bi-LSTM | 9 | 4 | 35 | 0 | 91.67 | 94.87 | 84.62 | 88.21 | 92.52 | 91.67 | 91.13 |

| Our Window Block LSTM | 10 | 3 | 34 | 1 | 91.67 | 91.40 | 87.03 | 88.89 | 91.63 | 91.67 | 91.44 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mahayossanunt, Y.; Nupairoj, N.; Hemrungrojn, S.; Vateekul, P. Explainable Depression Detection Based on Facial Expression Using LSTM on Attentional Intermediate Feature Fusion with Label Smoothing. Sensors 2023, 23, 9402. https://doi.org/10.3390/s23239402

Mahayossanunt Y, Nupairoj N, Hemrungrojn S, Vateekul P. Explainable Depression Detection Based on Facial Expression Using LSTM on Attentional Intermediate Feature Fusion with Label Smoothing. Sensors. 2023; 23(23):9402. https://doi.org/10.3390/s23239402

Chicago/Turabian StyleMahayossanunt, Yanisa, Natawut Nupairoj, Solaphat Hemrungrojn, and Peerapon Vateekul. 2023. "Explainable Depression Detection Based on Facial Expression Using LSTM on Attentional Intermediate Feature Fusion with Label Smoothing" Sensors 23, no. 23: 9402. https://doi.org/10.3390/s23239402

APA StyleMahayossanunt, Y., Nupairoj, N., Hemrungrojn, S., & Vateekul, P. (2023). Explainable Depression Detection Based on Facial Expression Using LSTM on Attentional Intermediate Feature Fusion with Label Smoothing. Sensors, 23(23), 9402. https://doi.org/10.3390/s23239402