A Wearable Force Myography-Based Armband for Recognition of Upper Limb Gestures

Abstract

1. Introduction

2. Materials and Methods

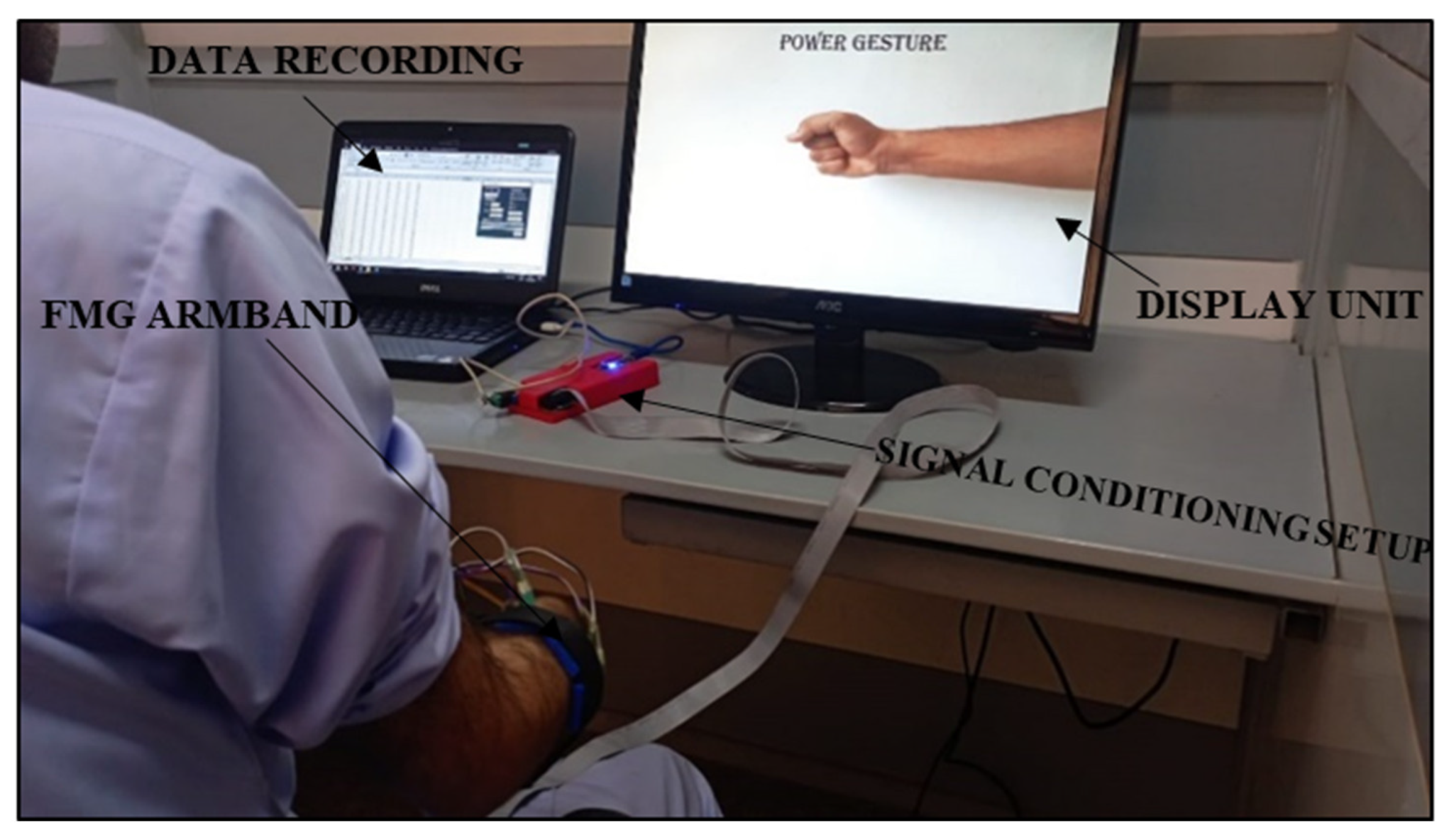

2.1. Development of FMG Band

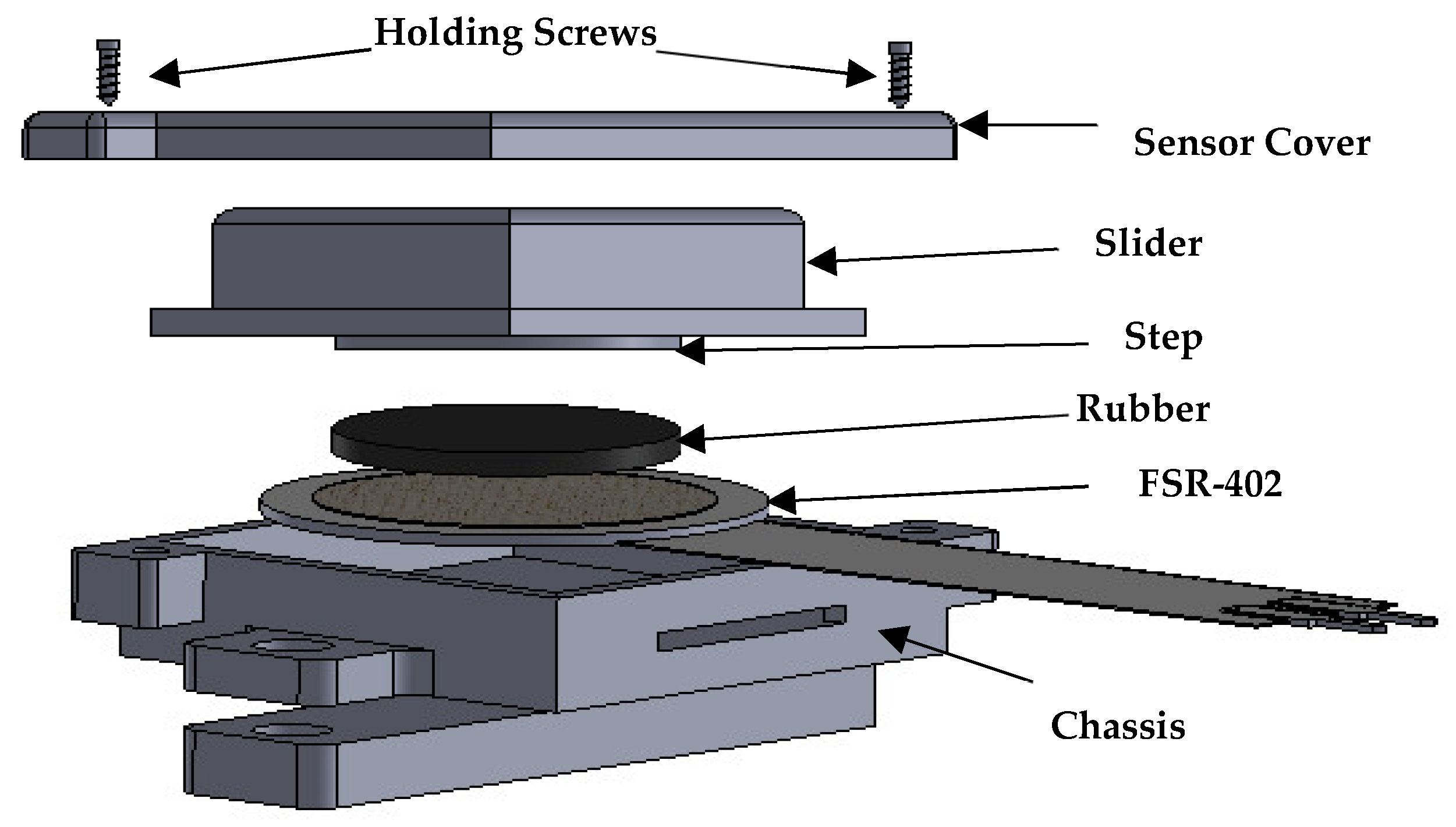

2.1.1. Design of FSR Casing

2.1.2. Signal Extraction and Conditioning Setup

2.2. Sensor Characterization

2.2.1. Load vs. Voltage Characteristics

2.2.2. Stability of FMG Signals

2.3. Subjects

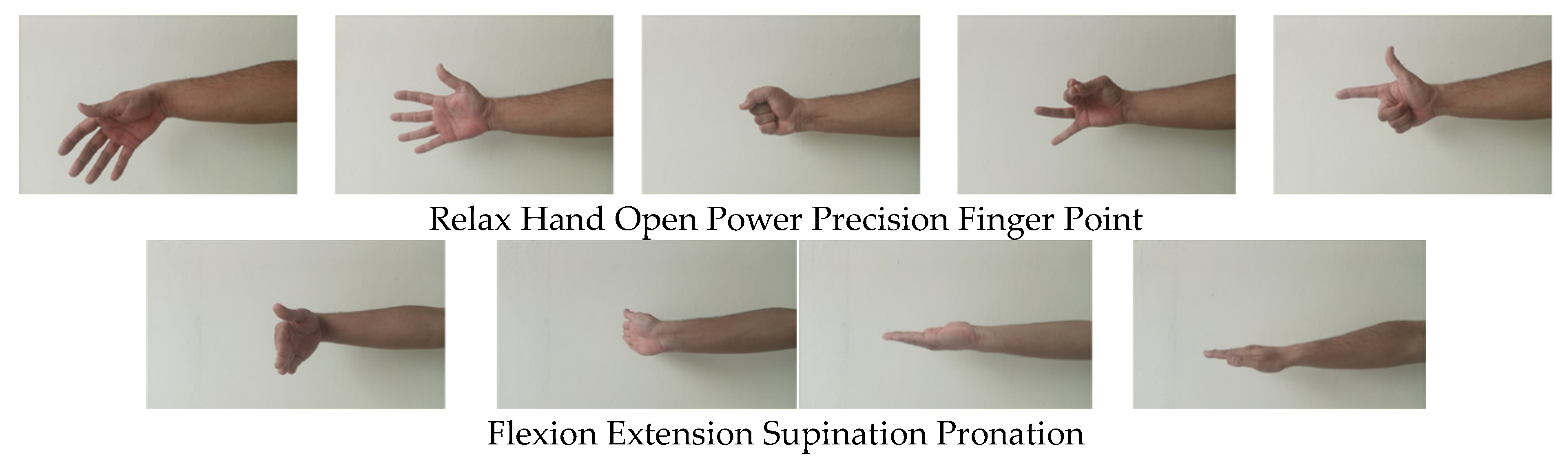

2.4. Protocol for Data Collection

2.5. Data Collection and Analysis

3. Results

3.1. Sensor Characterization Results

3.1.1. Load vs. Voltage

3.1.2. Stability of FMG Signals

3.2. Classification Comparison between Arrangement A and Arrangement B

3.2.1. Overall Cumulative Classification Results

3.2.2. Individual Subject Inter-Trial Classification Results

3.2.3. Hand Gesture Classification Results

3.2.4. Wrist and Forearm Gesture Classification Results

3.2.5. Performance Evaluation and Comparative Analysis of Multiple Classifiers

4. Discussion

4.1. Developed Sensor Characterization

4.2. Classification Performance

4.3. FMG Band Wearability, Cost, Weight, and Satisfaction Survey

4.4. Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Confusion Matrices

Appendix B. System Usability Scale (SUS) Questionnaire

- I would like to use this band frequently.

- I found the band unnecessarily complex.

- I thought the band was easy to use.

- I think that I would need the support of a technical person to be able to use this system.

- I found the various functions in this experimental setup were well-integrated.

- I thought there was too much inconsistency in this band.

- I would imagine that most people would learn how to use this band very quickly.

- I found the band very cumbersome to use.

- I felt very confident using the band.

- I needed to learn a lot of things before I could get going with the experimental setup.
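The ten statements above are the standard System Usability Scale (SUS) items, with "system" mostly replaced by "band". Assuming the SUS scores reported for Subjects 4 to 6 follow the standard SUS scoring rule, each score can be reproduced from ten 1 to 5 Likert responses given in the order listed above. The sketch below implements that standard rule. The function name `sus_score` is hypothetical, and the response vectors in the usage note are illustrative values, not data from this study.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Standard SUS scoring (a sketch, not the authors' code).

    `responses` holds ten Likert answers in questionnaire order,
    where 1 = strongly disagree and 5 = strongly agree.
    Items 1, 3, 5, 7, 9 are positively worded; items 2, 4, 6, 8, 10
    are negatively worded.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("responses must be on a 1-5 scale")

    total = 0
    for item_number, r in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Positively worded item: contribution is (response - 1), range 0-4.
            total += r - 1
        else:
            # Negatively worded item: contribution is (5 - response), range 0-4.
            total += 5 - r
    # The summed contributions span 0-40; scaling by 2.5 maps them to 0-100.
    return total * 2.5
```

For example, the hypothetical response vector `[5, 2, 4, 1, 5, 2, 5, 1, 4, 2]` contributes 18 points from the odd items and 17 from the even items, giving (18 + 17) × 2.5 = 87.5. Because every SUS score is a multiple of 2.5, per-subject scores such as 82.5, 95, and 90 are consistent with this rule.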

References

1. Sahu, A.; Sagar, R.; Sarkar, S.; Sagar, S. Psychological effects of amputation: A review of studies from India. Ind. Psychiatry J. 2016, 25, 4.

2. Connan, M.; Ruiz Ramírez, E.; Vodermayer, B.; Castellini, C. Assessment of a wearable force- and electromyography device and comparison of the related signals for myocontrol. Front. Neurorobot. 2016, 10, 17.

3. Sellegren, K.R. An early history of lower limb amputations and prostheses. Iowa Orthop. J. 1982, 2, 13.

4. Vitali, M. Amputations and Prostheses; Bailliere Tindall Limited: Richmond, UK, 1978.

5. Kerr, M.; Barron, E.; Chadwick, P.; Evans, T.; Kong, W.; Rayman, G.; Sutton-Smith, M.; Todd, G.; Young, B.; Jeffcoate, W.J. The cost of diabetic foot ulcers and amputations to the National Health Service in England. Diabet. Med. 2019, 36, 995–1002.

6. National Services Scotland. Number of Upper and Lower Limb Amputations Performed Each Year by the NHS in Scotland from 1981 to 2013. 2014. Available online: https://nhsnss.org/media/1397 (accessed on 23 October 2021).

7. Ziegler-Graham, K.; MacKenzie, E.J.; Ephraim, P.L.; Travison, T.G.; Brookmeyer, R. Estimating the prevalence of limb loss in the United States: 2005 to 2050. Arch. Phys. Med. Rehabil. 2008, 89, 422–429.

8. Semasinghe, C.; Prasanna, J.; Kandamby, H.; Ranaweera, R.; Madusanka, D.; Gopura, R. Transradial Prostheses: Current Status and Future Directions. In Proceedings of the 2016 Manufacturing & Industrial Engineering Symposium (MIES), Colombo, Sri Lanka, 22 October 2016.

9. Biddiss, E.A.; Chau, T.T. Upper limb prosthesis use and abandonment: A survey of the last 25 years. Prosthet. Orthot. Int. 2007, 31, 236–257.

10. Geethanjali, P.; Ray, K.; Shanmuganathan, P.V. Actuation of prosthetic drive using EMG signal. In Proceedings of the TENCON 2009–2009 IEEE Region 10 Conference, Singapore, 23–26 January 2009.

11. Merletti, R.; Parker, P.J. Electromyography: Physiology, Engineering, and Non-Invasive Applications; John Wiley & Sons: Hoboken, NJ, USA, 2004; Volume 11.

12. Scott, R.N.; Parker, P.A. Myoelectric prostheses: State of the art. J. Med. Eng. Technol. 1988, 12, 143–151.

13. Kamavuako, E.N.; Rosenvang, J.C.; Bøg, M.F.; Smidstrup, A.; Erkocevic, E.; Niemeier, M.J.; Jensen, W.; Farina, D. Influence of the feature space on the estimation of hand grasping force from intramuscular EMG. Biomed. Signal Process. Control 2013, 8, 1–5.

14. Hargrove, L.J.; Englehart, K.; Hudgins, B. A comparison of surface and intramuscular myoelectric signal classification. IEEE Trans. Biomed. Eng. 2007, 54, 847–853.

15. Oskoei, M.A.; Hu, H. Myoelectric control systems—A survey. Biomed. Signal Process. Control 2007, 2, 275–294.

16. Criswell, E. Cram’s Introduction to Surface Electromyography; Jones & Bartlett Publishers: Burlington, MA, USA, 2010.

17. Merletti, R.; Aventaggiato, M.; Botter, A.; Holobar, A.; Marateb, H.; Vieira, T. Advances in surface EMG: Recent progress in detection and processing techniques. Crit. Rev. Biomed. Eng. 2010, 38, 305–345.

18. Cavanagh, P.R.; Komi, P.V. Electromechanical delay in human skeletal muscle under concentric and eccentric contractions. Eur. J. Appl. Physiol. 1979, 42, 159–163.

19. Viitasalo, J.T.; Komi, P.V. Interrelationships between electromyographic, mechanical, muscle structure and reflex time measurements in man. Acta Physiol. Scand. 1981, 111, 97–103.

20. Esposito, D.; Gargiulo, G.D.; Parajuli, N.; Cesarelli, G.; Andreozzi, E.; Bifulco, P. Measurement of muscle contraction timing for prosthesis control: A comparison between electromyography and force-myography. In Proceedings of the 2020 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Bari, Italy, 1 June–1 July 2020.

21. Esposito, F.; Limonta, E.; Cè, E. Passive stretching effects on electromechanical delay and time course of recovery in human skeletal muscle: New insights from an electromyographic and mechanomyographic combined approach. Eur. J. Appl. Physiol. 2011, 111, 485–495.

22. Fougner, A.; Scheme, E.; Chan, A.D.; Englehart, K.; Stavdahl, Ø. Resolving the limb position effect in myoelectric pattern recognition. IEEE Trans. Neural Syst. Rehabil. Eng. 2011, 19, 644–651.

23. Jiang, N.; Dosen, S.; Muller, K.-R.; Farina, D. Myoelectric control of artificial limbs—Is there a need to change focus? [In the spotlight]. IEEE Signal Process. Mag. 2012, 29, 150–152.

24. Sikdar, S.; Rangwala, H.; Eastlake, E.B.; Hunt, I.A.; Nelson, A.J.; Devanathan, J.; Shin, A.; Pancrazio, J.J. Novel method for predicting dexterous individual finger movements by imaging muscle activity using a wearable ultrasonic system. IEEE Trans. Neural Syst. Rehabil. Eng. 2013, 22, 69–76.

25. Esposito, D.; Andreozzi, E.; Fratini, A.; Gargiulo, G.D.; Savino, S.; Niola, V.; Bifulco, P. A piezoresistive sensor to measure muscle contraction and mechanomyography. Sensors 2018, 18, 2553.

26. Sturma, A.; Stamm, T.; Hruby, L.A.; Bischof, B.; Salminger, S.; Gstoettner, C.; Prahm, C.; Pittermann, A.; Wakolbinger, R.; Hofer, C. Rehabilitation of high upper limb amputees after Targeted Muscle Reinnervation. J. Hand Ther. 2022, 35, 58–66.

27. Xiao, Z.G.; Menon, C. A review of force myography research and development. Sensors 2019, 19, 4557.

28. Prakash, A.; Sahi, A.K.; Sharma, N.; Sharma, S. Force myography controlled multifunctional hand prosthesis for upper-limb amputees. Biomed. Signal Process. Control 2020, 62, 102122.

29. Ahmadizadeh, C.; Pousett, B.; Menon, C. Investigation of channel selection for gesture classification for prosthesis control using force myography: A case study. Front. Bioeng. Biotechnol. 2019, 7, 331.

30. Esposito, D.; Savino, S.; Andreozzi, E.; Cosenza, C.; Niola, V.; Bifulco, P. The “Federica” Hand. Bioengineering 2021, 8, 128.

31. Booth, R.; Goldsmith, P. A wrist-worn piezoelectric sensor array for gesture input. J. Med. Biol. Eng. 2018, 38, 284–295.

32. Yungher, D.; Craelius, W. Discriminating 6 grasps using force myography of the forearm. In Proceedings of the American Society of Biomechanics Northeast Conference, Maryland, MD, USA, 22–25 August 2007.

33. Ahmadizadeh, C.; Merhi, L.-K.; Pousett, B.; Sangha, S.; Menon, C. Toward intuitive prosthetic control: Solving common issues using force myography, surface electromyography, and pattern recognition in a pilot case study. IEEE Robot. Autom. Mag. 2017, 24, 102–111.

34. Ravindra, V.; Castellini, C. A comparative analysis of three non-invasive human-machine interfaces for the disabled. Front. Neurorobot. 2014, 8, 24.

35. Wininger, M.; Kim, N.-H.; Craelius, W. Pressure signature of forearm as predictor of grip force. J. Rehabil. Res. Dev. 2008, 45, 883–892.

36. Li, N.; Yang, D.; Jiang, L.; Liu, H.; Cai, H. Combined use of FSR sensor array and SVM classifier for finger motion recognition based on pressure distribution map. J. Bionic Eng. 2012, 9, 39–47.

37. Xiao, Z.G.; Menon, C. Performance of forearm FMG and sEMG for estimating elbow, forearm and wrist positions. J. Bionic Eng. 2017, 14, 284–295.

38. Ha, N.; Withanachchi, G.P.; Yihun, Y. Performance of forearm FMG for estimating hand gestures and prosthetic hand control. J. Bionic Eng. 2019, 16, 88–98.

39. Xiao, Z.G.; Menon, C. Towards the development of a wearable feedback system for monitoring the activities of the upper-extremities. J. Neuroeng. Rehabil. 2014, 11, 2.

40. Radmand, A.; Scheme, E.; Englehart, K. High-resolution muscle pressure mapping for upper-limb prosthetic control. In Proceedings of the MEC–Myoelectric Control Symposium, Fredericton, NB, Canada, 19–22 August 2014.

41. Castellini, C.; Kõiva, R.; Pasluosta, C.; Viegas, C.; Eskofier, B. Tactile myography: An off-line assessment of able-bodied subjects and one upper-limb amputee. Technologies 2018, 6, 38.

42. Jiang, X.; Merhi, L.-K.; Xiao, Z.G.; Menon, C. Exploration of force myography and surface electromyography in hand gesture classification. Med. Eng. Phys. 2017, 41, 63–73.

43. Chapman, J.; Dwivedi, A.; Liarokapis, M. A wearable, open-source, lightweight forcemyography armband: On intuitive, robust muscle-machine interfaces. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021.

44. Anvaripour, M.; Khoshnam, M.; Menon, C.; Saif, M. FMG- and RNN-based estimation of motor intention of upper-limb motion in human-robot collaboration. Front. Robot. AI 2020, 7, 573096.

45. Xiao, Z.G.; Menon, C. Counting grasping action using force myography: An exploratory study with healthy individuals. JMIR Rehabil. Assist. Technol. 2017, 4, e6901.

46. Rehman, M.U.; Shah, K.; Haq, I.U.; Khurshid, H. A Force Myography based HMI for Classification of Upper Extremity Gestures. In Proceedings of the 2022 2nd International Conference on Artificial Intelligence (ICAI), Islamabad, Pakistan, 30–31 March 2022.

47. Rehman, M.U.; Shah, K.; Haq, I.U.; Iqbal, S.; Ismail, M.A.; Selimefendigil, F. Assessment of Low-Density Force Myography Armband for Classification of Upper Limb Gestures. Sensors 2023, 23, 2716.

48. Prakash, A.; Sharma, N.; Sharma, S. Novel force myography sensor to measure muscle contractions for controlling hand prostheses. Instrum. Sci. Technol. 2020, 48, 43–62.

49. Cho, E.; Chen, R.; Merhi, L.-K.; Xiao, Z.; Pousett, B.; Menon, C. Force myography to control robotic upper extremity prostheses: A feasibility study. Front. Bioeng. Biotechnol. 2016, 4, 18.

50. Sadarangani, G.P.; Jiang, X.; Simpson, L.A.; Eng, J.J.; Menon, C. Force myography for monitoring grasping in individuals with stroke with mild to moderate upper-extremity impairments: A preliminary investigation in a controlled environment. Front. Bioeng. Biotechnol. 2017, 5, 42.

51. Atzori, M.; Gijsberts, A.; Castellini, C.; Caputo, B.; Hager, A.-G.M.; Elsig, S.; Giatsidis, G.; Bassetto, F.; Müller, H. Electromyography data for non-invasive naturally-controlled robotic hand prostheses. Sci. Data 2014, 1, 140053.

52. Atzori, M.; Gijsberts, A.; Müller, H.; Caputo, B. Classification of hand movements in amputated subjects by sEMG and accelerometers. In Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Chicago, IL, USA, 26–30 August 2014.

53. Hermens, H.; Stramigioli, S.; Rietman, H.; Veltink, P.; Misra, S. Myoelectric forearm prostheses: State of the art from a user-centered perspective. J. Rehabil. Res. Dev. 2011, 48, 719.

54. Mizuno, H.; Tsujiuchi, N.; Koizumi, T. Forearm motion discrimination technique using real-time EMG signals. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011.

55. Sarrafian, S.K.; Melamed, J.L.; Goshgarian, G. Study of wrist motion in flexion and extension. Clin. Orthop. Relat. Res. 1977, 153–159.

56. PLX-DAQ | Parallax. 26 June 2021. Available online: https://www.parallax.com/package/plx-daq/ (accessed on 7 January 2021).

57. Narayan, Y. SEMG signal classification using KNN classifier with FD and TFD features. Mater. Today Proc. 2021, 37, 3219–3225.

58. Ferigo, D.; Merhi, L.-K.; Pousett, B.; Xiao, Z.G.; Menon, C. A case study of a force-myography controlled bionic hand mitigating limb position effect. J. Bionic Eng. 2017, 14, 692–705.

59. Javaid, H.A.; Tiwana, M.I.; Alsanad, A.; Iqbal, J.; Riaz, M.T.; Ahmad, S.; Almisned, F.A. Classification of Hand Movements Using MYO Armband on an Embedded Platform. Electronics 2021, 10, 1322.

60. Lei, G.; Zhang, S.; Fang, Y.; Wang, Y.; Zhang, X. Investigation on the Sampling Frequency and Channel Number for Force Myography Based Hand Gesture Recognition. Sensors 2021, 21, 3872.

61. Ramot, Y.; Haim-Zada, M.; Domb, A.J.; Nyska, A. Biocompatibility and safety of PLA and its copolymers. Adv. Drug Deliv. Rev. 2016, 107, 153–162.

62. Xiao, Z.G.; Menon, C. An investigation on the sampling frequency of the upper-limb force myographic signals. Sensors 2019, 19, 2432.

63. Paredes-Madrid, L.; Palacio, C.A.; Matute, A.; Parra Vargas, C.A. Underlying physics of conductive polymer composites and force sensing resistors (FSRs) under static loading conditions. Sensors 2017, 17, 2108.

| Subject ID No. | Healthy/Amputee | Gender | Age (years) | Forearm Circumference (cm) | Sensor Distance in Arrangement B (cm) | Tested Arm |
|---|---|---|---|---|---|---|
| 1 | Healthy | F | 62 | 26 | 4.8 | Left |
| 2 | Healthy | M | 30 | 24 | 4.5 | Right |
| 3 | Healthy | M | 45 | 28 | 5.1 | Right |
| 4 | Amputee | M | 55 | 27 | 4.9 | Right |
| 5 | Amputee | M | 32 | 25 | 4.6 | Right |
| 6 | Amputee | M | 29 | 24 | 4.5 | Right |


| FMG Band | No. of Sensors | Casing/Housing for FSRs | Sampling Frequency (Hz) | Weight (g) | Cost |
|---|---|---|---|---|---|
| Our developed band | 7 | Yes | 10 | 97 | USD 93 |
| Ravindra et al. [34] | 10 | No | 50 | 65 | EUR 50 |
| Menrva FMG band [39,49,50,62] | 8 | No | 10–1000 | - | - |
| Connan et al. [2] | 10 | Yes | 10 | - | Below EUR 150 |
| Jiang et al. [42] | 16 | No | 10 | - | USD 125 |
| Ahmadizadeh et al. [33] | 16 | No | 10 | 925 (including socket) | - |
| Ha et al. [38] | 3 | No | 25 | - | - |


| Subject ID No. | 4 | 5 | 6 | Mean |
|---|---|---|---|---|
| SUS score | 82.5 | 95 | 90 | 89.17 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rehman, M.U.; Shah, K.; Haq, I.U.; Iqbal, S.; Ismail, M.A. A Wearable Force Myography-Based Armband for Recognition of Upper Limb Gestures. Sensors 2023, 23, 9357. https://doi.org/10.3390/s23239357
