1. Introduction

In the construction industry, construction workers constitute the fundamental unit, and their behavior contributes significantly to workplace accidents and injuries. Managing and controlling workers, whose activities are inherently dynamic, is a major challenge on construction sites. As many as 80–90% of accidents are closely tied to unsafe actions and behaviors of workers [1]. Traditional approaches to measuring and improving worker behavior, such as self-reports, observations, and direct measurements, are well established but time- and labor-intensive.

Safety risks associated with worker posture are a significant concern in construction projects. Construction workers face the risk of immediate or long-term injuries, including injuries from unsafe and awkward activities [2] and falls from ladders. These injuries arise from repetitive movements, high force exertion, vibrations, and awkward body postures, all of which are common among construction workers [3]. Various efforts, including job training, education, and observation by site managers, have been implemented to mitigate posture-related safety risks. Nevertheless, current methods remain insufficiently effective in managing these risks.

Recently, a growing body of research has addressed the automated extraction of information from skeleton data using deep learning algorithms. Recurrent Neural Networks (RNNs) [4] have emerged as a prominent choice for skeleton-based action recognition because they handle sequential data effectively: they are naturally suited to modeling time series and capturing temporal dependencies. Convolutional Neural Networks (CNNs) [5], on the other hand, are typically applied after the skeleton data have been transformed into image-like formats. To further improve the modeling of temporal context within skeleton sequences, RNN variants such as Long Short-Term Memory (LSTM) [6] and Gated Recurrent Units (GRUs) [7] have been incorporated into skeleton-based action recognition methods. Combining CNNs with RNNs offers a complementary approach: CNN architectures are proficient at capturing spatial cues in the input data, which addresses a potential limitation of RNN-based methods.

However, neither approach can comprehensively capture the intricate dependencies among correlated joints of the human body. The Graph Convolutional Network (GCN) [8,9,10] was introduced as a more robust alternative for skeleton-based action recognition. It was motivated by the observation that human 3D skeleton data naturally form a topological graph, in contrast to the sequence-vector or pseudo-image representations used by RNN-based or CNN-based methods. The GCN has recently gained prominence in this task because it represents graph-structured data effectively. Graph-related neural networks generally fall into two types, graph neural networks and GCNs [8]; our primary focus in this paper is on the latter. Over the past two years, several classic methods have been proposed in this domain. For instance, Yan et al. [11] introduced a method in which skeleton sequences are embedded into multiple graphs: the joints within a frame of the sequence act as the nodes of a graph, and the connections between these joints form its spatial edges.

However, most of these approaches rely on a single, all-encompassing graph built from the natural connections of the human body. Such a graph lets each node capture only one type of spatial structure, namely the physical relations between joints, so existing GCN methods cannot fully learn the relevant features from a single fixed graph. Because the convolution operator at the core of a GCN depends heavily on meaningful graph structures to capture local and contour features in spatial data, designing graph structures that expose more of these features is essential. Research in this domain should therefore concentrate on refining GCN methods to achieve more robust feature learning within dynamic graph structures, ultimately advancing skeleton-based action recognition.

In this paper, we introduce a multi-scale graph strategy designed to capture not only local features, such as the relationship between the head and neck, but also broader connections, such as the relationship between the head and foot. We use graphs of different scales in distinct network streams, deliberately incorporating information about joints that are not physically connected. This enables the network to capture both the local features of individual joints and the crucial contour features of significant joints. Moreover, we incorporate velocity and acceleration as novel temporal features and fuse them with the spatial features to enrich the information available to the network. The primary contributions of this study can be summarized as follows:

We devised the multi-feature fusion network (MF-Net), which incorporates three levels of spatial features (body-level, part-level, and joint-level) as separate network streams. This multi-stream design enables the network to capture both significant local and global features of workers’ joints.

Beyond diverse spatial features, we introduced the velocity and acceleration of each joint as temporal features. We also proposed a spatial–temporal two-step fusion strategy that effectively correlates high-level feature maps from multiple streams. These temporal features were integrated with the three-scale spatial features, improving action recognition accuracy robustly and efficiently. The strategy balances independent learning within each feature stream against sufficient correlation among the fused streams to ensure good fusion performance.

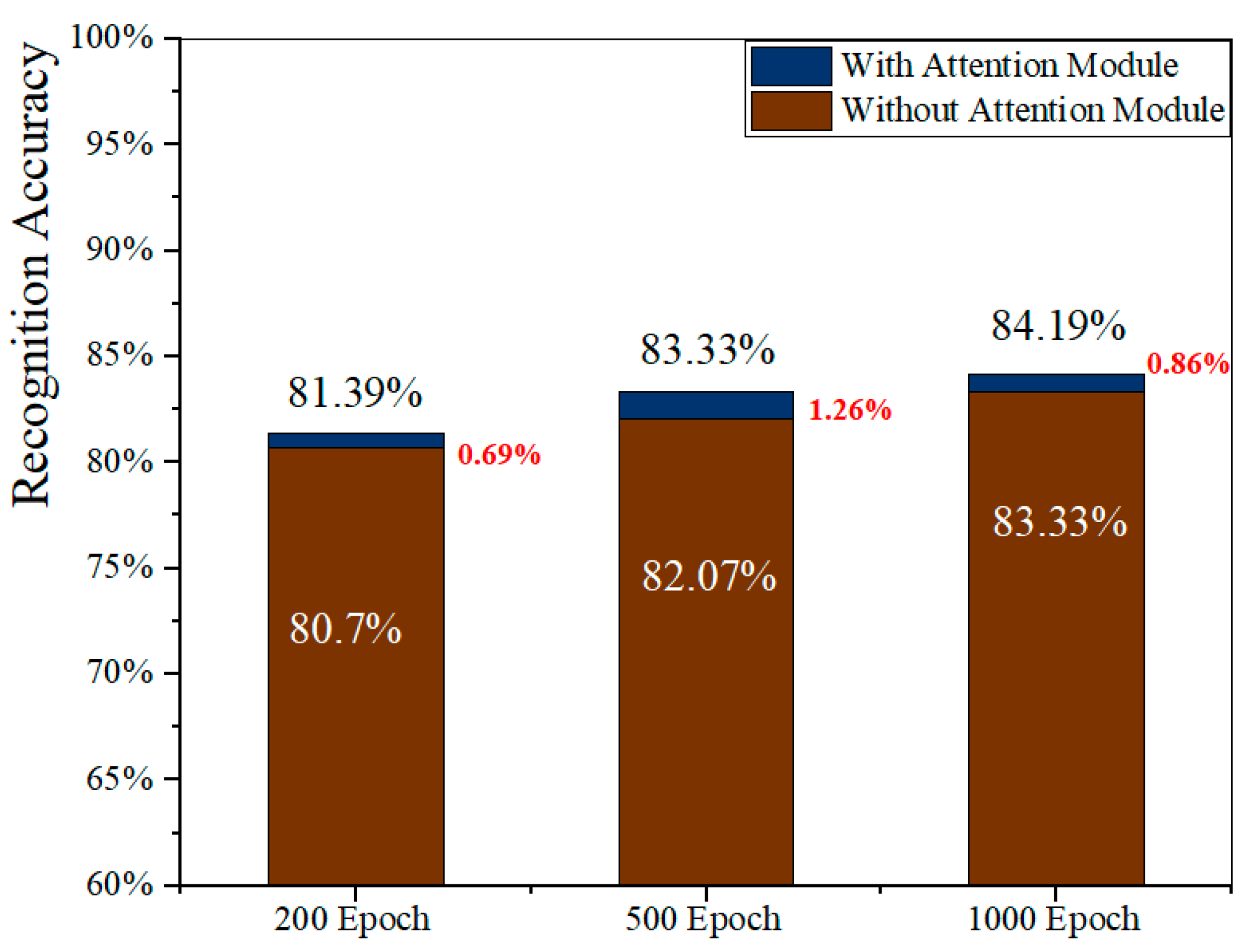

To improve the efficiency of our model, we implemented a bottleneck structure that reduces computational cost during parameter tuning and model inference. This structure is complemented by two temporal kernel sizes, which cover different receptive fields. Additionally, we introduced a branch-wise attention block, which uses three attention blocks to calculate attention weights for the different streams and further refines the model.

3. Methodology

This section is organized as follows. First, we introduce MF-Net, a robust and efficient multi-feature network designed to learn spatial–temporal feature sequences. Second, for spatial features, we design a hierarchical skeleton topology (body, part, joint) and use a graph convolutional network to extract these features; for temporal features, we incorporate velocity and acceleration as novel features fused with the spatial features. Third, a temporal convolutional network (TCN) serves as the main network for recognizing sequence information. To optimize efficiency, we implement a bottleneck structure that reduces computational cost during parameter tuning and model inference, and we set two temporal kernel sizes to obtain different receptive fields. Finally, we propose a two-stage fusion strategy with a branch-wise attention block that correlates high-level feature maps from multiple streams. We balance the independent learning of feature streams against adequate correlation among the fused streams to optimize fusion performance, and the attention mechanism computes weights for the different branches to further enhance the discriminative capability of the features.

3.1. Pipeline of Multi-Scale Network

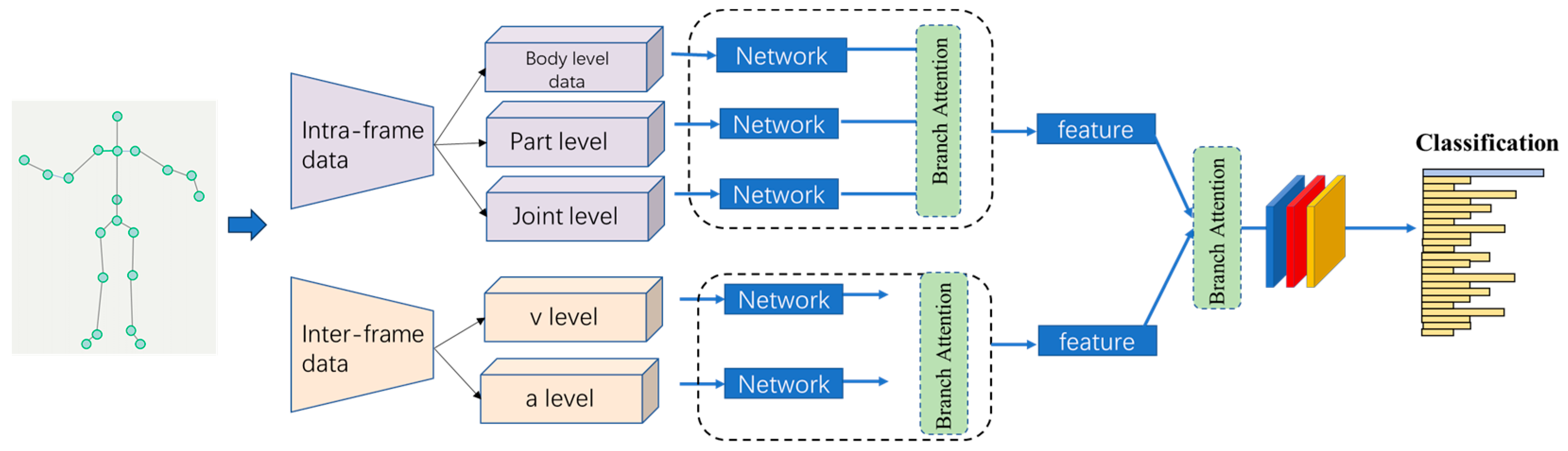

The network comprises two branches that together contain five sub-streams, one per input feature, as shown in Figure 1. Branch 1 represents the spatial features. Before the features enter the sequence network, a GCN extracts spatial structural features from each frame. A simple fusion approach concatenates these features at the feature level and passes them through a fully connected layer. However, this approach is suboptimal because the features of different streams are inconsistent with one another.
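The simple concatenation baseline mentioned above can be sketched as follows. This is a minimal illustration under stated assumptions, not the MF-Net implementation: each stream is reduced to one feature vector per sample, batching is ignored, and the function name `concat_fc_fusion` and its explicit weight arguments are hypothetical.

```python
from typing import List, Sequence


def concat_fc_fusion(stream_features: Sequence[Sequence[float]],
                     weight: Sequence[Sequence[float]],
                     bias: Sequence[float]) -> List[float]:
    """Hypothetical naive fusion: concatenate stream vectors, then one FC layer."""
    # Concatenate the feature vectors of all streams into one vector.
    fused = [x for feats in stream_features for x in feats]
    if any(len(row) != len(fused) for row in weight):
        raise ValueError("each weight row must match the concatenated length")
    if len(bias) != len(weight):
        raise ValueError("bias length must match the number of weight rows")
    # Fully connected layer: y = W @ fused + b.
    return [sum(w * x for w, x in zip(row, fused)) + b
            for row, b in zip(weight, bias)]


# Two toy streams (2-D and 1-D features) fused into a 2-D output.
print(concat_fc_fusion([[1.0, 2.0], [3.0]], [[1, 1, 1], [0, 0, 1]], [0.0, 1.0]))
```

Because every stream enters the same FC layer on equal terms, nothing in this baseline compensates for differences in scale or meaning between streams, which is the inconsistency the text points to.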

3.1.1. GCN Model

In contrast to CNN models, graph neural networks (GNNs) [8] are designed to process graph-structured data. In essence, the input describes a set of vertices (or nodes) and a structure that delineates the relationships between them [40]. Each node is updated to a latent feature vector that contains information about its neighborhood; because this update resembles a convolution, such models are called graph convolutional networks (GCNs). This latent node representation, often referred to as an embedding, can have any chosen length. The embeddings can be used for node-level prediction, or aggregated to make graph-level predictions that describe the graph as a whole. Various GNN variants have been used for diverse tasks, such as graph classification [37], relational reasoning [41], and node classification in large graphs [42].

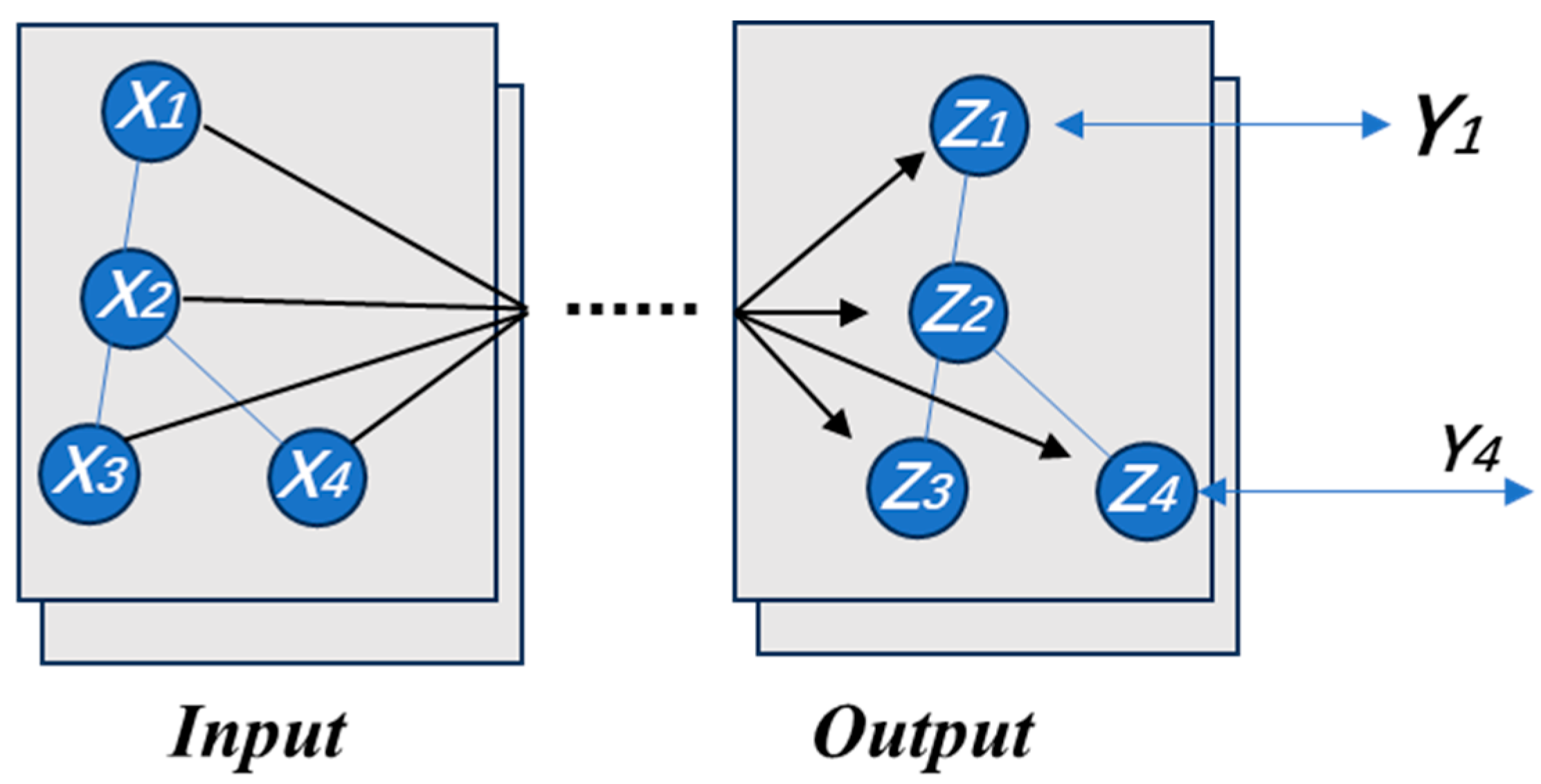

Figure 2 presents a visual description of the GCN. In this example, the input layer has four nodes, each taking an input feature vector of the same dimension; together these vectors form the initial activation. After passing through several hidden layers, the activation is converted into the values of the output-layer nodes, which are then compared with the partial labels to produce the loss on which the model is trained.

Formally, a graph $G = (V, E)$ consists of a set of vertices $V$ and a set of edges $E$, where each edge in $E$ is a pair of vertices. A walk is a sequence of nodes in which consecutive nodes are connected by an edge. A graph with $N$ vertices can be represented by an adjacency matrix $A$ of size $N \times N$, where $A_{ij} = 1$ represents an edge between vertices $v_i$ and $v_j$, and $A_{ij} = 0$ indicates no connection between them. Vertex and edge attributes are features that take a value for each node and each edge of the graph.

To perform the convolution operation in a GCN, the skeleton graph is transformed into the adjacency matrix $A$. When skeletal joints $v_i$ and $v_j$ are connected in the skeleton graph, $A_{ij}$ is set to 1; otherwise, it is set to 0. Different adjacency matrices $A$ can therefore represent different skeleton topologies through the way they aggregate information. Specifically, each skeleton frame can be converted into a graph $G = (V, E)$ that represents intra-body connections: $V$ is the joint set, representing spatial features, and $E$ is the set of edges between joints, representing structural features. Given the skeleton data $X$ and the corresponding adjacency matrix $A$, the convolution operation in a GCN can be formulated as shown in Equation (1):

$$X_{\text{out}} = \sigma\left(\tilde{D}^{-1/2}\,\tilde{A}\,\tilde{D}^{-1/2}\,X\,W\right) \qquad (1)$$

where $\tilde{A} = A + I$ is the adjacency matrix of graph $G$ with the self-connection identity matrix $I$ added, $\tilde{D}$ is the degree matrix of $\tilde{A}$ (with $\tilde{D}_{ii} = \sum_j \tilde{A}_{ij}$), $W$ is the learned weight matrix, and $\sigma$ denotes the activation function.
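The graph convolution with a self-connected, symmetrically normalized adjacency matrix, $\sigma(\tilde{D}^{-1/2}\tilde{A}\tilde{D}^{-1/2}XW)$ with $\tilde{A} = A + I$, can be illustrated with a small pure-Python sketch. It assumes a single frame, a toy three-joint chain, and ReLU as the activation; the helper names are hypothetical, and a real model would use a tensor library.

```python
import math
from typing import Callable, List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def normalized_adjacency(adj: Matrix) -> Matrix:
    """Return D~^{-1/2} (A + I) D~^{-1/2} for a symmetric 0/1 adjacency matrix A."""
    n = len(adj)
    # A~ = A + I adds a self-connection to every joint.
    a_tilde = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
               for i in range(n)]
    # D~ is the diagonal degree matrix of A~; keep only 1/sqrt(degree).
    inv_sqrt_deg = [1.0 / math.sqrt(sum(row)) for row in a_tilde]
    return [[inv_sqrt_deg[i] * a_tilde[i][j] * inv_sqrt_deg[j] for j in range(n)]
            for i in range(n)]


def relu(m: Matrix) -> Matrix:
    return [[max(0.0, v) for v in row] for row in m]


def gcn_layer(x: Matrix, adj: Matrix, w: Matrix,
              activation: Callable[[Matrix], Matrix] = relu) -> Matrix:
    """One graph convolution: sigma(D~^{-1/2} A~ D~^{-1/2} X W)."""
    return activation(matmul(matmul(normalized_adjacency(adj), x), w))


# Toy chain of three joints (0-1-2), one input channel, one output channel.
A = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
print(gcn_layer([[1.0], [1.0], [1.0]], A, [[1.0]]))
```

Each output row mixes a joint's own features with those of its neighbors, weighted by $1/\sqrt{\tilde{d}_i \tilde{d}_j}$, so that high-degree joints do not dominate the aggregation.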

3.1.2. TCN Sequence Model

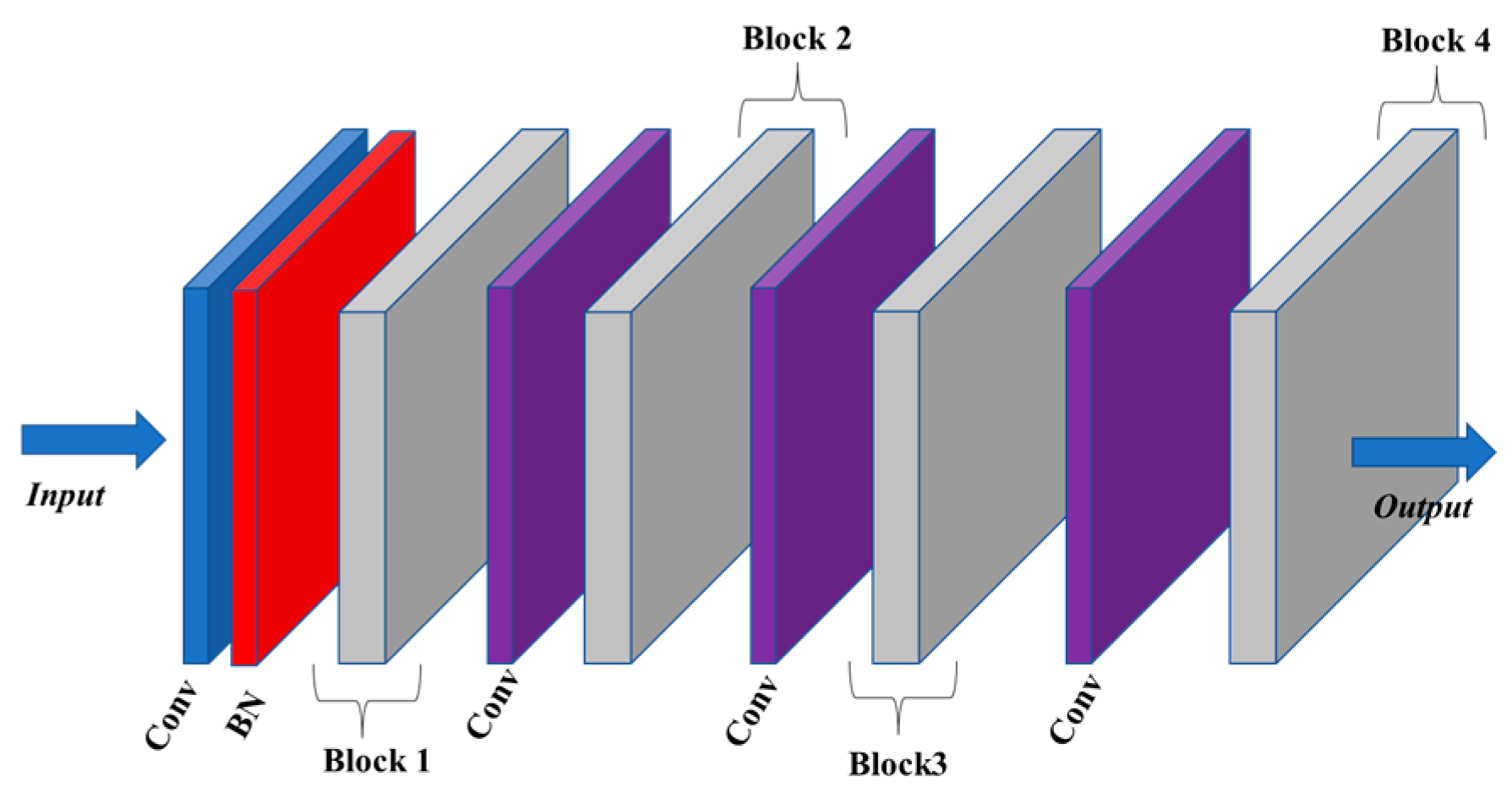

Each stream comprises four blocks connected by temporal convolution layers with kernel size 1, as shown in Figure 3. The output dimensions of blocks B1, B2, B3, and B4 are 32, 64, 128, and 256, respectively, and the blocks are connected sequentially. The input data are first normalized by a Batch Normalization (BN) layer at the start of the network. In related research, 3 is a popular choice of temporal convolution kernel size [34]; accordingly, the temporal convolution kernel sizes in our model are set to 3 and 5, giving receptive fields of 7 and 13, respectively. The TCN is composed exclusively of convolutional structures and has achieved excellent results in sequence modeling tasks without a recurrent structure. It can be regarded as a fusion of one-dimensional convolution and causal convolution, in which the output feature of layer $l$ is computed from its input (of a given input size) using learnable parameter matrices and bias vectors.
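The sketch below shows a generic single-channel causal 1D convolution and a receptive-field helper. It illustrates the principle rather than reproducing the authors' TCN layer, and the names `causal_conv1d` and `receptive_field` are hypothetical. The receptive fields of 7 and 13 quoted above are consistent with three stacked, undilated, stride-1 layers, since $1 + L(k-1)$ gives 7 for $k = 3$ and 13 for $k = 5$ when $L = 3$; the layer count is an assumption, because the text states only the resulting values.

```python
from typing import List, Sequence


def causal_conv1d(x: Sequence[float], kernel: Sequence[float],
                  bias: float = 0.0) -> List[float]:
    """Single-channel causal convolution: y[t] = sum_i kernel[i] * x[t - i] + bias.

    Positions before the start of the sequence are treated as zeros
    (left padding), so y[t] never depends on future inputs x[t+1], x[t+2], ...
    """
    out = []
    for t in range(len(x)):
        acc = bias
        for i, k in enumerate(kernel):
            if t - i >= 0:
                acc += k * x[t - i]
        out.append(acc)
    return out


def receptive_field(kernel_size: int, num_layers: int, dilation: int = 1) -> int:
    """Receptive field of num_layers stacked stride-1 convolutions of equal size."""
    return 1 + num_layers * dilation * (kernel_size - 1)


print(causal_conv1d([1.0, 2.0, 3.0], [1.0, 1.0]))
print(receptive_field(3, 3), receptive_field(5, 3))
```

Changing a later input value leaves all earlier outputs unchanged, which is the defining property of causal convolution.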

The “bottleneck” block structure was introduced in [43]. It inserts one convolution layer before and one after a standard convolution layer: the first reduces the number of feature channels by a reduction rate $r$, and the last restores it. Because the standard convolution then operates on fewer channels, this arrangement substantially reduces the overall number of network parameters. In our model, we replace the conventional TCN block with a bottleneck structure to speed up model training and inference.
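The parameter saving can be checked with simple arithmetic. This sketch assumes that the inserted layers are pointwise (kernel size 1) convolutions, as in the common ResNet-style bottleneck, ignores biases and BN parameters, and uses 256 channels (the output width of B4) with a hypothetical reduction rate $r = 4$; the function names are illustrative only.

```python
def temporal_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of one temporal convolution (biases ignored)."""
    return c_in * c_out * k


def bottleneck_params(channels: int, k: int, r: int) -> int:
    """1x1 reduce -> k-sized temporal conv -> 1x1 expand, with reduction rate r."""
    if channels % r != 0:
        raise ValueError("channels must be divisible by r")
    mid = channels // r
    return (temporal_conv_params(channels, mid, 1)      # channel reduction
            + temporal_conv_params(mid, mid, k)         # temporal conv on fewer channels
            + temporal_conv_params(mid, channels, 1))   # channel expansion


standard = temporal_conv_params(256, 256, 3)
bottleneck = bottleneck_params(256, 3, 4)
print(standard, bottleneck)  # 196608 45056
```

Under these assumptions the bottleneck needs roughly 23% of the weights of a plain kernel-size-3 temporal convolution, and the saving grows with $r$.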

3.1.3. Branch-Wise Attention

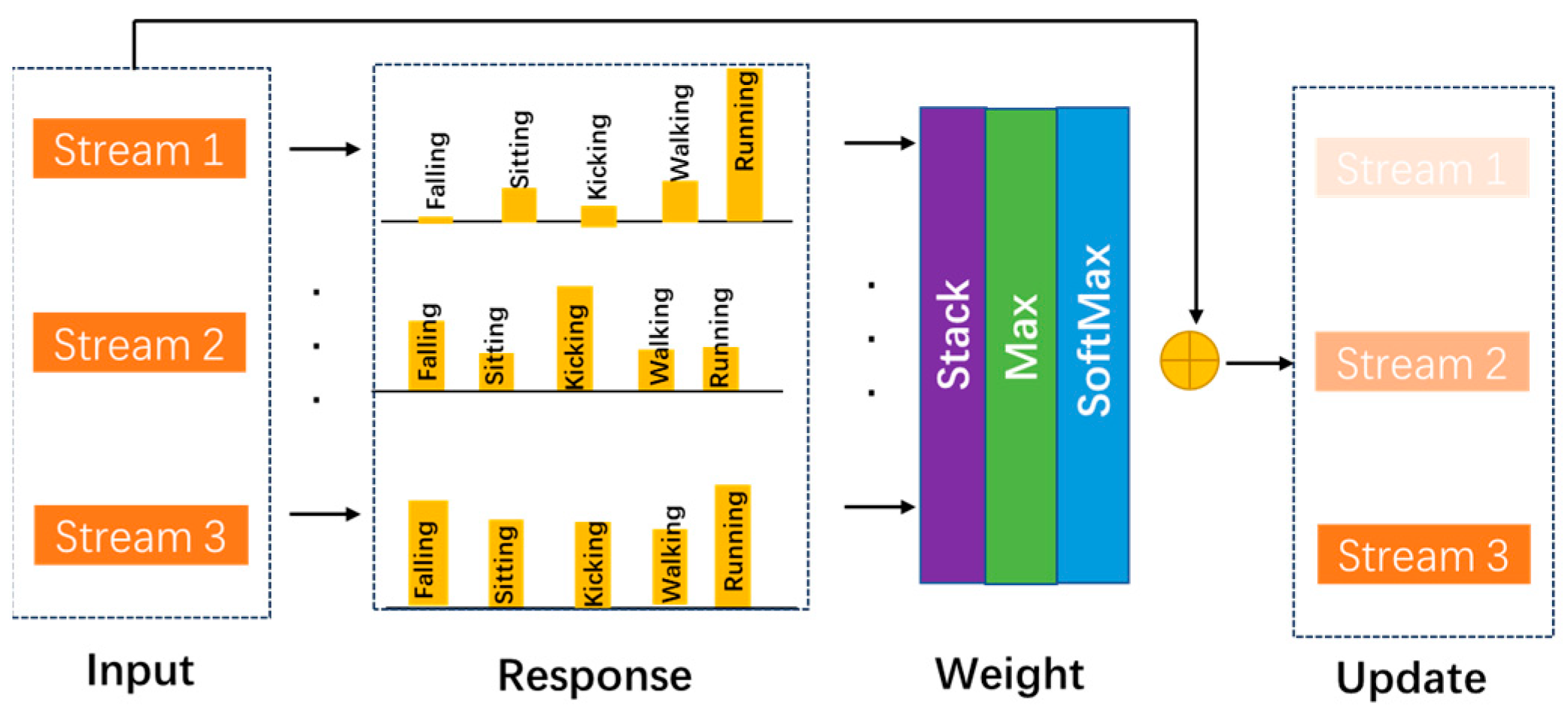

In this work, we propose a two-step fusion strategy that integrates an attention block, with the aim of discovering the importance of different streams and branches. Inspired by split attention in the ResNeSt model [44], the attention block is designed as shown in Figure 4. Taking one branch as an example: first, the features of the different streams are taken as input; second, each passes through a fully connected (FC) layer followed by a Batch Norm layer and a ReLU function; third, the features of all streams are stacked; next, a max function is applied to calculate the attention matrices, and a SoftMax function determines the most important stream; finally, the features of all streams are concatenated, each with its attention weight, into an integral skeleton representation. In the formulation of the stream attention block, the two feature terms denote the input with and without the attention block, the ReLU activation and the max function are applied as described above, and $W$ denotes the learnable parameters of the FC layer.
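One possible reading of these steps is sketched below for a single sample. It is an illustration under explicit assumptions, not the authors' block: Batch Norm is omitted, the max is taken over each stream's hidden units to give one score per stream, SoftMax is applied across streams, and the names `stream_attention` and `fc_weights` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def stream_attention(streams: Sequence[Sequence[float]],
                     fc_weights: Sequence[Sequence[Sequence[float]]]
                     ) -> Tuple[List[float], List[float]]:
    """Weight each stream by softmax(max(ReLU(W_s x_s))) and concatenate (BN omitted)."""
    if len(streams) != len(fc_weights):
        raise ValueError("need one FC weight matrix per stream")
    scores = []
    for x, w in zip(streams, fc_weights):
        # FC + ReLU on this stream's feature vector.
        hidden = [max(0.0, sum(wij * xj for wij, xj in zip(row, x))) for row in w]
        # Max over the hidden units gives one score per stream.
        scores.append(max(hidden))
    weights = softmax(scores)
    # Concatenate all streams, each scaled by its attention weight.
    fused = [a * v for a, x in zip(weights, streams) for v in x]
    return weights, fused


identity = [[1.0, 0.0], [0.0, 1.0]]
print(stream_attention([[1.0, 0.0], [0.0, 1.0]], [identity, identity]))
```

A stream whose projected features respond more strongly receives a larger weight, while the concatenation keeps every stream in the final representation.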

3.2. Multi-Scale Input Features

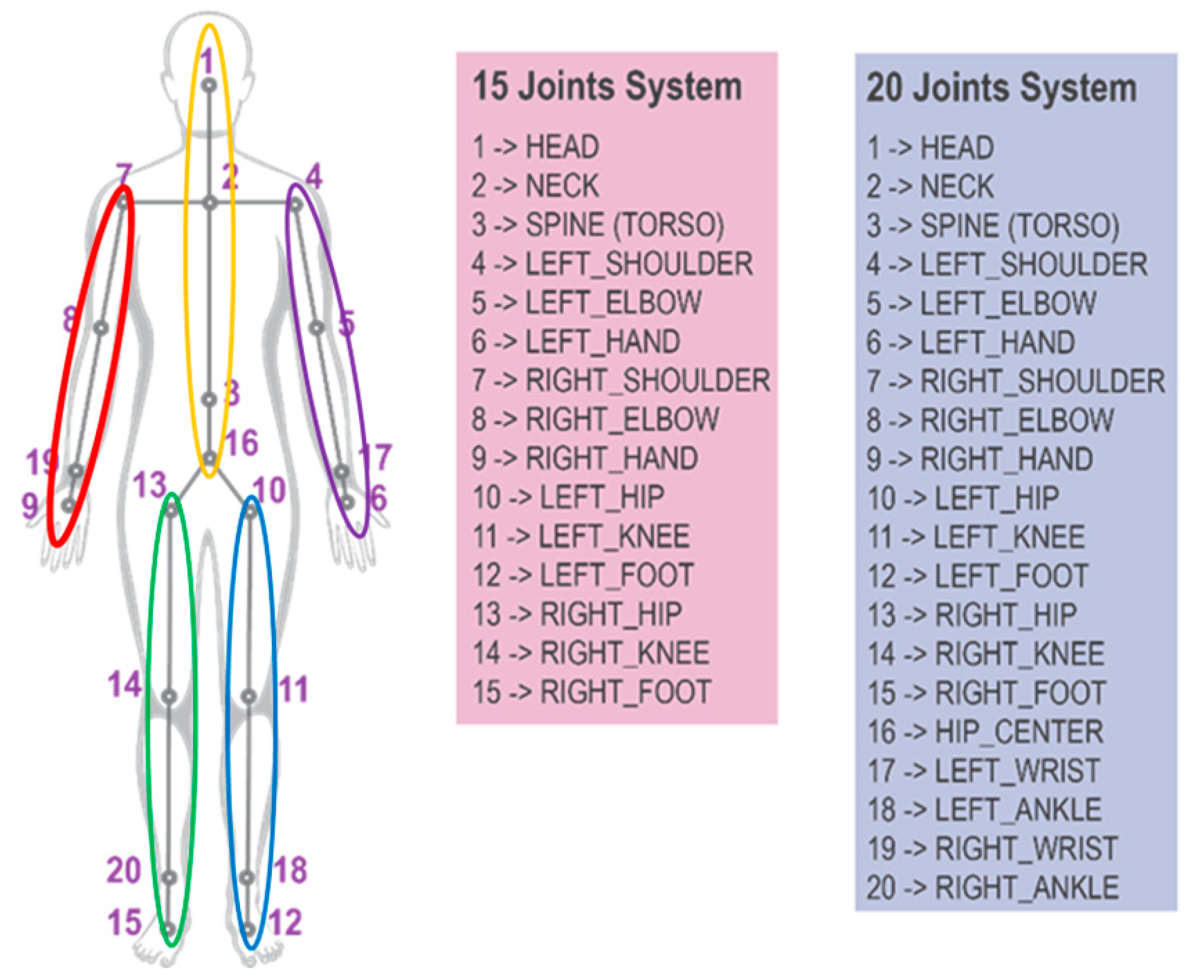

In this research, we used five different input streams. For the body-level stream, we used all nodes of the human skeleton to represent a worker’s actions. For the part-level and joint-level streams, we split the human body into five parts and abstracted the relationships between important joints, respectively. Lastly, two modes of motion data (velocity and acceleration) were employed as inputs to cover a broader range of the motion features exhibited by workers.

3.2.1. Part-Level Input

In this section, we divided the whole human body into five main parts according to its physical structure, in order to mine more representative spatial–temporal features, as shown in Figure 5. Instead of feeding the whole skeleton into the deep learning model, we established five body parts: left arm (LA), right arm (RA), left leg (LL), right leg (RL), and trunk.
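The part-level split can be sketched as a grouping of joint indices. The index assignment below is hypothetical: the actual joint ordering of a 20-joint skeleton depends on the capture device, and the mapping `BODY_PARTS` and function `split_into_parts` are illustrative names only.

```python
from typing import Dict, List, Sequence

# Hypothetical joint indices for a 20-joint skeleton; the real ordering
# depends on the capture device and is an assumption here.
BODY_PARTS: Dict[str, List[int]] = {
    "trunk": [0, 1, 2, 3],
    "LA": [4, 5, 6, 7],
    "RA": [8, 9, 10, 11],
    "LL": [12, 13, 14, 15],
    "RL": [16, 17, 18, 19],
}

Point = Sequence[float]


def split_into_parts(frame: Sequence[Point],
                     parts: Dict[str, List[int]] = BODY_PARTS
                     ) -> Dict[str, List[Point]]:
    """Group the joints of one skeleton frame into body-part sub-skeletons."""
    return {name: [frame[j] for j in idx] for name, idx in parts.items()}


frame = [(float(i), 0.0, 0.0) for i in range(20)]
print({name: len(joints) for name, joints in split_into_parts(frame).items()})
```

Each part can then be given its own small graph, so the part-level stream sees relations inside a limb without the rest of the body.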

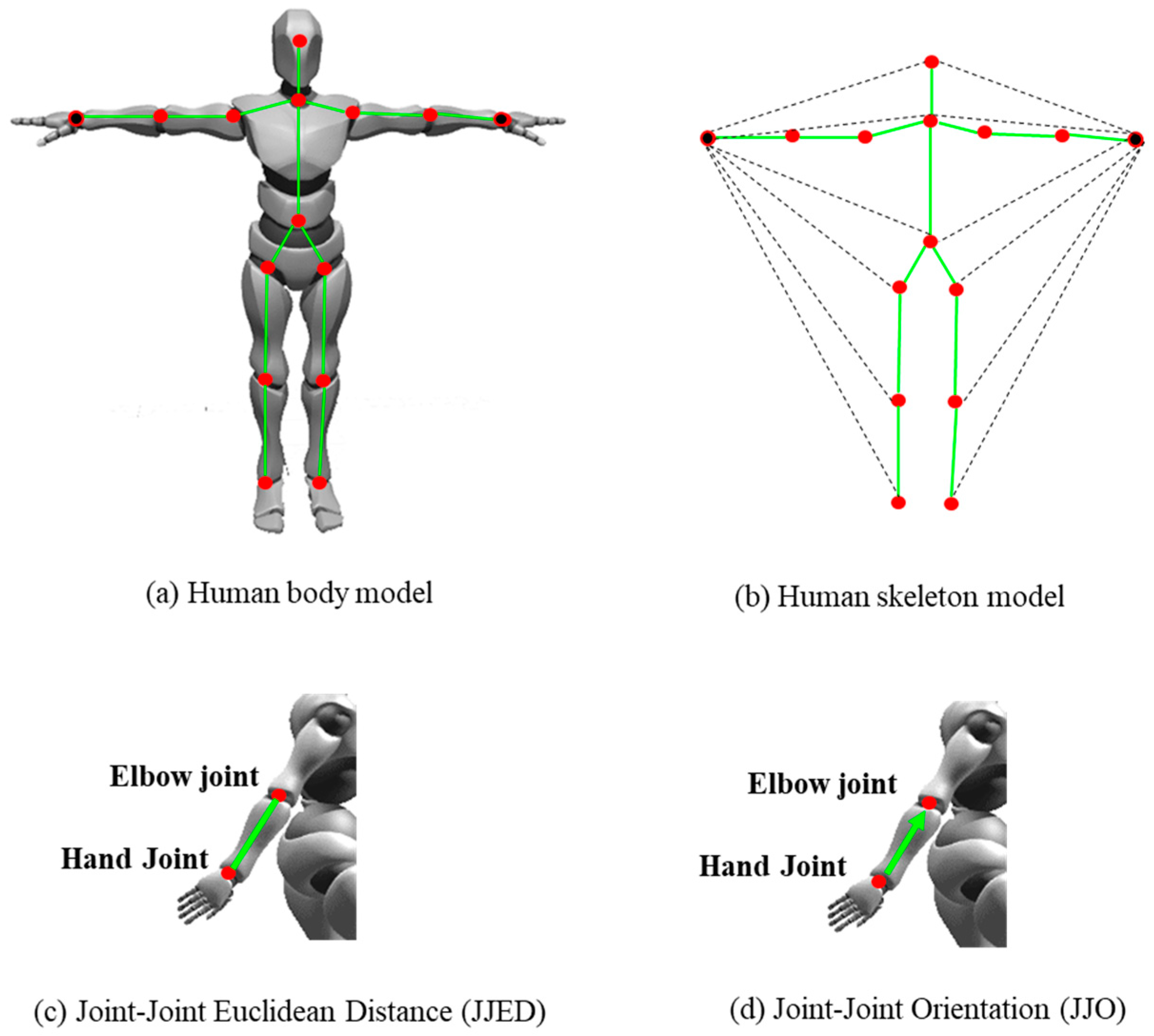

3.2.2. Joint-Level Input

Construction tasks rely heavily on the manual actions of workers, such as “installation” or “tying rebar”. The relationship between the two hands, and between the hands and other joints, is therefore highly important for distinguishing construction worker actions. Hence, we chose two geometric feature representations, Joint–Joint Euclidean Distance (JJED) and Joint–Joint Orientation (JJO), as the input of this stream. JJED represents the Euclidean distance between each joint of a 20-joint model and the two hands, reflecting the relative relationship between the hands and the joints connected to them directly or indirectly. JJO represents the x, y, and z orientations from each joint to the two hands within the 20-joint model, with values calculated as unit-length vectors, as shown in Figure 6.
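The two joint-level features can be computed per frame as in the sketch below. The hand indices are hypothetical and depend on the skeleton layout; the direction convention (from each joint toward the hand) follows the description of JJO, and returning a zero vector for a hand relative to itself is an assumption made to avoid division by zero.

```python
import math
from typing import List, Sequence, Tuple

Point = Sequence[float]


def jjed(frame: Sequence[Point], hands: Tuple[int, int]) -> List[List[float]]:
    """Euclidean distance from every joint to each hand: one row per hand."""
    return [[math.dist(frame[h], p) for p in frame] for h in hands]


def jjo(frame: Sequence[Point],
        hands: Tuple[int, int]) -> List[List[Tuple[float, ...]]]:
    """Unit (x, y, z) direction from every joint to each hand (zero if coincident)."""
    rows = []
    for h in hands:
        row = []
        for p in frame:
            d = [frame[h][c] - p[c] for c in range(3)]
            norm = math.sqrt(sum(v * v for v in d))
            row.append(tuple(v / norm for v in d) if norm > 0 else (0.0, 0.0, 0.0))
        rows.append(row)
    return rows


# Toy 3-joint frame with joints 0 and 2 treated as the (hypothetical) hands.
frame = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (1.0, 0.0, 0.0)]
print(jjed(frame, (0, 2)))
print(jjo(frame, (0, 2))[0])
```

For a 20-joint model with two hands, this yields 40 distances and 40 unit vectors per frame, which together form the joint-level input.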

3.2.3. Motion Data

Specifically, the raw skeleton at frame $t$ is represented as $S_t = \{p_t^1, p_t^2, \ldots, p_t^N\}$, where $N$ is the number of joints and $p_t^n \in \mathbb{R}^3$ denotes the 3D coordinates of the $n$-th joint at time $t$. The joint accelerations at time $t$ can then be calculated as $\ddot{S}_t = \dot{S}_{t+1} - \dot{S}_t$, where $\dot{S}_t = S_{t+1} - S_t$. $\dot{S}_t$ and $\ddot{S}_t$ can be considered the first-order and second-order derivatives of the joint coordinates, respectively. Temporal interpolation is applied to the velocity and acceleration information to obtain a consistent sequence length, as shown in Figure 7.
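The motion streams can be sketched with first- and second-order differences of joint coordinates followed by resampling to the original length. Forward differences and linear interpolation are assumptions here (the text does not specify the interpolation method), and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Frame = List[List[float]]  # N joints x 3 coordinates


def diff(seq: Sequence[Frame]) -> List[Frame]:
    """Forward difference between consecutive frames: out[t] = seq[t+1] - seq[t]."""
    return [[[b - a for a, b in zip(pa, pb)] for pa, pb in zip(f0, f1)]
            for f0, f1 in zip(seq[:-1], seq[1:])]


def resample(seq: Sequence[Frame], length: int) -> List[Frame]:
    """Linearly interpolate a frame sequence to `length` frames."""
    if length < 1 or not seq:
        raise ValueError("need a non-empty sequence and a positive length")
    if length == 1:
        return [[list(j) for j in seq[0]]]
    out = []
    for i in range(length):
        pos = i * (len(seq) - 1) / (length - 1)
        lo = int(pos)
        hi = min(lo + 1, len(seq) - 1)
        w = pos - lo
        out.append([[(1 - w) * a + w * b for a, b in zip(ja, jb)]
                    for ja, jb in zip(seq[lo], seq[hi])])
    return out


def motion_features(skeleton: Sequence[Frame]) -> Tuple[List[Frame], List[Frame]]:
    """Velocity and acceleration streams, both resampled to the input length T."""
    t = len(skeleton)
    if t < 3:
        raise ValueError("need at least 3 frames to compute acceleration")
    velocity = diff(skeleton)        # T - 1 frames
    acceleration = diff(velocity)    # T - 2 frames
    return resample(velocity, t), resample(acceleration, t)


# One joint moving at constant velocity (1, 2, 0) per frame over 5 frames.
skel = [[[float(t), 2.0 * t, 0.0]] for t in range(5)]
vel, acc = motion_features(skel)
print(vel[0], acc[0])
```

Resampling both streams back to $T$ frames lets them share the temporal network structure of the spatial streams.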

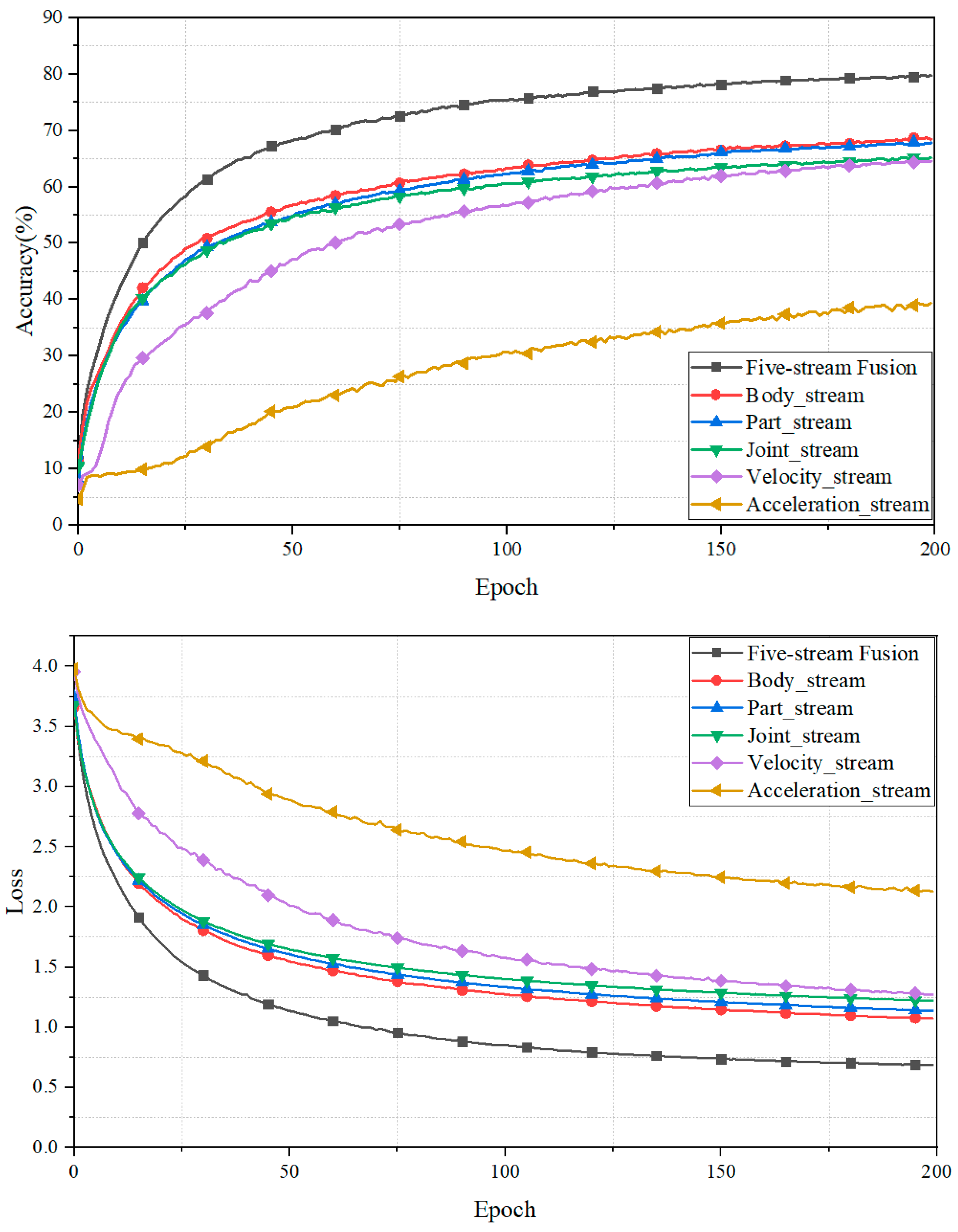

5. Conclusions

In this study, we have presented a novel model with two key innovations. Firstly, we introduced a multi-scale graph strategy to capture local and contour features, effectively utilizing three levels of spatial features. Additionally, we incorporated two levels of motion data to comprehensively capture motion dynamics. Next, the proposed fused multi-stream architecture integrates five spatial–temporal feature sequences deriving from raw skeleton data, including body-level, part-level, joint-level, velocity, and acceleration features. Our experiments have demonstrated the effectiveness of employing additional input features, leading to notable improvements in recognition accuracy. We observed that intra-frame features (body-level input + part-level input + joint-level input) outperformed inter-frame features (velocity and acceleration) by about 10.41%. Moreover, both the body-level stream and velocity stream yielded superior results compared to the other individual features. The five-stream direct fusion strategy achieved the highest accuracy, at about 79.75%. Finally, we also added three attention modules to further improve the accuracy of the whole model, and this enabled our model to extract deep and rich features, significantly enhancing its action recognition performance.

In summary, the recognition of construction workers’ activities is of paramount importance in optimizing project task allocation and safeguarding the long-term health of the workforce. Investigating the impact of deep learning frameworks on skeleton-based automated recognition techniques is a fundamental step in ensuring the safety and productivity of human workers. With the proliferation of tools for extracting and processing human skeleton information in the construction sector, the potential and desirability of automated recognition techniques on future construction sites are evident. This research underscores the effectiveness of the multi-scale graph strategy and the fusion of diverse spatial–temporal features in enhancing action recognition models. These advancements can not only refine action recognition but also contribute substantially to workplace safety and efficiency, particularly within the construction industry.