Application of Graph Convolutional Neural Networks Combined with Single-Model Decision-Making Fusion Neural Networks in Structural Damage Recognition

Abstract

1. Introduction

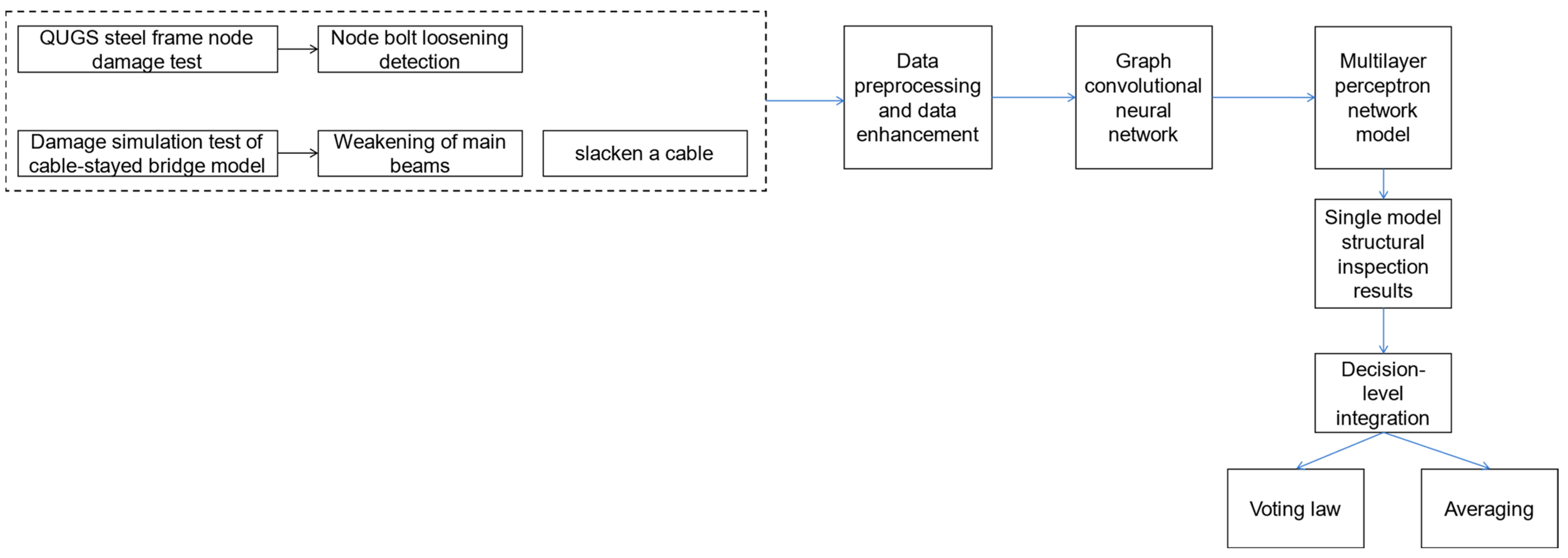

- A graph neural network is introduced, and the way the positional relationships between sensors are fed into the network structure is described. The GCN model is verified to achieve higher recognition accuracy than the 1D-CNN model because it can mine the spatial structure of the sensor layout;

- Building on a high-performance deep learning model, this paper incorporates data fusion at the model's decision layer to further improve accuracy and robustness;

- The method is validated experimentally on two structures, a frame structure model and a self-designed cable-stayed bridge test model. The results show that the method generalizes to some extent, mines the spatial characteristics of the structure, identifies structural damage correctly, and improves on the performance of a single GCN.
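
The first contribution, feeding sensor positions into the network, can be illustrated with a single graph convolution in the form given by Kipf and Welling, H' = ReLU(D^(-1/2) (A + I) D^(-1/2) H W). The following is a minimal NumPy sketch under stated assumptions: the 4-sensor chain, the feature sizes, and the function names `normalized_adjacency` and `gcn_layer` are hypothetical and are not taken from the paper's implementation.

```python
import numpy as np


def normalized_adjacency(adj: np.ndarray) -> np.ndarray:
    """Return D^(-1/2) (A + I) D^(-1/2) for a symmetric adjacency matrix."""
    a_hat = adj + np.eye(adj.shape[0])   # add self-loops
    deg = a_hat.sum(axis=1)              # degree >= 1 because of the self-loops
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def gcn_layer(a_norm: np.ndarray, features: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """One graph convolution: ReLU(A_norm @ H @ W)."""
    return np.maximum(a_norm @ features @ weights, 0.0)


# Hypothetical layout: 4 sensors in a chain, sensor i adjacent to sensor i + 1.
adj = np.array(
    [[0, 1, 0, 0],
     [1, 0, 1, 0],
     [0, 1, 0, 1],
     [0, 0, 1, 0]],
    dtype=float,
)
a_norm = normalized_adjacency(adj)

rng = np.random.default_rng(0)
features = rng.standard_normal((4, 8))   # 4 sensors, 8 signal features each (assumed)
weights = rng.standard_normal((8, 3))    # projection to 3 hidden channels (assumed)
hidden = gcn_layer(a_norm, features, weights)
print(hidden.shape)
```

Each row of `hidden` mixes a sensor's own features with those of its neighbours, which is how the adjacency matrix carries the sensor layout into the model.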

2. Materials and Methods

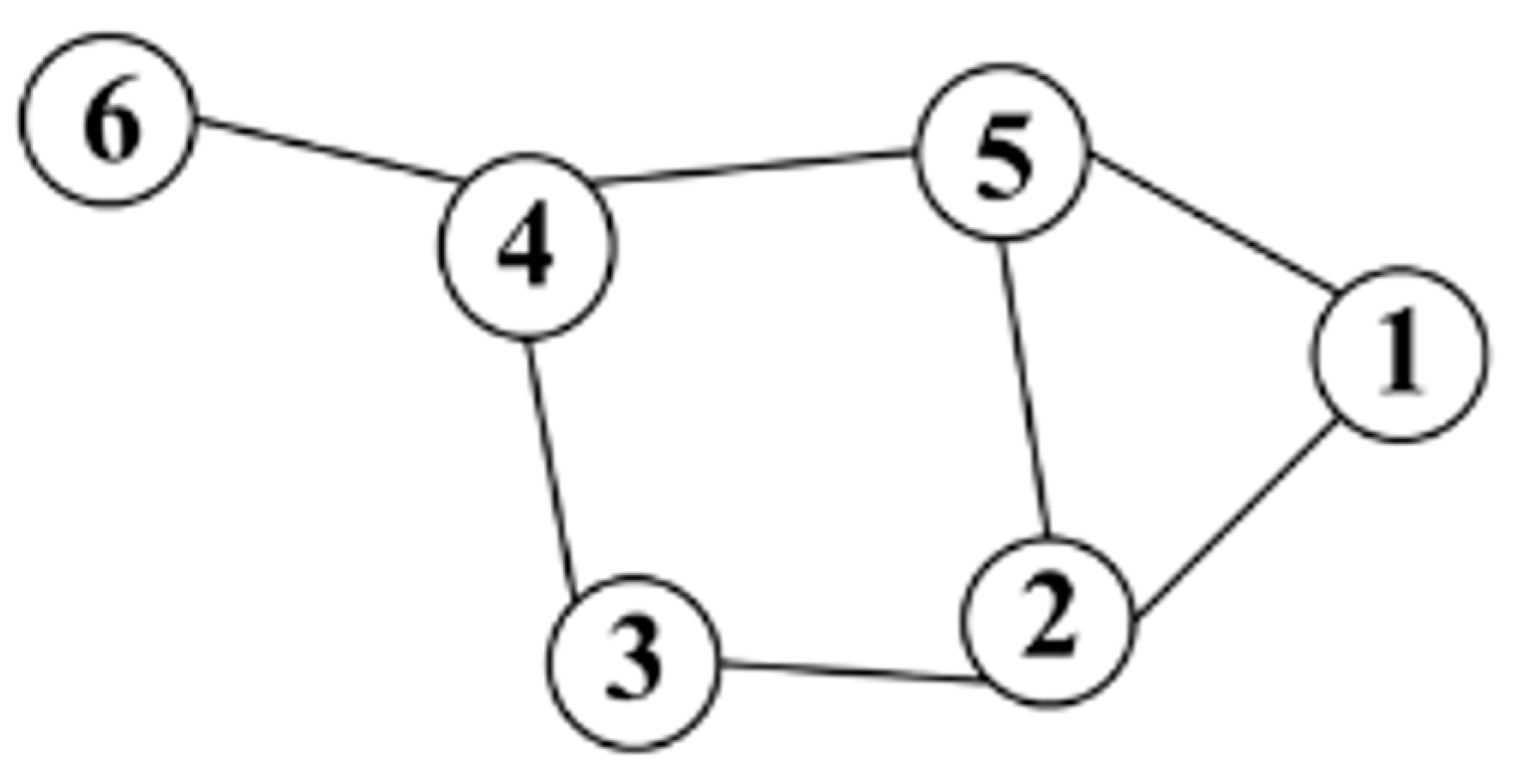

2.1. GCN Module

2.2. MLP Module

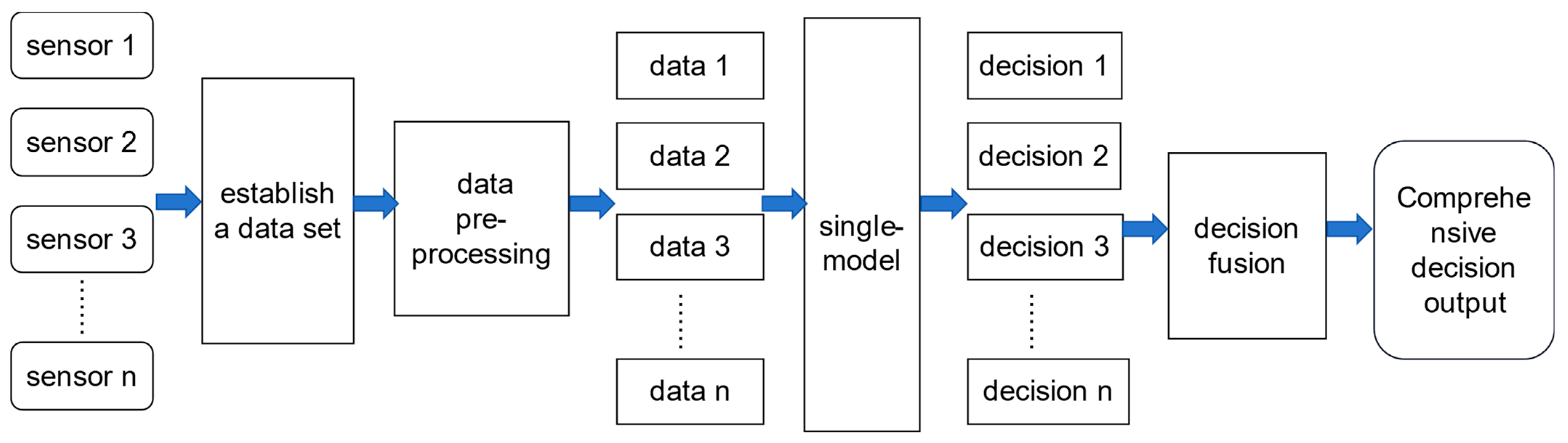

2.3. Decision Level Data Fusion

3. Introduction to the Test

3.1. Verification of Nodal Damage Identification in Steel Frame Structures

3.1.1. Qatar University Grandstand Simulator Basics

3.1.2. Model Parameters and Recognition Effectiveness

3.1.3. Noise Resistance

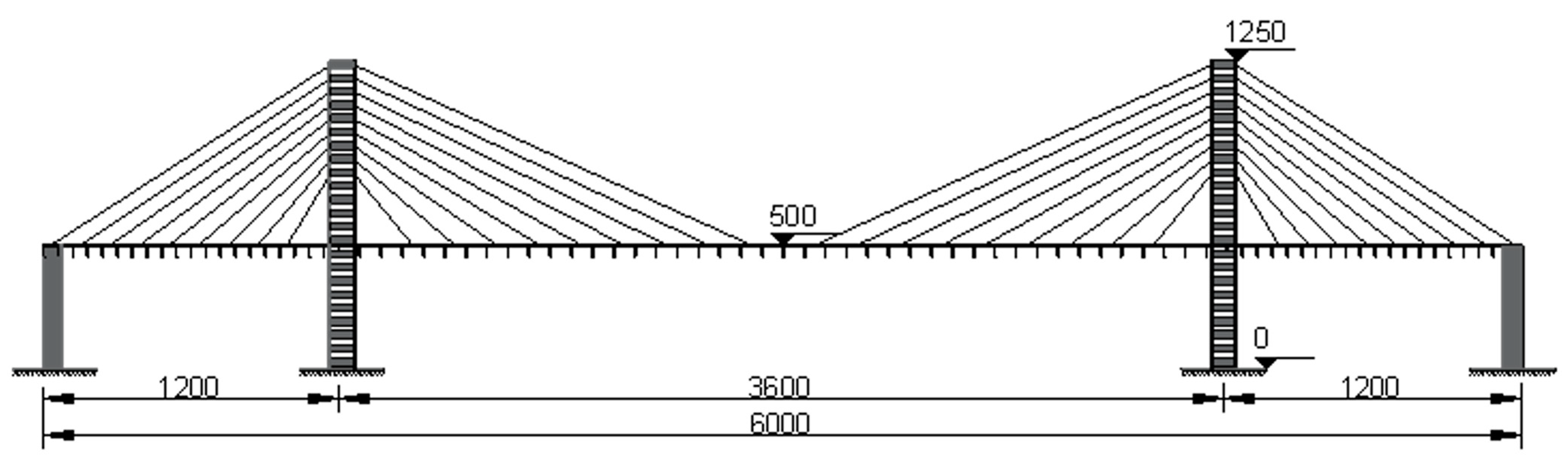

3.2. Introduction to the Laboratory Cable-Stayed Bridge Test Model

- (1) Tension sensor measurement point arrangement: The number of tension sensors is limited, so a sensor cannot be installed on every stay cable. To regulate the cable forces and control the overall state of the cable-stayed bridge, the tension sensors are embedded at sparse intervals in the stay cables of the two sectors on the west side. In addition, tension sensors are arranged on the east-side stay cables so that the west-side readings can be checked against the east-side readings. This numerical calibration makes it possible, to a great extent, to monitor the overall state of the bridge cable forces. Figure 10 shows the arrangement of tension sensors on the sector stay cables on both sides of the center axis of the main beam, where a total of 38 tension sensors are arranged.

- (2) Arrangement of micrometer measurement points: The micrometers are mainly used to monitor the deflection of the key cross-sections of the main girder. The measurement points, selected according to the overall layout parameters of the bridge model and finite element analysis, are shown in Figure 11. The micrometer readings at the key cross-sections are used together with the horizontal filament positioning method to control the main girder to zero displacement when the cable-stayed bridge is in its reasonable completed state.

- (3) Acceleration sensor measurement point arrangement: The placement of the acceleration sensors must take into account how sensitive each measurement point is to changes in the structural modes. Following experience from other structural analyses, the sensors are placed as close as possible to the positions of peak vibration. A finite element model of the cable-stayed bridge is established, modal analysis is performed, and the first ten vertical mode shapes are extracted; the sensors are then placed at the peak positions of these mode shapes. A total of 14 acceleration sensors are installed at measurement points on the main girder units, as shown in Figure 12.
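
The idea of placing accelerometers where the extracted mode shapes peak can be sketched as a simple ranking of nodes by modal amplitude. This is only an illustration under assumptions: the scoring rule (summed squared amplitude after scaling each mode to unit peak), the function name `select_sensor_nodes`, and the analytic beam mode shapes used as a stand-in for the finite element result are all hypothetical, and the paper does not state the exact selection rule it used.

```python
import numpy as np


def select_sensor_nodes(mode_shapes: np.ndarray, n_sensors: int) -> np.ndarray:
    """Return the indices of the n_sensors nodes with the largest modal amplitude score.

    mode_shapes has shape (n_nodes, n_modes).
    """
    amplitude = np.abs(mode_shapes)
    # Scale each mode to unit peak so that no single mode dominates the score.
    amplitude = amplitude / amplitude.max(axis=0, keepdims=True)
    # Score each node by its summed squared amplitude over all modes.
    score = (amplitude ** 2).sum(axis=1)
    best = np.argsort(score)[::-1][:n_sensors]
    return np.sort(best)


# Hypothetical stand-in for the FE output: vertical mode shapes sin(k*pi*x)
# of a simply supported beam, sampled at 11 equally spaced nodes.
x = np.linspace(0.0, 1.0, 11)
mode_shapes = np.stack([np.sin(k * np.pi * x) for k in (1, 2, 3)], axis=1)

chosen = select_sensor_nodes(mode_shapes, n_sensors=4)
print(chosen)
```

In the bridge model the same ranking would be applied to the first ten vertical mode shapes with `n_sensors=14`; nodes at supports, where every mode is close to zero, are never selected.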

3.3. Design of Main Beam Damage Conditions

Analysis and Discussion of Main Beam Damage Identification Results

3.4. Design of Damage Conditions of the Cable

Analysis and Discussion of Cable Damage Identification Results

4. Conclusions and Outlook

- The frame model case demonstrates that the GCN model has an advantage and that its recognition accuracy improves further when it is combined with decision-level data fusion. The GCN reaches 93.03% accuracy, which exceeds the 1D-CNN model with data compression, and after combining it with the S_DFNN model the recognition accuracy reaches 99.57%, a substantial improvement;

- Main girder damage identification and stay cable relaxation damage identification on the cable-stayed bridge model show that the GCN model is fairly sensitive to the location of structural changes and can mine the spatial characteristics of the sensor array, but its identification accuracy leaves room for improvement. After integrating the S_DFNN model, the accuracy of main girder damage recognition for the cable-stayed bridge improved by 11.44%;

- For stay cable damage identification, combining decision-level data fusion with a deep learning model improves on the accuracy of the single model: the identification accuracy rises by 21.81% to 99.69%, which achieves the goal of accurately determining damage to the stay cables.
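
Decision-level fusion, which the conclusions above credit for the accuracy gains, can be illustrated with a generic soft-voting scheme: several decisions from the same model are combined by averaging their class probabilities. This sketch is a stand-in, not the S_DFNN described in the paper, whose fusion step is not reproduced in this section; the logits, the number of segments, and the function name `fuse_decisions` are hypothetical.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable softmax along the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def fuse_decisions(logits_per_segment: np.ndarray) -> tuple[np.ndarray, int]:
    """Average class probabilities over segments and return (fused_probs, label)."""
    probs = softmax(logits_per_segment)
    fused = probs.mean(axis=0)
    return fused, int(np.argmax(fused))


# Hypothetical logits from one model: 5 signal segments, 4 damage classes.
logits = np.array([
    [2.0, 0.5, 0.1, 0.0],
    [0.2, 1.5, 0.3, 0.1],   # this segment alone would be assigned to class 1
    [1.8, 0.4, 0.2, 0.1],
    [2.2, 0.3, 0.0, 0.2],
    [1.1, 1.0, 0.2, 0.1],
])

single = softmax(logits).argmax(axis=1)   # per-segment decisions
fused, label = fuse_decisions(logits)     # fused decision for the whole record
print(single, label)
```

The second segment is misclassified on its own, but the fused decision follows the majority of the evidence, which is the general mechanism by which decision-level fusion can raise accuracy above that of a single prediction.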

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Teng, S.; Chen, G.; Gong, P.; Liu, G.; Cui, F. Structural damage detection using convolutional neural networks combining strain energy and dynamic response. Meccanica 2020, 55, 945–959.

- Azimi, M.; Eslamlou, A.D.; Pekcan, G. Data-Driven Structural Health Monitoring and Damage Detection through Deep Learning: A State-of-the-Art Review. Sensors 2020, 20, 2778.

- Rizzo, P.; Enshaeian, A. Challenges in Bridge Health Monitoring: A Review. Sensors 2021, 21, 4336.

- Chen, Z.Y.; Wang, Y.Z.; Wu, J.; Deng, C. Sensor data-driven structural damage detection based on deep convolutional neural networks and continuous wavelet transform. Appl. Intell. 2021, 51, 5598–5609.

- Wang, X.W.; Zhang, X.A.; Shahzad, M.M. A novel structural damage identification scheme based on deep learning framework. Structures 2021, 29, 1537–1549.

- Dong, S.; Wang, P.; Abbas, K. A survey on deep learning and its applications. Comput. Sci. Rev. 2021, 40, 100379.

- Liu, Z. Health Monitoring of Railroad Structure Based on Graph Neural Network; Nanchang University: Nanchang, China, 2022.

- Dang, V.H.; Vu, T.C.; Nguyen, B.D.; Nguyen, Q.H.; Nguyen, T.D. Structural damage detection framework based on graph convolutional network directly using vibration data. Structures 2022, 38, 40–51.

- Grande, E.; Imbimbo, M. A multi-stage data-fusion procedure for damage detection of linear systems based on modal strain energy. J. Civ. Struct. Health Monit. 2014, 4, 107–118.

- Zhou, Q.; Zhou, H.; Zhou, Q.; Yang, F.; Luo, L.; Li, T. Structural damage detection based on posteriori probability support vector machine and Dempster-Shafer evidence theory. Appl. Soft Comput. 2015, 36, 368–374.

- Guo, T.; Wu, L.; Wang, C.; Xu, Z. Damage detection in a novel deep-learning framework: A robust method for feature extraction. Struct. Health Monit. 2020, 19, 424–442.

- Kipf, T.N.; Welling, M. Semi-Supervised Classification with Graph Convolutional Networks. In Proceedings of the 5th International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

- Wei, X.R.; Zhou, H.Z. Output-only modal parameter identification of civil engineering structures. Struct. Eng. Mech. 2004, 17, 115949.

- Zhang, Y.; Hou, Y.; OuYang, K.; Zhou, S. Multi-scale signed recurrence plot based time series classification using inception architectural networks. Pattern Recognit. 2022, 123, 108385.

- Jeong, S.; Kim, E.J.; Park, J.W.; Sim, S.H. Data fusion-based damage identification for a monopile offshore wind turbine structure using wireless smart sensors. Ocean Eng. 2020, 195, 106728.

- Zhang, J.; Zhang, J.; Teng, S.; Chen, G.; Teng, Z. Structural Damage Detection Based on Vibration Signal Fusion and Deep Learning. J. Vib. Eng. Technol. 2022, 10, 1205–1220.

- He, F.; Zhou, J.; Feng, Z.; Liu, G.; Yang, Y. A hybrid short-term load forecasting model based on variational mode decomposition and long short-term memory networks considering relevant factors with Bayesian optimization algorithm. Appl. Energy 2019, 237, 103–116.

- Liu, T.; Xu, H.; Ragulskis, M.; Cao, M.; Ostachowicz, W. A Data-Driven Damage Identification Framework Based on Transmissibility Function Datasets and One-Dimensional Convolutional Neural Networks: Verification on a Structural Health Monitoring Benchmark Structure. Sensors 2020, 20, 1059.

- Meisam, G.; Saeed, R.S.; Zubaidah, I.; Khaled, G.; Páraic, C. State-of-the-art review on advancements of data mining in structural health monitoring. Measurement 2022, 193, 110939.

- Flah, M.; Ragab, M.; Lazhari, M.; Nehdi, M.L. Localization and classification of structural damage using deep learning single-channel signal-based measurement. Autom. Constr. 2022, 139, 104271.

- Azimi, M.; Pekcan, G. Structural health monitoring using extremely compressed data through deep learning. Comput.-Aided Civ. Infrastruct. Eng. 2020, 35, 597–614.

- Truong, T.T.; Lee, J.; Nguyen-Thoi, T. An effective framework for real-time structural damage detection using one-dimensional convolutional gated recurrent unit neural network and high performance computing. Ocean Eng. 2022, 253, 111202.

| Parameter | Specific Values |
|---|---|
| First GCN layer | 512 |
| Second GCN layer | 512 |
| Third GCN layer | 512 |
| Dropout value | 0.3 |
| Training Optimizer | Adam |

| Source | Model | | Damage location | Accuracy |
|---|---|---|---|---|
| Flah et al. [20] | 1D-CNN | 30 | All nodes | 86% |
| Azimi et al. [21] | Compressed data + 1D-CNN | 30 | All nodes | 91.9% |
| Truong et al. [22] | 1D-CNN + GRU | 1 | One joint | 91.31% |
| Contrast model | GCN | 30 | All nodes | 93.03% |
| Contrast model | LSTM | 12 | All nodes | 93.37% |
| Proposed model | S_DFNN (GCN) | 30 | All nodes | 99.57% |

| SNR (dB) | LSTM | GCN | S_DFNN (GCN) |
|---|---|---|---|
| 80 | 0.93193 | 0.93095 | 0.99899 |
| 60 | 0.93151 | 0.93128 | 0.99899 |
| 40 | 0.93036 | 0.92960 | 0.99899 |
| 20 | 0.67137 | 0.82191 | 0.97757 |
| 15 | 0.31664 | 0.56939 | 0.80267 |
| 10 | 0.10607 | 0.24530 | 0.38684 |
| 5 | 0.04881 | 0.09224 | 0.14037 |
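
The noise levels in the table above are expressed as signal-to-noise ratios in decibels. A common way to produce such test signals is to add white Gaussian noise whose power is set from the target SNR, using noise power = signal power / 10^(SNR/10). The sketch below assumes this noise model, which the section does not confirm; the sine test signal and the function name `add_noise_at_snr` are hypothetical.

```python
import numpy as np


def add_noise_at_snr(signal: np.ndarray, snr_db: float, rng: np.random.Generator) -> np.ndarray:
    """Add zero-mean white Gaussian noise so that the result has the requested SNR in dB."""
    signal_power = np.mean(signal ** 2)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    noise = rng.normal(0.0, np.sqrt(noise_power), size=signal.shape)
    return signal + noise


rng = np.random.default_rng(0)
t = np.linspace(0.0, 1.0, 20_000, endpoint=False)
clean = np.sin(2.0 * np.pi * 5.0 * t)          # hypothetical acceleration record

noisy = add_noise_at_snr(clean, snr_db=20.0, rng=rng)

# Check the SNR that was actually achieved for this realisation.
measured_snr_db = 10.0 * np.log10(np.mean(clean ** 2) / np.mean((noisy - clean) ** 2))
print(round(measured_snr_db, 1))
```

At 20 dB the noise power is 1% of the signal power and at 5 dB it is about 32%, which gives a sense of how severe the lowest rows of the table are.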

| Damage Condition | Description of Damage Status |
|---|---|
| Condition 0 (undamaged) | Structural baseline condition |
| Condition 1 | Unit D0 of the main beam replaced with a type A damage unit |
| Condition 2 | Unit D10 of the main beam replaced with a type B damage unit |
| Condition 3 | Unit D0 of the main beam replaced with a type A damage unit and unit D10 of the main beam replaced with a type B damage unit |

| Parameter | Specific Values |
|---|---|
| First GCN layer | 500 |
| Second GCN layer | 500 |
| Third GCN layer | 500 |
| Dropout value | 0.3 |
| Training Optimizer | Adam |

| Model | Undamaged | Unit D0 | Unit D10 | Units D0 + D10 | Total |
|---|---|---|---|---|---|
| GCN | 96.75% | 88.75% | 89.50% | 79.25% | 88.56% |
| S_DFNN (GCN) | 99.99% | 99.91% | 99.97% | 99.99% | 99.97% |

| Damaged Cable Number | Description of Damage Status |
|---|---|
| Undamaged | Structural baseline condition |
| LB1_W | Stay cable LB1_W slackened by 3 mm |
| LB9_W | Stay cable LB9_W slackened by 3 mm |
| LB1_W + LB9_W | Stay cables LB1_W and LB9_W each slackened by 3 mm |

| Model | Undamaged | Cable LB1_W | Cable LB9_W | Cables LB1_W + LB9_W | Total |
|---|---|---|---|---|---|
| GCN | 85.25% | 77.75% | 83.75% | 64.75% | 77.88% |
| S_DFNN (GCN) | 99.98% | 99.99% | 99.77% | 98.75% | 99.62% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, X.; Xu, L.; Guo, H.; Yang, L. Application of Graph Convolutional Neural Networks Combined with Single-Model Decision-Making Fusion Neural Networks in Structural Damage Recognition. Sensors 2023, 23, 9327. https://doi.org/10.3390/s23239327