Anomaly Detection of Wind Turbine Driveline Based on Sequence Decomposition Interactive Network

Abstract

1. Introduction

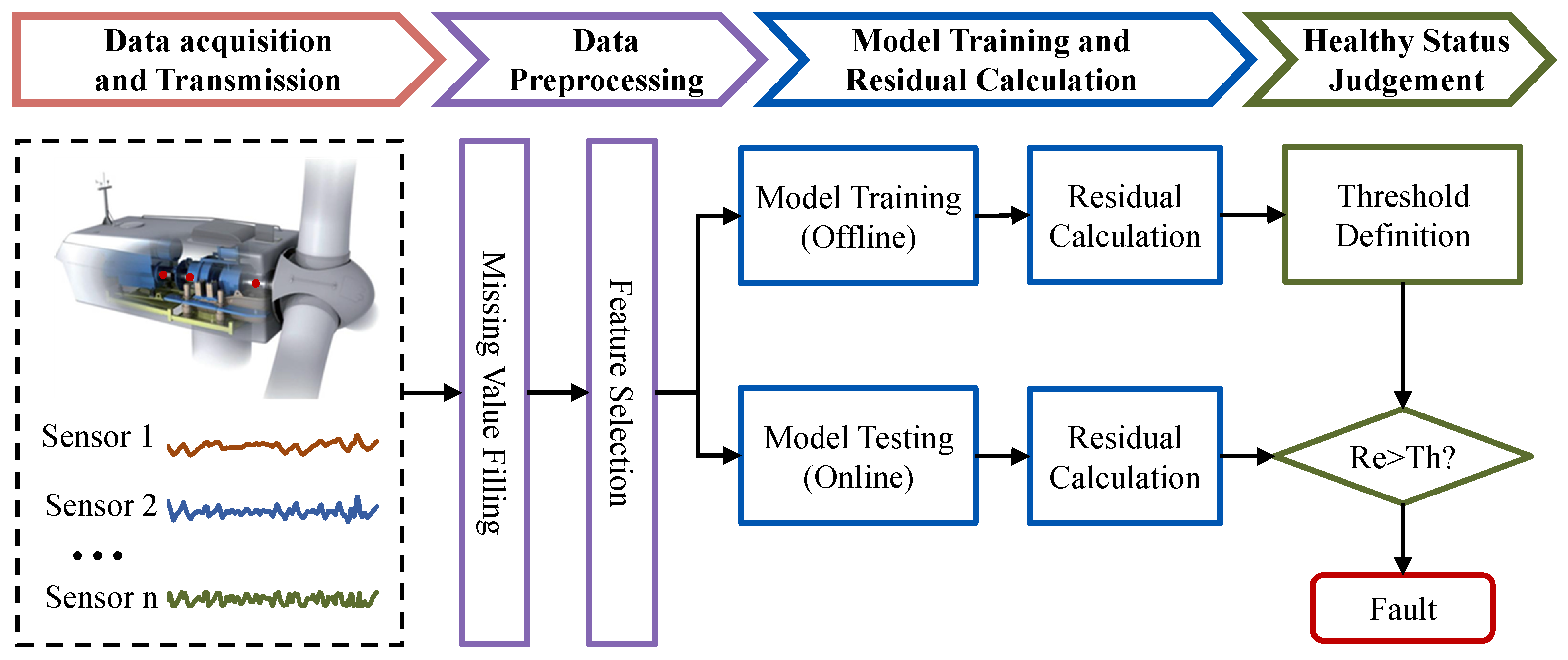

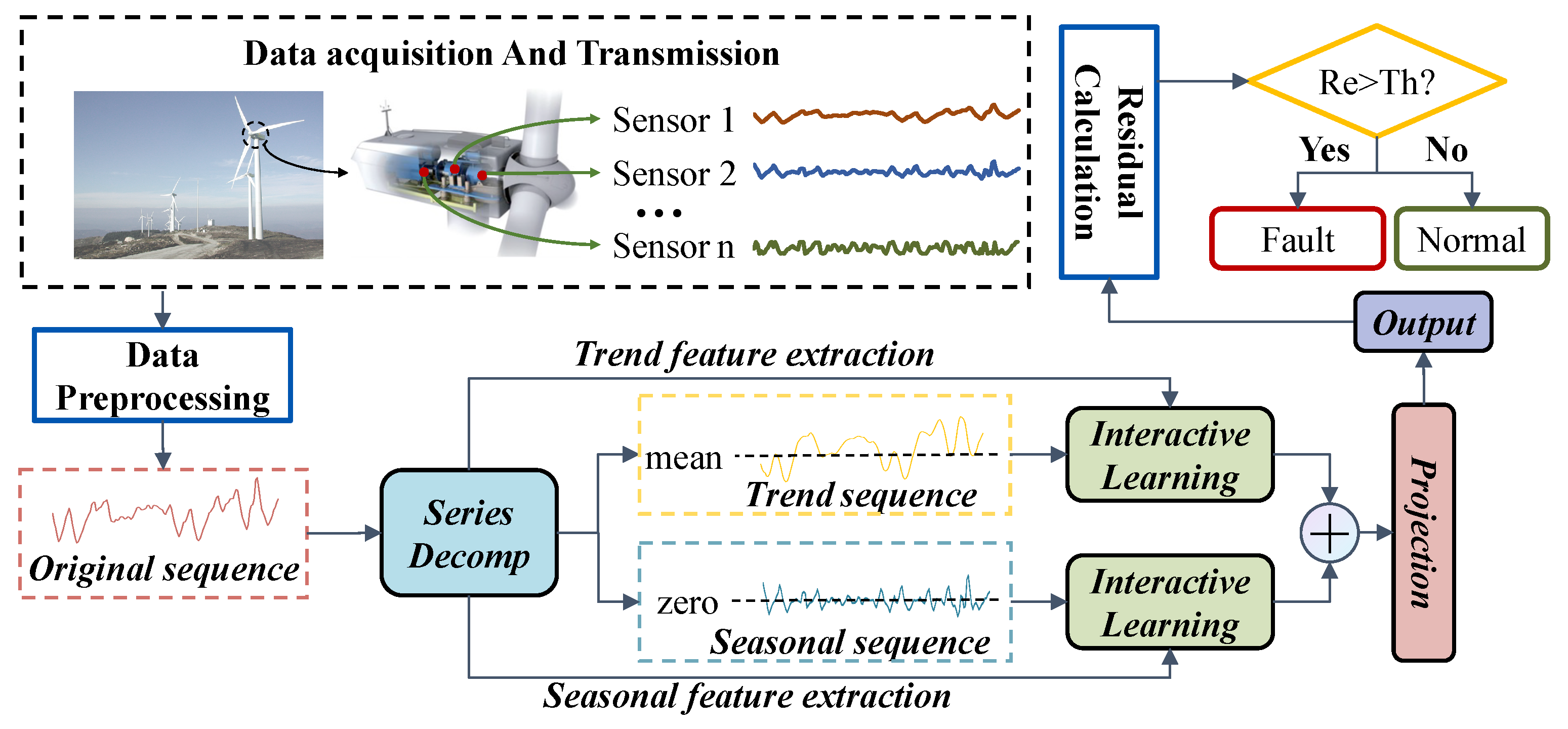

2. General Flow of Condition Monitoring of Wind Turbines

3. Data Preprocessing

3.1. Missing Value Filling

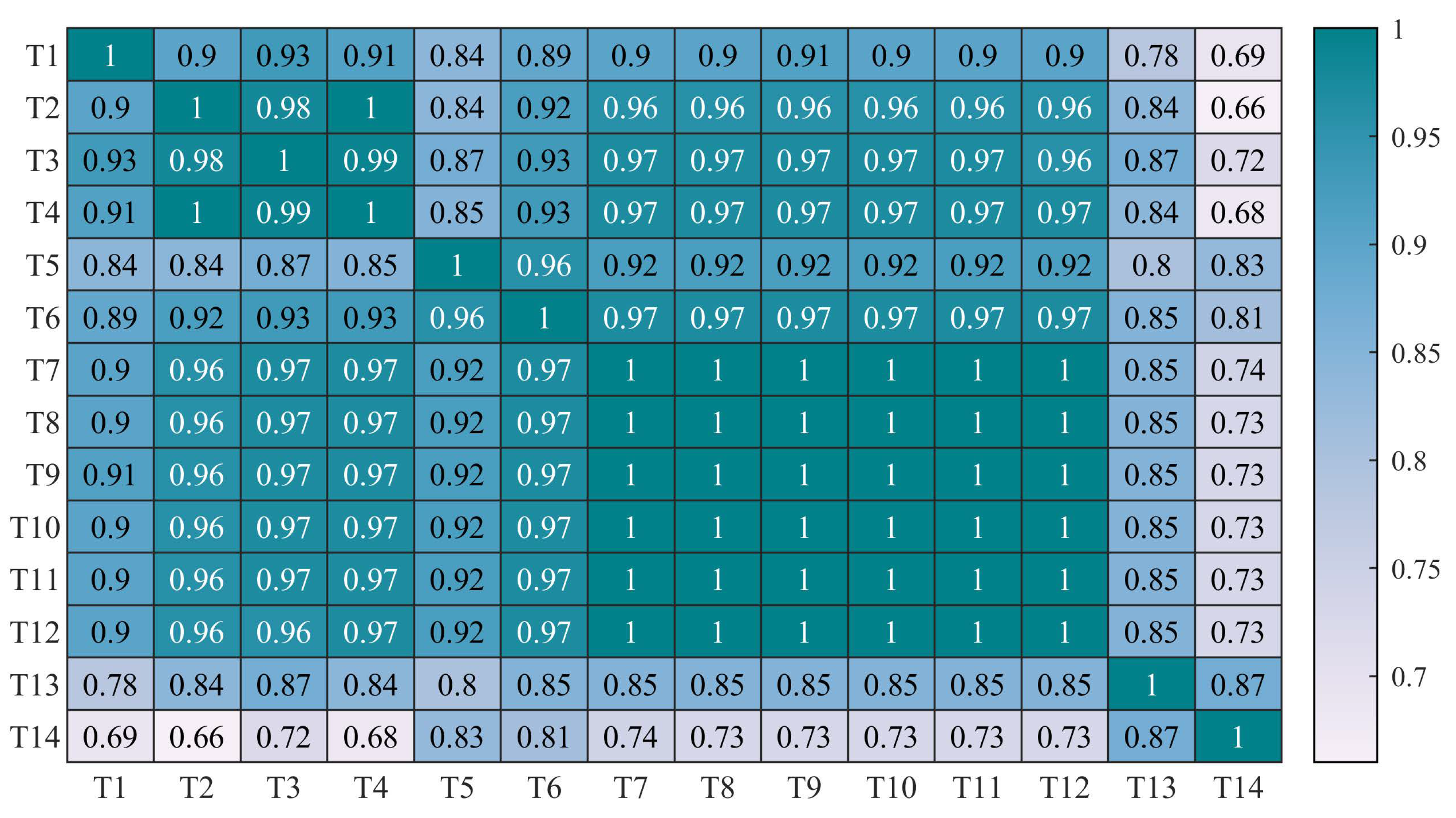

3.2. Selection of Corresponding Variables

4. Methodology

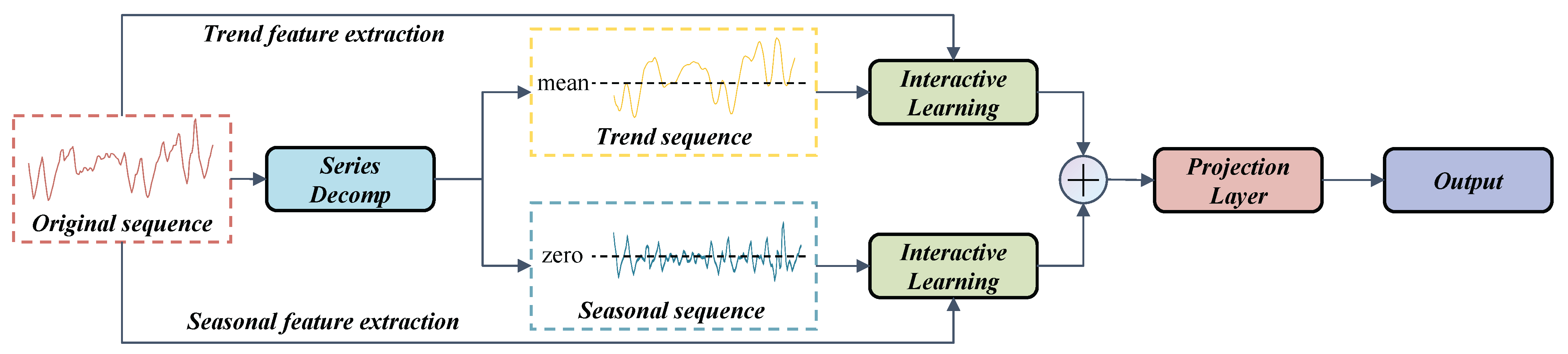

4.1. Sequence Decomposition

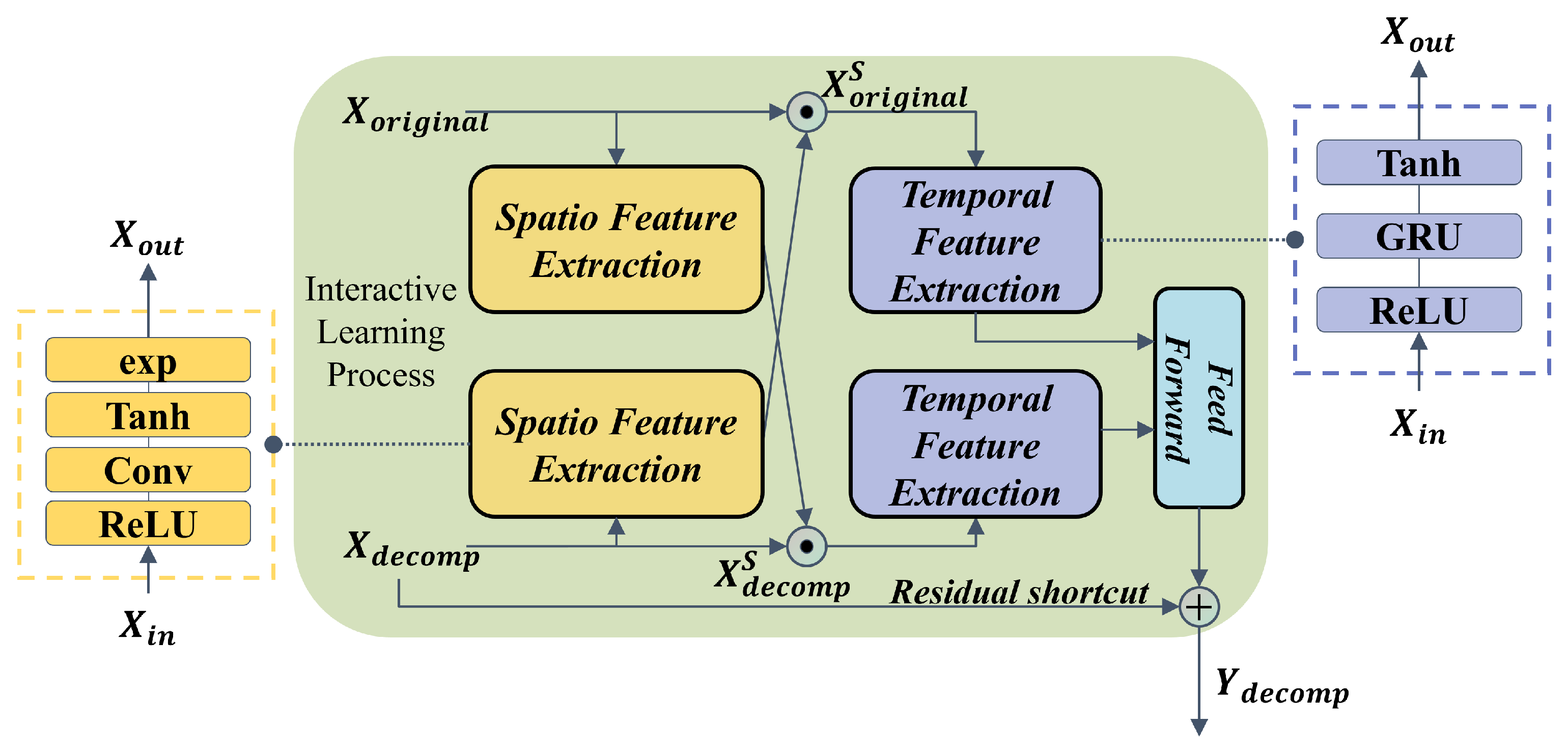

4.2. Interactive Learning

4.3. DSI-Net

4.4. General Procedures of the DSI-Net

5. Validation

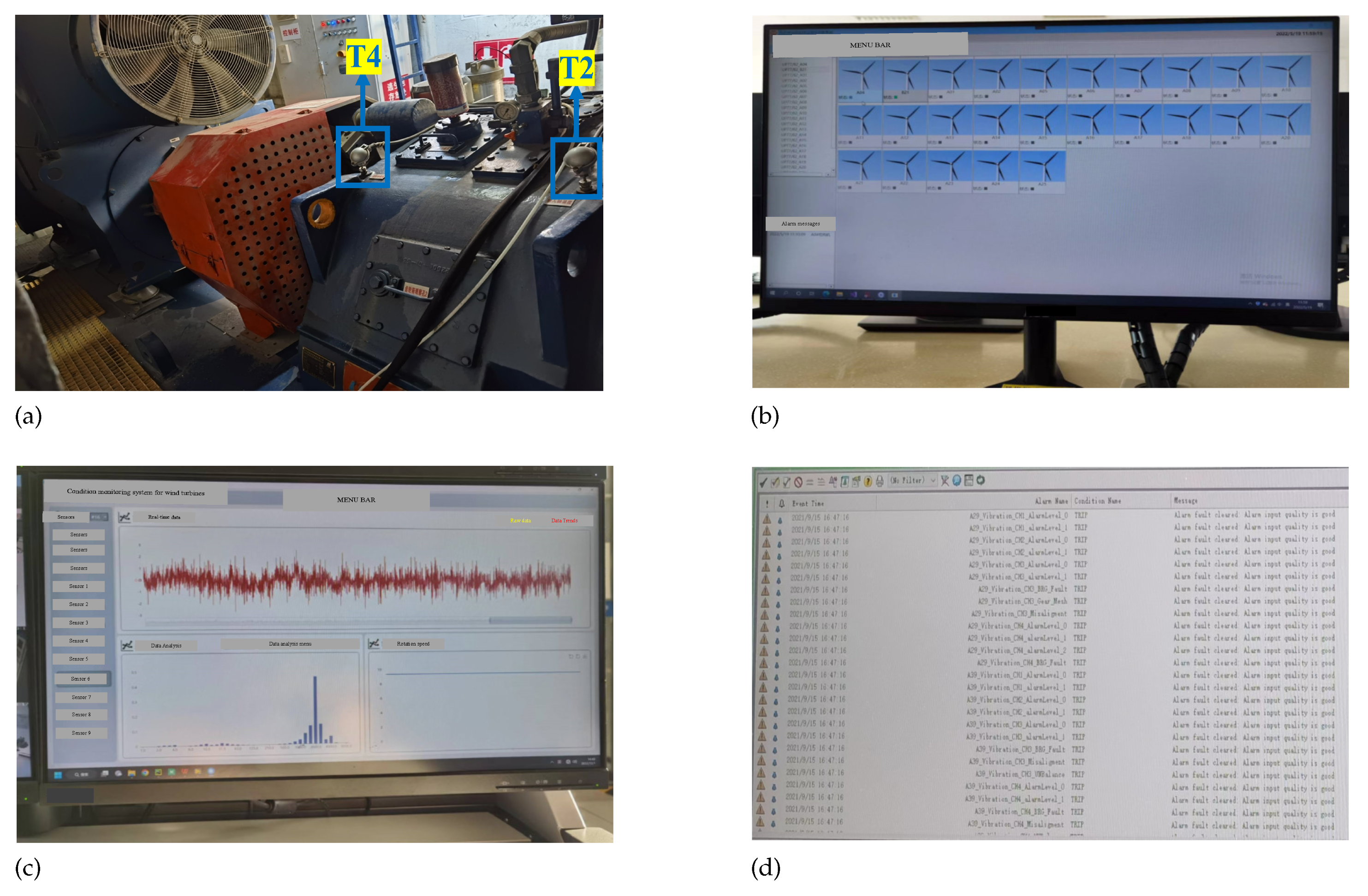

5.1. Dataset Description

5.2. Data Preprocessing

5.3. Experiments Based on the DSI-Net

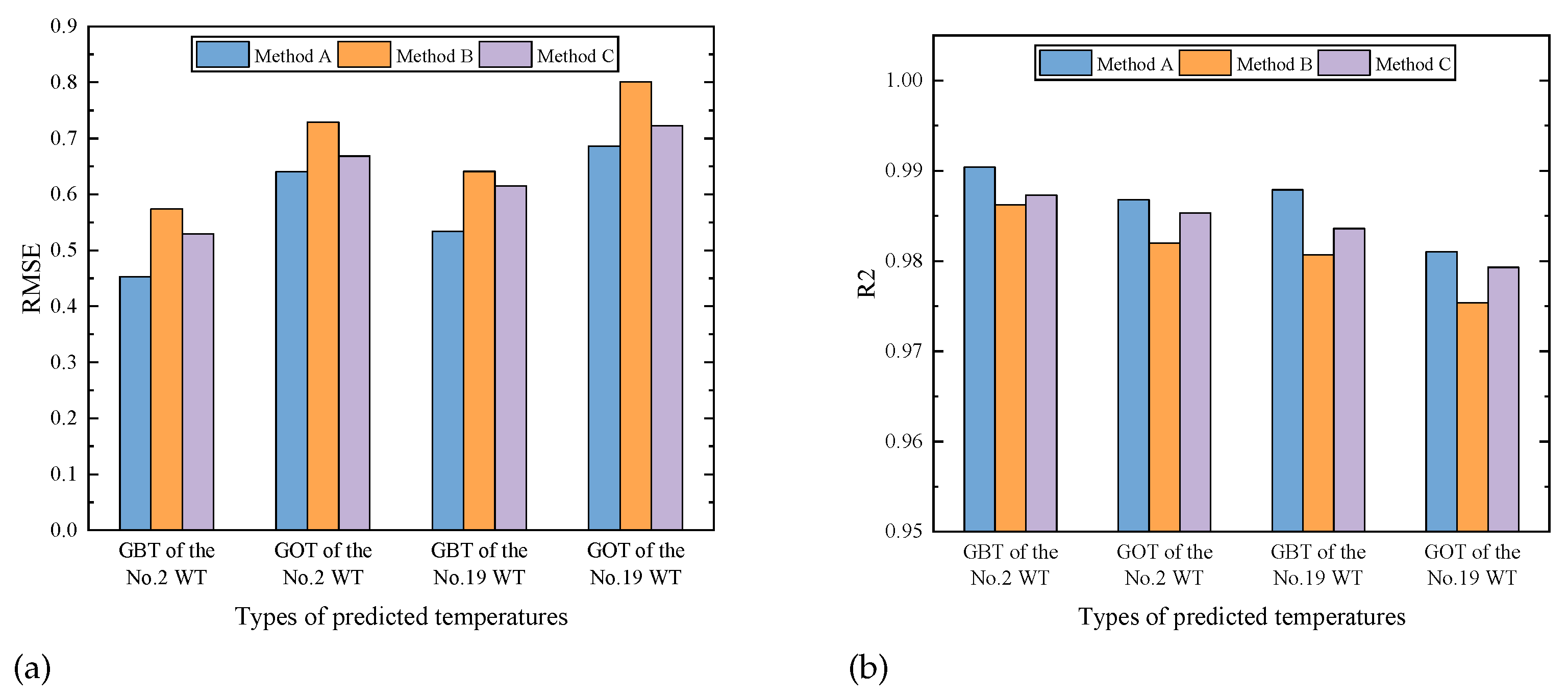

5.3.1. Validation of the Data Preprocessing Method

5.3.2. Validation of Effectiveness of Blocks in the DSI-Net

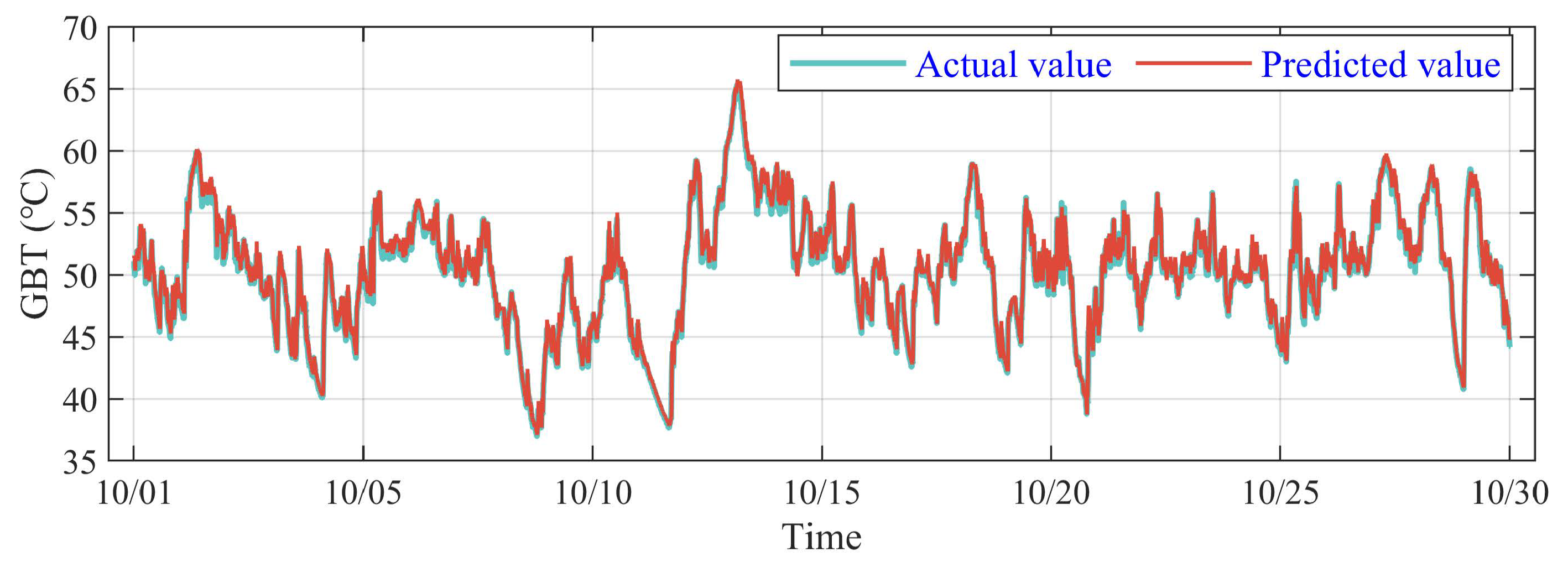

5.3.3. Validation of Healthy Data

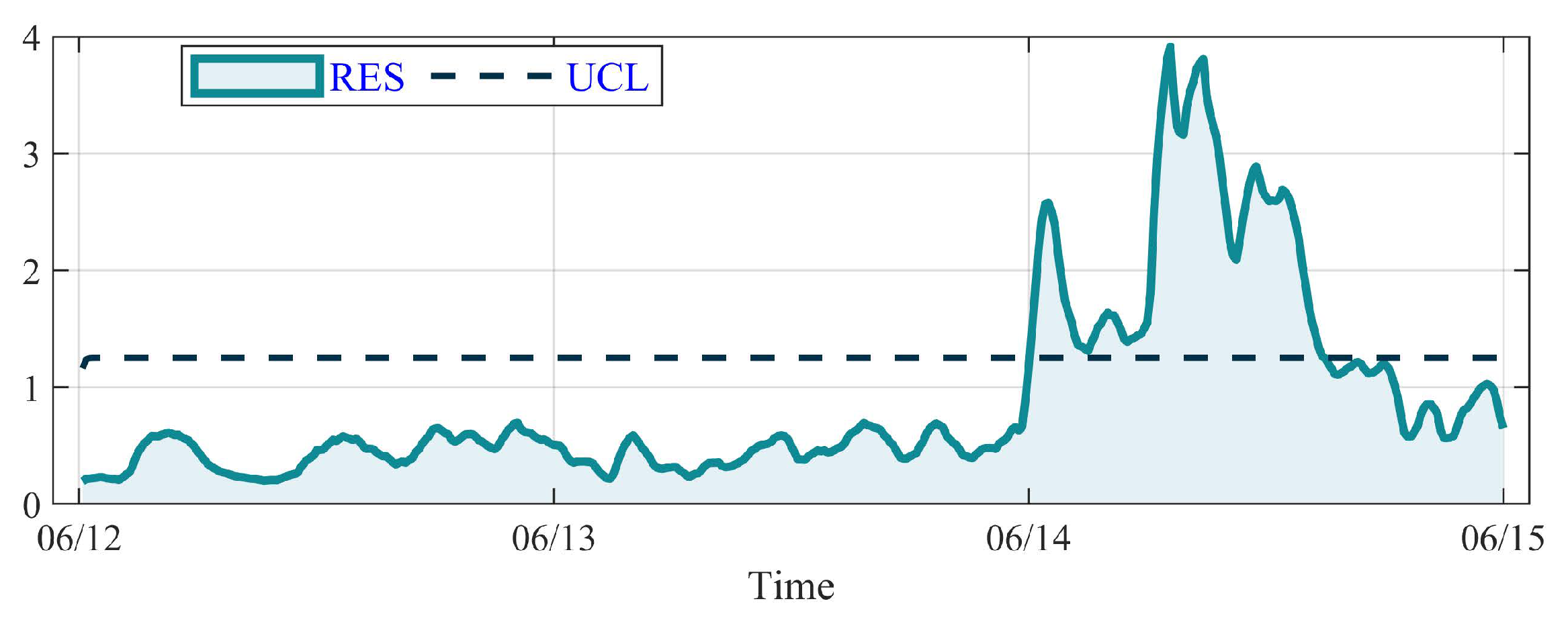

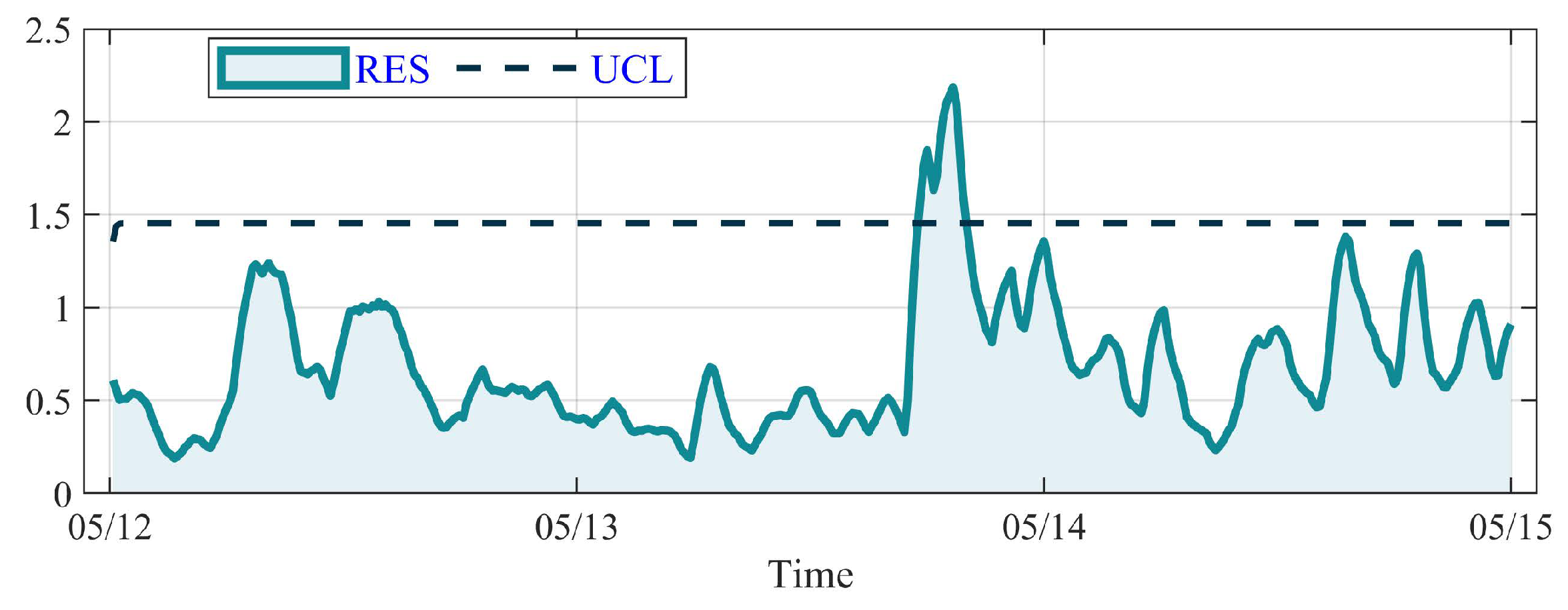

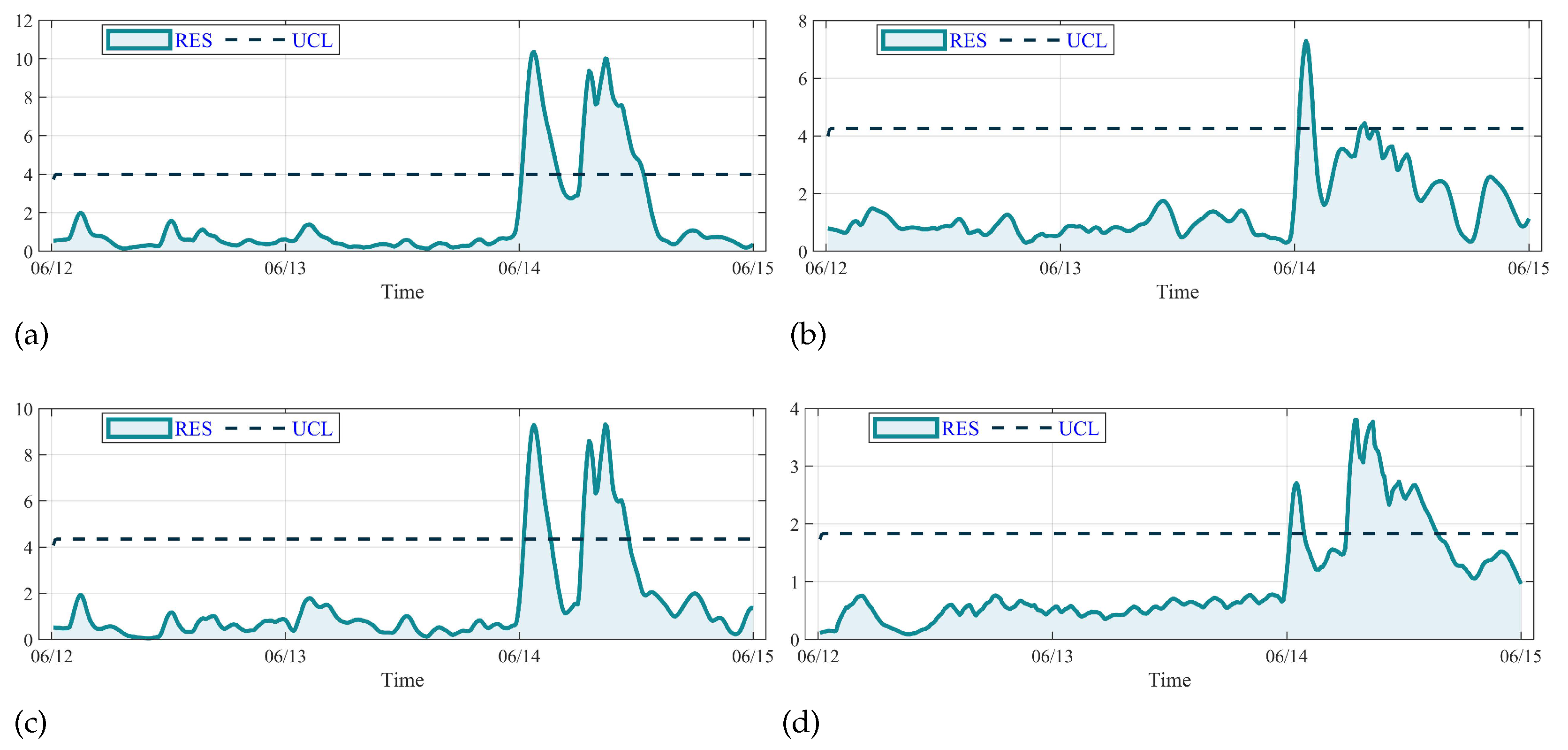

5.3.4. Validation of Anomaly Detection

5.4. Comparative Study of Models

5.4.1. Validation of Healthy Data

5.4.2. Validation of Anomaly Detection

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| No. | Variable Name | No. | Variable Name |
|---|---|---|---|
| T1 | Gearbox inlet oil temperature | T8 | Generator winding U2 temperature |
| T2 | Gearbox input shaft temperature | T9 | Generator winding V1 temperature |
| T3 | Gear oil temperature | T10 | Generator winding V2 temperature |
| T4 | Gearbox shaft temperature | T11 | Generator winding W1 temperature |
| T5 | Generator bearing A temperature | T12 | Generator winding W2 temperature |
| T6 | Generator bearing B temperature | T13 | Main bearing gearbox side temperature |
| T7 | Generator winding U1 temperature | T14 | Main bearing rotor side temperature |

| Model | MAE (No. 2) | MAE (No. 19) | MSE (No. 2) | MSE (No. 19) | RMSE (No. 2) | RMSE (No. 19) | R² (No. 2) | R² (No. 19) |
|---|---|---|---|---|---|---|---|---|
| GRU | 0.7395 | 1.4893 | 1.2894 | 2.9295 | 1.1355 | 1.7115 | 0.9688 | 0.9018 |
| LSTM | 0.9484 | 1.6118 | 1.6777 | 3.4010 | 1.2952 | 1.8441 | 0.9594 | 0.8860 |
| CNN-LSTM | 1.0116 | 1.0546 | 1.8194 | 1.8230 | 1.3488 | 1.3502 | 0.9560 | 0.9389 |
| SCI-Net | 0.5731 | 0.7697 | 0.4706 | 0.7943 | 0.6860 | 0.8912 | 0.9725 | 0.9733 |
| DSI-Net | 0.3728 | 0.4442 | 0.2053 | 0.2852 | 0.4531 | 0.5340 | 0.9904 | 0.9879 |

| Model | MAE (No. 2) | MAE (No. 19) | MSE (No. 2) | MSE (No. 19) | RMSE (No. 2) | RMSE (No. 19) | R² (No. 2) | R² (No. 19) |
|---|---|---|---|---|---|---|---|---|
| GRU | 1.9581 | 1.3458 | 5.2674 | 3.0874 | 2.295 | 1.7571 | 0.9211 | 0.9135 |
| LSTM | 1.5600 | 1.4737 | 3.8110 | 4.6292 | 1.9526 | 2.1515 | 0.943 | 0.8704 |
| CNN-LSTM | 1.4312 | 1.3580 | 2.8642 | 2.8949 | 1.6924 | 1.7014 | 0.9571 | 0.9189 |
| SCI-Net | 0.7892 | 0.8255 | 0.8725 | 1.0011 | 0.9341 | 1.0005 | 0.9596 | 0.9719 |
| DSI-Net | 0.5180 | 0.5642 | 0.4099 | 0.4710 | 0.6402 | 0.6863 | 0.9868 | 0.9810 |
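The MAE, MSE, and RMSE columns in the tables above follow the standard definitions of these error measures. The sketch below shows one way to compute them in plain Python. It is only an illustration: the function names and the sample readings are hypothetical and are not taken from the paper. It also checks that the reported DSI-Net values satisfy RMSE = sqrt(MSE) to within rounding.

```python
import math

def mae(y_true, y_pred):
    """Mean absolute error between two equal-length sequences."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def mse(y_true, y_pred):
    """Mean squared error between two equal-length sequences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean squared error: the square root of the MSE."""
    return math.sqrt(mse(y_true, y_pred))

# Hypothetical temperature readings, for illustration only (not paper data).
measured = [60.0, 61.0, 63.0, 62.0]
predicted = [60.5, 60.0, 63.5, 62.0]

print(mae(measured, predicted), mse(measured, predicted), rmse(measured, predicted))

# (MSE, RMSE) pairs copied from the DSI-Net rows of the two tables above.
reported = [(0.2053, 0.4531), (0.2852, 0.5340), (0.4099, 0.6402), (0.4710, 0.6863)]

# Each reported RMSE should match sqrt(MSE) up to four-decimal rounding.
rmse_consistent = all(abs(math.sqrt(m) - r) < 5e-4 for m, r in reported)
print(rmse_consistent)
```

Because RMSE is a monotone function of MSE, the two columns always rank the models in the same order. MAE can rank them differently because it penalizes large errors less heavily.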


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lyu, Q.; He, Y.; Wu, S.; Li, D.; Wang, X. Anomaly Detection of Wind Turbine Driveline Based on Sequence Decomposition Interactive Network. Sensors 2023, 23, 8964. https://doi.org/10.3390/s23218964
