A Smartphone-Based Detection System for Tomato Leaf Disease Using EfficientNetV2B2 and Its Explainability with Artificial Intelligence (AI)

Abstract

1. Introduction

- We fine-tune EfficientNetV2B2, a high-performing deep learning (DL) model, for tomato leaf disease detection.

- The proposed model is evaluated on two datasets [28,29] using loss curves, ROC curves, and confusion matrices, together with precision, recall, F1-score, and accuracy. Its performance is further validated by comparison with state-of-the-art deep learning models and customized models [30,31,32,33,34,35,36].

- A smart application for detecting and classifying tomato leaf diseases has been built, with both smartphone and web-based interfaces. The application presents its results in both English and Bangla.

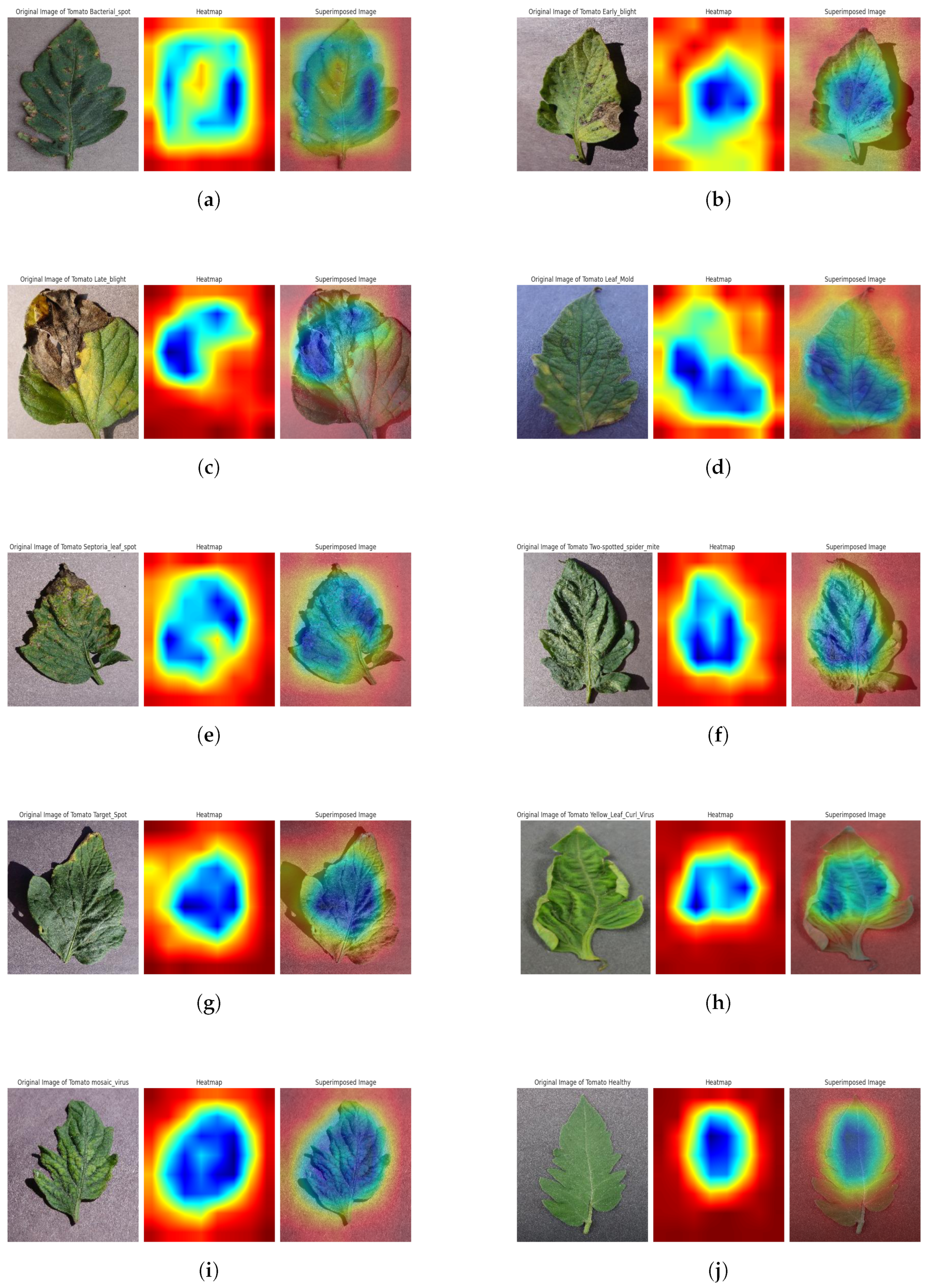

- Explainable AI techniques, namely LIME and Grad-CAM, are used to analyze the model's predictions.

2. Literature Review

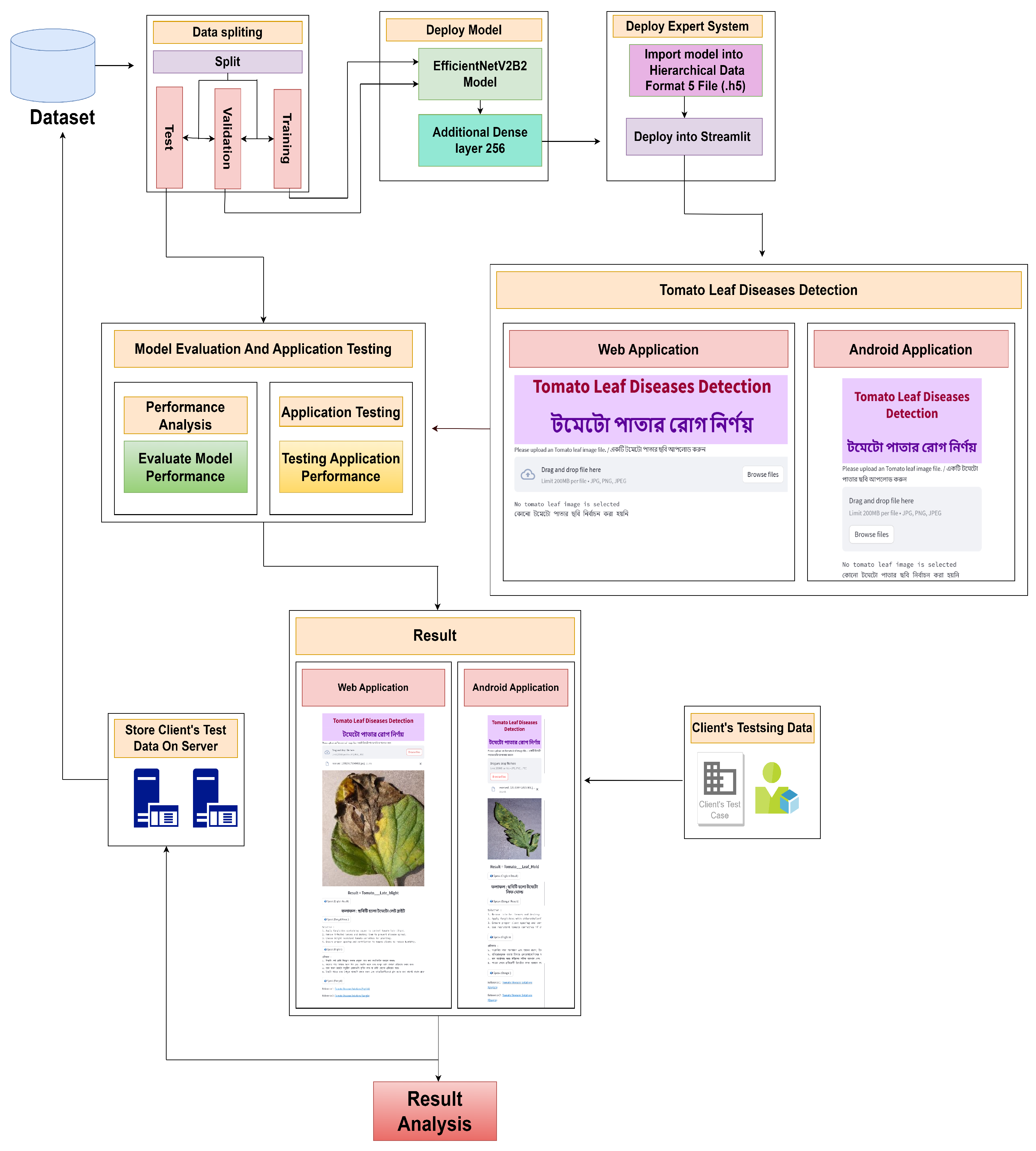

3. Methodology

3.1. Dataset

3.2. Data Split

3.3. InceptionV3 Architecture

3.4. Convolutional Neural Network (CNN) Architecture

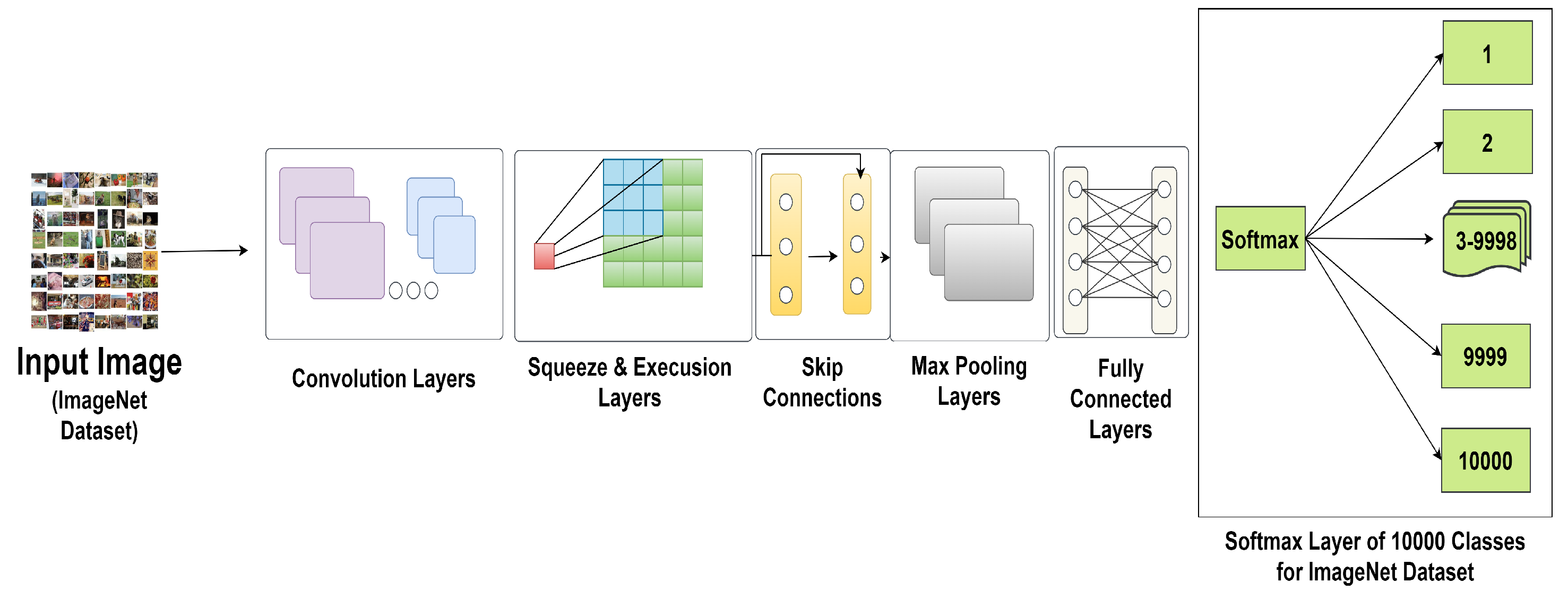

3.5. EfficientNet Architecture

3.6. Architectures of EfficientNetV2

- MBConv Block: The Mobile Inverted Bottleneck Convolution block, the main building component of EfficientNet. It combines depthwise separable convolution with squeeze-and-excitation operations.

- Stem Block: The first block of the network; it processes the input image and extracts its key low-level features.

- Block1, Block2, Block3, …: The subsequent blocks, numbered in order of depth: Block1 is closest to the input, and higher-numbered blocks lie deeper in the network.

- Head Block: The last block of the network, which handles the output layer and the final predictions.
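To illustrate why MBConv blocks rely on depthwise separable convolution, the plain-Python sketch below counts the weights of a standard convolution, a depthwise separable convolution, and a simplified MBConv block (1×1 expansion, depthwise convolution, squeeze-and-excitation, 1×1 projection). The kernel size, channel counts, expansion factor, and squeeze ratio are hypothetical example values, not the EfficientNetV2B2 configuration, and convolution biases and batch-normalization parameters are ignored.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a k x k convolution mapping c_in to c_out channels (no bias)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """One k x k filter per input channel, then a 1x1 pointwise conv (no bias)."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


def mbconv_params(k: int, c_in: int, c_out: int, expand: int, se_ratio: float) -> int:
    """Simplified MBConv weight count (hypothetical sizes, BN and conv biases ignored).

    The squeeze-and-excitation layers are fully connected, so their biases are counted.
    """
    c_mid = c_in * expand
    expansion = c_in * c_mid              # 1x1 conv: c_in -> c_mid
    depthwise = k * k * c_mid             # one k x k filter per expanded channel
    c_se = max(1, int(c_in * se_ratio))   # squeezed width of the SE bottleneck
    squeeze_excite = (c_mid * c_se + c_se) + (c_se * c_mid + c_mid)
    projection = c_mid * c_out            # 1x1 conv: c_mid -> c_out
    return expansion + depthwise + squeeze_excite + projection


print(standard_conv_params(3, 32, 32), depthwise_separable_params(3, 32, 32))  # 9216 1312
```

With a 3×3 kernel and 32 input and output channels, the depthwise separable form needs 1,312 weights instead of 9,216, which is the saving that MBConv builds on.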

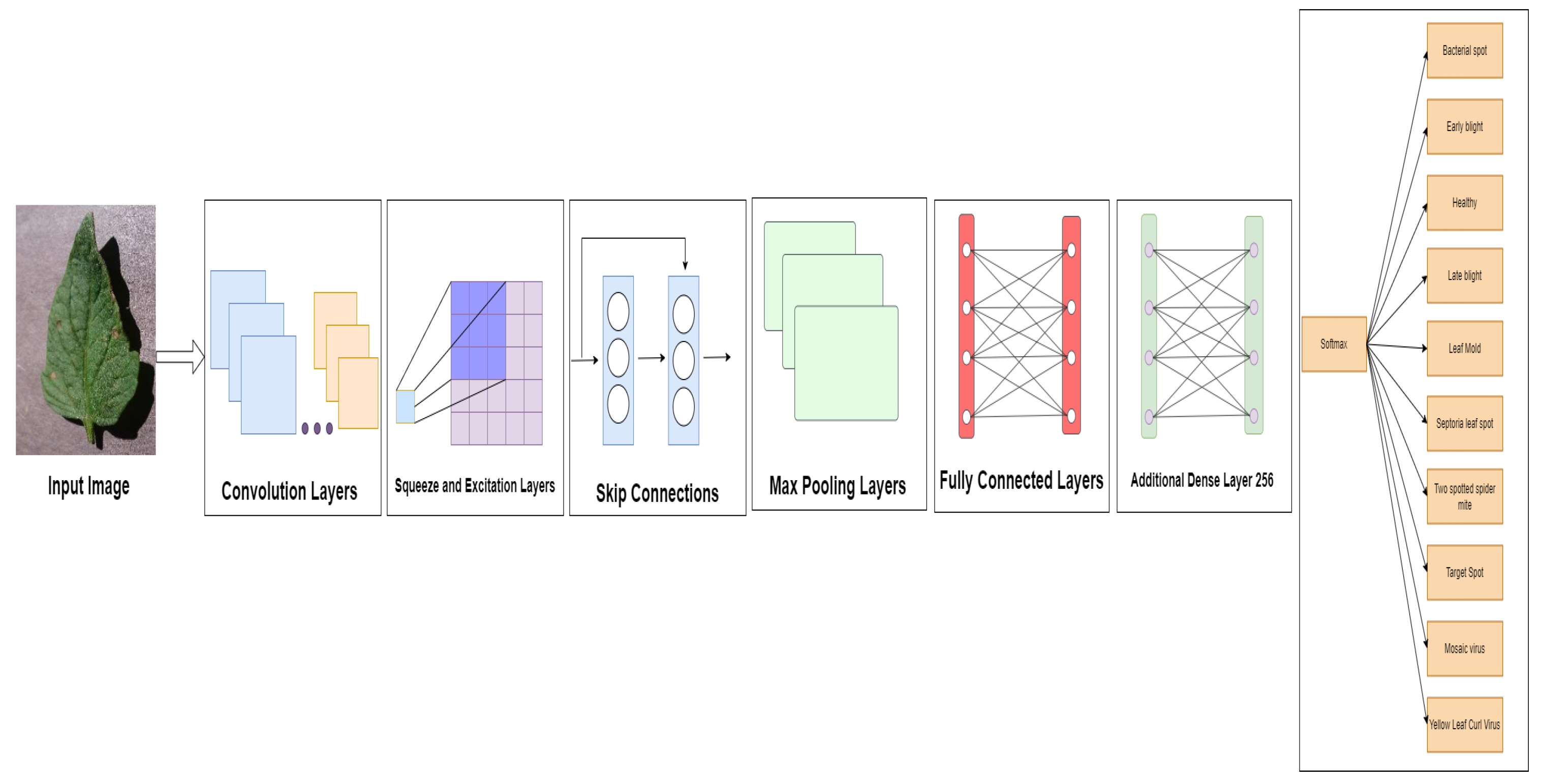

3.7. Deployed Model

- As the first step, the pre-trained EfficientNetV2B2 model is loaded.

- We used a fine-tuning approach to train the model to classify the tomato leaf diseases, including healthy leaves: the final layer of EfficientNetV2B2 was replaced with fully connected layers, including an additional dense layer of 256 units, followed by a fully connected softmax output layer with 10 units, one per class.
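The classification head described above can be checked with a little arithmetic. The plain-Python sketch below counts the trainable parameters of a 256-unit dense layer followed by a 10-unit softmax layer, and turns raw output scores into a class label. It assumes a pooled backbone feature vector of 1,408 values (`FEATURE_DIM`) and a hypothetical class order (`CLASS_NAMES`); any additional fully connected layers in the authors' head are not modelled.

```python
import math
from typing import List

# Assumptions for illustration only: the pooled feature size and the class order.
FEATURE_DIM = 1408
HIDDEN_UNITS = 256  # dense layer size stated in the text
CLASS_NAMES = [
    "Bacterial Spot", "Early Blight", "Late Blight", "Leaf Mold",
    "Septoria Leaf Spot", "Spider Mites Two-Spotted Spider Mite",
    "Target Spot", "Yellow Leaf Curl Virus", "Mosaic Virus", "Healthy",
]


def dense_params(n_in: int, n_out: int) -> int:
    """Trainable parameters of a fully connected layer: weights plus biases."""
    return n_in * n_out + n_out


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax: shift by the largest logit before exponentiating."""
    largest = max(logits)
    exps = [math.exp(z - largest) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_label(logits: List[float]) -> str:
    """Return the class whose softmax probability is highest."""
    probs = softmax(logits)
    return CLASS_NAMES[max(range(len(probs)), key=probs.__getitem__)]


head_params = dense_params(FEATURE_DIM, HIDDEN_UNITS) + dense_params(HIDDEN_UNITS, len(CLASS_NAMES))
print(head_params)  # 363274
```

Subtracting the largest logit leaves the softmax output unchanged but prevents `math.exp` from overflowing on large scores.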

3.8. User Application Design

4. Experimental Outcomes and Discussions

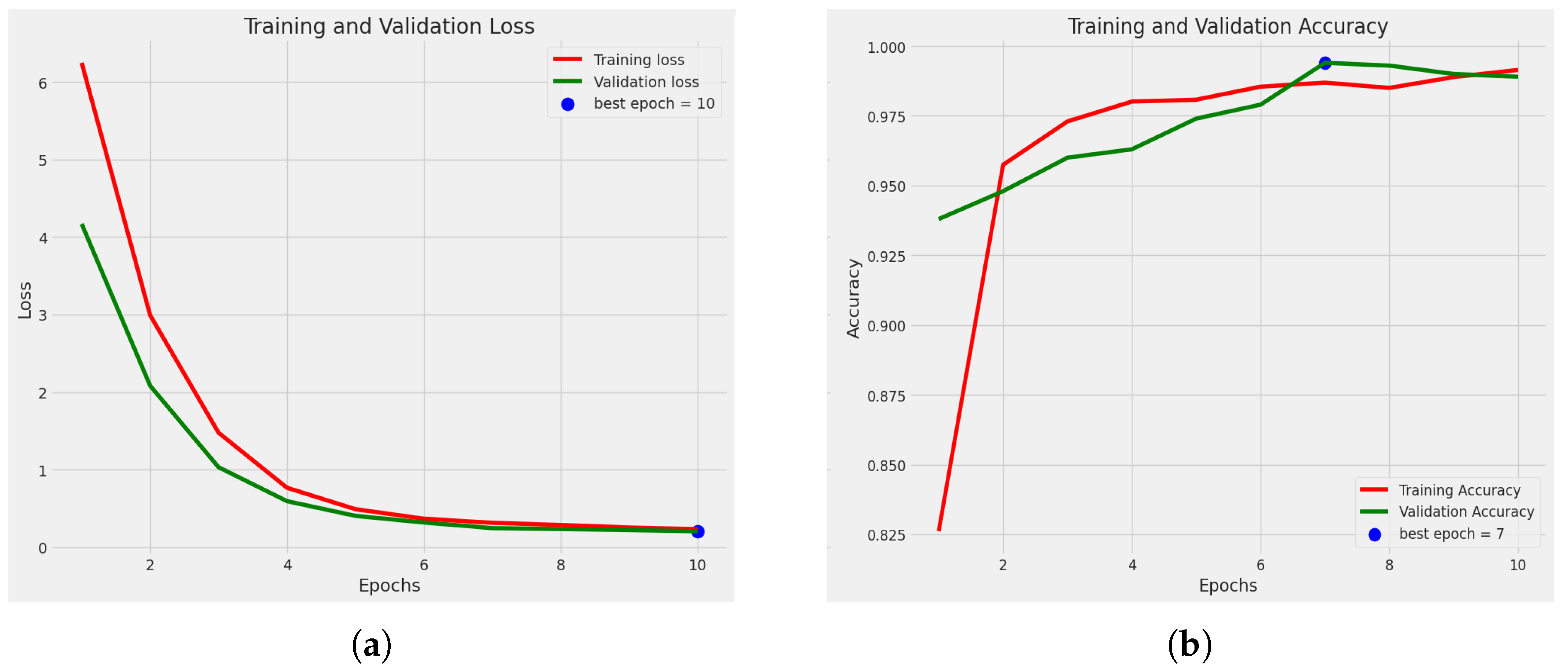

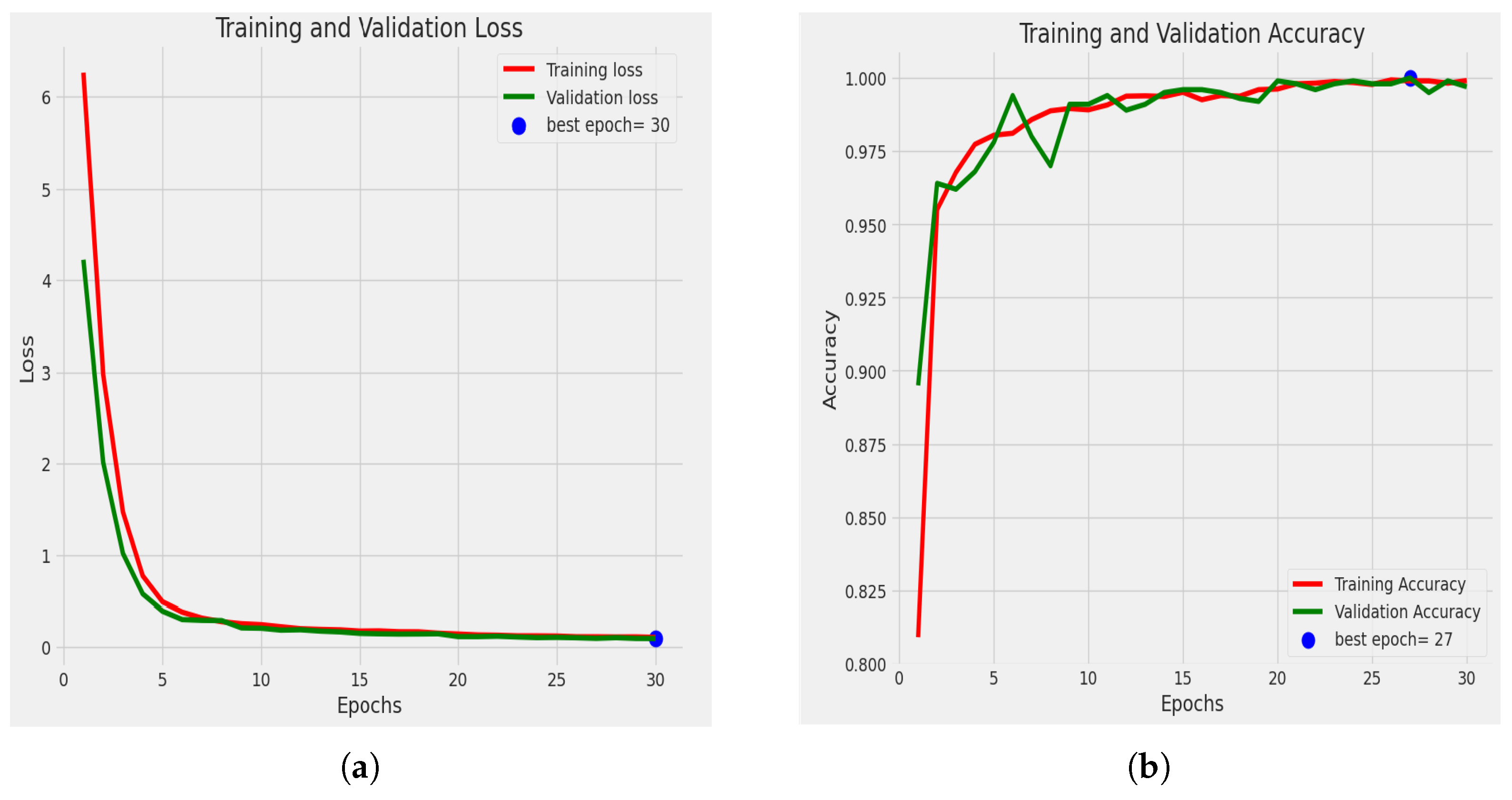

4.1. Cross-Validation Outcome of the EfficientNetV2B2 Model

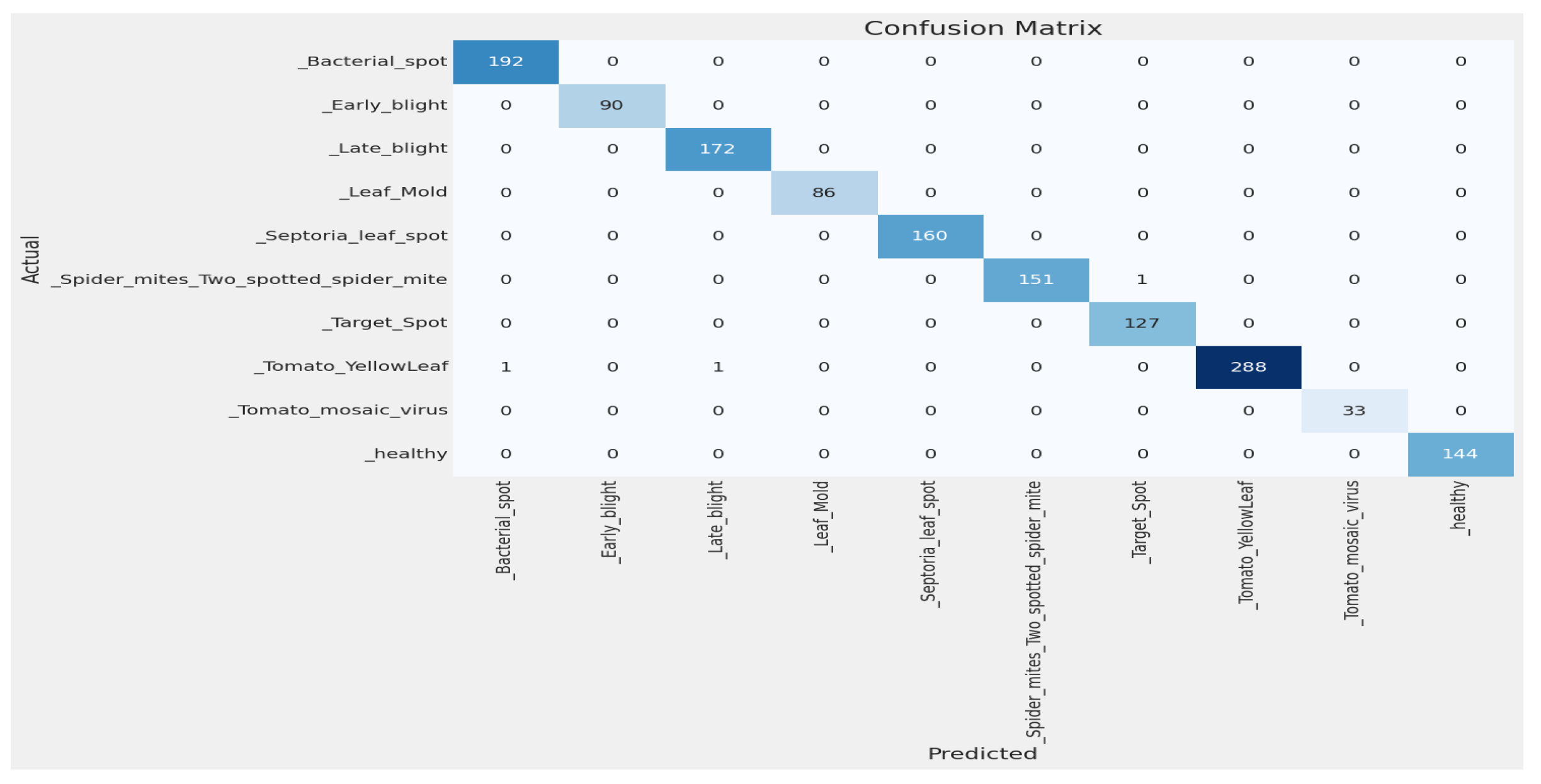

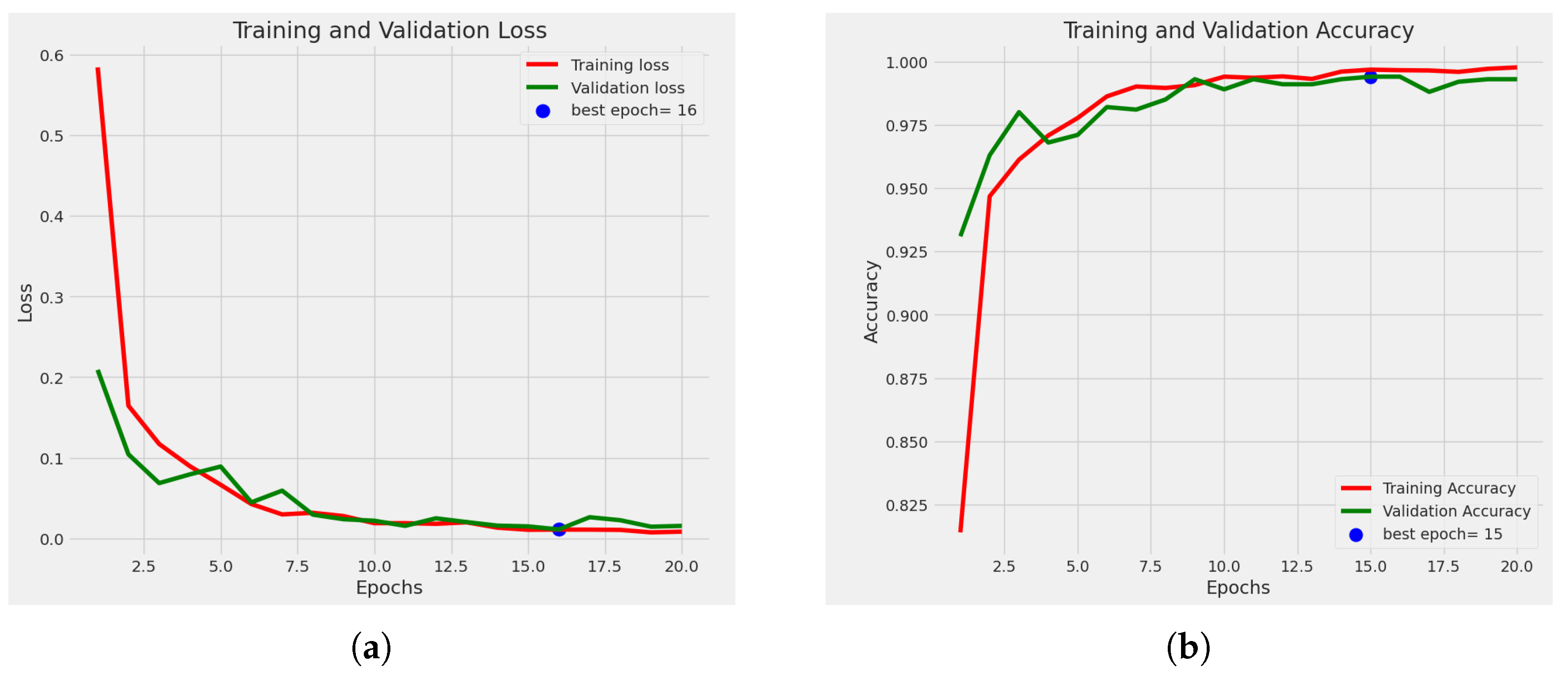

4.2. Split Method Outcome of the EfficientNetV2B2 Model

4.2.1. Statistical Analysis

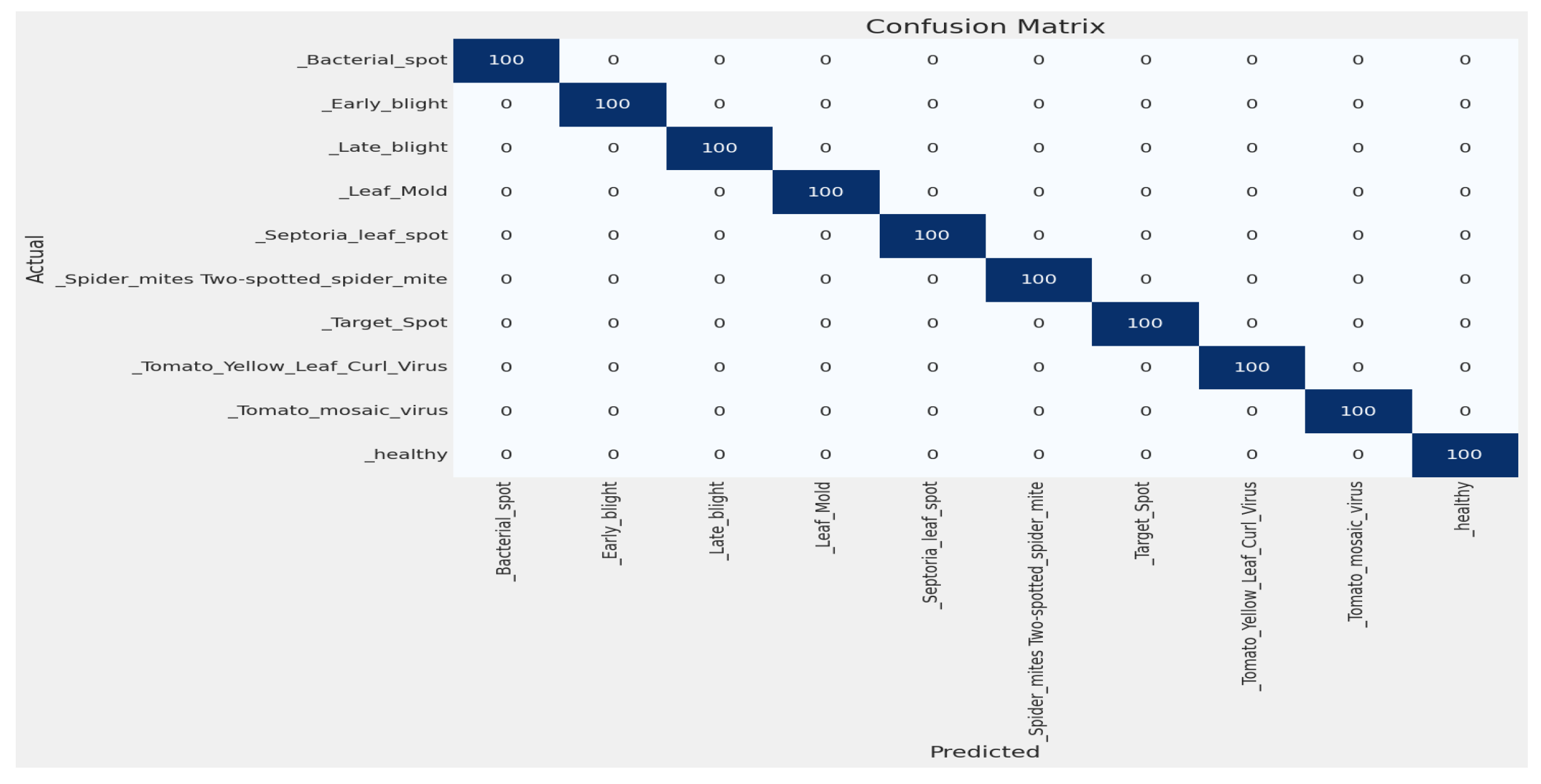

4.2.2. Confusion Matrix

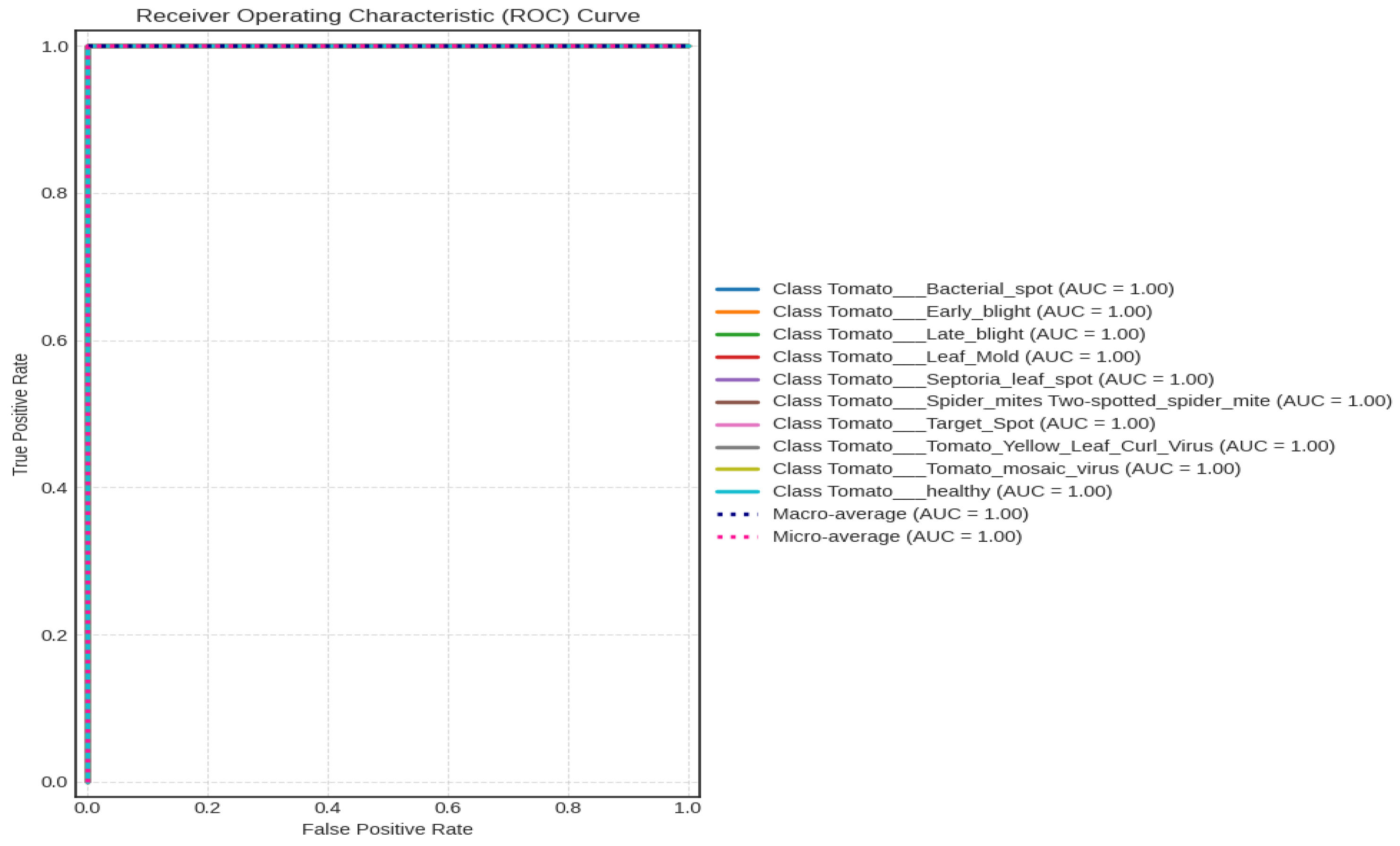
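A confusion matrix tallies, for every true class, how often the model predicted each class. A minimal plain-Python sketch, assuming integer class indices (mapping indices to disease names is left to the caller):

```python
from typing import List, Sequence


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int], num_classes: int) -> List[List[int]]:
    """Rows index the true class, columns the predicted class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


cm = confusion_matrix([0, 0, 1, 2, 2, 2], [0, 1, 1, 2, 2, 0], num_classes=3)
print(cm)  # [[1, 1, 0], [0, 1, 0], [1, 0, 2]]
```

Correct predictions lie on the diagonal, so a perfect classifier yields a purely diagonal matrix.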

4.2.3. Receiver Operating Characteristic (ROC) Curve
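For a multi-class model, ROC curves are typically drawn one-vs-rest: each class in turn is treated as positive and all others as negative. The sketch below computes the (FPR, TPR) points by sweeping the decision threshold from high to low and integrates them with the trapezoidal rule. It is an illustrative plain-Python version, not the code used to produce the figures; `probs` is assumed to hold one softmax probability vector per image.

```python
from typing import List, Sequence, Tuple


def roc_curve(labels: Sequence[int], scores: Sequence[float]) -> List[Tuple[float, float]]:
    """(FPR, TPR) points for a binary problem, sweeping the threshold downward.

    Samples with tied scores are added together, so ties produce a diagonal segment.
    """
    pos = sum(1 for y in labels if y == 1)
    neg = len(labels) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need at least one positive and one negative sample")
    pairs = sorted(zip(scores, labels), key=lambda p: p[0], reverse=True)
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # consume every sample whose score equals the current threshold
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / neg, tp / pos))
    return points


def auc(points: Sequence[Tuple[float, float]]) -> float:
    """Trapezoidal area under a curve given as (x, y) points sorted by x."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


def one_vs_rest_auc(y_true: Sequence[int], probs: Sequence[Sequence[float]], cls: int) -> float:
    """AUC for one class, treating it as positive and every other class as negative."""
    labels = [1 if y == cls else 0 for y in y_true]
    scores = [p[cls] for p in probs]
    return auc(roc_curve(labels, scores))
```

For example, labels `[0, 0, 1, 1]` with scores `[0.1, 0.4, 0.35, 0.8]` give an AUC of 0.75, while perfectly separated scores give 1.0.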

4.2.4. Comparison with State-of-the-Art Models

4.2.5. Comparison with Customized Models on the Plant Village Dataset

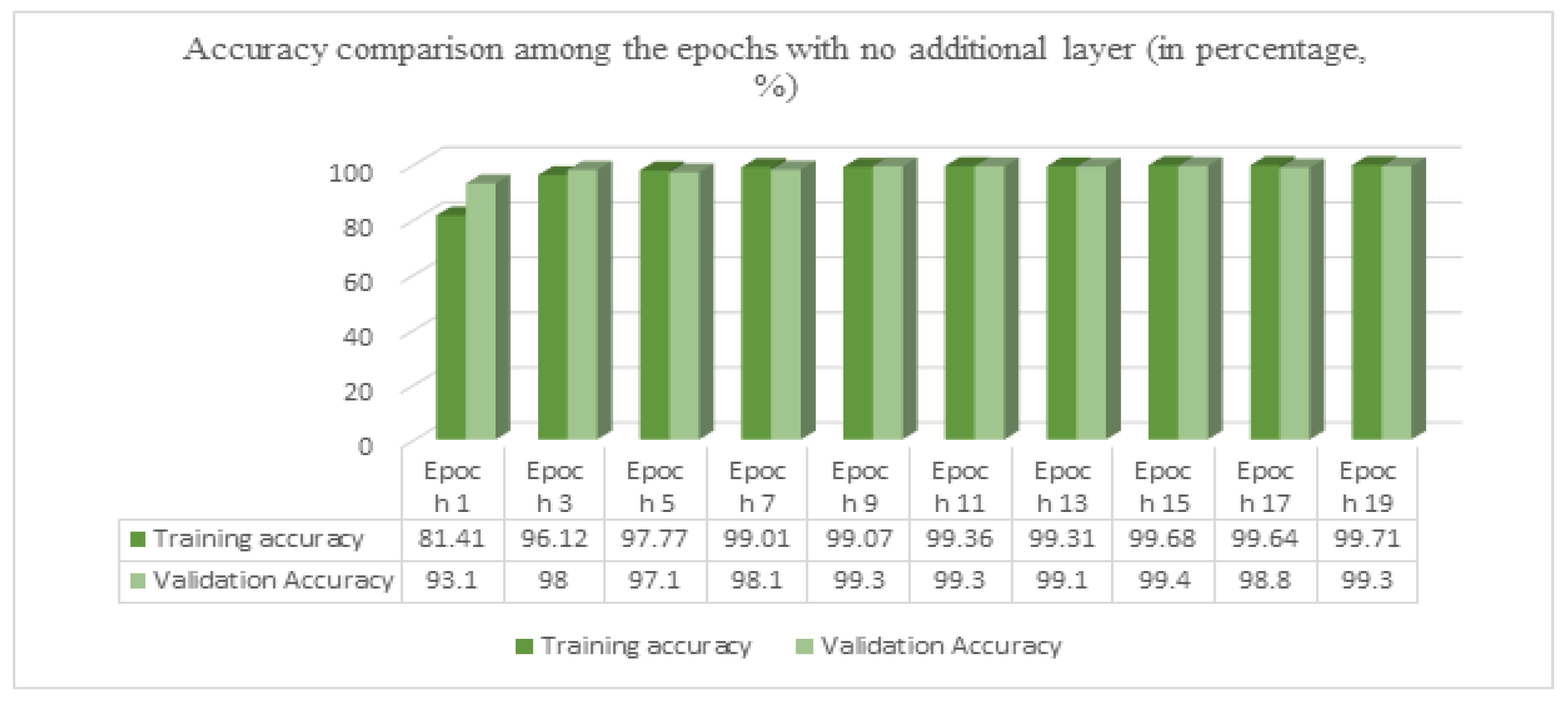

4.3. Ablation Study

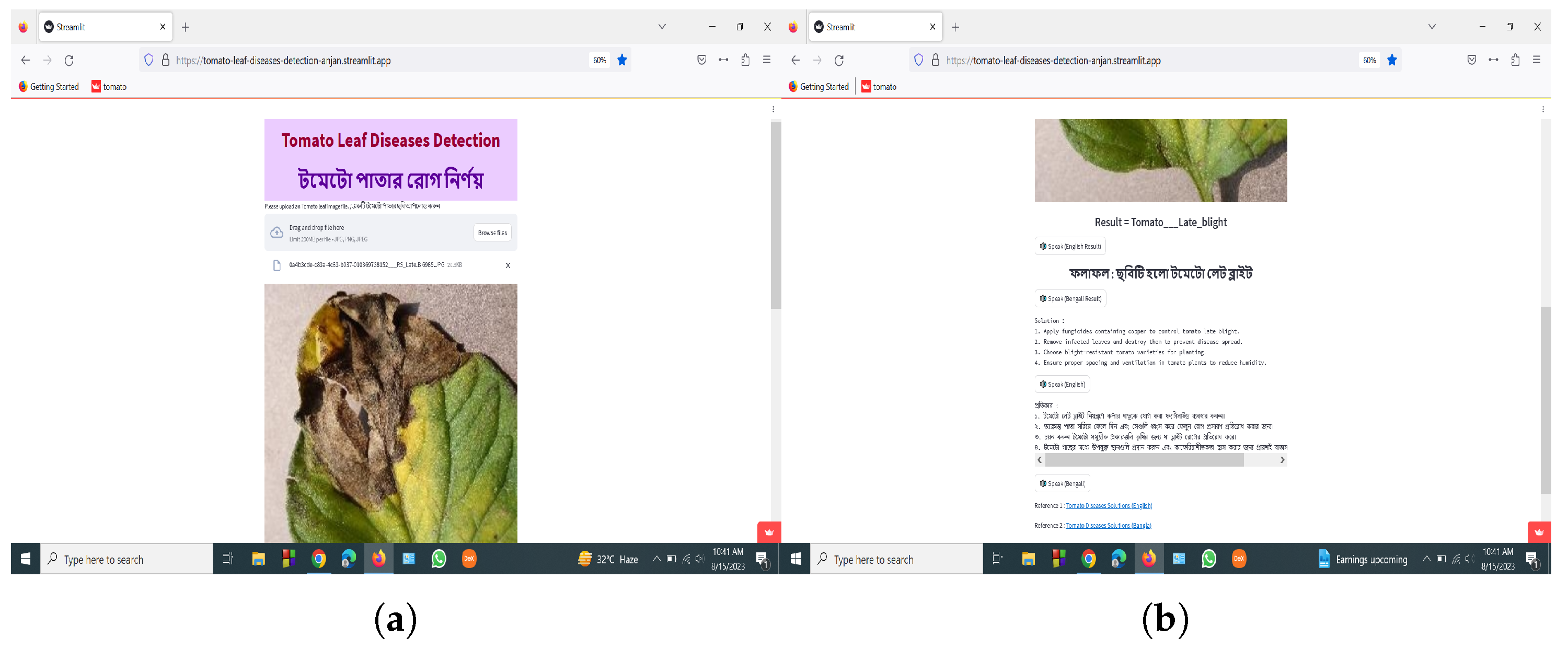

4.4. Development of Smartphone and Web Applications

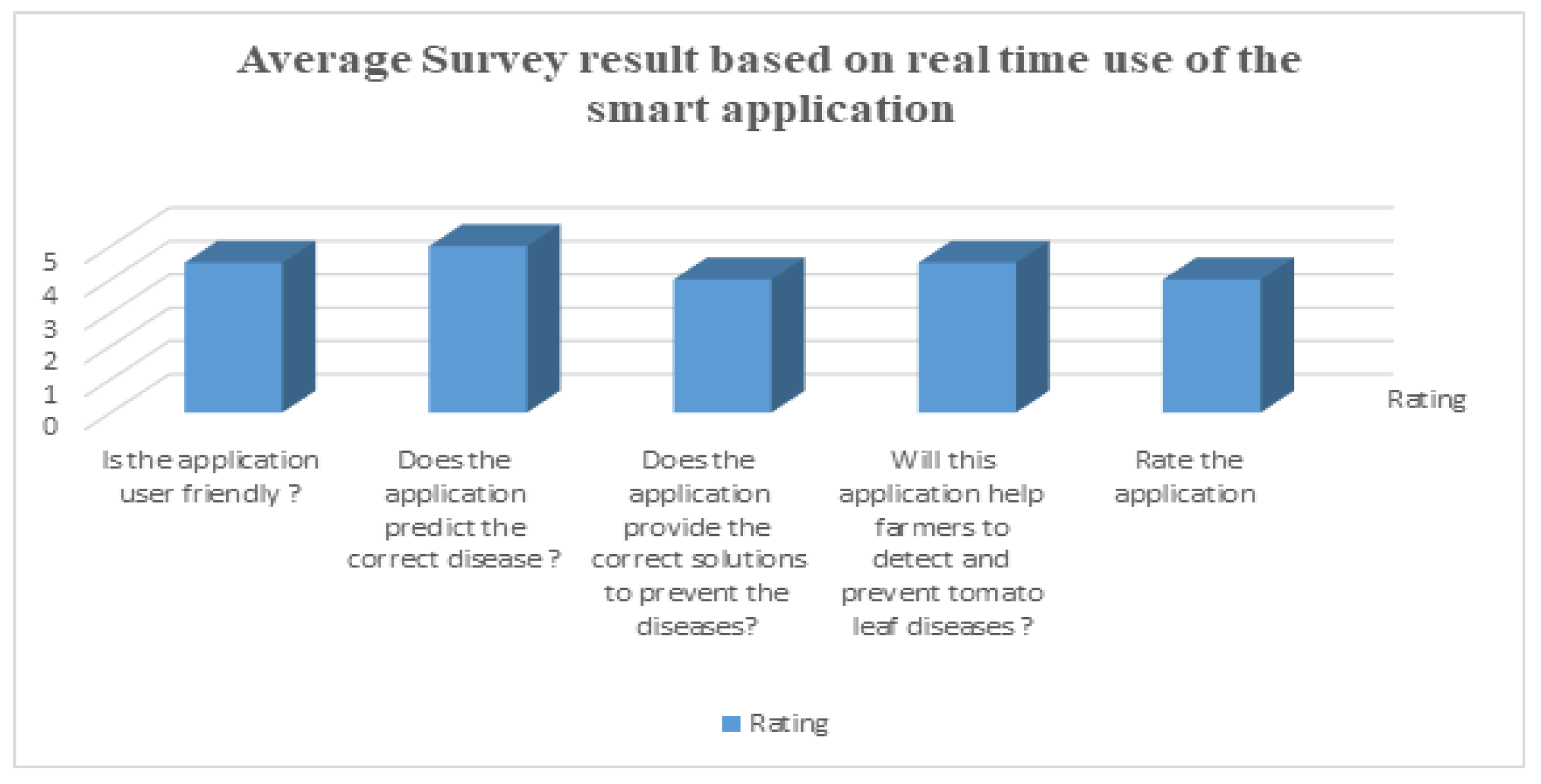

User Feedback on Applications

4.5. Discussion

4.6. Explainability of the Proposed Model

4.6.1. LIME Analysis
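LIME explains a single prediction by switching image segments on and off, querying the model on each perturbed image, and fitting a locally weighted linear model whose coefficients score the segments. The sketch below is a simplified plain-Python version under stated assumptions: `predict` is a hypothetical stand-in that takes a binary mask over segments and returns the class probability, the superpixel segmentation step is omitted, and the proximity kernel uses the fraction of removed segments as its distance rather than the exact kernel of the LIME library.

```python
import math
import random
from typing import Callable, List, Sequence


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = s / m[r][r]
    return x


def lime_weights(predict: Callable[[Sequence[int]], float], n_segments: int,
                 n_samples: int = 500, kernel_width: float = 0.5, seed: int = 0) -> List[float]:
    """Importance of each segment from a locally weighted linear surrogate (simplified LIME)."""
    rng = random.Random(seed)
    masks = [[1] * n_segments]  # always include the unperturbed image
    for _ in range(n_samples - 1):
        masks.append([rng.randint(0, 1) for _ in range(n_segments)])
    dim = n_segments + 1  # intercept plus one coefficient per segment
    xtwx = [[0.0] * dim for _ in range(dim)]
    xtwy = [0.0] * dim
    for z in masks:
        distance = 1 - sum(z) / n_segments  # fraction of segments switched off
        weight = math.exp(-(distance ** 2) / kernel_width ** 2)
        x = [1.0] + [float(v) for v in z]
        y = predict(z)
        # accumulate the weighted normal equations X^T W X beta = X^T W y
        for i in range(dim):
            xtwy[i] += weight * x[i] * y
            for j in range(dim):
                xtwx[i][j] += weight * x[i] * x[j]
    coef = solve(xtwx, xtwy)
    return coef[1:]  # drop the intercept
```

Segments with large positive weights are those that most raise the predicted probability, and they are what a LIME overlay highlights on the leaf image.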

4.6.2. Grad-CAM Analysis
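Grad-CAM weights each feature map A^k of a convolutional layer by the spatially averaged gradient of the class score y^c with respect to that map, α_k^c = (1/Z) Σ_ij ∂y^c/∂A^k_ij, and keeps the positive part of the weighted sum, L^c = ReLU(Σ_k α_k^c A^k). The sketch below implements only this weighting step in plain Python; obtaining the activations and gradients from EfficientNetV2B2 (for example through automatic differentiation) is assumed to happen elsewhere.

```python
from typing import List

Matrix = List[List[float]]


def grad_cam(activations: List[Matrix], gradients: List[Matrix]) -> Matrix:
    """Grad-CAM heatmap from K feature maps A^k and the gradients dy^c/dA^k."""
    h, w = len(activations[0]), len(activations[0][0])
    # alpha_k: global average of the gradients for channel k
    alphas = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    heatmap = [[0.0] * w for _ in range(h)]
    for alpha, a in zip(alphas, activations):
        for i in range(h):
            for j in range(w):
                heatmap[i][j] += alpha * a[i][j]
    # ReLU keeps only regions that increase the class score
    return [[max(0.0, v) for v in row] for row in heatmap]


def normalize(heatmap: Matrix) -> Matrix:
    """Scale the heatmap to [0, 1] for overlaying on the input image."""
    peak = max(max(row) for row in heatmap)
    if peak == 0:
        return heatmap
    return [[v / peak for v in row] for row in heatmap]
```

In practice the small heatmap is upsampled to the input resolution before it is overlaid on the leaf image.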

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional Neural Network |
| Grad-CAM | Gradient-weighted Class Activation Mapping |
| LIME | Local Interpretable Model-Agnostic Explanations |
| .H5 | Hierarchical Data Format 5 |
| DL | Deep Learning |
| ML | Machine Learning |
| TF | Transfer Learning |
| RGB | Red, green and blue |

References

1. Thangaraj, R.; Anandamurugan, S.; Pandiyan, P.; Kaliappan, V.K. Artificial intelligence in tomato leaf disease detection: A comprehensive review and discussion. J. Plant Dis. Prot. 2022, 129, 469–488.

2. Vasavi, P.; Punitha, A.; Rao, T.V.N. Crop leaf disease detection and classification using machine learning and deep learning algorithms by visual symptoms: A review. Int. J. Electr. Comput. Eng. 2022, 12, 2079.

3. Li, L.; Zhang, S.; Wang, B. Plant disease detection and classification by deep learning—A review. IEEE Access 2021, 9, 56683–56698.

4. Basavaiah, J.; Arlene Anthony, A. Tomato leaf disease classification using multiple feature extraction techniques. Wirel. Pers. Commun. 2020, 115, 633–651.

5. Sarkar, C.; Gupta, D.; Gupta, U.; Hazarika, B.B. Leaf disease detection using machine learning and deep learning: Review and challenges. Appl. Soft Comput. 2023, 145, 110534.

6. Ramanjot; Mittal, U.; Wadhawan, A.; Singla, J.; Jhanjhi, N.; Ghoniem, R.M.; Ray, S.K.; Abdelmaboud, A. Plant Disease Detection and Classification: A Systematic Literature Review. Sensors 2023, 23, 4769.

7. Kumar, R.; Chug, A.; Singh, A.P.; Singh, D. A Systematic analysis of machine learning and deep learning based approaches for plant leaf disease classification: A review. J. Sens. 2022, 2022, 3287561.

8. Yamamoto, K.; Togami, T.; Yamaguchi, N. Super-resolution of plant disease images for the acceleration of image-based phenotyping and vigor diagnosis in agriculture. Sensors 2017, 17, 2557.

9. Brahimi, M.; Boukhalfa, K.; Moussaoui, A. Deep learning for tomato diseases: Classification and symptoms visualization. Appl. Artif. Intell. 2017, 31, 299–315.

10. Kumar, Y.; Singh, R.; Moudgil, M.R.; Kamini. A Systematic Review of Different Categories of Plant Disease Detection Using Deep Learning-Based Approaches. Arch. Comput. Methods Eng. 2023, 30, 4757–4779.

11. Tian, X.; Meng, X.; Wu, Q.; Chen, Y.; Pan, J. Identification of tomato leaf diseases based on a deep neuro-fuzzy network. J. Inst. Eng. (India) Ser. A 2022, 103, 695–706.

12. Bhujel, A.; Kim, N.E.; Arulmozhi, E.; Basak, J.K.; Kim, H.T. A lightweight Attention-based convolutional neural networks for tomato leaf disease classification. Agriculture 2022, 12, 228.

13. Mkonyi, L.; Rubanga, D.; Richard, M.; Zekeya, N.; Sawahiko, S.; Maiseli, B.; Machuve, D. Early identification of Tuta absoluta in tomato plants using deep learning. Sci. Afr. 2020, 10, e00590.

14. John, S.; Rose, A.L. Machine learning techniques in plant disease detection and classification-a state of the art. INMATEH-Agric. Eng. 2021, 65, 362–372.

15. Tugrul, B.; Elfatimi, E.; Eryigit, R. Convolutional neural networks in detection of plant leaf diseases: A review. Agriculture 2022, 12, 1192.

16. Chopra, G.; Whig, P. Analysis of Tomato Leaf Disease Identification Techniques. J. Comput. Sci. Eng. (JCSE) 2021, 2, 98–103.

17. Hidayah, A.N.; Radzi, S.A.; Razak, N.A.; Saad, W.H.M.; Wong, Y.; Naja, A.A. Disease Detection of Solanaceous Crops Using Deep Learning for Robot Vision. J. Robot. Control (JRC) 2022, 3, 790–799.

18. Coulibaly, S.; Kamsu-Foguem, B.; Kamissoko, D.; Traore, D. Deep neural networks with transfer learning in millet crop images. Comput. Ind. 2019, 108, 115–120.

19. Chen, X.; Zhou, G.; Chen, A.; Yi, J.; Zhang, W.; Hu, Y. Identification of tomato leaf diseases based on combination of ABCK-BWTR and B-ARNet. Comput. Electron. Agric. 2020, 178, 105730.

20. Zhang, L.; Zhou, G.; Lu, C.; Chen, A.; Wang, Y.; Li, L.; Cai, W. MMDGAN: A fusion data augmentation method for tomato-leaf disease identification. Appl. Soft Comput. 2022, 123, 108969.

21. Zaki, S.Z.M.; Zulkifley, M.A.; Stofa, M.M.; Kamari, N.A.M.; Mohamed, N.A. Classification of tomato leaf diseases using MobileNet v2. IAES Int. J. Artif. Intell. 2020, 9, 290.

22. Shoaib, M.; Shah, B.; El-Sappagh, S.; Ali, A.; Ullah, A.; Alenezi, F.; Gechev, T.; Hussain, T.; Ali, F. An advanced deep learning models-based plant disease detection: A review of recent research. Front. Plant Sci. 2023, 14, 1158933.

23. Tan, M.; Le, Q. EfficientNetV2: Smaller models and faster training. In Proceedings of the International Conference on Machine Learning, Virtual Event, 18–24 July 2021; pp. 10096–10106.

24. Parez, S.; Dilshad, N.; Alghamdi, N.S.; Alanazi, T.M.; Lee, J.W. Visual Intelligence in Precision Agriculture: Exploring Plant Disease Detection via Efficient Vision Transformers. Sensors 2023, 23, 6949.

25. Ghosh, P.; Mondal, A.K.; Chatterjee, S.; Masud, M.; Meshref, H.; Bairagi, A.K. Recognition of Sunflower Diseases Using Hybrid Deep Learning and Its Explainability with AI. Mathematics 2023, 11, 2241.

26. Khan, H.; Haq, I.U.; Munsif, M.; Mustaqeem; Khan, S.U.; Lee, M.Y. Automated wheat diseases classification framework using advanced machine learning technique. Agriculture 2022, 12, 1226.

27. Javeed, D.; Gao, T.; Saeed, M.S.; Kumar, P. An Intrusion Detection System for Edge-Envisioned Smart Agriculture in Extreme Environment. IEEE Internet Things J. 2023.

28. Kaustubh, B. Tomato Leaf Disease Detection. 2020. Available online: https://www.kaggle.com/datasets/kaustubhb999/tomatoleaf (accessed on 30 June 2023).

29. Sun, J.; Tan, W.; Mao, H.; Wu, X.; Chen, Y.; Wang, L. Recognition of multiple plant leaf diseases based on improved convolutional neural network. Trans. Chin. Soc. Agric. Eng. 2017, 33, 209–215.

30. Agarwal, M.; Singh, A.; Arjaria, S.; Sinha, A.; Gupta, S. ToLeD: Tomato leaf disease detection using convolution neural network. Procedia Comput. Sci. 2020, 167, 293–301.

31. Zhao, S.; Peng, Y.; Liu, J.; Wu, S. Tomato leaf disease diagnosis based on improved convolution neural network by attention module. Agriculture 2021, 11, 651.

32. Tan, L.; Lu, J.; Jiang, H. Tomato leaf diseases classification based on leaf images: A comparison between classical machine learning and deep learning methods. AgriEngineering 2021, 3, 542–558.

33. Rangarajan, A.K.; Purushothaman, R.; Ramesh, A. Tomato crop disease classification using pre-trained deep learning algorithm. Procedia Comput. Sci. 2018, 133, 1040–1047.

34. Naik, B.N.; Malmathanraj, R.; Palanisamy, P. Detection and classification of chilli leaf disease using a squeeze-and-excitation-based CNN model. Ecol. Inform. 2022, 69, 101663.

35. Kurmi, Y.; Saxena, P.; Kirar, B.S.; Gangwar, S.; Chaurasia, V.; Goel, A. Deep CNN model for crops' diseases detection using leaf images. Multidimens. Syst. Signal Process. 2022, 33, 981–1000.

36. Paymode, A.S.; Malode, V.B. Transfer learning for multi-crop leaf disease image classification using convolutional neural network VGG. Artif. Intell. Agric. 2022, 6, 23–33.

37. Ahmad, I.; Hamid, M.; Yousaf, S.; Shah, S.T.; Ahmad, M.O. Optimizing pretrained convolutional neural networks for tomato leaf disease detection. Complexity 2020, 2020, 8812019.

38. Zhou, C.; Zhou, S.; Xing, J.; Song, J. Tomato leaf disease identification by restructured deep residual dense network. IEEE Access 2021, 9, 28822–28831.

39. Trivedi, N.K.; Gautam, V.; Anand, A.; Aljahdali, H.M.; Villar, S.G.; Anand, D.; Goyal, N.; Kadry, S. Early detection and classification of tomato leaf disease using high-performance deep neural network. Sensors 2021, 21, 7987.

40. Wu, Q.; Chen, Y.; Meng, J. DCGAN-based data augmentation for tomato leaf disease identification. IEEE Access 2020, 8, 98716–98728.

41. Lin, C.; Li, L.; Luo, W.; Wang, K.C.; Guo, J. Transfer learning based traffic sign recognition using inception-v3 model. Period. Polytech. Transp. Eng. 2019, 47, 242–250.

42. Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A survey of convolutional neural networks: Analysis, applications, and prospects. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 6999–7019.

43. Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

44. Garreau, D.; Luxburg, U. Explaining the explainer: A first theoretical analysis of LIME. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Online, 26–28 August 2020; pp. 1287–1296.

| Authors | Datasets | Model | Accuracy |
|---|---|---|---|
| Agarwal et al. [30] | Plant Village [29] | Convolutional Neural Network | 91.20% |
| Ahmad et al. [37] | Laboratory-Based | InceptionV3 | 99.60% |
| Zhao et al. [31] | Plant Village [29] | SE-ResNet50 | 96.81% |
| Zhou et al. [38] | Tomato leaf disease | RRDN | 95% |
| Trivedi et al. [39] | Tomato leaf disease | Convolutional Neural Network | 98.58% |
| Wu et al. [40] | Plant Village [29] | GoogLeNet | 94.33% |
| Chen et al. [19] | Hunan Vegetable Institute | B-ARNet | 88.43% |

| Class Names | Training Images | Validation Images | Test Images | Total Images |
|---|---|---|---|---|
| Mosaic Virus | 800 | 100 | 100 | 1000 |
| Target Spot | 800 | 100 | 100 | 1000 |
| Bacterial Spot | 800 | 100 | 100 | 1000 |
| Yellow Leaf Curl Virus | 800 | 100 | 100 | 1000 |
| Late Blight | 800 | 100 | 100 | 1000 |
| Leaf Mold | 800 | 100 | 100 | 1000 |
| Early Blight | 800 | 100 | 100 | 1000 |
| Spider Mites Two-Spotted Spider Mite | 800 | 100 | 100 | 1000 |
| Septoria Leaf Spot | 800 | 100 | 100 | 1000 |
| Healthy | 800 | 100 | 100 | 1000 |
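Each class in this dataset is split 800/100/100 into training, validation, and test images. One way to reproduce such a per-class (stratified) split is sketched below in plain Python; the file names and the seed are hypothetical, and this is not necessarily the splitting procedure the authors used.

```python
import random
from typing import Dict, List, Tuple

Split = Tuple[List[str], List[str], List[str]]


def stratified_split(files_by_class: Dict[str, List[str]], n_train: int, n_val: int,
                     n_test: int, seed: int = 42) -> Dict[str, Split]:
    """Shuffle each class separately and cut it into train/validation/test lists."""
    rng = random.Random(seed)
    splits: Dict[str, Split] = {}
    for name, files in files_by_class.items():
        if len(files) < n_train + n_val + n_test:
            raise ValueError(f"class {name!r} has only {len(files)} images")
        # sort first so the shuffle does not depend on the order the files were listed in
        shuffled = sorted(files)
        rng.shuffle(shuffled)
        train = shuffled[:n_train]
        val = shuffled[n_train:n_train + n_val]
        test = shuffled[n_train + n_val:n_train + n_val + n_test]
        splits[name] = (train, val, test)
    return splits
```

Splitting each class on its own keeps the class proportions identical across the three subsets, which is what produces the uniform rows in the table.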

| Hyperparameters | Short Description |
|---|---|
| Batch Normalization | Stabilizes and accelerates training by normalizing the inputs of each layer over a mini-batch. |
| Learning Rate | Controls how quickly the model adapts its parameters during training. |
| Kernel Regularizer | Penalizes large weight values to prevent overfitting. |
| Activity Regularizer | Penalizes large activation values (layer outputs) to prevent overfitting. |
| Bias Regularizer | Penalizes large bias values to improve generalization and prevent overfitting. |
| Activation | Activation function that introduces non-linearity by transforming neuron outputs. |
| Adamax | Optimization algorithm; a variant of Adam that scales updates by the infinity norm of past gradients. |
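Adamax, listed above as the optimizer, replaces Adam's second-moment estimate with an exponentially weighted infinity norm of past gradients. The plain-Python sketch below applies the update rule to a one-dimensional toy problem, minimizing (x - 3)^2. The β1, β2, and ε values follow commonly used defaults, and the learning rate of 0.1 is chosen only so the toy problem converges quickly; neither reflects the settings used to train the model.

```python
from typing import Callable


def adamax_minimize(grad: Callable[[float], float], x0: float, lr: float = 0.1,
                    beta1: float = 0.9, beta2: float = 0.999, eps: float = 1e-7,
                    steps: int = 1000) -> float:
    """Run Adamax on a scalar parameter and return its final value."""
    x, m, u = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g               # first-moment (mean) estimate
        u = max(beta2 * u, abs(g))                    # exponentially weighted infinity norm
        x -= (lr / (1 - beta1 ** t)) * m / (u + eps)  # bias-corrected step
    return x


x_final = adamax_minimize(lambda x: 2 * (x - 3), x0=0.0)  # gradient of (x - 3)^2
```

Because the denominator is a running maximum rather than a running mean of squared gradients, the step size is bounded by roughly the learning rate, which makes Adamax less sensitive to occasional large gradients.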

| Fold | Training Accuracy | Validation Accuracy | Test Accuracy | Required Time (Minutes) |
|---|---|---|---|---|
| 1 | 99.03% | 99.20% | 99.20% | 36.00 |
| 2 | 99.01% | 99.40% | 98.90% | 40.18 |
| 3 | 99.14% | 99.40% | 99.50% | 42.56 |
| 4 | 98.91% | 99.40% | 98.10% | 37.10 |
| 5 | 99.02% | 98.70% | 99.10% | 42.53 |
| Average | 99.02% | 99.22% | 98.96% | 39.67 |
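The table reports five folds and their mean. The plain-Python sketch below shows one way to generate shuffled k-fold indices and how the averages in the last row follow from the per-fold values. The dataset size of 10,000 images (10 classes of 1,000) and the shuffling seed are assumptions for illustration.

```python
import random
from statistics import mean
from typing import List, Tuple


def k_fold_indices(n_samples: int, k: int, seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Shuffle range(n_samples) and split it into k folds; each fold is held out once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in fold_sizes:
        held_out = indices[start:start + size]
        rest = indices[:start] + indices[start + size:]
        folds.append((rest, held_out))
        start += size
    return folds


folds = k_fold_indices(10_000, k=5)

# Per-fold test accuracies (%) and training times (minutes) from the table.
test_accuracy = [99.20, 98.90, 99.50, 98.10, 99.10]
minutes = [36.0, 40.18, 42.56, 37.10, 42.53]
print(round(mean(test_accuracy), 2), round(mean(minutes), 2))  # 98.96 39.67
```

Because all folds are the same size, the plain mean of the per-fold values coincides with the sample-weighted mean.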

| Name | Precision (%) | Recall (%) | F1-Score (%) | Accuracy (%) |
|---|---|---|---|---|
| Bacterial Spot | 100 | 100 | 100 | 100 |
| Early Blight | 100 | 100 | 100 | 100 |
| Late Blight | 100 | 100 | 100 | 100 |
| Leaf Mold | 100 | 100 | 100 | 100 |
| Septoria Leaf Spot | 100 | 100 | 100 | 100 |
| Spider Mites Two-Spotted Spider Mite | 100 | 100 | 100 | 100 |
| Target Spot | 100 | 100 | 100 | 100 |
| Yellow Leaf Curl Virus | 100 | 100 | 100 | 100 |
| Mosaic Virus | 100 | 100 | 100 | 100 |
| Healthy | 100 | 100 | 100 | 100 |
| Macro average | 100 | 100 | 100 | 100 |
| Weighted average | 100 | 100 | 100 | 100 |
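Precision, recall, and F1-score can all be read off a confusion matrix: precision divides each diagonal entry by its column sum, recall divides it by its row sum, and F1 is their harmonic mean. The macro average weights every class equally, while the weighted average weights classes by their number of true samples. A plain-Python sketch of these standard definitions, assuming rows index the true class:

```python
from typing import Dict, List


def classification_report(cm: List[List[int]]) -> Dict[str, object]:
    """Per-class precision/recall/F1 plus macro and support-weighted averages.

    cm[i][j] counts samples of true class i predicted as class j.
    """
    n = len(cm)
    support = [sum(row) for row in cm]                              # true samples per class
    predicted = [sum(cm[i][j] for i in range(n)) for j in range(n)]  # predictions per class
    per_class = []
    for c in range(n):
        tp = cm[c][c]
        precision = tp / predicted[c] if predicted[c] else 0.0
        recall = tp / support[c] if support[c] else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_class.append((precision, recall, f1))
    total = sum(support)
    macro = tuple(sum(m[k] for m in per_class) / n for k in range(3))
    weighted = tuple(sum(m[k] * s for m, s in zip(per_class, support)) / total for k in range(3))
    accuracy = sum(cm[c][c] for c in range(n)) / total
    return {"per_class": per_class, "macro": macro, "weighted": weighted, "accuracy": accuracy}
```

A perfectly diagonal matrix, for instance one with 100 correctly classified test images per class, gives 1.0 for every entry, matching the 100% values in the table.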

| Class Names | Training Images | Validation Images | Test Images | Total Images |
|---|---|---|---|---|
| Mosaic Virus | 303 | 37 | 33 | 373 |
| Target Spot | 1120 | 157 | 127 | 1404 |
| Bacterial Spot | 1720 | 215 | 192 | 2127 |
| Yellow Leaf Curl Virus | 4758 | 310 | 289 | 5357 |
| Late Blight | 1580 | 157 | 172 | 1909 |
| Leaf Mold | 761 | 105 | 86 | 952 |
| Early Blight | 800 | 110 | 90 | 1000 |
| Spider Mites Two-Spotted Spider Mite | 1375 | 150 | 151 | 1676 |
| Septoria Leaf Spot | 1433 | 178 | 160 | 1771 |
| Healthy | 1287 | 160 | 144 | 1591 |
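Unlike the 1,000-images-per-class split in the earlier table, this Plant Village split is imbalanced. The short plain-Python tally below uses only the counts from the table to compute the split totals and the ratio between the largest and smallest classes.

```python
# (train, validation, test) image counts per class, from the Plant Village split table.
counts = {
    "Mosaic Virus": (303, 37, 33),
    "Target Spot": (1120, 157, 127),
    "Bacterial Spot": (1720, 215, 192),
    "Yellow Leaf Curl Virus": (4758, 310, 289),
    "Late Blight": (1580, 157, 172),
    "Leaf Mold": (761, 105, 86),
    "Early Blight": (800, 110, 90),
    "Spider Mites Two-Spotted Spider Mite": (1375, 150, 151),
    "Septoria Leaf Spot": (1433, 178, 160),
    "Healthy": (1287, 160, 144),
}

class_totals = {name: sum(split) for name, split in counts.items()}
split_totals = [sum(split[i] for split in counts.values()) for i in range(3)]
grand_total = sum(class_totals.values())
largest = max(class_totals, key=class_totals.get)
smallest = min(class_totals, key=class_totals.get)

print(split_totals, grand_total)  # [15137, 1579, 1444] 18160
print(f"{largest} / {smallest}: {class_totals[largest] / class_totals[smallest]:.1f}x")
```

The largest class (Yellow Leaf Curl Virus) has roughly 14 times as many images as the smallest (Mosaic Virus), so per-class metrics are more informative here than overall accuracy alone.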

| Authors | Datasets | Model | Accuracy Rate | Year |
|---|---|---|---|---|
| Rangarajan et al. [33] | Plant Village Dataset [29] | AlexNet | 97.49% | 2018 |
| Agarwal et al. [30] | Plant Village Dataset [29] | Convolutional Neural Network | 91.20% | 2020 |
| Zhao et al. [31] | Plant Village Dataset [29] | SE-ResNet50 | 96.81% | 2021 |
| Tan et al. [32] | Plant Village Dataset [29] | ResNet34 | 99.70% | 2021 |
| Naik et al. [34] | Plant Village Dataset [29] | SECNN | 97.90% | 2022 |
| Kurmi et al. [35] | Plant Village Dataset [29] | CNN | 92.60% | 2022 |
| Paymode et al. [36] | Plant Village Dataset [29] | VGG16 | 95.71% | 2022 |
| Proposed approach | Plant Village Dataset [29] | EfficientNetV2B2 | 99.80% | - |

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Debnath, A.; Hasan, M.M.; Raihan, M.; Samrat, N.; Alsulami, M.M.; Masud, M.; Bairagi, A.K. A Smartphone-Based Detection System for Tomato Leaf Disease Using EfficientNetV2B2 and Its Explainability with Artificial Intelligence (AI). Sensors 2023, 23, 8685. https://doi.org/10.3390/s23218685
