Abstract

To address oblique deformation, character adhesion, and character similarity of Uyghur text in scene images, this paper proposes a scene Uyghur recognition model with enhanced visual prediction. First, the content-aware correction network TPS++ performs feature-level correction of skewed text. Then, ABINet is used as the base recognition network, and the U-Net structure in its vision model is improved to aggregate horizontal features, suppress multiple activations, better describe the spatial characteristics of character positions, and alleviate character adhesion. Finally, a visual masking semantic awareness (VMSA) module is added. By masking the corresponding visual features on the attention map, it guides the vision model to consider language information in the visual space and thus produce more accurate visual predictions. This module not only reduces the correction load of the language model but also helps distinguish similar characters using language information. The effectiveness of each improvement is verified by ablation experiments, and the model is compared with common scene text recognition methods and scene Uyghur recognition methods on the self-built scene Uyghur dataset.

1. Introduction

Texts in natural scenes are highly generalizable and highly logical, which can intuitively convey high-level semantic information and help people analyze and understand scene content [1]. Scene Uyghur can be seen everywhere in public places in Xinjiang, such as signboards, billboards, and banners on both sides of the roads. Accurate recognition of scene Uyghur can not only provide a solid technical foundation for downstream artificial intelligence applications, such as machine translation and intelligent transportation [2], but also promote the development of information construction in Xinjiang.

In the past few years, scene text recognition (STR) [3,4] has focused mainly on Chinese and English, and comparatively little work has addressed Uyghur. In recent years, driven by the “One Belt, One Road” strategy, relatively mature technologies have been developed for printed and handwritten Uyghur recognition, but there are still few studies on scene Uyghur recognition.

There are two difficulties in scene Uyghur recognition. On the one hand, there is a lack of public scene Uyghur datasets in the academic community [5]. Most of the work [6,7,8] is performed using different private datasets, so the relative quality of any method cannot be compared fairly. In addition, many models [5,6] are applied to synthetic datasets, and synthetic images cannot reproduce the complexity and variability of natural images, resulting in the poor practical application of models.

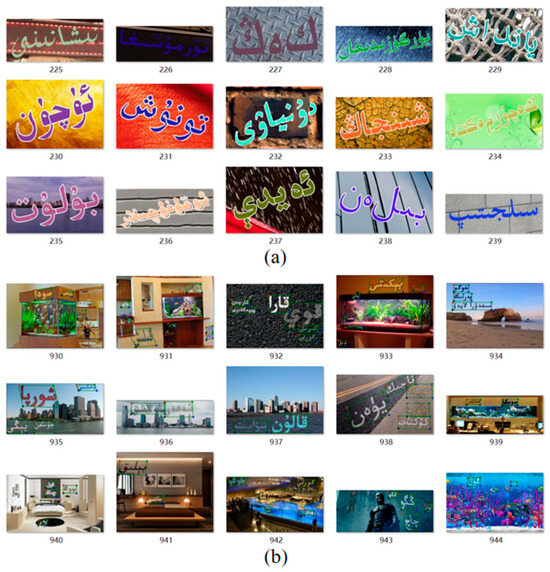

Aiming at the dataset problem, this paper builds a real-scene Uyghur image dataset based on the existing dataset in the laboratory and carries out word-level annotation. The dataset contains 4000 Street View images, including road signs, billboards, and other street scenes. Some data augmentation strategies are used to expand the training set of this real scene Uyghur image dataset. In addition, this paper also constructs a Uyghur image dataset of synthetic scene by combining two text image synthesis methods, with a total of 200,000 synthetic images, to pre-train the model.

On the other hand, in addition to the challenges common to computer vision tasks, such as complex backgrounds, blur, and occlusion, scene Uyghur has its own unique challenges. In terms of morphological structure, Uyghur is a typical agglutinative language [9], and its characters are joined together to form connected segments, a feature that is particularly common in scenes. The width and height of characters follow no uniform rule and are relatively random, and the shape and structure vary greatly [10], which hinders the application of general character recognition algorithms. In terms of writing rules, Uyghur is written from right to left along a baseline, and many letters share the same main body on the baseline; they can be distinguished only by subtle differences in the number and position of the dots or other marks above and below the baseline. Adding or omitting a single dot or mark changes the shape of the character, making the word unspellable or changing its meaning [9] and causing recognition errors. Figure 1 shows an example of these features of scene Uyghur.

Figure 1.

Scene Uyghur features example diagram. Black line: write along the baseline from right to left. Black arrow: writing direction. Green box: characters are glued together to form a concatenated segment. Blue box: example of similar characters.

Aiming at visual problems such as the oblique deformation of characters, character adhesion and character similarity, this paper believes that the visual recognition ability of the model should be improved. Firstly, a set of text recognition models based on deep learning [3,4,11,12,13] were tested and analyzed on the real scene Uyghur dataset (see Section 4.6.1 for details), and ABINet [12] was identified as the basic recognition network. The first input of ABINet’s language model is visual prediction, which is corrected according to the learned language rules. However, the vision model only pays attention to visual texture information. For challenging Uyghur scenes, it is difficult to generate high-precision visual prediction. Poor-quality visual predictions increase the correction load on the language model, limiting the ability of recognition networks. Inspired by VisionLAN [13], this paper explores how to use language information in the visual space to improve the accuracy of visual predictions and improve ABINet’s vision model.

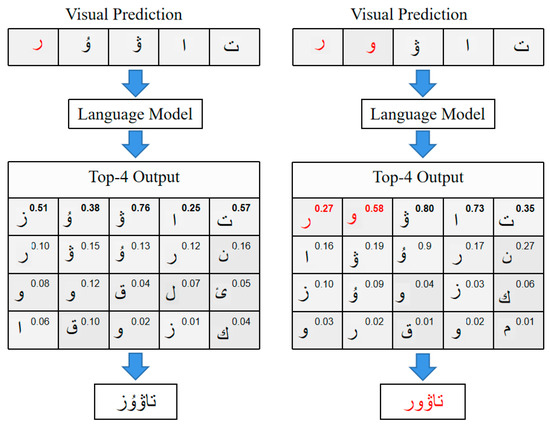

The top four probabilities output by the language model are visualized in Figure 2. On the left side of Figure 2, the input word is corrected by the language model to yield the output shown there. However, as the number of wrong characters in the input visual prediction increases, correction by the language model becomes challenging and may lead to wrong corrections (see the input word and the corrected word on the right side of Figure 2).

Figure 2.

The top four recognition results of the language model. The input words of the left and right images and the label are shown in the figure. Numbers in the upper right corner indicate the probability of the output character, and red characters indicate incorrect predictions.

The main contributions of this paper are as follows:

- (1)

- This paper constructs a real scene Uyghur image dataset and a synthetic scene Uyghur image dataset, open at https://github.com/kongfnajie/SUST-and-RUST-datasets-for-Uyghur-STR, accessed on 31 August 2023.

- (2)

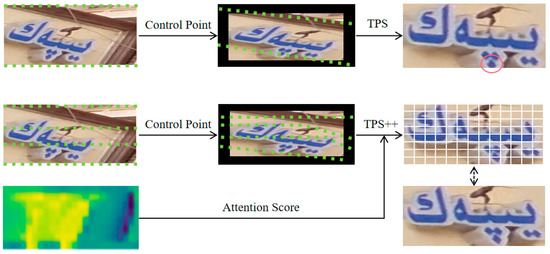

- An attention-enhanced correction network TPS++ is added before the recognition network to correct the inclined Uyghur characters in the scene image into a horizontal form, which is easier to be read by subsequent recognizers.

- (3)

- The vision model of ABINet is improved. A Transformer network is introduced in U-Net to enhance the information flow along the baseline direction, aggregate horizontal features, highlight the spatial features of character positions, and alleviate the problem of character adhesion.

- (4)

- A visual masking semantic awareness module is proposed to occlude the visual features of the selected character area, and the internal relationship between local visual features is used to guide the vision model to consider the language information in the visual space to reason about the occluded characters, so as to obtain high-precision visual prediction and alleviate the correction load of the language model. When visual cues are confused, language information is used to highlight discriminative visual cues to better distinguish similar characters.

The remainder of this paper is arranged as follows. Section 2 introduces the research status of scene text recognition, text correction, and Uyghur recognition. Section 3 describes the pipeline of our model and then details each improvement in order. Section 4 shows the results of ablation experiments and comparison experiments, verifying the effectiveness of each improvement and the superiority of our method compared with other methods. Section 5 summarizes the work and offers prospects.

2. Related Work

2.1. Scene Text Recognition

It has become a trend to use language information in scene text recognition [11]. According to whether language information is used, this paper divides text recognition methods based on deep learning into two categories: language-free methods and language-aware methods.

2.1.1. Language-Free Methods

Language-free methods use only the visual features of images for recognition. Shi et al. proposed a CTC-based [14] method that uses a CNN to extract visual features, an RNN to model the feature sequence, and CTC decoding for prediction. Bai Xiang’s team applied this method in CRNN [3] for the first time, achieving recognition accuracy far beyond that of traditional methods. Most subsequent research has adopted the same strategy. Such models have four main stages: image preprocessing, feature extraction, sequence processing, and prediction. Along this pipeline, purely visual recognition models have been advanced by integrating multiple RNNs [15], optimizing the CTC algorithm [16], guiding CTC decoding with GTC [17], and extending ViT [18] to SVTR [19]. Segmentation-based methods [20,21,22] treat recognition as a pixel-level classification task; they are not limited by text layout but require character-level annotation and are sensitive to segmentation noise. Because language-free methods rely solely on visual information, they perform poorly on low-quality images.

2.1.2. Language-Aware Methods

Language-aware methods use language information to assist recognition. The encoder-decoder architecture [23] integrates recognition cues of visual and language information, the encoder extracts image features, and the decoder predicts characters by attending to relevant information from one-dimensional image features [24] or two-dimensional image features [25]. For example, Lee et al. [26] introduces the attention mechanism to scene text recognition for the first time and learns language information by combining one-dimensional image feature sequence and character sequence embedding. Attention-based methods [27] use RNN [4] or Transformer [28,29] for language modeling. The RNN-based language model usually infers serially from left to right [30], using the characters predicted by the previous time step to model language information and identify characters one by one. This method requires expensive repetitive calculations, and the learned language knowledge is limited. Therefore, a parallel inference method [12] is introduced, which processes the sequence in parallel in the decoder and simultaneously deduces the language information of each character. Yu et al. proposes a semantic reasoning network SRN [11], which integrates visual information and semantic information and has a good recognition effect on irregular text.

The above methods also consider visual features while modeling language features. It is unknown what language features are really learned, so explicit language modeling methods are proposed [27,31,32]. ABINet [12] decouples the vision model and the language model and can pre-train the language model to obtain rich prior knowledge. The language model is an iterative bidirectional cloze network, which not only adapts to the problem of the direction of Uyghur writing from right to left, but also provides two-way language guidance for recognition. The language model iteratively corrects the prediction results and alleviates the impact of noise input, which is beneficial for scene Uyghur recognition. Therefore, ABINet is used for scene Uyghur recognition in this paper. In addition, CDistNet [31] also designs a dedicated location module to alleviate the problem of attention drift. Chu et al. [33] proposes an iterative visual modeling network, IterNet, to improve the visual prediction ability.

2.2. Text Correction

Irregular text is difficult to recognize partly because it is not presented in a canonical form and therefore appears distorted [34]. Popular methods usually use image preprocessing modules [35] to make the input text image easier to recognize. For example, Shi et al. [4] used the learnable spatial transformer network STN [36] to rectify irregular text into a canonical form, thereby improving recognition accuracy. Luo et al. proposed a pixel-level rectification network, the multi-object rectified attention network MORAN [37], but its correction of horizontal distortion is poor. Recently, Zheng et al. [34] proposed TPS++, which is better suited to scene text recognition; it incorporates an attention mechanism into text correction to generate natural, feature-level corrections that are easier for subsequent recognizers to read. Following this method, this paper adopts dedicated gated attention in the horizontal and vertical dimensions, respectively, and finds that for Uyghur written along a horizontal baseline, horizontal attention better locates characters, while vertical attention better distinguishes characters.

2.3. Uyghur Recognition

2.3.1. Uyghur Printing and Handwriting Recognition

At present, Uyghur recognition has relatively mature technologies in printing [38,39,40,41,42,43,44,45,46,47,48] and handwriting [49,50,51,52]. The main research teams include Xinjiang University, Tsinghua University, Xidian University and so on.

Traditional Uyghur recognition methods [38] mostly focus on more effective character segmentation algorithms and more robust feature extraction. Chen Qing performed heuristic feature extraction and coding for Uyghur characters, implemented a four-level classifier based on discriminant rules, and completed character recognition with template matching [39]. Bai Yunhui used the traditional feature-plus-classifier approach to realize word recognition [40]. Professor Ding Xiaoqing’s team pioneered segmentation-free Uyghur recognition based on the hidden Markov model [10]. Lang Xiao extracted the gradient and directional line element features of Uyghur characters and used a Euclidean distance classifier to complete the recognition task [41]. Halimulati conducted word-level recognition based on the local and overall visual features of Uyghur words and used an N-gram language model to correct the recognition results [42].

The application of deep learning has promoted the development of Uyghur recognition. Li et al. first applied a CTC-based method to Uyghur recognition [43]. Li Dandan designed a “first character-word” two-stage cascade classifier [45]. Chen Yang improved the decoding order of CRNN to adapt the network to the right-to-left writing order of Uyghur [46]. The team of Professor Eskar Aimdullah used Latin forms to label Uyghur word images for text sequence recognition [47]. The TRBGA model proposed by Tang et al. achieved accurate recognition of printed Uyghur characters [48]. Zhang et al. proposed an offline signature identification method based on texture feature fusion, which classifies and identifies signature images by training random forests [49]. The team of Professor Maire Ibrain combined RNN and CTC to build an end-to-end online Uyghur handwriting recognition system [50].

2.3.2. Scene Uyghur Recognition

Compared with printing and handwriting recognition, there is little research on scene Uyghur recognition. The outstanding work in recent years is as follows. Xiong Lijian used a spatial transformer network (TPS-STN) for preprocessing and an attention-based dense convolutional recognition network (ABDCRN) for recognition, which improves the accuracy of the self-built dataset by 11.29% compared with the baseline CRNN [6]. Fu Zilong proposes to introduce a parallel contextual attention mechanism on the parallel encoder-decoder framework to improve the visual feature alignment ability of the model, and the accuracy of the synthesized dataset is 9.00% higher than that of the baseline CRNN, but the recognition effect is poor on the samples with low contrast [5]. The team of Professor Maire Ibrain carried out text sequence recognition on Uyghur characters in images with simple backgrounds, trained them on a synthetic dataset, and evaluated them on a self-built dataset, and the accuracy was 8.20% higher than that of baseline CRNN [8]. The team of Professor Kurban Wubuli built a scene text recognition algorithm with embedded coordinate attention for multi-directional Uyghur, extracting the spatial texture information of the image, and improved the accuracy of the self-built dataset by 2.34% compared with the baseline ASTER [7]. In general, most studies use common recognition models, and these methods achieve good recognition results on their own self-built datasets. However, the recognition effect of Uyghur characters in actual scenes is not satisfactory, and there is still a large space for development in this field.

3. Methodology

In this section, the flow of the methods is first presented, and then each improvement is detailed in order.

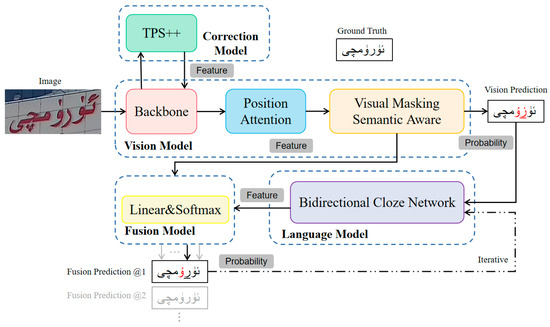

3.1. Process

As shown in Figure 3, the proposed model is based on Transformer’s encoder–decoder framework and consists of four parts: Correction Model (CM), Vision Model (VM), Language Model (LM) and Fusion Model (FM). First, TPS++ is used to perform feature-level correction on the text in the input image, and the correction result is fed back to the two-dimensional visual features. Then, the visual features are horizontally aggregated through the improved small U-Net. In the training phase, through the Visual Masking Semantic Awareness (VMSA) module, the visual features of the selected characters are occluded to obtain a masked feature map, and the occluded characters are reasoned to obtain visual probability predictions. During the test phase, the VMSA module is removed, and the visual probability prediction is performed. Then, the visual prediction is inputted into LM for language rule learning to obtain semantic features. FM combines visual features and semantic features into fusion features for fusion probability prediction. Finally, the prediction results are input into LM for repeated iterations to correct the prediction results.

Figure 3.

Proposed Text Recognition Model Framework. The red font is the wrong prediction.

3.2. Correction Model

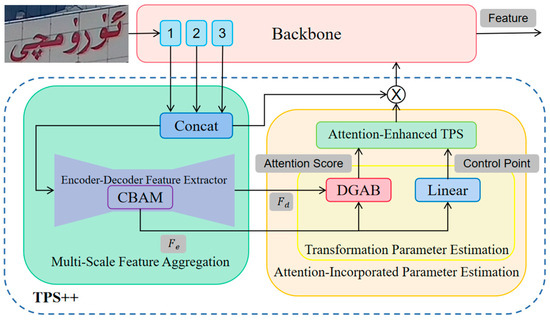

To address the oblique deformation of scene Uyghur, TPS [35] alone gives unsatisfactory correction results because its parameters are computed without regard to text content: the correction attends only to the geometric structure of the text, which causes character deformation and out-of-bounds text. This paper therefore adopts TPS++ [34], which adds attention mechanisms and uses text content to constrain the movement of control points, suppressing deformation and out-of-bounds effects.

The framework of TPS++ is shown in Figure 4. It consists of two parts, Multi-Scale Feature Aggregation (MSFA) and Attention-Incorporated Parameter Estimation (AIPE), which are used for visual feature aggregation and attention-enhanced TPS parameter estimation, respectively.

Figure 4.

Architecture of TPS++. CBAM [53] is channel–space joint attention, which is often used to highlight important features. DGAB [34] is a specially designed dynamic gated attention block.

Input image , where H and W are the height and width of the image, and D is the feature dimension. The feature maps of the first three blocks in the backbone network are input to MSFA, scaled to the same size, and concatenated from the channel dimension. Then, a codec-based feature extractor is applied for feature extraction. The shrinking path is used to extract aggregated features in shallow blocks containing more position-related cues—that is, encoding features —and the expanding path is used to extract general visual features—that is, decoding features .

AIPE uses these two features to predict the movement of control points and calculate content-based attention scores. The control points are initialized in a grid-like manner, uniformly distributed on the feature map. is used to predict the offset of each control point in the x-dimension and y-dimension, combined with the initialization coordinates, to obtain a set of regression control points . The attention score matrix between control points and text is adaptively computed, and the process can be formalized as follows:

where is implemented by a dynamic gated attention block DGAB [34], and stands for transpose.

Attention-enhanced TPS correction is performed based on control points and attention scores, and the corrected features are fed back to the backbone. TPS++ shares the visual feature extraction process with the recognition network, which saves a lot of resources compared with the correctors [4], which extract features separately in the correction stage.

3.3. Vision Model

VM is divided into three phases: feature extraction, sequence representation and vision prediction. The corrected features are fed back to the backbone and continue to participate in subsequent feature extraction. Then, the visual features are obtained through a three-layer Transformer.

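The position attention module generates attention maps based on the query paradigm:

$$A = \mathrm{softmax}\left(\frac{Q K^{\top}}{\sqrt{D}}\right), \qquad K = \mathcal{G}(F)$$

where $Q \in \mathbb{R}^{T \times D}$ is the position encoding of the character sequence, $T$ is the maximum length of the character sequence, which is set to 30 in this paper, $F$ denotes the visual features with feature dimension $D$, and $\mathcal{G}(\cdot)$ is realized by a modified small U-Net.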
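As a rough illustration of the query-based position attention described above, the following pure-Python sketch computes one attention row per character slot and aggregates the visual features with it. It assumes standard scaled dot-product attention as used in ABINet; the function names and toy dimensions are illustrative only, and the keys are supplied directly rather than produced by the U-Net branch.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def position_attention(queries, keys, values):
    """Scaled dot-product attention (assumed form).

    queries: T x D position encodings, one per character slot
    keys:    N x D features (assumed output of the U-Net branch)
    values:  N x D visual features
    Returns (attention map of shape T x N, aggregated features of shape T x D).
    """
    scale = math.sqrt(len(queries[0]))
    attn = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        attn.append(softmax(scores))
    dim = len(values[0])
    out = [
        [sum(a * v[j] for a, v in zip(row, values)) for j in range(dim)]
        for row in attn
    ]
    return attn, out


# Toy example: T = 2 character slots, N = 3 spatial locations, D = 2.
queries = [[1.0, 0.0], [0.0, 1.0]]
keys = [[4.0, 0.0], [0.0, 4.0], [0.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
attn, feats = position_attention(queries, keys, values)
```

Each row of `attn` sums to one, so every character slot receives a convex combination of the spatial features; in the toy example the first slot attends mostly to the first location and the second slot to the second.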

Since the CM corrects the skewed Uyghur text to a horizontally represented text, the recognition model processes the characters in a horizontal arrangement. In order to adapt the recognition model to the characteristics of Uyghur adhesion, this paper introduces a Transformer network in U-Net, and the U-Net* structure is shown in Table 1. The information flow is enhanced along the baseline direction, the horizontal features are aggregated and interact through a non-local mechanism, and the spatial features of character positions are enhanced, thereby alleviating the problem of character adhesion.

Table 1.

U-Net* structure.

Both the encoder and decoder consist of 4 convolutional layers with 3 × 3 kernels and 1 padding unit. The encoder performs downsampling by adjusting the convolution stride directly, while the decoder uses interpolation layers for upsampling. Skip connections are omitted, and functions are combined via additive operations.

LM iteratively updates semantic features and fusion features to correct visual recognition results. The initial input to the LM is the visual probability prediction , and in subsequent iterations, the input to the LM is the fusion probability prediction from the previous iteration. The process of raw visual probability prediction can be formalized as follows:

where represents the flattened visual features, represents a linear transition matrix, and C represents the number of character categories.

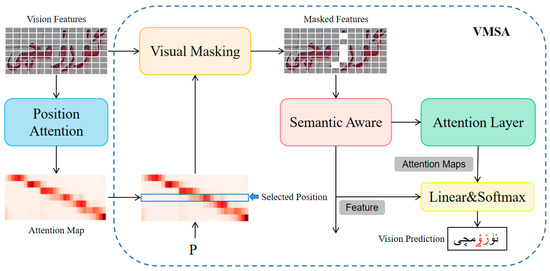

Since VM does not participate in iterations, providing high-precision visual prediction can alleviate the correction load of LM, which has a great impact on improving the recognition accuracy of the model. Therefore, this paper proposes a VMSA module, as shown in Figure 5.

Figure 5.

Architecture of the VMSA module. The red font is the wrong prediction.

In the training phase, based on the attention map , a position P is randomly selected in the character sequence, and then the top I visual features related to the selected position are found, which are replaced by .

where represents the index of the occluded character, which is randomly obtained for the input word image with length . is the index of the top I maximum value of the corresponding attention score selected according to position P on the attention map . The values of the visual feature corresponding to these indexes are replaced by , and is the process of using to transform the visual feature into the masking visual feature .
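The masking step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: features are plain lists, the character index is 0-based and sampled uniformly, and the names `mask_top_features` and `sample_position` are hypothetical.

```python
import random


def sample_position(word_length, rng=random):
    """Pick the index P of the occluded character uniformly (0-based, assumed)."""
    return rng.randrange(word_length)


def mask_top_features(features, attention_map, position, top_i, mask_vector):
    """Replace the top_i features most attended by `position` with mask_vector.

    features:      N x D list of visual feature vectors
    attention_map: T x N attention scores (row t attends over N locations)
    position:      index P of the character to occlude
    Returns (masked copy of the features, sorted list of masked indices).
    """
    scores = attention_map[position]
    ranked = sorted(range(len(scores)), key=lambda k: scores[k], reverse=True)
    masked_idx = sorted(ranked[:top_i])
    masked = [list(f) for f in features]  # copy so the input stays untouched
    for k in masked_idx:
        masked[k] = list(mask_vector)
    return masked, masked_idx


# Toy example: N = 4 locations, D = 2, T = 2 character slots.
features = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0], [4.0, 4.0]]
attention_map = [[0.7, 0.1, 0.1, 0.1], [0.05, 0.5, 0.4, 0.05]]
masked, idx = mask_top_features(features, attention_map, 1, 2, [0.0, 0.0])
```

In the toy example the second character attends most strongly to locations 1 and 2, so only those two feature vectors are replaced by the mask vector.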

Remote dependencies in the visual space are modeled by stacking N transformer units. Each unit is composed of a self-attention layer and a position feedforward layer, followed by residual connection and layer normalization. The obtained visual context features are sent to the prediction layer to generate visual probability prediction . The prediction process is shown in Equation (9).

where is the relation map, is the linear transformation layer, and T is the maximum length of the character sequence, which is set to 30 in this paper. represents the relation score at time step t, and represents the linear transition matrix.

During the test phase, the VMSA module is removed, and only the prediction layer is used for recognition. Since the character information is accurately occluded during the training phase, the guided prediction layer infers the semantics of characters according to the dependencies between the visual features. Therefore, VM can adaptively consider language information in visual space and generate high-precision visual predictions.

3.4. Language Model

Following the method of ABINet, four Transformer decoding blocks are adopted as . It uses Q as the input and as the key/value of the attention layer. By processing multiple decoder blocks, LM obtains semantic features :

3.5. Fusion Model

Conceptually, the image-trained VM and the text-trained LM come from different modalities. Semantic features are already aligned as sequences; to align the visual context features into a sequence as well, a position attention module is applied again to aggregate them into visual sequence features $F_v$. The visual sequence features $F_v$ and the semantic sequence features $F_l$ are combined into fused recognition features $F_f$ through a gating mechanism [11] for final character estimation:

$$G = \sigma\left([F_v; F_l]\, W_f\right), \qquad F_f = G \odot F_v + (1 - G) \odot F_l$$

where $W_f$ is the weight, $[;]$ means concatenation, $\sigma$ is the sigmoid function, and $\odot$ is the element-wise product. Finally, a linear layer and the SoftMax function are applied to $F_f$ to estimate the character sequence.
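To make the gating mechanism concrete, here is a small pure-Python sketch for a single character position. It assumes the gate takes the ABINet form, a sigmoid over the concatenated visual and semantic features multiplied by a weight matrix; the function names and the zero-weight example are illustrative.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(f_v, f_l, w_f):
    """Fuse visual and semantic features for one character position.

    f_v, f_l: length-D feature vectors
    w_f:      (2 * D) x D weight matrix applied to the concatenation [f_v; f_l]
    Returns (gate, fused), with fused = gate * f_v + (1 - gate) * f_l.
    """
    d = len(f_v)
    concat = list(f_v) + list(f_l)
    gate = [
        sigmoid(sum(concat[i] * w_f[i][j] for i in range(2 * d)))
        for j in range(d)
    ]
    fused = [g * v + (1.0 - g) * l for g, v, l in zip(gate, f_v, f_l)]
    return gate, fused


# With all-zero weights the gate is 0.5 everywhere, so the fused
# feature is the plain average of the two inputs.
zero_w = [[0.0, 0.0] for _ in range(4)]
gate, fused = gated_fusion([1.0, 0.0], [0.0, 1.0], zero_w)
```

A gate value close to one trusts the visual feature for that dimension, while a value close to zero falls back on the semantic feature.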

3.6. Loss Function

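Following the approach of ABINet, supervision is added in VM, LM and FM. The loss function formula is as follows:

$$L = \lambda_v L_v + \frac{\lambda_l}{M} \sum_{i=1}^{M} L_l^{i} + \frac{1}{M} \sum_{i=1}^{M} L_f^{i}$$

where $L_v$, $L_l$ and $L_f$ denote the loss of the vision model, language model and fusion model, respectively. $M$ is the number of iteration rounds, set to 3. $L_l^{i}$ and $L_f^{i}$ are the losses for the $i$th iteration. $\lambda_v$ and $\lambda_l$ are balance factors, set to $\lambda_v = \lambda_l = 1$.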

The vision model loss consists of three parts: the loss for predicting masked characters, the loss for predicting other characters, and the recognition loss .

where and are balance factors, set = = 0.5.

Loss uses the loss formula above, where T is the maximum length of the word, is the predicted character at time step t, and is the ground truth.
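The way the loss terms of this section combine can be sketched in a few lines. The weighting below is an assumption: the total loss follows the ABINet form (vision loss plus language and fusion losses averaged over the M iterations), and the vision loss is taken as the recognition loss plus the two balance-weighted masking terms. The function and argument names are hypothetical.

```python
def vision_loss(loss_rec, loss_mas, loss_rem, lambda_1=0.5, lambda_2=0.5):
    """Assumed composition: recognition loss plus weighted losses for the
    masked character (loss_mas) and the remaining characters (loss_rem)."""
    return loss_rec + lambda_1 * loss_mas + lambda_2 * loss_rem


def total_loss(loss_v, losses_l, losses_f, lambda_v=1.0, lambda_l=1.0):
    """Assumed ABINet-style total: language and fusion losses are averaged
    over the M iterations (M = len(losses_l) = len(losses_f))."""
    m = len(losses_l)
    if m == 0 or m != len(losses_f):
        raise ValueError("need one language and one fusion loss per iteration")
    return lambda_v * loss_v + lambda_l * sum(losses_l) / m + sum(losses_f) / m


# Example with M = 3 iterations and the balance factors from the text.
l_v = vision_loss(1.0, 2.0, 4.0)
l_total = total_loss(l_v, [3.0, 2.0, 1.0], [3.0, 3.0, 3.0])
```

Averaging over iterations keeps the scale of the language and fusion terms independent of how many refinement rounds are run.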

4. Experiments

In this section, new datasets will be constructed to train and test the proposed method, and all experiments are conducted on self-built datasets.

4.1. Datasets

At present, public scene Uyghur recognition datasets are essentially unavailable. Based on the existing Uyghur recognition dataset [6] in the laboratory (Xinjiang Multilingual Information Technology Laboratory and Xinjiang Multilingual Information Technology Research Center), this paper performs image screening and text annotation checks to produce a real scene Uyghur image dataset. It contains 4000 Uyghur street scene images collected in Urumqi, Kashgar and other places, of which 3200 are used as the training set and 800 as the test set, as shown in Figure 6. Labels are generated by writing the word information directly into text documents.

Figure 6.

Example of real scene Uyghur recognition data.

In addition, to meet the requirements of the ABINet improvements, this paper uses two text image synthesis methods, SynthText [54] and TextRecognitionDataGenerator, to build a synthetic scene Uyghur image dataset containing 200,000 synthetic images for pre-training the model.

The process of constructing the synthetic scene Uyghur image dataset is as follows: First, 1 million initial entries are selected from the Uyghur website. These entries undergo effective word filtering, and 500,000 entries are chosen as the foundational corpus. Then, background images are collected. This paper uses the natural scene images provided by SynthText to build the background image library. Finally, image synthesis is performed. Following the SynthText method, during the synthesis process, the maximum width-to-height ratio of text regions is set to 20, the minimum width-to-height ratio is set to 0.3, and the minimum text region area is set to 100. Each image contains no less than one Uyghur text region, resulting in the generation of 50,000 synthetic images, as shown in Figure 7a. In the synthesis process using TextRecognitionDataGenerator, the maximum word length is set to 30, the minimum length is set to 3, and random Gaussian noise, optical distortions, and other enhancements are added to enrich the training samples; this process generates 150,000 synthetic images, as shown in Figure 7b.
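The filtering constraints quoted above can be expressed as two simple predicates. This is only a sketch: the constants come from the text, but the function names are hypothetical, the bounds are assumed to be inclusive, and nothing here reflects the actual SynthText or TextRecognitionDataGenerator interfaces.

```python
# Constraints stated in the text.
MIN_ASPECT = 0.3      # minimum width-to-height ratio of a text region
MAX_ASPECT = 20.0     # maximum width-to-height ratio of a text region
MIN_AREA = 100        # minimum text region area
MIN_WORD_LEN = 3      # minimum word length
MAX_WORD_LEN = 30     # maximum word length


def keep_region(width, height):
    """Return True if a candidate text region satisfies the stated limits."""
    if width <= 0 or height <= 0:
        return False
    aspect = width / height
    return MIN_ASPECT <= aspect <= MAX_ASPECT and width * height >= MIN_AREA


def keep_word(word):
    """Return True if the word length lies within the stated range."""
    return MIN_WORD_LEN <= len(word) <= MAX_WORD_LEN


# The two synthesis methods together give the 200,000 pre-training images.
TOTAL_SYNTHETIC = 50_000 + 150_000
```

For instance, a 200 × 20 region passes both checks, while a 500 × 20 region is rejected because its aspect ratio of 25 exceeds the maximum.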

Figure 7.

Example of synthetic scene Uyghur recognition data. (a) example of SynthText synthetic images. (b) example of TextRecognitionDataGenerator synthetic images.

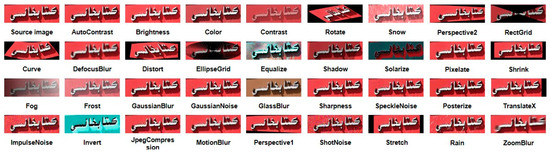

4.2. Data Augmentation

Atienza introduced STRAug [55], which consists of 36 image augmentation functions designed specifically for STR. Each function simulates certain image attributes found in natural scenes. These functions are categorized into eight groups: Warp, Geometry, Pattern, Blur, Camera, Process, Noise, and Weather. In this paper, five augmentation strategies randomly selected from the thirty-six are applied to the 3200 real images in the training set. Together with the original images, this yields 19,200 samples (3200 × 6), which serve as the training dataset; the augmentation effects are illustrated in Figure 8.
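The size of the augmented training set follows from simple arithmetic, sketched below. The sketch assumes that the five strategies are drawn independently for each image and that the original image is kept alongside its augmented copies; strategies are represented only by their index, and no STRAug function is called.

```python
import random

NUM_STRATEGIES = 36   # augmentation functions available
PER_IMAGE = 5         # strategies applied to each training image


def plan_augmentations(num_images, rng):
    """For each image, choose PER_IMAGE distinct strategy indices in [0, 36)."""
    return [rng.sample(range(NUM_STRATEGIES), PER_IMAGE) for _ in range(num_images)]


def augmented_set_size(num_images):
    """Original images plus PER_IMAGE augmented copies of each."""
    return num_images * (1 + PER_IMAGE)


plan = plan_augmentations(3200, random.Random(0))
size = augmented_set_size(3200)
```

With 3200 real training images this gives 3200 × 6 = 19,200 samples, which matches the training set size reported in the text.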

Figure 8.

The effect of 36 augmentation strategies on the same image.

4.3. Evaluation Criteria

This paper evaluates scene Uyghur recognition performance in terms of accuracy, which is defined as

$$A = \frac{N_{c}}{N_{t}} \times 100\%$$

where $A$ represents accuracy, $N_{c}$ represents the number of correctly recognized words, and $N_{t}$ represents the total number of sample words.
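The word-level accuracy described above can be computed as in the following minimal sketch. It assumes that a word counts as correctly recognized only when the predicted string matches the label exactly; the function name is illustrative.

```python
from typing import Sequence


def word_accuracy(predictions: Sequence[str], labels: Sequence[str]) -> float:
    """Percentage of samples whose predicted word exactly matches the label."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        raise ValueError("at least one sample is required")
    # Count exact string matches between prediction and ground truth.
    correct = sum(p == t for p, t in zip(predictions, labels))
    return 100.0 * correct / len(labels)


if __name__ == "__main__":
    preds = ["ab", "cd", "ef", "gh"]
    truth = ["ab", "cd", "xx", "gh"]
    print(word_accuracy(preds, truth))
```

With three of four words matching, the example evaluates to 75.0.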

4.4. Experiment Settings

The model recognizes 43 character categories: 10 digits, 32 Uyghur characters, and 1 end-of-sequence (EOS) marker. Following ABINet [12], images are resized to 256 × 64 and augmented with operations such as geometric transformation and perspective distortion. The feature dimension D is set to 512 throughout, BCN has four layers with eight attention heads each, and the number of iterations M is set to three.
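The settings listed above can be gathered into a small configuration object, sketched here for illustration. The class and field names are hypothetical. Reading 256 × 64 as width × height is an assumption, and so is the per-head dimension, which assumes the usual equal split of D across attention heads.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RecognizerConfig:
    num_digits: int = 10
    num_uyghur_chars: int = 32
    num_special: int = 1       # end-of-sequence (EOS) marker
    image_width: int = 256     # assumed: 256 is the width
    image_height: int = 64     # assumed: 64 is the height
    feature_dim: int = 512     # D
    bcn_layers: int = 4
    attention_heads: int = 8
    iterations: int = 3        # M

    @property
    def num_classes(self) -> int:
        """Total number of output categories."""
        return self.num_digits + self.num_uyghur_chars + self.num_special

    @property
    def head_dim(self) -> int:
        """Per-head dimension, assuming D is split evenly across heads."""
        return self.feature_dim // self.attention_heads


if __name__ == "__main__":
    cfg = RecognizerConfig()
    print(cfg.num_classes, cfg.head_dim)
```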

In the training phase, the proportion of occluded samples in each batch is set to 10%, and the number I of masked visual features is set to 10. Since all training images have word-level annotations, the labels for the VMSA module are generated from the character index P and the original word-level annotation (for example, when P = 4, two labels are derived from the annotated word); the label generation process is automatic and requires no human intervention.

For the compared models, the parameter settings in their original papers are followed. All models are trained end-to-end with the Adam optimizer, using only word-level annotations. The training batch size is 384, the learning rate is decayed from its initial value after six epochs, and training runs for 100 epochs. All models are trained on a server equipped with four NVIDIA AV100 GPUs.
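The automatic label generation for the VMSA module might look like the following sketch. The assumptions here are not confirmed by the text: the index P is taken to be 1-based, and the two labels are taken to be the occluded character and the word with that character removed. The function name is hypothetical, and a Latin placeholder word stands in for a Uyghur word.

```python
from typing import Tuple


def make_masked_labels(word: str, p: int) -> Tuple[str, str]:
    """Split a word annotation into (occluded character, remaining characters).

    Assumptions (not confirmed by the text): p is 1-based, and the labels are
    the character at position p and the word with that character removed.
    Indexing is by Unicode code point.
    """
    if not 1 <= p <= len(word):
        raise ValueError("p must index a character of the word")
    occluded = word[p - 1]
    remaining = word[: p - 1] + word[p:]
    return occluded, remaining


if __name__ == "__main__":
    # Placeholder word; a real annotation would be a Uyghur string.
    print(make_masked_labels("abcdef", 4))
```

Because both labels come directly from the word-level annotation and the index, no additional manual labelling is needed.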

To make the model converge faster, transfer learning is used in the experiments. Specifically, the synthetic dataset is used for pre-training, and the real dataset is then used for fine-tuning, during which the 10 sets of model weights with the best training performance are saved. For the pre-training of the vision model and the language model, the learning rate is kept constant.
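Keeping the 10 best sets of weights during fine-tuning can be organized with a small bookkeeping helper, sketched below. The class name and the use of a min-heap are illustrative choices rather than the authors' implementation, and the sketch only tracks scores and paths; it does not write or delete files.

```python
import heapq
from typing import List, Tuple


class BestCheckpointKeeper:
    """Track the k checkpoints with the highest score (e.g. accuracy)."""

    def __init__(self, k: int = 10) -> None:
        self.k = k
        # Min-heap: the worst checkpoint still kept sits at index 0.
        self._heap: List[Tuple[float, str]] = []

    def update(self, score: float, path: str) -> bool:
        """Record a checkpoint; return True if it is kept among the best k."""
        if len(self._heap) < self.k:
            heapq.heappush(self._heap, (score, path))
            return True
        if score > self._heap[0][0]:
            heapq.heapreplace(self._heap, (score, path))
            return True
        return False

    def best(self) -> List[Tuple[float, str]]:
        """Return the kept checkpoints sorted from best to worst."""
        return sorted(self._heap, reverse=True)


if __name__ == "__main__":
    keeper = BestCheckpointKeeper(k=3)
    for step, acc in enumerate([0.5, 0.7, 0.6, 0.9, 0.4]):
        keeper.update(acc, f"ckpt_{step}.pth")
    print(keeper.best())
```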

4.5. Ablation Study

Using ABINet as the base network, several experiments are performed to prove the effectiveness of each improvement.

4.5.1. Effectiveness of TPS++ Correction

The impact of common correction methods on the performance of the recognition network is studied, and the relationship between different attention methods in TPS++ and scene Uyghur recognition performance is analyzed. The number of control points is set to 4 × 16.

The baseline is ABINet [12], which does not perform correction. (W), (H), and (W + H) indicate that DGAB is used only in the horizontal dimension, only in the vertical dimension, and in both dimensions, respectively.

As shown in Table 2, compared with the TPS transformation correction used by ASTER and the image-level grid correction used by MORAN, applying TPS++ to the baseline yields a clear accuracy advantage, indicating that feature-level correction integrated with the attention mechanism benefits scene Uyghur recognition. TPS++ improves the accuracy by 0.07 percentage points when DGAB is adopted only in the horizontal dimension and by 0.18 percentage points when it is adopted only in the vertical dimension. When DGAB is used in both dimensions, the best accuracy of 85.55% is obtained. The reason is that Uyghur characters are arranged along the horizontal baseline and TPS++ guides the spatial position information during feature extraction: horizontal attention along the character direction helps localize the characters, while the discriminative visual cues are distributed more along the vertical direction. Therefore, for scene Uyghur correction, the best results are obtained by adopting DGAB in both dimensions.

Table 2.

The results of the ablation experiments for the correction model.

Figure 9 shows examples of images corrected by TPS and TPS++, obtained by projecting the correction onto the image. TPS (upper half) cannot predict the control points accurately because it lacks guidance, so some characters fall outside the image bounds. TPS++ (lower half) initializes the control points uniformly and computes the transformation parameters from the control point movement and the attention scores, which gives a more natural text correction.

Figure 9.

Example of correction effect comparison. Green dot: control point. Red circle: character out of bounds.
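The uniform initialization of control points mentioned above can be illustrated with a short sketch. It assumes that 4 × 16 means 4 rows by 16 columns and that coordinates are normalized to [-1, 1] in both directions. Both are conventions chosen for illustration and are not details confirmed by the text.

```python
from typing import List, Tuple


def uniform_control_points(rows: int = 4, cols: int = 16) -> List[Tuple[float, float]]:
    """Return rows * cols (x, y) points on a regular grid in [-1, 1] x [-1, 1]."""
    if rows < 2 or cols < 2:
        raise ValueError("need at least two rows and two columns")
    # Evenly spaced coordinates, including both borders of the normalized image.
    xs = [-1.0 + 2.0 * c / (cols - 1) for c in range(cols)]
    ys = [-1.0 + 2.0 * r / (rows - 1) for r in range(rows)]
    # Row-major order: all columns of the first row, then the next row, and so on.
    return [(x, y) for y in ys for x in xs]


if __name__ == "__main__":
    points = uniform_control_points()
    print(len(points), points[0], points[-1])
```

In a correction network of this kind, the predicted movement of each point away from such a grid is what drives the transformation.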

4.5.2. Effectiveness of Horizontal Feature Aggregation

Due to the adhesion of Uyghur characters, the activation areas are scattered. In order to suppress the multi-activation phenomenon during the recognition process, some feature aggregation methods are explored experimentally, and it is found that enhancing the information flow along the baseline direction is of great significance for alleviating the character adhesion problem.

“Stride” is the total downsampling stride of U-Net (see Table 1), and “Aggregation” is the aggregation method used in U-Net. Because downsampling expands the receptive field, U-Net can aggregate information. When the effects of different U-Net strides on scene Uyghur recognition are evaluated, as shown in Table 3(a–e), increasing the horizontal stride improves accuracy, while reducing it lowers accuracy. Furthermore, changing the horizontal stride, which runs along the baseline direction, affects accuracy more than changing the vertical stride. Therefore, aggregating information along the baseline direction can improve the recognition performance of the model for scene Uyghur.

Table 3.

Ablation experiment results with U-Net modification.

Based on this observation, this paper further explores feature aggregation methods that are more general than manipulating the U-Net stride, as shown in Table 3(f–i). For U-Net with an 8 × 16 stride, operational layers are embedded after the most detailed features (1 × 8) to aggregate information. Both the mean operation and convolutional layers with a kernel size of 1 × 8 improve the performance of the model. The transformer can aggregate features along the character order through a non-local mechanism, and a four-layer transformer increases the accuracy by 0.19 percentage points. Although adding more transformer layers may achieve better results, considering efficiency and scalability, this paper embeds a four-layer transformer in U-Net. After the horizontal features are aggregated, the activation regions give more concentrated responses, which confirms that horizontal feature aggregation can effectively suppress the multiple activation phenomenon and alleviate the problem of Uyghur character adhesion to a certain extent.
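As a rough illustration of the simplest option above, the mean operation, the sketch below averages a 1 × W feature row over its horizontal positions and adds the result back to every position. Adding the mean back as a residual is an assumption made for this sketch, since the text does not state how the mean is combined with the features. The function name and the plain-list representation are illustrative.

```python
from typing import List

FeatureRow = List[List[float]]  # shape: (width, channels)


def aggregate_horizontal_mean(features: FeatureRow) -> FeatureRow:
    """Add the per-channel mean over all horizontal positions to each position."""
    if not features or not features[0]:
        raise ValueError("features must be a non-empty (width, channels) list")
    width = len(features)
    channels = len(features[0])
    # Per-channel mean across the horizontal (baseline) direction.
    mean = [sum(pos[c] for pos in features) / width for c in range(channels)]
    # Every position now also carries information from the whole row.
    return [[pos[c] + mean[c] for c in range(channels)] for pos in features]


if __name__ == "__main__":
    row = [[1.0, 0.0], [3.0, 2.0]]  # width 2, two channels
    print(aggregate_horizontal_mean(row))
```

A 1 × 8 convolution or a transformer layer plays the same role with learned, position-dependent weights instead of a uniform average.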

4.5.3. Effectiveness of the VMSA Module

The proposed VMSA module aims to improve visual prediction accuracy and to help distinguish similar Uyghur characters. In this section, a series of ablation experiments is carried out to verify the effectiveness of VMSA.

As shown in Table 4, ABINet_V is the vision model of ABINet, which uses ResNet-45 and transformer units for STR. The first two experiments consider only visual information. Compared with the original vision model, the vision model guided by VMSA can use the language information in visual space to assist character decoding and obtains higher visual prediction accuracy. The last two experiments add the language model. After the VMSA module is added to the baseline, the recognition accuracy increases by 0.62 percentage points. This shows that the more accurate visual prediction obtained through the VMSA module improves the correction performance of the language model.

Table 4.

Ablation experiment results of the VMSA module.

As shown in Figure 10, similar characters (rows 1 and 2) are handled noticeably better with the VMSA module. For example, when two characters have similar visual cues, the original vision model predicts the wrong one, while the vision model guided by VMSA gives the correct prediction. In addition, the correct recognition of images with complex backgrounds and poor visibility (rows 3 and 4) also demonstrates the effectiveness of VMSA in improving the accuracy of visual prediction. For example, when a blurred image makes two characters difficult to tell apart, the vision model guided by VMSA still recognizes the word accurately.

Figure 10.

Qualitative analysis of the VMSA module. Upper string: prediction of the vision model without the VMSA module. Lower string: prediction of the vision model with the VMSA module. Red characters are mispredicted.

4.5.4. Step by Step Assessment

The improvements are added one at a time to show the contribution of each.

As shown in Table 5, the TPS++, U-Net*, and VMSA modules progressively improve the performance of the model on scene Uyghur recognition. “√” indicates that the corresponding module is added. The final accuracy of the model reaches 86.35%.

Table 5.

Progressively improved ablation experimental results.

4.6. Comparative Experiments with Related Methods

4.6.1. Text Recognition Algorithm

The proposed method is compared with existing general text recognition algorithms, mainly RNN-based methods [3,4] and transformer-based methods [11,12,13]. For a fair comparison, their official code is used. The evaluation results are shown in Table 6.

Table 6.

Text recognition model evaluation results.

Among the existing text recognition methods, the general-purpose ones are less effective: CRNN relies only on visual features, and ASTER has weak visual feature alignment and a language model with limited generalization ability. ABINet performs best among the existing methods, achieving a recognition accuracy of 85.29% at a speed of 31.5 ms per image. As a vision-language model, ABINet is better suited to scene Uyghur recognition, so it is chosen as the baseline. The method proposed in this paper outperforms the other methods, benefiting from accurate visual prediction and language-information-assisted character decoding; its accuracy is 1.06 percentage points higher than that of the baseline. This result shows that the proposed improvements are effective. In summary, the proposed method is more suitable for scene Uyghur recognition than the general text recognition methods.
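To put the reported gain in context, the sketch below separates the absolute gain in percentage points from the relative reduction in error rate. The relative figure is derived here from the accuracies quoted in the text and is not reported by the authors; the function name is illustrative.

```python
from typing import Tuple


def accuracy_gain(baseline_acc: float, improved_acc: float) -> Tuple[float, float]:
    """Return (gain in percentage points, relative error reduction in percent)."""
    baseline_error = 100.0 - baseline_acc
    if baseline_error <= 0:
        raise ValueError("baseline accuracy must be below 100%")
    absolute = improved_acc - baseline_acc
    relative_error_reduction = 100.0 * absolute / baseline_error
    return absolute, relative_error_reduction


if __name__ == "__main__":
    # 85.29% is the ABINet baseline and 86.35% the final model, as quoted above.
    gain, reduction = accuracy_gain(85.29, 86.35)
    print(round(gain, 2), round(reduction, 2))
```

For these two accuracies, the gain of 1.06 percentage points corresponds to roughly a 7.2% relative reduction in the word error rate.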

4.6.2. Scene Uyghur Recognition Algorithm

The proposed method is also compared with scene Uyghur recognition algorithms proposed in recent years, mainly improved methods based on CRNN [5,6,8] and an improved method based on ASTER [7]. Since no public code is available, these methods are reproduced in this paper. For a fair comparison, the settings of all models are kept consistent during the experiments. The experimental results are shown in Table 7.

Table 7.

Scene Uyghur recognition model evaluation results.

The proposed method achieves the best recognition performance in this comparison, which indicates that the visual prediction enhancement can effectively address oblique deformation, character adhesion, and character similarity in scene Uyghur recognition. It is therefore highly competitive among scene Uyghur recognition algorithms.

4.7. Analysis of Failure Samples

This section shows samples on which the proposed method fails. As can be seen from Figure 11, for samples with low background contrast or severe visual interference, the model captures the wrong visual information and recognition is poor. Such cases remain common difficulties in STR.

Figure 11.

Examples of failure samples. Upper string: prediction result. Lower string: ground truth label. Wrong predictions are shown in red.

5. Conclusions

This paper proposes a scene Uyghur recognition model with enhanced visual prediction, which improves the base model ABINet to address the difficulties of scene Uyghur recognition. First, TPS++ is used to perform feature-level correction on the text to handle oblique deformation. Then, the U-Net structure is improved to alleviate the character adhesion problem. Finally, a visual masking semantic awareness module is introduced to guide the vision model to use language information, which improves the accuracy of visual prediction, reduces the correction load of the language model, and helps distinguish similar characters. Experiments on a self-built scene Uyghur image dataset show that the model is competitive among existing scene Uyghur recognition algorithms. However, horizontal feature aggregation only alleviates character adhesion during encoding and does not fundamentally solve the problem, which remains to be addressed in future work. In addition, the model structure is relatively complex; future work will further optimize the network structure and improve the adaptability of the model.

Author Contributions

Methodology, Y.L. (Yaqi Liu); writing—original draft preparation, Y.L. (Yaqi Liu); writing—review and editing, F.K. and M.X.; visualization, F.K.; funding acquisition, W.S.; supervision, Y.L. (Yanbing Li). All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National 973 Key R&D Program—Internet Chinese Information Processing Verification System for Public Safety and Social Management (Grant No. 2014CB340506) and the National Natural Science Foundation of China—Key Technology Research on Uyghur Chinese Speech Translation System (Grant No. U1603262).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Liu, X.; Meng, G.; Pan, C. Scene text detection and recognition with advances in deep learning: A survey. Int. J. Doc. Anal. Recognit. (IJDAR) 2019, 22, 143–162.

- Sun, W.; Du, Y.; Zhang, X.; Zhang, G. Detection and recognition of text traffic signs above the road. Int. J. Sens. Netw. 2021, 35, 69–78.

- Shi, B.; Bai, X.; Yao, C. An end-to-end trainable neural network for image-based sequence recognition and its application to scene text recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 2298–2304.

- Shi, B.; Yang, M.; Wang, X.; Lyu, P.; Yao, C.; Bai, X. Aster: An attentional scene text recognizer with flexible rectification. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 2035–2048.

- Fu, Z. Research on Uighur Text Recognition Technology in Scene Images. Master’s Thesis, University of Science and Technology of China, Hefei, China, 2021.

- Xiong, L. Research and Application of Uighur Text Detection and Recognition Methods. Master’s Thesis, Xinjiang University, Urumqi, China, 2021.

- Wang, Y.; Ao, N.; Guo, R.; Mamat, H.; Ubul, K. Scene Uyghur Recognition with Embedded Coordinate Attention. In Proceedings of the 2022 3rd International Conference on Pattern Recognition and Machine Learning (PRML), Chengdu, China, 22–24 July 2022; pp. 253–260.

- Ibrayim, M.; Mattohti, A.; Hamdulla, A. An effective method for detection and recognition of Uyghur texts in images with backgrounds. Information 2022, 13, 332.

- GB 12050–1989; Graphic Character Set for Information Interchange in Uyghur for Information Processing. State Bureau of Quality and Technical Supervision (SBTS): Beijing, China, 1989.

- Jiang, Z.; Ding, X.; Peng, L. Character Model Optimization for Recognition of Unsegmented Uighur Text Lines. J. Tsinghua Univ. (Sci. Technol.) 2015, 55, 873–877.

- Yu, D.; Li, X.; Zhang, C.; Liu, T.; Han, J.; Liu, J.; Ding, E. Towards accurate scene text recognition with semantic reasoning networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 12113–12122.

- Fang, S.; Xie, H.; Wang, Y.; Mao, Z.; Zhang, Y. Read like humans: Autonomous, bidirectional and iterative language modeling for scene text recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 7098–7107.

- Wang, Y.; Xie, H.; Fang, S.; Wang, J.; Zhu, S.; Zhang, Y. From two to one: A new scene text recognizer with visual language modeling network. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 14194–14203.

- Graves, A.; Fernández, S.; Gomez, F.; Schmidhuber, J. Connectionist temporal classification: Labelling unsegmented sequence data with recurrent neural networks. In Proceedings of the 23rd International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006; pp. 369–376.

- Su, B.; Lu, S. Accurate recognition of words in scenes without character segmentation using recurrent neural network. Pattern Recognit. 2017, 63, 397–405.

- Xie, Z.; Huang, Y.; Zhu, Y.; Jin, L.; Liu, Y.; Xie, L. Aggregation cross-entropy for sequence recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6538–6547.

- Hu, W.; Cai, X.; Hou, J.; Yi, S.; Lin, Z. Gtc: Guided training of ctc towards efficient and accurate scene text recognition. AAAI Conf. Artif. Intell. 2020, 34, 11005–11012.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Du, Y.; Chen, Z.; Jia, C.; Yin, X.; Zheng, T.; Li, C.; Du, Y.; Jiang, Y.G. Svtr: Scene text recognition with a single visual model. arXiv 2022, arXiv:2205.00159.

- Jaderberg, M.; Simonyan, K.; Vedaldi, A.; Zisserman, A. Reading text in the wild with convolutional neural networks. Int. J. Comput. Vis. 2016, 116, 1–20.

- Wan, Z.; He, M.; Chen, H.; Bai, X.; Yao, C. Textscanner: Reading characters in order for robust scene text recognition. AAAI Conf. Artif. Intell. 2020, 34, 12120–12127.

- Xing, L.; Tian, Z.; Huang, W.; Scott, M.R. Convolutional character networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9126–9136.

- Fang, S.; Xie, H.; Zha, Z.J.; Sun, N.; Tan, J.; Zhang, Y. Attention and language ensemble for scene text recognition with convolutional sequence modeling. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Republic of Korea, 22–26 October 2018; pp. 248–256.

- Cheng, Z.; Xu, Y.; Bai, F.; Niu, Y.; Pu, S.; Zhou, S. Aon: Towards arbitrarily-oriented text recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5571–5579.

- Yang, X.; He, D.; Zhou, Z.; Kifer, D.; Giles, C.L. Learning to read irregular text with attention mechanisms. In Proceedings of the 2017 International Joint Conference on Artificial Intelligence (IJCAI), Melbourne, Australia, 19–25 August 2017; Volume 1, p. 3.

- Lee, C.Y.; Osindero, S. Recursive recurrent nets with attention modeling for ocr in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2231–2239.

- Yue, X.; Kuang, Z.; Lin, C.; Sun, H.; Zhang, W. Robustscanner: Dynamically enhancing positional clues for robust text recognition. In European Conference on Computer Vision; Springer International Publishing: Cham, Switzerland, 2020; pp. 135–151.

- Lyu, P.; Yang, Z.; Leng, X.; Wu, X.; Li, R.; Shen, X. 2d attentional irregular scene text recognizer. arXiv 2019, arXiv:1906.05708.

- Sheng, F.; Chen, Z.; Xu, B. NRTR: A no-recurrence sequence-to-sequence model for scene text recognition. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, NSW, Australia, 20–25 September 2019; pp. 781–786.

- Wang, T.; Zhu, Y.; Jin, L.; Luo, C.; Chen, X.; Wu, Y.; Wang, Q.; Cai, M. Decoupled attention network for text recognition. Proc. AAAI Conf. Artif. Intell. 2020, 34, 12216–12224.

- Zheng, T.; Chen, Z.; Fang, S.; Xie, H.; Jiang, Y.G. Cdistnet: Perceiving multi-domain character distance for robust text recognition. arXiv 2021, arXiv:2111.11011.

- Na, B.; Kim, Y.; Park, S. Multi-modal text recognition networks: Interactive enhancements between visual and semantic features. In European Conference on Computer Vision; Springer Nature: Cham, Switzerland, 2022; pp. 446–463.

- Chu, X.; Wang, Y. IterVM: Iterative Vision Modeling Module for Scene Text Recognition. In Proceedings of the 2022 26th International Conference on Pattern Recognition (ICPR), Montreal, QC, Canada, 21–25 August 2022; pp. 1393–1399.

- Zheng, T.; Chen, Z.; Bai, J.; Xie, H.; Jiang, Y.G. TPS++: Attention-Enhanced Thin-Plate Spline for Scene Text Recognition. arXiv 2023, arXiv:2305.05322.

- Bookstein, F.L. Principal warps: Thin-plate splines and the decomposition of deformations. IEEE Trans. Pattern Anal. Mach. Intell. 1989, 11, 567–585.

- Jaderberg, M.; Simonyan, K.; Zisserman, A. Spatial transformer networks. Adv. Neural Inf. Process. Syst. 2015, 28, 7–12.

- Luo, C.; Jin, L.; Sun, Z. Moran: A multi-object rectified attention network for scene text recognition. Pattern Recognit. 2019, 90, 109–118.

- Ai, L.; Jumai, H.; Halidan, H.; Huang, H. Recognition of Uighur Text in Video Images. Comput. Eng. Appl. 2011, 47, 190–192.

- Chen, Q. Research on Classification and Recognition Technology of Printed Uyghur Text Recognition System. Master’s Thesis, Xinjiang University, Urumqi, China, 2012.

- Bai, Y. Printed Uighur Word Recognition in Arabic Script. Doctoral Dissertation, Xidian University, Xi’an, China, 2014.

- Lang, X. Segmentation-Based Recognition of Printed Uighur Words in Arabic Script. Doctoral Dissertation, Xidian University, Xi’an, China, 2015.

- Peng, L. A Recognition Method for Uighur and Arabic Text Based on HMM and Statistical Language Model. Comput. Appl. Softw. 2015, 32, 171–174.

- Li, P.; Zhu, J.; Peng, L.; Guo, Y. RNN based Uyghur text line recognition and its training strategy. In Proceedings of the 2016 12th IAPR Workshop on Document Analysis Systems (DAS), Santorini, Greece, 11–14 April 2016; pp. 19–24.

- Wang, X. Research and Application of Key Technologies in Recognition of Printed Uighur Text. Doctoral Dissertation, Xidian University, Xi’an, China, 2017.

- Li, D. Classifier Design for Recognition of Printed Uighur Word. Doctoral Dissertation, Xidian University, Xi’an, China, 2019.

- Chen, Y. Research and Design of Uighur Text Detection and Recognition Based on Deep Learning. Master’s Thesis, Chengdu University of Technology, Chengdu, China, 2020.

- Maitituoheti, A. Neural Network-Based Uighur Image Text Detection and Recognition Technology. Master’s Thesis, Xinjiang University, Urumqi, China, 2020.

- Tang, J.; Silamu, W.; Xu, M.; Xiong, L.; Wang, M. Scan-based Recognition of Uighur Text Using Deep Learning. J. Northeast. Norm. Univ. (Nat. Sci. Ed.) 2021, 53, 71–76.

- Zhang, S. Research on Offline Uighur Handwritten Signature Authentication Technology Based on Local Features. Master’s Thesis, Xinjiang University, Urumqi, China, 2019.

- Ibrayim, M.; Simayi, W.; Hamdulla, A. Unconstrained online handwritten Uyghur word recognition based on recurrent neural networks and connectionist temporal classification. Int. J. Biom. 2021, 13, 51–63.

- Li, W.; Mahpirat Kang, W.; Aysa, A.; Ubul, K. Multi-lingual Hybrid Handwritten Signature Recognition Based on Deep Residual Attention Network. In Biometric Recognition: 15th Chinese Conference, CCBR 2021, Shanghai, China, 10–12 September 2021, Proceedings 15; Springer International Publishing: Cham, Switzerland, 2021; pp. 148–156.

- Xamxidin, N.; Mahpirat; Yao, Z.; Aysa, A.; Ubul, K. Multilingual Offline Signature Verification Based on Improved Inverse Discriminator Network. Information 2022, 13, 293.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Gupta, A.; Vedaldi, A.; Zisserman, A. Synthetic data for text localisation in natural images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2315–2324.

- Atienza, R. Data augmentation for scene text recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 1561–1570.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).