Diabetic Plantar Foot Segmentation in Active Thermography Using a Two-Stage Adaptive Gamma Transform and a Deep Neural Network

Abstract

1. Introduction

2. Methods

2.1. Thermography Setup

2.2. Experimental Procedure

2.3. Experimental Subjects

2.4. Plantar Foot Thermal Images

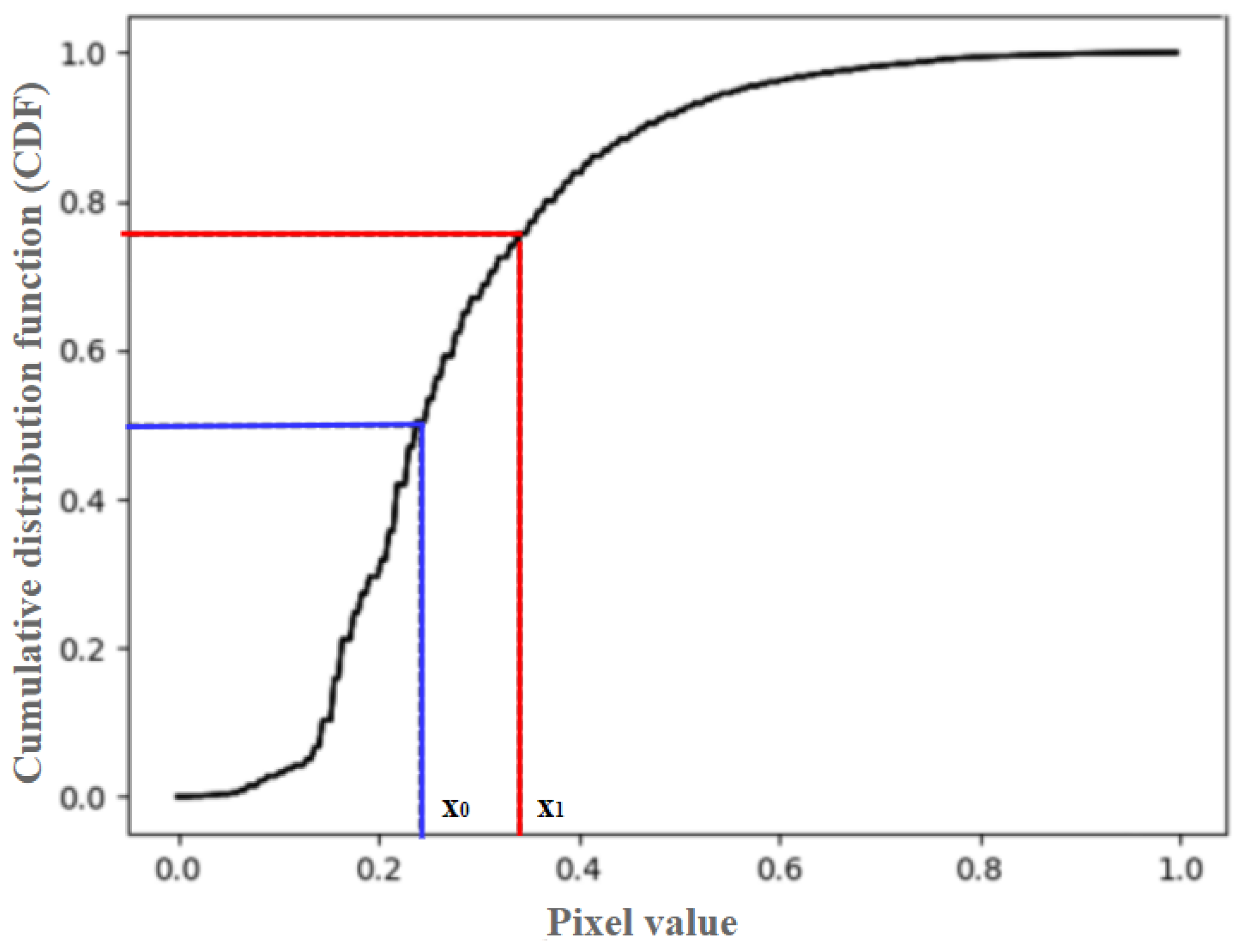

2.5. Two-Stage Adaptive Gamma Transform

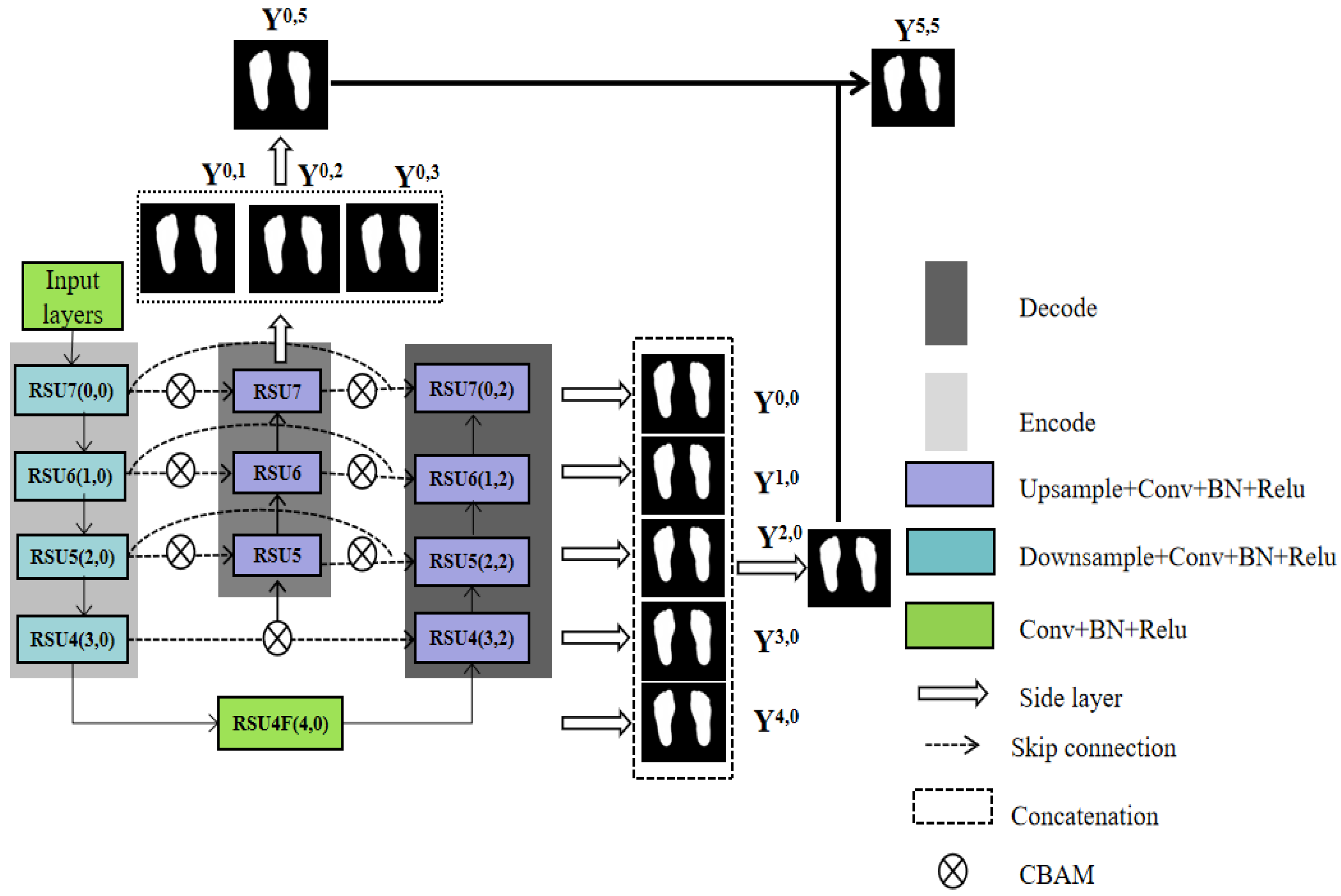

2.6. Plantar Foot Segmentation Network (PFSNet)

2.6.1. Input Module

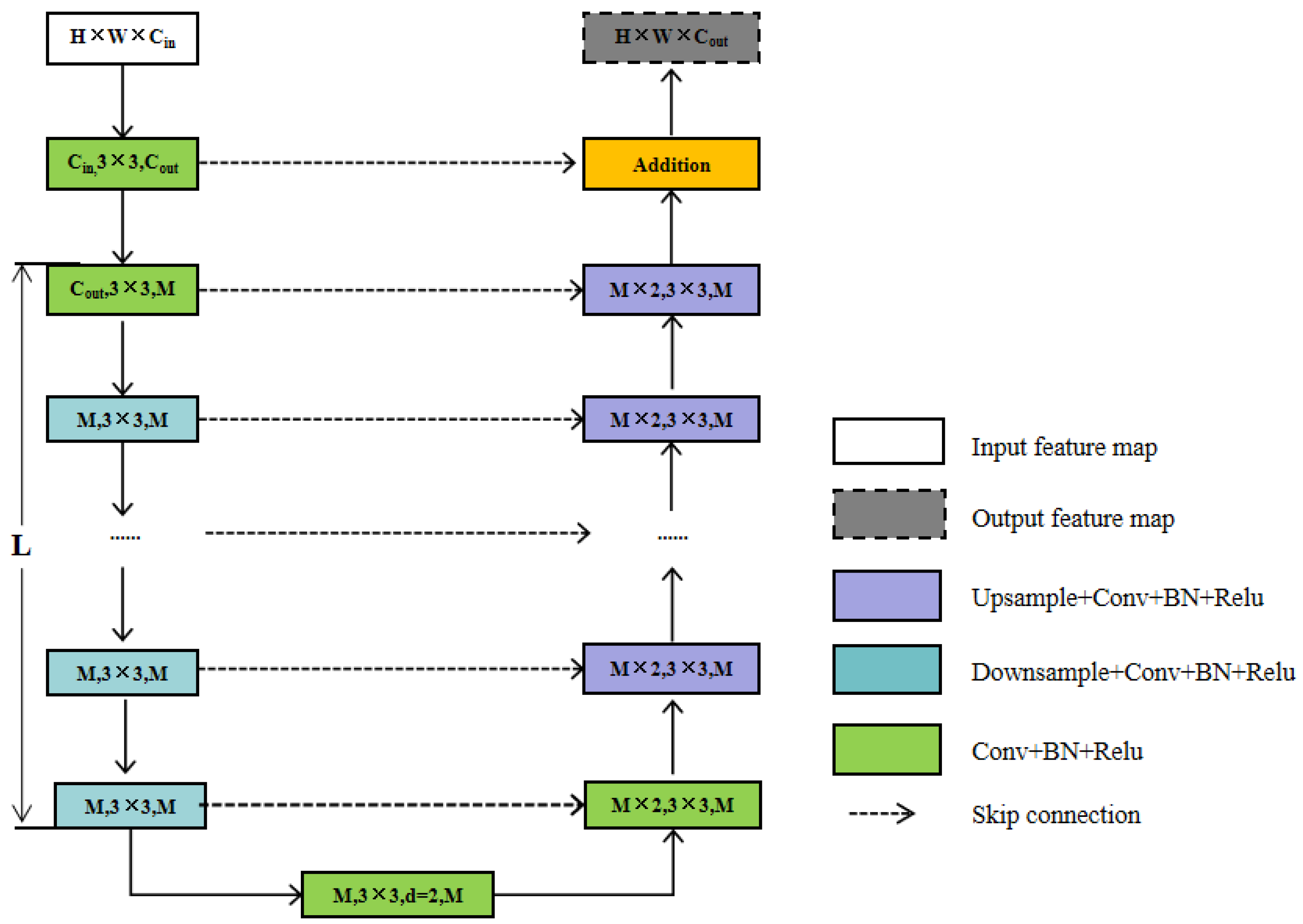

2.6.2. Feature Extraction Module

- (1)

- Input layer. It transforms the input feature map, X(H × W × Cin), into an intermediate map, F1(x), with Cout number of channels.

- (2)

- Using the intermediate feature map, F1(x), as input to extract and encode multi-scale contextual information U(F1(x). When L is larger, the RSU is deeper and has more pooling operations, with a larger perceptual area to extract richer local and global features. Configuring this parameter allows the extraction of multi-scale features from an input with an arbitrary spatial resolution. This process reduces the detail loss caused by direct up-sampling at large scales.

- (3)

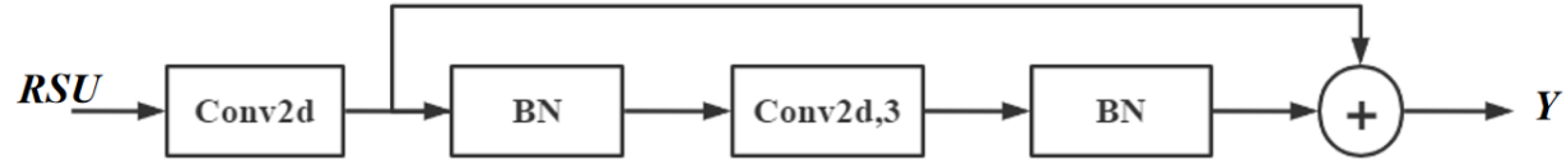

- Fusion of local features and multi-scale features by summation F1(x) + U(F1(x)). RSU7, RSU6, RSU5, RSU4, and RSU4F are used in the encoder. The numbers 7, 6, 5, and 4, refer to the height L of the RSU. L is usually configured according to the spatial resolution of the input feature map. At RSU4, the feature map resolution is relatively low, and further down-sampling of these feature maps results in the loss of helpful context. Therefore, in the RSU4 stage, RSU4F is used, where F denotes that the RSU-L is an extended version in which we replace the merging and up-sampling operations by increasing the number of inflated convolutions. This means that all intermediate feature maps of RSU-4F have the same resolution as the input feature maps.
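The resolution bookkeeping described above can be illustrated with a small sketch. It assumes that the RSU blocks follow the layout of the public U2-Net reference code: an RSU-L applies 2 × 2 ceil-mode max pooling after each of its first L-2 encoder levels, and RSU4F uses 3 × 3 convolutions with dilation rates 1, 2, 4, and 8. The 320 × 320 input size and all function names are hypothetical, and the sketch only tracks spatial sizes and receptive fields, not actual convolutions.

```python
import math


def pool(size: int) -> int:
    # 2x2 max pooling, stride 2, ceil mode (assumed, as in the U2-Net reference code)
    return math.ceil(size / 2)


def rsu_level_sizes(height: int, size: int) -> list[int]:
    """Spatial size at each of the `height` encoder levels of a standard RSU-L block."""
    sizes = [size]
    for _ in range(height - 2):  # pooling after each of the first L-2 levels
        size = pool(size)
        sizes.append(size)
    sizes.append(size)  # bottom level: dilated convolution, no further pooling
    return sizes


def rsu4f_level_sizes(size: int, rates=(1, 2, 4, 8)) -> list[int]:
    """RSU4F: pooling/up-sampling replaced by dilated convolutions, so the size never changes."""
    return [size for _ in rates]


def dilated_receptive_field(rates, kernel: int = 3) -> int:
    """Receptive field of stacked stride-1 convolutions with the given dilation rates."""
    return 1 + sum((kernel - 1) * d for d in rates)


if __name__ == "__main__":
    # Hypothetical 320x320 input to the first encoder stage
    print(rsu_level_sizes(7, 320))               # [320, 160, 80, 40, 20, 10, 10]
    print(rsu4f_level_sizes(20))                 # [20, 20, 20, 20]
    print(dilated_receptive_field((1, 2, 4, 8)))  # 31
```

A larger L yields more pooled levels and a coarser bottom level, whereas RSU4F keeps every level at its input size and grows the receptive field through dilation instead.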

2.6.3. Deep Supervision by Multiple Side-Output Fusion

2.7. Loss Function

3. Experimental Results

3.1. Dataset

3.2. Implementation Details

3.3. Experimental Results of the Two-Stage Adaptive Gamma Transform

3.4. Experimental Results of PFSNet

4. Discussion

4.1. Comparison to Other Recent Studies

4.2. Limitations of the Current Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Method | Information entropy | MSE | PSNR (dB) |
|---|---|---|---|
| Two-stage adaptive gamma transform | 5.14 | 94.36 | 31.58 |
| Adaptive gamma transform | 6.15 | 99.82 | 28.33 |
| Fixed gamma transform | 5.87 | 97.86 | 27.39 |
| Histogram equalization | 5.96 | 98.37 | 32.65 |
| No transform (original image) | 6.80 | - | - |
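The image-quality metrics in the table above can be computed as in the following sketch. It is not the authors' two-stage adaptive gamma transform, whose details are not given in this section; it implements only a fixed power-law gamma transform as a baseline, together with grey-level entropy, MSE, and PSNR for 8-bit images stored as flat lists of integers. Computing MSE and PSNR between the original and the transformed image is an assumption, consistent with the dashes in the "No transform" row, and the function names are hypothetical.

```python
import math
from collections import Counter


def gamma_transform(pixels, gamma):
    """Fixed power-law transform on 8-bit values: s = 255 * (r / 255) ** gamma."""
    return [round(255 * (p / 255) ** gamma) for p in pixels]


def information_entropy(pixels):
    """Shannon entropy (bits) of the grey-level histogram."""
    n = len(pixels)
    counts = Counter(pixels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def mse(original, processed):
    """Mean squared error between two equally sized images."""
    if len(original) != len(processed):
        raise ValueError("images must have the same number of pixels")
    return sum((a - b) ** 2 for a, b in zip(original, processed)) / len(original)


def psnr(original, processed, max_value=255):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(original, processed)
    if err == 0:
        return math.inf
    return 10 * math.log10(max_value ** 2 / err)


if __name__ == "__main__":
    # Hypothetical dark thermal image patch; gamma < 1 brightens it
    original = [10, 20, 40, 60, 60, 80, 120, 200]
    enhanced = gamma_transform(original, 0.6)
    print(information_entropy(original), information_entropy(enhanced))
    print(mse(original, enhanced), psnr(original, enhanced))
```

A gamma below 1 stretches dark grey levels and compresses bright ones, which is why it is a common choice for low-contrast thermal images.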

| Method | HD (Mean ± STD) | DSC (Mean ± STD) | IoU (Mean ± STD) | Data augmentation: flipping | Data augmentation: proposed transform |
|---|---|---|---|---|---|
| PFSNet | 0.962 ± 0.032 | 0.954 ± 0.005 | 0.973 ± 0.015 | Yes | Yes |
| PFSNet | 0.954 ± 0.026 | 0.942 ± 0.016 | 0.961 ± 0.023 | No | Yes |
| PFSNet | 0.942 ± 0.043 | 0.938 ± 0.007 | 0.956 ± 0.035 | No | No |

| Method | HD (Mean ± STD) | DSC (Mean ± STD) | IoU (Mean ± STD) |
|---|---|---|---|
| PFSNet (proposed) | 0.962 ± 0.032 | 0.954 ± 0.005 | 0.973 ± 0.014 |
| TransUNet | 0.941 ± 0.016 | 0.949 ± 0.011 | 0.951 ± 0.015 |
| U2Net | 0.952 ± 0.014 | 0.933 ± 0.021 | 0.943 ± 0.041 |
| UNet++ | 0.933 ± 0.042 | 0.931 ± 0.036 | 0.943 ± 0.043 |
| AttentionUNet | 0.918 ± 0.016 | 0.923 ± 0.018 | 0.916 ± 0.026 |
| UNet | 0.914 ± 0.037 | 0.853 ± 0.026 | 0.896 ± 0.032 |
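The segmentation metrics reported in the two tables above can be sketched for binary masks as follows. This is a brute-force illustration on masks stored as nested lists of 0/1 values, with hypothetical function names. The Hausdorff distance here is the symmetric distance between the foreground pixel sets in pixel units; any normalization behind the reported HD values is not described in this section and is not reproduced. Returning 1.0 for two empty masks is an assumed convention.

```python
import math


def _foreground(mask):
    """Set of (row, col) coordinates of nonzero pixels."""
    return {(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v}


def dice(pred, gt):
    """Dice similarity coefficient: 2|P ∩ G| / (|P| + |G|)."""
    p, g = _foreground(pred), _foreground(gt)
    if not p and not g:
        return 1.0
    return 2 * len(p & g) / (len(p) + len(g))


def iou(pred, gt):
    """Intersection over union: |P ∩ G| / |P ∪ G|."""
    p, g = _foreground(pred), _foreground(gt)
    union = p | g
    if not union:
        return 1.0
    return len(p & g) / len(union)


def hausdorff(pred, gt):
    """Symmetric Hausdorff distance (pixels) between foreground sets, O(|P|·|G|)."""
    p, g = _foreground(pred), _foreground(gt)
    if not p or not g:
        return math.inf

    def directed(a, b):
        # Largest distance from a point in a to its nearest point in b
        return max(min(math.dist(x, y) for y in b) for x in a)

    return max(directed(p, g), directed(g, p))


if __name__ == "__main__":
    gt = [[0, 1, 1], [0, 1, 1], [0, 0, 0]]
    pred = [[0, 1, 1], [0, 1, 0], [0, 0, 0]]
    print(dice(pred, gt), iou(pred, gt), hausdorff(pred, gt))
```

For any single pair of masks, DSC = 2·IoU / (1 + IoU), so DSC is never smaller than IoU; in the example above IoU is 0.75 and DSC is 6/7.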

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cao, Z.; Zeng, Z.; Xie, J.; Zhai, H.; Yin, Y.; Ma, Y.; Tian, Y. Diabetic Plantar Foot Segmentation in Active Thermography Using a Two-Stage Adaptive Gamma Transform and a Deep Neural Network. Sensors 2023, 23, 8511. https://doi.org/10.3390/s23208511
