Microsurgery Robots: Applications, Design, and Development

Abstract

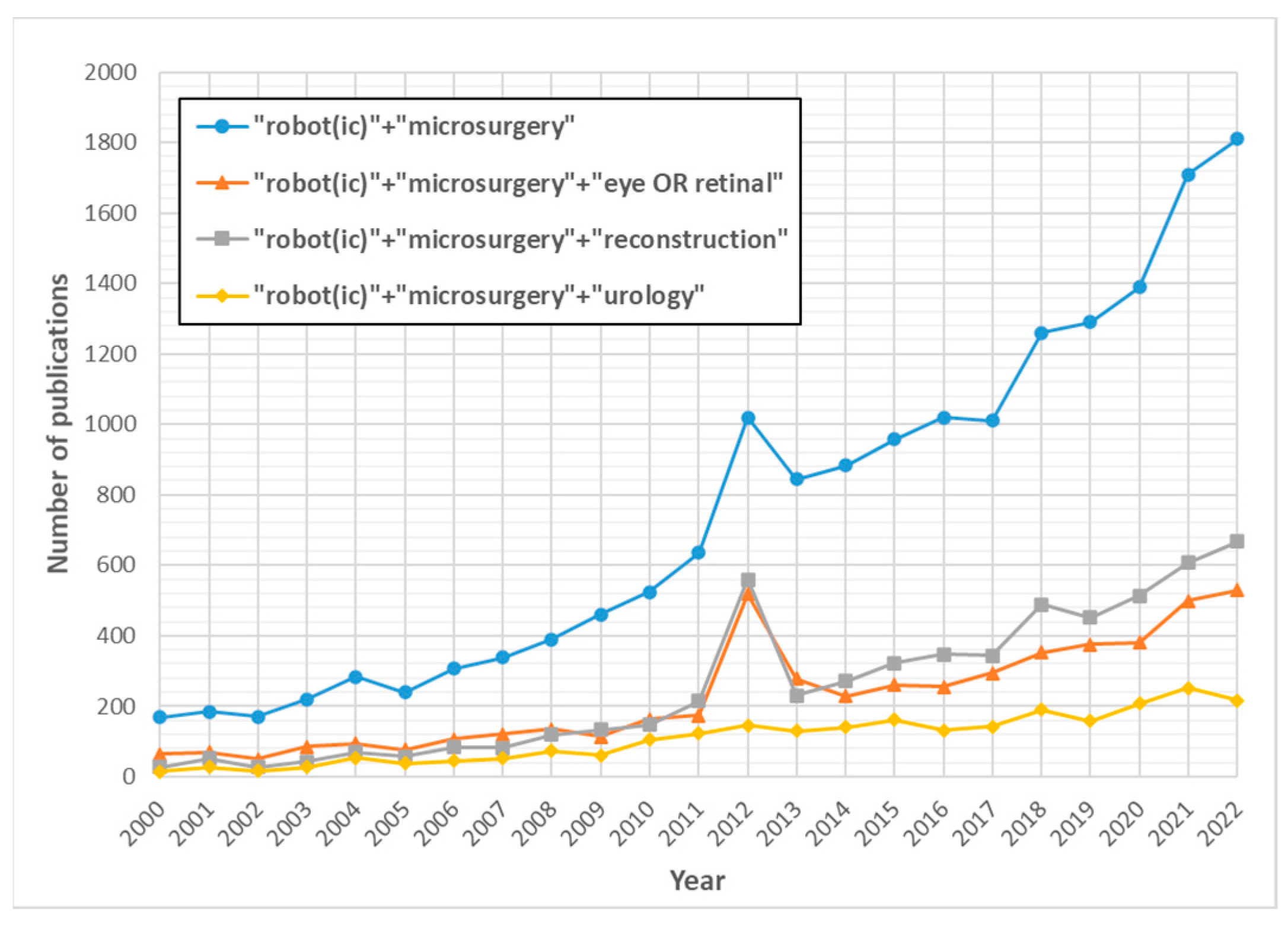

1. Introduction

2. Challenges of Microsurgery in Various Surgical Specialties

2.1. Ophthalmology

2.2. Otolaryngology

2.3. Neurosurgery

2.4. Reconstructive Surgery

2.5. Urology

2.6. Summary

- Microsurgery involves the manipulation of micron-scale targets, including the treatment of delicate and fragile tissues (such as epiretinal membranes or brain tissues), as well as suturing or injecting small vessels, nerves, and lymphatic channels. These tasks require a high degree of precision, and inadvertent tremors as the surgeon manipulates the instruments can reduce accuracy and potentially damage the targets;

- The surgeon’s perception of the surgical environment is limited in microsurgery. The limited field of view and depth of field of the surgical microscope make it difficult to perceive the position and depth of small or transparent targets, and the subtle interaction forces during the procedure can go unnoticed by the surgeon;

- Microsurgical procedures require surgeons to maintain a high level of concentration, often performing prolonged surgical tasks in ergonomically unfavorable positions. This can lead to physical and mental fatigue, increasing the risk of inadvertent errors;

- Due to the precision and complexity of microsurgical tasks, surgeons require extensive professional training before performing clinical procedures.

3. Key Technologies of the MSR Systems

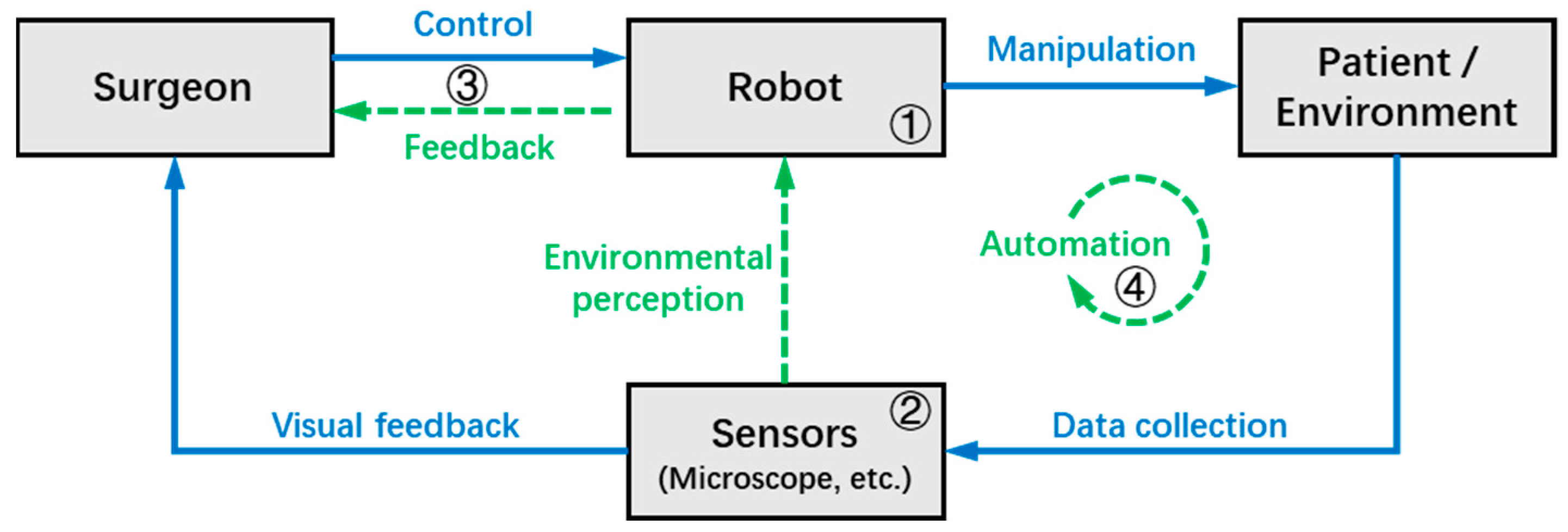

3.1. Concept of Robotics in Microsurgery

- ➀ Operation modes and mechanism designs: As the foundation of the robot system, this section discusses the structural design and control types of the MSR;

- ➁ Sensing and perception: This is the medium through which surgeons and robots perceive the surgical environment, and this section discusses the techniques that MSR systems use to collect environmental data;

- ➂ Human–machine interaction (HMI): This section focuses on the interaction and collaboration between the surgeon and the MSR, discussing techniques that can improve surgeon precision and comfort and provide more intuitive feedback on surgical information;

- ➃ Automation: This section discusses technologies that let the robot perform surgical tasks automatically or semi-automatically, which can improve surgical efficiency and reduce the surgeon’s workload.

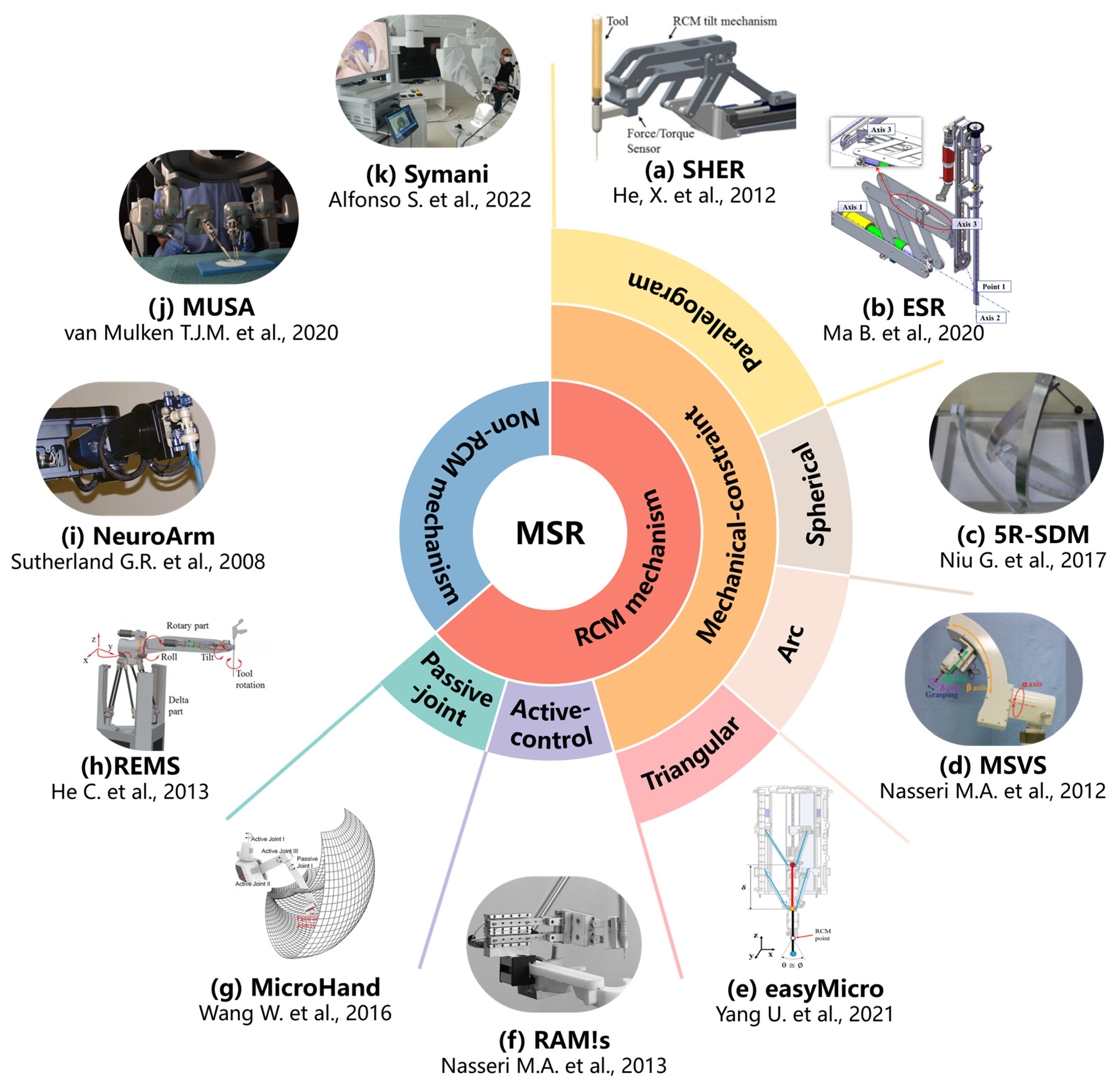

3.2. MSR Operation Modes and Mechanism Designs

- Passive-joint RCM mechanism.

- Active-control RCM mechanism.

- Mechanical-constraint RCM mechanism.
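As a rough illustration of the active-control variant listed above, where the remote center of motion (RCM) is enforced in software rather than by the linkage, the sketch below computes the shaft direction and insertion depth needed for a straight tool to pass through a fixed entry point and reach a target tip position. The function name `rcm_pose` and the coordinate conventions are assumptions made for this example; they are not taken from any of the systems discussed here.

```python
import math
from typing import Sequence, Tuple, List


def rcm_pose(rcm: Sequence[float], tip: Sequence[float]) -> Tuple[List[float], float]:
    """Return (unit shaft direction, insertion depth) for a straight tool.

    The shaft is constrained to pass through the fixed point `rcm`
    (for example, the incision) and to end at `tip`. Both points are
    3D coordinates in the same frame and unit (hypothetical convention).
    """
    # Vector from the entry point to the desired tip position.
    delta = [t - r for t, r in zip(tip, rcm)]
    depth = math.sqrt(sum(c * c for c in delta))
    if depth == 0.0:
        # The shaft direction is undefined when the tip sits on the RCM point.
        raise ValueError("tip coincides with the RCM point")
    direction = [c / depth for c in delta]
    return direction, depth


# Usage: entry point at the origin, target 5 units away.
direction, depth = rcm_pose((0.0, 0.0, 0.0), (0.0, 3.0, 4.0))
```

A controller built on this idea would convert the direction into two orientation angles and command them together with the insertion depth, so that any lateral motion at the entry point stays zero by construction.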

3.3. Sensing and Perception in MSR

3.3.1. Imaging Modalities

- Magnetic resonance imaging and computed tomography

- Optical coherence tomography

- Near-infrared fluorescence

- Other imaging technologies

3.3.2. 3D Localization

- Target detection

- Depth perception based on microscope

- Depth perception based on other imaging methods

3.3.3. Force Sensing

- Electrical strain gauge-based force sensors

- Optical fiber-based force sensors

3.4. Human–Machine Interaction (HMI)

3.4.1. Force Feedback

- Direct force feedback

- Sensory substitution-based force feedback

3.4.2. Improved Control Performance

- Tremor filtering

- Motion scaling

- Virtual fixture
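The three control aids listed above can be chained in a single mapping from hand motion to tool motion. The following is a minimal sketch, assuming a first-order low-pass filter for tremor filtering, a constant gain for motion scaling, and a simple depth limit as a forbidden-region virtual fixture. The class name `HandMotionPipeline` and all parameter values are hypothetical and chosen only for illustration; real systems may use other filters and fixture geometries.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Sequence


@dataclass
class HandMotionPipeline:
    """Illustrative hand-to-tool mapping; names and defaults are assumptions."""

    scale: float = 0.1   # motion scaling: 10 mm of hand travel gives 1 mm at the tool
    alpha: float = 0.2   # low-pass smoothing factor, 0 < alpha <= 1 (smaller = smoother)
    z_min: float = 0.0   # virtual fixture: tool tip may not go below this depth (mm)
    _filtered: Optional[List[float]] = field(default=None, init=False)

    def step(self, hand_xyz: Sequence[float]) -> List[float]:
        # 1) Tremor filtering: exponential smoothing attenuates fast oscillations.
        if self._filtered is None:
            self._filtered = list(hand_xyz)
        else:
            self._filtered = [
                f + self.alpha * (h - f) for f, h in zip(self._filtered, hand_xyz)
            ]
        # 2) Motion scaling: shrink the filtered hand motion to tool scale.
        tool = [self.scale * f for f in self._filtered]
        # 3) Virtual fixture: clamp the depth so the tip cannot enter z < z_min.
        tool[2] = max(tool[2], self.z_min)
        return tool


# Usage: feed one hand position sample per control cycle.
pipeline = HandMotionPipeline(scale=0.1, alpha=0.5, z_min=0.0)
tool_position = pipeline.step([10.0, 20.0, 30.0])
```

The order matters in this sketch: filtering before scaling keeps the filter state in hand coordinates, and applying the fixture last guarantees the commanded tool position never violates the limit regardless of the other two stages.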

3.4.3. Extended Reality (XR) Environment

- High-fidelity 3D reconstruction

- XR for preoperative training

- XR for intraoperative guidance

3.5. Automation

- Current automated methods

- Potential automated methods

4. Classic MSR Systems

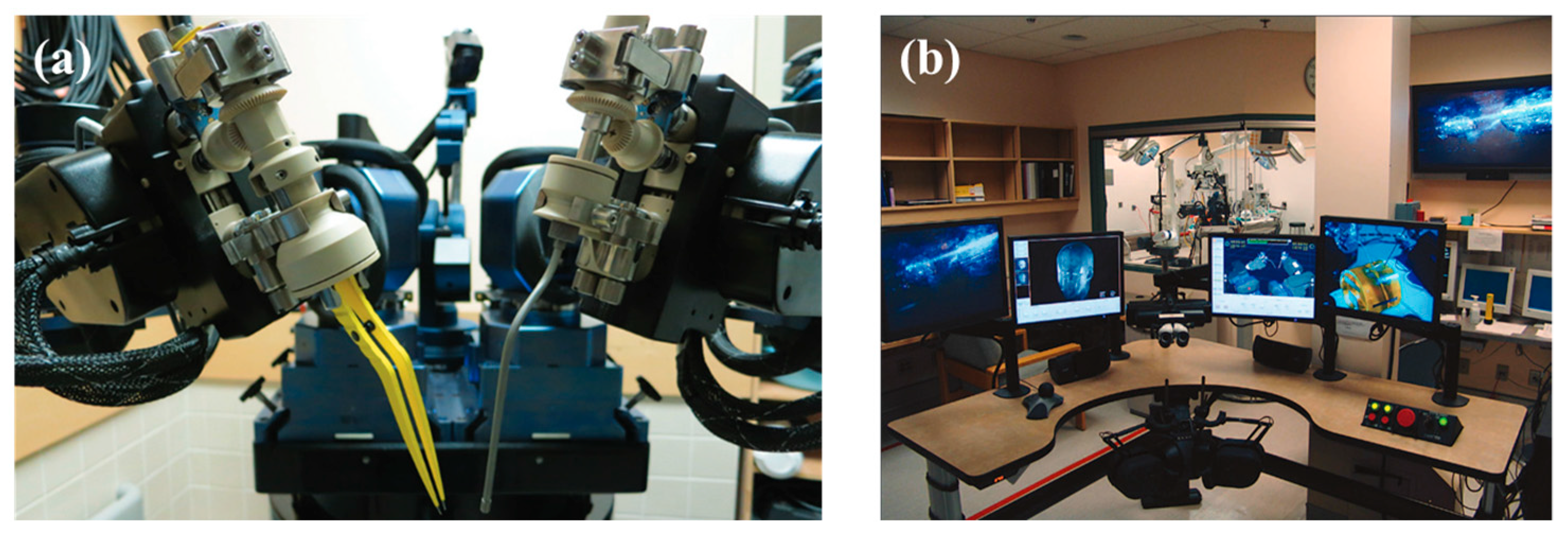

4.1. NeuroArm System

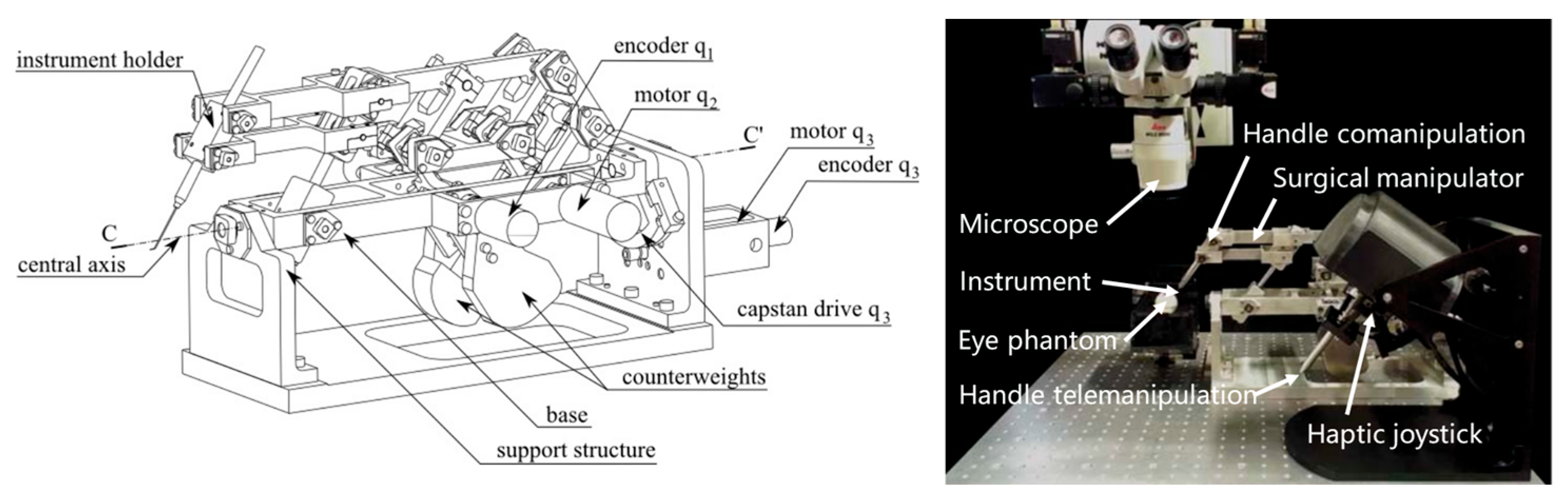

4.2. REMS

4.3. MUSA System

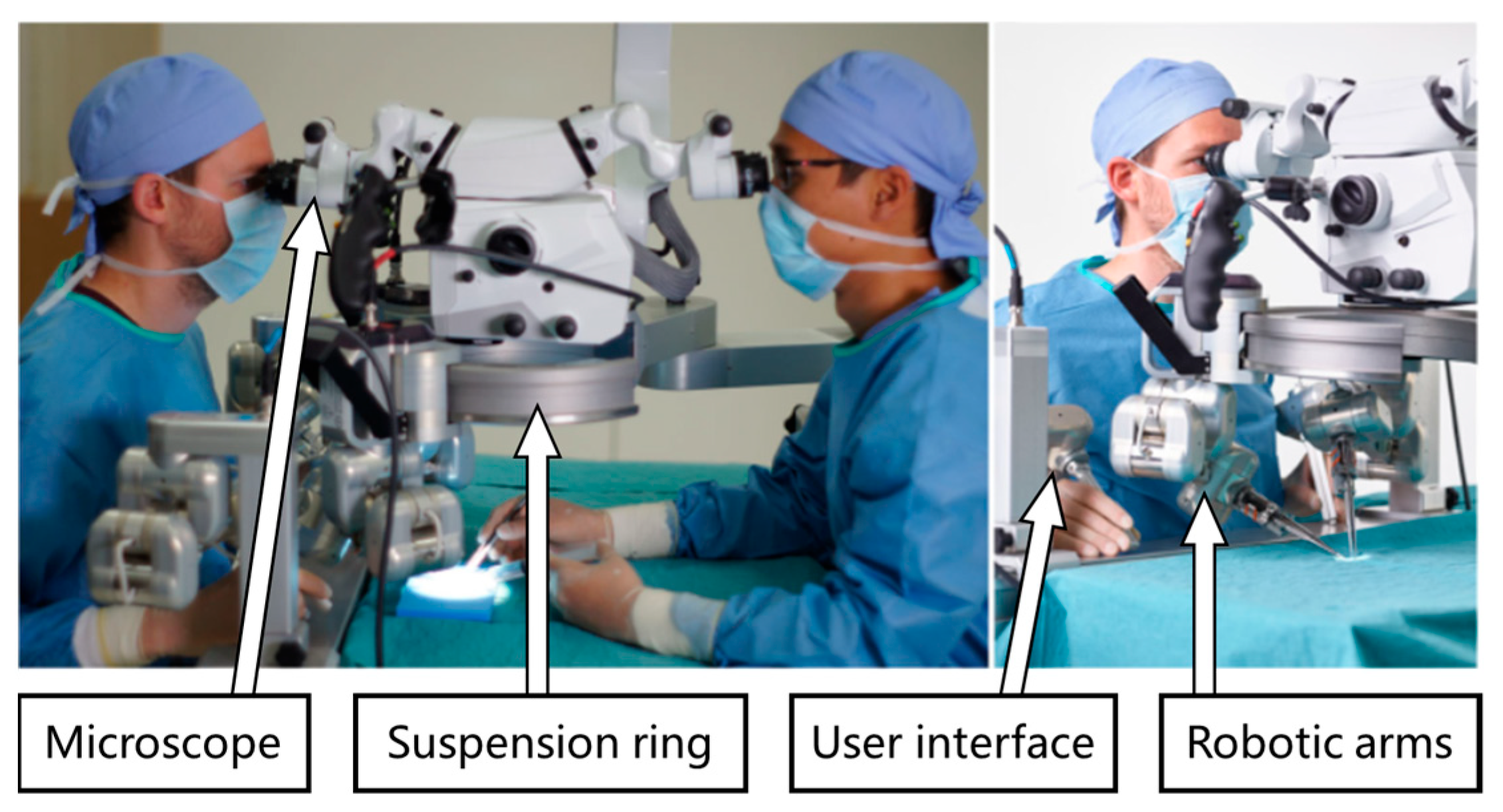

4.4. IRISS

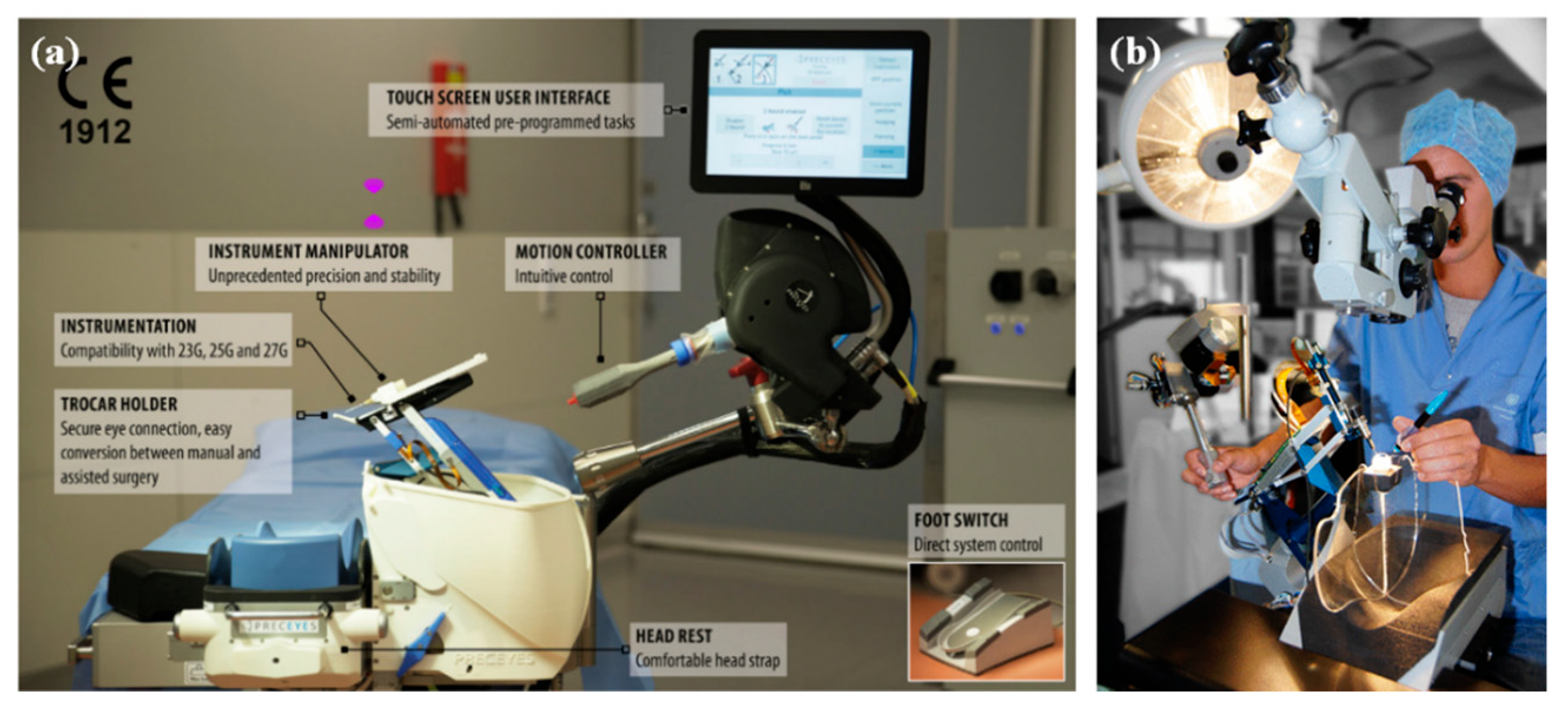

4.5. Preceyes Surgical System

4.6. Co-Manipulator System

4.7. Main Parameters of Classic MSR Systems

5. Current Challenges and Future Directions

5.1. Current Challenges

5.2. Future Directions

5.2.1. Further Human Factors Consideration

5.2.2. Multiple Sensor Fusion

5.2.3. Higher Level of Autonomy

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


- Kurmann, T.; Neila, P.M.; Du, X.; Fua, P.; Stoyanov, D.; Wolf, S.; Sznitman, R. Simultaneous Recognition and Pose Estimation of Instruments in Minimally Invasive Surgery. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2017: 20th International Conference, Quebec City, QC, Canada, 11–13 September 2017; Volume 10434, pp. 505–513. [Google Scholar]

- Laina, I.; Rieke, N.; Rupprecht, C.; Vizcaíno, J.P.; Eslami, A.; Tombari, F.; Navab, N. Concurrent Segmentation and Localization for Tracking of Surgical Instruments. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2017: 20th International Conference, Quebec City, QC, Canada, 11–13 September 2017; pp. 664–672. [Google Scholar]

- Park, I.; Kim, H.K.; Chung, W.K.; Kim, K. Deep Learning Based Real-Time OCT Image Segmentation and Correction for Robotic Needle Insertion Systems. IEEE Robot. Autom. Lett. 2020, 5, 4517–4524. [Google Scholar] [CrossRef]

- Ding, A.S.; Lu, A.; Li, Z.; Galaiya, D.; Siewerdsen, J.H.; Taylor, R.H.; Creighton, F.X. Automated Registration-Based Temporal Bone Computed Tomography Segmentation for Applications in Neurotologic Surgery. Otolaryngol.—Head Neck Surg. 2022, 167, 133–140. [Google Scholar] [CrossRef]

- Tayama, T.; Kurose, Y.; Marinho, M.M.; Koyama, Y.; Harada, K.; Omata, S.; Arai, F.; Sugimoto, K.; Araki, F.; Totsuka, K.; et al. Autonomous Positioning of Eye Surgical Robot Using the Tool Shadow and Kalman Filtering. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018; pp. 1723–1726. [Google Scholar]

- Hu, Z.; Niemeijer, M.; Abramoff, M.D.; Garvin, M.K. Multimodal Retinal Vessel Segmentation From Spectral-Domain Optical Coherence Tomography and Fundus Photography. IEEE Trans. Med. Imaging 2012, 31, 1900–1911. [Google Scholar] [CrossRef] [PubMed]

- Yousefi, S.; Liu, T.; Wang, R.K. Segmentation and Quantification of Blood Vessels for OCT-Based Micro-Angiograms Using Hybrid Shape/Intensity Compounding. Microvasc. Res. 2015, 97, 37–46. [Google Scholar] [CrossRef] [PubMed]

- Spaide, R.F.; Fujimoto, J.G.; Waheed, N.K.; Sadda, S.R.; Staurenghi, G. Optical Coherence Tomography Angiography. Progress. Retin. Eye Res. 2018, 64, 1–55. [Google Scholar] [CrossRef] [PubMed]

- Leitgeb, R.A. En Face Optical Coherence Tomography: A Technology Review. Biomed. Opt. Express 2019, 10, 2177. [Google Scholar] [CrossRef] [PubMed]

- Li, M.; Chen, Y.; Ji, Z.; Xie, K.; Yuan, S.; Chen, Q.; Li, S. Image Projection Network: 3D to 2D Image Segmentation in OCTA Images. IEEE Trans. Med. Imaging 2020, 39, 3343–3354. [Google Scholar] [CrossRef] [PubMed]

- Burschka, D.; Corso, J.J.; Dewan, M.; Lau, W.; Li, M.; Lin, H.; Marayong, P.; Ramey, N.; Hager, G.D.; Hoffman, B.; et al. Navigating Inner Space: 3-D Assistance for Minimally Invasive Surgery. Robot. Auton. Syst. 2005, 52, 5–26. [Google Scholar] [CrossRef]

- Probst, T.; Maninis, K.-K.; Chhatkuli, A.; Ourak, M.; Poorten, E.V.; Van Gool, L. Automatic Tool Landmark Detection for Stereo Vision in Robot-Assisted Retinal Surgery. IEEE Robot. Autom. Lett. 2018, 3, 612–619. [Google Scholar] [CrossRef]

- Kim, J.W.; Zhang, P.; Gehlbach, P.; Iordachita, I.; Kobilarov, M. Towards Autonomous Eye Surgery by Combining Deep Imitation Learning with Optimal Control. In Proceedings of the Conference on Robot Learning, London, UK, 8–11 November 2021. [Google Scholar]

- Kim, J.W.; He, C.; Urias, M.; Gehlbach, P.; Hager, G.D.; Iordachita, I.; Kobilarov, M. Autonomously Navigating a Surgical Tool Inside the Eye by Learning from Demonstration. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 7351–7357. [Google Scholar]

- Tayama, T.; Kurose, Y.; Nitta, T.; Harada, K.; Someya, Y.; Omata, S.; Arai, F.; Araki, F.; Totsuka, K.; Ueta, T.; et al. Image Processing for Autonomous Positioning of Eye Surgery Robot in Micro-Cannulation. Procedia CIRP 2017, 65, 105–109. [Google Scholar] [CrossRef]

- Koyama, Y.; Marinho, M.M.; Mitsuishi, M.; Harada, K. Autonomous Coordinated Control of the Light Guide for Positioning in Vitreoretinal Surgery. IEEE Trans. Med. Robot. Bionics 2022, 4, 156–171. [Google Scholar] [CrossRef]

- Richa, R.; Balicki, M.; Sznitman, R.; Meisner, E.; Taylor, R.; Hager, G. Vision-Based Proximity Detection in Retinal Surgery. IEEE Trans. Biomed. Eng. 2012, 59, 2291–2301. [Google Scholar] [CrossRef]

- Wu, B.-F.; Lu, W.-C.; Jen, C.-L. Monocular Vision-Based Robot Localization and Target Tracking. J. Robot. 2011, 2011, 548042. [Google Scholar] [CrossRef]

- Yang, S.; Martel, J.N.; Lobes, L.A.; Riviere, C.N. Techniques for Robot-Aided Intraocular Surgery Using Monocular Vision. Int. J. Robot. Res. 2018, 37, 931–952. [Google Scholar] [CrossRef] [PubMed]

- Yoshimura, M.; Marinho, M.M.; Harada, K.; Mitsuishi, M. Single-Shot Pose Estimation of Surgical Robot Instruments’ Shafts from Monocular Endoscopic Images. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 9960–9966. [Google Scholar]

- Bergeles, C.; Kratochvil, B.E.; Nelson, B.J. Visually Servoing Magnetic Intraocular Microdevices. IEEE Trans. Robot. 2012, 28, 798–809. [Google Scholar] [CrossRef]

- Bergeles, C.; Shamaei, K.; Abbott, J.J.; Nelson, B.J. Single-Camera Focus-Based Localization of Intraocular Devices. IEEE Trans. Biomed. Eng. 2010, 57, 2064–2074. [Google Scholar] [CrossRef] [PubMed]

- Bruns, T.L.; Webster, R.J. An Image Guidance System for Positioning Robotic Cochlear Implant Insertion Tools. In Medical Imaging 2017: Image-Guided Procedures, Robotic Interventions, and Modeling; Webster, R.J., Fei, B., Eds.; SPIE: Orlando, FL, USA, 2017; Volume 10135, pp. 199–204. [Google Scholar]

- Bruns, T.L.; Riojas, K.E.; Ropella, D.S.; Cavilla, M.S.; Petruska, A.J.; Freeman, M.H.; Labadie, R.F.; Abbott, J.J.; Webster, R.J. Magnetically Steered Robotic Insertion of Cochlear-Implant Electrode Arrays: System Integration and First-In-Cadaver Results. IEEE Robot. Autom. Lett. 2020, 5, 2240–2247. [Google Scholar] [CrossRef] [PubMed]

- Clarke, C.; Etienne-Cummings, R. Design of an Ultrasonic Micro-Array for Near Field Sensing During Retinal Microsurgery. In Proceedings of the 2006 International Conference of the IEEE Engineering in Medicine and Biology Society, New York, NY, USA, 30 August–3 September 2006. [Google Scholar]

- Weiss, J.; Rieke, N.; Nasseri, M.A.; Maier, M.; Eslami, A.; Navab, N. Fast 5DOF Needle Tracking in iOCT. Int. J. CARS 2018, 13, 787–796. [Google Scholar] [CrossRef] [PubMed]

- Roodaki, H.; Filippatos, K.; Eslami, A.; Navab, N. Introducing Augmented Reality to Optical Coherence Tomography in Ophthalmic Microsurgery. In Proceedings of the 2015 IEEE International Symposium on Mixed and Augmented Reality, Fukuoka, Japan, 29 September–3 October 2015; pp. 1–6. [Google Scholar]

- Zhou, M.; Wang, X.; Weiss, J.; Eslami, A.; Huang, K.; Maier, M.; Lohmann, C.P.; Navab, N.; Knoll, A.; Nasseri, M.A. Needle Localization for Robot-Assisted Subretinal Injection Based on Deep Learning. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 8727–8732. [Google Scholar]

- Zhou, M.; Hamad, M.; Weiss, J.; Eslami, A.; Huang, K.; Maier, M.; Lohmann, C.P.; Navab, N.; Knoll, A.; Nasseri, M.A. Towards Robotic Eye Surgery: Marker-Free, Online Hand-Eye Calibration Using Optical Coherence Tomography Images. IEEE Robot. Autom. Lett. 2018, 3, 7. [Google Scholar] [CrossRef]

- Zhou, M.; Wu, J.; Ebrahimi, A.; Patel, N.; Liu, Y.; Navab, N.; Gehlbach, P.; Knoll, A.; Nasseri, M.A.; Iordachita, I. Spotlight-Based 3D Instrument Guidance for Autonomous Task in Robot-Assisted Retinal Surgery. IEEE Robot. Autom. Lett. 2021, 6, 7750–7757. [Google Scholar] [CrossRef]

- Esteveny, L.; Schoevaerdts, L.; Gijbels, A.; Reynaerts, D.; Poorten, E.V. Experimental Validation of Instrument Insertion Precision in Robot-Assisted Eye-Surgery. Available online: https://eureyecase.eu/publications/papers/Esteveny_CRAS2015.pdf (accessed on 10 July 2023).

- Riojas, K.E. Making Cochlear-Implant Electrode Array Insertion Less Invasive, Safer, and More Effective through Design, Magnetic Steering, and Impedance Sensing. Ph.D. Thesis, Vanderbilt University, Nashville, TN, USA, 2021. [Google Scholar]

- Heller, M.A.; Heller, M.A.; Schiff, W.; Heller, M.A. The Psychology of Touch; Psychology Press: New York, NY, USA, 2013; ISBN 978-1-315-79962-9. [Google Scholar]

- Culmer, P.; Alazmani, A.; Mushtaq, F.; Cross, W.; Jayne, D. Haptics in Surgical Robots. In Handbook of Robotic and Image-Guided Surgery; Elsevier: Amsterdam, The Netherlands, 2020; pp. 239–263. ISBN 978-0-12-814245-5. [Google Scholar]

- Maddahi, Y.; Gan, L.S.; Zareinia, K.; Lama, S.; Sepehri, N.; Sutherland, G.R. Quantifying Workspace and Forces of Surgical Dissection during Robot-Assisted Neurosurgery. Int. J. Med. Robot. Comput. Assist. Surg. 2016, 12, 528–537. [Google Scholar] [CrossRef]

- Francone, A.; Huang, J.M.; Ma, J.; Tsao, T.-C.; Rosen, J.; Hubschman, J.-P. The Effect of Haptic Feedback on Efficiency and Safety During Preretinal Membrane Peeling Simulation. Trans. Vis. Sci. Tech. 2019, 8, 2. [Google Scholar] [CrossRef]

- van den Bedem, L.; Hendrix, R.; Rosielle, N.; Steinbuch, M.; Nijmeijer, H. Design of a Minimally Invasive Surgical Teleoperated Master-Slave System with Haptic Feedback. In Proceedings of the 2009 International Conference on Mechatronics and Automation, Changchun, China, 9–12 August 2009; pp. 60–65. [Google Scholar]

- Patel, R.V.; Atashzar, S.F.; Tavakoli, M. Haptic Feedback and Force-Based Teleoperation in Surgical Robotics. Proc. IEEE 2022, 110, 1012–1027. [Google Scholar] [CrossRef]

- Abushagur, A.; Arsad, N.; Reaz, M.; Bakar, A. Advances in Bio-Tactile Sensors for Minimally Invasive Surgery Using the Fibre Bragg Grating Force Sensor Technique: A Survey. Sensors 2014, 14, 6633–6665. [Google Scholar] [CrossRef] [PubMed]

- Rao, Y.J. Recent Progress in Applications of In-Fibre Bragg Grating Sensors. Opt. Lasers Eng. 1999, 31, 297–324. [Google Scholar] [CrossRef]

- Bell, B.; Stankowski, S.; Moser, B.; Oliva, V.; Stieger, C.; Nolte, L.-P.; Caversaccio, M.; Weber, S. Integrating Optical Fiber Force Sensors into Microforceps for ORL Microsurgery. In Proceedings of the 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology, Buenos Aires, Argentina, 31 August–4 September 2010; pp. 1848–1851. [Google Scholar]

- He, X.; Balicki, M.A.; Kang, J.U.; Gehlbach, P.L.; Handa, J.T.; Taylor, R.H.; Iordachita, I.I. Force Sensing Micro-Forceps with Integrated Fiber Bragg Grating for Vitreoretinal Surgery. In Proceedings of the Optical Fibers and Sensors for Medical Diagnostics and Treatment Applications XII., San Francisco, CA, USA, 9 February 2012; p. 82180W. [Google Scholar]

- He, X.; Gehlbach, P.; Handa, J.; Taylor, R.; Iordachita, I. Development of a Miniaturized 3-DOF Force Sensing Instrument for Robotically Assisted Retinal Microsurgery and Preliminary Results. In Proceedings of the 5th IEEE RAS/EMBS International Conference on Biomedical Robotics and Biomechatronics, Sao Paulo, Brazil, 12–15 August 2014; pp. 252–258. [Google Scholar]

- He, X.; Balicki, M.A.; Kang, J.U.; Gehlbach, P.L.; Handa, J.T.; Taylor, R.H.; Iordachita, I.I.; He, X.; Gehlbach, P.; Handa, J.; et al. 3-DOF Force-Sensing Motorized Micro-Forceps for Robot-Assisted Vitreoretinal Surgery. IEEE Sens. J. 2017, 17, 3526–3541. [Google Scholar] [CrossRef]

- He, X.; Balicki, M.; Gehlbach, P.; Handa, J.; Taylor, R.; Iordachita, I. A Multi-Function Force Sensing Instrument for Variable Admittance Robot Control in Retinal Microsurgery. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 1411–1418. [Google Scholar]

- Gonenc, B.; Balicki, M.A.; Handa, J.; Gehlbach, P.; Riviere, C.N.; Taylor, R.H.; Iordachita, I. Preliminary Evaluation of a Micro-Force Sensing Handheld Robot for Vitreoretinal Surgery. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 4125–4130. [Google Scholar]

- Gonenc, B.; Tran, N.; Gehlbach, P.; Taylor, R.H.; Iordachita, I. Robot-Assisted Retinal Vein Cannulation with Force-Based Puncture Detection: Micron vs. the Steady-Hand Eye Robot. In Proceedings of the 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 5107–5111. [Google Scholar]

- Gijbels, A.; Vander Poorten, E.B.; Stalmans, P.; Reynaerts, D. Development and Experimental Validation of a Force Sensing Needle for Robotically Assisted Retinal Vein Cannulations. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 2270–2276. [Google Scholar]

- Smits, J.; Ourak, M.; Gijbels, A.; Esteveny, L.; Borghesan, G.; Schoevaerdts, L.; Willekens, K.; Stalmans, P.; Lankenau, E.; Schulz-Hildebrandt, H.; et al. Development and Experimental Validation of a Combined FBG Force and OCT Distance Sensing Needle for Robot-Assisted Retinal Vein Cannulation. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 129–134. [Google Scholar]

- Enayati, N.; De Momi, E.; Ferrigno, G. Haptics in Robot-Assisted Surgery: Challenges and Benefits. IEEE Rev. Biomed. Eng. 2016, 9, 49–65. [Google Scholar] [CrossRef] [PubMed]

- Maddahi, Y.; Zareinia, K.; Sepehri, N.; Sutherland, G. Surgical Tool Motion during Conventional Freehand and Robot-Assisted Microsurgery Conducted Using neuroArm. Adv. Robot. 2016, 30, 621–633. [Google Scholar] [CrossRef]

- Zareinia, K.; Maddahi, Y.; Ng, C.; Sepehri, N.; Sutherland, G.R. Performance Evaluation of Haptic Hand-Controllers in a Robot-Assisted Surgical System. Int. J. Med. Robot. Comput. Assist. Surg. 2015, 11, 486–501. [Google Scholar] [CrossRef]

- Hoshyarmanesh, H.; Zareinia, K.; Lama, S.; Sutherland, G.R. Structural Design of a Microsurgery-Specific Haptic Device: NeuroArmPLUS Prototype. Mechatronics 2021, 73, 102481. [Google Scholar] [CrossRef]

- Hutchison, D.; Kanade, T.; Kittler, J.; Kleinberg, J.M.; Mattern, F.; Mitchell, J.C.; Naor, M.; Nierstrasz, O.; Pandu Rangan, C.; Steffen, B.; et al. Micro-Force Sensing in Robot Assisted Membrane Peeling for Vitreoretinal Surgery. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2010; Jiang, T., Navab, N., Pluim, J.P.W., Viergever, M.A., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2010; Volume 6363, pp. 303–310. ISBN 978-3-642-15710-3. [Google Scholar]

- El Rassi, I.; El Rassi, J.-M. A Review of Haptic Feedback in Tele-Operated Robotic Surgery. J. Med. Eng. Technol. 2020, 44, 247–254. [Google Scholar] [CrossRef]

- Gonenc, B.; Chae, J.; Gehlbach, P.; Taylor, R.H.; Iordachita, I. Towards Robot-Assisted Retinal Vein Cannulation: A Motorized Force-Sensing Microneedle Integrated with a Handheld Micromanipulator. Sensors 2017, 17, 2195. [Google Scholar] [CrossRef]

- Gonenc, B.; Handa, J.; Gehlbach, P.; Taylor, R.H.; Iordachita, I. A Comparative Study for Robot Assisted Vitreoretinal Surgery: Micron vs. the Steady-Hand Robot. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 4832–4837. [Google Scholar]

- Talasaz, A.; Trejos, A.L.; Patel, R.V. The Role of Direct and Visual Force Feedback in Suturing Using a 7-DOF Dual-Arm Teleoperated System. IEEE Trans. Haptics 2017, 10, 276–287. [Google Scholar] [CrossRef] [PubMed]

- Prattichizzo, D.; Pacchierotti, C.; Rosati, G. Cutaneous Force Feedback as a Sensory Subtraction Technique in Haptics. IEEE Trans. Haptics 2012, 5, 289–300. [Google Scholar] [CrossRef] [PubMed]

- Meli, L.; Pacchierotti, C.; Prattichizzo, D. Sensory Subtraction in Robot-Assisted Surgery: Fingertip Skin Deformation Feedback to Ensure Safety and Improve Transparency in Bimanual Haptic Interaction. IEEE Trans. Biomed. Eng. 2014, 61, 1318–1327. [Google Scholar] [CrossRef] [PubMed]

- Aggravi, M.; Estima, D.; Krupa, A.; Misra, S.; Pacchierotti, C. Haptic Teleoperation of Flexible Needles Combining 3D Ultrasound Guidance and Needle Tip Force Feedback. IEEE Robot. Autom. Lett. 2021, 9, 4859–4866. [Google Scholar] [CrossRef]

- Gijbels, A.; Wouters, N.; Stalmans, P.; Van Brussel, H.; Reynaerts, D.; Poorten, E.V. Design and Realisation of a Novel Robotic Manipulator for Retinal Surgery. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 3598–3603. [Google Scholar]

- Li, H.-Y.; Nuradha, T.; Xavier, S.A.; Tan, U.-X. Towards A Compliant and Accurate Cooperative Micromanipulator Using Variable Admittance Control. In Proceedings of the 2018 3rd International Conference on Advanced Robotics and Mechatronics (ICARM), Singapore, 18–20 July 2018; pp. 230–235. [Google Scholar]

- Ballestín, A.; Malzone, G.; Menichini, G.; Lucattelli, E.; Innocenti, M. New Robotic System with Wristed Microinstruments Allows Precise Reconstructive Microsurgery: Preclinical Study. Ann. Surg. Oncol. 2022, 29, 7859–7867. [Google Scholar] [CrossRef] [PubMed]

- MacLachlan, R.A.; Becker, B.C.; Tabares, J.C.; Podnar, G.W.; Lobes, L.A.; Riviere, C.N. Micron: An Actively Stabilized Handheld Tool for Microsurgery. IEEE Trans. Robot. 2012, 28, 195–212. [Google Scholar] [CrossRef] [PubMed]

- Zhang, D.; Xiao, B.; Huang, B.; Zhang, L.; Liu, J.; Yang, G.-Z. A Self-Adaptive Motion Scaling Framework for Surgical Robot Remote Control. IEEE Robot. Autom. Lett. 2019, 4, 359–366. [Google Scholar] [CrossRef]

- Abbott, J.J.; Hager, G.D.; Okamura, A.M. Steady-Hand Teleoperation with Virtual Fixtures. In Proceedings of the 12th IEEE International Workshop on Robot and Human Interactive Communication, 2003, ROMAN 2003, Millbrae, CA, USA, 2 November 2003; pp. 145–151. [Google Scholar]

- Becker, B.C.; MacLachlan, R.A.; Lobes, L.A.; Hager, G.D.; Riviere, C.N. Vision-Based Control of a Handheld Surgical Micromanipulator With Virtual Fixtures. IEEE Trans. Robot. 2013, 29, 674–683. [Google Scholar] [CrossRef]

- Dewan, M.; Marayong, P.; Okamura, A.M.; Hager, G.D. Vision-Based Assistance for Ophthalmic Micro-Surgery. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2004; Barillot, C., Haynor, D.R., Hellier, P., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2004; Volume 3217, pp. 49–57. ISBN 978-3-540-22977-3. [Google Scholar]

- Yang, Y.; Jiang, Z.; Yang, Y.; Qi, X.; Hu, Y.; Du, J.; Han, B.; Liu, G. Safety Control Method of Robot-Assisted Cataract Surgery with Virtual Fixture and Virtual Force Feedback. J. Intell. Robot. Syst. 2020, 97, 17–32. [Google Scholar] [CrossRef]

- Balicki, M.; Han, J.-H.; Iordachita, I.; Gehlbach, P.; Handa, J.; Taylor, R.; Kang, J. Single Fiber Optical Coherence Tomography Microsurgical Instruments for Computer and Robot-Assisted Retinal Surgery. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2009; Yang, G.-Z., Hawkes, D., Rueckert, D., Noble, A., Taylor, C., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2009; Volume 5761, pp. 108–115. ISBN 978-3-642-04267-6. [Google Scholar]

- Maberley, D.A.L.; Beelen, M.; Smit, J.; Meenink, T.; Naus, G.; Wagner, C.; de Smet, M.D. A Comparison of Robotic and Manual Surgery for Internal Limiting Membrane Peeling. Graefes Arch. Clin. Exp. Ophthalmol. 2020, 258, 773–778. [Google Scholar] [CrossRef]

- Kang, J.; Cheon, G. Demonstration of Subretinal Injection Using Common-Path Swept Source OCT Guided Microinjector. Appl. Sci. 2018, 8, 1287. [Google Scholar] [CrossRef]

- Razavi, C.R.; Wilkening, P.R.; Yin, R.; Barber, S.R.; Taylor, R.H.; Carey, J.P.; Creighton, F.X. Image-Guided Mastoidectomy with a Cooperatively Controlled ENT Microsurgery Robot. Otolaryngol. Head. Neck Surg. 2019, 161, 852–855. [Google Scholar] [CrossRef] [PubMed]

- Li, M.; Taylor, R.H. Optimum Robot Control for 3D Virtual Fixture in Constrained ENT Surgery. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2003; Ellis, R.E., Peters, T.M., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2003; Volume 2878, pp. 165–172. ISBN 978-3-540-20462-6. [Google Scholar]

- Andrews, C.; Southworth, M.K.; Silva, J.N.A.; Silva, J.R. Extended Reality in Medical Practice. Curr. Treat. Options Cardio Med. 2019, 21, 18. [Google Scholar] [CrossRef] [PubMed]

- Mathew, P.S.; Pillai, A.S. Role of Immersive (XR) Technologies in Improving Healthcare Competencies: A Review. In Advances in Computational Intelligence and Robotics; Guazzaroni, G., Pillai, A.S., Eds.; IGI Global: Hershey, PA, USA, 2020; pp. 23–46. ISBN 978-1-79981-796-3. [Google Scholar]

- Fu, J.; Palumbo, M.C.; Iovene, E.; Liu, Q.; Burzo, I.; Redaelli, A.; Ferrigno, G.; De Momi, E. Augmented Reality-Assisted Robot Learning Framework for Minimally Invasive Surgery Task. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May 2023; pp. 11647–11653. [Google Scholar]

- Zhou, X.; He, J.; Bhushan; Qi, W.; Hu, Y.; Dai, J.; Xu, Y. Hybrid IMU/Muscle Signals Powered Teleoperation Control of Serial Manipulator Incorporating Passivity Adaptation. In Proceedings of the 2020 5th International Conference on Advanced Robotics and Mechatronics (ICARM), Shenzhen, China, 18–21 December 2020; pp. 228–233. [Google Scholar]

- Shaikh, H.J.F.; Hasan, S.S.; Woo, J.J.; Lavoie-Gagne, O.; Long, W.J.; Ramkumar, P.N. Exposure to Extended Reality and Artificial Intelligence-Based Manifestations: A Primer on the Future of Hip and Knee Arthroplasty. J. Arthroplast. 2023, 38, S0883540323004813. [Google Scholar] [CrossRef] [PubMed]

- Serafin, S.; Geronazzo, M.; Erkut, C.; Nilsson, N.C.; Nordahl, R. Sonic Interactions in Virtual Reality: State of the Art, Current Challenges, and Future Directions. IEEE Comput. Grap. Appl. 2018, 38, 31–43. [Google Scholar] [CrossRef] [PubMed]

- Ugwoke, C.K.; Albano, D.; Umek, N.; Dumić-Čule, I.; Snoj, Ž. Application of Virtual Reality Systems in Bone Trauma Procedures. Medicina 2023, 59, 562. [Google Scholar] [CrossRef] [PubMed]

- Phang, J.T.S.; Lim, K.H.; Chiong, R.C.W. A Review of Three Dimensional Reconstruction Techniques. Multimed. Tools Appl. 2021, 80, 17879–17891. [Google Scholar] [CrossRef]

- Tserovski, S.; Georgieva, S.; Simeonov, R.; Bigdeli, A.; Röttinger, H.; Kinov, P. Advantages and Disadvantages of 3D Printing for Pre-Operative Planning of Revision Hip Surgery. J. Surg. Case Rep. 2019, 2019, rjz214. [Google Scholar] [CrossRef]

- Xu, L.; Zhang, H.; Wang, J.; Li, A.; Song, S.; Ren, H.; Qi, L.; Gu, J.J.; Meng, M.Q.-H. Information Loss Challenges in Surgical Navigation Systems: From Information Fusion to AI-Based Approaches. Inf. Fusion. 2023, 92, 13–36. [Google Scholar] [CrossRef]

- Stoyanov, D.; Mylonas, G.P.; Lerotic, M.; Chung, A.J.; Yang, G.-Z. Intra-Operative Visualizations: Perceptual Fidelity and Human Factors. J. Disp. Technol. 2008, 4, 491–501. [Google Scholar] [CrossRef]

- Lavia, C.; Bonnin, S.; Maule, M.; Erginay, A.; Tadayoni, R.; Gaudric, A. Vessel density of superficial, intermediate, and deep capillary plexuses using optical coherence tomography angiography. Retina 2019, 39, 247–258. [Google Scholar] [CrossRef] [PubMed]

- Tan, B.; Wong, A.; Bizheva, K. Enhancement of Morphological and Vascular Features in OCT Images Using a Modified Bayesian Residual Transform. Biomed. Opt. Express 2018, 9, 2394. [Google Scholar] [CrossRef] [PubMed]

- Thomsen, A.S.S.; Smith, P.; Subhi, Y.; Cour, M.L.; Tang, L.; Saleh, G.M.; Konge, L. High Correlation between Performance on a Virtual-Reality Simulator and Real-Life Cataract Surgery. Acta Ophthalmol. 2017, 95, 307–311. [Google Scholar] [CrossRef] [PubMed]

- Thomsen, A.S.S.; Bach-Holm, D.; Kjærbo, H.; Højgaard-Olsen, K.; Subhi, Y.; Saleh, G.M.; Park, Y.S.; La Cour, M.; Konge, L. Operating Room Performance Improves after Proficiency-Based Virtual Reality Cataract Surgery Training. Ophthalmology 2017, 124, 524–531. [Google Scholar] [CrossRef] [PubMed]

- Popa, A. Performance Increase of Non-Experienced Trainees on a Virtual Reality Cataract Surgery Simulator. Available online: https://www2.surgsci.uu.se/Ophthalmology/Teaching/MedicalStudents/SjalvstandigtArbete/025R.pdf (accessed on 15 July 2023).

- Söderberg, P.; Erngrund, M.; Skarman, E.; Nordh, L.; Laurell, C.-G. VR-Simulation Cataract Surgery in Non-Experienced Trainees: Evolution of Surgical Skill; Manns, F., Söderberg, P.G., Ho, A., Eds.; SPIE: San Francisco, CA, USA, 2011; p. 78850L. [Google Scholar]

- Kozak, I.; Banerjee, P.; Luo, J.; Luciano, C. Virtual Reality Simulator for Vitreoretinal Surgery Using Integrated OCT Data. OPTH 2014, 8, 669. [Google Scholar] [CrossRef] [PubMed]

- Sikder, S.; Luo, J.; Banerjee, P.P.; Luciano, C.; Kania, P.; Song, J.; Saeed Kahtani, E.; Edward, D.; Al Towerki, A.-E. The Use of a Virtual Reality Surgical Simulator for Cataract Surgical Skill Assessment with 6 Months of Intervening Operating Room Experience. OPTH 2015, 9, 141. [Google Scholar] [CrossRef] [PubMed]

- Iskander, M.; Ogunsola, T.; Ramachandran, R.; McGowan, R.; Al-Aswad, L.A. Virtual Reality and Augmented Reality in Ophthalmology: A Contemporary Prospective. Asia-Pac. J. Ophthalmol. 2021, 10, 244–252. [Google Scholar] [CrossRef] [PubMed]

- Thomsen, A.S.S.; Subhi, Y.; Kiilgaard, J.F.; La Cour, M.; Konge, L. Update on Simulation-Based Surgical Training and Assessment in Ophthalmology. Ophthalmology 2015, 122, 1111–1130.e1. [Google Scholar] [CrossRef]

- Choi, M.Y.; Sutherland, G.R. Surgical Performance in a Virtual Environment. Horizon 2009, 17, 345–355. [Google Scholar] [CrossRef]

- Muñoz, E.G.; Fabregat, R.; Bacca-Acosta, J.; Duque-Méndez, N.; Avila-Garzon, C. Augmented Reality, Virtual Reality, and Game Technologies in Ophthalmology Training. Information 2022, 13, 222. [Google Scholar] [CrossRef]

- Seider, M.I.; Carrasco-Zevallos, O.M.; Gunther, R.; Viehland, C.; Keller, B.; Shen, L.; Hahn, P.; Mahmoud, T.H.; Dandridge, A.; Izatt, J.A.; et al. Real-Time Volumetric Imaging of Vitreoretinal Surgery with a Prototype Microscope-Integrated Swept-Source OCT Device. Ophthalmol. Retin. 2018, 2, 401–410. [Google Scholar] [CrossRef]

- Sommersperger, M.; Weiss, J.; Ali Nasseri, M.; Gehlbach, P.; Iordachita, I.; Navab, N. Real-Time Tool to Layer Distance Estimation for Robotic Subretinal Injection Using Intraoperative 4D OCT. Biomed. Opt. Express 2021, 12, 1085. [Google Scholar] [CrossRef]

- Pan, J.; Liu, W.; Ge, P.; Li, F.; Shi, W.; Jia, L.; Qin, H. Real-Time Segmentation and Tracking of Excised Corneal Contour by Deep Neural Networks for DALK Surgical Navigation. Comput. Methods Programs Biomed. 2020, 197, 105679. [Google Scholar] [CrossRef]

- Draelos, M.; Keller, B.; Viehland, C.; Carrasco-Zevallos, O.M.; Kuo, A.; Izatt, J. Real-Time Visualization and Interaction with Static and Live Optical Coherence Tomography Volumes in Immersive Virtual Reality. Biomed. Opt. Express 2018, 9, 2825. [Google Scholar] [CrossRef] [PubMed]

- Draelos, M. Robotics and Virtual Reality for Optical Coherence Tomography-Guided Ophthalmic Surgery and Diagnostics. Ph.D. Thesis, Duke University, Durham, NC, USA, 2019. [Google Scholar]

- Tang, N.; Fan, J.; Wang, P.; Shi, G. Microscope Integrated Optical Coherence Tomography System Combined with Augmented Reality. Opt. Express 2021, 29, 9407. [Google Scholar] [CrossRef]

- Sun, M.; Chai, Y.; Chai, G.; Zheng, X. Fully Automatic Robot-Assisted Surgery for Mandibular Angle Split Osteotomy. J. Craniofacial Surg. 2020, 31, 336–339. [Google Scholar] [CrossRef]
