Segment-Based Unsupervised Learning Method in Sensor-Based Human Activity Recognition

Abstract

1. Introduction

- We propose a new unsupervised learning method, SimCLR (seg) + SDFD, for sensor-based human activity recognition (HAR) in settings where the training dataset consists of segmented data. The proposed method uses the segmented data only during training; they are not used during evaluation.

- The ablation study shows that SimCLR (seg) and SDFD improve the F1-score over their counterparts that do not exploit the data structure (SimCLR and IDFD, respectively). This indicates that exploiting the structure of the training dataset is effective.

- Our experiments demonstrate that the proposed method acquires generalized feature representations for sensor-based HAR.

2. Related Works

3. Proposed Method

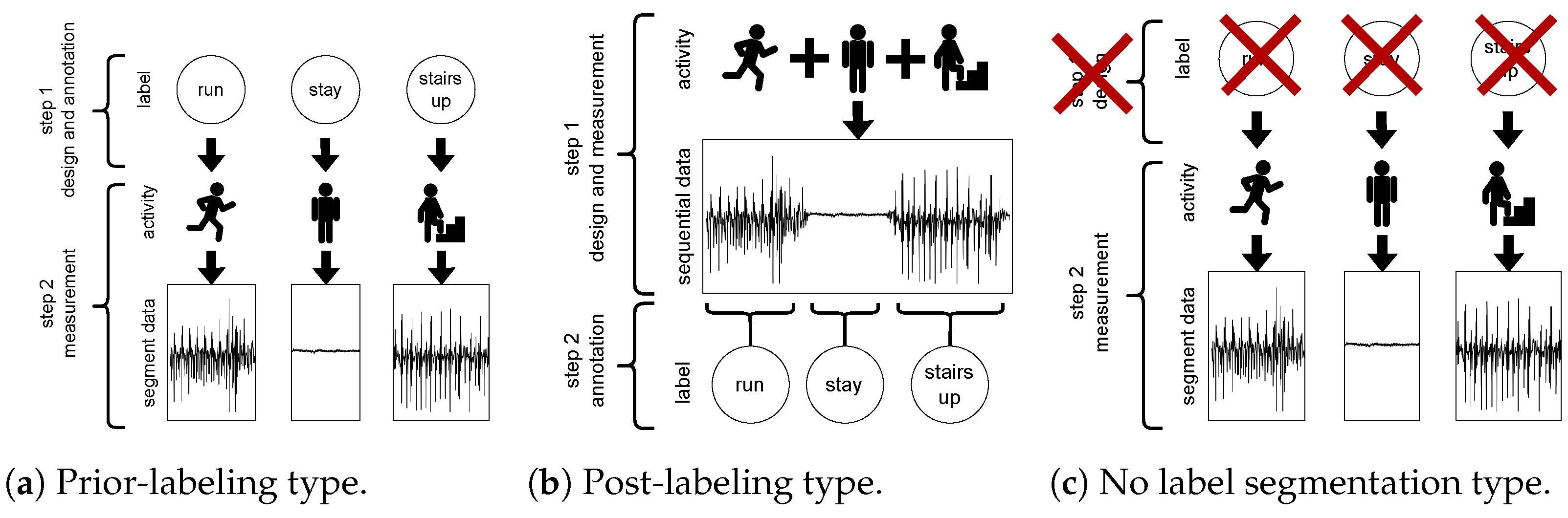

3.1. Data-Measurement Method

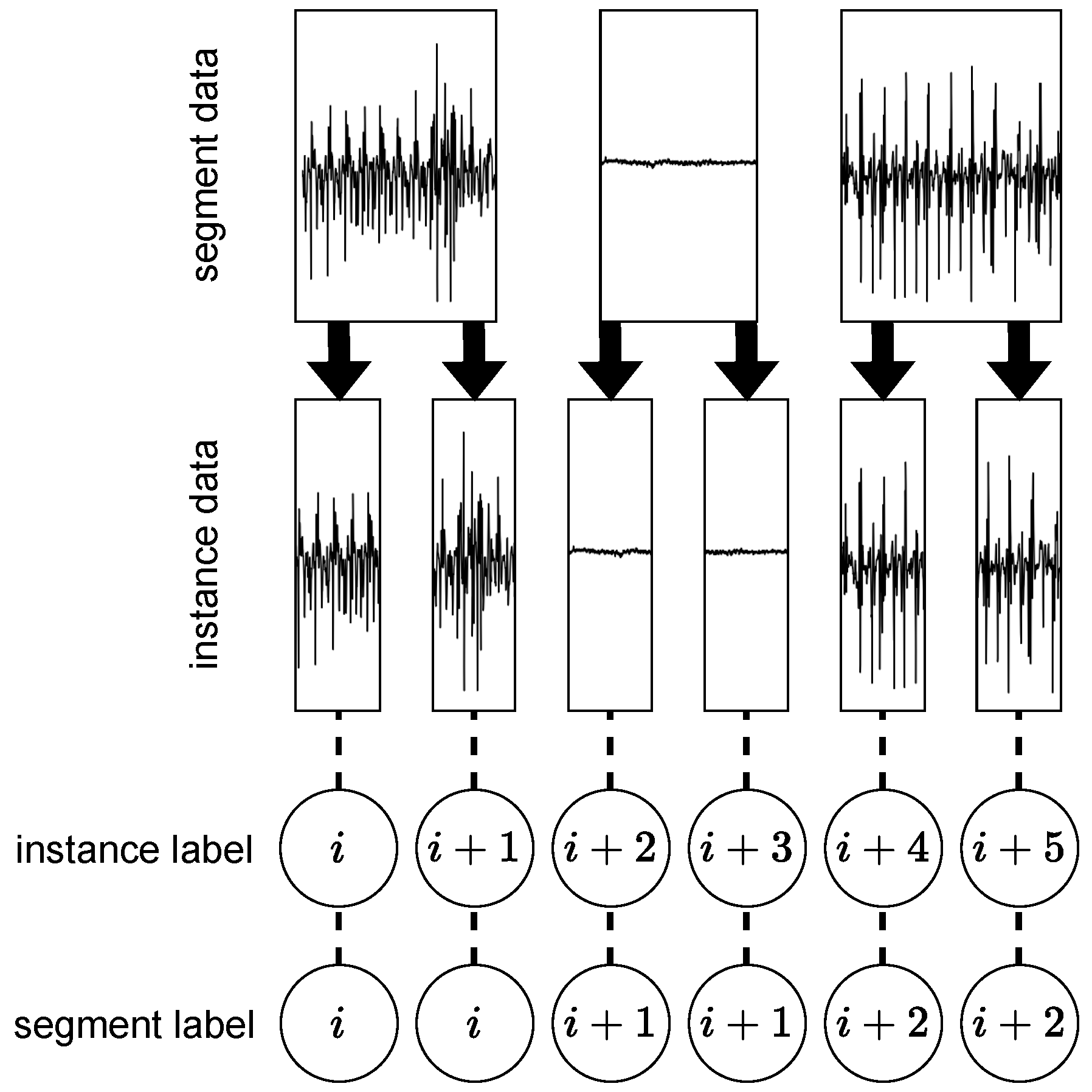

3.2. Preprocessing

3.3. Model Training Method

3.3.1. Segment Discrimination

3.3.2. SD and Feature Decorrelation

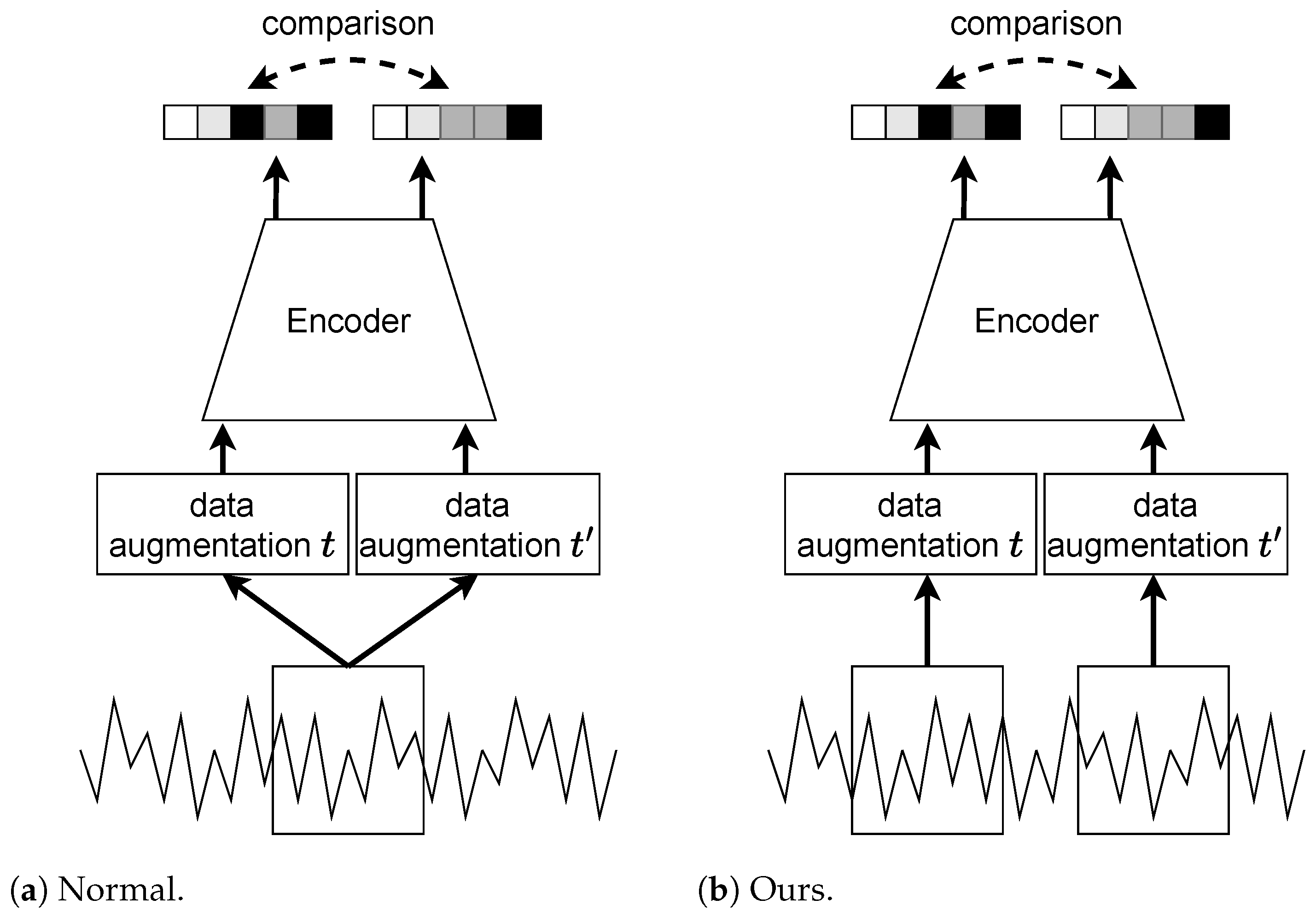
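The SD + feature-decorrelation objective can be sketched by analogy with IDFD [32], replacing instances with segments. This is a minimal sketch under stated assumptions, not necessarily the authors' formulation: it assumes that segment discrimination classifies each window embedding into its own segment with a temperature-scaled softmax over one prototype vector per segment (for example, a running mean of that segment's embeddings), and that the decorrelation term follows IDFD's idea of treating each feature dimension across the batch as a vector that should be dissimilar to the other dimensions. The function names, `prototypes`, `tau`, `tau2` and `alpha` are hypothetical, and their default values are placeholders.

```python
import math
from typing import List, Sequence

Vector = List[float]


def l2_normalize(v: Sequence[float]) -> Vector:
    """Scale v to unit Euclidean length (a zero vector is returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def log_softmax_at(logits: Sequence[float], index: int) -> float:
    """Numerically stable log(softmax(logits)[index])."""
    m = max(logits)
    return logits[index] - (m + math.log(sum(math.exp(x - m) for x in logits)))


def segment_discrimination_loss(embeddings: Sequence[Sequence[float]],
                                segment_ids: Sequence[int],
                                prototypes: Sequence[Sequence[float]],
                                tau: float = 0.1) -> float:
    """Hypothetical SD term: softmax-classify each window embedding into its own
    segment by scoring it against one (assumed) prototype vector per segment."""
    protos = [l2_normalize(p) for p in prototypes]
    total = 0.0
    for z, seg in zip(embeddings, segment_ids):
        z = l2_normalize(z)
        logits = [dot(p, z) / tau for p in protos]
        total -= log_softmax_at(logits, seg)
    return total / len(embeddings)


def feature_decorrelation_loss(embeddings: Sequence[Sequence[float]],
                               tau2: float = 2.0) -> float:
    """FD term in the style of IDFD: each feature dimension (a column of the batch
    matrix) is a unit vector that should score highest against itself and low
    against every other dimension."""
    dim = len(embeddings[0])
    columns = [l2_normalize([row[j] for row in embeddings]) for j in range(dim)]
    total = 0.0
    for j, f_j in enumerate(columns):
        logits = [dot(f_m, f_j) / tau2 for f_m in columns]
        total -= log_softmax_at(logits, j)
    return total


def sdfd_loss(embeddings, segment_ids, prototypes, tau=0.1, tau2=2.0, alpha=1.0):
    """Assumed combination L = L_SD + alpha * L_FD (the weighting IDFD uses)."""
    return (segment_discrimination_loss(embeddings, segment_ids, prototypes, tau)
            + alpha * feature_decorrelation_loss(embeddings, tau2))


if __name__ == "__main__":
    # Two segments with one prototype each; two window embeddings per segment.
    protos = [[1.0, 0.0], [0.0, 1.0]]
    batch = [[0.9, 0.1], [0.8, 0.3], [0.1, 0.9], [0.2, 0.7]]
    print(sdfd_loss(batch, [0, 0, 1, 1], protos))
```

The SD term is lower when windows sit near their own segment's prototype, and the FD term is lower when feature dimensions are close to orthogonal across the batch, which is the property IDFD [32] uses to obtain clustering-friendly features.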

3.3.3. Segment-Based SimCLR
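One plausible reading of a segment-based SimCLR is standard SimCLR [18] with a different source of positive pairs. This is a minimal sketch resting on that assumption: it treats a segment as one continuous recording of a single activity, and it forms a positive pair from two windows cropped at independent random offsets of the same segment instead of two augmentations of one sample. The loss is SimCLR's NT-Xent. The helper names `sample_segment_pair`, `nt_xent`, `window` and `tau` are hypothetical, and raw windows stand in for encoder outputs in the usage lines.

```python
import math
import random
from typing import List, Sequence, Tuple


def sample_segment_pair(segment: Sequence[float], window: int,
                        rng: random.Random) -> Tuple[List[float], List[float]]:
    """Hypothetical positive-pair sampler: crop two windows at independent random
    offsets from the same segment."""
    if len(segment) < window:
        raise ValueError("segment is shorter than the window")
    a, b = (rng.randint(0, len(segment) - window) for _ in range(2))
    return list(segment[a:a + window]), list(segment[b:b + window])


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(u, v))
    return dot / (math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v)))


def nt_xent(view_a: Sequence[Sequence[float]], view_b: Sequence[Sequence[float]],
            tau: float = 0.5) -> float:
    """NT-Xent: view_a[i] and view_b[i] form the positive pair; every other
    embedding in the 2N-sized batch acts as a negative."""
    z = list(view_a) + list(view_b)
    n = len(view_a)
    total = 0.0
    for i in range(2 * n):
        pos = (i + n) % (2 * n)  # partner of anchor i in the other view
        others = [k for k in range(2 * n) if k != i]
        logits = [cosine(z[i], z[k]) / tau for k in others]
        m = max(logits)
        log_z = m + math.log(sum(math.exp(x - m) for x in logits))
        total -= logits[others.index(pos)] - log_z
    return total / (2 * n)


if __name__ == "__main__":
    rng = random.Random(0)
    # Two toy single-channel segments; a real model would encode each window.
    segments = [[math.sin(0.3 * t) for t in range(50)],
                [math.cos(1.1 * t) for t in range(50)]]
    pairs = [sample_segment_pair(s, 16, rng) for s in segments]
    print(nt_xent([p[0] for p in pairs], [p[1] for p in pairs]))
```

Compared with instance-level SimCLR, the only assumed change is where positives come from: two windows of one segment are pulled together, while windows from other segments in the batch are pushed apart.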

3.3.4. Segment-Based SimCLR with SDFD

4. Experiments

4.1. Experimental Setup

4.2. Ten-Shot Transfer Learning Verification

4.3. Confusion Matrix

4.4. Instance Effectiveness for Few-Shot TL

4.5. Effectiveness for Different Domains

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Thiel, D.V.; Sarkar, A.K. Swing Profiles in Sport: An Accelerometer Analysis. Procedia Eng. 2014, 72, 624–629.

2. Klein, M.C.; Manzoor, A.; Mollee, J.S. Active2Gether: A Personalized m-Health Intervention to Encourage Physical Activity. Sensors 2017, 17, 1436.

3. Plangger, K.; Campbell, C.; Robson, K.; Montecchi, M. Little rewards, big changes: Using exercise analytics to motivate sustainable changes in physical activity. Inf. Manag. 2022, 59, 103216.

4. Thomas, B.; Lu, M.L.; Jha, R.; Bertrand, J. Machine Learning for Detection and Risk Assessment of Lifting Action. IEEE Trans. Hum.-Mach. Syst. 2022, 52, 1196–1204.

5. Zeng, M.; Nguyen, L.T.; Yu, B.; Mengshoel, O.J.; Zhu, J.; Wu, P.; Zhang, J. Convolutional Neural Networks for human activity recognition using mobile sensors. In Proceedings of the 6th International Conference on Mobile Computing, Applications and Services, Austin, TX, USA, 6–7 November 2014; pp. 197–205.

6. Ordóñez, F.J.; Roggen, D. Deep Convolutional and LSTM Recurrent Neural Networks for Multimodal Wearable Activity Recognition. Sensors 2016, 16, 115.

7. Murad, A.; Pyun, J.Y. Deep Recurrent Neural Networks for Human Activity Recognition. Sensors 2017, 17, 2556.

8. Guinea, A.S.; Sarabchian, M.; Mühlhäuser, M. Improving Wearable-Based Activity Recognition Using Image Representations. Sensors 2022, 22, 1840.

9. Jalal, A.; Quaid, M.A.K.; Hasan, A.S. Wearable Sensor-Based Human Behavior Understanding and Recognition in Daily Life for Smart Environments. In Proceedings of the 2018 International Conference on Frontiers of Information Technology (FIT), Islamabad, Pakistan, 17–19 December 2018; pp. 105–110.

10. Liu, H.; Schultz, T. A Wearable Real-time Human Activity Recognition System using Biosensors Integrated into a Knee Bandage. In Proceedings of the 12th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2019)-BIODEVICES, Prague, Czech Republic, 22–24 February 2019; INSTICC; SciTePress: Setúbal, Portugal, 2019; pp. 47–55.

11. Liu, H.; Gamboa, H.; Schultz, T. Sensor-Based Human Activity and Behavior Research: Where Advanced Sensing and Recognition Technologies Meet. Sensors 2023, 23, 125.

12. Haresamudram, H.; Essa, I.; Plötz, T. Contrastive Predictive Coding for Human Activity Recognition. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2021, 5, 1–26.

13. Tonekaboni, S.; Eytan, D.; Goldenberg, A. Unsupervised Representation Learning for Time Series with Temporal Neighborhood Coding. In Proceedings of the Ninth International Conference on Learning Representations, Virtual, 3–7 May 2021.

14. Zerveas, G.; Jayaraman, S.; Patel, D.; Bhamidipaty, A.; Eickhoff, C. A Transformer-Based Framework for Multivariate Time Series Representation Learning. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, New York, NY, USA, 14–18 August 2021; pp. 2114–2124.

15. Xiao, Z.; Xing, H.; Zhao, B.; Qu, R.; Luo, S.; Dai, P.; Li, K.; Zhu, Z. Deep Contrastive Representation Learning with Self-Distillation. IEEE Trans. Emerg. Top. Comput. Intell. 2023, 1–13.

16. Franceschi, J.Y.; Dieuleveut, A.; Jaggi, M. Unsupervised scalable representation learning for multivariate time series. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, Canada, 8–14 December 2019; Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2019; Volume 32.

17. Sohn, K. Improved Deep Metric Learning with Multi-class N-pair Loss Objective. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; Lee, D., Sugiyama, M., Luxburg, U., Guyon, I., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2016; Volume 29.

18. Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A Simple Framework for Contrastive Learning of Visual Representations. In Proceedings of the 37th International Conference on Machine Learning, Virtual, 12–18 July 2020.

19. Takenaka, K.; Hasegawa, T. Unsupervised Representation Learning Method In Sensor Based Human Activity Recognition. In Proceedings of the International Conference on Machine Learning and Cybernetics, Toyama, Japan, 9–11 September 2022; pp. 25–30.

20. Hartmann, Y.; Liu, H.; Schultz, T. Feature Space Reduction for Multimodal Human Activity Recognition. In Proceedings of the 13th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2020)-BIOSIGNALS, Valletta, Malta, 24–26 February 2020; INSTICC; SciTePress: Setúbal, Portugal, 2020; pp. 135–140.

21. Liu, H.; Xue, T.; Schultz, T. On a Real Real-Time Wearable Human Activity Recognition System. In Proceedings of the 16th International Joint Conference on Biomedical Engineering Systems and Technologies-WHC, Lisbon, Portugal, 16–18 February 2023; INSTICC; SciTePress: Setúbal, Portugal, 2023; pp. 711–720.

22. Bento, N.; Rebelo, J.; Barandas, M.; Carreiro, A.V.; Campagner, A.; Cabitza, F.; Gamboa, H. Comparing Handcrafted Features and Deep Neural Representations for Domain Generalization in Human Activity Recognition. Sensors 2022, 22, 7324.

23. Dirgová Luptáková, I.; Kubovčík, M.; Pospíchal, J. Wearable Sensor-Based Human Activity Recognition with Transformer Model. Sensors 2022, 22, 1911.

24. Mahmud, T.; Sazzad Sayyed, A.Q.M.; Fattah, S.A.; Kung, S.Y. A Novel Multi-Stage Training Approach for Human Activity Recognition From Multimodal Wearable Sensor Data Using Deep Neural Network. IEEE Sens. J. 2021, 21, 1715–1726.

25. Qian, H.; Pan, S.J.; Da, B.; Miao, C. A Novel Distribution-Embedded Neural Network for Sensor-Based Activity Recognition. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence, IJCAI-19, Macao SAR, China, 10–16 August 2019; pp. 5614–5620.

26. Gao, W.; Zhang, L.; Teng, Q.; He, J.; Wu, H. DanHAR: Dual Attention Network for multimodal human activity recognition using wearable sensors. Appl. Soft Comput. 2021, 111, 107728.

27. Sozinov, K.; Vlassov, V.; Girdzijauskas, S. Human Activity Recognition Using Federated Learning. In Proceedings of the IEEE Intl Conf on Parallel & Distributed Processing with Applications, Ubiquitous Computing & Communications, Big Data & Cloud Computing, Social Computing & Networking, Sustainable Computing & Communications, Melbourne, VIC, Australia, 11–13 December 2018; pp. 1103–1111.

28. Li, C.; Niu, D.; Jiang, B.; Zuo, X.; Yang, J. Meta-HAR: Federated Representation Learning for Human Activity Recognition. In Proceedings of the Web Conference 2021, New York, NY, USA, 19–23 April 2021; pp. 912–922.

29. Ma, H.; Zhang, Z.; Li, W.; Lu, S. Unsupervised Human Activity Representation Learning with Multi-Task Deep Clustering. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2021, 5, 1–25.

30. He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R. Momentum Contrast for Unsupervised Visual Representation Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9726–9735.

31. Chen, X.; He, K. Exploring simple siamese representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 15750–15758.

32. Tao, Y.; Takagi, K.; Nakata, K. Clustering-friendly Representation Learning via Instance Discrimination and Feature Decorrelation. In Proceedings of the International Conference on Learning Representations, Virtual, 3–7 May 2021.

33. Wu, Z.; Xiong, Y.; Yu, S.X.; Lin, D. Unsupervised Feature Learning via Non-Parametric Instance Discrimination. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3733–3742.

34. Grill, J.B.; Strub, F.; Altché, F.; Tallec, C.; Richemond, P.H.; Buchatskaya, E.; Doersch, C.; Pires, B.A.; Guo, Z.D.; Azar, M.G.; et al. Bootstrap Your Own Latent: A New Approach to Self-Supervised Learning. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–12 December 2020; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M.F., Lin, H., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2020; Volume 33.

35. Rodrigues, J.; Liu, H.; Folgado, D.; Belo, D.; Schultz, T.; Gamboa, H. Feature-Based Information Retrieval of Multimodal Biosignals with a Self-Similarity Matrix: Focus on Automatic Segmentation. Biosensors 2022, 12, 1182.

36. Folgado, D.; Barandas, M.; Antunes, M.; Nunes, M.L.; Liu, H.; Hartmann, Y.; Schultz, T.; Gamboa, H. TSSEARCH: Time Series Subsequence Search Library. SoftwareX 2022, 18, 101049.

37. Oord, A.v.d.; Li, Y.; Vinyals, O. Representation learning with contrastive predictive coding. arXiv 2018, arXiv:1807.03748.

38. Kwapisz, J.R.; Weiss, G.M.; Moore, S.A. Activity Recognition Using Cell Phone Accelerometers. ACM SIGKDD Explor. Newsl. 2011, 12, 74–82.

39. Zhang, M.; Sawchuk, A.A. USC-HAD: A Daily Activity Dataset for Ubiquitous Activity Recognition Using Wearable Sensors. In Proceedings of the 2012 ACM Conference on Ubiquitous Computing, New York, NY, USA, 5–8 September 2012; pp. 1036–1043.

40. Ichino, H.; Kaji, K.; Sakurada, K.; Hiroi, K.; Kawaguchi, N. HASC-PAC2016: Large Scale Human Pedestrian Activity Corpus and Its Baseline Recognition. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct, New York, NY, USA, 12–16 September 2016; pp. 705–714.

41. Kawaguchi, N.; Yang, Y.; Yang, T.; Ogawa, N.; Iwasaki, Y.; Kaji, K.; Terada, T.; Murao, K.; Inoue, S.; Kawahara, Y.; et al. HASC2011corpus: Towards the Common Ground of Human Activity Recognition. In Proceedings of the 13th International Conference on Ubiquitous Computing, New York, NY, USA, 17–21 September 2011; pp. 571–572.

42. Chavarriaga, R.; Sagha, H.; Calatroni, A.; Digumarti, S.T.; Tröster, G.; del R. Millán, J.; Roggen, D. The Opportunity challenge: A benchmark database for on-body sensor-based activity recognition. Pattern Recognit. Lett. 2013, 34, 2033–2042.

43. Liu, H.; Schultz, T. How Long Are Various Types of Daily Activities? Statistical Analysis of a Multimodal Wearable Sensor-Based Human Activity Dataset. In Proceedings of the 15th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2022)-Volume 5: HEALTHINF, Online Streaming, 9–11 February 2022; pp. 680–688.

44. Liu, H.; Schultz, T. ASK: A Framework for Data Acquisition and Activity Recognition. In Proceedings of the International Conference on Bio-inspired Systems and Signal Processing, Funchal, Madeira, Portugal, 19–21 January 2018.

45. Liu, H.; Hartmann, Y.; Schultz, T. CSL-SHARE: A Multimodal Wearable Sensor-Based Human Activity Dataset. Front. Comput. Sci. 2021, 3, 759136.

46. Castro, R.L.; Andrade, D.; Fraguela, B. Reusing Trained Layers of Convolutional Neural Networks to Shorten Hyperparameters Tuning Time. arXiv 2020, arXiv:2006.09083.

47. Oyelade, O.N.; Ezugwu, A.E. A comparative performance study of random-grid model for hyperparameters selection in detection of abnormalities in digital breast images. Concurr. Comput. Pract. Exp. 2022, 34, e6914.

48. Anguita, D.; Ghio, A.; Oneto, L.; Parra, X.; Reyes-Ortiz, J.L. A Public Domain Dataset for Human Activity Recognition Using Smartphones. In Proceedings of the European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning, Bruges, Belgium, 24–26 April 2013; pp. 437–442.

49. Um, T.T.; Pfister, F.M.J.; Pichler, D.; Endo, S.; Lang, M.; Hirche, S.; Fietzek, U.; Kulić, D. Data Augmentation of Wearable Sensor Data for Parkinson’s Disease Monitoring Using Convolutional Neural Networks. In Proceedings of the 19th ACM International Conference on Multimodal Interaction, New York, NY, USA, 13–17 November 2017; pp. 216–220.

| Dataset | Training (subjects) | Validation (subjects) | Testing (subjects) |
|---|---|---|---|
| HASC [40] | 80 | 20 | 30 |
| Wisdm [38] | 21 | 6 | 9 |
| Opportunity [42] | 2 | 1 | 1 |
| UCI-HAR [48] | 19 | 5 | 6 |
| USC-HAD [39] | 8 | 2 | 4 |

| Method | HASC | Wisdm | UCI-HAR |
|---|---|---|---|
| Non-pre | 0.3714 | 0.7286 | 0.9397 |
| BYOL [34] | 0.6072 | 0.5676 | 0.7198 |
| SimCLR [18] | 0.6287 | 0.6717 | 0.8492 |
| ID [33] | 0.2354 | 0.6661 | 0.8926 |
| IDFD [32] | 0.6195 | 0.6962 | 0.8986 |
| SimCLR (seg) + SDFD (ours) | 0.7012 | 0.7636 | 0.9108 |
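To make the comparison in the table above explicit, the short script below finds the top-scoring method per dataset and its lead over the runner-up. It only re-reads the transcribed scores; the names `RESULTS`, `DATASETS` and `best_with_margin` are illustrative. It shows that SimCLR (seg) + SDFD ranks first on HASC and Wisdm, while the Non-pre row scores highest on UCI-HAR, with SimCLR (seg) + SDFD second.

```python
# Scores transcribed from the table above (columns: HASC, Wisdm, UCI-HAR).
RESULTS = {
    "Non-pre": (0.3714, 0.7286, 0.9397),
    "BYOL": (0.6072, 0.5676, 0.7198),
    "SimCLR": (0.6287, 0.6717, 0.8492),
    "ID": (0.2354, 0.6661, 0.8926),
    "IDFD": (0.6195, 0.6962, 0.8986),
    "SimCLR (seg) + SDFD": (0.7012, 0.7636, 0.9108),
}
DATASETS = ("HASC", "Wisdm", "UCI-HAR")


def best_with_margin(table, datasets):
    """Return {dataset: (best method, lead over the runner-up, 4 decimals)}."""
    summary = {}
    for j, name in enumerate(datasets):
        ranked = sorted(table, key=lambda method: table[method][j], reverse=True)
        best, runner_up = ranked[0], ranked[1]
        summary[name] = (best, round(table[best][j] - table[runner_up][j], 4))
    return summary


if __name__ == "__main__":
    for name, (method, margin) in best_with_margin(RESULTS, DATASETS).items():
        print(f"{name}: {method} leads by {margin:.4f}")
```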

| Method | HASC | Wisdm | UCI-HAR |
|---|---|---|---|
| IDFD [32] | 0.6195 | 0.6962 | 0.8986 |
| SDFD | 0.6875 | 0.7980 | 0.9047 |
| SimCLR [18] | 0.6287 | 0.6717 | 0.8492 |
| SimCLR (seg) | 0.6948 | 0.7679 | 0.8986 |
| SimCLR (seg) + SDFD | 0.7012 | 0.7636 | 0.9108 |
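The ablation claim can be checked directly against the table above by pairing each segment-based variant with the method it modifies (IDFD with SDFD, SimCLR with SimCLR (seg)) and computing the per-dataset difference. The pairing is read off the method names, and `ABLATION`, `PAIRS` and `gains` are illustrative names.

```python
# Scores transcribed from the ablation table above (columns: HASC, Wisdm, UCI-HAR).
ABLATION = {
    "IDFD": (0.6195, 0.6962, 0.8986),
    "SDFD": (0.6875, 0.7980, 0.9047),
    "SimCLR": (0.6287, 0.6717, 0.8492),
    "SimCLR (seg)": (0.6948, 0.7679, 0.8986),
    "SimCLR (seg) + SDFD": (0.7012, 0.7636, 0.9108),
}
DATASETS = ("HASC", "Wisdm", "UCI-HAR")
# Each segment-based variant paired with the method it modifies.
PAIRS = (("IDFD", "SDFD"), ("SimCLR", "SimCLR (seg)"))


def gains(table, baseline, variant):
    """Per-dataset score difference (variant - baseline), rounded to 4 decimals."""
    return tuple(round(v - b, 4) for b, v in zip(table[baseline], table[variant]))


if __name__ == "__main__":
    for baseline, variant in PAIRS:
        diffs = gains(ABLATION, baseline, variant)
        print(f"{variant} vs {baseline}: " + ", ".join(
            f"{d} {g:+.4f}" for d, g in zip(DATASETS, diffs)))
```

Both segment-based variants improve on their counterparts on all three datasets, with the largest gains on Wisdm. The table also shows that the combined SimCLR (seg) + SDFD scores slightly below SDFD alone on Wisdm (0.7636 vs. 0.7980).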

| Method | Stay | Walk | Jog | Skip | stUp | stDown |
|---|---|---|---|---|---|---|
| IDFD [32] | 0.8970 | 0.3520 | 0.7752 | 0.6862 | 0.5017 | 0.5048 |
| SimCLR [18] | 0.8992 | 0.3916 | 0.7682 | 0.7092 | 0.4895 | 0.5147 |
| SDFD | 0.8977 | 0.4470 | 0.8548 | 0.8183 | 0.5170 | 0.5905 |
| SimCLR (seg) | 0.8756 | 0.4942 | 0.8525 | 0.8213 | 0.5432 | 0.5819 |
| SimCLR (seg) + SDFD | 0.8857 | 0.5143 | 0.8738 | 0.8268 | 0.4827 | 0.6241 |
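A per-class reading of the table above can be automated by picking the highest-scoring method for each activity column. The names `CLASSES`, `PER_CLASS` and `best_per_class` are illustrative, and the values are copied from the table. SimCLR (seg) + SDFD comes first on four of the six classes, SimCLR on Stay, and SimCLR (seg) on stUp.

```python
# Per-class scores transcribed from the table above.
CLASSES = ("Stay", "Walk", "Jog", "Skip", "stUp", "stDown")
PER_CLASS = {
    "IDFD": (0.8970, 0.3520, 0.7752, 0.6862, 0.5017, 0.5048),
    "SimCLR": (0.8992, 0.3916, 0.7682, 0.7092, 0.4895, 0.5147),
    "SDFD": (0.8977, 0.4470, 0.8548, 0.8183, 0.5170, 0.5905),
    "SimCLR (seg)": (0.8756, 0.4942, 0.8525, 0.8213, 0.5432, 0.5819),
    "SimCLR (seg) + SDFD": (0.8857, 0.5143, 0.8738, 0.8268, 0.4827, 0.6241),
}


def best_per_class(table, classes):
    """Map each class to the method with the highest score in that column."""
    return {c: max(table, key=lambda m: table[m][j]) for j, c in enumerate(classes)}


if __name__ == "__main__":
    for cls, method in best_per_class(PER_CLASS, CLASSES).items():
        print(f"{cls}: {method}")
```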

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Takenaka, K.; Kondo, K.; Hasegawa, T. Segment-Based Unsupervised Learning Method in Sensor-Based Human Activity Recognition. Sensors 2023, 23, 8449. https://doi.org/10.3390/s23208449


Chicago/Turabian style: Takenaka, Koki, Kei Kondo, and Tatsuhito Hasegawa. 2023. "Segment-Based Unsupervised Learning Method in Sensor-Based Human Activity Recognition." Sensors 23, no. 20: 8449. https://doi.org/10.3390/s23208449
