Abstract

Dragon fruit (Hylocereus undatus) is a tropical and subtropical fruit that undergoes multiple ripening cycles throughout the year. Accurate monitoring of the flower and fruit quantities at various stages is crucial for growers to estimate yields, plan orders, and implement effective management strategies. However, traditional manual counting methods are labor-intensive and inefficient. Deep learning techniques have proven effective for object recognition tasks, but limited research has been conducted on dragon fruit due to its unique stem morphology and the coexistence of flowers and fruits. An additional challenge lies in developing a lightweight recognition and tracking model that can be seamlessly integrated into mobile platforms, enabling on-site quantity counting. In this study, a video stream inspection method is proposed to classify and count dragon fruit flowers, immature fruits (green fruits), and mature fruits (red fruits) in a dragon fruit plantation. The approach involves three key steps: (1) utilizing the YOLOv5 network for the identification of different dragon fruit categories, (2) employing the improved ByteTrack object tracking algorithm to assign unique IDs to each target and track their movement, and (3) defining a region of interest area for precise classification and counting of dragon fruit across categories. Experimental results demonstrate recognition accuracies of 94.1%, 94.8%, and 96.1% for dragon fruit flowers, green fruits, and red fruits, respectively, with an overall average recognition accuracy of 95.0%. Furthermore, the counting accuracy for each category is measured at 97.68%, 93.97%, and 91.89%, respectively. The proposed method achieves a counting speed of 56 frames per second on a 1080 Ti GPU. The findings establish the efficacy and practicality of this method for accurate counting of dragon fruit and suggest that it could be extended to other fruit varieties.

1. Introduction

Dragon fruit (Hylocereus undatus), also known as pitahaya or pitaya, is a tropical and subtropical fruit that has gained popularity among consumers due to its nutritional value and pleasant taste [1,2]. China stands as the leading global producer, with an annual output of 1.297 million tons in 2019. Accurate monitoring of flower and fruit quantities during various stages is vital for growers to forecast yield and optimize order planning. Nevertheless, the prevalent reliance on labor-intensive, time-consuming, and inefficient manual counting methods persists among dragon fruit growers in different regions [3,4].

With the advancement of information technology, machine vision technology has emerged as a significant tool for plant monitoring and yield estimation. Its benefits, including cost-effectiveness, ease of acquisition, and high accuracy, have propelled its adoption. Orchards typically feature wide row spacing, enabling the collection of video data using ground moving platforms, facilitating large-scale detection. Video-based target statistics involve two essential steps: target recognition and target tracking. Traditional target recognition algorithms such as the Deformable Part Model (DPM) [5] and Support Vector Machine (SVM) have been gradually replaced by deep learning algorithms due to their limitations in detection accuracy, robustness, and speed.

Currently, deep learning-based object detection algorithms can be broadly categorized into two-stage detection algorithms based on candidate regions and one-stage detection algorithms based on regression principles. Two-stage detection algorithms, such as Faster R-CNN [6], have found extensive application in fruit detection (prickly pear fruit [7], apples [8], passion fruit [9], etc.), crop detection (sugarcane seedlings [10], weeds [11]), and disease detection (sweet pepper disease [12], soybean leaf disease [13]), achieving accuracy levels ranging from 55.5% to 93.67%, depending on the complexity and difficulty of the task. However, two-stage algorithms have the obvious drawbacks of large model size and slow speed, which make them challenging to integrate into mobile devices.

One-stage detection algorithms, prominently represented by the YOLO [14,15,16] series, are preferred where real-time performance is required, owing to their compact size, rapid speed, and high accuracy. YOLO series models have been applied to a variety of objects in agriculture, such as weeds [17] or diseases [18] with a similar appearance or features. YOLO models have also shown balanced performance in fruit detection, although similar colors and occlusion remain the biggest challenges in some conditions. Wang [19] employed YOLOv4 [20] for apple detection, achieving an average detection accuracy of 92.23%, surpassing Faster R-CNN by 2.02% under comparable conditions. Yao [21] utilized YOLOv5 to identify defects in kiwi fruits, accomplishing a detection accuracy of 94.7% with a mere 0.1 s processing time per image. Yan [22] deployed YOLOv5 to automatically distinguish graspable and ungraspable apples in apple tree images for a picking robot. The method achieved an average recognition time of 0.015 s per image. YOLO models of different versions also differ in performance. Comparative analysis revealed that YOLOv5 enhanced the model’s mean Average Precision (mAP) by 14.95% and 4.74% compared to YOLOv3 and YOLOv4, respectively, while significantly compressing the model size by 94.6% and 94.8%. Moreover, YOLOv5 demonstrated average recognition speeds 1.13 and 3.53 times faster than YOLOv4 and YOLOv3 models, respectively. Thus, the YOLOv5 algorithm effectively balances speed and accuracy among one-stage detection algorithms.

Conventional target tracking methods, including optical flow [23] and frame difference [24], exhibit limited real-time performance, high complexity, and susceptibility to environmental factors. Conversely, tracking algorithms based on Kalman filtering [25] and Hungarian matching [26], such as SORT [27], DeepSORT [28], and ByteTrack [29], excel in rapid tracking speed and high accuracy, meeting the demands of video detection. SORT optimizes tracking efficiency by correlating frames before and after an image, yet struggles to address occluded target tracking challenges. DeepSORT enhances occluded target tracking by leveraging deep appearance features extracted through a convolutional neural network (CNN), augmenting the deep feature matching capability, albeit at the expense of detection speed. Similar to SORT, ByteTrack does not employ deep appearance features but solely relies on the inter-frame information correlation. However, ByteTrack effectively addresses occluded target recognition challenges by emphasizing low-confidence detection targets, thereby achieving a good balance between tracking accuracy and speed. In summary, ByteTrack surpasses other tracking algorithms in performance, yet it is restricted to single-class target tracking and does not provide direct support for multi-class target classification tracking.

To address the limitations of current tracking algorithms and meet practical demands, this study introduces an enhanced ByteTrack tracking algorithm for real-time recognition, tracking, and counting of dragon fruit at various growth stages in inspection videos. The proposed method comprises three core modules: (1) a dragon fruit multi-classification recognition model based on YOLOv5; (2) an improved ByteTrack tracker that takes the multi-class detection outcomes as inputs, enabling end-to-end tracking of dragon fruit across different growth stages; (3) regions of interest (ROI) defined to accomplish classification statistics for dragon fruit at distinct growth stages. This method can be further integrated into mobile devices, facilitating automated inspection of dragon fruit orchards and offering a viable approach for timely prediction of dragon fruit yield and maturity.

The organization of the rest of this paper is as follows. In Section 2, we present the proposed method and performance metrics in detail. Section 3 is dedicated to the discussion of our results. Following that, in Section 4, we delve into the discussion of future work. Finally, in Section 5, we draw our conclusions.

Our contributions are as follows: (1) an enhanced ByteTrack tracking algorithm is proposed to simultaneously recognize, track, and count dragon fruit of different ripeness on both sides of the inspection path; (2) the YOLOv5 object detector is employed as the detection component of the ByteTrack tracker, and multi-class tags are introduced into ByteTrack, achieving efficient and rapid tracking of multiple classes.

2. Materials and Methods

2.1. Image Acquisition for Dragon Fruit

A series of videos were recorded using several handheld smartphones along the inter-row paths of a dragon fruit plantation situated in Long’an, Guangxi, on 7 November 2021. Based on the observed conditions, the plots can be categorized into three scenarios: (a) plots with coexisting green fruits (immature dragon fruit) and red fruits (mature dragon fruit), as depicted in Figure 1a; (b) plots with coexisting dragon fruit flowers and green fruits, as shown in Figure 1b; and (c) plots with coexisting flowers, green fruits, and red fruits, as illustrated in Figure 1c. The video recordings were conducted in the afternoon and at night (3:00 p.m.–9:00 p.m.), with supplementary illumination provided by grow lights at night. The smartphones were mounted on a handheld gimbal at a height of 1.0–1.5 m above the ground. The camera lens pointed straight ahead, ensuring that the dragon fruit plants on both sides of the path were recorded. The lighting conditions included front light and backlight during the day. The walking speed during video acquisition was about 1 m/s. The collected videos were stored in MP4 format, with a resolution of 1920 (horizontal) × 1080 (vertical) and a frame rate of 30 frames per second. In total, 61 videos were acquired, with a combined duration of approximately 150 min.

Figure 1.

The plots with different scenarios: (a) plots with coexisting green and red fruits; (b) plots with coexisting dragon fruit flowers and green fruits; and (c) plots with coexisting flowers and green and red fruits.

2.2. The Proposed Technical Framework

The technical framework of this study, as illustrated in Figure 2, encompasses the following five key components:

Figure 2.

The proposed technical framework in this study.

(1) Construction of a dragon fruit detection dataset: Curating a dataset by selecting videos captured under diverse environmental conditions, extracting relevant key frames, and annotating them to establish a comprehensive dragon fruit detection dataset.

(2) Training a dragon fruit detection model: Utilizing the constructed dataset to train a detection algorithm, thereby developing a dragon fruit recognition model capable of identifying dragon fruit at distinct growth stages. The recognition outcomes serve as an input for subsequent object tracking processes.

(3) Tracking dragon fruit at different growth stages: Adding multi-classification information on the basis of multi-object tracking technology and integrating the results of dragon fruit detection to achieve real-time tracking of dragon fruit across various growth stages.

(4) Counting dragon fruit using the ROI region: Incorporating a region of interest (ROI) within the video and utilizing the results obtained from object tracking to perform accurate counting of dragon fruit at different growth stages within the defined ROI.

(5) Result analysis: Conducting a comprehensive assessment of the dragon fruit counting method by evaluating the performance of object detection, object tracking, and the effectiveness of counting within the ROI region.

2.3. Construction of the Dragon Fruit Dataset

Twenty videos, each with a duration ranging from 30 s to 3 min, were randomly selected for the study. Out of these, 16 videos were utilized to extract a raw image dataset by capturing one frame for every 30 frames. Images depicting figs or suffering from blurriness were manually eliminated. The resulting dataset comprised 5500 images, which were subsequently numbered for manual labeling of flowers, green fruits, and red fruits using the LabelImg software. Targets with occluded areas exceeding 90% or with blurry appearance were excluded from the labeling process. Finally, the annotated dataset was divided into a training set (5000 images) and a validation set (500 images), maintaining a 9:1 ratio. The remaining four videos were employed for system testing, with lengths ranging from 1800 to 5400 frames. Videos 1 and 2 were captured at night, while videos 3 and 4 were obtained in daylight conditions, using the same filming technique of recording two rows of dragon fruit plants simultaneously. The basic information of the dataset is listed in Table 1.
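The sampling and splitting steps described above can be sketched in a few lines. This is only an illustration under stated assumptions: the function names `sample_frame_indices` and `split_dataset`, the random seed, and the use of a shuffled split are hypothetical and are not taken from the study.

```python
import random


def sample_frame_indices(total_frames, step=30):
    """Return the indices kept when one frame is taken every `step` frames."""
    return list(range(0, total_frames, step))


def split_dataset(items, n_train, seed=0):
    """Shuffle a copy of `items` and split it into training and validation parts."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]


# A 3 min clip at 30 frames per second contains 5400 frames.
indices = sample_frame_indices(total_frames=5400, step=30)
print(len(indices))  # 180 candidate images from this clip

# Image IDs stand in for the 5500 annotated images (hypothetical placeholders).
train, val = split_dataset(range(5500), n_train=5000)
print(len(train), len(val))  # 5000 500
```

At 30 frames per second, a step of 30 keeps roughly one image per second of video, which limits near-duplicate frames in the dataset.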

Table 1.

Basic information of the dataset.

2.4. Multi-Object Tracking with Multi-Class

The ByteTrack algorithm, comprising object detection and object tracking stages, encounters challenges regarding real-time performance in detection and the lack of class information in tracking. To address these limitations, this study presents an enhanced version of the algorithm for efficient detection and tracking of dragon fruits at various growth stages. Notably, we replaced the original detector in ByteTrack with YOLOv5 to enhance both accuracy and speed of detection. Additionally, multi-class information was integrated into the tracking module so that the object numbers of specific classes could be counted.

2.4.1. Object Detection

The YOLO (You Only Look Once) algorithm is a renowned one-stage object detection algorithm that identifies objects within an image in a single pass. Unlike region-based convolutional neural network (RCNN)-type algorithms that generate candidate box regions, YOLO directly produces bounding box coordinates and class probabilities of each bounding box through regression. This unique approach enables faster detection speeds, as it eliminates the need for multiple passes or region proposal steps.

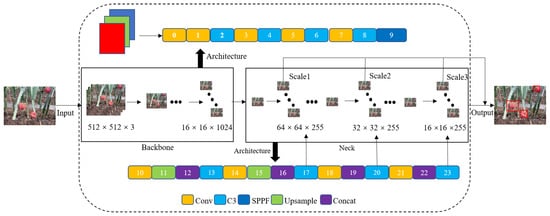

The YOLOv5 architecture offers four variants, distinguished by the network’s depth and width: YOLOv5-s, YOLOv5-m, YOLOv5-l, and YOLOv5-x. Among these, YOLOv5-s is the smallest model with the fastest inference speed, making it well suited for deployment on edge devices. In this study, we employed the YOLOv5-s network model, as depicted in Figure 3, which comprises four key components: Input, Backbone, Neck, and Output. The Input module performs essential pre-processing tasks on the dragon fruit images, including adaptive scaling and data augmentation using Mosaic. The Backbone module, consisting of C3 and SPPF structures, focuses on feature extraction from the input image. The C3 module divides the basic feature layer into two parts. One part conducts convolutional operations, while the other part is fused with the convolutional results of the first part through cross-layer combination to obtain the feature layer. The SPPF structure aggregates the multi-scale features obtained from C3 to effectively expand the image’s receptive field. In the Neck module, the FPN+PAN structure is employed to fuse features extracted from different layers. FPN propagates features from top to bottom layers, while PAN propagates features from bottom to top layers. By combining both approaches, features from diverse layers are fused to mitigate information loss. The Output module generates feature maps of various sizes. By analyzing these feature maps, the location, class, and confidence level of the dragon fruit can be predicted. In this study, 5000 images containing dragon fruit flowers and fruits were used as the training dataset. The information about the dataset is listed in Table 1.

Figure 3.

The network architecture of YOLOv5-s.

2.4.2. Object Tracking

Multiple Object Tracking (MOT) aims to estimate the bounding boxes and identities of objects within video sequences. Many existing MOT methods achieve this by associating only those bounding boxes whose scores exceed a predefined threshold. However, objects with low detection scores, including occluded instances, are then discarded, which results in the loss of object trajectories.

The ByteTrack algorithm introduces an innovative data association approach called BYTE, which offers a simple, efficient, and versatile solution. In contrast to conventional tracking methods that primarily focus on high-scoring bounding boxes, BYTE adopts a different strategy. It retains most of the bounding boxes and classifies them based on their confidence scores into high and low categories. Rather than discarding the low-confidence detection targets outright, BYTE leverages the similarity between the bounding boxes and existing tracking trajectories. This enables the algorithm to effectively distinguish foreground targets from the background, mitigating the risk of missed detections and enhancing the continuity of object trajectories.

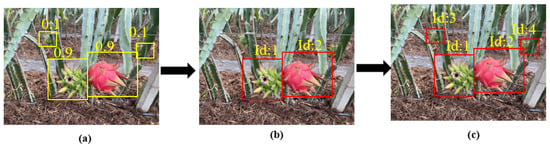

The BYTE workflow, depicted in Figure 4, encompasses three key steps: (1) partitioning bounding boxes into high and low scoring categories; (2) prioritizing the matching of high-scoring boxes with existing tracking trajectories, resorting to low-scoring boxes only for trajectories that could not be matched with high-scoring boxes; (3) generating new tracking trajectories for high-scoring bounding boxes that fail to match any existing trajectory, and discarding trajectories for which no suitable match is found within 30 frames. By employing this streamlined data association approach, ByteTrack demonstrates exceptional performance in addressing the challenges of MOT. Moreover, its attention to low-scoring bounding boxes allows for effective handling of scenarios featuring significant object occlusion.

Figure 4.

The workflow of BYTE. (a) Partitioning bounding boxes into high and low scoring categories; (b) matching of high-scoring boxes with existing tracking trajectories; and (c) matching of all bounding boxes.
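The three BYTE steps can be illustrated with a minimal sketch. Several simplifications are assumed here and are not part of ByteTrack itself: boxes are matched greedily by IoU rather than with Hungarian matching, no Kalman prediction is applied, and the thresholds (`high`, `low`, `iou_min`) and function names are illustrative choices.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_match(track_boxes, det_boxes, iou_min):
    """Pair tracks with detections in order of decreasing IoU.

    A simple stand-in for Hungarian matching. Returns {track_id: det_index}.
    """
    candidates = sorted(
        ((iou(tb, db), tid, j)
         for tid, tb in track_boxes.items()
         for j, db in enumerate(det_boxes)),
        reverse=True,
    )
    matches, used = {}, set()
    for score, tid, j in candidates:
        if score < iou_min:
            break
        if tid in matches or j in used:
            continue
        matches[tid] = j
        used.add(j)
    return matches


def byte_associate(track_boxes, detections, high=0.5, low=0.1, iou_min=0.3):
    """detections: list of (box, score). Returns (updated, new_boxes, lost_ids)."""
    # Step 1: partition detections by confidence score.
    high_dets = [box for box, s in detections if s >= high]
    low_dets = [box for box, s in detections if low <= s < high]

    # Step 2a: match existing tracks against high-score boxes first.
    first = greedy_match(track_boxes, high_dets, iou_min)
    updated = {tid: high_dets[j] for tid, j in first.items()}

    # Step 2b: tracks still unmatched get a second chance with low-score boxes.
    remaining = {tid: b for tid, b in track_boxes.items() if tid not in first}
    second = greedy_match(remaining, low_dets, iou_min)
    updated.update({tid: low_dets[j] for tid, j in second.items()})

    # Step 3: unmatched high-score boxes start new tracks; unmatched tracks are
    # reported as lost (a full tracker would keep them for a number of frames).
    matched_high = set(first.values())
    new_boxes = [b for j, b in enumerate(high_dets) if j not in matched_high]
    lost_ids = [tid for tid in track_boxes if tid not in updated]
    return updated, new_boxes, lost_ids


tracks = {1: (0, 0, 10, 10), 2: (20, 20, 30, 30)}
dets = [((1, 1, 11, 11), 0.9),    # clear view of track 1
        ((21, 21, 31, 31), 0.3),  # low-score (e.g., occluded) view of track 2
        ((50, 50, 60, 60), 0.8)]  # a target not seen before
updated, new_boxes, lost_ids = byte_associate(tracks, dets)
print(updated, new_boxes, lost_ids)
```

In this toy frame, track 2 is recovered only through the low-score box, which is the behavior that lets BYTE keep trajectories alive through partial occlusion.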

In this study, we incorporated multi-class information into the tracking process of ByteTrack, as depicted in Figure 5 with dashed boxes indicating the inclusion of multi-class information. Based on the category of the bounding box, a Kalman filter was utilized with classification information to enhance the prediction accuracy, as depicted in Equation (1), which represents the state equation.

Figure 5.

The tracking process of ByteTrack with multi-class information incorporated.

In this equation, u and v denote the coordinates of the center point of the bounding box, s represents the aspect ratio, r corresponds to the height of the bounding box, the derivative terms denote their respective rates of change, and class indicates the category information of the bounding box. Following the generation of predicted objects, matching of tracking trajectories is performed based on the category. Upon successful matching, detection boxes with classification and identity IDs are output.
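One way to picture category-based matching is to gate the box similarity by class, so that a trajectory can only be associated with a detection of the same category. The sketch below is an assumption about how such gating could look, not the implementation used in this study; the dictionary layout, the function names, and the `iou_min` value are hypothetical.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def class_aware_iou(track, det):
    """Similarity is the IoU when the classes agree and zero otherwise."""
    if track["cls"] != det["cls"]:
        return 0.0
    return iou(track["box"], det["box"])


def best_match(track, detections, iou_min=0.3):
    """Return the index of the best same-class detection, or None."""
    best_j, best_score = None, iou_min
    for j, det in enumerate(detections):
        score = class_aware_iou(track, det)
        if score >= best_score:
            best_j, best_score = j, score
    return best_j


track = {"id": 7, "cls": "green", "box": (0, 0, 10, 10)}
detections = [
    {"cls": "red", "box": (0, 0, 10, 10)},    # perfect overlap, wrong class
    {"cls": "green", "box": (2, 2, 12, 12)},  # partial overlap, same class
]
print(best_match(track, detections))  # 1
```

The red detection overlaps the track perfectly but is ignored, so a green fruit trajectory is never continued by a red fruit box in the same position.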

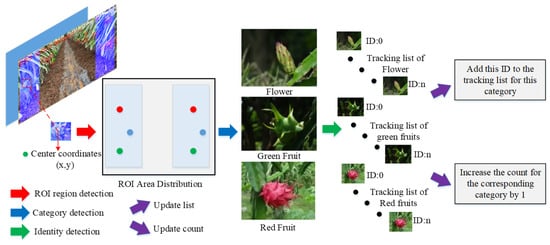

2.5. Counting Method Using the ROI Region

Considering the characteristics of inspection videos, which involve single-side and double-side inspections, this paper presents an ROI counting method capable of counting on either one side or both sides. Here, we mainly introduce the double-side ROI counting method, which counts the dragon fruit on both sides of the aisle simultaneously. The layout of the double-side ROI region is shown in the inspection video schematic in Figure 6. In this schematic, a counting belt is positioned on each side of the video, highlighted by a blue translucent mask overlay. Additionally, the current video frame number, processing frequency, and statistics for the various categories of flowers and fruits are displayed in the upper-left corner of the video.

Figure 6.

The workflow of the counting method using the ROI region.

The counting method, as illustrated in Figure 6, involves two primary steps. (1) Frame-by-frame analysis: The center coordinates of each identified dragon fruit target box are assessed to determine whether they fall within the designated ROI counting region. If they do not, the target is skipped and the check is repeated for the next target. If the coordinates are within the ROI counting region, the procedure proceeds to the next step. (2) Categorization and tracking: The category of the flower/fruit target entering the ROI region is confirmed, and the corresponding category’s tracking list is checked for the presence of the target’s ID. If the ID is not already included in the tracking list, it is added, and the counter for the respective category is incremented by 1. If the ID is already present in the tracking list, it is not counted again. Once all video frames have been analyzed, the tracking lists for all categories within the ROI region are cleared.
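The two steps above amount to a per-category set of already counted IDs. The following sketch assumes axis-aligned rectangular counting belts and tracker output in the form `(track_id, category, box)`; the class name `RoiCounter` and the belt coordinates are hypothetical and chosen only for a 1920 × 1080 frame.

```python
from collections import defaultdict


class RoiCounter:
    """Counts each tracked ID once per category when its center enters an ROI."""

    def __init__(self, rois):
        self.rois = rois                  # list of (x1, y1, x2, y2) counting belts
        self.seen = defaultdict(set)      # category -> IDs already counted
        self.counts = defaultdict(int)    # category -> running count

    def _in_roi(self, cx, cy):
        return any(x1 <= cx <= x2 and y1 <= cy <= y2
                   for x1, y1, x2, y2 in self.rois)

    def update(self, tracked):
        """Process one frame of tracker output: (track_id, category, box)."""
        for track_id, category, (x1, y1, x2, y2) in tracked:
            cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
            if not self._in_roi(cx, cy):
                continue                  # step 1: center outside the belts
            if track_id not in self.seen[category]:
                self.seen[category].add(track_id)   # step 2: first entry only
                self.counts[category] += 1

    def reset(self):
        """Clear the tracking lists once the whole video has been analyzed."""
        self.seen.clear()


# Two vertical belts, one on each side of the frame (illustrative positions).
counter = RoiCounter(rois=[(300, 0, 500, 1080), (1420, 0, 1620, 1080)])
frames = [
    [(1, "flower", (350, 400, 450, 500)), (2, "red", (900, 400, 1000, 500))],
    [(1, "flower", (360, 400, 460, 500)), (2, "red", (1450, 400, 1550, 500))],
]
for frame in frames:
    counter.update(frame)
print(dict(counter.counts))  # {'flower': 1, 'red': 1}
```

Because counting is keyed on the tracker's ID, a target that stays inside the belt for many frames is still counted once, which is why ID stability in the tracker directly affects counting accuracy.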

2.6. Evaluation Metrics

The Intersection over Union (IOU) is a widely used metric for evaluating the accuracy of object detection. It quantifies the overlap between the predicted bounding boxes and the ground truth boxes, as expressed in Equation (2). In this study, we employed an IOU threshold of 0.5, indicating that a detection is deemed correct if the IOU is greater than or equal to 0.5, and incorrect otherwise.

Equation (2) is the ratio of the intersection area between the predicted bounding box and the ground truth box to their union area. By considering the differences between the predicted and ground truth results, the samples can be categorized into four groups: true positive (TP), false positive (FP), false negative (FN), and true negative (TN). From these groups, various evaluation metrics can be derived, including precision (P), recall (R), average precision (AP), and mean average precision (mAP), which are expressed in Equations (3)–(6).
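The equations referred to here are not reproduced in this copy of the text. For orientation, the standard definitions of these metrics can be written as follows. The symbols A and B for the predicted and ground truth boxes and n for the number of categories are assumptions, and the notation may differ from the original equations.

```latex
\begin{align}
\mathrm{IoU} &= \frac{|A \cap B|}{|A \cup B|} \\
P &= \frac{TP}{TP + FP} \\
R &= \frac{TP}{TP + FN} \\
AP &= \int_{0}^{1} P(R)\,\mathrm{d}R \\
mAP &= \frac{1}{n}\sum_{i=1}^{n} AP_{i}
\end{align}
```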

The evaluation of the counting results is conducted using counting accuracy, counting precision, and mean counting precision. These metrics are defined in Equations (7)–(9) in terms of the automated counting values, the true values, and N, the total number of tested videos.
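Because the counting equations are not reproduced in this copy, the sketch below uses a common relative-error form of counting accuracy as an assumption; it may not match Equations (7)–(9) exactly. The function names and the example counts are hypothetical and are not taken from the test videos.

```python
def counting_accuracy(auto_count, true_count):
    """Assumed form: 1 - |automated - true| / true, expressed in percent."""
    if true_count <= 0:
        raise ValueError("true_count must be positive")
    return (1 - abs(auto_count - true_count) / true_count) * 100


def mean_counting_accuracy(pairs):
    """Unweighted mean of the per-video accuracies over N tested videos."""
    return sum(counting_accuracy(a, t) for a, t in pairs) / len(pairs)


# Hypothetical (automated, manual) counts for two videos.
print(f"{counting_accuracy(79, 80):.2f}")                     # 98.75
print(f"{mean_counting_accuracy([(79, 80), (10, 10)]):.3f}")  # 99.375
```

Under this form, over-counting and under-counting by the same amount are penalized equally.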

2.7. Experimental Environment and Parameter Setting

In this study, the models were built and improved using the PyTorch deep learning framework. Model training and validation were conducted on Ubuntu 18.04. The computer used had an Intel(R) Core(TM) i7-8700K CPU at 3.70 GHz, 16 GB RAM, and an NVIDIA GeForce RTX 3090 GPU with 24 GB of memory. GPU acceleration was employed to expedite network training, utilizing CUDA version 11.3.0 and cuDNN version 8.2.0. Stochastic Gradient Descent (SGD) was chosen as the optimizer. The momentum parameter for SGD was set to 0.9, and the weight decay parameter was set to 0.0005. The input images were uniformly resized to 512 × 512 pixels, and a batch size of 16 was used during training. The trained model was then tested on another computer, which has an NVIDIA GeForce GTX 1080 Ti GPU with 16 GB of memory.

3. Results

3.1. YOLOv5 Object Detection Results

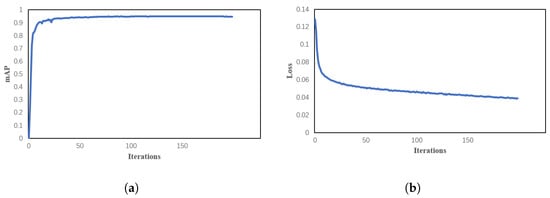

The training progress of the YOLOv5 model for dragon fruit recognition is depicted in Figure 7, illustrating the curves for loss and mAP. Notably, the loss exhibits a rapid decline while mAP experiences a substantial increase throughout the training iterations. As training progresses, the loss value gradually converges towards the minimum of 0.04, reaching this threshold after approximately 150 iterations.

Figure 7.

The curves of (a) loss; and (b) mAP during the training process.

Figure 8 presents the actual image recognition results obtained from the YOLOv5 model. The recognition outcomes are indicated by blue, green, and red boxes, corresponding to flowers, green fruits, and red fruits, respectively. In Figure 8a, the image highlights the significant variation in the size and shape of dragon fruit flowers due to factors such as blooming stage and shooting angle. Despite this challenge, the YOLOv5 detection model demonstrates the precise identification of the flowers. Figure 8b shows the recognition of green and red fruits in their coexisting state, where the detection model accurately distinguishes and identifies both types of fruits. This successful recognition serves as a crucial foundation for subsequent counting of dragon fruits across various growth stages.

Figure 8.

The recognition results obtained by the YOLOv5 model for dragon fruits in different growth stages. (a) Detection results of different types of dragon fruit flowers, and (b) detection results of red and green fruits.

Table 2 presents the recognition results of the YOLOv5 object detection model on the test set. The findings reveal that the red fruit achieves the highest recognition accuracy, reaching 96.1%. It is followed by the green fruit, which attains a recognition accuracy of 94.8%. The flowers exhibit the lowest recognition accuracy, yet still reach 94.1%. Overall, the average detection accuracy across the three categories is 95.0%. Furthermore, the model demonstrates a fast detection speed of 0.008 s per frame, equivalent to detecting 125 frames per second. These outcomes indicate the model’s capability to rapidly and accurately identify various flower and fruit targets, thereby providing essential technical support for subsequent tracking and counting tasks.

Table 2.

Recognition results of YOLOv5 object detection model on the test set.

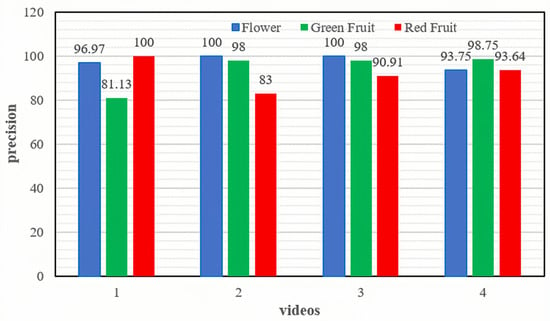

3.2. Results of Object Tracking and Counting

To evaluate the performance of the counting method proposed in this study, four videos collected from different locations and times, as outlined in Table 3, were utilized. The obtained results, as illustrated in Figure 9, reveal notable insights. Notably, the counting accuracy for red fruits in Video 1 and flowers in Video 2 achieved a perfect score of 100%. However, the counting accuracy for green fruits in Video 1 and red fruits in Video 2 was comparatively lower, standing at 81.13% and 83%, respectively. Conversely, the counting accuracy for flowers in Video 3 and green fruits in Video 4 demonstrated the highest performance, reaching 100% and 98.75%, respectively. Nevertheless, the counting accuracy for red fruits in these videos was relatively lower, at 90.91% and 93.64%, respectively. These results robustly demonstrate the accuracy of the proposed counting method in effectively enumerating dragon fruits at various growth stages within orchard environments.

Table 3.

The details of the test videos and their detection results using the proposed counting method.

Figure 9.

The counting accuracies achieved by the proposed counting method for videos collected at different locations and times.

The experimental results of dragon fruit counting using the proposed method under varying lighting conditions, including night illumination from supplementary lights and direct sunlight during the day, are presented in Figure 10. Figure 10a reveals a higher abundance of dragon fruit flowers and green fruits in the considered plot, while the count of red fruits is comparatively lower. In contrast, Figure 10b shows a substantial presence of red and green fruits but a smaller count of dragon fruit flowers. Notably, Figure 10a emphasizes the challenges encountered during night time, characterized by dim lighting conditions and obstruction by branches, which contribute to the difficulty in recognizing certain dragon fruit specimens. Consequently, counting dragon fruit at different growth stages becomes more challenging during night time operations. The fluctuation in counting results displayed in Figure 10 suggests a higher propensity for misidentification and reduced counting accuracy during nocturnal activities compared to daytime operations. Conversely, Figure 10b demonstrates that direct sunlight during the day facilitates easier identification of dragon fruit, leading to improved counting outcomes. Overall, considering the results obtained from both daytime and night time counting scenarios, it can be concluded that the proposed counting method in this study exhibits robustness in diverse lighting conditions, ultimately enhancing counting efficiency.

Figure 10.

The experimental results of dragon fruit counting using the proposed method under varying lighting conditions for (a) night time, and (b) day time.

Table 4 displays the average counting accuracies for various growth stages of dragon fruit in the test videos. Dragon fruit flowers achieved the highest counting accuracy at 97.68%, red dragon fruit the lowest at 91.89%, and green dragon fruit reached 93.97%. The overall average counting accuracy for all growth stages of dragon fruit was 94.51%. These results demonstrate the effectiveness of the proposed method. Additionally, the counting frequency reached 56 frames per second, indicating real-time counting capability.
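The reported averages can be cross-checked against the per-video figures quoted in Section 3.2. The short check below assumes that each average is a simple unweighted mean, which reproduces the reported values; the variable names are illustrative.

```python
# Red fruit counting accuracies (%) for Videos 1-4, as quoted in Section 3.2.
red_per_video = [100.0, 83.0, 90.91, 93.64]
red_mean = sum(red_per_video) / len(red_per_video)

# Average counting accuracies (%) per category, as reported above.
category_means = {"flower": 97.68, "green": 93.97, "red": 91.89}
overall = sum(category_means.values()) / len(category_means)

print(f"{red_mean:.4f}")  # 91.8875, consistent with the reported 91.89
print(f"{overall:.2f}")   # 94.51
```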

Table 4.

The counting accuracies obtained by the counting method for various growth stages of dragon fruits in the test videos.

3.3. Performance Comparison of Different Object Detection Algorithms

To assess the effectiveness of the YOLOv5 model used in this study, a performance comparison was made with two other lightweight models, namely YOLOX [30] and YOLOv3-tiny [31], using the same dataset. The comparative results are presented in Table 5. Notably, YOLOv5s exhibited the smallest parameter count, while its floating-point operations (FLOPs) fell between those of YOLOXs and YOLOv3-tiny. YOLOv5s achieved the highest average detection accuracy, reaching 95%, while maintaining an inference time of just 8 ms, significantly faster than YOLOXs and YOLOv3-tiny. These findings indicate that YOLOv5s outperforms the other models in detecting dragon fruit at various maturity stages.

Table 5.

The performance comparison of object detection results with other lightweight models.

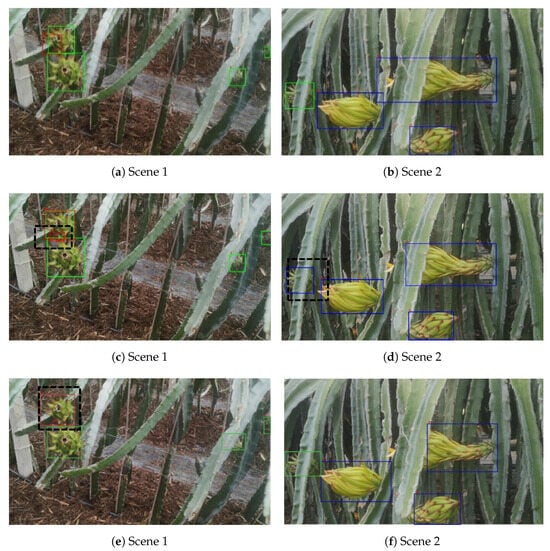

Figure 11 depicts the detection performance of the three models under the same confidence level of 0.5 in two typical scenarios. Specifically, Figure 11a,b exhibits the detection results of YOLOv5, Figure 11c,d displays the results of YOLOX, and Figure 11e,f depicts the outcomes of YOLOv3-tiny. A comparative analysis of the detection results highlights that YOLOX and YOLOv3-tiny are more susceptible to false positives and false negatives when objects are occluded. For example, in Figure 11c, a single occluded red fruit target is mistakenly identified as two separate red fruit targets. In Figure 11d, an occluded green fruit target is incorrectly recognized as a dragon fruit flower. Additionally, Figure 11e shows a missed detection of a green fruit target. The experimental findings emphasize that YOLOv5 exhibits superior detection accuracy and faster detection speed compared to the other models. Moreover, YOLOv5 demonstrates enhanced robustness in occluded environments, rendering it more suitable for effectively detecting dragon fruit targets at various growth stages.

Figure 11.

The detection performances of models in different scenes. (a) YOLOv5 in Scene 1, (b) YOLOv5 in Scene 2, (c) YOLOX in Scene 1, (d) YOLOX in Scene 2, (e) YOLOv3-tiny in Scene 1, and (f) YOLOv3-tiny in Scene 2.

3.4. Performance Comparison of Different Counting Methods

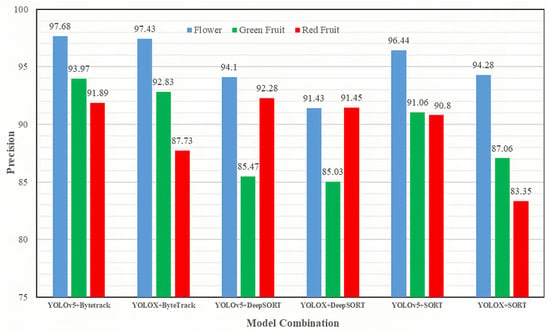

To validate the counting effectiveness of the proposed method, two object detection algorithms, namely YOLOv5 and YOLOX, were employed as object detectors. These detectors were then combined with three object tracking algorithms, namely SORT, DeepSORT, and ByteTrack, resulting in six distinct combinations of counting methods. Figure 12 visually presents the counting results achieved by these six algorithms on the dragon fruit test video.

Figure 12.

The counting accuracies achieved by different combinations of object detectors and counting methods.

The combination of YOLOv5 and ByteTrack exhibited the highest counting accuracy for flowers, at 97.68%. For green fruits, YOLOv5 with ByteTrack again achieved the best result, at 93.97%, while for red fruits YOLOv5 with DeepSORT performed best, at 92.28%. From the object detection perspective, the combinations built on YOLOv5 generally produced better counting results than those built on YOLOX. This suggests that YOLOX yielded more false detections, which in turn introduced more counting errors. From the object tracking perspective, ByteTrack generally outperformed DeepSORT and SORT when given the same detection input. The superior performance of ByteTrack can be attributed to its ability to retain detections with lower confidence scores, which often correspond to occluded targets. By associating these low-confidence detections with existing tracks, ByteTrack keeps more targets tracked simultaneously, ultimately leading to improved counting results.
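The low-confidence association described above can be illustrated with a minimal sketch. It follows the general two-stage idea of ByteTrack [29], not the improved variant used in this study: high-score detections are matched to existing tracks first, and the tracks left unmatched are then offered the low-score detections. The function names, the thresholds (`high_thresh`, `low_thresh`, `min_iou`), and the greedy IoU matching are assumptions made for illustration; the published algorithm uses Kalman-filter prediction and Hungarian assignment, and also handles track creation and deletion, all of which are omitted here.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_match(tracks: Dict[int, Box], dets: List[Box], min_iou: float) -> Dict[int, int]:
    """Pair track IDs with detection indices, best IoU first (a simplification
    of the Hungarian assignment used in the published tracker)."""
    pairs = sorted(
        ((iou(tb, db), tid, di) for tid, tb in tracks.items() for di, db in enumerate(dets)),
        reverse=True,
    )
    matches: Dict[int, int] = {}
    used = set()
    for score, tid, di in pairs:
        if score < min_iou:
            break  # remaining pairs overlap too little to be the same target
        if tid in matches or di in used:
            continue
        matches[tid] = di
        used.add(di)
    return matches


def two_stage_associate(
    tracks: Dict[int, Box],
    detections: List[Tuple[Box, float]],
    high_thresh: float = 0.5,  # illustrative value, not taken from the paper
    low_thresh: float = 0.1,   # illustrative value, not taken from the paper
    min_iou: float = 0.3,      # illustrative value, not taken from the paper
) -> Dict[int, Box]:
    """Return the updated box for every track that found a detection."""
    high = [box for box, score in detections if score >= high_thresh]
    low = [box for box, score in detections if low_thresh <= score < high_thresh]

    # Stage 1: confident detections are matched against all tracks.
    first = greedy_match(tracks, high, min_iou)
    updated = {tid: high[di] for tid, di in first.items()}

    # Stage 2: tracks still unmatched may claim a low-score detection,
    # which is how partially occluded targets keep their ID.
    remaining = {tid: box for tid, box in tracks.items() if tid not in first}
    second = greedy_match(remaining, low, min_iou)
    updated.update({tid: low[di] for tid, di in second.items()})
    return updated
```

With two existing tracks and one confident plus one weak detection, the second stage is what keeps the weakly detected target alive; raising `low_thresh` to the value of `high_thresh` disables that stage and the second track goes unmatched.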

Table 6 compares the six algorithm combinations in terms of counting frequency and average counting accuracy. The YOLOv5+ByteTrack combination exhibited the highest counting frequency, achieving 56 frames per second. This outcome can be attributed to YOLOv5's lower parameter count and reduced floating-point computation, which give it a faster inference speed than YOLOX. Additionally, the ByteTrack and SORT algorithms rely solely on image correlation for target matching, eliminating the need to load a re-identification (ReID) network model. In contrast, the DeepSORT algorithm requires loading the ReID network model for deep feature matching, which reduces the detection and tracking speed. The YOLOv5+ByteTrack combination also demonstrated the highest average counting accuracy among all combinations. Considering both counting accuracy and counting frequency, the YOLOv5+ByteTrack combination delivered the most favorable experimental outcomes: it achieved high counting accuracy for dragon fruit at different reproductive stages while maintaining a high counting frequency.

Table 6.

Comparison of counting frequency and average counting accuracy using different methods.
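The counting step shared by all six combinations can be sketched as follows, assuming the tracker emits one `(track_id, class_name, box)` tuple per target per frame. Each track ID is counted once, the first time the center of its box falls inside the region of interest. The tuple layout, the function names, the class labels, and the use of a single rectangular ROI are hypothetical simplifications; the method in this study uses two ROI counting areas, one for each row of plants.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Track = Tuple[int, str, Box]             # (track_id, class_name, box), assumed layout


def center(box: Box) -> Tuple[float, float]:
    """Center point of a bounding box."""
    return ((box[0] + box[2]) / 2.0, (box[1] + box[3]) / 2.0)


def in_roi(point: Tuple[float, float], roi: Box) -> bool:
    """True if the point lies inside the rectangular region of interest."""
    x, y = point
    return roi[0] <= x <= roi[2] and roi[1] <= y <= roi[3]


def count_in_roi(frames: Iterable[List[Track]], roi: Box) -> Dict[str, int]:
    """Count each track ID once, when its center first enters the ROI."""
    counted: Set[int] = set()
    totals: Dict[str, int] = defaultdict(int)
    for tracks in frames:
        for track_id, class_name, box in tracks:
            if track_id in counted:
                continue  # this target has already contributed to the total
            if in_roi(center(box), roi):
                counted.add(track_id)
                totals[class_name] += 1
    return dict(totals)


# Three frames of hypothetical tracker output.
frames = [
    [(1, "flower", (0, 0, 10, 10))],
    [(1, "flower", (25, 0, 35, 10)), (2, "red_fruit", (22, 50, 30, 60))],
    [(1, "flower", (30, 0, 40, 10)), (2, "red_fruit", (30, 50, 38, 60)),
     (3, "green_fruit", (0, 0, 5, 5))],
]
print(count_in_roi(frames, roi=(20, 0, 40, 100)))
```

Because counting depends on IDs staying stable, an ID switch on an occluded fruit before it reaches the ROI can produce a double count, which is one way tracker quality feeds into the counting accuracies compared above.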

4. Discussion and Future Work

The ROI-based counting method proposed in this paper achieves over 90% counting accuracy and a detection speed of 56 FPS, but these results were obtained on a high-performance GPU platform (1080 Ti). In practical orchard applications, detection tasks often need to be executed on mobile devices, where the speed will inevitably decrease. We have conducted preliminary experiments on a smartphone (Huawei P20), where the detection frame rate typically ranges from 18 to 24 fps, which largely meets real-time detection requirements. Future research will therefore aim to implement the recognition, tracking, and counting of various flowers and fruits in orchards on mobile devices, providing a more portable solution for estimating orchard yields.

In the field of agricultural detection, detection on mobile platforms has been an active research topic. For instance, Huang [32] deployed an object detection model on edge computing devices to detect citrus in orchard images, achieving a detection accuracy of 93.32% at a processing speed of 180 ms/frame. Mao [33] implemented fruit detection on CPU platforms, achieving a detection speed of 50 ms/frame on smartphones. These results demonstrate the significant potential of fruit detection on mobile platforms.
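The speeds in this section are reported in two different units, frames per second and milliseconds per frame. The short sketch below converts between them so the figures can be read side by side. The helper names are hypothetical, and the comparison is indicative only, since the cited results come from different models, datasets, and hardware.

```python
def ms_per_frame_to_fps(ms_per_frame: float) -> float:
    """Convert a per-frame latency in milliseconds to frames per second."""
    return 1000.0 / ms_per_frame


def fps_to_ms_per_frame(fps: float) -> float:
    """Convert a frame rate to the per-frame latency in milliseconds."""
    return 1000.0 / fps


# Figures quoted in the text, converted to both units.
print(round(ms_per_frame_to_fps(180), 2))  # 180 ms/frame [32] -> 5.56 FPS
print(round(ms_per_frame_to_fps(50), 2))   # 50 ms/frame [33]  -> 20.0 FPS
print(round(fps_to_ms_per_frame(56), 2))   # 56 FPS (1080 Ti)  -> 17.86 ms/frame
print(round(fps_to_ms_per_frame(18), 2))   # 18 fps (smartphone, lower bound) -> 55.56 ms/frame
print(round(fps_to_ms_per_frame(24), 2))   # 24 fps (smartphone, upper bound) -> 41.67 ms/frame
```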

Considering these promising developments, our future work will focus on optimizing our model and adapting it for mobile platforms. This adaptation will enable real-time fruit counting in the field and is a valuable direction for meeting the needs of orchard management.

5. Conclusions

This study proposes a high-efficiency counting method for dragon fruit in orchards, which involves capturing two rows of dragon fruit plants simultaneously from the middle aisle and employing two ROI counting areas based on object detection and tracking. The proposed ROI counting method yields promising results for dragon fruit at various maturity stages. Furthermore, six combinations of two object detection algorithms and three object tracking algorithms are evaluated. Among these combinations, the YOLOv5+ByteTrack pairing achieves the most accurate counting results overall, exceeding 90% counting accuracy for dragon fruit flowers, green fruit, and red fruit. It also maintains a high counting frequency of 56 frames per second, ensuring efficient and rapid detection, tracking, and counting. The proposed counting method exhibits robustness across different environments, making it suitable for dragon fruit counting in orchards. Additionally, it can be extended to other crops, providing a feasible solution for fruit quantity statistics and yield prediction in orchards.

Author Contributions

Conceptualization, methodology, formal analysis, visualization, supervision, writing—review and editing, X.L.; methodology, software, validation, visualization, writing—original draft and editing, X.W.; writing—review and editing, P.O.; data collection, methodology, writing—review and editing, supervision, Z.Y.; data collection, methodology, writing—review and editing, L.D.; writing—review and editing, C.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by 2023 Guangxi University young and middle-aged teachers scientific research basic ability improvement project (2023KY0012), Guangxi Science and Technology Base and Talent Project (2022AC21272), and Science and Technology Major Project of Guangxi, China (Gui Ke AA22117004).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available upon reasonable request.

Acknowledgments

The authors would like to thank Luo Wei, Liang Yuming, and Dong Sifan for their kind help on video data acquisition.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Luu, T.-T.-H.; Le, T.-L.; Huynh, N.; Quintela-Alonso, P. Dragon fruit: A review of health benefits and nutrients and its sustainable development under climate changes in Vietnam. Czech J. Food Sci. 2021, 39, 71–94.

- Jiang, H.; Zhang, W.; Li, X.; Shu, C.; Jiang, W.; Cao, J. Nutrition, phytochemical profile, bioactivities and applications in food industry of pitaya (Hylocereus spp.) peels: A comprehensive review. Trends Food Sci. Technol. 2021, 116, 199–217.

- Vijayakumar, T.; Vinothkanna, M.R. Mellowness detection of dragon fruit using deep learning strategy. J. Innov. Image Process. (JIIP) 2020, 2, 35–43.

- Ruzainah, A.J.; Ahmad, R.; Nor, Z.; Vasudevan, R. Proximate analysis of dragon fruit (Hylecereus polyhizus). American Journal of Applied Sciences 2009, 6, 1341–1346.

- Felzenszwalb, P.; McAllester, D.; Ramanan, D. A discriminatively trained, multiscale, deformable part model. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 2969239–2969250.

- Yan, J.; Zhao, Y.; Zhang, L.; Su, X.; Liu, H.; Zhang, F.; Fan, W.; He, L. Recognition of Rosa roxbunghii in natural environment based on improved faster RCNN. Trans. Chin. Soc. Agric. Eng. 2019, 35, 143–150.

- Gao, F.; Fu, L.; Zhang, X.; Majeed, Y.; Li, R.; Karkee, M.; Zhang, Q. Multi-class fruit-on-plant detection for apple in SNAP system using Faster R-CNN. Comput. Electron. Agric. 2020, 176, 105634.

- Tu, S.; Pang, J.; Liu, H.; Zhuang, N.; Chen, Y.; Zheng, C.; Wan, H.; Xue, Y. Passion fruit detection and counting based on multiple scale faster R-CNN using RGB-D images. Precis. Agric. 2020, 21, 1072–1091.

- Pan, Y.; Zhu, N.; Ding, L.; Li, X.; Goh, H.H.; Han, C.; Zhang, M. Identification and Counting of Sugarcane Seedlings in the Field Using Improved Faster R-CNN. Remote Sens. 2022, 14, 5846.

- Le, V.N.T.; Truong, G.; Alameh, K. Detecting weeds from crops under complex field environments based on Faster RCNN. In Proceedings of the 2020 IEEE Eighth International Conference on Communications and Electronics (ICCE), Phu Quoc Island, Vietnam, 13–15 January 2021; pp. 350–355.

- Lin, T.L.; Chang, H.Y.; Chen, K.H. The pest and disease identification in the growth of sweet peppers using faster R-CNN and mask R-CNN. J. Internet Technol. 2020, 21, 605–614.

- Zhang, K.; Wu, Q.; Chen, Y. Detecting soybean leaf disease from synthetic image using multi-feature fusion faster R-CNN. Comput. Electron. Agric. 2021, 183, 106064.

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Parico, A.I.B.; Ahamed, T. An aerial weed detection system for green onion crops using the you only look once (YOLOv3) deep learning algorithm. Eng. Agric. Environ. Food 2020, 13, 42–48.

- Liu, J.; Wang, X. Tomato diseases and pests detection based on improved Yolo V3 convolutional neural network. Front. Plant Sci. 2020, 11, 898.

- Wang, Z.; Jin, L.; Wang, S.; Xu, H. Apple stem/calyx real-time recognition using YOLO-v5 algorithm for fruit automatic loading system. Postharvest Biol. Technol. 2022, 185, 111808.

- Wu, D.; Lv, S.; Jiang, M.; Song, H. Using channel pruning-based YOLO v4 deep learning algorithm for the real-time and accurate detection of apple flowers in natural environments. Comput. Electron. Agric. 2020, 178, 105742.

- Yao, J.; Qi, J.; Zhang, J.; Shao, H.; Yang, J.; Li, X. A real-time detection algorithm for Kiwifruit defects based on YOLOv5. Electronics 2021, 10, 1711.

- Yan, B.; Fan, P.; Lei, X.; Liu, Z.; Yang, F. A real-time apple targets detection method for picking robot based on improved YOLOv5. Remote Sens. 2021, 13, 1619.

- Wang, Z.; Yang, X. Moving target detection and tracking based on Pyramid Lucas-Kanade optical flow. In Proceedings of the 2018 IEEE 3rd International Conference on Image, Vision and Computing (ICIVC), Chongqing, China, 27–29 June 2018; pp. 66–69.

- Singla, N. Motion detection based on frame difference method. Int. J. Inf. Comput. Technol. 2014, 4, 1559–1565.

- Li, Q.; Li, R.; Ji, K.; Dai, W. Kalman filter and its application. In Proceedings of the 2015 8th International Conference on Intelligent Networks and Intelligent Systems (ICINIS), Tianjin, China, 1–3 November 2015; pp. 74–77.

- Lee, W.; Kim, H.; Ahn, J. Defect-free atomic array formation using the Hungarian matching algorithm. Phys. Rev. A 2017, 95, 053424.

- Bewley, A.; Ge, Z.; Ott, L.; Ramos, F.; Upcroft, B. Simple online and realtime tracking. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3464–3468.

- Wojke, N.; Bewley, A.; Paulus, D. Simple online and realtime tracking with a deep association metric. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3645–3649.

- Zhang, Y.; Sun, P.; Jiang, Y.; Yu, D.; Weng, F.; Yuan, Z.; Luo, P.; Liu, W.; Wang, X. Bytetrack: Multi-object tracking by associating every detection box. In Proceedings of the Computer Vision–ECCV 2022, 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; pp. 1–21.

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. Yolox: Exceeding yolo series in 2021. arXiv 2021, arXiv:2107.08430.

- Fu, L.; Feng, Y.; Wu, J.; Liu, Z.; Gao, F.; Majeed, Y.; Al-Mallahi, A.; Zhang, Q.; Li, R.; Cui, Y. Fast and accurate detection of kiwifruit in orchard using improved YOLOv3-tiny model. Precis. Agric. 2021, 22, 754–776.

- Huang, H.; Huang, T.; Li, Z.; Lyu, S.; Hong, T. Design of citrus fruit detection system based on mobile platform and edge computer device. Sensors 2021, 22, 59.

- Mao, D.; Sun, H.; Li, X.; Yu, X.; Wu, J.; Zhang, Q. Real-time fruit detection using deep neural networks on CPU (RTFD): An edge AI application. Comput. Electron. Agric. 2023, 204, 107517.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).