Abstract

Images produced by CMOS sensors may contain defective pixels due to noise, manufacturing errors, or device malfunction; these pixels must be detected and corrected at early processing stages to produce images that are useful to human users and to image-processing or machine-vision algorithms. This paper proposes a defective pixel detection and correction algorithm and its implementation using CMOS analog circuits integrated with the image sensor at the pixel and column levels. During photocurrent integration, the circuit detects defective values in parallel at each pixel using simple arithmetic operations within a neighborhood. At the image-column level, the circuit replaces the defective pixels with the median value of their neighborhood. To validate our approach, we designed an imager in a 0.35 μm CMOS process that integrates our defective pixel detection and correction circuits and, according to post-layout simulations, processes images at 694 frames per second. Operating at that frame rate, our proposed algorithm and its CMOS implementation produce better results than current state-of-the-art algorithms: they achieve a Peak Signal-to-Noise Ratio (PSNR) of 45 dB and an Image Enhancement Factor (IEF) of 198.4 at one of the two tested densities of random defective pixels, and a PSNR of 44.4 dB and an IEF of 194.2 at the other.

1. Introduction

Image sensors are devices that convert incident light into electrical signals using photosensitive elements [1]. These devices are at the heart of every imaging system, and their core operation consists of two steps: first, the image sensor converts the incident photons into electron–hole pairs, and then, it transforms the resulting current into a voltage [2]. The voltage at each photosensitive element is proportional to the number of photons received during the acquisition time [3].

Currently, Charge Coupled Device (CCD) and Complementary Metal Oxide Semiconductor (CMOS) image sensors are the preferred technologies for digital imaging devices [4,5]. Although CCDs often deliver better image quality and low-light robustness, CMOS Image Sensors (CIS) are preferred in mobile applications due to their higher dynamic range and lower current and voltage requirements [5]. Furthermore, recent advances in deep submicron CMOS have brought significant advantages for CIS, such as compatibility with standard CMOS technology, the possibility of integrating sensors with analog and digital circuits for image processing at the pixel level, random access to image data, low power consumption, and high-speed image acquisition [4,5,6,7,8]. However, CIS frequently contain defective pixels as a result of fabrication errors or device malfunction due to sensor wear and tear [9,10]. This problem is exacerbated by the increasing demand for high-resolution and high-density imagers [11,12,13]. Therefore, it is essential to detect and correct these defects at early processing stages in order to produce images that are useful to human users as well as to image processing and machine vision algorithms [13,14,15].

Defective pixels are grouped into three classes: hot, dead, and stuck [16]. The first two apply to grayscale imagers, while the third applies to multi-color sensors in which one or more sub-pixels (e.g., red, blue, or green) are hot or dead. Dead pixels generate a zero or near-zero value regardless of the incident light intensity. In contrast, a hot pixel responds to all illumination levels with a high output value.

Many defective pixel detection and correction algorithms have been reported in the literature [12,13,17,18,19]. Most algorithms that operate online using data from a single image detect defective pixels by computing statistical variations within a neighborhood centered on each pixel. Although these algorithms frequently run on programmable architectures such as traditional computers and embedded processors, custom hardware architectures can provide higher performance with fewer resources and lower power consumption, which are required for applications such as assisted-driving automobiles [20,21,22], surveillance systems [23,24,25], and biometric recognition [26,27,28], among many others. Numerous researchers have proposed custom hardware devices to perform image processing in real time, which reduces power consumption and increases hardware integration. Examples of such devices are Smart Image Sensors (SIS) [29], which integrate image capture and processing on the same die. The image processing hardware can be integrated at the pixel, column, or chip level [3], increasing the parallelism available to the algorithm and the throughput of the implementation while reducing latency, memory requirements, and power consumption [30,31].

In this paper, we propose a novel CMOS SIS architecture for on-chip defective pixel detection and correction using pixel- and column-level processing with analog circuits. The SIS architecture implements a modified version of the algorithm proposed by Tchendjou et al. [12], which simplifies the computation to make it more suitable for custom hardware implementation. The SIS circuits detect all defective pixels in the image in parallel by performing arithmetic operations at the pixel level during photocurrent integration. Column-level circuits correct defective pixels by computing the median value of a neighborhood. A physical design of the SIS in a 0.35 μm CMOS process can acquire and process images at 694 frames per second (fps). Using 500 images from the Linnaeus 5 dataset [32] with randomly added defective pixels, the proposed algorithm and its analog hardware implementation achieve better performance than the best single-frame detection and correction algorithms available in the literature [12,13,17,18,19].

The rest of this paper is organized as follows: Section 2 discusses related works; Section 3 presents our defective pixel detection and correction algorithms; Section 4 describes the design of the SIS; Section 5 evaluates the performance of the proposed algorithm and the SIS; and Section 6 presents our conclusions.

2. Related Works

The defective pixel rate is one of the most significant characteristics used to evaluate the quality of an image sensor at the end of its manufacturing process and during its useful life [13,33,34]. The occurrence of defective pixels results in degradation of the overall visual quality of the captured image, which can have a critical effect on its subsequent interpretation, especially if additional processing is performed on the image. For example, in astronomy, it is crucial to discriminate between a hot pixel and a pixel that represents a bright star [10], while in mammographic imaging defective pixels can be confused with microcalcifications [35]. Therefore, defective pixel detection and correction algorithms are crucial for correctly interpreting the information in the image. In this work, we focus on online algorithms, which detect defective pixels in the image sensor from one or more video frames acquired during normal operation in real time [9,13,36]. In particular, we focus on single-frame defective pixel detection algorithms, which are faster and require less memory than multi-frame algorithms.

Chan [17] proposed a dead pixel detection method that labels a pixel as defective when its value lies outside an interval defined by two quantities, a and r, where a is the average of its eight neighbors except for the second-to-largest and second-to-smallest values, and r is the range between these two values. Cho et al. [18] classified a pixel as defective based on whether the difference between its value and the average of two of its neighbors is greater than the mean of the pixel and its four neighbors, or greater than the maximum pixel value minus this mean. These algorithms use only basic operations such as absolute difference, comparison, spatial neighborhood averages, and rank ordering. Although they can be implemented efficiently, these algorithms produce high false positive rates, increasing the number of pixel corrections and degrading the quality of the resulting image [12]. El-Yamany [37] presented a robust method for identifying singlets and couplets of defective pixels that uses comparison, averaging, minimum, and maximum operations, with two tunable parameters that control the detection sensitivity and specificity. Tchendjou et al. [12] proposed an algorithm that flags defective pixels when the difference between the actual and estimated values is greater than a threshold (or the maximum pixel value minus this threshold). The threshold is computed as the mean between the pixel value and a simple average of its eight neighbors. The estimated value is computed as a weighted (or, in the simplified version, unweighted) average of the adjacent pixels. They achieved better sensitivity, specificity, positive predictive value, and phi coefficient than [17,18]. The authors of [38] proposed an optimization algorithm to detect and correct defective pixels in an image using two defective pixel detection methods: the first uses the distance between the pixel and its neighbors [12], while the second uses the dispersion of the pixel values within a neighborhood window [39]. Yongji et al. [19] used a method similar to that of Chan [17] for defective pixel detection, except that they computed the average over a pixel window from which they excluded the two largest and the two smallest pixels.

The algorithms described above can achieve good accuracy; however, they must run on high-performance processors to achieve short processing times. While the resulting cost in die area and power consumption may not be a limitation in larger applications, these solutions are typically unsuitable for portable and mobile devices. Consequently, many researchers have proposed special-purpose processing systems based on custom hardware architectures that target speed, portability, and low power consumption [13,36,39,40]. In fully digital solutions, FPGAs (Field Programmable Gate Arrays) are a widely used platform for implementing these architectures thanks to their extensive fine-grained parallelism and their relatively low cost and power consumption compared to traditional programmable processors. Kumar et al. [40] implemented real-time non-uniformity correction and defective pixel detection on a Xilinx XC2S1500 FPGA using a thresholding criterion based on the mean ± 3 standard deviations of the pixel values within a neighborhood. In [36], the authors implemented a combination of offline and online defective pixel detection and correction on a Lattice Semiconductor ECP2 FPGA. In each frame, they computed the difference between the current pixel and the average of its neighbors and then compared it to a threshold in order to select candidate defective pixels; a pixel detected in consecutive frames was considered defective. In [39], the authors used the median, standard deviation, range, interquartile range, and other statistical dispersion parameters of pixel blocks to detect and correct defective pixels in an image. Running their algorithm on a MicroBlaze soft processor embedded in a Xilinx VC707 FPGA, they obtained results of only slightly lower quality than those in [12] with a 3.5 times shorter computation time. Tchendjou et al. [13] presented a heterogeneous architecture on a Xilinx ZC702 device implementing three detection algorithms: the first was the algorithm presented in [12]; the second was similar to the first, except that it computed the estimated pixel value as the median of the neighborhood window; and the third, described in [39], was faster and achieved slightly better results than the first two, though it used more hardware resources. Moreover, the last algorithm required five parameters to be determined empirically based on the images in the dataset.
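As an illustration of the kind of neighborhood statistic used by Kumar et al. [40], the following Python sketch flags a pixel when it deviates from the mean of its neighbors by more than three standard deviations. It is not their FPGA design: the 3 × 3 window, the exclusion of the center pixel from the statistics, the edge-replicated borders, and the function name `flag_outliers_3sigma` are assumptions made for illustration.

```python
import numpy as np

def flag_outliers_3sigma(img, k=3.0):
    """Flag pixels lying outside mean +/- k*std of their 8 neighbours.

    Hypothetical sketch: 3x3 window, centre pixel excluded from the
    statistics, borders handled by edge replication.
    """
    img = np.asarray(img, dtype=float)
    padded = np.pad(img, 1, mode="edge")
    flags = np.zeros(img.shape, dtype=bool)
    for y in range(img.shape[0]):
        for x in range(img.shape[1]):
            window = padded[y:y + 3, x:x + 3].ravel()
            neighbours = np.delete(window, 4)      # drop the centre pixel
            mu, sigma = neighbours.mean(), neighbours.std()
            flags[y, x] = abs(img[y, x] - mu) > k * sigma
    return flags
```

In a perfectly flat neighborhood the standard deviation is zero, so this sketch flags any deviation at all there; a practical implementation would likely add a minimum threshold.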

Despite the significant advantages of the architectures described above, these custom digital processors operate outside the CIS, accessing the pixel values sequentially after analog-to-digital conversion. This prevents them from fully exploiting the intrinsic data parallelism of the algorithm, reducing the throughput and increasing the latency of the implementation. Moreover, sequential access to the pixel values requires line buffers in the digital processor to implement window-based operations. As an alternative, all or part of the operations of the algorithm can be performed in an SIS at the pixel or column level, usually via analog circuits integrated into the image sensor [41,42,43]. This approach greatly increases the parallelism of the implementation, though at the cost of increasing the pixel size or decreasing the area available for photodetectors. Ginhac et al. [44] reported a dynamically configurable parallel processor array integrated into a CMOS image sensor. A single-instruction multiple-data architecture enabled programmable mask-based image processing computation on each pixel. Garcia-Lamont [45] described an analog SIS that computes the Prewitt edge detection algorithm using a simple arithmetic analog circuit integrated with each photodetector in the imaging array. Suárez et al. [46] presented an SIS for Gaussian pyramid extraction with per-pixel analog processing circuits and local analog memories. Gottardi et al. [47] proposed a vision sensor that computes a four-bit Local Binary Pattern (LBP) for each pixel during the integration time. They demonstrated the use of these LBPs to perform software-based image description and retrieval.

To reduce the impact of pixel-level processing on the pixel area and photodetector utilization, a number of works have been proposed in the literature implementing computation at the column level [48,49,50,51,52]. Young et al. [48] presented an SIS that used column-parallel readout analog circuits to compute histograms of oriented gradients for object detection. Jin et al. [49] proposed an edge detection SIS that used column-level analog circuits and digital static memory to compare pixels in adjacent columns. In [50], the authors presented a vision sensor that computed frame differences and background subtraction for motion sensing and object segmentation. Hsu et al. [51] presented an SIS for feature extraction that computed the convolution between the image and a kernel, with the programmable weights represented as analog currents and the pixels as pulse-width modulated voltages. In our own previous work [52,53], we combined pixel- and column-level analog computation during photocurrent integration using a custom digital processor. In [52], the analog circuits were used to compute a simplified version of LBP for use by the digital coprocessor in performing facial recognition on images. In [53], we used pixel-level analog memory to compute frame differences and motion estimation, with the digital processor using a connected components algorithm to detect objects in motion.

The research described above shows the importance of detecting and correcting defective pixels before displaying or processing an image. While most hardware implementations of these algorithms have used digital programmable logic devices, research shows that implementing the computation at the pixel or column level can greatly improve the parallelism of the implementation. Moreover, performing computation in the analog domain during photocurrent integration can reduce the impact of device mismatch and nonlinearity on the accuracy of the results and lower the area overhead of the computation circuits.

3. Methods

The circuits in our SIS implement detection and correction algorithms for defective pixels. We detect defective pixels by comparing each pixel value to those of its four neighbors (north, south, east, and west). The correction algorithm simply replaces the defective pixel value with the median of the same neighbors. In the rest of this section, we describe our proposed defective pixel detection algorithm.

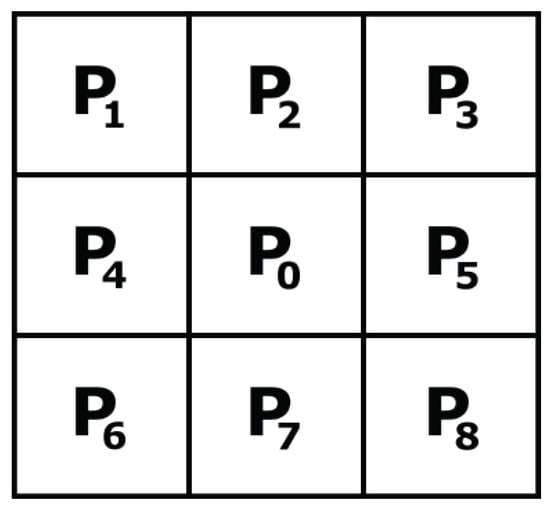

Our proposed algorithm for detecting defective pixels modifies the algorithm presented in [12] to make it more suitable for implementation in analog hardware. The original algorithm operates within a single image frame, first defining a 3 × 3-pixel window centered on the pixel under test, denoted as $p_0$, as shown in Figure 1. If $p_0$ has neither the smallest nor the largest value in the window, the algorithm assumes that the pixel is not defective and continues with the next pixel in the image. Otherwise, $p_0$ is marked as possibly defective, and the algorithm computes an estimate of its value as the average of its eight neighbors, as described in Equation (1):

where $\hat{p}_0$ is the estimated value for $p_0$ and $p_1$–$p_8$ are its neighboring pixels in the 3 × 3-pixel window. Then, the algorithm computes the average of and the difference between $p_0$ and $\hat{p}_0$, as described in Equations (2) and (3):

Figure 1.

3 × 3-pixel window used by the defective pixel detection algorithm; $p_0$ is the pixel under test and $p_1$–$p_8$ are its neighbors.

The algorithm marks $p_0$ as a defective pixel when the difference from Equation (3) is greater than the absolute difference between the average from Equation (2) and either the minimum (0) or the maximum pixel value, as described in Equations (4) and (5):

Here, the maximum pixel value is the largest value that any pixel in the image can take.

Assuming that $p_0 < \hat{p}_0$ and substituting Equations (2) and (3) into Equations (4) and (5), the algorithm marks $p_0$ as a dead pixel when either of the conditions in Equations (6) and (7) is met:

Likewise, when $p_0 > \hat{p}_0$, substituting Equations (2) and (3) into Equations (4) and (5), the algorithm marks $p_0$ as a hot pixel when it meets either of the conditions in Equations (8) and (9):
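The substitutions described above can be worked through as follows. This derivation assumes the unweighted eight-neighbor average for Equation (1) and writes $m$ for the average of Equation (2), $d$ for the absolute difference of Equation (3), and $M$ for the maximum pixel value; these symbol names are our own, and the paper's Equations (6)–(9) may be written in a different but equivalent form.

```latex
\begin{aligned}
\hat{p}_0 &= \frac{1}{8}\sum_{i=1}^{8} p_i, \qquad
m = \frac{p_0 + \hat{p}_0}{2}, \qquad
d = \lvert p_0 - \hat{p}_0 \rvert,\\
\text{defective} &\iff d > m \;\lor\; d > M - m.\\[4pt]
\text{Dead } (p_0 < \hat{p}_0,\; d = \hat{p}_0 - p_0):\quad
d > m &\iff \hat{p}_0 > 3p_0, \qquad
d > M - m \iff 3\hat{p}_0 - p_0 > 2M.\\
\text{Hot } (p_0 > \hat{p}_0,\; d = p_0 - \hat{p}_0):\quad
d > m &\iff p_0 > 3\hat{p}_0, \qquad
d > M - m \iff 3p_0 - \hat{p}_0 > 2M.
\end{aligned}
```

For example, in the dead case, $\hat{p}_0 - p_0 > (p_0 + \hat{p}_0)/2$ multiplied by 2 gives $2\hat{p}_0 - 2p_0 > p_0 + \hat{p}_0$, that is, $\hat{p}_0 > 3p_0$; the other three conditions follow in the same way.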

In order to simplify the hardware implementation of the algorithm, we propose the following changes to the original algorithm:

1. We compute the estimated value of $p_0$ using only four pixels of the 3 × 3-pixel neighborhood: its north, south, east, and west neighbors.

2. Instead of computing whether $p_0$ has the maximum or minimum value in the neighborhood, we mark $p_0$ as possibly dead when its value falls below a lower threshold and as possibly hot when it exceeds an upper threshold [18,54,55].

3.

4.

5.

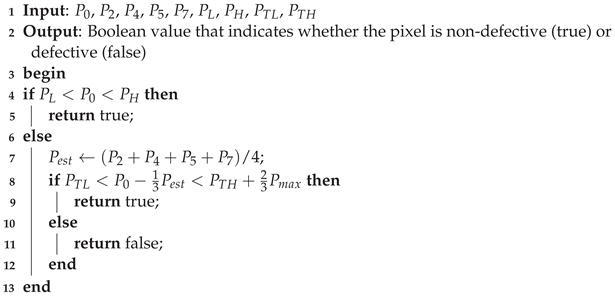

In summary, Algorithm 1 shows our proposed defective pixel detection algorithm. The next section describes the analog CMOS implementation of our detection and correction algorithms.

Algorithm 1: Proposed detection method.
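The detection flow described in this section can be sketched in Python as follows. The four-neighbor estimate and the threshold pre-filter on $p_0$ follow the text; the threshold values, the final test on $p_0 - \hat{p}_0$ (used here only as a stand-in for the final detection conditions of Algorithm 1), the edge-replicated borders, and the function name `detect_defects` are hypothetical.

```python
import numpy as np

def detect_defects(img, t_low, t_high, d_dead, d_hot):
    """Hypothetical sketch of the modified detection flow.

    t_low, t_high : pre-filter limits on p0 (possibly dead / possibly hot).
    d_dead, d_hot : ASSUMED margins on p0 - p_hat, a stand-in for the final
                    detection conditions of Algorithm 1.
    Returns a boolean map, True where a pixel is flagged as defective.
    """
    img = np.asarray(img, dtype=float)
    p = np.pad(img, 1, mode="edge")   # border handling is an assumption
    # Four-neighbour estimate of p0 (north, south, west, east)
    p_hat = (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]) / 4.0
    diff = img - p_hat
    # Pre-filter replacing the min/max search of the original algorithm
    possibly_dead = img < t_low
    possibly_hot = img > t_high
    # Final test (assumed form): far enough below/above the neighbour estimate
    dead = possibly_dead & (diff < -d_dead)
    hot = possibly_hot & (diff > d_hot)
    return dead | hot
```

With a 5 × 5 frame of value 120 containing one 0-valued and one 255-valued interior pixel, limits of 30 and 225, and margins of 50, this sketch flags exactly those two pixels, while a uniformly dark region is not flagged because its values match the neighbor estimate.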

4. Defective Pixel Detection and Correction Circuits

In the proposed readout circuit, the defective pixel detection stage of the algorithm is implemented in the analog domain at the pixel level. The defective pixel correction stage operates at the column level, again in the analog domain. This allows us to reduce the number of transistors used to implement the algorithm, thereby increasing the fill factor of the imager (i.e., the ratio between the die area occupied by the photodetector and the area of the entire pixel circuit) and reducing its power consumption.

4.1. Defective Pixel Detection Circuit

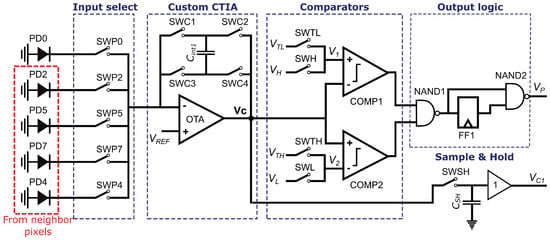

Each pixel in the imager is composed of a photodetector and a defective pixel detection circuit. This circuit receives as input the current from its own photodetector PD0 and its four neighbors, PD2, PD5, PD7, and PD4, as shown in Figure 3, and produces a single-bit output that signals whether the pixel is defective or not. The circuit is based on a Capacitive Transimpedance Amplifier (CTIA), which integrates a photodetector current and produces a voltage that represents the intensity of the incident light at the pixel during the integration time. Compared to other readout circuits, the CTIA has a larger dynamic range and higher linearity due to the high gain of its Operational Transconductance Amplifier (OTA) [56,57]. We used a conventional two-stage OTA [58,59] for the CTIA and the two comparators.

Figure 3.

Defective pixel detection circuit.

The circuit shown in Figure 3 first compares the value of the current pixel $p_0$ to the lower and upper limits, as required by Line Four of Algorithm 1, and then evaluates Equations (11) and (12). The circuit output is a logic 0 when $p_0$ is a defective pixel.

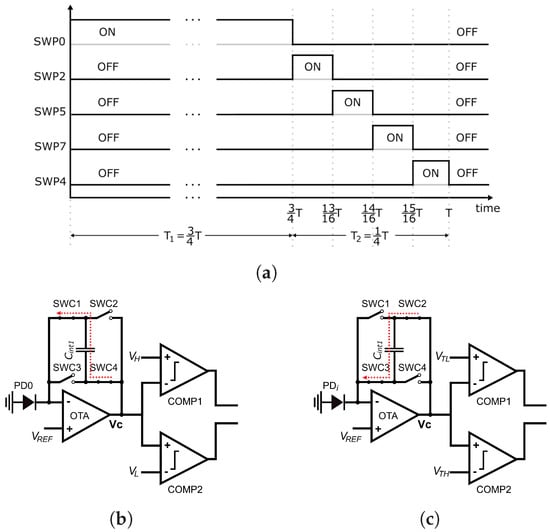

During the integration time T, the circuit operates in two phases. Figure 4a shows the activation of the switches SWP0, SWP2, SWP5, SWP7, and SWP4 that select the input to the defective pixel detection circuit of Figure 3 during the integration time. As shown in the figure, Phase 1 occupies the first part of the integration time and Phase 2 the remainder. Figure 4b shows the equivalent circuit of the defective pixel detector during Phase 1. Switch SWP0 in the Input Select block of Figure 3 is closed and the other input select switches are open, providing the output current of photodetector PD0 as the input to the Custom CTIA block. In this block, switches SWC1 and SWC4 are closed; thus, the CTIA integrates the current generated by photodetector PD0 in the positive direction. At the end of Phase 1, the output voltage of the CTIA is given by Equation (13):

where the terms denote the integration capacitance, the PD0 photodetector current, and the CTIA output voltage at the end of Phase 1. An appropriate choice of the CTIA reference voltage guarantees that the OTA output signal is symmetrical and has maximum dynamic range. In the Comparators block, switches SWH and SWL are closed; thus, at the end of Phase 1, comparators COMP1 and COMP2 compare the OTA output against the upper and lower limits, respectively, and flip-flop FF1 stores a logic 1 if the output lies outside this range (Line Four of Algorithm 1). At the end of Phase 1, the CTIA output voltage is also stored in the sample-and-hold circuit shown at the bottom right corner of Figure 3 for later use by the defective pixel correction circuit.

Figure 4.

Input-select switch states and equivalent circuits for defective pixel detection during Phases 1 and 2 of circuit operation. The output logic stage is omitted for clarity. (a) Input-select switch states during Phases 1 and 2. (b) Equivalent circuit during Phase 1. (c) Equivalent circuit during Phase 2. In (c), the input photodetector alternates between PD2, PD5, PD7, and PD4 during Phase 2.

Figure 4c shows the equivalent circuit during Phase 2. At the start of this phase, the voltage across the integration capacitor represents the value of $p_0$. At this point, switches SWC1 and SWC4 are opened and switches SWC2 and SWC3 are closed, inverting the polarity of the output. The circuit divides Phase 2 into four equal time intervals. As shown in Figure 4a, only one of switches SWP2, SWP5, SWP7, and SWP4 is closed in each time interval; thus, the CTIA successively integrates the currents from photodetectors PD2, PD5, PD7, and PD4 in the negative direction. At the end of Phase 2, the output voltage is given by Equation (14):

where the four additional current terms are the output currents of photodetectors PD2, PD5, PD7, and PD4.

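At this point, the CTIA output voltage reflects both the value of $p_0$ integrated during Phase 1 and the neighbor currents integrated during Phase 2. Therefore, instead of evaluating Equations (11) and (12), the Comparators block evaluates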

by closing switches SWTH and SWTL and opening SWH and SWL. In Figure 4c, the voltages and represent and , respectively. The output of comparators COMP1 and COMP2 is a logic 0 when the corresponding conditions in Equations (15) and (16) are met, producing a logic 1 at the output of gate NAND1.
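To make the two-phase integration concrete, the following idealized Python model integrates the PD0 current upward during Phase 1 and the four neighbor currents downward during Phase 2. It assumes an ideal, unsaturated CTIA, a Phase 1 of duration T/2 followed by four T/8 slots (the phase durations are an assumption), the T = 16 μs and 100 fF values of Section 5.1, and a hypothetical 1.65 V reference; the function name is ours. Under these assumptions, the final output minus the reference is proportional to the difference between the PD0 current and the mean neighbor current, because Phase 1 lasts exactly as long as the four neighbor slots combined.

```python
def ctia_two_phase(i0, neighbours, T=16e-6, C=100e-15, v_ref=1.65):
    """Ideal model of the two-phase CTIA integration (no saturation, no offsets).

    Assumptions: Phase 1 lasts T/2, Phase 2 is split into four T/8 slots,
    and v_ref is a hypothetical mid-supply reference voltage.
    Returns (voltage at end of Phase 1, voltage at end of Phase 2).
    """
    if len(neighbours) != 4:
        raise ValueError("expected the four neighbour photocurrents")
    t_phase1 = T / 2
    t_slot = T / 8
    v1 = v_ref + i0 * t_phase1 / C      # Phase 1: PD0 integrated in the positive direction
    v2 = v1
    for i_k in neighbours:              # Phase 2: one neighbour per slot, negative direction
        v2 -= i_k * t_slot / C
    return v1, v2
```

For example, a 2 nA PD0 current with four 10 nA neighbors ends Phase 1 at about 1.81 V and Phase 2 about 0.64 V below the reference under these assumptions.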

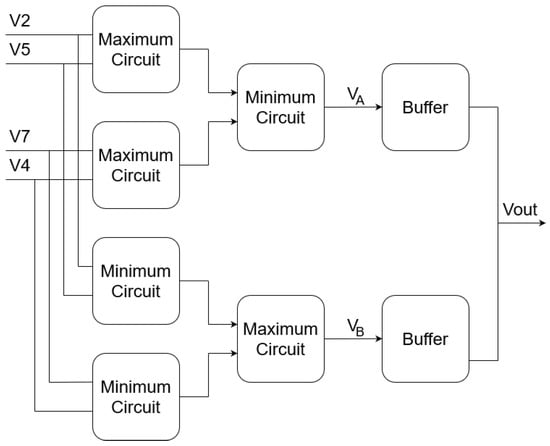

4.2. Defective Pixel Correction Circuit

Figure 5 shows the block diagram of the defective pixel correction circuit. Its four inputs are the output voltages of the configurable CTIA stage (see Figure 3) of the four neighboring pixels. The circuit is a median filter that computes the median value of its four inputs using a two-stage analog sorting network, followed by an output stage that computes the average of the two central values after sorting:

where

Figure 5.

Block diagram of defective pixel correction circuit.
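The median computed by this circuit can be checked against an ideal software model of a two-stage min/max network. The first stage orders each input pair; because the overall minimum and maximum must then be min(lo1, lo2) and max(hi1, hi2), the two central values are max(lo1, lo2) and min(hi1, hi2). The sketch below assumes this pairing, models ideal comparators rather than the transistor-level circuits of Figure 6, and uses a function name of our own.

```python
def median4(a, b, c, d):
    """Median of four values via an ideal two-stage min/max sorting network."""
    # Stage 1: order each input pair
    lo1, hi1 = min(a, b), max(a, b)
    lo2, hi2 = min(c, d), max(c, d)
    # Stage 2: min(lo1, lo2) is the overall minimum and max(hi1, hi2) the
    # overall maximum, so the two central values (in either order) are:
    central_a = max(lo1, lo2)
    central_b = min(hi1, hi2)
    # Output stage: average of the two central values
    return (central_a + central_b) / 2
```

Only six two-input min/max operations are needed, which is what makes the structure attractive for analog implementation; comparing the result against sorted-list medians for all small-integer inputs is an easy way to confirm the pairing.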

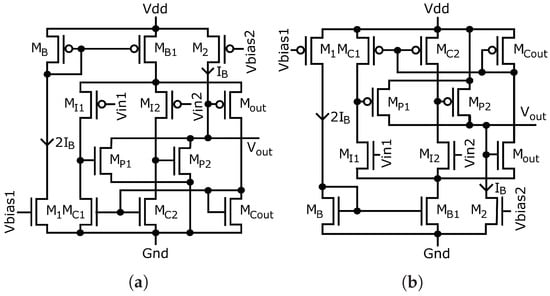

The buffers are implemented using a single-stage OTA [58,59], and the sorting network is composed of circuits that compute the minimum and maximum value of their two inputs. Figure 6a and Figure 6b show the minimum and maximum circuits, respectively.

Figure 6.

Circuit diagram to determine the minimum and maximum values between two voltages: (a) Minimum circuit diagram. (b) Maximum circuit diagram.

Figure 6 shows the circuits that compute the minimum and maximum operations used in Equations (18) and (19), which were proposed by Soleimani [60]. Both circuits consist of a differential amplifier stage composed of , , , , , and , along with a common-source stage with an active load, composed of , and . Because the operation of both circuits is similar, we explain only the operation of the minimum-value circuit shown in Figure 6a. When , is almost off and the drain currents of and are equal to because of the current mirror formed by and . Due to the state of , the gate voltage of is very small, and this transistor is almost turned off as well. Therefore, all of current from passes through . Because the current passing through and has the same value , the gate voltages of both transistors are the same. Finally, the source and drain voltages and drain current of and are equal, which results in . The same reasoning applies when , in which case . The operation of the circuit in Figure 6b follows the same principle, except with computation of the maximum value between the two inputs and .

4.3. General Readout Circuit

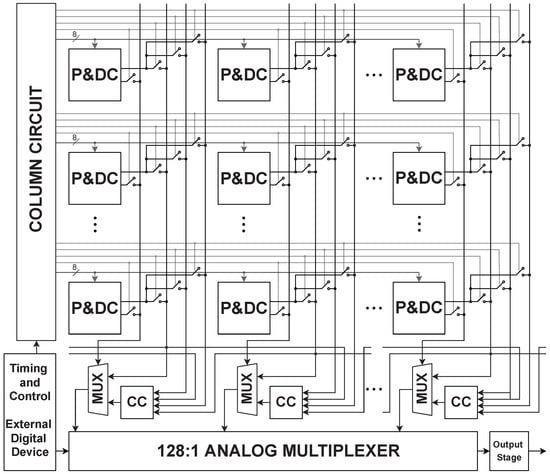

Figure 7 shows the smart pixel array. Each smart pixel, denoted as P&DC, includes a photodetector and a defective pixel detection circuit. Each column includes a defective pixel correction circuit (CC) and a 2:1 analog multiplexer. An external digital device generates the timing and control signals for the array and for the 128:1 analog multiplexer that produces the output.

Figure 7.

Smart pixel array.

Each P&DC circuit has two outputs: the first is the output voltage of the defective pixel detection circuit at the end of Phase 1, and the second is the flag that indicates whether the pixel is defective. Each column reads the first output of three smart pixels, namely the center ($p_0$), top, and bottom pixels of the 3 × 3-pixel window in Figure 1, as well as the defect flag of the central pixel. The pixel values are stored in a sample-and-hold circuit at the end of Phase 1. The defective pixel correction circuit uses the top and bottom outputs along with the center-row outputs of the two neighboring columns to compute the corrected pixel value. The 2:1 multiplexer selects between the value of $p_0$ and the corrected value depending on the logic value of the defect flag, which indicates whether or not $p_0$ is defective. The 128:1 analog multiplexer serially outputs all the pixel voltage values within each row.
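At the array level, the readout behaves like the following NumPy sketch: each flagged pixel is replaced with the median of its four raw neighbors, and a 2:1 selection passes every other pixel through unchanged. Edge replication at the array borders and the function name `correct_frame` are assumptions; the text does not describe border handling.

```python
import numpy as np

def correct_frame(img, defective):
    """Replace flagged pixels with the median of their N, S, W, E neighbours."""
    img = np.asarray(img, dtype=float)
    p = np.pad(img, 1, mode="edge")          # border handling is an assumption
    neighbours = np.stack([
        p[:-2, 1:-1],   # north
        p[2:, 1:-1],    # south
        p[1:-1, :-2],   # west
        p[1:-1, 2:],    # east
    ])
    # Median of four values = mean of the two central values
    corrected = np.median(neighbours, axis=0)
    # 2:1 multiplexer: corrected value only where the pixel is flagged
    return np.where(defective, corrected, img)
```

Using the raw (uncorrected) neighbor values mirrors the hardware, where the neighbor voltages come from sample-and-hold circuits rather than from already-corrected outputs.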

5. Results

5.1. Physical Layout

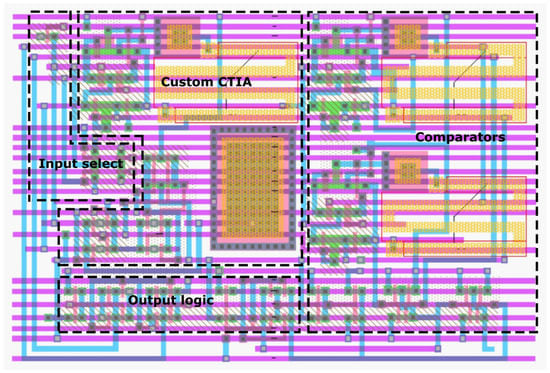

Figure 8 shows the physical layout of the defective pixel detection circuit in a 0.35 μm mixed-signal CMOS process with three metal layers, two polysilicon layers, and a supply voltage.

Figure 8.

Layout of the defective pixel detection circuit.

We used a double-poly capacitor with a capacitance per unit area of 950 aF/μm². Assuming an integration time T = 16 μs (Section 4.1) and a maximum photodetector current of 14 nA, the pixel requires a 100 fF integration capacitor of μm by μm. The area of the complete defective pixel detection circuit is μm by μm. Considering a μm by μm pixel [61], the circuit achieves a fill factor of . In comparison, the fill factor without these additional stages is . Only two wires are required between neighboring cells, each transporting photocurrent in a different direction, because, as described in Section 4.1, the defective pixel detection circuit implements Equations (10)–(12) using time multiplexing, reading the photocurrent of only one pixel at a time. Moreover, because at most one of the two wires is in a low-impedance state at any given time, crosstalk effects are reduced.
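The capacitor figures above can be cross-checked with a few lines of arithmetic. The sketch assumes a square capacitor plate and reports the ideal voltage swing for the maximum current integrated over either all of T or half of it; the half-T case is an assumption about the Phase 1 duration.

```python
import math

C_PER_AREA = 0.95e-15    # F/um^2 (950 aF/um^2 double-poly capacitor)
C_INT = 100e-15          # F, integration capacitor
I_MAX = 14e-9            # A, maximum photodetector current
T = 16e-6                # s, integration time

area_um2 = C_INT / C_PER_AREA            # plate area needed for 100 fF
side_um = math.sqrt(area_um2)            # side length if the plate is square (assumption)
swing_full = I_MAX * T / C_INT           # ideal swing if I_MAX is integrated for all of T
swing_half = I_MAX * (T / 2) / C_INT     # ideal swing if Phase 1 lasts T/2 (assumption)

print(f"area = {area_um2:.1f} um^2, side = {side_um:.2f} um")
print(f"swing = {swing_half:.2f} V (T/2) or {swing_full:.2f} V (T)")
```

This gives an area of about 105 μm² (about 10.3 μm per side for a square plate) and ideal swings of 1.12 V and 2.24 V, respectively.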

Although the results presented in this section use the 0.35 μm physical design described above, we additionally evaluated the scalability of our circuits by porting the 0.35 μm physical design to a standard 0.18 μm CMOS process frequently used in the literature [62,63,64,65]. The 0.18 μm process uses a supply voltage and features metal–insulator–metal capacitors with a capacitance per unit area of 2 fF/μm². The total area of the defective pixel detection circuit in the 0.18 μm process is μm by μm, which achieves a fill factor of in the same μm by μm pixel. Without the comparators and output logic stages, the fill factor is .

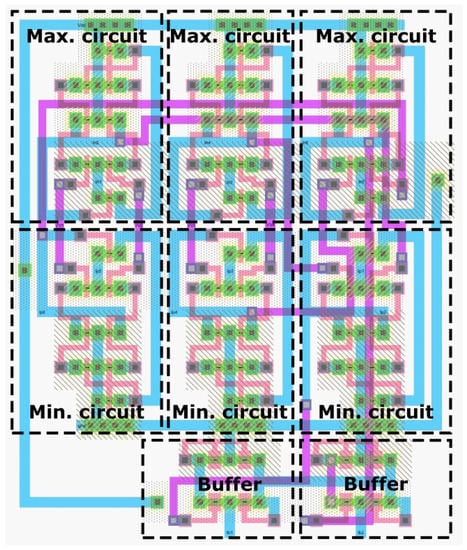

Figure 9 shows the layout of the defective pixel correction circuit, which has an area of μm by μm in the 0.35 μm process and μm by μm in the standard 0.18 μm CMOS process.

Figure 9.

Layout of defective pixel correction circuit.

5.2. Algorithm Parameters

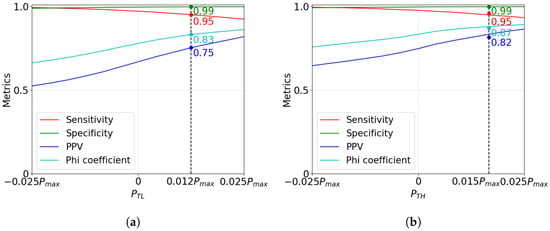

To select the values of and in Algorithm 1, we used 250 images with different levels of brightness, contrast, and dynamic range randomly selected from the Linnaeus 5 dataset [32]. To determine the value for , we randomly added 0.5% dead pixels to the image with values between 0 and . Similarly, we added 0.5% hot pixels (between and ) to determine the value of .

We simulated the performance of the algorithm as a function of the values of and in the interval . We evaluated the performance of the algorithm using four metrics: Sensitivity (Se), Specificity (Sp), precision or Positive Predictive Value (PPV), and the Phi coefficient (φ), defined as follows:

where TP is the number of true positives (defective pixels correctly detected by the algorithm), TN is the number of true negatives, FP is the number of false positives, and FN is the number of false negatives. Sensitivity quantifies the fraction of truly defective pixels that the algorithm detects; Specificity expresses the fraction of good pixels correctly identified as such by the algorithm; PPV is the precision of the algorithm, that is, the fraction of pixels marked as defective that are truly defective; and the φ coefficient is a special case of the Pearson correlation coefficient, computed between the true and predicted binary labels, that summarizes all four counts in a single value.
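A minimal Python version of these four metrics, using the standard definitions Se = TP/(TP + FN), Sp = TN/(TN + FP), PPV = TP/(TP + FP), and φ = (TP·TN − FP·FN)/√((TP + FP)(TP + FN)(TN + FP)(TN + FN)), is shown below. The helper names are ours, and any counts used to exercise it are illustrative rather than results from this paper.

```python
import math
import numpy as np

def confusion_counts(truth, pred):
    """Count TP, TN, FP, FN from boolean defect maps (True = defective)."""
    truth = np.asarray(truth, dtype=bool)
    pred = np.asarray(pred, dtype=bool)
    tp = int(np.sum(truth & pred))
    tn = int(np.sum(~truth & ~pred))
    fp = int(np.sum(~truth & pred))
    fn = int(np.sum(truth & ~pred))
    return tp, tn, fp, fn

def detection_metrics(tp, tn, fp, fn):
    """Return (Se, Sp, PPV, phi) using the standard definitions."""
    se = tp / (tp + fn)                  # fraction of defective pixels detected
    sp = tn / (tn + fp)                  # fraction of good pixels kept as good
    ppv = tp / (tp + fp)                 # fraction of flagged pixels truly defective
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    phi = (tp * tn - fp * fn) / denom
    return se, sp, ppv, phi
```

These functions raise ZeroDivisionError when a denominator is zero (for example, an image with no flagged pixels), which a full evaluation would need to handle.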

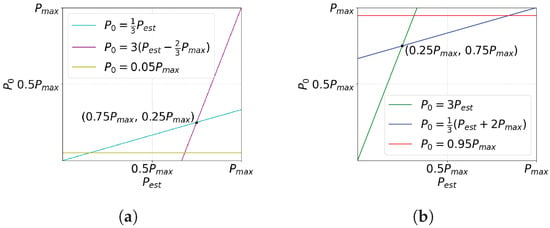

Figure 10a and Figure 10b respectively show the four metrics averaged over the 250 images for a range of and values. Figure 10a shows the performance of the algorithm when detecting dead pixels, and Figure 10b depicts the performance metrics when detecting hot pixels. These graphs show that increasing the value of and improves the and metrics and degrades , while the Specificity remains approximately constant at . Based on these results, we chose and , which resulted in a sensitivity of . With these values, we achieve and when detecting dead pixels and and when detecting hot pixels.

Figure 10.

Performance of the algorithm using Sensitivity, Specificity, PPV, and Phi coefficient as functions of and : (a) Performance of the algorithm as a function of . (b) Performance of the algorithm as a function of .

5.3. Post-Layout Simulation Results

We randomly selected 500 images with different levels of brightness, contrast, and dynamic range from the Linnaeus 5 dataset [32] to create the images used to evaluate the detection and correction algorithms. This set of test images is different from the set of training images used to obtain the values of the algorithm parameters. All images have the same resolution, matching the size of the proposed CMOS readout circuit array. The most common defect pattern is a random distribution of dead and hot pixels in an image. In this paper, we use two variants of this pattern, which differ in the percentage of all pixels in the image that are defective. Furthermore, we require the dead and hot pixel values to lie in the lowest and highest portions, respectively, of the full dynamic range. These values are consistent with the evaluation of other algorithms reported in the literature [12,13,17,18,19].
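Test images of this kind can be generated with a sketch such as the following. The defect fraction, the width of the dead and hot bands, the even split between dead and hot pixels, the 8-bit maximum value, and the function name `add_random_defects` are all placeholder choices, not the exact settings used in this paper.

```python
import numpy as np

def add_random_defects(img, fraction, band=0.1, max_val=255.0, seed=None):
    """Corrupt a random `fraction` of pixels with dead and hot values.

    Placeholder choices: half of the defects are dead, half hot; dead values
    are drawn from [0, band*max_val) and hot values from [(1-band)*max_val, max_val).
    Returns the corrupted image and a boolean ground-truth defect mask.
    """
    rng = np.random.default_rng(seed)
    out = np.asarray(img, dtype=float).copy()
    flat = out.reshape(-1)                      # view into `out` (contiguous copy)
    n = int(round(fraction * flat.size))
    idx = rng.choice(flat.size, size=n, replace=False)
    dead_idx, hot_idx = idx[: n // 2], idx[n // 2 :]
    flat[dead_idx] = rng.uniform(0.0, band * max_val, size=dead_idx.size)
    flat[hot_idx] = rng.uniform((1.0 - band) * max_val, max_val, size=hot_idx.size)
    truth = np.zeros(flat.size, dtype=bool)
    truth[idx] = True
    return out, truth.reshape(out.shape)
```

Returning the ground-truth mask alongside the corrupted image makes it straightforward to compute the confusion counts behind the metrics of Section 5.2.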

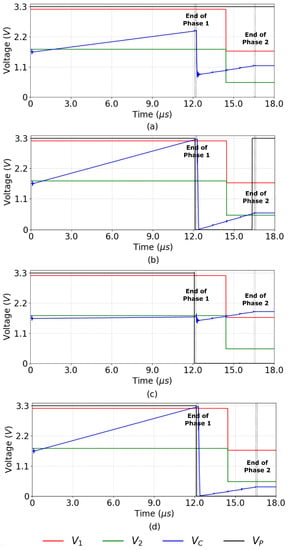

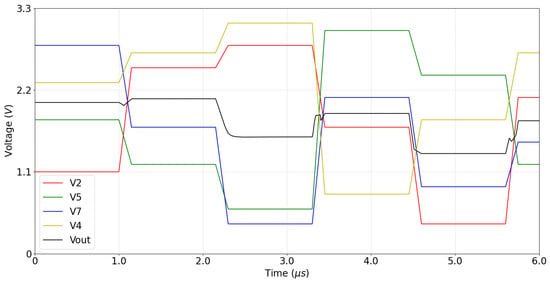

Figure 11 shows a post-layout simulation of the defective pixel detection circuit. The graphs in the figure show the voltages at four nodes of the circuit shown in Figure 3: the output of configurable CTIA, the reference inputs and of the comparators COMP1 and COMP2, and the output , which is a logic 1 when the pixel is not defective. Figure 11a shows an example where the input pixel is not defective and passes the test in Line Four of Algorithm 1 (). At , FF1 is preset to 1 and capacitor is discharged. Between and s, the circuit operates in Phase 1, integrating the value of the input pixel , and the switches at the input of COMP1 and COMP2 select and as reference voltages. At s, both comparators output a logic 1, because and ; thus, FF1 stores a logic 0 and . At the same time, switch is opened to sample the pixel value. At s, the CTIA changes its integration direction and the circuit initiates Phase 2. Switch SWP0 opens and, for the next four s-intervals, switches SWP2, SWP5, SWP7, and SWP4 select the neighboring pixels as inputs to the CTIA. At s, the input switches of COMP1 and COMP2 select and . At s, Phase 2 ends and COMP1 and COMP2 output a logic 1 (the input pixel passes the test in Line Eight of Algorithm 1), and .

Figure 11.

Post-layout simulation of the defective pixel detection circuit: (a) a good pixel that passes the test in Line Four of Algorithm 1; (b) a good pixel that fails the test in Line Four of Algorithm 1; (c) a dead pixel; (d) a hot pixel.

Note that in Figure 11a it is actually irrelevant whether or not passes the test in Line Eight, because it has already passed the test in Line Four. In contrast, Figure 11b shows a case where the does not pass the test in Line Four of Algorithm 1, because . Nevertheless, the pixel is not defective because it does pass the test in Line Eight. At the end of Phase 1, COMP1 outputs a logic 0 and COMP2 outputs a 1; therefore, FF1 stores a logic 1 (marking the pixel as potentially defective) and . However, at the end of Phase 2, both comparators output a logic 1 because the pixel passes the test in Line Eight; thus, NAND1 in Figure 3 outputs a logic 0 and , marking the pixel as not defective.

Figure 11c,d present examples where does not pass the test in Line Four of Algorithm 1, here because or , respectively. Furthermore, in both examples does not pass the test in Line Eight, because or , respectively. In the case illustrated in Figure 11c, at the end of Phase 1 COMP1 outputs a logic 1 and COMP2 outputs a 0 (). Therefore, FF1 stores a logic 1 and . At the end of Phase 2, COMP1 outputs a 0 because , while COMP2 outputs a 1. Thus, the first NAND in Figure 3 outputs a logic 1 and , marking the pixel as defective. In the case shown in Figure 11d, at the end of Phase 1, COMP1 outputs a logic 0 () and COMP2 outputs a 1; thus, FF1 stores a logic 1 and . At the end of Phase 2, COMP1 outputs a logic 1 and COMP2 outputs a 0, because . Thus, the first NAND in Figure 3 outputs a logic 1 and , marking the pixel as defective.
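The Boolean behavior described for the four cases of Figure 11 can be summarized with a small logic model. This is a hypothetical sketch: it abstracts COMP1 and COMP2 to their logic outputs at the end of each phase and assumes the final output equals NOT(FF1 AND NAND1). That expression reproduces every outcome described above, but it is not necessarily the gate-level structure of Figure 3, and the function names are illustrative.

```python
def ff1_after_phase1(comp1: bool, comp2: bool) -> bool:
    """FF1 is preset to 1 and stores 0 only if both comparators output 1 after Phase 1."""
    return not (comp1 and comp2)


def pixel_not_defective(p1_comp1: bool, p1_comp2: bool,
                        p2_comp1: bool, p2_comp2: bool) -> bool:
    """Return True (logic 1) when the pixel is considered good.

    A pixel is flagged as defective only if it fails the Phase 1 test
    (Line Four of Algorithm 1) AND the Phase 2 test (Line Eight).
    """
    ff1 = ff1_after_phase1(p1_comp1, p1_comp2)
    nand1 = not (p2_comp1 and p2_comp2)  # 1 when the Phase 2 test fails
    return not (ff1 and nand1)


# Comparator outputs (Phase 1 COMP1, COMP2; Phase 2 COMP1, COMP2) for Figure 11a-d.
cases = {
    "a: good, passes Line Four": (True, True, True, True),
    "b: good, fails Line Four": (False, True, True, True),
    "c: dead pixel": (True, False, False, True),
    "d: hot pixel": (False, True, True, False),
}
for name, outs in cases.items():
    print(name, "->", "good" if pixel_not_defective(*outs) else "defective")
```

The model also makes explicit why the Line Eight result does not matter in Figure 11a: once FF1 stores 0, the output is 1 regardless of the Phase 2 comparator outputs.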

Figure 12 shows a post-layout simulation of the median computation in the defective pixel correction circuit shown in Figure 5. In this test, we simulate the readout of five consecutive pixels of an image column. For each central pixel , the row-select circuit outputs the voltages of its four neighbors, denoted as , , , and in the figure. The voltage of each neighbor is provided by the sample-and-hold circuit at the bottom-right corner of Figure 3. As shown in Figure 12, we select a new pixel every s; after a delay of , the output voltage settles at the median value given by Equation (17), with a worst-case error of 14.8% of the pixel range. The delay is introduced by the minimum, maximum, and buffer circuits shown in Figure 5. The worst case occurs when the minimum or maximum circuit at the input changes its output between two consecutive pixels (e.g., when first and next ) and that change propagates through to . Moreover, the delay is maximal when the output voltage swing is close to . In this case, the worst-case settling time for is 800 ns.
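A software reference model of the column-level correction is useful for checking circuit outputs such as those in Figure 12. This sketch assumes that the median of four neighbors is the mean of the two middle values (which is what Python's `statistics.median` returns for four values); Equation (17) and the min/max circuit of Figure 5 may define the median differently. It also assumes that only flagged pixels are replaced, that neighbors are read from the uncorrected input, and that border pixels use only their in-array neighbors. The function name `correct_defects` is hypothetical.

```python
import statistics
from typing import List

Image = List[List[float]]


def correct_defects(img: Image, defective: List[List[bool]]) -> Image:
    """Replace each flagged pixel with the median of its up, down, left, and right neighbors."""
    rows, cols = len(img), len(img[0])
    out = [row[:] for row in img]  # neighbors are always read from the uncorrected input
    for i in range(rows):
        for j in range(cols):
            if not defective[i][j]:
                continue
            # Border handling (assumption): keep only neighbors that lie inside the array.
            neighbors = [img[r][c]
                         for r, c in ((i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1))
                         if 0 <= r < rows and 0 <= c < cols]
            # With four values, statistics.median returns the mean of the two middle ones.
            out[i][j] = statistics.median(neighbors)
    return out


img = [[10, 20, 30], [40, 255, 60], [70, 80, 90]]
mask = [[False] * 3 for _ in range(3)]
mask[1][1] = True  # hot pixel in the center
print(correct_defects(img, mask)[1][1])  # prints 50.0
```

Using a median rather than a mean keeps a single remaining outlier among the neighbors from dominating the replacement value.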

Figure 12.

Post-layout simulation of the defective pixel correction circuit.

Figure 11 shows that the defective pixel detection circuit operates in s, plus 50 ns to reset the integration capacitor after each detection. This circuit operates in parallel for all pixels in the image. During readout, the row-select circuit has a delay of 60 ns, the correction circuit has a worst-case delay of 800 ns at the column level, and the column-select circuit has a delay of 80 ns. With these delays, the readout time for the complete array is 1.44 ms, which allows images to be acquired and processed at a maximum frame rate of 694 fps.
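The frame-rate figure follows directly from the readout time. The sketch below assumes, as the text implies, that the full-array readout (and not the in-pixel detection, which runs in parallel) bounds the frame period; the constant and function names are illustrative.

```python
# Delays reported above for the readout path (seconds).
ROW_SELECT_S = 60e-9
CORRECTION_WORST_S = 800e-9
COLUMN_SELECT_S = 80e-9
READOUT_TIME_S = 1.44e-3  # full-array readout time reported above


def max_frame_rate(frame_time_s: float) -> float:
    """Upper bound on frames per second when each frame takes frame_time_s."""
    return 1.0 / frame_time_s


fps = max_frame_rate(READOUT_TIME_S)
print(f"max frame rate: {fps:.1f} fps")  # prints "max frame rate: 694.4 fps"

# The column-level median settles more slowly than either select circuit.
stage = max({"row select": ROW_SELECT_S, "correction": CORRECTION_WORST_S,
             "column select": COLUMN_SELECT_S}.items(), key=lambda kv: kv[1])
print("slowest readout stage:", stage[0])
```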

5.4. Algorithm and Circuit Performance

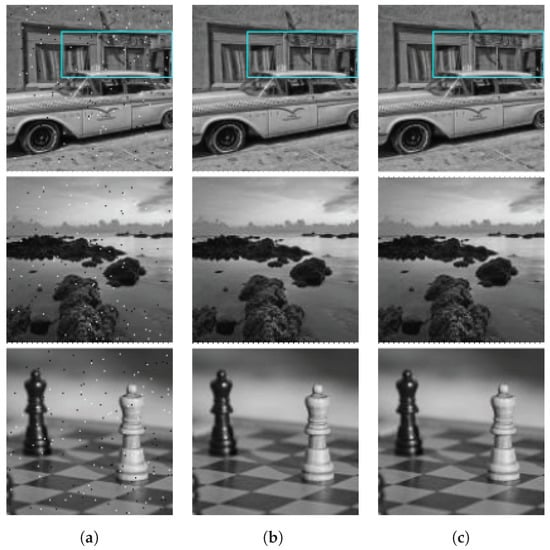

Figure 13 illustrates the operation of the proposed algorithm and circuit. Figure 13a shows three images taken from the Linnaeus 5 dataset [32] which were contaminated with salt-and-pepper noise randomly affecting 1% of the pixels. Dead pixels were generated in a range of , while hot pixels were generated in a range of . Figure 13b,c show the outputs of a software implementation of Algorithm 1 and our detection/correction circuit, respectively. In Figure 13b,c, false positive detections are highlighted in red and false negatives are marked in yellow.

Figure 13.

Original images taken from the Linnaeus 5 dataset [32] with defective pixels added randomly along with example images showing the results of the defective pixel detection and correction process: (a) Original images with defective pixels. (b) Results of the proposed algorithm. (c) Results of the readout circuit.

To produce the images in Figure 13c, pixel values in the input image were converted to currents in the range from 0 to 14 nA in order to simulate the operation of a photodetector. Then, we ran a post-layout Spice simulation of the circuits described in Section 4 to produce the output pixel values and the list of pixels marked as defective by the circuit. Finally, we converted the output pixel voltages in the range from 0 to 3.3 V to digital values between 0 and 255 in order to display the output images.
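The two conversions used to drive and read back the simulation can be sketched as follows. This assumes both mappings are linear and that 8-bit pixel value 255 corresponds to the full-scale 14 nA photocurrent and to the 3.3 V output; the text gives only the ranges, so the linearity, the rounding, and the clamping are assumptions, and the function names are hypothetical.

```python
PIXEL_MAX = 255
I_MAX_A = 14e-9  # full-scale photocurrent: 14 nA
V_MAX = 3.3      # full-scale output voltage: 3.3 V


def pixel_to_current(p: int) -> float:
    """Map an 8-bit pixel value to a photocurrent in [0, 14 nA] (assumed linear)."""
    return p / PIXEL_MAX * I_MAX_A


def voltage_to_pixel(v: float) -> int:
    """Map an output voltage in [0, 3.3 V] to an 8-bit value (assumed linear), clamped."""
    code = round(v / V_MAX * PIXEL_MAX)
    return min(max(code, 0), PIXEL_MAX)


print(pixel_to_current(128))  # current (A) used to stimulate a mid-gray pixel
print(voltage_to_pixel(2.0))  # displayed value for a 2.0 V output
```

Clamping guards against small offsets pushing a simulated output slightly outside the nominal 0 to 3.3 V range.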

A visual inspection of Figure 13 shows that the algorithm detects and corrects most defective pixels. Moreover, our pixel-correction circuit produces an output that is visually almost identical to the software implementation of the algorithm.

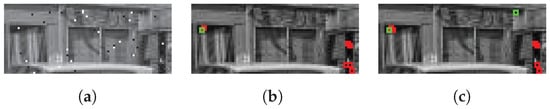

Figure 14 shows an enlarged version of the -pixel rectangle highlighted in cyan in the first image of Figure 13. Figure 14b,c show the output of the algorithm and the correction circuit, respectively, with false positive detections highlighted in red and false negatives marked in green. Of the 35 defective pixels in this region, the algorithm correctly detects 34 and the circuit detects 33. Moreover, of the 2940 good pixels, the algorithm and the circuit each produce only seven false positives, and their false positives differ in only one pixel. All the false positives and false negatives in Figure 14 correspond to dead pixels, which is explained by the mostly dark background of the image, against which dead pixels are hard to distinguish from their neighbors; these errors do not significantly affect the appearance of the image.
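The counts reported for the region in Figure 14 translate directly into the detection metrics used in Figure 15. This sketch assumes that Se, Sp, PPV, and φ follow their standard confusion-matrix definitions (Section 5.2 is not reproduced here), with φ computed as the Matthews correlation coefficient; `detection_metrics` is a hypothetical helper.

```python
import math


def detection_metrics(tp: int, fn: int, fp: int, tn: int):
    """Sensitivity, specificity, positive predictive value, and phi coefficient."""
    se = tp / (tp + fn)   # fraction of defective pixels that are detected
    sp = tn / (tn + fp)   # fraction of good pixels that are left alone
    ppv = tp / (tp + fp)  # fraction of detections that are truly defective
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    phi = (tp * tn - fp * fn) / denom
    return se, sp, ppv, phi


# Algorithm in the Figure 14 region: 34 of 35 defects found, 7 false positives among 2940 good pixels.
se, sp, ppv, phi = detection_metrics(tp=34, fn=1, fp=7, tn=2940 - 7)
print(f"Se={se:.4f} Sp={sp:.4f} PPV={ppv:.4f} phi={phi:.4f}")
```

Because good pixels vastly outnumber defective ones, Sp stays close to 1 even with several false positives, whereas PPV and φ are far more sensitive to them.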

Figure 14.

Zoom of a region of the original image taken from the Linnaeus 5 dataset [32] with false positive and false negative examples: (a) Noisy image. (b) Results of proposed algorithm. (c) Circuit output.

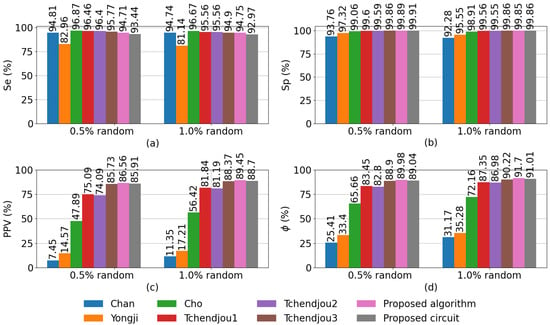

Figure 15 compares the performance of our algorithm and its hardware implementation with six other defective pixel detection algorithms, namely, those proposed by Chan [17], Yongji [19], and Cho [18]; the original algorithm proposed by Tchendjou et al. and its simplification [12] (Tchendjou1 and Tchendjou2, respectively); and the median-based version of Tchendjou1 [13] (Tchendjou3), which does not require dataset-dependent parameters. The figure plots the Se, Sp, PPV, and φ metrics, defined in Section 5.2, averaged over 500 -pixel images randomly selected from the Linnaeus 5 dataset [32], to which we added salt-and-pepper noise affecting 0.5% and 1.0% of the pixels. These images are different from the 250 images used to determine the values of and in Section 5.2. The hardware implementation results were obtained from post-layout Spice simulations.

Figure 15.

Comparison of defective pixel detection methods using (a) Sensitivity, (b) Specificity, (c) Positive Predictive Value (PPV), and (d) Phi coefficient.

Figure 15a shows that Cho achieves the highest sensitivity (96.87%), although all the algorithms except Yongji achieve a sensitivity above 93%. Figure 15b–d show that our algorithm and its hardware implementation achieve the highest Sp, PPV, and φ. In summary, the classification performance of our algorithm is competitive with the state-of-the-art algorithms compared in Figure 15, while relying on simple arithmetic operations that can be implemented efficiently in analog hardware, with performance comparable to that of the software implementation.

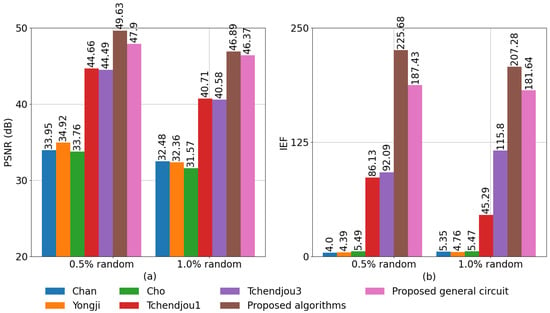

To assess the combined performance of the detection and correction algorithms and their hardware implementations, we used the Peak Signal to Noise Ratio (PSNR) and Image Enhancement Factor (IEF) metrics. The PSNR metric compares a reference image $I$ without defective pixels to an image $\hat{I}$ that results from applying a correction algorithm to a noisy version of $I$. The PSNR is defined by Equation (24):

$$\mathrm{PSNR} = 10\log_{10}\left(\frac{I_{\max}^{2}}{\mathrm{MSE}}\right) \tag{24}$$

where $I_{\max}$ is the maximum pixel value and MSE is the Mean Square Error, given by Equation (25):

$$\mathrm{MSE} = \frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\left(I(i,j)-\hat{I}(i,j)\right)^{2} \tag{25}$$

where $M$ and $N$ are the dimensions of the images.

IEF is computed as the ratio between the MSE of the noisy image and the MSE of the corrected image, using the noiseless image as a reference, as shown in Equation (26):

$$\mathrm{IEF} = \frac{\sum_{i=1}^{M}\sum_{j=1}^{N}\left(\tilde{I}(i,j)-I(i,j)\right)^{2}}{\sum_{i=1}^{M}\sum_{j=1}^{N}\left(\hat{I}(i,j)-I(i,j)\right)^{2}} \tag{26}$$

where $I$ and $\hat{I}$ are the noiseless and corrected images, respectively, and $\tilde{I}$ is the noisy image. If the correction algorithm reduces the MSE, then $\mathrm{IEF} > 1$; conversely, if the algorithm increases the MSE, then $\mathrm{IEF} < 1$.
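A direct implementation of Equations (24)–(26) helps make the two metrics concrete. This is a minimal pure-Python sketch with hypothetical function names, assuming 8-bit images so that the maximum pixel value defaults to 255; it returns infinity for the PSNR of identical images rather than dividing by zero.

```python
import math
from typing import List

Image = List[List[float]]


def mse(a: Image, b: Image) -> float:
    """Mean square error between two equally sized images (Equation (25))."""
    m, n = len(a), len(a[0])
    return sum((a[i][j] - b[i][j]) ** 2 for i in range(m) for j in range(n)) / (m * n)


def psnr(reference: Image, corrected: Image, max_val: float = 255.0) -> float:
    """PSNR in dB (Equation (24))."""
    err = mse(reference, corrected)
    if err == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_val ** 2 / err)


def ief(reference: Image, noisy: Image, corrected: Image) -> float:
    """IEF (Equation (26)); the 1/(MN) factors of the two MSEs cancel."""
    return mse(reference, noisy) / mse(reference, corrected)


ref = [[100, 100], [100, 100]]
noisy = [[255, 100], [100, 0]]       # one hot and one dead pixel
corrected = [[101, 100], [100, 99]]  # both repaired with a 1-level error
print(round(psnr(ref, corrected), 2), "dB")  # about 51.14 dB
print(ief(ref, noisy, corrected))            # prints 17012.5
```

The toy example shows why IEF reacts so strongly to errors: a false positive that alters a correct pixel adds to the denominator, directly lowering the ratio.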

Figure 16 compares the PSNR and IEF of our detection and correction algorithms and their hardware implementations with five algorithms proposed in the literature, labeled in the figure as Chan [17], Yongji [19], Cho [18], Tchendjou1 [12], and Tchendjou3 [13]. In all cases, the algorithms performed defective pixel detection and correction on the same set of images used to generate the results shown in Figure 15. The hardware implementation results were obtained from post-layout Spice simulations of the circuits described in Section 4. Figure 16 shows that our algorithm performs better than all the other detection/correction methods on both metrics. Our PSNR results are 11–15% higher than those of Tchendjou1 and Tchendjou3, and more than 42% higher than those of the other three methods. In addition, the IEF achieved by our algorithm is more than 79% higher than that of Tchendjou1 and Tchendjou3, and more than 38 times that of Chan, Yongji, and Cho. These three methods produce a significant number of false positives, as shown by the PPV results in Figure 15c. Because the IEF metric is highly sensitive to false positives, which overwrite correct pixel values with estimates, the performance of these three methods degrades significantly. Lastly, the PSNR of the analog hardware implementation is 1–3.7% lower than that of the software implementation of the algorithm, and its IEF is 12–17% lower. This is mainly due to charge injection in the detection circuit and the nonlinearities and offsets in the response of the detection and median computation circuits.

Figure 16.

Comparison of defective pixel detection/correction methods using (a) PSNR and (b) IEF.

6. Conclusions

In this paper, we have presented an algorithm for defective pixel detection/correction along with its analog-CMOS hardware implementation. Our algorithm detects defective pixels using a simplification of the algorithm presented in [12] and corrects each defective pixel by replacing its value with the median of its four neighbors. The hardware implements defective pixel detection at the pixel level during photocurrent integration and corrects defective values at the column level during pixel readout.

An evaluation using 500 images with 0.5% and 1% random defective pixels shows that our algorithm outperforms similar methods published in the literature. Post-layout simulations show that the hardware implementation achieves slightly lower performance than the software implementation, although it still performs better than the software versions of the other published methods. Like other detection/correction algorithms based on local statistics, our algorithm is sensitive to the occurrence of two or more defective pixels within a small window. In these cases, the estimates computed by the algorithm may be biased by neighboring defective pixels.

Performing arithmetic operations at the pixel level allows our circuit to detect incorrect values for all pixels in the imager in parallel. Moreover, using photocurrent integration to perform the operations reduces the die-area overhead compared with using dedicated arithmetic circuits. Although we use dedicated circuits to replace the defective pixel values, they are placed at the column level (one per image column), which limits their impact on total circuit area while achieving column-level parallelism.

We are currently extending our work to other algorithms that can exploit pixel-level parallelism in hardware using the same approach presented in this paper. In particular, we are focusing on feature extraction using local gradients, local image filters, and non-uniformity correction for infrared image sensors.

Author Contributions

Conceptualization, B.M.L.-P., W.V., P.Z.-H. and M.F.; methodology, B.M.L.-P., W.V., P.Z.-H. and M.F.; software, B.M.L.-P.; supervision, M.F.; writing—original draft, B.M.L.-P., W.V. and M.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Chilean National Agency for Research and Development (ANID) through FONDECYT Regular Grant No 1220960, graduate scholarship folio 21170172, and graduate scholarship folio 21161616.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

This study uses the following publicly available dataset: Linnaeus 5 Dataset http://chaladze.com/l5/ (accessed on 28 April 2022).

Conflicts of Interest

The authors declare no conflict of interest.

References

- El Gamal, A.; Eltoukhy, H. CMOS image sensors. IEEE Circuits Devices Mag. 2005, 21, 6–20.

- Sarkar, M.; Bello, D.S.S.; van Hoof, C.; Theuwissen, A.J.P. Biologically Inspired CMOS Image Sensor for Fast Motion and Polarization Detection. IEEE Sens. J. 2013, 13, 1065–1073.

- Kuroda, T. Essential Principles of Image Sensors; CRC Press: Boca Raton, FL, USA, 2017.

- Durini, D. High Performance Silicon Imaging: Fundamentals and Applications of CMOS and CCD Sensors; Woodhead Publishing: Cambridge, MA, USA, 2020.

- El-Desouki, M.; Jamal Deen, M.; Fang, Q.; Liu, L.; Tse, F.; Armstrong, D. CMOS image sensors for high speed applications. Sensors 2009, 9, 430–444.

- Fossum, E.R.; Hynecek, J.; Tower, J.; Teranishi, N.; Nakamura, J.; Magnan, P.; Theuwissen, A.J.P. Special Issue on Solid-State Image Sensors. IEEE Trans. Electron Devices 2009, 56, 2376–2379.

- Hassanli, K.; Sayedi, S.M.; Dehghani, R.; Jalili, A.; Wikner, J.J. A highly sensitive, low-power, and wide dynamic range CMOS digital pixel sensor. Sens. Actuators A Phys. 2015, 236, 82–91.

- Cevik, I.; Huang, X.; Yu, H.; Yan, M.; Ay, S.U. An ultra-low power CMOS image sensor with on-chip energy harvesting and power management capability. Sensors 2015, 15, 5531–5554.

- Premachandran, V.; Kakarala, R. Measuring the effectiveness of bad pixel detection algorithms using the ROC curve. IEEE Trans. Consum. Electron. 2010, 56, 2511–2519.

- Ghosh, S.; Froebrich, D.; Freitas, A. Robust autonomous detection of the defective pixels in detectors using a probabilistic technique. Appl. Opt. 2008, 47, 6904–6924.

- An, J.; Lee, W.; Kim, J. Adaptive Detection and Concealment Algorithm of Defective Pixel. In Proceedings of the 2007 IEEE Workshop on Signal Processing Systems, Shanghai, China, 17–19 October 2007; pp. 651–656.

- Tchendjou, G.T.; Simeu, E. Self-healing imager based on detection and conciliation of defective pixels. In Proceedings of the 2018 IEEE 24th International Symposium on On-Line Testing And Robust System Design (IOLTS), Platja d’Aro, Spain, 2–4 July 2018; pp. 251–254.

- Tchendjou, G.T.; Simeu, E. Detection, Location and Concealment of Defective Pixels in Image Sensors. IEEE Trans. Emerg. Top. Comput. 2020, 9, 664–679.

- Chen, Y.; Kang, J.U.; Zhang, G.; Cao, J.; Xie, Q.; Kwan, C. High-performance concealment of defective pixel clusters in infrared imagers. Appl. Opt. 2020, 59, 4081–4090.

- Ahmed, F.N.; Khelifi, F.; Lawgaly, A.; Bouridane, A. A machine learning-based approach for picture acquisition timeslot prediction using defective pixels. Forensic Sci. Int. Digit. Investig. 2021, 39, 301311.

- ISO. Ergonomic Requirements for Work with Visual Displays Based on Flat Panels Part 2: Ergonomic Requirements for Flat Panel Displays; Standard, International Organization for Standardization: Geneva, Switzerland, 2002.

- Chan, C.h. Dead Pixel Real-Time Detection Method for Image. U.S. Patent 7,589,770, 15 September 2009.

- Cho, C.Y.; Chen, T.M.; Wang, W.S.; Liu, C.N. Real-time photo sensor dead pixel detection for embedded devices. In Proceedings of the 2011 International Conference on Digital Image Computing: Techniques and Applications, Noosa, QLD, Australia, 6–8 December 2011; pp. 164–169.

- Yongji, L.; Xiaojun, Y. A Design of Dynamic Defective Pixel Correction for Image Sensor. In Proceedings of the 2020 IEEE International Conference on Artificial Intelligence and Information Systems (ICAIIS), Dalian, China, 20–22 March 2020; pp. 713–716.

- Mata-Carballeira, O.; Gutiérrez-Zaballa, J.; del Campo, I.; Martínez, V. An FPGA-Based Neuro-Fuzzy Sensor for Personalized Driving Assistance. Sensors 2019, 19, 4011.

- Chaudhary, V. Reconfigurable computing for smart vehicles. In Smart Cities; Springer: Berlin/Heidelberg, Germany, 2018; pp. 135–147.

- Algredo-Badillo, I.; Morales-Rosales, L.A.; Hernández-Gracidas, C.A.; Cruz-Victoria, J.C.; Bautista, D.P.; Morales-Sandoval, M. Real time FPGA-ANN architecture for outdoor obstacle detection focused in road safety. J. Intell. Fuzzy Syst. 2019, 36, 4425–4436.

- Mary, A.; Rose, L.; Karunakaran, A. Chapter 12 - FPGA-Based Detection and Tracking System for Surveillance Camera. In The Cognitive Approach in Cloud Computing and Internet of Things Technologies for Surveillance Tracking Systems; Peter, D., Alavi, A.H., Javadi, B., Fernandes, S.L., Eds.; Intelligent Data-Centric Systems, Academic Press: Cambridge, MA, USA, 2020; pp. 173–180.

- Torres, V.A.; Jaimes, B.R.; Ribeiro, E.S.; Braga, M.T.; Shiguemori, E.H.; Velho, H.F.; Torres, L.C.; Braga, A.P. Combined weightless neural network FPGA architecture for deforestation surveillance and visual navigation of UAVs. Eng. Appl. Artif. Intell. 2020, 87, 103227.

- Shan, Y. ADAS and Video Surveillance Analytics System Using Deep Learning Algorithms on FPGA. In Proceedings of the 2018 28th International Conference on Field Programmable Logic and Applications (FPL), Dublin, Ireland, 27–31 August 2018; pp. 465–4650.

- Li, C.; Benezeth, Y.; Nakamura, K.; Gomez, R.; Yang, F. A robust multispectral palmprint matching algorithm and its evaluation for FPGA applications. J. Syst. Archit. 2018, 88, 43–53.

- Khan, T.M.; Bailey, D.G.; Khan, M.A.; Kong, Y. Real-time iris segmentation and its implementation on FPGA. J. Real-Time Image Process. 2020, 17, 1089–1102.

- Sharma, V.; Joshi, A.M. VLSI Implementation of Reliable and Secure Face Recognition System. Wirel. Pers. Commun. 2022, 122, 3485–3497.

- Ohta, J. Smart CMOS Image Sensors and Applications; CRC Press: Boca Raton, FL, USA, 2017.

- Kim, D.; Song, M.; Choe, B.; Kim, S.Y. A Multi-Resolution Mode CMOS Image Sensor with a Novel Two-Step Single-Slope ADC for Intelligent Surveillance Systems. Sensors 2017, 17, 1497.

- Lee, S.; Jeong, B.; Park, K.; Song, M.; Kim, S.Y. On-CMOS image sensor processing for lane detection. Sensors 2021, 21, 3713.

- Chaladze, G.; Kalatozishvili, L. Linnaeus 5 Dataset for Machine Learning, 2017. Available online: http://chaladze.com/l5/ (accessed on 28 April 2022).

- Chapman, G.H.; Leung, J.; Namburete, A.; Koren, I.; Koren, Z. Predicting pixel defect rates based on image sensor parameters. In Proceedings of the 2011 IEEE International Symposium on Defect and Fault Tolerance in VLSI and Nanotechnology Systems, Vancouver, BC, Canada, 3–5 October 2011; pp. 408–416.

- Chapman, G.H.; Thomas, R.; Koren, Z.; Koren, I. Empirical formula for rates of hot pixel defects based on pixel size, sensor area, and ISO. In Proceedings of the Sensors, Cameras, and Systems for Industrial and Scientific Applications XIV; IS&T/SPIE Electronic Imaging: Burlingame, CA, USA, 2013; Volume 8659, pp. 119–129.

- Ongeval, C.V.; Jacobs, J.; Bosmans, H. Classification of artifacts in clinical digital mammography. In Digital Mammography; Springer: Berlin/Heidelberg, Germany, 2010; pp. 55–67.

- Mijatović, L.; Dean, H.; Rožić, M. Implementation of algorithm for detection and correction of defective pixels in FPGA. In Proceedings of the 2012 35th International Convention MIPRO, Opatija, Croatia, 21–25 May 2012; pp. 1731–1735.

- El-Yamany, N. Robust Defect Pixel Detection and Correction for Bayer Imaging Systems. Electron. Imaging 2017, 2017, 46–51.

- Tchendjou, G.T.; Simeu, E. Self-Healing Image Sensor Using Defective Pixel Correction Loop. In Proceedings of the 2019 International Conference on Control, Automation and Diagnosis (ICCAD), Grenoble, France, 2–4 July 2019; pp. 1–6.

- Tchendjou, G.T.; Simeu, E. Defective pixel analysis for image sensor online diagnostic and self-healing. In Proceedings of the 2019 IEEE 37th VLSI Test Symposium (VTS), Monterey, CA, USA, 23–25 April 2019; pp. 1–6.

- Kumar, A.; Sarkar, S.; Agarwal, R. A novel algorithm and hardware implementation for correcting sensor non-uniformities in infrared focal plane array based staring system. Infrared Phys. Technol. 2007, 50, 9–13.

- Hameed, R.; Qadeer, W.; Wachs, M.; Azizi, O.; Solomatnikov, A.; Lee, B.C.; Richardson, S.; Kozyrakis, C.; Horowitz, M. Understanding sources of inefficiency in general-purpose chips. In Proceedings of the 37th Annual International Symposium on Computer Architecture, New York, NY, USA, 19–23 June 2010; pp. 37–47.

- Allen, P.E.; Holberg, D.R. CMOS Analog Circuit Design; Elsevier: Amsterdam, The Netherlands, 2011.

- Jendernalik, W.; Blakiewicz, G.; Jakusz, J.; Szczepanski, S.; Piotrowski, R. An analog sub-miliwatt CMOS image sensor with pixel-level convolution processing. IEEE Trans. Circuits Syst. I Regul. Pap. 2013, 60, 279–289.

- Ginhac, D.; Dubois, J.; Heyrman, B.; Paindavoine, M. A high speed programmable focal-plane SIMD vision chip. Analog Integr. Circuits Signal Process. 2010, 65, 389–398.

- Garcia-Lamont, J. Analogue CMOS prototype vision chip with prewitt edge processing. Analog Integr. Circuits Signal Process. 2012, 71, 507–514.

- Suarez, M.; Brea, V.M.; Fernandez-Berni, J.; Carmona-Galan, R.; Cabello, D.; Rodriguez-Vazquez, A. Low-power CMOS vision sensor for Gaussian pyramid extraction. IEEE J. Solid-State Circuits 2016, 52, 483–495.

- Gottardi, M.; Lecca, M. A 64 × 64 Pixel Vision Sensor for Local Binary Pattern Computation. IEEE Trans. Circuits Syst. I Regul. Pap. 2018, 66, 1831–1839.

- Young, C.; Omid-Zohoor, A.; Lajevardi, P.; Murmann, B. A data-compressive 1.5/2.75-bit log-gradient QVGA image sensor with multi-scale readout for always-on object detection. IEEE J. Solid-State Circuits 2019, 54, 2932–2946.

- Jin, M.; Noh, H.; Song, M.; Kim, S.Y. Design of an edge-detection CMOS image sensor with built-in mask circuits. Sensors 2020, 20, 3649.

- Zhong, X.; Law, M.K.; Tsui, C.Y.; Bermak, A. A fully dynamic multi-mode CMOS vision sensor with mixed-signal cooperative motion sensing and object segmentation for adaptive edge computing. IEEE J. Solid-State Circuits 2020, 55, 1684–1697.

- Hsu, T.H.; Chen, Y.R.; Liu, R.S.; Lo, C.C.; Tang, K.T.; Chang, M.F.; Hsieh, C.C. A 0.5-V real-time computational CMOS image sensor with programmable kernel for feature extraction. IEEE J. Solid-State Circuits 2020, 56, 1588–1596.

- Valenzuela, W.; Soto, J.E.; Zarkesh-Ha, P.; Figueroa, M. Face recognition on a smart image sensor using local gradients. Sensors 2021, 21, 2901.

- Valenzuela, W.; Saavedra, A.; Zarkesh-Ha, P.; Figueroa, M. Motion-Based Object Location on a Smart Image Sensor Using On-Pixel Memory. Sensors 2022, 22, 6538.

- Chapman, G.H.; Koren, I.; Koren, Z.; Dudas, J.; Jung, C. Methods and Apparatus for Detecting Defects in Imaging Arrays by Image Analysis. U.S. Patent 8,009,209, 2011.

- Yang, M.; Chen, S.; Wu, X.; Fu, W.; Huang, Z. Identification and replacement of defective pixel based on Matlab for IR sensor. Front. Optoelectron. China 2011, 4, 434–437.

- Palmisano, G.; Palumbo, G.; Pennisi, S. Design procedure for two-stage CMOS transconductance operational amplifiers: A tutorial. Analog Integr. Circuits Signal Process. 2001, 27, 179–189.

- Sabry, M.N.; Omran, H.; Dessouky, M. Systematic design and optimization of operational transconductance amplifier using gm/ID design methodology. Microelectron. J. 2018, 75, 87–96.

- Razavi, B. Design of Analog CMOS Integrated Circuits; IRWIN ELECTRONICS & COMPUTER E, McGraw-Hill: Los Angeles, CA, USA, 2017.

- Baker, R.J. CMOS: Circuit Design, Layout, and Simulation; John Wiley & Sons: Hoboken, NJ, USA, 2019.

- Soleimani, M. Design and Implementation of Voltage-Mode MIN/MAX Circuits. J. Eng. Sci. Technol. Rev. 2015, 8, 166–171.

- Vornicu, I.; Carmona-Galán, R.; Rodríguez-Vázquez, Á. Compensation of PVT variations in ToF imagers with in-pixel TDC. Sensors 2017, 17, 1072.

- Lee, K.; Park, S.; Park, S.Y.; Cho, J.; Yoon, E. A 272.49 pJ/pixel CMOS image sensor with embedded object detection and bio-inspired 2D optic flow generation for nano-air-vehicle navigation. In Proceedings of the 2017 Symposium on VLSI Circuits, Kyoto, Japan, 5–8 June 2017; pp. C294–C295.

- Park, K.; Song, M.; Kim, S.Y. The design of a single-bit CMOS image sensor for iris recognition applications. Sensors 2018, 18, 669.

- Nazhamaiti, M.; Xu, H.; Liu, Z.; Chen, Y.; Wei, Q.; Wu, X.; Qiao, F. NS-MD: Near-Sensor Motion Detection with Energy Harvesting Image Sensor for Always-On Visual Perception. IEEE Trans. Circuits Syst. II Express Briefs 2021, 68, 3078–3082.

- Nomura, T.; Zhang, R.; Nakashima, Y. An Energy Efficient Stochastic+Spiking Neural Network. Bull. Netw. Comput. Syst. Softw. 2021, 10, 30–35.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).